Abstract

Flying insects employ elegant optical-flow-based strategies to solve complex tasks such as landing or obstacle avoidance. Roboticists have mimicked these strategies on flying robots with only limited success, because optical flow (1) cannot disentangle distance from velocity and (2) is less informative in the highly important flight direction. Here, we propose a solution to these fundamental shortcomings by having robots learn to estimate distances to objects from their visual appearance. The learning process obtains supervised targets from a stability-based distance estimation approach. We have successfully implemented the process on a small flying robot. For the task of landing, it results in faster, smoother landings. For the task of obstacle avoidance, it results in higher success rates at higher flight speeds. Our results yield improved robotic visual navigation capabilities and lead to a novel hypothesis on insect intelligence: behaviours that were described as optical-flow-based and hardwired actually benefit from learning processes.
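
The two optical-flow limitations mentioned above follow directly from the geometry of translational flow. The following is a minimal sketch, assuming pure translation at speed v and a static point at distance d seen at angle beta from the direction of travel, for which the angular flow magnitude is v·sin(beta)/d. The helper name translational_flow is hypothetical and only serves this illustration.

```python
import math


def translational_flow(v, d, beta):
    """Angular optical-flow magnitude (rad/s) of a static point at distance d (m),
    seen at angle beta (rad) from the direction of travel, for speed v (m/s)."""
    return v * math.sin(beta) / d


# (1) Distance and velocity are confounded: only the ratio v / d is observable.
slow_near = translational_flow(v=1.0, d=2.0, beta=math.radians(60))
fast_far = translational_flow(v=3.0, d=6.0, beta=math.radians(60))
print(f"slow and near: {slow_near:.4f} rad/s, fast and far: {fast_far:.4f} rad/s")

# (2) Flow vanishes toward the focus of expansion (beta -> 0), i.e. straight
#     ahead, which is exactly where obstacles in the flight path appear.
for deg in (90, 30, 10, 2, 0):
    w = translational_flow(v=2.0, d=4.0, beta=math.radians(deg))
    print(f"beta = {deg:2d} deg -> flow = {w:.4f} rad/s")
```

A robot flying three times faster past an object three times farther away sees identical flow, and an obstacle dead ahead produces no translational flow at all, however close it is.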

Data availability

All data are publicly available at https://doi.org/10.34894/KLKP1M.

Code availability

All code bases (in C, Python and MATLAB) used in the experiments are publicly available at https://doi.org/10.34894/KLKP1M.

References

Floreano, D. & Wood, R. J. Science, technology and the future of small autonomous drones. Nature 521, 460–466 (2015).

Franceschini, N., Pichon, J.-M. & Blanes, C. From insect vision to robot vision. Philos. Trans. R. Soc. Lond. B 337, 283–294 (1992).

Webb, B. Robots in invertebrate neuroscience. Nature 417, 359–363 (2002).

Franceschini, N. Small brains, smart machines: from fly vision to robot vision and back again. Proc. IEEE 102, 751–781 (2014).

Gibson, J. J. The Ecological Approach to Visual Perception (Houghton Mifflin, 1979).

Collett, T. S. Insect vision: controlling actions through optic flow. Curr. Biol. 12, R615–R617 (2002).

Srinivasan, M. V., Zhang, S. W., Chahl, J. S., Stange, G. & Garratt, M. An overview of insect-inspired guidance for application in ground and airborne platforms. Proc. Inst. Mech. Eng. G 218, 375–388 (2004).

Srinivasan, M. V., Zhang, S.-W., Chahl, J. S., Barth, E. & Venkatesh, S. How honeybees make grazing landings on flat surfaces. Biol. Cybern. 83, 171–183 (2000).

Baird, E., Boeddeker, N., Ibbotson, M. R. & Srinivasan, M. V. A universal strategy for visually guided landing. Proc. Natl Acad. Sci. USA 110, 18686–18691 (2013).

Ruffier, F. & Franceschini, N. Visually guided micro-aerial vehicle: automatic take off, terrain following, landing and wind reaction. In Proc. 2004 IEEE International Conference on Robotics and Automation Vol. 3, 2339–2346 (IEEE, 2004).

Herissé, B., Russotto, F.-X., Hamel, T. & Mahony, R. Hovering flight and vertical landing control of a VTOL unmanned aerial vehicle using optical flow. In Proc. 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems 801–806 (IEEE, 2008); https://doi.org/10.1109/IROS.2008.4650731

Alkowatly, M. T., Becerra, V. M. & Holderbaum, W. Bioinspired autonomous visual vertical control of a quadrotor unmanned aerial vehicle. J. Guid. Control Dyn. 38, 249–262 (2015).

Van Breugel, F., Morgansen, K. & Dickinson, M. H. Monocular distance estimation from optic flow during active landing maneuvers. Bioinspir. Biomim. 9, 025002 (2014).

Howard, D. & Kendoul, F. Towards evolved time to contact neurocontrollers for quadcopters. In Proc. Australasian Conference on Artificial Life and Computational Intelligence 336–347 (Springer, 2016).

Scheper, K. Y. W. & de Croon, G. C. H. E. Evolution of robust high speed optical-flow-based landing for autonomous MAVs. Rob. Auton. Syst. (2020); https://doi.org/10.1016/j.robot.2019.103380

Hagenaars, J. J., Paredes-Vallés, F., Bohté, S. M. & de Croon, G. C. H. E. Evolved neuromorphic control for high speed divergence-based landings of MAVs. Preprint at https://arxiv.org/pdf/2003.03118.pdf (2020).

Santer, R. D., Rind, F. C., Stafford, R. & Simmons, P. J. Role of an identified looming-sensitive neuron in triggering a flying locust’s escape. J. Neurophysiol. 95, 3391–3400 (2006).

Muijres, F. T., Elzinga, M. J., Melis, J. M. & Dickinson, M. H. Flies evade looming targets by executing rapid visually directed banked turns. Science 344, 172–177 (2014).

Nelson, R. & Aloimonos, J. Obstacle avoidance using flow field divergence. IEEE Trans. Pattern Anal. Mach. Intell. 11, 1102–1106 (1989).

Green, W. E. & Oh, P. Y. Optic-flow-based collision avoidance. IEEE Robot. Autom. Mag. 15, 96–103 (2008).

Conroy, J., Gremillion, G., Ranganathan, B. & Humbert, J. S. Implementation of wide-field integration of optic flow for autonomous quadrotor navigation. Auton. Robots 27, 189 (2009).

Zingg, S., Scaramuzza, D., Weiss, S. & Siegwart, R. MAV navigation through indoor corridors using optical flow. In 2010 IEEE International Conference on Robotics and Automation 3361–3368 (IEEE, 2010).

Milde, M. B. et al. Obstacle avoidance and target acquisition for robot navigation using a mixed signal analog/digital neuromorphic processing system. Front. Neurorobot. 11, 28 (2017).

Rind, F. C., Santer, R. D., Blanchard, J. M. & Verschure, P. F. M. J. in Sensors and Sensing in Biology and Engineering (eds. Barth, F. G. et al.) 237–250 (Springer, 2003).

Hyslop, A. M. & Humbert, J. S. Autonomous navigation in three-dimensional urban environments using wide-field integration of optic flow. J. Guid. Control Dyn. 33, 147–159 (2010).

Serres, J. R. & Ruffier, F. Optic flow-based collision-free strategies: from insects to robots. Arthropod Struct. Dev. 46, 703–717 (2017).

De Croon, G. C. H. E. Monocular distance estimation with optical flow maneuvers and efference copies: a stability-based strategy. Bioinspir. Biomim. 11, 1–18 (2016).

Stevens, J.-L. & Mahony, R. Vision based forward sensitive reactive control for a quadrotor VTOL. In Proc. 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 5232–5238 (IEEE, 2018).

Sanket, N. J., Singh, C. D., Ganguly, K., Fermüller, C. & Aloimonos, Y. GapFlyt: active vision based minimalist structure-less gap detection for quadrotor flight. IEEE Robot. Autom. Lett. 3, 2799–2806 (2018).

Bertrand, O. J. N., Lindemann, J. P. & Egelhaaf, M. A bio-inspired collision avoidance model based on spatial information derived from motion detectors leads to common routes. PLoS Comput. Biol. 11, e1004339 (2015).

Varma, M. & Zisserman, A. Texture classification: are filter banks necessary? In Proc. 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Vol. 2, II–691 (IEEE, 2003).

Mitchell, T. et al. Machine learning. Annu. Rev. Comput. Sci. 4, 417–433 (1990).

Bishop, C. M. Pattern Recognition and Machine Learning (Springer, 2006).

Qiu, W. et al. UnrealCV: virtual worlds for computer vision. In Proc. 25th ACM International Conference on Multimedia 1221–1224 (ACM, 2017); https://doi.org/10.1145/3123266.3129396

Mancini, M., Costante, G., Valigi, P. & Ciarfuglia, T. A. Fast robust monocular depth estimation for obstacle detection with fully convolutional networks. In Proc. 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 4296–4303 (IEEE, 2016).

Mori, T. & Scherer, S. First results in detecting and avoiding frontal obstacles from a monocular camera for micro unmanned aerial vehicles. In Proc. IEEE International Conference on Robotics and Automation 1750–1757 (IEEE, 2013); https://doi.org/10.1109/ICRA.2013.6630807

Chaumette, F., Hutchinson, S. & Corke, P. in Springer Handbook of Robotics (eds. Siciliano, B. & Khatib, O.) 841–866 (Springer, 2016).

Scaramuzza, D. & Fraundorfer, F. Visual odometry [tutorial]. IEEE Robot. Autom. Mag. 18, 80–92 (2011).

Engel, J., Schöps, T. & Cremers, D. LSD-SLAM: large-scale direct monocular SLAM. In Proc. European Conference on Computer Vision (ECCV) 834–849 (Springer, 2014).

Zhou, T., Brown, M., Snavely, N. & Lowe, D. G. Unsupervised learning of depth and ego-motion from video. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 1851–1858 (IEEE, 2017).

Gordon, A., Li, H., Jonschkowski, R. & Angelova, A. Depth from videos in the wild: unsupervised monocular depth learning from unknown cameras. Preprint at https://arxiv.org/pdf/1904.04998.pdf (2019).

Gibson, J. J. The Perception of the Visual World (Houghton Mifflin, 1950).

Brenner, E. & Smeets, J. B. J. Depth perception. Stevens’ Handb. Exp. Psychol. Cogn. Neurosci. 2, 1–30 (2018).

Lehrer, M. & Bianco, G. The turn-back-and-look behaviour: bee versus robot. Biol. Cybern. 83, 211–229 (2000).

Stach, S., Benard, J. & Giurfa, M. Local-feature assembling in visual pattern recognition and generalization in honeybees. Nature 429, 758–761 (2004).

Andel, D. & Wehner, R. Path integration in desert ants, Cataglyphis: how to make a homing ant run away from home. Proc. R. Soc. Lond. B 271, 1485–1489 (2004).

Dyer, A. G., Neumeyer, C. & Chittka, L. Honeybee (Apis mellifera) vision can discriminate between and recognise images of human faces. J. Exp. Biol. 208, 4709–4714 (2005).

Fry, S. N. & Wehner, R. Look and turn: landmark-based goal navigation in honey bees. J. Exp. Biol. 208, 3945–3955 (2005).

Rosten, E., Porter, R. & Drummond, T. Faster and better: a machine learning approach to corner detection. IEEE Trans. Pattern Anal. Mach. Intell. 32, 105–119 (2010).

de Croon, G. C. H. E. & Nolfi, S. ACT-CORNER: active corner finding for optic flow determination. In Proc. IEEE International Conference on Robotics and Automation (ICRA 2013) (IEEE, 2013); https://doi.org/10.1109/ICRA.2013.6631243

Lucas, B. D. & Kanade, T. An iterative image registration technique with an application to stereo vision. In Proc. International Joint Conference on Artificial Intelligence Vol. 81, 674–679 (ACM, 1981).

Laws, K. I. Textured Image Segmentation. PhD thesis, Univ. Southern California (1980).

Epic Games. Unreal Simulator (Epic Games, 2020); https://www.unrealengine.com

Kisantal, M. Deep Reinforcement Learning for Goal-directed Visual Navigation (2018); http://resolver.tudelft.nl/uuid:07bc64ba-42e3-4aa7-ba9b-ac0ac4e0e7a1

Pulli, K., Baksheev, A., Kornyakov, K. & Eruhimov, V. Real-time computer vision with OpenCV. Commun. ACM 55, 61–69 (2012).

Alcantarilla, P. F., Nuevo, J. & Bartoli, A. Fast explicit diffusion for accelerated features in nonlinear scale spaces. In Proc. British Machine Vision Conference (BMVC) (BMVA, 2013).

Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proc. 13th Scandinavian Conference on Image Analysis 363–370 (Springer, 2003).

Sanket, N. J., Singh, C. D., Fermüller, C. & Aloimonos, Y. PRGFlow: benchmarking SWAP-aware unified deep visual inertial odometry. Preprint at https://arxiv.org/pdf/2006.06753.pdf (2020).

Wofk, D., Ma, F., Yang, T.-J., Karaman, S. & Sze, V. FastDepth: fast monocular depth estimation on embedded systems. In Proc. 2019 International Conference on Robotics and Automation (ICRA) 6101–6108 (IEEE, 2019).

Herissé, B., Hamel, T., Mahony, R. & Russotto, F.-X. The landing problem of a VTOL unmanned aerial vehicle on a moving platform using optical flow. In Proc. 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems 1600–1605 (2010); https://doi.org/10.1109/IROS.2010.5652633

Ho, H. W., de Croon, G. C. H. E., van Kampen, E., Chu, Q. P. & Mulder, M. Adaptive gain control strategy for constant optical flow divergence landing. IEEE Trans. Robot. (2018); https://doi.org/10.1109/TRO.2018.2817418

Acknowledgements

We thank M. Kisantal for creating the UnrealCV environment that was used in this work. We also thank F. Muijres, M. Karasek and M. Wisse for their valuable feedback on earlier versions of the manuscript.

Author information

Contributions

All authors contributed to the conception of the project and were involved in analysing the results and in revising and editing the manuscript. G.C.H.E.d.C. programmed the majority of the software, with help from C.D.W. on the Paparazzi implementation for the real-world experiments. G.C.H.E.d.C. performed all simulation and real-world experiments and created all graphics for the figures.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Machine Intelligence thanks Yiannis Aloimonos and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Landing performance for different landing strategies.

For each strategy we show the height (red lines) and the divergence (purple lines) over time. Thick solid lines show the average over 5 landings, and the grey area shows the sample standard deviation. All landings aimed for a divergence of \(D^* = -0.3\) and started in hover, that is, at \(D = 0\). a, Landing with a fixed gain \(K\) (as in, for example, ref. 60), resulting in oscillations close to the surface. b, Landing with an adaptive gain as in ref. 61. The performance during landing is good, but gradually increasing the gain takes a long time (on the order of 14 s). c, Landing with the proposed self-supervised learning strategy. The robot can start landing immediately and performs well all the way down. Note the quicker landings compared with the fixed gain and the absence of evident oscillations in the divergence.
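
For reference, the idealized kinematics of a constant-divergence landing can be sketched as follows. This assumes that divergence is defined as D = v/h (vertical velocity over height, negative when descending, which matches the sign of D* = -0.3 above) and that the controller tracks D* perfectly, so dh/dt = D*·h and both height and descent speed decay exponentially. The start height of 2 m, the 0.1 m end height and the function names are hypothetical choices for illustration.

```python
import math


def height_at(t, h0, d_star):
    """Height (m) after t seconds of ideal constant-divergence descent from h0 (m)."""
    return h0 * math.exp(d_star * t)


def time_to_height(h0, h_end, d_star):
    """Time (s) to descend from h0 to h_end (m) at a constant divergence d_star < 0."""
    return math.log(h_end / h0) / d_star


d_star = -0.3  # target divergence used in Extended Data Fig. 1, in 1/s
t_end = time_to_height(2.0, 0.1, d_star)
print(f"2.0 m -> 0.1 m takes {t_end:.2f} s")

for t in (0.0, 2.5, 5.0, t_end):
    h = height_at(t, 2.0, d_star)
    v = d_star * h  # vertical velocity shrinks in proportion to height
    print(f"t = {t:5.2f} s   h = {h:.3f} m   v = {v:.3f} m/s")
```

Because h(t) only approaches zero asymptotically, a sketch like this needs some cut-off height; a real lander would need a final touchdown phase below it.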

Extended Data Fig. 2 Dense distance estimation from optical flow and oscillations.

First (top) row: Dense horizontal optical flow images, computed with the Farnebäck algorithm (ref. 57). Second row: Histogram of each optical flow image; the x axis represents the optical flow in pixels per frame. Third row: Corresponding dense gain (distance) images. Fourth row: Histogram of each gain image. Although the optical flow changes substantially during the oscillatory motion of the flying robot (rows 1–2), the gain values remain of comparable magnitude (rows 3–4). Smaller optical flow does, however, lead to noisier gain estimates.

Extended Data Fig. 3 Example images from the Unreal simulator.

These images are taken at 960 × 960 pixel resolution. The environment contains a variety of trees and lighting conditions. The border of the environment is a smooth, grey, stone wall (bottom right screenshot). The flowers on the ground and the leaves of the trees move in the wind. The simulated flying robot perceives the environment at a 240 × 240 pixel resolution.

Extended Data Fig. 4 Distance-collision curves for the four different avoidance methods.

The curves are obtained by varying, for each method, the parameter that balances false positives (turning when it is not necessary) against false negatives (not detecting an obstacle): the gain threshold for the predictive gain method, the divergence threshold for the divergence methods that use optical flow vectors or feature size increases, and the control gain for the fixed gain method. The numbers next to the graphs are the threshold or parameter values. For each parameter value, \(N = 30\) runs were performed, and the error bars show the standard error \(\sigma /\sqrt N\). The stars indicate the operating points shown in Fig. 3 of the main article.
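
The error bars above are standard errors of the mean over N runs. A minimal sketch of how one point of such a curve could be summarized, assuming that σ is the sample standard deviation (n - 1 denominator) and that each run yields a distance value and a collision flag; the function name and the five example runs are hypothetical placeholders, not data from the experiments.

```python
import math
import statistics


def mean_and_sem(values):
    """Return (mean, standard error sigma / sqrt(N)) using the sample standard deviation."""
    n = len(values)
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(n)
    return mean, sem


# Hypothetical runs for one threshold setting: (distance in m, collided?)
runs = [(12.0, False), (8.5, True), (15.2, False), (9.1, True), (14.0, False)]
distance_mean, distance_sem = mean_and_sem([d for d, _ in runs])
collision_rate, collision_sem = mean_and_sem([1.0 if c else 0.0 for _, c in runs])
print(f"distance = {distance_mean:.2f} +/- {distance_sem:.2f} m")
print(f"collision rate = {collision_rate:.2f} +/- {collision_sem:.2f}")
```

Repeating this for every threshold or gain value and plotting the resulting pairs traces one curve; with N = 30 runs per value the error bars shrink by a factor of about sqrt(30/5) relative to this five-run example, for the same spread.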

Supplementary information

Supplementary Information

Supplementary Information, including sections and associated figures.

Supplementary Video 1

Overview video of the article.

Supplementary Video 2

Long video with raw experimental footage.

Supplementary Video 3

Video that shows both a landing with a fixed control gain and one with the proposed method.

Supplementary Video 4

Video of simulation experiments.

About this article

Cite this article

de Croon, G.C.H.E., De Wagter, C. & Seidl, T. Enhancing optical-flow-based control by learning visual appearance cues for flying robots. Nat Mach Intell 3, 33–41 (2021). https://doi.org/10.1038/s42256-020-00279-7

DOI: https://doi.org/10.1038/s42256-020-00279-7

This article is cited by

-

Finding the gap: neuromorphic motion-vision in dense environments

Nature Communications (2024)

-

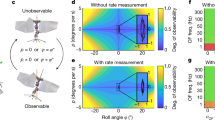

Accommodating unobservability to control flight attitude with optic flow

Nature (2022)