Abstract

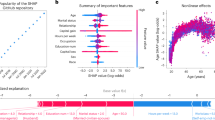

Variable importance is central to scientific studies, including the social sciences and causal inference, healthcare and other domains. However, current notions of variable importance are often tied to a specific predictive model. This is problematic: what if there were multiple well-performing predictive models, and a specific variable is important to some of them but not to others? In that case, we cannot tell from a single well-performing model if a variable is always important, sometimes important, never important or perhaps only important when another variable is not important. Ideally, we would like to explore variable importance for all approximately equally accurate predictive models within the same model class. In this way, we can understand the importance of a variable in the context of other variables, and for many good models. This work introduces the concept of a variable importance cloud, which maps every variable to its importance for every good predictive model. We show properties of the variable importance cloud and draw connections to other areas of statistics. We introduce variable importance diagrams as a projection of the variable importance cloud into two dimensions for visualization purposes. Experiments with criminal justice, marketing data and image classification tasks illustrate how variables can change dramatically in importance for approximately equally accurate predictive models.
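As a rough illustration of the idea, the sketch below builds a small variable importance cloud for a toy problem. Everything in it is an assumption made for illustration, not the authors' implementation: the synthetic data, the linear model class with squared loss, the tolerance `eps`, the random sampling of candidate models, and the permutation-based loss ratio used as the importance measure.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumed): two strongly correlated features, so that
# good models can trade reliance on one feature for the other.
n = 500
x1 = rng.normal(size=n)
x2 = x1 + 0.3 * rng.normal(size=n)
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.5 * rng.normal(size=n)

def loss(w, X, y):
    """Mean squared error of the linear model X @ w."""
    return np.mean((X @ w - y) ** 2)

def importance(w, X, y, j, perm):
    """Loss ratio after permuting column j; values above 1 mean the model relies on j."""
    Xp = X.copy()
    Xp[:, j] = X[perm, j]
    return loss(w, Xp, y) / loss(w, X, y)

# Best model in the class (ordinary least squares) and the near-optimal threshold.
w_star, *_ = np.linalg.lstsq(X, y, rcond=None)
eps = 0.05  # hypothetical tolerance defining "approximately equally accurate"
threshold = (1 + eps) * loss(w_star, X, y)

# Sample candidate models around the optimum (w_star itself included) and keep the good ones.
candidates = np.vstack([w_star, w_star + rng.normal(scale=0.5, size=(5000, 2))])
good = np.array([w for w in candidates if loss(w, X, y) <= threshold])

# The cloud: one importance vector per good model.
perm = rng.permutation(n)
cloud = np.array([[importance(w, X, y, j, perm) for j in range(2)] for w in good])

print(good.shape[0], "good models")
print("importance range per variable:", cloud.min(axis=0), cloud.max(axis=0))
```

Plotting `cloud[:, 0]` against `cloud[:, 1]` gives a two-dimensional view in the spirit of a variable importance diagram. Random sampling only approximates the set of good models; it is used here to keep the sketch short.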

Data availability

The datasets analysed in this paper are publicly available. The COMPAS dataset is available in ProPublica’s repository (ref. 7). The image dataset of dogs and cats is available at ImageNet (ref. 8). The image we use in Fig. 6 is provided in the Supplementary Information. Our experiment on the in-vehicle coupon recommendation dataset in the Supplementary Information uses data from ref. 21. Source data are provided with this paper.

Code availability

The code we use in our paper can be downloaded from ref. 22.

References

1. Breiman, L. et al. Statistical modeling: the two cultures (with comments and a rejoinder by the author). Stat. Sci. 16, 199–231 (2001).

2. Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

3. Fisher, A., Rudin, C. & Dominici, F. All models are wrong, but many are useful: learning a variable’s importance by studying an entire class of prediction models simultaneously. J. Mach. Learn. Res. 20, 1–81 (2019).

4. Semenova, L., Rudin, C. & Parr, R. A study in Rashomon curves and volumes: a new perspective on generalization and model simplicity in machine learning. Preprint at https://arxiv.org/abs/1908.01755 (2020).

5. Lin, J., Zhong, C., Hu, D., Rudin, C. & Seltzer, M. Generalized and scalable optimal sparse decision trees. Preprint at https://arxiv.org/abs/2006.08690 (2020).

6. Flores, A. W., Bechtel, K. & Lowenkamp, C. T. False positives, false negatives, and false analyses: a rejoinder to machine bias: there’s software used across the country to predict future criminals. And it’s biased against blacks. Fed. Prob. 80, 38–46 (2016).

7. Larson, J., Mattu, S., Kirchner, L. & Angwin, J. How we analyzed the COMPAS recidivism algorithm. GitHub https://github.com/propublica/compas-analysis (2017).

8. Deng, J. et al. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

9. Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. Preprint at https://arxiv.org/abs/1409.1556 (2014).

10. Brown, G., Pocock, A., Zhao, M.-J. & Luján, M. Conditional likelihood maximisation: a unifying framework for information theoretic feature selection. J. Mach. Learn. Res. 13, 27–66 (2012).

11. Vinh, N. X., Zhou, S., Chan, J. & Bailey, J. Can high-order dependencies improve mutual information based feature selection? Pattern Recognit. 53, 46–58 (2016).

12. Choi, Y., Darwiche, A. & Van den Broeck, G. Optimal feature selection for decision robustness in Bayesian networks. In Proc. 26th International Joint Conference on Artificial Intelligence (IJCAI) 1554–1560 (AAAI, 2017).

13. Van Haaren, J. & Davis, J. Markov network structure learning: a randomized feature generation approach. In Twenty-Sixth AAAI Conference on Artificial Intelligence (AAAI, 2012).

14. Coker, B., Rudin, C. & King, G. A theory of statistical inference for ensuring the robustness of scientific results. Preprint at https://arxiv.org/abs/1804.08646 (2018).

15. Friedman, J. H. Greedy function approximation: a gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001).

16. Velleman, P. F. & Welsch, R. E. Efficient computing of regression diagnostics. Am. Stat. 35, 234–242 (1981).

17. Casalicchio, G., Molnar, C. & Bischl, B. Visualizing the feature importance for black box models. Preprint at https://arxiv.org/abs/1804.06620 (2018).

18. Gevrey, M., Dimopoulos, I. & Lek, S. Review and comparison of methods to study the contribution of variables in artificial neural network models. Ecol. Model. 160, 249–264 (2003).

19. Harel, J., Koch, C. & Perona, P. Graph-based visual saliency. In Advances in Neural Information Processing Systems 545–552 (NeurIPS, 2007).

20. Strobl, C., Boulesteix, A.-L., Kneib, T., Augustin, T. & Zeileis, A. Conditional variable importance for random forests. BMC Bioinformatics 9, 307 (2008).

21. Wang, T. et al. A Bayesian framework for learning rule sets for interpretable classification. J. Mach. Learn. Res. 18, 2357–2393 (2017).

22. Dong, J. & Rudin, C. Jiayun-Dong/vic v1.0.0. Zenodo https://doi.org/10.5281/zenodo.4065582 (2020).

23. Hayashi, F. Econometrics (Princeton Univ. Press, 2000).

Author information

Contributions

Both authors contributed to the conception, analytics and writing of the study. The experiments were conducted by J.D. The code was designed by J.D.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: Nature Machine Intelligence thanks Professor Kristian Kersting and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

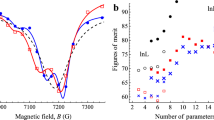

Extended Data Fig. 1 Logistic loss function.

Logistic loss.

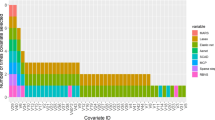

Extended Data Fig. 2 VID of decision tree models for the recidivism experiment.

VID for Recidivism: decision trees. This is the projection of the VIC onto the space spanned by the four variables of interest: age, race, prior criminal history and gender. Unlike Fig. 4, the VIC is generated by the Rashomon set that consists of all the good decision trees instead of logistic regression models. However, the diagrams should be interpreted in the same way as before.

Supplementary information

Supplementary Information

Supplementary experiment.

Source data

Source Data Fig. 1

Source data for our experiment.

About this article

Cite this article

Dong, J., Rudin, C. Exploring the cloud of variable importance for the set of all good models. Nat Mach Intell 2, 810–824 (2020). https://doi.org/10.1038/s42256-020-00264-0

DOI: https://doi.org/10.1038/s42256-020-00264-0