Abstract

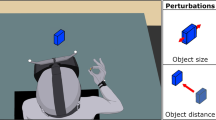

Humans perform object manipulation in order to execute a specific task. Seldom is such action started with no goal in mind. In contrast, traditional robotic grasping (first stage for object manipulation) seems to focus purely on getting hold of the object—neglecting the goal of the manipulation. Most metrics used in robotic grasping do not account for the final task in their judgement of quality and success. In this Perspective we suggest a change of view. Since the overall goal of a manipulation task shapes the actions of humans and their grasps, we advocate that the task itself should shape the metric of success. To this end, we propose a new metric centred on the task. Finally, we call for action to support the conversation and discussion on such an important topic for the community.

Acknowledgements

V.O. is supported by the UK National Centre for Nuclear Robotics initiative, funded by EPSRC EP/R02572X/1. M.B. is partially supported by the EU H2020 project ‘SOFTPRO: Synergy-based Open-source Foundations and Technologies for Prosthetics and RehabilitatiOn’ (no. 688857), and by the Italian Ministry of Education and Research (MIUR) in the framework of the CrossLab project (Departments of Excellence). M.A.R.’s work has been partially funded by the European Commission’s Eighth Framework Program as part of the project Soft Manipulation (grant number H2020-ICT-645599). J.L. and P.C. are supported by the Australian Research Council Centre of Excellence for Robotic Vision (project no. CE140100016). F.C. and M.C. are supported by the European Research Council (project acronym MYKI; project number 679820). The authors want to thank K. Goldberg for his invaluable comments and constructive criticism.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Ortenzi, V., Controzzi, M., Cini, F. et al. Robotic manipulation and the role of the task in the metric of success. Nat Mach Intell 1, 340–346 (2019). https://doi.org/10.1038/s42256-019-0078-4

DOI: https://doi.org/10.1038/s42256-019-0078-4

This article is cited by

- Hybrid hierarchical learning for solving complex sequential tasks using the robotic manipulation network ROMAN. Nature Machine Intelligence (2023)