Abstract

Neuromorphic computing takes inspiration from the brain to create energy-efficient hardware for information processing, capable of highly sophisticated tasks. Systems built with standard electronics achieve gains in speed and energy efficiency by mimicking the distributed topology of the brain. Scaling up such systems and improving their energy usage, speed and performance by several orders of magnitude requires a revolution in hardware. We discuss how including more physics in the algorithms and nanoscale materials used for data processing could have a major impact on the field of neuromorphic computing. We review striking results that leverage physics to enhance the computing capabilities of artificial neural networks, using resistive switching materials, photonics, spintronics and other technologies. We discuss the paths that could lead these approaches to maturity, towards low-power, miniaturized chips that could infer and learn in real time.

Change history

21 July 2021

A Correction to this paper has been published: https://doi.org/10.1038/s42254-021-00358-7

References

Lockery, S. R. The computational worm: spatial orientation and its neuronal basis in C. elegans. Curr. Opin. Neurobiol. 21, 782–790 (2011).

French, R. M. Catastrophic forgetting in connectionist networks. Trends Cogn. Sci. 3, 128–135 (1999).

Zenke, F., Poole, B. & Ganguli, S. Continual learning through synaptic intelligence. Int. Conf. Mach. Learn. 70, 3987–3995 (2017).

Kirkpatrick, J. et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl Acad. Sci. USA 114, 3521–3526 (2017).

Hassabis, D., Kumaran, D., Summerfield, C. & Botvinick, M. Neuroscience-inspired artificial intelligence. Neuron 95, 245–258 (2017).

Lake, B. M., Ullman, T. D., Tenenbaum, J. B. & Gershman, S. J. Building machines that learn and think like people. Behav. Brain Sci. 40, e253 (2017).

Hopfield, J. J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl Acad. Sci. USA 79, 2554–2558 (1982).

Friston, K. The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127–138 (2010).

Chialvo, D. R. Emergent complex neural dynamics. Nat. Phys. 6, 744–750 (2010).

Rabinovich, M. I., Varona, P., Selverston, A. I. & Abarbanel, H. D. I. Dynamical principles in neuroscience. Rev. Mod. Phys. 78, 1213–1265 (2006).

Gerstner, W., Kistler, W. M., Naud, R. & Paninski, L. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition (Cambridge Univ. Press, 2014).

Sompolinsky, H., Crisanti, A. & Sommers, H. J. Chaos in random neural networks. Phys. Rev. Lett. 61, 259–262 (1988).

Engel, A. K., Fries, P. & Singer, W. Dynamic predictions: oscillations and synchrony in top–down processing. Nat. Rev. Neurosci. 2, 704–716 (2001).

Buzsaki, G. Rhythms of the Brain (Oxford Univ. Press, 2011).

McDonnell, M. D. & Ward, L. M. The benefits of noise in neural systems: bridging theory and experiment. Nat. Rev. Neurosci. 12, 415–426 (2011).

Hinton, G. E. & Salakhutdinov, R. R. Reducing the dimensionality of data with neural networks. Science 313, 504–507 (2006).

Hoppensteadt, F. C. & Izhikevich, E. M. Oscillatory neurocomputers with dynamic connectivity. Phys. Rev. Lett. 82, 2983–2986 (1999).

Jaeger, H. & Haas, H. Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science 304, 78–80 (2004).

Laje, R. & Buonomano, D. V. Robust timing and motor patterns by taming chaos in recurrent neural networks. Nat. Neurosci. 16, 925–933 (2013).

Schliebs, S. & Kasabov, N. Evolving spiking neural network — a survey. Evol. Syst. 4, 87–98 (2013).

Beyeler, M., Dutt, N. D. & Krichmar, J. L. Categorization and decision-making in a neurobiologically plausible spiking network using a STDP-like learning rule. Neural Netw. 48, 109–124 (2013).

Dayan, P. & Abbott, L. F. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems (MIT Press, 2005).

Antle, M. C. & Silver, R. Orchestrating time: arrangements of the brain circadian clock. Trends Neurosci. 28, 145–151 (2005).

Big data needs a hardware revolution. Nature 554, 145–146 (2018).

Indiveri, G. et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 73 (2011).

Furber, S. Large-scale neuromorphic computing systems. J. Neural Eng. 13, 051001 (2016).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Silver, D. et al. Mastering the game of Go without human knowledge. Nature 550, 354–359 (2017).

Xu, X. et al. Scaling for edge inference of deep neural networks. Nat. Electron. 1, 216–222 (2018).

Ielmini, D. & Waser, R. (eds) Resistive Switching: From Fundamentals of Nanoionic Redox Processes to Memristive Device Applications (Wiley, 2016).

Wouters, D. J., Waser, R. & Wuttig, M. Phase-change and redox-based resistive switching memories. Proc. IEEE 103, 1274–1288 (2015).

Ha, S. D., Shi, J., Meroz, Y., Mahadevan, L. & Ramanathan, S. Neuromimetic circuits with synaptic devices based on strongly correlated electron systems. Phys. Rev. Appl. 2, 064003 (2014).

Chanthbouala, A. et al. A ferroelectric memristor. Nat. Mater. 11, 860–864 (2012).

Strukov, D. B. & Likharev, K. K. A reconfigurable architecture for hybrid CMOS/nanodevice circuits. Proc. ACM/SIGDA Int. Symp. Field Progr. Gate Arrays https://doi.org/10.1145/1117201.1117221 (2006).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

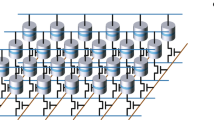

Bayat, F. M. et al. Implementation of multilayer perceptron network with highly uniform passive memristive crossbar circuits. Nat. Commun. 9, 2331 (2018).

Narayanan, P. et al. Toward on-chip acceleration of the backpropagation algorithm using nonvolatile memory. IBM J. Res. Dev. 61, 11:1–11:11 (2017).

Li, C. et al. Three-dimensional crossbar arrays of self-rectifying Si/SiO2/Si memristors. Nat. Commun. 15666 (2017).

Ambrogio, S. et al. Neuromorphic learning and recognition with one-transistor-one-resistor synapses and bistable metal oxide RRAM. IEEE Trans. Electron. Devices 63, 1508–1515 (2016).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Hirtzlin, T. et al. Digital biologically plausible implementation of binarized neural networks with differential hafnium oxide resistive memory arrays. Front. Neurosci. 13, 1383 (2020).

Ji, Y. et al. Flexible and twistable non-volatile memory cell array with all-organic one diode–one resistor architecture. Nat. Commun. 4, 2707 (2013).

Fuller, E. J. et al. Parallel programming of an ionic floating-gate memory array for scalable neuromorphic computing. Science 364, 570–574 (2019).

Noé, P. et al. Toward ultimate nonvolatile resistive memories: the mechanism behind ovonic threshold switching revealed. Sci. Adv. 6, eaay2830 (2020).

Ambrogio, S. et al. Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67 (2018).

Feldmann, J., Youngblood, N., Wright, C. D., Bhaskaran, H. & Pernice, W. H. P. All-optical spiking neurosynaptic networks with self-learning capabilities. Nature 569, 208–214 (2019).

Shen, Y. et al. Deep learning with coherent nanophotonic circuits. Nat. Photon. 11, 441–446 (2017).

Lin, X. et al. All-optical machine learning using diffractive deep neural networks. Science 361, 1004–1008 (2018).

Larger, L. et al. Photonic information processing beyond Turing: an optoelectronic implementation of reservoir computing. Opt. Express 20, 3241–3249 (2012).

Vandoorne, K. et al. Experimental demonstration of reservoir computing on a silicon photonics chip. Nat. Commun. 5, 3541 (2014).

Moughames, J. et al. Three dimensional waveguide-interconnects for scalable integration of photonic neural networks. Optica 7, 640–646 (2020).

Heuser, T., Große, J., Kaganskiy, A., Brunner, D. & Reitzenstein, S. Fabrication of dense diameter-tuned quantum dot micropillar arrays for applications in photonic information processing. APL Photon. 3, 116103 (2018).

Hayenga, W. E., Garcia-Gracia, H., Hodaei, H., Fainman, Y. & Khajavikhan, M. Metallic coaxial nanolasers. Adv. Phys. X 1, 262–275 (2016).

Miscuglio, M. et al. All-optical nonlinear activation function for photonic neural networks [Invited]. Opt. Mater. Express 8, 3851–3863 (2018).

Diehl, P. U., Zarrella, G., Cassidy, A., Pedroni, B. U. & Neftci, E. Conversion of artificial recurrent neural networks to spiking neural networks for low-power neuromorphic hardware. Proc. IEEE Int. Conf. Reboot. Comput. https://doi.org/10.1109/ICRC.2016.7738691 (2016).

Diehl, P. U. et al. Fast-classifying, high-accuracy spiking deep networks through weight and threshold balancing. Proc. Int. Joint Conf. Neural Netw. https://doi.org/10.1109/IJCNN.2015.7280696 (2015).

Esser, S. K., Appuswamy, R., Merolla, P., Arthur, J. V. & Modha, D. S. Backpropagation for energy-efficient neuromorphic computing. Advances Neural Inform. Process. Systems 28, 1117–1125 (2015).

Poirazi, P., Brannon, T. & Mel, B. W. Pyramidal neuron as two-layer neural network. Neuron 37, 989–999 (2003).

David, B., Idan, S. & Michael, L. Single cortical neurons as deep artificial neural networks. Preprint at bioRxiv https://doi.org/10.1101/613141 (2019).

Conrad, M., Engl, E. & Jolivet, R. B. Energy use constrains brain information processing. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM.2017.8268370 (2017).

Gidon, A. et al. Dendritic action potentials and computation in human layer 2/3 cortical neurons. Science 367, 83–87 (2020).

London, M. & Häusser, M. Dendritic computation. Annu. Rev. Neurosci. 28, 503–532 (2005).

Lenk, K. et al. A computational model of interactions between neuronal and astrocytic networks: the role of astrocytes in the stability of the neuronal firing rate. Front. Comput. Neurosci. 13, 92 (2020).

Mead, C. & Ismail, M. (eds) Analog VLSI Implementation of Neural Systems (Springer, 1989).

Boahen, K. A neuromorph’s prospectus. Comput. Sci. Eng. 19, 14–28 (2017).

Arthur, J. V. & Boahen, K. A. Silicon-neuron design: a dynamical systems approach. IEEE Trans. Circuits Syst. I Regul. Pap. 58, 1034–1043 (2011).

Ohno, T. et al. Short-term plasticity and long-term potentiation mimicked in single inorganic synapses. Nat. Mater. 10, 591–595 (2011).

La Barbera, S., Vuillaume, D. & Alibart, F. Filamentary switching: synaptic plasticity through device volatility. ACS Nano 9, 941–949 (2015).

Stoliar, P. et al. A leaky-integrate-and-fire neuron analog realized with a mott insulator. Adv. Funct. Mater. 27, 1604740 (2017).

Valle, Jdel et al. Subthreshold firing in Mott nanodevices. Nature 569, 388–392 (2019).

Pickett, M. D., Medeiros-Ribeiro, G. & Williams, R. S. A scalable neuristor built with Mott memristors. Nat. Mater. 12, 114–117 (2013).

Kumar, S., Strachan, J. P. & Williams, R. S. Chaotic dynamics in nanoscale NbO2 Mott memristors for analogue computing. Nature 548, 318–321 (2017).

Parihar, A., Shukla, N., Jerry, M., Datta, S. & Raychowdhury, A. Computational paradigms using oscillatory networks based on state-transition devices. Proc. Int. Joint Conf. Neural Netw. https://doi.org/10.1109/IJCNN.2017.7966285 (2017).

Sharma, A. A., Bain, J. A. & Weldon, J. A. Phase coupling and control of oxide-based oscillators for neuromorphic computing. IEEE J. Explor. Solid State Comput. Devices Circuits 1, 58–66 (2015).

Li, S., Liu, X., Nandi, S. K., Venkatachalam, D. K. & Elliman, R. G. High-endurance megahertz electrical self-oscillation in Ti/NbOx bilayer structures. Appl. Phys. Lett. 106, 212902 (2015).

Yi, W. et al. Biological plausibility and stochasticity in scalable VO2 active memristor neurons. Nat. Commun. 9, 4661 (2018).

Fell, J. & Axmacher, N. The role of phase synchronization in memory processes. Nat. Rev. Neurosci. 12, 105–118 (2011).

Ignatov, M., Ziegler, M., Hansen, M. & Kohlstedt, H. Memristive stochastic plasticity enables mimicking of neural synchrony: memristive circuit emulates an optical illusion. Sci. Adv. 3, e1700849 (2017).

Arnaud, F. et al. Truly Innovative 28nm FDSOI technology for automotive micro-controller applications embedding 16MB phase change memory. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM.2018.8614595 (2018).

Suri, M. et al. Phase change memory as synapse for ultra-dense neuromorphic systems: application to complex visual pattern extraction. Proc. Int. Electron Devices Meeting https://doi.org/10.1109/IEDM.2011.6131488 (2011).

Boybat, I. et al. Neuromorphic computing with multi-memristive synapses. Nat. Commun. 9, 2514 (2018).

Tuma, T., Pantazi, A., Gallo, M. L., Sebastian, A. & Eleftheriou, E. Stochastic phase-change neurons. Nat. Nanotechnol. 11, 693–699 (2016).

Boyn, S. et al. Learning through ferroelectric domain dynamics in solid-state synapses. Nat. Commun. 8, 14736 (2017).

Oh, S., Hwang, H. & Yoo, I. K. Ferroelectric materials for neuromorphic computing. APL Mater. 7, 091109 (2019).

Alzate, J. G. et al. 2 MB array-level demonstration of STT-MRAM process and performance towards L4 cache applications. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM19573.2019.8993474 (2019).

Vansteenkiste, A. et al. The design and verification of MuMax3. AIP Adv. 4, 107133 (2014).

Grollier, J. et al. Neuromorphic spintronics. Nat. Electron. https://doi.org/10.1038/s41928-019-0360-9 (2020).

Torrejon, J. et al. Neuromorphic computing with nanoscale spintronic oscillators. Nature 547, 428–431 (2017).

Borders, W. A. et al. Integer factorization using stochastic magnetic tunnel junctions. Nature 573, 390–393 (2019).

Burgt, Yvande et al. A non-volatile organic electrochemical device as a low-voltage artificial synapse for neuromorphic computing. Nat. Mater. 16, 414–418 (2017).

Pecqueur, S. et al. Neuromorphic time-dependent pattern classification with organic electrochemical transistor arrays. Adv. Electron. Mater. 4, 1800166 (2018).

Fon, W. et al. Complex dynamical networks constructed with fully controllable nonlinear nanomechanical oscillators. Nano Lett. 17, 5977–5983 (2017).

Coulombe, J. C., York, M. C. A. & Sylvestre, J. Computing with networks of nonlinear mechanical oscillators. PLoS ONE 12, e0178663 (2017).

Likharev, K. K. & Semenov, V. K. RSFQ logic/memory family: a new Josephson-junction technology for sub-terahertz-clock-frequency digital systems. IEEE Trans. Appl. Supercond. 1, 3–28 (1991).

Russek, S. E. et al. Stochastic single flux quantum neuromorphic computing using magnetically tunable Josephson junctions. Proc. IEEE Int. Conf. Reboot. Comput. https://doi.org/10.1109/ICRC.2016.7738712 (2016).

Schneider, M. L. et al. Ultralow power artificial synapses using nanotextured magnetic Josephson junctions. Sci. Adv. 4, e1701329 (2018).

Wang, M. et al. Robust memristors based on layered two-dimensional materials. Nat. Electron. 1, 130–136 (2018).

Shi, Y. et al. Electronic synapses made of layered two-dimensional materials. Nat. Electron. 1, 458–465 (2018).

Chaudhuri, R. & Fiete, I. Computational principles of memory. Nat. Neurosci. 19, 394–403 (2016).

Romeira, B., Avó, R., Figueiredo, J. M. L., Barland, S. & Javaloyes, J. Regenerative memory in time-delayed neuromorphic photonic resonators. Sci. Rep. 6, 1–12 (2016).

Appeltant, L. et al. Information processing using a single dynamical node as complex system. Nat. Commun. 2, 468 (2011).

Larger, L. et al. High-speed photonic reservoir computing using a time-delay-based architecture: million words per second classification. Phys. Rev. X 7, 011015 (2017).

Antonik, P., Haelterman, M. & Massar, S. Brain-inspired photonic signal processor for generating periodic patterns and emulating chaotic systems. Phys. Rev. Appl. 7, 054014 (2017).

Antonik, P., Marsal, N., Brunner, D. & Rontani, D. Human action recognition with a large-scale brain-inspired photonic computer. Nat. Mach. Intell. 1, 530–537 (2019).

Soudry, D., Castro, D. D., Gal, A., Kolodny, A. & Kvatinsky, S. Memristor-based multilayer neural networks with online gradient descent training. IEEE Trans. Neural Netw. Learn. Syst. 26, 2408–2421 (2015).

Yu, S. Neuro-inspired computing with emerging nonvolatile memorys. Proc. IEEE 106, 260–285 (2018).

Lastras-Montaño, M. A. & Cheng, K.-T. Resistive random-access memory based on ratioed memristors. Nat. Electron. 1, 466–472 (2018).

Shi, Y. et al. Adaptive quantization as a device-algorithm co-design approach to improve the performance of in-memory unsupervised learning with SNNs. IEEE Trans. Electron. Devices 66, 1722–1728 (2019).

Hirtzlin, T. et al. Outstanding bit error tolerance of resistive ram-based binarized neural networks. Proc. IEEE Int. Conf. Artificial Intell. Circuits Systems https://doi.org/10.1109/AICAS.2019.8771544 (2019).

Lin, X., Zhao, C. & Pan, W. Towards accurate binary convolutional neural network. Advances Neural Inform. Process. Systems 30, 345–353 (2017).

Penkovsky, B. et al. In-memory resistive ram implementation of binarized neural networks for medical applications. Proc. IEEE Process. Design Automat. Test Europe Conf. https://doi.org/10.23919/DATE48585.2020.9116439 (2020).

Hubara, I., Courbariaux, M., Soudry, D., El-Yaniv, R. & Bengio, Y. Binarized neural networks. Advances Neural Inform. Process. Systems 29, 4107–4115 (2016).

Rastegari, M., Ordonez, V., Redmon, J. & Farhadi, A. XNOR-net: ImageNet classification using binary convolutional neural networks. Comput. Vision 4, 525–542 (2016).

Hirtzlin, T. et al. Hybrid analog-digital learning with differential RRAM synapses. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM19573.2019.8993555 (2019).

Shi, Y. et al. Neuroinspired unsupervised learning and pruning with subquantum CBRAM arrays. Nat. Commun. 9, 5312 (2018).

Shi, Y., Nguyen, L., Oh, S., Liu, X. & Kuzum, D. A soft-pruning method applied during training of spiking neural networks for in-memory computing applications. Front. Neurosci. 13, 405 (2019).

Ernoult, M., Grollier, J. & Querlioz, D. Using memristors for robust local learning of hardware restricted Boltzmann machines. Sci. Rep. 9, 1851 (2019).

Ishii, M. et al. On-chip trainable 1.4M 6T2R PCM synaptic array with 1.6K stochastic LIF neurons for spiking RBM. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM19573.2019.8993466 (2019).

Querlioz, D., Bichler, O., Dollfus, P. & Gamrat, C. Immunity to device variations in a spiking neural network with memristive nanodevices. IEEE Trans. Nanotechnol. 12, 288–295 (2013).

Bill, J. & Legenstein, R. A compound memristive synapse model for statistical learning through STDP in spiking neural networks. Neuromorphic Eng. 8, 412 (2014).

Querlioz, D., Bichler, O., Vincent, A. F. & Gamrat, C. Bioinspired programming of memory devices for implementing an inference engine. Proc. IEEE 103, 1398–1416 (2015).

Bi, G.-Q. & Poo, M.-M. Synaptic modification by correlated activity: Hebb’s postulate revisited. Annu. Rev. Neurosci. 24, 139–166 (2001).

Jo, S. H. et al. Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 10, 1297–1301 (2010).

Kim, S. et al. Experimental demonstration of a second-order memristor and its ability to biorealistically implement synaptic plasticity. Nano Lett. 15, 2203–2211 (2015).

Barbera, S. L., Vincent, A. F., Vuillaume, D., Querlioz, D. & Alibart, F. Interplay of multiple synaptic plasticity features in filamentary memristive devices for neuromorphic computing. Sci. Rep. 6, 39216 (2016).

Serb, A. et al. Unsupervised learning in probabilistic neural networks with multi-state metal-oxide memristive synapses. Nat. Commun. 7, 12611 (2016).

Pedretti, G. et al. Memristive neural network for on-line learning and tracking with brain-inspired spike timing dependent plasticity. Sci. Rep. 7, 5288 (2017).

Srinivasan, G. & Roy, K. ReStoCNet: residual stochastic binary convolutional spiking neural network for memory-efficient neuromorphic computing. Front. Neurosci. 13, 189 (2019).

Mozafari, M., Kheradpisheh, S. R., Masquelier, T., Nowzari-Dalini, A. & Ganjtabesh, M. First-spike-based visual categorization using reward-modulated STDP. IEEE Trans. Neural Netw. Learn. Syst. 29, 6178–6190 (2018).

Mizrahi, A. et al. Controlling the phase locking of stochastic magnetic bits for ultra-low power computation. Sci. Rep. 6, 30535 (2016).

Dalgaty, T., Castellani, N., Querlioz, D. & Vianello, E. In-situ learning harnessing intrinsic resistive memory variability through Markov chain Monte Carlo sampling. Preprint at https://arxiv.org/abs/2001.11426 (2020).

Pinna, D. et al. Skyrmion gas manipulation for probabilistic computing. Phys. Rev. Appl. 9, 064018 (2018).

Mizrahi, A. et al. Neural-like computing with populations of superparamagnetic basis functions. Nat. Commun. 9, 1533 (2018).

Romera, M. et al. Vowel recognition with four coupled spin-torque nano-oscillators. Nature 563, 230–234 (2018).

Wang, Z. et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 1, 137–145 (2018).

Türel, Ö., Lee, J. H., Ma, X. & Likharev, K. K. Neuromorphic architectures for nanoelectronic circuits. Int. J. Circ. Theor. Appl. 32, 277–302 (2004).

Demis, E. C. et al. Atomic switch networks — nanoarchitectonic design of a complex system for natural computing. Nanotechnology 26, 204003 (2015).

Neckar, A. et al. Braindrop: a mixed-signal neuromorphic architecture with a dynamical systems-based programming model. Proc. IEEE 107, 144–164 (2019).

Fujii, K. & Nakajima, K. Harnessing disordered-ensemble quantum dynamics for machine learning. Phys. Rev. Appl. 8, 024030 (2017).

Yamamoto, Y. et al. Coherent Ising machines — optical neural networks operating at the quantum limit. npj Quantum Inf. 3, 1–15 (2017).

Tacchino, F., Macchiavello, C., Gerace, D. & Bajoni, D. An artificial neuron implemented on an actual quantum processor. npj Quantum Inf. 5, 1–8 (2019).

Mochida, R. et al. A 4M synapses integrated analog ReRAM based 66.5 TOPS/W neural-network processor with cell current controlled writing and flexible network architecture. Proc. IEEE Symp. VLSI Technology https://doi.org/10.1109/VLSIT.2018.8510676 (2018).

Ishii, M. et al. On-chip trainable 1.4M 6T2R PCM synaptic array with 1.6K stochastic LIF neurons for spiking RBM. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM19573.2019.8993466 (2019).

Liu, Q. et al. A fully integrated analog ReRAM based 78.4TOPS/W compute-in-memory chip with fully parallel MAC computing. Proc. IEEE Int. Solid-State Circuits Conf. https://doi.org/10.1109/ISSCC19947.2020.9062953 (2020).

Golonzka, O. et al. Non-volatile RRAM embedded into 22FFL FinFET technology. Proc. Symp. VLSI Technology https://doi.org/10.23919/VLSIT.2019.8776570 (2019).

Golonzka, O. et al. MRAM as embedded non-volatile memory solution for 22FFL FinFET technology. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM.2018.8614620 (2018).

Ambrogio, S. et al. Reducing the impact of phase-change memory conductance drift on the inference of large-scale hardware neural networks. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM19573.2019.8993482 (2019).

Chen, P.-Y., Peng, X. & Yu, S. NeuroSim+: an integrated device-to-algorithm framework for benchmarking synaptic devices and array architectures. Proc. IEEE Int. Electron Devices Meeting https://doi.org/10.1109/IEDM.2017.8268337 (2017).

Dally, W. J. et al. Hardware-enabled artificial intelligence. Proc. IEEE Symp. VLSI Circuits https://doi.org/10.1109/VLSIC.2018.8502368 (2018).

Caulfield, H. J. & Dolev, S. Why future supercomputing requires optics. Nat. Photon. 4, 261–263 (2010).

Tucker, R. S. The role of optics in computing. Nat. Photon. 4, 405 (2010).

Attwell, D. & Laughlin, S. B. An energy budget for signaling in the grey matter of the brain. J. Cereb. Blood Flow Metab. 21, 1133–1145 (2001).

Strubell, E., Ganesh, A. & McCallum, A. Energy and policy considerations for modern deep learning research. AAAI 34, 13693–13696 (2019).

Nvidia AI. BERT meets GPUs. Medium https://medium.com/future-vision/bert-meets-gpus-403d3fbed848 (2020).

Schneidman, E., Freedman, B. & Segev, I. Ion channel stochasticity may be critical in determining the reliability and precision of spike timing. Neural Comput. 10, 1679–1703 (1998).

Branco, T., Staras, K., Darcy, K. J. & Goda, Y. Local dendritic activity sets release probability at hippocampal synapses. Neuron 59, 475–485 (2008).

Harris, J. J., Jolivet, R., Engl, E. & Attwell, D. Energy-efficient information transfer by visual pathway synapses. Curr. Biol. 25, 3151–3160 (2015).

Acknowledgements

This work was supported as part of the Q-MEEN-C, an Energy Frontier Research Center funded by the US Department of Energy (DOE), Office of Science, Basic Energy Sciences (BES), under award DE-SC0019273, and by the European Research Council (ERC) under grants bioSPINspired (682955) and NANOINFER (715872). A.M. received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement number 824103 (NEUROTECH).

Author information

Contributions

All authors wrote the Perspective article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information

Nature Reviews Physics thanks Wei Lu and the other, anonymous, reviewers for their contribution to the peer review of this work.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Glossary

- Astrocytes: Cells in the brain that assist neurons by regulating blood flow and metabolism. Evidence also points to a role in communication and information processing.

- Axons: Nerve fibres that conduct action potentials away from the soma to other neurons.

- Boltzmann machines: Stochastic Hopfield networks that use a Boltzmann distribution, borrowed from statistical physics, in their sampling function.

- Dendrites: Branched extensions of neurons that conduct signals received from other neurons towards the soma.

- Fan-out: Typical number of connections spreading from a given point in a circuit. In the brain, a neuron is connected to about 10,000 others, that is, it has a fan-out of about 10,000.

- Hopfield networks: Type of recurrent neural network (a neural network containing recurrent loops) in which neurons function as binary threshold nodes.

- Kerr effect: Change in the refractive index of a material in response to an applied electric field, proportional to the square of the field amplitude.

- Modified National Institute of Standards and Technology database (MNIST): Dataset of 28 × 28 pixel images of handwritten digits, widely used as a benchmark for image classification.

- Recurrent loops: Connections from neurons to themselves or to neurons in preceding layers (that is, on the input side) of the network. These loops are key for processing time-varying inputs.

- Reservoir computing: Type of neural network in which an assembly of neurons, the reservoir, has fixed random recurrent connections, and only the connections from the reservoir to the output are trained.

- Somas: Cell bodies of neurons, containing the nucleus. They are considered a key processing part of the neuron.

- Spatial light modulators: Components, for example based on liquid crystals, used in optical computing to impose a spatially varying modulation on a beam of light.

- Spikes: Short peaks of electrical potential at the membrane of a neuron, used to encode and communicate information. Also known as action potentials.

- Spintronic: Spintronics is the field of study of systems in which information is encoded using the spin, and hence the magnetic moment, of electrons. The name is a contraction of ‘spin’ and ‘electronics’.
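
The Hopfield network entry above describes a recurrent network whose neurons act as binary threshold nodes. As a minimal illustrative sketch (not the authors' code), the Python below stores two ±1 patterns using the Hebbian rule, which is one common storage choice that the glossary does not specify, and lets the network relax from a corrupted input. The function names, network size and patterns are hypothetical.

```python
import random

def train_hebbian(patterns):
    """Hebbian storage (an assumed rule): w_ij = (1/P) * sum_p x_i^p x_j^p, w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w

def energy(w, s):
    """Hopfield energy E = -1/2 sum_ij w_ij s_i s_j; asynchronous updates never increase it."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, state, sweeps=5, seed=0):
    """Asynchronous dynamics: each binary threshold node takes the sign of its local field."""
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s

# Two orthogonal +/-1 patterns stored in a hypothetical 8-neuron network.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hebbian([p1, p2])

noisy = list(p1)
noisy[0] = -noisy[0]                      # corrupt one bit of p1
recovered = recall(w, noisy)
print(recovered == p1)                    # True: the network relaxes to the stored pattern
print(energy(w, p1), energy(w, noisy))    # -12.0 -6.0: the stored pattern is lower in energy
```

The stored patterns sit at minima of the energy function, so recall amounts to the network descending that landscape from the corrupted starting point.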
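
The Boltzmann machine entry can be made concrete in the same spirit. The sketch below replaces the deterministic threshold update of a Hopfield network with a stochastic one (Gibbs sampling). It then checks that the sampled state frequencies approach the Boltzmann distribution P(s) ∝ exp(-E(s)/T), which for a network this small can be computed exactly by enumeration. The sketch assumes ±1 units (some formulations use 0/1), symmetric couplings with zero diagonal, and illustrative weights and biases. All function names are hypothetical.

```python
import itertools
import math
import random

def energy(w, b, s):
    """E(s) = -1/2 sum_ij w_ij s_i s_j - sum_i b_i s_i for units s_i in {-1, +1}."""
    n = len(s)
    pair = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))
    return -0.5 * pair - sum(b[i] * s[i] for i in range(n))

def boltzmann_distribution(w, b, temperature=1.0):
    """Exact P(s) = exp(-E(s)/T) / Z by enumerating all 2^n states (small n only)."""
    states = list(itertools.product([-1, 1], repeat=len(b)))
    weights = [math.exp(-energy(w, b, s) / temperature) for s in states]
    z = sum(weights)
    return {s: wt / z for s, wt in zip(states, weights)}

def gibbs_sample(w, b, n_sweeps, temperature=1.0, burn_in=1000, seed=0):
    """Stochastic Hopfield dynamics: unit i is set to +1 with probability
    1 / (1 + exp(-2 h_i / T)), where h_i = sum_{j != i} w_ij s_j + b_i."""
    rng = random.Random(seed)
    n = len(b)
    s = [rng.choice([-1, 1]) for _ in range(n)]
    counts = {}
    for sweep in range(burn_in + n_sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n) if j != i) + b[i]
            p_up = 1.0 / (1.0 + math.exp(-2.0 * h / temperature))
            s[i] = 1 if rng.random() < p_up else -1
        if sweep >= burn_in:              # record one state per sweep after burn-in
            key = tuple(s)
            counts[key] = counts.get(key, 0) + 1
    return {k: c / n_sweeps for k, c in counts.items()}

# Illustrative 3-unit machine: symmetric couplings, zero diagonal.
w = [[0.0, 1.0, -0.5],
     [1.0, 0.0, 0.5],
     [-0.5, 0.5, 0.0]]
b = [0.2, -0.1, 0.0]

exact = boltzmann_distribution(w, b)
empirical = gibbs_sample(w, b, n_sweeps=50000)
worst = max(abs(exact[s] - empirical.get(s, 0.0)) for s in exact)
print(f"largest deviation from the Boltzmann distribution: {worst:.3f}")
```

Lowering `temperature` concentrates the samples on low-energy states. In the limit of zero temperature, the update reduces to the deterministic threshold rule of a Hopfield network.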
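
The reservoir computing entry can be illustrated with an echo state network. This is one common form of reservoir computer; the glossary does not name a specific model. In this hypothetical sketch, the recurrent and input weights are drawn at random and then frozen, and only the linear readout is fitted. The readout is trained by ridge regression on a toy task: predicting the next sample of a sine wave. The reservoir size, scalings, ridge parameter and task are assumptions chosen for illustration. Only the standard library is used, so the small linear solve is written out by hand.

```python
import math
import random

def make_reservoir(n, seed=0, row_norm=0.9, input_scale=0.5):
    """Fixed random weights W and W_in (never trained). Scaling W so its largest
    absolute row sum is below 1 makes the tanh update a contraction, a sufficient
    condition for the echo state property."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    largest = max(sum(abs(v) for v in row) for row in w)
    w = [[v * row_norm / largest for v in row] for row in w]
    w_in = [rng.uniform(-input_scale, input_scale) for _ in range(n)]
    return w, w_in

def run_reservoir(w, w_in, inputs):
    """x(t) = tanh(W x(t-1) + W_in u(t)); returns the state trajectory."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(sum(w[i][j] * x[j] for j in range(n)) + w_in[i] * u) for i in range(n)]
        states.append(x)
    return states

def solve(a, y):
    """Solve a x = y by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [y[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def train_readout(features, targets, ridge=1e-6):
    """Ridge regression for the output weights only: (X^T X + ridge*I) w_out = X^T y."""
    d = len(features[0])
    a = [[sum(f[i] * f[j] for f in features) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    rhs = [sum(f[i] * t for f, t in zip(features, targets)) for i in range(d)]
    return solve(a, rhs)

def nmse(preds, ys):
    """Mean squared error normalized by the variance of the targets."""
    mean_y = sum(ys) / len(ys)
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / sum((y - mean_y) ** 2 for y in ys)

# Toy task: one-step-ahead prediction of a sine wave, u(t) -> u(t+1).
signal = [math.sin(0.2 * t) for t in range(451)]
inputs, targets = signal[:-1], signal[1:]
w, w_in = make_reservoir(20)
states = run_reservoir(w, w_in, inputs)

washout = 50                                    # discard the initial transient
feats = [[1.0] + x for x in states[washout:]]   # bias + reservoir state
ys = targets[washout:]
split = 300                                     # train on the first part, test on the rest
w_out = train_readout(feats[:split], ys[:split])
preds = [sum(a * b for a, b in zip(w_out, f)) for f in feats[split:]]
test_error = nmse(preds, ys[split:])
print(f"test NMSE: {test_error:.2e}")
```

Because only the readout is trained, learning reduces to a single linear least-squares problem. This is one reason the approach suits physical systems whose internal couplings are hard to tune.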
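
In equation form, the Kerr effect entry is commonly written as follows. This uses standard textbook notation rather than notation taken from the article. For an applied field of amplitude E, with λ the wavelength of the light and K the Kerr constant of the material:

```latex
\Delta n = \lambda K E^{2}
```

When the field is that of an intense light beam itself (the optical Kerr effect), the same quadratic dependence is usually expressed through the intensity I ∝ |E|², as n(I) = n_0 + n_2 I. Here n_0 is the linear refractive index and n_2 is the nonlinear index coefficient.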

About this article

Cite this article

Marković, D., Mizrahi, A., Querlioz, D. et al. Physics for neuromorphic computing. Nat Rev Phys 2, 499–510 (2020). https://doi.org/10.1038/s42254-020-0208-2

DOI: https://doi.org/10.1038/s42254-020-0208-2
