Abstract

Ultrafast diffraction imaging is a powerful tool to retrieve the geometric structure of gas-phase molecules with combined picometre spatial and attosecond temporal resolution. However, structural retrieval becomes progressively difficult with increasing structural complexity, given that a global extremum must be found in a multi-dimensional solution space. Worse, pre-calculating many thousands of molecular configurations for all orientations becomes simply intractable. As a remedy, here, we propose a machine learning algorithm with a convolutional neural network which can be trained with a limited set of molecular configurations. We demonstrate structural retrieval of a complex and large molecule, Fenchone (C10H16O), from laser-induced electron diffraction (LIED) data without fitting algorithms or ab initio calculations. Retrieval of such a large molecular structure is not possible with other variants of LIED or ultrafast electron diffraction. Combining electron diffraction with machine learning presents new opportunities to image complex and larger molecules in static and time-resolved studies.

Similar content being viewed by others

Introduction

The retrieval of complex molecular structures with electron or X-ray diffraction is challenging due to the multi-dimensional solution space in which a global extremum has to be found1 to extract structural information from the diffraction data. Simple convergence strategies are readily implemented for small molecules, but such methods quickly become intractable for complex systems. For example, relativistic 3.7 MeV ultrafast electron diffraction (UED) time resolved the ring-opening reaction of 1,3-cyclohexadiene, but the method could not identify the complex transient structure beyond 3 Å, which is expected to arise from three possible isomers2. X-ray diffraction imaging with free-electron laser pulses requires a careful balance between sufficient beam brightness and avoiding structural damage by the strong X-ray pulses3,4,5. Such issues severely limit the number of studies and necessitate ab initio calculations6,7,8. Laser-induced electron diffraction (LIED)1,9,10,11,12,13,14,15,16,17,18,19,20,21,22 is a powerful laser-based UED method that images even singular molecular structures with combined sub-atomic picometer and femtosecond-to-attosecond spatiotemporal resolution. Here the challenge of structural retrieval arises from the strong-field nature of self-imaging the structure1,16,17,18,20,23,24 by recolliding a laser-driven attosecond wavepacket after photo ionization. In LIED, an electron wave packet is: (i) tunnel ionized from the parent molecule in the presence of strong laser field; (ii) accelerated and driven back by the oscillating electric field of the laser; and (iii) rescattered against the parent ion’s atomic cores. The geometrical information of the target nuclei is encoded in the detected momentum distribution of rescattered electrons. LIED often uses the quantitative rescattering (QRS) theory13,14 to retrieve the structure of simple molecular systems. The QRS theory enables the extraction of field-free elastic electron scattering cross-sections from electron rescattering measurements performed under the presence of a strong laser field. The molecular structure is embedded onto the momentum distribution of the highly energetic rescattering electrons. But for large and complex molecular structures, the QRS retrieval quickly becomes intractable due to the difficulty in identifying a unique solution in the multi-dimensional solution space. A somewhat remedy is found by reducing the dimensionality of the problem with FT-LIED1,17,24,25. Nevertheless, a multi-peak fitting procedure is needed to identify bond distances. Such an approach becomes ambiguous when the radial distribution function does not exhibit clear and separable structures. We note that similar problems arise in essentially all implementations of structural imaging and many retrieval algorithms severely limit the size of the molecular system under investigation.

To overcome these limitations, we employ a machine learning (ML) algorithm for LIED (ML-LIED) to accurately extract the three-dimensional (3D) molecular structure of larger and more complex molecules. The ML-LIED method avoids the use of chi-square fitting algorithms, multi-peak identification procedures, and ab initio calculations. The method draws from the interpolation and learning capabilities of ML which significantly reduces the required molecular configurations to train the system for a much larger solution space. We demonstrate our method’s capability by extracting a single accurate molecular structure with sub-atomic picometer spatial resolution on the symmetric top linear system acetylene (C2H2), an asymmetric top 2D system, carbon disulfide (CS2) and a complex large 3D system, (+)-fenchone (C10H16O). For the specific problem of LIED, we employ ML with a convolutional neural network (CNN). Comparing with the commonly used artificial neural networks such as a fully connected neural network26 or recurrent neural network (RNN)27, the CNN is well suited for problems in image recognition to identify subtle features from an image at different levels of complexity similar to a human brain28,29. ML algorithms are usually trained either with supervised or unsupervised learning. Under the supervised learning scenario, both the classification and regression methods are commonly chosen. The classification method is generally used to predict a specific category of data, such as in facial recognition29 and crystal determination30. Here, we implement the regression method to quantitatively extract the structural parameters that identify the molecular structure. The molecular structure is found via the relationship between the molecular configuration and the molecular interference signal through the corresponding two-dimensional differential cross-sections (2D-DCSs) from its database. Using 2D-DCSs as an input, our ML algorithm takes full advantage of the complete molecular interference signal rather than only considering a one-dimensional (1D) portion of the interference signal as is typically used in other methods16,17. Moreover, the ML CNN is capable to interpolate between samples in the database of precalculated structures to provide a meaningful configuration to measured data. This feature of ML CNN is especially important to identify large and complex molecular structures, since it is simply impossible to calculate all possible molecular structures with sufficient structural resolution and due to the many degrees of freedom. We show a sufficiently reduced database suffices, which only considers (i) changes in a few important groups of atoms and (ii) a molecule-wide global change, allowing the algorithm to learn the relationship between the molecular structures and corresponding interference signals in our input database. Thus, our ML model, together with the CNN algorithm and regression method, provides a new way forward to identify the structure of large complex molecules and transient structures with similar geometric configurations in time-resolved pump-probe measurements.

This paper starts with a description of the ML scheme for LIED, followed by details on our ML model’s training with a CNN and its subsequent validation. We then predict the molecular structure of C2H2 and CS2 using the ML algorithm and experimental LIED data, followed by a comparison of the ML-predicted molecular structures to those retrieved with the QRS method. We then demonstrate our ML model’s capability to accurately predict the 3D molecular structure of (+)-fenchone, which failed to retrieve with FT-LIED and QRS-LIED. Lastly, we discuss the advantages, limitations, and many applications of the ML framework in retrieving large complex gas-phase molecular structures in the context of structural retrieval and time-resolved imaging of chemical reactions.

Results

ML scheme for LIED

We employ machine learning (ML as an image recognition system to predict the measured molecular structure, a schematic of which is shown in Fig. 1. We start by generating a database containing the 3D Cartesian coordinate of each atom in thousands of different molecular structures (as labels), spanning a coarse array of possible structures. For each structure, we generate the corresponding 2D-DCS map (stored as images in the database) by calculating the DCS of the elastic scattering of electrons on atoms in the molecule using the independent atomic model (IAM)14,31,32. The 2D-DCSs are calculated as a function of the electron’s return energy (i.e., energy at the instance of rescattering) and rescattering angle (i.e., the change in angle caused by rescattering). The measured 2D-DCS signal, σtot, is comprised of two components: (i) the incoherent sum of atomic scatterings, σatom, and (ii) a modulating coherent molecular scattering signal, σcoherent, which is approximately one order lower than σatom, i.e. σtot = σatom + σcoherent. Here, the σatom signal contributes as a background signal to our total scattering signal and it depends on the number and types of atoms but it is independent of the molecular structure. The two-center σcoherent signal is dependent on the internuclear distance between two atoms. Next, we subtract the slowly varying background from the 2D-DCS maps of the ML database33,34 and from the measured data to enhance the DCS. We note that the exact functional form for the subtraction is irrelevant as it is applied to database entries and data. For simplicity, one can use the equilibrium structure for a known molecular system. This procedure leads to clearly visible fringe patterns in the resulting difference 2D-DCS maps. We show them for the two small molecules in Fig. 1b, c. These fringe patterns are unique to the individual molecular configuration, making it significantly easier for the machine algorithm and its neural network to find the relationship between the molecular structures and their corresponding 2D-DCS maps. The database is split into three datasets to train, validate and test the model (see Fig. 1a). The training set trains the ML model to find the relationship between the molecular structures and their corresponding 2D-DCSs. The validation set is then used to assess the accuracy of the model during the training process and to determine the hyperparameters for training the model, ensuring that the trained model is not overfitting or underfitting the validation data. After the training process, the final model’s quality and reliability are tested using the test set of molecules, which we have previously measured with LIED, C2H2 and CS2. Once the model is validated, we use the experimental 2D-DCS map as the input for our ML model to extract the molecular structure that most likely corresponds to the measured LIED signal (see Table 1).

a A database of molecular structures and their corresponding simulated 2D-DCSs are split into three sets: training, validation, and test sets. Here, the simulated 2D-DCS maps are calculated via the independent atomic model (IAM). Once the machine learning (ML) model is validated, the experimental 2D-DCS map is used as an input to predict the molecular structure that most likely contributes to the measured interference signal. b, c Exemplary simulated difference 2D-DCS maps for four structures of carbon disulfide (b) and acetylene (c) calculated from subtracting the corresponding 2D-DCS of the equilibrium molecular structure33,34. Fringe patterns are visible, enhancing the difference in the elastic scattering signal for the different molecular structures. The molecular coordinates (used as labels in ML algorithm) for C2H2 (a) and CS2 (b) molecules in Cartesian and polar coordinates, respectively.

CNN training of ML algorithm

Our ML algorithm utilizes a CNN to discriminate subtle features between the 2D-DCS maps, which are used as the algorithm’s input data. The architecture of CNN is composed of two parts: convolutional layers and a fully connected neural network. A 2D-DCS map is first passed through the convolutional layers to extract features using different convolution filters, convoluted across every source pixel of the input map. The filters provide various feature maps that possess distinct subtle features present in the 2D-DCS map, making the image recognition process more efficient (see Fig. 2a). The collection of feature maps is the output of the convolution process, subsequently used as the input of the fully connected neural network (see Fig. 2b). The neural network consists of layers, and each layer contains neurons (blue circles) comprising of different weighting factors, wi. These weighting factors across all the connected layers in the neural network ultimately determine the relationship between the 2D-DCS and the molecular structure. The collection of features maps is first flattened to a 1D array and then multiplied by each neuron’s weight in the first layer. The atomic position’s predicted value is calculated from all the weights in each neuron within all layers (see Fig. 2b). At the final layer, the predicted value is compared to the real value through the cost function (see Fig. 2c), given by

where ypre and yreal are the predicted and real values of the atom’s position, respectively. This whole procedure is iterated (from Fig. 2a–c) to minimize the difference between the predicted and real value of the atomic position and to minimize the cost function. At each iteration, the filters and weights are optimized. The new optimized weight, ωiter+1, in each iteration is calculated by subtracting the partial derivative of the cost function from the current weight, ωiter+1, as shown in Eq. (2).

a The 2D-DCS is convoluted by different filters to generate a collection of feature maps. b The collection of feature maps is first flattened into a one-dimensional array and multiplied by the weights in each neuron of all layers to predict the atomic position for each atom in the molecule. c Contour plot of the cost function for two weights (ωi and ωi+1). The blue dot represents the cost function value, and the red arrows show the direction of the gradient of the cost function. After five iterations, the cost function is minimized.

Figure 2c shows a schematic contour plot of the cost function with respect to the two weights (ωi and ωi+1). The evolution of the cost value (blue dots) after five iterations is shown. The change in gradient of the cost function is also given by the red arrows, with the arrow length illustrating the step size taken at each iteration, called the learning rate (α). The weights are randomly initialized at the beginning. After five iterations, we observe a decrease in the cost function, which indicates that the model is optimized and that the predicted values are close to the real values. In reality, our CNN model calculates a cost function based on thousands of parameters (e.g., weights, biases, filters) instead of only two weights, as discussed above. At each iteration, all the parameters are simultaneously updated and optimized to minimize the cost function and the difference between predicted and real value. This capability is unique to the ML CNN and does not exist for simple regression methods or evolutionary algorithms.

Training and evaluation of ML model

We next evaluate the accuracy of the predicted molecular structure generated by our ML model during and after the training process. Training, validation, and testing sets are generated from the normalized difference DCS maps and their molecular structures (see Supplementary Note 1 and 2). We use the mean absolute error (MAE) during the training process, also known as the prediction error, to evaluate the model’s accuracy using the training and validation data. 3a shows the reduction in the MAE with an increasing number of times an entire dataset is iterated through the neural network during the model’s training, referred to as the iteration number. With increasing iteration number, the MAE converges to a constant value of ~0.016 for both the training and validation sets of data, confirming that the model is well-trained, predicting a structure very similar to that of the input. Moreover, a similar MAE for both sets of data signifies that the model does not overfit or underfit the data. Once we find a converged MAE, we use the test data to evaluate the ML’s reliability and accuracy after the training process assuming no knowledge of the input molecular structure to mimic our experimental data. Here, we use the 2D-DCSs of the test set as our input to generate the predicted structures. These predicted structures are then compared to the molecular structures from the test set of the input data. We obtain a MAE of ~0.015 using the test data (red cross in Fig. 3a), which is in good agreement with the converged MAE value (~0.016) achieved at the end of the training process using the training and validation datasets. This MAE value confirms that our final ML model is accurate and reliable. We then predict the molecular structure from our measured DCS using a normalized difference map as our ML model’s input. The normalized difference map comprises the normalized experimental 2D-DCS subtracted by the normalized theoretical 2D-DCS of the equilibrium structure33,34. The normalization process ensures that the experimental and theoretical DCS values are on the same order of magnitude. We also avoid the need for a fitting factor as is typically used in QRS-LIED (see Supplementary Note 3). The molecular structure predicted by our ML model and the experimental DCS input is then used to calculate its corresponding theoretical 2D-DCS. We then evaluate the correlation between the normalized theoretical and experimental 2D-DCS using the Pearson correlation (see Supplementary Note 4). We obtain a Pearson correlation value of 0.94 (see Fig. 3b), which indicates that both DCSs are strongly correlated and that the predicted molecular structure is accurate and reliable.

a Mean absolute error (MAE) obtained for each iteration number that the neural network convolutes the training and validation sets of our simulated data. The MAE obtained with the test data is indicated by the red cross. b Correlation between normalized experimental and theoretical two-dimensional differential cross-section (2D-DCS), reshaped into a 1D array. A linear fit (blue dashed line) and the 90% confidence interval (blue shaded area) using the bootstrapping method are applied to the correlated scatters. A Pearson correlation coefficient of 0.94 is obtained, indicating a strong correlation between the two data. c Predicted structural parameters obtained by machine learning.

Extracting measured molecular structure with ML

As a first step, we test the ML framework by applying it to the small linear symmetric top and non-linear asymmetric top molecules, whose structure we have previously determined with LIED. We extract the molecular structure of CS2 and C2H2 from the experimental 2D-DCS using our ML model trained on five separate datasets. Figure 3c shows the average structural parameters of the so determined structure for C2H2 (CS2) of RCC = 1.23 ± 0.11 Å and RCH = 1.08 ± 0.03 Å (RCS = 1.87 ± 0.14 Å and θSCS = 104.7 ± 6.4°). These values are in excellent agreement with the values retrieved by the QRS model; see the comparison in Tab. 1. After training our ML model on five separate datasets, the predicted structures vary slightly. This is because the neural network during the training process uses a random number generator to select the input–target pairs of data. Thus, each neuron’s corresponding initial weights and biases are also randomly chosen, leading to slightly different starting conditions in the CNN training of the ML model. Using random initial conditions for the neurons ensures that systematic errors are minimized. In addition, we generated predicted structures from the ML model, trained on five separate occasions, to ensure the reliability of the predicted values. We find that the uncertainty in our ML-predicted structural parameters arise from two contributions: the predicted model error and the experimental statistical error. The predicted model error is obtained by calculating the MAE between the absolute and calculated value of the structural parameter using the test set as described earlier. The experimental error arises from the standard error in our experimental DCS, following a Poissonian statistical distribution (see Supplementary Note 5). We include the experimental error into the predicted values using a modified experimental DCS that contains the extrema of the experimental error. Then, we study the variance in the predicted structure that includes the experimental error relative to the original unmodified experimental DCS. This procedure leads to extracted structures with picometer accuracy.

Extracting complex 3D molecular structure

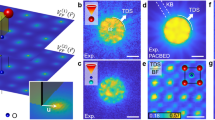

With the successful retrieval of the smaller 1D and 2D molecules, we put our ML framework to the test to extract the structure of gas-phase (+)-fenchone (C10H16O; 27 atoms), measured with LIED. For such a large and complex 3D molecule, the ML has the decisive advantage to interpolate and learn between the course grids of precalculated structures and to take into account a manifold of degrees of freedom in the solution space. Thus, we can use a sufficiently reduced database that only considers (i) four groups of atoms of the molecule (see inset of Fig. 4a) and (ii) a molecule-wide global change in structure. Next, we train our ML model to find the relationship between the molecular structures and corresponding 2D-DCSs with such reduced database. This approach drastically minimizes computational time. Figure 4a shows the MAE achieved at each iteration number using the neural network, convolved with training and validation sets of simulated data. This achieves a MAE of 0.02. Consequently, Fig. 4b shows a strong correlation between the experimental and predicted theoretical 2D-DCS with a Pearson correlation coefficient of 0.94. As example, Fig. 4c shows the extracted (x, y, z) 3D Cartesian coordinates of seven atoms of (+)-fenchone (green circles). We find that the ML-LIED-measured (+)-fenchone structure shows only slight deviations from the equilibrium ground-state neutral molecular structure (red triangles) which are involuntarily caused by the presence of the LIED laser field. The degree of uncertainty of the predicted 3D positions (green circles) are shown on top of the predicted 3D molecular structure in Fig. 4d. This shows that ML-LIED is capable to extract a complex 3D molecular structure such as (+)-fenchone.

a Mean absolute error (MAE) at each iteration number using a neural network convoluted with the training and validation sets of simulated data. The MAE obtained with the test data is indicated by the red cross. The inset shows a schematic of the four groups of the (+)-fenchone molecule which the ML algorithm has been trained on. b Correlation between normalized experimental and theoretical 2D-DCS similar to Fig. 3b. A linear fit (dashed lined) and the 90% confidence interval (blued shaded area) using the bootstrapping method are applied to the correlated scatters. A Pearson correlation coefficient of 0.94 is obtained, indicating that a strong correlation between the two data exists. c The predicted (x, y, z) 3D positions for seven atoms in (+)-fenchone using ML model (green circles), where the error bars are derived from the predicted model error plus the experimental statistical error. The equilibrium ground-state 3D positions of neutral (+)-fenchone are shown (red triangles). d Schematic of the predicted 3D molecular structure. The green circles indicate the area of uncertainty.

Discussion

This work establishes a ML-based framework to overcome present limitations in structural retrieval from diffraction measurements. The problem with present methods is the need to compare a measured diffraction pattern with a precalculated structure and the extremely poor scaling of pattern matching methods with the quickly increasing number of degrees of freedom of larger complex molecular structures. This is compounded by the need precalculate a very large set of molecular configurations in different orientations and with high resolution. Further, identification of such a precalculated set reduces to finding a global extremum in a multi-dimensional solution space which is a difficult inverse problem to solve. These issues are tractable for small molecular systems and for systems where dimensionality of the problem can be reduced significantly. This is however not possible for large and complex molecular structures and the total calculation time scales as n × 3N, where N is the number of atoms and n is the number of steps. To put this into perspective, it takes approximately 5 min to calculate a single 2D-DCS map for (+)-fenchone on a standard desktop computer (i3 Intel processor, 8 GB RAM). A 20-atom system with n = 5 steps will require an unrealistic 1.4 × 109 h of calculation time. Thus, it is not feasible at the present time to extract complex molecular structures via calculating all possible configurations for all degrees of freedom. To overcome the unfavorable scaling of the problem, we make use of the fact that a ML framework can simultaneously identify a large multitude of features by pattern matching on an interpolated dataset, despite having initially trained the ML framework on a coarse ensemble of structures. We showed that this permits ML-LIED to reveal the 3D location of each atom in the molecule, providing significantly more detailed structural information than any other method that relies on the identification of non-overlapping peaks in the scattering radial distribution function. Such identification is near impossible for larger and complex molecular structures due to the large number of peaks in the radial distribution that overlap due to the multitude of unresolvable two-atom combinations. The reduced computational demands for complex molecules may prove decisive to address transient structures and transition states of complex molecules. ML-LIED has the advantage that once the ML model is validated, the molecular structures can be identified for each experimental 2D-DCS map, avoiding the high computational cost of repeated chi-square fittings as in time-resolved QRS-LIED. Lastly, using 2D-DCSs as an input instead of 1D-DCSs utilizes the complete measured molecular interference signal thus maximizing confidence in the identification of the measured molecular structure. We also note that the ML framework draws from unambiguous pattern matching conditions. It is conceivable, though very unlikely, that different non-equilibrium structures may be identified as the same structure. The ML framework is amendable to be adapted and trained on the existence of a combined total interference signal from two or more molecular structures. This may allow to predict the multiple molecular structures contributing to the total measured interference signal. It should be noted that the same problem of contributions from multiple molecular structures to the measured signal can also arise in other LIED and UED methods, and it is not unique to just ML-LIED. This possibility for ML-LIED may overcome standing problems in time-resolved UED studies of isomerization or ring-opening reactions, e.g., where the molecular structure changes will lead to subtle changes in the measured 2D-DCS. Thus, the combination of LIED with a ML framework and CNN provides a powerful new opportunity to determine the structure of large molecules.

Methods

LIED data

In this work, we used experimental data for C2H216, CS220 and (+)-fenchone, which were measured with a reaction microscope35 to demonstrate our ML algorithm. We calculated the 2D-DCS of 40,000–120,000 possible molecular structures in our database using the IAM.

ML framework

We generate thousands of structures and calculated their 2D DCS as an input dataset. Our CNN contained 3 convolutional layers and 30 fully connected layers, with the first convolutional layer containing 32 filters with kernel size 5 × 5, the second layer has 32 filters with kernel size 3 × 3, and the third has 32 filters with kernel size 3 × 3. A batch size of 120 was used, and batch normalization is used to avoid overfitting. Our model was trained and validated with an iteration number of greater than 50 to generate a model that does not overfit or underfit the input data. The training of the ML model costs 1–2 h to run on the Google cloud GPU (NVIDIA® Tesla® V100). To predict a molecular structure with an experimental 2D-DCS input requires less than 1 min of calculation time.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The codes used in this study are available from the corresponding author upon reasonable request.

References

Amini, K. & Biegert, J. Chapter Three — Ultrafast electron diffraction imaging of gas-phase molecules. Adv. At. Mol. Opt. Phys. 69, 163–231 (2020).

Wolf, T. J. A. et al. The photochemical ring-opening of 1,3-cyclohexadiene imaged by ultrafast electron diffraction. Nat. Chem. 11, 504–509 (2019).

Neutze, R., Wouts, R., van der Spoel, D., Weckert, E. & Hajdu, J. Potential for biomolecular imaging with femtosecond X-ray pulses. Nature 406, 752–757 (2000).

Gaffney, K. J. & Chapman, H. N. Imaging atomic structure and dynamics with ultrafast X-ray scattering. Science 316, 1444–1448 (2007).

Quiney, H. M. & Nugent, K. A. Biomolecular imaging and electronic damage using X-ray free-electron lasers. Nat. Phys. 7, 142–146 (2011).

Küpper, J. et al. X-ray diffraction from isolated and strongly aligned gas-phase molecules with a free-electron laser. Phys. Rev. Lett. 112, 083002 (2014).

Minitti, M. P. et al. Imaging molecular motion: femtosecond X-ray scattering of an electrocyclic chemical reaction. Phys. Rev. Lett. 114, 255501 (2015).

Ruddock, J. M. et al. A deep UV trigger for ground-state ring-opening dynamics of 1,3-cyclohexadiene. Sci. Adv. 5, eaax6625 (2019).

Zuo, T., Bandrauk, A. D. & Corkum, P. B. Laser-induced electron diffraction: a new tool for probing ultrafast molecular dynamics. Chem. Phys. Lett. 259, 313–320 (1996).

Lein, M., Marangos, J. P. & Knight, P. L. Electron diffraction in above-threshold ionization of molecules. Phys. Rev. A 66, 051404 (2002).

Corkum, P. B. & Krausz, F. Attosecond science. Nat. Phys. 3, 381–387 (2007).

Meckel, M. et al. Laser-induced electron tunneling and diffraction. Science 320, 1478–1482 (2008).

Chen, Z., Le, A.-T., Morishita, T. & Lin, C. D. Quantitative rescattering theory for laser-induced high-energy plateau photoelectron spectra. Phys. Rev. A 79, 033409 (2009).

Lin, C. D., Le, A.-T., Chen, Z., Morishita, T. & Lucchese, R. Strong-field rescattering physics—self-imaging of a molecule by its own electrons. J. Phys. B 43, 122001 (2010).

Blaga, C. I. et al. Imaging ultrafast molecular dynamics with laser-induced electron diffraction. Nature 483, 194–197 (2012).

Pullen, M. G. et al. Imaging an aligned polyatomic molecule with laser-induced electron diffraction. Nat. Commun. 6, 7262 (2015).

Pullen, M. G. et al. Influence of orbital symmetry on diffraction imaging with rescattering electron wave packets. Nat. Commun. 7, 11922 (2016).

Wolter, B. et al. Ultrafast electron diffraction imaging of bond breaking in di-ionized acetylene. Science 354, 308–312 (2016).

Ito, Y., Carranza, R., Okunishi, M., Lucchese, R. R. & Ueda, K. Extraction of geometrical structure of ethylene molecules by laser-induced electron diffraction combined with ab initio scattering calculations. Phys. Rev. A 96, 053414 (2017).

Amini, K. et al. Imaging the Renner–Teller effect using laser-induced electron diffraction. Proc. Natl Acad. Sci. USA 116, 8173–8177 (2019).

Fuest, H. et al. Diffractive imaging of C 60 structural deformations induced by intense femtosecond midinfrared laser fields. Phys. Rev. Lett. 122, 053002 (2019).

Karamatskos, E. T. et al. Atomic-resolution imaging of carbonyl sulfide by laser-induced electron diffraction. J. Chem. Phys. 150, 244301 (2019).

Wolter, B. et al. Strong-field physics with mid-IR fields. Phys. Rev. X 5, 021034 (2015).

Liu, X. et al. Imaging an isolated water molecule using a single electron wave packet. J. Chem. Phys. 151, 024306 (2019).

Xu, J. et al. Diffraction using laser-driven broadband electron wave packets. Nat. Commun. 5, 1–6 (2014).

Sanchez-Gonzalez, A. et al. Accurate prediction of X-ray pulse properties from a free-electron laser using machine learning. Nat. Commun. 8, 15461 (2017).

Salmela, L. et al. Predicting ultrafast nonlinear dynamics in fibre optics with a recurrent neural network. Nat. Mach. Intell. 3, 344–354 (2021).

Valueva, M. V. et al. Application of the residue number system to reduce hardware costs of the convolutional neural network implementation. Math. Comput. Simulat. 177, 232–243 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 770–778 (IEEE, 2016).

Kaufmann, K. et al. Crystal symmetry determination in electron diffraction using machine learning. Science 367, 564–568 (2020).

Schäfer, L. Electron diffraction as a tool of structural chemistry. Appl. Spectrosc. 30, 123–149 (1976).

McCaffrey, P. D., Dewhurst, J. K., Rankin, D. W. H., Mawhorter, R. J. & Sharma, S. Interatomic contributions to high-energy electron-molecule scattering. J. Chem. Phys. 128, 204304 (2008).

Herzberg, G. Molecular Spectra and Molecular Structure III: Electronic Spectra and Electronic Structure of Polyatomic Molecules (D. Van Nostrand, New York 1966).

Dalton, D. R. Foundations of Organic Chemistry: Unity and Diversity of Structures, Pathways, and Reactions (John Wiley & Sons, 2020).

Ullrich, J. et al. Recoil-ion and electron momentum spectroscopy: reaction-microscopes. Rep. Prog. Phys. 66, 1463 (2003).

Acknowledgements

J.B. and group acknowledge financial support from the European Research Council for ERC Advanced Grant “TRANSFORMER” (788218), ERC Proof of Concept Grant “miniX” (840010), FET-OPEN “PETACom” (829153), FET-OPEN “OPTOlogic” (899794), Laserlab-Europe (EU-H2020 871124), MCIN for PID2020-112664GB-I00 (AttoQM); AGAUR for 2017 SGR 1639, MINECO for “Severo Ochoa” (SEV- 2015-0522), Fundació Cellex Barcelona, the CERCA Programme/Generalitat de Catalunya, and the Alexander von Humboldt Foundation for the Friedrich Wilhelm Bessel Prize. We also acknowledge Marie Sklodowska-Curie Grant Agreement 641272. X.L. and J.B. acknowledge additional financial support from China Scholarship Council.

Author information

Authors and Affiliations

Contributions

J.B. conceived and supervised the project. X.L. analyzed the data and developed the machine learning algorithm. X.L. and A.S. generated the input database. X.L., K.A., A.S., B.B., T.S., and J.B. contributed to the data interpretation and writing of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Communications Chemistry thanks Thanh-Tung Nguyen-Dang and the other, anonymous, reviewers for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, X., Amini, K., Sanchez, A. et al. Machine learning for laser-induced electron diffraction imaging of molecular structures. Commun Chem 4, 154 (2021). https://doi.org/10.1038/s42004-021-00594-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42004-021-00594-z

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.