Abstract

A rapid, cost-effective, noninvasive tool to detect and characterize neural silences can be of substantial benefit in diagnosing and treating many disorders. We propose an algorithm, SilenceMap, for uncovering the absence of electrophysiological signals, or neural silences, using noninvasive scalp electroencephalography (EEG) signals. By accounting for the contributions of different sources to the power of the recorded signals, and using a hemispheric baseline approach and a convex spectral clustering framework, SilenceMap permits rapid detection and localization of regions of silence in the brain using a relatively small amount of EEG data. SilenceMap substantially outperformed existing source localization algorithms in estimating the center-of-mass of the silence for three pediatric cortical resection patients, using fewer than 3 minutes of EEG recordings (13, 2, and 11 mm vs. 25, 62, and 53 mm), as well as for 100 different simulated regions of silence based on a real human head model (12 ± 0.7 mm vs. 54 ± 2.2 mm). SilenceMap paves the way towards accessible early diagnosis and continuous monitoring of altered physiological properties of human cortical function.

Introduction

An ongoing challenge confronting basic scientists, as well as those at the translational interface, is the ability to access a rapid and cost-effective tool to uncover mechanistic details of neural function and dysfunction. For example, identifying the presence of stroke, establishing altered neural dynamics in traumatic brain damage, and monitoring changes in neural profile in athletes on the sidelines all pose major hurdles. In this paper, using scalp electroencephalography (EEG) signals with relatively little data, we provide theoretical and empirical support for a method for the noninvasive detection of neural silences. We adopt the term silences or regions of silence to refer to the areas of brain tissue with little or no neural activity. These regions reflect ischemic, necrotic, or lesional tissue, resected tissue (e.g., after epilepsy surgery), or tumors1,2. Dynamic regions of silence also arise in cortical spreading depolarizations (CSDs), which are slowly spreading waves of silences in the cerebral cortex3,4,5.

There has been growing utilization of EEG for diagnosis and monitoring of neurological disorders such as stroke6 and concussion7. Common imaging methods for detecting brain damage, e.g., magnetic resonance imaging (MRI)8,9 or computed tomography10, are not portable, are not designed for continuous (or frequent) monitoring, are difficult to use in many emergency situations, and may not even be available at medical facilities in many countries. Yet many medical scenarios can benefit from portable, frequent/continuous monitoring of neural silences, e.g., detecting changes in tumor or lesion size/location and CSD propagation. Noninvasive scalp EEG, by contrast, is widely accessible in emergency situations and can even be deployed in the field with only a few limitations. It is easy and fast to set up, portable, and of lower cost compared with other imaging modalities. Additionally, unlike MRI, EEG can be recorded from patients with implanted metallic objects in their body, e.g., pacemakers11.

Source vs. silence localization

An ongoing challenge of EEG is source localization, the process by which the location of the underlying neural activity is determined from the scalp EEG recordings. The challenge arises primarily from three issues: (i) the underdetermined nature of the problem (few sensors, many possible locations of sources); (ii) the spatial low-pass filtering effect of the distance and the layers separating the brain and the scalp; and (iii) noise, including exogenous noise, background brain activity, as well as artifacts, e.g., heart beats, eye movements, and jaw clenching12,13. In source localization paradigms applied to neuroscience data14,15,16, e.g., in event-related potential paradigms17,18, scalp EEG signals are aggregated over event-related trials to average out background brain activity and noise, permitting the extraction of the signal activity that is consistent across trials. The localization of a region of silence poses additional challenges, of which the most important is how the background brain activity is treated: while it is usually grouped with noise in source localization (e.g., the authors of ref. 16 state: “EEG data are always contaminated by noise, e.g., exogenous noise and background brain activity”), estimating where background activity is present is of direct interest in silence localization, where the goal is to separate normal brain activity (including background activity) from abnormal silences. Because source localization ignores this distinction, as we demonstrate in our experimental results below, classical source localization techniques, e.g., multiple signal classification (MUSIC)19,20, minimum norm estimation (MNE)15,21,22,23, and standardized low-resolution brain electromagnetic tomography (sLORETA)24, even after appropriate modifications, fail to localize silences in the brain (“Methods” details our modifications to these algorithms).

To avoid averaging out the background activity, we estimate the contribution of each source to the recorded EEG across all electrodes. This contribution is measured in an average power sense, instead of the mean, thereby retaining the contributions of the background brain activity. Our silence localization algorithm, referred to as SilenceMap, estimates these contributions, and then uses tools that quantify our assumptions on the region of silence (contiguity, small size of the region of silence, and being located in only one hemisphere) to localize it. Because of this, another difference arises: silence localization can use a larger number of time points (than typical source localization). For example, 160 s of data with the sampling frequency of 512 Hz provides SilenceMap with around 81,920 data points to be used, boosting the signal-to-noise ratio (SNR) over source localization techniques, which typically rely on only a few tens of event-related trials to average over and extract the source activity that is consistent across trials.
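The sample count quoted above follows directly from the recording length and the sampling rate. The short Python sketch below reproduces it and, on synthetic zero-mean data (an assumption made purely for illustration, not the recorded EEG), shows why an average-power measure retains background activity that a plain mean cancels out. The function names are hypothetical.

```python
import random

def n_samples(duration_s, fs_hz):
    """Number of time points in a recording of the given length."""
    return int(duration_s * fs_hz)

def mean(x):
    """Arithmetic mean of the samples."""
    return sum(x) / len(x)

def average_power(x):
    """Mean of the squared samples (second moment)."""
    return sum(v * v for v in x) / len(x)

rng = random.Random(0)
n = n_samples(160, 512)  # 160 s at 512 Hz
# Synthetic zero-mean, unit-variance "background activity" (illustrative only).
background = [rng.gauss(0.0, 1.0) for _ in range(n)]

print(n)  # 81920
print(mean(background))           # close to 0: averaging removes the activity
print(average_power(background))  # close to 1: power retains it
```

With zero-mean activity the mean shrinks toward zero as more samples are used, while the average power converges to the variance of the activity, which is the quantity of interest here.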

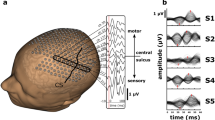

Further, we confront two additional difficulties: lack of statistical models of background brain activity, and the choice of the reference electrode. The first is dealt with either by including baseline recordings (in absence of silence; which we did not have for our experimental results) or utilizing a hemispheric baseline, i.e., an approximate equality in power measured at electrodes placed symmetrically with respect to the longitudinal fissure (see Fig. 1b). While the hemispheric baseline used here provides fairly accurate reconstructions, we note that this baseline is only an approximation, and an actual baseline is expected to further improve the accuracy. The second difficulty is related: to retain this approximate hemispheric symmetry in power, it is best to utilize the reference electrode on top of the longitudinal fissure (see Fig. 1a). Using these advances, we propose an iterative algorithm to localize the region of silence in the brain using a relatively small amount of data. In simulations and real data analysis, SilenceMap outperformed existing algorithms in localization accuracy for localizing silences in three participants with surgical resections using only 160 s of EEG signals across 128 electrodes (see “Results” for more details on finding the minimum amount of EEG data for localizing silences using SilenceMap).

a The EEG recording protocol and the locations of scalp electrodes. One of 10 reference electrodes (shown in red) is chosen along the longitudinal fissure for rereferencing against. b Average power of scalp potentials for different choices of reference electrodes. c Symmetric brain model of a patient (UD) with a right occipitotemporal lobectomy. d Steps of SilenceMap in a low-resolution source grid. A measure of the contribution of brain sources in the recorded scalp signals (\(\tilde{\beta }\)) is calculated relative to a hemispheric baseline. In the brain colormap, yellow indicates no contribution. A contiguous region of silence is localized based on a convex spectral clustering (CSpeC) framework in the low-resolution grid. e Steps of SilenceMap in a high-resolution source grid. The source covariance matrix (Cs) is estimated through an iterative method, and the region of silence is localized using the CSpeC framework. f Choosing the best reference electrode to reference against (Cz in this example), which results in minimum scalp power mismatch (ΔPow). The localized region of silence for this patient (UD) has 13 mm COM distance (ΔCOM) from the original region, with more than 38% overlap (JI = 0.384), and it is 32% smaller (Δk = 0.32).

Results

SilenceMap localizes the region of silence in two steps: (1) The first step finds a contiguous region of silence in a low-resolution source grid with the assumption that, at this resolution, the sources are uncorrelated across space. In this low-resolution grid, given that the sources are sufficiently separated, a reasonable approximation is to assume they have independent activity (see “Methods” for more details). We defined a measure for the contribution of brain sources to the recorded scalp signals (β), i.e., the larger the β, the greater the contribution of the brain source to the scalp potentials. However, β is not a perfect measure of the contribution since it is defined based on an identical distribution assumption on the non-silent sources, which does not hold in the real world. Therefore, using β does not reveal the silent sources, i.e., the smallest values of β (yellow regions in Fig. 1d) may not be located at the region of silence. However, looking closely at the values of β in the inferior surface of the brain (Fig. 1d) reveals a large hemispheric color difference at the region of silence (right occipitotemporal lobe). This motivated us to use a hemispheric baseline, i.e., instead of using β, we use \(\tilde{\beta }\), which is the ratio of β values of the mirrored sources, e.g., for source pair of (AL, AR), which are remote from the region of silence, \(\tilde{\beta }\) is close to 1 (red-colored sources), while for (BL, BR), where BR is located in the region of silence (see Fig. 1d), this ratio is close to zero (yellow-colored sources). A contiguous region of silence is localized based on a convex spectral clustering (CSpeC) framework25,26,27 in the low-resolution grid. (2) The second step of SilenceMap adopts the above localized region of silence as an initial guess in the high-resolution grid. 
Then, through iterations, the region of silence is localized based on the estimated source covariance matrix Cs, until the center-of-mass (COM) of the localized region of silence converges (see “Methods” and Fig. 1e for more details). All steps of SilenceMap, along with the intermediate results for patient UD, are summarized in Fig. 1. We have included similar overview figures for patients SN and OT in Supplementary Fig. 1 and Supplementary Fig. 2, respectively.
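The hemispheric baseline of the first step can be illustrated with a minimal Python sketch. The exact definition of \(\tilde{\beta }\) is given in “Methods”; here we assume, for illustration only, that it is the ratio of each source's β to the β of its mirrored source, capped at 1. The function name, the `mirror` index list, and the β values are all hypothetical.

```python
def hemispheric_ratio(beta, mirror):
    """Ratio of each source's contribution to that of its mirrored source.

    beta[i]   : contribution measure of source i (assumed positive)
    mirror[i] : index of the source mirrored across the longitudinal fissure
    The cap at 1.0 is an assumption made for this sketch.
    """
    return [min(beta[i] / beta[mirror[i]], 1.0) for i in range(len(beta))]

# Hypothetical values: sources 0 and 1 form a healthy mirrored pair (A_L, A_R);
# sources 2 and 3 form a pair (B_L, B_R) with B_R inside the region of silence.
beta = [4.0, 4.2, 5.0, 0.5]
mirror = [1, 0, 3, 2]

ratio = hemispheric_ratio(beta, mirror)
print(ratio)  # pair A stays near 1, B_R drops toward 0
```

Sources far from the region of silence keep a ratio near 1, whereas a silent source paired with an active mirror source gets a ratio near 0, matching the color pattern described for Fig. 1d.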

We validated the performance of SilenceMap through rigorous experiments, based on simulated and real datasets. We tested the robustness of SilenceMap with and without baseline (see “Methods” for more details), in different scenarios, e.g., different sizes and locations of region of silence, different EEG reference electrodes, and based on both visual and rest EEG datasets (see Fig. 1a). Finally, we used a real dataset to explore the validity of our hemispheric symmetry assumption.

Localization performance metrics

For all experiments, we used three performance metrics for determining the accuracy of the silence localization task: (i) center-of-mass (COM) distance (ΔCOM), (ii) Jaccard Index (JI), and (iii) size error (Δk).

(i) COM distance is simply defined as the Euclidean distance between the centers-of-mass of the localized and actual regions of silence, i.e.,

\[\Delta \mathrm{COM}={\left\Vert \frac{1}{|{\mathcal{S}}|}\sum _{i\in {\mathcal{S}}}{f}_{i}-\frac{1}{|{{\mathcal{S}}}^{GT}|}\sum _{j\in {{\mathcal{S}}}^{GT}}{f}_{j}\right\Vert }_{2},\]

where fi is the three-dimensional (3D) location of source i in the brain, and \({\mathcal{S}}\) and \({{\mathcal{S}}}^{GT}\) are the sets of source indices of the localized region of silence and its ground truth, respectively. ΔCOM measures how far the localized region of silence is from the ground truth.
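The COM distance described above can be sketched in a few lines of Python. The function names and the toy source coordinates (in mm) are hypothetical and serve only to illustrate the computation.

```python
import math

def center_of_mass(locations, indices):
    """Average 3D location of the sources whose indices are given."""
    pts = [locations[i] for i in indices]
    return tuple(sum(c) / len(pts) for c in zip(*pts))

def com_distance(locations, localized, ground_truth):
    """Euclidean distance between the COMs of two sets of sources."""
    return math.dist(center_of_mass(locations, localized),
                     center_of_mass(locations, ground_truth))

# Toy source grid: four sources on a line, coordinates in mm (hypothetical).
locations = {0: (0.0, 0.0, 0.0), 1: (2.0, 0.0, 0.0),
             2: (4.0, 0.0, 0.0), 3: (6.0, 0.0, 0.0)}

delta_com = com_distance(locations, localized={0, 1}, ground_truth={2, 3})
print(delta_com)  # 4.0
```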

(ii) JI, first defined by Jaccard in ref. 28, is a widely used performance measure for 2D image segmentation tasks29. In the silence localization task, since we are segmenting the region of silence in 3D space, we can calculate the JI based on the nodes/sources in the discretized brain as follows:

\[\mathrm{JI}=\frac{|{\mathcal{S}}\cap {{\mathcal{S}}}^{GT}|}{|{\mathcal{S}}\cup {{\mathcal{S}}}^{GT}|},\]

which measures how well the localized region of silence overlaps with the ground truth region in the brain, and it assumes values between 0 (no overlap) and 1 (perfect overlap). If there is minimal overlap and/or there is a large mismatch between the size of these two regions, JI has a small value.
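As a minimal illustration, the Jaccard Index over sets of source indices can be computed as below. The function name and the example index sets are hypothetical; returning 0 when both sets are empty is a convention chosen for this sketch.

```python
def jaccard_index(localized, ground_truth):
    """Size of the intersection divided by the size of the union."""
    localized, ground_truth = set(localized), set(ground_truth)
    union = localized | ground_truth
    if not union:
        return 0.0  # convention for two empty regions (assumption)
    return len(localized & ground_truth) / len(union)

# Two regions of four sources each that share two sources.
ji = jaccard_index({1, 2, 3, 4}, {3, 4, 5, 6})
print(round(ji, 3))  # 0.333
```

The example also shows the size sensitivity noted above: even if one region fully contains the other, a large size mismatch inflates the union and lowers the JI.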

(iii) Size error measures the error in estimation of the size of the region of silence, and is simply defined as follows:

\[\Delta k=\frac{|\hat{k}-k|}{k},\]

where \(\hat{k}\) is the estimated number of silent sources in the localized region of silence, and k is the number of silent sources in the ground truth region.
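A small Python sketch of the size error follows, assuming the error is the absolute difference between the estimated and ground-truth sizes, normalized by the ground-truth size (consistent with the percentages reported in this section). The function name is hypothetical, and the estimated size of 41 sources is a made-up value chosen only to exercise the formula with k = 60.

```python
def size_error(k_hat, k):
    """Relative size error |k_hat - k| / k, with k the ground-truth size."""
    if k <= 0:
        raise ValueError("ground-truth size k must be positive")
    return abs(k_hat - k) / k

# k = 60 ground-truth sources; k_hat = 41 is a hypothetical estimate.
delta_k = size_error(k_hat=41, k=60)
print(round(delta_k, 2))  # 0.32
```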

Simulations

We simulated the EEG data of regions of neural silence, following the assumptions we made in “Methods: Problem statement”, and quantified the performance of SilenceMap.

Simulation results

We simulated scalp differential recordings for 100 different regions of silence, with the size of k = 50, on a high-resolution source grid with p = 1744 sources, and at varying locations on the cortex. The simulated regions of silence lie in only one hemisphere (see the assumptions in “Methods: Problem statement”), and are located no deeper than 3 cm from the surface of the scalp, which covers the entire thickness of the gray matter30,31,32, while excluding deep sources located in the longitudinal fissure. In the longitudinal fissure, the source dipoles are located deep inside the brain, and mostly oriented tangential to the surface of the scalp, making it difficult for EEG to record their electrical activity13. The non-silent sources are assumed to have an identical distribution and correlation across space, and identical distribution over time. To explore the effect of different assumptions for the time-frequency characteristics of neural sources on the silence localization task, we considered two scenarios in the simulations: (i) a flat power spectral density (PSD) profile for the activities of non-silent sources, and (ii) a “Real PSD” profile, which is extracted from an open-source electrocorticography (ECoG) dataset used in ref. 33 and available through the open-source library in ref. 34. The detailed steps for the simulation are available in “Methods: Simulated Dataset”. For SilenceMap, we tried the 10 different reference electrodes located along the longitudinal fissure, i.e., Fpz, AFz, Fz, FCz, Cz, CPz, Pz, POz, Oz, and Iz, and chose the one with the minimum power mismatch ΔPow defined in Eq. (39). We reported the performance measures, i.e., ΔCOM, JI, and Δk under the average signal-to-noise ratio (SNRavg) level of 9 dB (see “Methods” for definition of SNRavg). For SilenceMap, we also reported the convergence rate (CR), which is the ratio of the number of converged cases over the total number of simulated regions of silence in the simulation experiment.
The results of the simulations are summarized in Table 1, where ΔCOM, JI, and Δk are reported in the format of mean ± standard error (SE). Based on the results, SilenceMap outperformed (modifications on) state-of-the-art source localization algorithms: it has 42 mm smaller average COM distance, 41% more average overlap (JI), and 253% smaller size error, compared to the best performing modified source localization algorithms. In addition, SilenceMap showed very similar performance for the flat and Real PSDs, as revealed by examining the effect of changing the (temporal) PSD on the spatial correlation for the simulated brain sources (detailed discussion of this is in “Methods: Simulated dataset”). Our simulations assume identical distribution of brain sources. Noting that SilenceMap with baseline performs substantially better compared to SilenceMap without baseline (see the results in “Results: Real Data”), it appears to us that this assumption is far from accurate. The list of all parameters and their values used in the implementation of SilenceMap is available in Supplementary Note A (see Supplementary Table I).
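The summary statistics used in Table 1 (mean ± SE and the convergence rate) can be sketched as follows. This is a minimal illustration that assumes the standard error is the sample standard deviation divided by the square root of the number of runs; the function names and the input values are hypothetical and are not taken from the simulations.

```python
import math
import statistics

def mean_and_se(values):
    """Mean and standard error (sample std / sqrt(n)) of a list of values."""
    m = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(len(values))
    return m, se

def convergence_rate(converged_flags):
    """Fraction of simulated regions for which the algorithm converged."""
    return sum(converged_flags) / len(converged_flags)

# Hypothetical COM distances (mm) from three runs, and four convergence flags.
m, se = mean_and_se([10.0, 12.0, 14.0])
cr = convergence_rate([True, True, False, True])

print(f"{m:.1f} ± {se:.2f} mm")  # 12.0 ± 1.15 mm
print(cr)                        # 0.75
```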

Comparison of SilenceMap with source localization algorithms

We also compared the performance of SilenceMap under different simulated scenarios, as well as real experiments, with state-of-the-art source localization algorithms, modified for the silence localization task (details of modified MNE, MUSIC, and sLORETA algorithms for silence localization are explained in “Methods”). For all modified source localization methods, we chose the Cz scalp electrode as the reference electrode but, for fair comparison with SilenceMap, also considered the effect of the reference electrode in the modified MNE, MUSIC, and sLORETA (see “Methods”). Based on the simulation results in Table 1, among the modified source localization algorithms, sLORETA shows the minimum average COM distance of 54 mm, and MUSIC shows the maximum average overlap of 9% (JI = 0.09), and the minimum average size error of 284% (Δk = 2.84). This performance is still poor for the silence localization task, while SilenceMap shows good performance based on the simulation results in Table 1 (ΔCOM = 12 mm, JI = 0.50, Δk = 0.31). As evident, source localization algorithms, even after modification, perform poorly in localizing the neural regions of silence.

Real data

We also compared the performance of SilenceMap with the modified source localization algorithms, based on a real dataset of patients who have undergone lobectomy surgery, and have a clearly defined resected region in their brain.

Dataset

We recorded EEG signals using a BioSemi ActiveTwo system (BioSemi, Amsterdam), with a sampling frequency of 512 Hz, using a 128-electrode cap with electrodes located based on the standard 10-5 system35. In addition, we used four electrodes around the eyes, specifically, a pair on the top and bottom of the right eye to detect the vertical eye movements and blinks, and a pair at the outer canthi of each eye to monitor horizontal eye movements. One electrode was placed on the left collar bone to monitor heart beats, and two electrodes were placed on the mastoids. All electrodes were differentially recorded relative to the standard common-mode-sense and driven-right-leg electrodes. We monitored the electrode-gel-scalp contact quality through the data acquisition period using the Electrode Offsets option in the ActiView data acquisition software, which calculates the direct current (DC) potentials generated at the junction of the skin and electrolyte solution (gel) under the electrodes. This DC potential results in a voltage at the amplifier inputs (i.e., DC offset)36. Electrodes with larger than 20 mV offset were marked for removal and interpolation in the preprocessing step, and more conductive gel was added to the electrodes with high offset. This is important as the change in electrode impedance can alter the distribution of artifacts (e.g., eye blinks and eye movements) across the scalp and make it harder to detect and remove them using the preprocessing methods37. During data acquisition, the participant viewed a screen, located roughly 1 m away. A grating pattern of black and white bars was displayed at the center of the display along with a fixation cross for 2 s, followed by a rest state of 1–1.5 s, where a fixation cross was displayed on a gray-colored background (see Fig. 1a). We repeated this sequence 80 times during the recording session. 
We used the Rest and Visual sections of the recorded signal separately for the localization and compared the results from these analyses in this section. The steps for data analyses and preprocessing are available in “Methods: Data analysis”.

Participants

Three male pediatric patients were recruited for this experiment. Two patients (SN and OT) had resections in the left hemisphere and one (UD) had a resection in the right hemisphere. In two of these patients (OT and UD), lobectomy surgery was performed to control pharmacoresistant epilepsy, and, in the third patient (SN), surgery was performed for an emergent evacuation of a cerebral hematoma at day one of life. More information about these patients is included in Table 2.

OT and the parents of SN and UD provided consent for participation. SN and UD provided assent. All procedures were approved by the Carnegie Mellon University Institutional Review Board. The MRI scans of these participants are shown in Fig. 2, where the resected sections can be seen as large asymmetric dark regions38,39,40. The ground truth regions of silence are extracted based on these MRI scans (see “Methods” for more details). The first row in Fig. 3 shows the extracted ground truth regions of silence in the symmetric brain models of the three participants. The intact hemisphere is mirrored across the longitudinal fissure to construct these brain models (see Fig. 1c and “Methods” for more details). These patients have different sizes of regions of silence: UD has a region of silence with k = 60 sources/nodes out of total p = 1740 sources in the brain, SN has k = 120 out of p = 1758 sources, and OT has k = 55 out of p = 1744 total sources. Despite the relatively large sizes of the resected regions in these three pediatric patients, there is rather minimal, if any, observable effect of the resection on performance, indicative of substantial plasticity in the children’s brain41. This suggests that we cannot characterize the site or size of the resected areas with any precision using standard neuropsychological testing (see Supplementary Table II for neuropsychological test results for these patients, and Supplementary Note B for detailed discussion).

The first row shows the extracted ground truth regions of silence (red regions) overlaid on the resected cortical region of three patients based on their symmetric brain models extracted from the structural MRIs (see the MRI scans in Fig. 2); the second, third, and fourth rows show the performance in localization of the silent region using modified source localization algorithms (MNE, MUSIC, and sLORETA), through both visual illustration (red regions) and using performance metrics of center-of-mass (COM) distance (ΔCOM), Jaccard Index (JI), and size error (Δk). The fifth row shows the performance of SilenceMap without baseline, and the last two rows show the localization performance of SilenceMap with baseline, based on the Rest and Visual recordings respectively. p is the total number of sources in each brain model, and k is the size of ground truth region of silence.

Results of real dataset

We applied SilenceMap, along with the modified source localization algorithms, i.e., MNE, MUSIC, and sLORETA, to the preprocessed EEG recordings of the three participants, and the performance of silence localization is calculated by comparing against the ground truth regions extracted from the post-surgery MRI scans of these patients (see Fig. 2). The visual illustration of localized regions of silence (shown in red on the gray-colored semi-transparent brains), along with their ground truth regions and their corresponding performance measures, are all shown in Fig. 3. Based on the Rest dataset, SilenceMap with hemispheric baseline outperforms the modified source localization algorithms: it reduces the COM distance by 12 mm, 46 mm, and 42 mm for UD, SN, and OT, respectively, compared to the best performance among the source localization algorithms. It also improves the overlap (JI) by 22%, 49%, and 37%, and the size estimation by 122%, 42%, and 59% for UD, SN, and OT, respectively. SilenceMap with baseline performs well with values of ΔCOM = 2 mm, JI = 0.570, and Δk = 0.25 based on the Rest set, and ΔCOM = 3 mm, JI = 0.654, and Δk = 0.09 based on the Visual set. Comparing the results of Visual and Rest datasets for SilenceMap with baseline shows that, as expected, the localized regions of silence remain largely the same. This suggests that for each participant, the algorithm can localize the region of silence, regardless of the type of the task performed (Visual or Rest) by the participants during the EEG recording. In SilenceMap with baseline, based on the minimum value of the power mismatch (ΔPow defined in Eq. (39) in “Methods”), the best reference electrodes for UD, SN, and OT were Cz, Cz, and CPz, respectively, for the Rest set, and Cz, Pz, and CPz for the Visual set.
Based on the results of the Visual set, participant OT shows the poorest localization performance, which might be due to the participant’s repetitive jaw clenching during the recording, even after appropriate preprocessing of the data. Jaw clenching is recognized as one of the most severe artifacts adversely impacting the signals of most EEG electrodes42.
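The reference-electrode selection mentioned above amounts to picking, among the ten midline candidates, the one with the smallest power mismatch. The sketch below shows only this selection step; it does not compute ΔPow (defined in Eq. (39) in “Methods”), and the mismatch values are hypothetical placeholders.

```python
def best_reference(power_mismatch):
    """Return the electrode label with the minimum power mismatch."""
    return min(power_mismatch, key=power_mismatch.get)

# Hypothetical power-mismatch values for the ten midline candidates.
power_mismatch = {
    "Fpz": 0.91, "AFz": 0.84, "Fz": 0.62, "FCz": 0.47, "Cz": 0.31,
    "CPz": 0.38, "Pz": 0.55, "POz": 0.73, "Oz": 0.88, "Iz": 0.95,
}

print(best_reference(power_mismatch))  # Cz
```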

Unlike the simulation results, without baseline, SilenceMap failed to localize the region of silence on the real dataset. One explanation for this is the assumption of the identical distribution of sources in designing the algorithm, which does not hold in the real data. Clearly, using the algorithm with hemispheric baseline is advantageous for better localization.

Validity of hemispheric symmetry assumption in SilenceMap with baseline

The hemispheric baseline approach used in SilenceMap is based on an approximate hemispheric symmetry assumption of the brain source activities in the healthy parts of the brain. To further explore the validity of this assumption, we quantified this hemispheric symmetry based on the scalp average power of a neurologically healthy control participant (DH, male, 25 years) whose EEG data were collected using the same protocol used for the patients (see Fig. 1a for the EEG recording protocol). DH provided informed consent. Excluding the ten electrodes on the longitudinal fissure (red electrodes in Fig. 1a), we calculated the mean absolute difference (MAD) of average power of pairs of scalp electrodes, which are symmetric with respect to the longitudinal fissure, e.g., (C1,C2), (T7,T8), and so on, as follows:

\[\mathrm{MAD}=\frac{2}{n-10}\sum _{i=1}^{(n-10)/2}\left|\widehat{\mathrm{Var}}({y}_{i}^{R})-\widehat{\mathrm{Var}}({y}_{i}^{L})\right|,\]

where \(\widehat{\mathrm{Var}}({y}_{i}^{R})\) is the estimated variance of the preprocessed and denoised EEG signal \({y}_{i}^{R}\), referenced to the Cz electrode, at electrode i in the right hemisphere (see “Methods” for noise removal steps), \({y}_{i}^{L}\) is the signal of the corresponding electrode in the left hemisphere, and n = 128 is the total number of electrodes. Based on Fig. 4, MAD for the control participant is calculated based on the Rest set as 4.1 (μV)2, while for the UD, SN, and OT patients with regions of silence MAD is 23.3, 14.2, and 16.5 (μV)2, respectively. The control participant had a substantially smaller hemispheric difference of scalp power compared to the patients, confirming that using the hemispheric baseline is helpful in localization of regions of silence in either hemisphere. There are two main reasons that the scalp power of the healthy control is not perfectly symmetric, i.e., that its MAD is not zero: (i) brain sources have non-identical brain activities43,44, and this asymmetry is affected by factors such as age45, and (ii) the structure of the brain and the head (scalp, skull, cerebrospinal fluid (CSF), and brain) is not perfectly symmetric (see “Discussion” for more discussion), which results in a non-symmetric reflection/transformation of brain activities to the scalp potentials. The latter issue is addressed in SilenceMap by normalization of the measure of source contribution (β in Eq. (35), in “Methods”) based on the head structure asymmetry. To improve the performance of SilenceMap, one might take into account the non-identical distribution of sources in the brain (and perhaps use a more realistic model for the source covariance matrix Cs) and normalize the source contribution measure accordingly. Another approach to address this might be to use an asymmetric baseline obtained during the recording of the brain without any region of silence.
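The MAD described above can be sketched in Python as the average, over symmetric electrode pairs, of the absolute difference in signal variance. This is a minimal illustration: the function name and the toy signals are hypothetical, and the population variance from the standard library stands in for whatever variance estimator is used in “Methods”.

```python
import statistics

def mean_abs_power_difference(signals, pairs):
    """Mean absolute difference of average power over symmetric pairs.

    signals : dict mapping electrode label -> list of samples (e.g., in μV)
    pairs   : list of (left_label, right_label) symmetric electrode pairs
    """
    diffs = [abs(statistics.pvariance(signals[right])
                 - statistics.pvariance(signals[left]))
             for left, right in pairs]
    return sum(diffs) / len(diffs)

# Toy zero-mean signals (hypothetical): C1/C2 differ in power, T7/T8 match.
signals = {
    "C1": [1.0, -1.0, 1.0, -1.0],   # variance 1
    "C2": [2.0, -2.0, 2.0, -2.0],   # variance 4
    "T7": [3.0, -3.0, 3.0, -3.0],   # variance 9
    "T8": [3.0, -3.0, 3.0, -3.0],   # variance 9
}
pairs = [("C1", "C2"), ("T7", "T8")]

mad = mean_abs_power_difference(signals, pairs)
print(mad)  # 1.5
```

Midline electrodes have no symmetric partner, so they are simply left out of `pairs`, mirroring the exclusion of the ten electrodes on the longitudinal fissure.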

Mean absolute difference of scalp average power (MAD) is reported for a healthy control participant (DH), in comparison to the three patients who have resected brain regions (UD, SN, and OT). The control participant shows substantially smaller MAD compared to the three patients with cortical regions of silence.

SilenceMap can localize the regions of silence with relatively little EEG data

As we showed earlier in this section, SilenceMap successfully localized the regions of silence based on only 160 s of EEG data. Although this is already quite short, how does SilenceMap perform if we reduce this timespan further? To answer this, we searched over timespans of 20, 40, 80, 120, and 160 s, quantifying the performance for each timespan. For UD, 80 s of data showed almost the same performance as 160 s (ΔCOM = 17 mm, JI = 0.382, Δk = 0.30), while 40 s showed a substantial reduction. For SN, the minimum possible amount of data, without compromising the localization performance, is only 40 s (ΔCOM = 9 mm, JI = 0.440, Δk = 0.20), while for OT, this is 160 s, potentially due to the noisy EEG recording of OT. Nevertheless, the 160 s upper limit is still a relatively short signal acquisition time.

In clinical applications, rapid recording and localization of neural silences might be required. The time-consuming steps for EEG installation—namely, the placement of electrodes and applying conductive gel (~30 min for the high-density EEG we used), electrode impedance monitoring and corrections, and the multistep and offline data preprocessing—may make it difficult to use the system in practice. There has been progress in recent years in developing portable and quick-to-administer EEG systems (e.g., dry, active, low-impedance electrodes, conductive sponge and hydrogel interfaces46,47,48), along with fast and real-time preprocessing and artifact removal techniques (e.g., in ref. 37). These developments along with SilenceMap (<3 min of EEG recording), and access to sufficient computational power (see “Methods” for computation-complexity analysis of SilenceMap), can enable rapid silence localization.

Introduced error in silence localization by using symmetric brain models

Morphological studies of the human brain have shown cortical asymmetry, and how it is affected by factors such as age, sex, and neurological disease49,50. Here, we used symmetric brain models of the patients with resection, since the pre-surgery MRI scans of these patients were not available (which may not even have been symmetrical in the first instance). Figure 5 shows the symmetric brain models along with the original models of the three patients. To quantify the introduced error in silence localization by using symmetric models, instead of the original model, we calculated the average distance of sources/nodes of the intact part of the resected hemisphere to the corresponding sources/nodes of the structurally preserved hemisphere, mirrored across the longitudinal fissure (see Fig. 5). Following the 3D shape matching approach in ref. 51, for a specific source/node in the hemisphere with the region of silence, the corresponding source in the preserved hemisphere is defined as the node with the minimum distance to that specific source. Based on our calculations, the defined average distance between the symmetric brain model and the original brain model is 2.41 ± 0.055 mm, 2.50 ± 0.043 mm, and 2.03 ± 0.044 mm, for UD, SN, and OT, respectively. We excluded the resected parts of the brain in calculating the average distance between the symmetric brain model and the original brain model. To ensure that this average distance is not affected by excluding the resected regions, we also calculated the hemispheric distance in three healthy controls (intact brains) using an open-source MRI database (OASIS-1; ref. 52). The average distance between the symmetric and the original brain model was 2.33 ± 0.012 mm, 2.78 ± 0.016 mm, and 2.35 ± 0.012 mm, for OAS1_0004_MR1 (male, 28 years), OAS1_0005_MR1 (male, 18 years), and OAS1_0034_MR1 (male, 51 years), respectively (see Fig. 5).
In fMRI studies, an acceptable motion and voxel displacement, especially in scans of children and adolescents, is typically up to 3 mm53. Since the average distance of the symmetric and the original brain models is <3 mm, using the symmetric brain model seems to be a reasonable choice for silence localization.
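
The distance computation described in this subsection can be sketched as follows. This is only a minimal illustration, not the code used in the study: it assumes that the longitudinal fissure coincides with the plane x = 0 and that nodes are given as (x, y, z) tuples in mm. The function name `mean_mirror_distance` and the toy coordinates are hypothetical.

```python
import math

def mean_mirror_distance(hemi_a, hemi_b):
    """Average distance from each node of hemi_a to its nearest node in the
    mirror image of hemi_b (mirrored across the assumed midline plane x = 0)."""
    mirrored_b = [(-x, y, z) for (x, y, z) in hemi_b]
    nearest = [min(math.dist(a, b) for b in mirrored_b) for a in hemi_a]
    return sum(nearest) / len(nearest)

# Toy node coordinates in mm (hypothetical, two nodes per hemisphere).
left = [(-30.0, 10.0, 5.0), (-42.0, -8.0, 12.0)]
right = [(31.0, 10.0, 5.0), (40.0, -8.0, 14.0)]
print(mean_mirror_distance(left, right))
```

A real cortical mesh has thousands of nodes, so a spatial index (e.g., a k-d tree) would likely replace the brute-force nearest-node search used here.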

The average brain distance is shown in patients with lobectomy, i.e., UD with right occipitotemporal lobectomy, SN with left temporal lobectomy, and OT with left frontotemporal lobectomy, as well as in three healthy controls, namely, OAS1_0004_MR1, OAS1_0005_MR1, and OAS1_0034_MR1. The average distance for all patients and healthy controls is <3 mm, which makes the symmetric brain model a reasonable choice for the silence localization task, as it does not introduce substantial error.

Effect of error in the structural segmentation of MRI

Segmentation of structural MRI scans for patients with brain injuries is complicated54,55,56, and standard structural segmentation techniques (e.g., FreeSurfer) may introduce error in silence localization. Standard segmentation methods use anatomical priors extracted from manually or semi-automatically annotated atlases of the healthy brain56. However, the anatomy of the damaged brain, especially following severe injury or large resection, diverges substantially from the anatomy of a healthy brain54. To address this, we used the open-source software AFNI57,58, which is designed to improve the segmentation of the scans in patients with brain lesions and/or tumors. Additionally, we used specifically designed scripts, provided to us by Dr. Daniel Glen (Computer Engineer, NIH), to segment the structural MRI scans of the patients in our dataset57,58. We compared the performance of SilenceMap for the patients in our study with the ground truth regions of silence extracted using AFNI vs. the extracted ground truth using FreeSurfer (Supplementary Fig. 3 contains the results using AFNI). Based on these results, there is a small reduction in the silence localization performance using AFNI in comparison with results from FreeSurfer (Fig. 3 in “Results” and Supplementary Fig. 3 in Supplementary Note C). This suggests that standard techniques (used in FreeSurfer) perform reasonably well in MRI segmentation for our participants, and do not contribute substantially to the silence localization error.

Effects of brain-to-skull conductivity ratio

We also explored the effect of different assumptions for the brain-to-skull conductivity ratio on the performance of SilenceMap. Our results (Supplementary Fig. 4 includes results and further discussion is available in Supplementary Note D) confirm the robustness of our algorithm to imprecise knowledge of brain-to-skull conductivity ratio.

Discussion

In this paper, we introduced SilenceMap, an algorithm that localizes contiguous regions of silence in the brain based on noninvasive scalp EEG signals. The key technical ideas introduced here include ensuring that background brain activity is separated from silences, using a hemispheric baseline, careful referencing, and utilization of a convex optimization framework for clustering. We compared the performance of SilenceMap in simulations as well as real data comprising structural MRI scans of three patients with cortical resection. SilenceMap substantially outperformed appropriately modified state-of-the-art source localization algorithms, such as MNE, MUSIC, and sLORETA, and reduced the distance error (ΔCOM) by 46 mm, requiring <3 min of EEG signal acquisition time. We also explored potential errors introduced into the algorithmic calculations, e.g., due to our hemispheric baseline assumption, imprecise segmentation of the structural MRI data, or through inaccurate assessment of brain-to-skull conductivity. Our further analyses reveal the robustness of SilenceMap to these challenges. Altogether, the findings indicate that SilenceMap has considerable potential for more general adoption, and that this EEG-based silence localization method can be used in cases where common imaging modalities such as MRI and computed tomography are not applicable and/or unavailable.

Limitations and future directions

As promising as it appears, SilenceMap has its own limitations, which can serve as the focus of future investigations: (i) We used the electrode locations in SilenceMap in multiple steps of the algorithm, including in the estimation of the forward matrix, which is a function of the electrode locations. In placing the EEG cap for each patient, we manually adjust the cap’s location so that the electrodes are placed in the standard 10-5 arrangement. This could be improved by using new methods for guided EEG cap placement59. (ii) As mentioned in “Methods: Problem statement”, SilenceMap assumes that there is one region of silence in the brain, as is the case for the individuals in our real and simulated dataset. One can modify the algorithm to localize multiple regions of silence in the brain, either located in one or both hemispheres. (iii) SilenceMap showed substantial improvement in estimation of size of region of silence, compared to the state-of-the-art modified source localization algorithms. This size estimation in SilenceMap is based on the minimization of scalp average power error, as defined in Eq. (27) in “Methods”, which shows an average error of about 30% based on the real dataset in our paper. For applications where more precise estimation of the size of the regions of silence is needed, SilenceMap needs to be improved. (iv) We plan to examine silence localization in individuals with etiologies other than resection. We believe that further improvements and modifications might be needed to use SilenceMap for smaller and deeper regions of silence. (v) We do not consider the effect of the boundary between the intact and the resected brain tissue. In our analysis, the boundary or penumbra is considered to be active and healthy brain tissue. This is a reasonable assumption in surgical removal, and perhaps in other cases where there is a sharp boundary between the affected and the healthy tissue. 
However, this is not true in diffuse lesions and/or tumors in the brain, and investigation of the boundary effect in these cases requires further consideration. (vi) SilenceMap localizes stationary regions of silence. Extending the algorithm to localize evolving regions of silence, e.g., for CSD propagation, tumor or lesion expansion, will be important. (vii) Finally, there may be changes in the neural functional connectivity because of the region of silence. Estimating these changes is important, for example, for predicting diaschisis (remote effects of a resection) or other wide-scale changes in signal propagation between regions of cortex.

Methods

Notation

In this paper, we use non-bold letters and symbols (e.g., \(a\), \(\gamma\), and \(\theta\)) to denote scalars; lowercase bold letters and symbols (e.g., \({\bf{a}}\), \({\boldsymbol{\gamma }}\), and \({\boldsymbol{\theta }}\)) to denote vectors; uppercase bold letters and symbols (e.g., \({\bf{A}}\), \({\bf{E}}\), and \({\boldsymbol{\Delta }}\)) to denote matrices, and script fonts (e.g., \({\mathcal{S}}\)) to denote sets.

Problem statement

Following the standard approach in source localization problems, we use the linear approximation of the well-known Poisson’s equation to write a linear equation, which relates the neural electrical activities in the brain to the resulting scalp potentials60,61. This linear equation is called the forward model62. In this model, each group of neurons is modeled by a current source or dipole, which is assumed to be oriented normal to the cortical surface15.

The linear forward model can be written as below:

\[{{\bf{X}}}_{n\times T}={{\bf{A}}}_{n\times p}\,{{\bf{S}}}_{p\times T}+{{\bf{E}}}_{n\times T}\tag{5}\]

where A is the forward matrix, X is the matrix of measurements where each row represents the potentials recorded at one electrode, with reference at infinity, across time. S is the matrix of source signals, E is the measurement noise, T is the number of time points, p is the number of sources, and n is the number of scalp sensors.

In practice, we do not have the matrix X, since the reference at infinity cannot be recorded. Only a differential recording of scalp potentials is possible. If we define a (n − 1) × n matrix M with the last column to be all −1 and the first n − 1 columns compose an identity matrix, the differential scalp signals, with the last electrode’s signal as the reference, can be written as follows:

\[{{\bf{Y}}}_{(n-1)\times T}={{\bf{M}}}_{(n-1)\times n}\,{{\bf{X}}}_{n\times T}={\bf{M}}({\bf{A}}{\bf{S}}+{\bf{E}})\tag{6}\]

where Y is the matrix of differential signals of scalp, M is a matrix, which transforms the scalp signals with reference at infinity in the matrix X to the differential signals in Y. Equation (6) can be rewritten as follows:

\[{\bf{Y}}=\widetilde{{\bf{A}}}{\bf{S}}+\widetilde{{\bf{E}}}\tag{7}\]

where \(\widetilde{{\mathbf{A}}}={\mathbf{M}}{\mathbf{A}}\), and \(\widetilde{{\mathbf{E}}}={{\mathbf{M}}}{{\mathbf{E}}}\).
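
The referencing step can be illustrated with a short sketch. It builds the (n − 1) × n matrix M described above (identity in the first n − 1 columns, −1 in the last column) and applies it to toy potentials. The helper names `reference_matrix` and `matmul` and the toy values in `X` are hypothetical; a practical implementation would use a numerical library instead of nested lists.

```python
def reference_matrix(n):
    """(n-1) x n matrix M = [I, -1]: subtracts the last electrode from the others."""
    return [[(1.0 if j == i else 0.0) if j < n - 1 else -1.0 for j in range(n)]
            for i in range(n - 1)]

def matmul(P, Q):
    """Plain-Python matrix product, adequate for a toy example."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q))) for j in range(len(Q[0]))]
            for i in range(len(P))]

# Toy scalp potentials X (n = 3 electrodes, T = 2 time points), reference at infinity.
X = [[1.0, 2.0],
     [4.0, 6.0],
     [0.5, 1.0]]
M = reference_matrix(3)
Y = matmul(M, X)  # differential signals with the last electrode as reference
print(Y)
```

The same `matmul(M, A)` call would give the referenced forward matrix from a forward matrix `A` with n rows.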

Objective

Given M, Y, and A, estimate the region of silence in S.

For this objective, we consider two different scenarios: (1) there are no baseline recordings for the region of silence, i.e., no scalp EEG recording is available where there is no region of silence, (2) with baseline recording, i.e., we consider the recording of the hemisphere of the brain, left or right, which does not have any region of silence, as the baseline for the silence localization task. Note that the location of the baseline hemisphere (left or right) is not assumed to be known a priori. Rather, locating the region of silence is the goal of this approach.

We make the following assumptions: (i) A and M are known, and Y has been recorded. (ii) \(\widetilde{{\mathbf{E}}}\) is additive white noise, whose elements are assumed to be independent across space. Thus, at each time point, the covariance matrix is Cz given by:

\[{{\bf{C}}}_{z}={\mathrm{diag}}\left({\sigma }_{{z}_{1}}^{2},\cdots ,{\sigma }_{{z}_{n-1}}^{2}\right)\tag{8}\]

where \({\sigma }_{{z}_{i}}^{2}\) is the noise variance at electrode i, and it is assumed to be known (to see how this might be estimated see Supplementary Note E and Supplementary Fig. 5). (iii) k rows of S correspond to the region of silence, which are rows of all zeros. The correlation of source activities decreases as the spatial distance between the sources increases. We assume a spatial exponential decay profile for the source covariance matrix Cs, with identical variances (\({\sigma }_{s}^{2}\)) for all non-silent sources, whose signals model the background brain activities:

where fi is the 3D location of source i in the brain, γ is the exponential decay coefficient, and \({\mathcal{S}}\) is the set of indices of silent sources (\({\mathcal{S}}:= \{i| {s}_{it}=0\,\,\,{\text{for}}\,\ \,{\text{all}}\,\ \,t\in \{1,2,\cdots T\}\}\)). We assume that the elements of S have zero mean, and follow a wide-sense stationary (WSS) process. (iv) M is a (n − 1) × n matrix where the last column is \(-{\mathbb{1}}_{n-1 \times {\!}1}\) and the first n − 1 columns form an identity matrix (I(n−1)×(n−1)). (v) We assume p − k ≫ k, where p − k is the number of active, i.e., non-silent sources, and k is the number of silent sources. (vi) Silent sources are contiguous. We define contiguity based on a z-nearest neighbor graph, where the nodes are the brain sources (i.e., vertices in the discretized brain model). In this z-nearest neighbor graph, two nodes are connected with an edge, if either or both of these nodes is among the z-nearest neighbors of the other node, where z is a known parameter (see “Methods” to learn what values of z can be used). A contiguous region is defined as any connected subgraph of the defined nearest neighbor graph, i.e., between each two nodes in the contiguous region, there is at least one connecting path. (vii) For simplicity, we assume that silence lies in only one hemisphere (as is the case for the three individuals examined in the Results). However, the location of this hemisphere is not assumed to be known (see “Methods: SilenceMap with baseline recordings” for the details of SilenceMap with baseline).
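
The contiguity definition in assumption (vi) can be made concrete with a small sketch. It builds the undirected z-nearest-neighbor graph (two nodes are linked if either is among the z nearest neighbors of the other) and checks whether a candidate region is a connected subgraph. The function names `znn_graph` and `is_contiguous` and the toy point set are hypothetical, and ties in distance are broken by node index, which is an arbitrary choice made here.

```python
import math
from collections import deque

def znn_graph(points, z):
    """Undirected z-nearest-neighbor graph: i and j are linked if either one
    is among the z nearest neighbors of the other."""
    n = len(points)
    adj = {i: set() for i in range(n)}
    for i in range(n):
        order = sorted((j for j in range(n) if j != i),
                       key=lambda j: math.dist(points[i], points[j]))
        for j in order[:z]:
            adj[i].add(j)
            adj[j].add(i)
    return adj

def is_contiguous(adj, region):
    """True if `region` induces a connected subgraph (breadth-first search)."""
    region = set(region)
    if not region:
        return False
    start = next(iter(region))
    seen, queue = {start}, deque([start])
    while queue:
        u = queue.popleft()
        for v in adj[u] & region:
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return seen == region

# Five toy sources on a line; with z = 1 the graph is the chain 0-1-2-3-4.
pts = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0), (4, 0, 0)]
graph = znn_graph(pts, z=1)
print(is_contiguous(graph, {1, 2, 3}), is_contiguous(graph, {0, 3}))
```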

With baseline recordings

In the absence of baseline recordings, estimating the region of silence proves difficult. In order to exploit prior knowledge about neural activity, we use the approximate symmetry of power of neural activity in the two hemispheres of a healthy brain (see the “Results” for more details on the hemispheric symmetry of scalp potentials, along with examples from the real dataset). (viii) As an additional simplification, we assume that even when there is a region of silence, if the electrode is located far away from the region of silence, then the symmetry still holds. For example, if the silence is in the occipital region, then the power of the signal at the electrodes in the frontal region (after subtracting noise power) is assumed to be symmetric in the two hemispheres (mirror imaged along the longitudinal fissure). This is only an approximation because (a) the brain activity is not completely symmetric, and (b) a silent source affects the signal everywhere, even far from the silent source (see Fig. 4 in the “Results”). Nevertheless, as we will see, this assumption enables more accurate inferences about the location of the silence region in real data using SilenceMap with baseline, in comparison to SilenceMap without baseline. The simplification assumptions in this section are summarized in Table 3, where we discuss the effect of each assumption, along with possible ways to relax them.

We first provide the details of this two-step algorithm under the condition where we do not have any baseline, and then with a hemispheric baseline.

SilenceMap without baseline recordings

If we do not have any baseline recording, we design the two-step silence localization algorithm as follows:

Low-resolution grid and uncorrelated sources

For the iterative method in the second step, we need an initial estimate of the region of silence to select the electrodes whose powers are affected the least by the region of silence. We coarsely discretize the cortex to create a very low-resolution source grid with sources that are located far enough from each other, so that the elements of S can be assumed to be uncorrelated across space:

\[{[{{\bf{C}}}_{s}]}_{ij}=\left\{\begin{array}{ll}{\sigma }_{s}^{2},&i=j,\ i\notin {\mathcal{S}}\\ 0,&{\mathrm{otherwise}}\end{array}\right.\tag{10}\]

Under this assumption of uncorrelatedness and identical distribution of brain sources in this low-resolution grid, we find a contiguous region of silence through the following steps:

(i) Cross-correlation: Eq. (7) can be written in the form of linear combination of columns of matrix \(\widetilde{{\bf{A}}}\) as follows:

\[{{\bf{y}}}_{t}=\mathop{\sum }\nolimits_{i = 1}^{p}{s}_{it}{\widetilde{{\bf{a}}}}_{i}+{\widetilde{{\boldsymbol{\epsilon }}}}_{t},\quad t=1,\cdots ,T\tag{11}\]

where sit is the ith element of the tth column in S, \({\bf{Y}}=[{{\bf{y}}}_{1},\cdots \ ,{{\bf{y}}}_{T}]\in {{\mathbb{R}}}^{(n-1)\times {\!}T}\), \({\bf{S}}=[{{\bf{s}}}_{1},\cdots \ ,{{\bf{s}}}_{T}]\in {{\mathbb{R}}}^{p\times {\!}T}\), \(\widetilde{{\bf{A}}}=[{\widetilde{{\bf{a}}}}_{1},\cdots \ ,{\widetilde{{\bf{a}}}}_{p}]\in {{\mathbb{R}}}^{(n-1)\times p}\), and \(\widetilde{{\mathbf{E}}}=[{\widetilde{{\boldsymbol{\epsilon }}}}_{1},\cdots \ ,{\widetilde{{\boldsymbol{\epsilon }}}}_{T}]\in {{\mathbb{R}}}^{(n-1)\times {\!}T}\).

Based on Eq. (11), each column vector of differential signals, i.e., yt, is a weighted linear combination of columns of matrix \(\widetilde{{\bf{A}}}\), with weights equal to the corresponding source values. However, in the presence of silences, the columns of \(\widetilde{{\bf{A}}}\) corresponding to the silent sources do not contribute to this linear combination. Therefore, we calculate the cross-correlation coefficient μqt, which is a measure of the contribution of the qth brain source to the measurement vector yt (across all electrodes) at the tth time-instant, defined as follows:

\[{\mu }_{qt}={\widetilde{{\bf{a}}}}_{q}^{T}{{\bf{y}}}_{t},\quad q=1,\cdots ,p\tag{12}\]

This measure is imperfect because the columns of the \(\widetilde{{\bf{A}}}\) matrix are not orthogonal. The goal here is to attempt to quantify relative contributions of all sources to the recorded signals, and use that to arrive at a decision on which sources are silent because their contribution is zero.

(ii) Estimation of variance of μqt: In this step, we estimate the variances of the correlation coefficients calculated in the step (i). Based on Eq. (12) we have:

\[\begin{array}{rcl}{\mathrm{Var}}({\mu }_{qt})&=&{\mathrm{Var}}\left({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\boldsymbol{\epsilon }}}}_{t}+\mathop{\sum }\nolimits_{i = 1}^{p}{\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i}{s}_{it}\right)\\ &\overset{(a)}{=}&{\mathrm{Var}}\left({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\boldsymbol{\epsilon }}}}_{t}\right)+{\mathrm{Var}}\left(\mathop{\sum }\nolimits_{i = 1}^{p}{\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i}{s}_{it}\right)\\ &\overset{(b)}{=}&{\widetilde{{\bf{a}}}}_{q}^{T}{{\bf{C}}}_{z}{\widetilde{{\bf{a}}}}_{q}+\mathop{\sum }\nolimits_{i\notin {\mathcal{S}}}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}{\mathrm{Var}}({s}_{it})\\ &\overset{(c)}{=}&{\widetilde{{\bf{a}}}}_{q}^{T}{{\bf{C}}}_{z}{\widetilde{{\bf{a}}}}_{q}+\mathop{\sum }\nolimits_{i\notin {\mathcal{S}}}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}{\sigma }_{s}^{2}\end{array}\tag{13}\]

where \({\mathcal{S}}\) is the set of indices of silent sources. In Eq. (13), the equality (a) holds because of independence of noise and sources, and the assumption that they have zero mean, (b) holds because the elements of S, i.e., sit’s, are assumed to be uncorrelated and have zero mean in the low-resolution grid, and (c) holds because sit’s are assumed to be identically distributed. It is important to note that \({\sigma }_{s}^{2}\) in Eq. (13) is a function of source grid discretization and it does not have the same value in the low-resolution and high-resolution grids. We estimate the variance of μqt using its power spectral density, as is explained in detail in the Supplementary Note F.

Based on Eq. (13), the variance of μqt, excluding the noise variance, can be written as follows:

\[\widetilde{{\mathrm{Var}}}({\mu }_{qt})={\mathrm{Var}}({\mu }_{qt})-{\widetilde{{\bf{a}}}}_{q}^{T}{{\bf{C}}}_{z}{\widetilde{{\bf{a}}}}_{q}=\mathop{\sum }\nolimits_{i\notin {\mathcal{S}}}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}{\sigma }_{s}^{2}\tag{14}\]

where \(\widetilde{{\mathrm{Var}}}({\mu }_{qt})\) is the variance of μqt without the measurement noise term, which is a function of the size and location of region of silence through the indices in \({\mathcal{S}}\). However, this variance term, as is, cannot be used to detect the silent sources, since some sources may be deep, and/or oriented in a way that they have weaker representation in the recorded signal yi, and consequently have smaller Var(μqt) and \(\widetilde{{\mathrm{Var}}}({\mu }_{qt})\).

(iii) Source contribution measure (β): To be able to detect the silent sources and distinguish them from sources, which inherently have different values of \(\widetilde{{\mathrm{Var}}}({\mu }_{qt})\), we need to normalize this variance term for each source by its maximum possible value, i.e., when there is no silent source (\({\widetilde{{\mathrm{Var}}}}^{{\mathrm{max}}}({\mu }_{qt})=\mathop{\sum }\nolimits_{i = 1}^{p}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}{\sigma }_{s}^{2}\)):

\[\overline{{\mathrm{Var}}({\mu }_{qt})}=\frac{\widetilde{{\mathrm{Var}}}({\mu }_{qt})}{{\widetilde{{\mathrm{Var}}}}^{{\mathrm{max}}}({\mu }_{qt})}=\frac{{\mathrm{Var}}({\mu }_{qt})-{\widetilde{{\bf{a}}}}_{q}^{T}{{\bf{C}}}_{z}{\widetilde{{\bf{a}}}}_{q}}{\mathop{\sum }\nolimits_{i = 1}^{p}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}{\sigma }_{s}^{2}}\tag{15}\]

where \(\overline{{\mathrm{Var}}({\mu }_{qt})}\) is the normalized variance of μqt, without noise, and it takes values between 0 (all sources silent) and 1 (no silent source). Note that it does not only depend on whether \(q\in {\mathcal{S}}\), where \({\mathcal{S}}\) is the set of indices of silent sources. In general, this normalization requires estimation of source variance \({\sigma }_{s}^{2}\), but under the assumption that sources have identical distribution, they all have identical variances. Therefore, \({\sigma }_{s}^{2}\) in the denominator of Eq. (15) is the same for all sources. We multiply both sides of Eq. (15) by \({\sigma }_{s}^{2}\) and obtain:

\[{\sigma }_{s}^{2}\,\overline{{\mathrm{Var}}({\mu }_{qt})}=\frac{{\mathrm{Var}}({\mu }_{qt})-{\widetilde{{\bf{a}}}}_{q}^{T}{{\bf{C}}}_{z}{\widetilde{{\bf{a}}}}_{q}}{\mathop{\sum }\nolimits_{i = 1}^{p}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}}\tag{16}\]

Therefore,

\[{\beta }_{q}:= \frac{\widehat{{\mathrm{Var}}}({\mu }_{qt})-{\widetilde{{\bf{a}}}}_{q}^{T}\widehat{{{\bf{C}}}_{z}}{\widetilde{{\bf{a}}}}_{q}}{\mathop{\sum }\nolimits_{i = 1}^{p}{({\widetilde{{\bf{a}}}}_{q}^{T}{\widetilde{{\bf{a}}}}_{i})}^{2}}\tag{17}\]

where βq is called the contribution of the qth source in the differential scalp signals in Y, which takes values between 0 (all sources silent) and \({\sigma }_{s}^{2}\) (no silent sources). In Eq. (17), \(\widehat{{\mathrm{Var}}}({\mu }_{qt})\) is an estimate of variance of μqt, and \(\widehat{{{\bf{C}}}_{z}}\) is an estimate of noise covariance matrix (see Supplementary Note E and Supplementary Note F to see how these estimates might be obtained).
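
Steps (i) to (iii) can be sketched in a few lines. This is a hedged illustration of our reading of the text, not the authors' implementation: each source's contribution is the estimated variance of the projection of the signals onto that source's column of the referenced forward matrix, minus the projected noise power, divided by the sum of squared inner products between that column and all columns. The function name `source_contributions` is hypothetical, and a plain sample variance is used here as a stand-in for the power-spectral-density estimate described in Supplementary Note F.

```python
from statistics import pvariance

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def source_contributions(A_tilde, Y, noise_var):
    """Contribution measure for every source q (low-resolution grid).

    A_tilde: (n-1) x p referenced forward matrix, as a list of rows.
    Y: (n-1) x T differential signals, as a list of rows.
    noise_var: the n-1 diagonal entries of the estimated noise covariance.
    """
    m, p, T = len(A_tilde), len(A_tilde[0]), len(Y[0])
    cols = [[A_tilde[i][q] for i in range(m)] for q in range(p)]
    betas = []
    for q in range(p):
        # mu_qt: projection of the measurement at time t onto column q
        mu = [dot(cols[q], [Y[i][t] for i in range(m)]) for t in range(T)]
        noise_term = sum(cols[q][i] ** 2 * noise_var[i] for i in range(m))
        denom = sum(dot(cols[q], cols[i]) ** 2 for i in range(p))
        betas.append((pvariance(mu) - noise_term) / denom)
    return betas

# Toy case: identity forward matrix, source 0 active, source 1 silent, no noise.
A_toy = [[1.0, 0.0], [0.0, 1.0]]
Y_toy = [[1.0, -1.0, 1.0, -1.0], [0.0, 0.0, 0.0, 0.0]]
betas = source_contributions(A_toy, Y_toy, noise_var=[0.0, 0.0])
print(betas)
```

In this idealized toy case the silent source receives a contribution of zero; with a realistic, non-orthogonal forward matrix the separation is less sharp, as noted above.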

(iv) Localization of silent sources in the low-resolution grid: In this step, we find the silent sources based on the βq values defined in the previous step, through a convex spectral clustering (CSpeC) framework as follows:

where \({{\boldsymbol{\beta }}}^{T}=[{\beta }_{1},\cdots ,{\beta }_{p}]\) is the vector of source contribution measures, \({\bf{g}}={[{g}_{1},\cdots ,{g}_{p}]}^{T}\) is a relaxed indicator vector with values between 0 (for silent sources) and 1 (for active sources), k is the number of silent sources, i.e., the size of the region of silence, λ is a regularization parameter, and L is a graph Laplacian matrix defined in Eq. (23) below. Based on the linear term in the cost function of Eq. (18), the optimizer finds the solution \({\mathbf{g}}^{\star}\) that (ideally) has small values for the silent sources, and large values for the non-silent sources. The ℓ1 norm convex constraint controls the size of region of silence in the solution. To make the localized region of silence contiguous, we have to penalize silent sources that are located far from each other. This is done using the quadratic term in the cost function in Eq. (18) and through a graph spectral clustering approach, namely, relaxed RatioCut partitioning25,26,27. We define a z-nearest neighbor undirected graph with the nodes to be the locations of the brain sources (i.e., vertices in the discretized brain model), and a weight matrix W defined as follows:

where the link weight is zero (no link) between node i and j, if node i is not among the z-nearest neighbors of j, and node j is not among the z-nearest neighbors of i. In Eq. (19), we choose z to be equal to the number of silent sources, i.e., k, and θ is an exponential decay constant, which normalizes the distances of sources from each other in a discretized brain model, by their variance as follows:

where \(N=\frac{p(p-1)}{2}\) is the total number of inter-source distances, and \(\overline{\delta {\bf{f}}}\) is an estimated average of these inter-source distances, given by:

The degree matrix of the graph (D) is given by:

\[{\bf{D}}={\mathrm{diag}}({d}_{1},\cdots ,{d}_{p}),\quad {d}_{i}=\mathop{\sum }\nolimits_{j = 1}^{p}{w}_{ij}\tag{22}\]

Using the degree and weight matrices defined in Eqs. (19) and (22), the graph Laplacian matrix, L in Eq. (18), is defined as follows:

\[{\bf{L}}={\bf{D}}-{\bf{W}}\tag{23}\]

Based on one of the properties of the graph Laplacian matrix63, we can write the quadratic term in the objective function of Eq. (18) as follows:

\[{{\bf{g}}}^{T}{\bf{L}}{\bf{g}}=\frac{1}{2}\mathop{\sum }\nolimits_{i = 1}^{p}\mathop{\sum }\nolimits_{j = 1}^{p}{w}_{ij}{({g}_{i}-{g}_{j})}^{2}\tag{24}\]

where \({\bf{g}}\in {{\mathbb{R}}}^{p}\). This quadratic term promotes the contiguity in the localized region of silence, e.g., an isolated node in the region of silence, which is surrounded by a number of active sources in the nearest neighbor graph, causes a large value in the quadratic term in Eq. (24), since wij has large value due to the contiguity, and the difference (gi − gj) has large value, since it is evaluated between pairs of silent (small gi)-active (large gj) sources.
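
The effect of the quadratic term can be checked numerically on a toy graph. The sketch below forms the graph Laplacian as the degree matrix minus the weight matrix and evaluates the quadratic form for two indicator vectors with the same number of silent nodes. It uses unit weights on a five-node chain, which is an assumption made for illustration and not the weight definition of Eq. (19); the names `laplacian` and `quad_form` are hypothetical.

```python
def laplacian(W):
    """Graph Laplacian L = D - W, with D the diagonal degree matrix."""
    n = len(W)
    return [[(sum(W[i]) if i == j else 0.0) - W[i][j] for j in range(n)]
            for i in range(n)]

def quad_form(L, g):
    """Contiguity penalty g^T L g."""
    n = len(g)
    return sum(g[i] * L[i][j] * g[j] for i in range(n) for j in range(n))

# Chain graph 0-1-2-3-4 with unit weights (toy weights, not Eq. (19)).
W = [[1.0 if abs(i - j) == 1 else 0.0 for j in range(5)] for i in range(5)]
L = laplacian(W)
contiguous = [0, 0, 1, 1, 1]  # silent nodes 0 and 1 are neighbors
scattered = [0, 1, 1, 0, 1]   # silent nodes 0 and 3 are isolated from each other
print(quad_form(L, contiguous), quad_form(L, scattered))
```

Both vectors mark two nodes as silent, but the scattered pattern cuts three edges instead of one and is therefore penalized three times as much in this example.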

For a given k, the regularization parameter λ in Eq. (18), is found through a grid-search and the optimal value (λ⋆) is found as the one which minimizes the total normalized error of source contribution and the contiguity term as follows:

In addition, the size of region of silence, i.e., k, is estimated through a grid-search as follows:

where (.)ii indicates the element of a matrix at the intersection of the ith row and the ith column, \(\widehat{{\mathrm{Var}}}({y}_{i})\) is the estimated variance of the ith differential signal in Y, and \({\widehat{\sigma }}_{{z}_{i}}^{2}\) is the estimated noise variance at the ith electrode location (see Supplementary Note E and Supplementary Note G to see how these might be estimated). In Eq. (26), Cs(k) is the source covariance matrix, when there are k silent sources in the brain. The estimate \(\hat{k}\) minimizes the cost function in Eq. (26), which is the squared error of difference between the powers of scalp differential signals, resulting from the region of silence with size k, and the estimated scalp powers based on the recorded data, with the measurement noise power removed. Under the assumption of identical distribution of sources, and lack of spatial correlation in the low-resolution source grid, and based on Eq. (10), we can rewrite Eq. (26) as follows:

where \({\widetilde{a}}_{ij}\) is the element of matrix \(\widetilde{{\bf{A}}}\) at the intersection of the ith row and the jth column, and \({\mathcal{S}}\) is the set of indices of k silent sources, i.e., indices of sources corresponding to the k smallest values in g⋆(λ⋆, k), which is the solution of Eq. (18). The second equality in Eq. (27) normalizes the power of electrode i using the maximum power of scalp signals for each i. This step eliminates the need to estimate σs in the low-resolution.

Finally, the region of silence is estimated as the sources corresponding to the \(\hat{k}\) smallest values in \({{\bf{g}}}^{\star }({\lambda }^{\star },{k})\). The 3D coordinates of the center-of-mass (COM) of the estimated contiguous region of silence in the low-resolution grid, i.e., \({{\bf{f}}}_{{\mathrm{COM}}}^{{\mathrm{low}}}\), is used as an initial guess for the silence localization in the high-resolution grid, as explained in the next step.
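
The final selection step of the low-resolution stage is simple enough to sketch directly. The snippet picks the indices of the k smallest entries of a relaxed indicator vector and computes the center-of-mass of the corresponding source locations. The function name `silent_region_and_com` and the toy values are hypothetical, and the COM is taken here as the unweighted mean of the selected locations, which is an assumption.

```python
def silent_region_and_com(g, locations, k):
    """Indices of the k smallest entries of g and the mean of their 3D locations."""
    idx = sorted(range(len(g)), key=lambda i: g[i])[:k]
    com = tuple(sum(locations[i][d] for i in idx) / k for d in range(3))
    return sorted(idx), com

# Toy relaxed indicator vector and source locations (hypothetical values, in mm).
g_star = [0.9, 0.1, 0.2, 0.95]
locs = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (9.0, 9.0, 9.0)]
region, com_low = silent_region_and_com(g_star, locs, k=2)
print(region, com_low)
```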

Iterative algorithm based on a high-resolution grid and correlated sources

In this step, we use a high-resolution source grid, where the sources can no longer be assumed to be uncorrelated. We estimate the source covariance matrix Cs based on the spatial exponential decay assumption in Eq. (9). In each iteration, based on the estimated source covariance matrix, the region of silence is localized using a CSpeC framework.

(i) Initialization: In this step, we initialize the source variance \({\sigma }_{s}^{2}\), the exponential decay coefficient in the source covariance matrix γ, and the set of indices of silent sources \({\mathcal{S}}\) as follows:

where \({{\mathcal{S}}}^{(0)}\) is simply the index of nearest source in the high-resolution grid to the COM of the localized region of silence in the low-resolution grid, i.e., \({{\bf{f}}}_{{\mathrm{COM}}}^{{\mathrm{low}}}\).

For r = 1, 2, ⋯ R, we repeat the following steps until either the maximum number of iterations (R) is reached, or COM of the estimated region of silence \({{\bf{f}}}_{{\mathrm{COM}}}^{{\mathrm{high}}^{(r)}}\) has converged, where \({{\bf{f}}}_{{\mathrm{COM}}}^{{\mathrm{high}}^{(0)}}\) is the location of the source with index \({{\mathcal{S}}}^{(0)}\) in the high-resolution grid. The convergence criterion is defined as below:

where δ is a convergence parameter for COM displacement through iterations, and \({{\bf{f}}}_{{\mathrm{COM}}}^{{\mathrm{high}}}\in {{\mathbb{R}}}^{3\times 1}\).
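
The outer loop of the high-resolution stage can be sketched as below. This is only a schematic: `step` stands for one full iteration (parameter estimation followed by localization) and is a hypothetical callable that maps the previous COM to a new COM. The stopping rule assumes that displacement is measured as the Euclidean distance between consecutive COM estimates.

```python
import math

def iterate_until_converged(step, com0, delta, max_iter):
    """Repeat `step` until the COM moves by at most `delta` or `max_iter` is hit."""
    com = com0
    for r in range(1, max_iter + 1):
        new_com = step(com)
        if math.dist(new_com, com) <= delta:
            return new_com, r
        com = new_com
    return com, max_iter

# Toy iteration: each step moves the COM halfway toward a fixed target point.
target = (10.0, 0.0, 0.0)

def toy_step(com):
    return tuple((c + t) / 2 for c, t in zip(com, target))

final_com, n_iter = iterate_until_converged(toy_step, (0.0, 0.0, 0.0),
                                            delta=1.0, max_iter=20)
print(final_com, n_iter)
```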

(ii) Estimation of \({\sigma }_{s}^{2}\) and γ: In this step, we estimate the source variance \({\sigma }_{s}^{2}\), and the exponential decay coefficient of source covariance matrix γ, based on their values in the previous iteration and the indices of silent sources in \({{\mathcal{S}}}^{(r-1)}\). We define \({{\bf{C}}}_{s}^{{\mathrm{full}}}\) as the source covariance matrix when there are no silent sources in the brain, and use it to measure the effect of region of silence on the power of each electrode. The source covariance matrix in the previous iteration (r − 1) is calculated as follows:

and \({{\bf{C}}}_{s}^{{\mathrm{full}}}\) is given by:

where there is no zero row and/or column, i.e., there is no silence. To be able to estimate \({\sigma }_{s}^{2}\) and γ based on the differentially recorded signals in Y, we need to find the electrodes that are the least affected by the region of silence. Based on assumption (v) in “Methods: Problem statement”, the region of silence is much smaller than the non-silent brain region, and some electrodes can be found on the scalp that are not substantially affected by the region of silence. We find these electrodes by calculating a power-ratio for each electrode, i.e., the power of the electrode when there is a silent region, divided by the power of the electrode when there is no region of silence in the brain, as follows:

where h is a vector with values between 0 (all sources silent) and 1 (no silent source). Using this power-ratio, we select the electrodes as follows:

where \({{\mathcal{S}}}_{{\mathrm{elec}}}\) is the indices of the top ϕ electrodes, which have the least power reduction due to the silent sources in \({\mathcal{S}}\). Based on the differential signals of the selected ϕ electrodes in Eq. (33), γ(r) and \({\sigma }_{s}^{(r)}\) are estimated as the least-square solutions in the following equation:
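
The electrode selection can be illustrated with a small sketch based on the verbal description above. For each electrode it computes the source-driven power with the silent rows and columns of the source covariance zeroed, divides by the power with the full covariance, and keeps the ϕ electrodes with the largest ratio. The function names, the toy forward matrix, and the identity covariance are hypothetical, and measurement noise is ignored here for simplicity.

```python
def electrode_power(a_row, C):
    """Source-driven power at one electrode: a_row^T C a_row."""
    p = len(a_row)
    return sum(a_row[j] * C[j][k] * a_row[k] for j in range(p) for k in range(p))

def least_affected_electrodes(A_tilde, C_full, silent, phi):
    """Indices of the phi electrodes with the largest power ratio h_i."""
    p = len(C_full)
    # Zero the rows and columns of the covariance that belong to silent sources.
    C_sil = [[0.0 if (j in silent or k in silent) else C_full[j][k]
              for k in range(p)] for j in range(p)]
    h = [electrode_power(row, C_sil) / electrode_power(row, C_full)
         for row in A_tilde]
    top = sorted(range(len(h)), key=lambda i: h[i], reverse=True)[:phi]
    return sorted(top), h

# Toy setup: 3 electrodes, 3 sources, uncorrelated unit-variance sources.
A_toy = [[1.0, 0.0, 0.0],
         [0.5, 1.0, 0.0],
         [0.0, 0.2, 1.0]]
C_toy = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
selected, h = least_affected_electrodes(A_toy, C_toy, silent={0}, phi=2)
print(selected, h)
```

Electrode 0 sees only the silent source, so its ratio drops to zero and it is excluded from the selection.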

(iii) Localization of silent sources in the high-resolution grid: Based on the correlatedness assumption of sources in the high-resolution grid, we modify the source contribution measure definition (from Eq. (17)) as follows:

where \({\beta }_{q}^{{\mathrm{high}}^{(r)}}\) takes values between 0 (all sources silent), and 1 (no silent source in the brain). The only difference between \({\beta }_{q}^{{\mathrm{high}}^{(r)}}\) in the high-resolution grid and βq in the low-resolution grid is in their denominators, which are essentially the variance terms in the absence of any silent source (\({\widetilde{{\mathrm{Var}}}}^{{{\max}}}({\mu }_{qt})\) in Eq. (15)). In βq, the denominator is divided by the source variance \({\sigma }_{s}^{2}\), to be able to calculate βq without estimation of \({\sigma }_{s}^{2}\). However, in the high-resolution grid, the denominator of \({\beta }_{q}^{{\mathrm{high}}^{(r)}}\) is simply \({\widetilde{{\mathrm{Var}}}}^{{\mathrm{max}}}({\mu }_{qt})\), which is calculated under the source correlatedness assumption and using the estimated \({{\bf{C}}}_{s}^{{\mathrm{full}}^{(r)}}\). Using the definition of source contribution measure \({\beta }_{q}^{{\mathrm{high}}^{(r)}}\) in the high-resolution grid, at iteration r, the contiguous region of silence is localized through a CSpeC framework, similar to the one defined in Eq. (18). However, we use the estimated source covariance matrix in each iteration to introduce a new set of constraints on the powers of the electrodes, which are less affected by the region of silence, i.e., the electrodes in \({{\mathcal{S}}}_{{\mathrm{elec}}}^{(r)}\), as defined in Eq. (33). Based on these power constraints, we obtain a convex optimization framework to localize the region of silence in the high-resolution brain model as follows:

where \({{\boldsymbol{\beta }}}^{{{\mathrm{high}}^{(r)}}^{T}}=[{\beta }_{1}^{{\mathrm{high}}^{(r)}},\cdots \ ,{\beta }_{p}^{{\mathrm{high}}^{(r)}}]\), \({\bf{g}}={[{g}_{1},\cdots ,{g}_{p}]}^{T}\), \({\boldsymbol{\zeta }}={[{\zeta }_{1},\cdots ,{\zeta }_{\phi }]}^{T}\), λ and ζi are regularization parameters, and \({\bar{{\bf{A}}}}_{i}\) is a diagonal matrix, with the elements of ith row of \(\widetilde{{\bf{A}}}\) on its main diagonal, defined as below:

In Eq. (36), ζi is chosen to be equal to the square of the residual error in (34), for each \(i\in {{\mathcal{S}}}_{{\mathrm{elec}}}\), i.e.,

In each iteration r, values of λ and k are found in a similar way as they are found in the low-resolution grid (see Eqs. (25) and (26)). However, to estimate k based on Eq. (26), in the high-resolution grid we use \({{\bf{C}}}_{s}(k)={{\bf{C}}}_{s}^{(r)}\), as is defined in Eq. (30). After each iteration, the set of silent indices in \({{\mathcal{S}}}^{(r)}\) is updated with the indices of the \(\hat{k}\) smallest values in the solution of Eq. (36), i.e., \({{\bf{g}}}^{\star (r)}({\lambda }^{\star },\hat{k},{\boldsymbol{\zeta }})\).

After convergence, i.e., when the convergence criterion is met (see Eq. (29)), the final estimate of region of silence is the set of source indices in \({{\mathcal{S}}}^{({r}_{\text{final}})}\).

Choosing the best reference electrode

The final solution \({{\mathcal{S}}}^{({r}_{\text{final}})}\) may change as we choose different EEG reference electrodes, which changes the matrix of differential signals of scalp Y and the forward matrix \(\widetilde{{\bf{A}}}\) in Eq. (7). The question is how to choose a reference electrode that gives us the best estimate of the region of silence. To address this question, we use an approach similar to the estimation of \(\hat{k}\), i.e., we choose the reference electrode that gives us the minimum scalp power mismatch. We define the power mismatch ΔPow as follows:

where both \(\widetilde{{\bf{A}}}\) and yi are calculated based on a specific reference electrode. ΔPow is the total squared error between the normalized powers of scalp differential signals, resulting from the region of silence with size \(\hat{k}\), and the estimated scalp powers based on the recorded data with a specific reference.

SilenceMap with baseline recordings

If we consider a hemispheric baseline or, more generally, have a baseline recording, the 2-step SilenceMap algorithm remains largely the same. In an ideal case where we have a baseline recording of scalp potentials, we simply compare the contribution of each source in the recorded scalp signals when there is a region of silence in the brain, with its contribution to the baseline recording. This results in a minor modification of SilenceMap. The definitions of source contribution measures in Eqs. (17) and (35), need to be changed as follows:

where βq is defined in Eq. (17) for the low-resolution grid, and in Eq. (35) for the high-resolution grid, and \({\beta }_{q}^{{\mathrm{base}}}\) is the corresponding contribution measure of source q in the baseline recording. However, if the baseline recording is not available for the silence localization (as it was not available in our dataset used in the Results), based on the assumption of hemispheric symmetry in “Methods: Problem statement” (see assumption (viii)), one can use a hemispheric baseline. The source contribution measure is defined in a relative way, i.e., each source’s contribution measure is calculated in comparison with the corresponding source in the other hemisphere, as follows:

where \({{\mathcal{S}}}^{{\mathrm{LH}}}\) is the set of indices of sources in the left hemisphere and \({{\mathcal{S}}}^{{\mathrm{RH}}}\) is the set of indices of sources in the right hemisphere. Sources whose indices are not in \({{\mathcal{S}}}^{{\mathrm{LH}}}\cup {{\mathcal{S}}}^{{\mathrm{RH}}}\) lie along the longitudinal fissure, which is defined as a strip of sources on the cortex with a specific width zgap. The index qm in Eq. (41) is the index of the mirror source of source q, i.e., the source corresponding to q in the other hemisphere.

Equation (41) reveals the advantage of having a baseline for the silence localization task, i.e., we can relax the identical distribution assumption of sources in the source contribution measure, which makes \(\tilde{\beta }\) robust against the violation of the identical distribution assumption of sources in the real world. The rest of the algorithm remains the same, as is explained in “Methods: SilenceMap without baseline recordings.”

To find the solution of the CSpeC optimization in Eqs. (18) and (36), we use CVX, a MATLAB package for specifying and solving convex programs64,65. In addition, the MATLAB nonlinear least-squares solver is used to find the solution of Eq. (34).

Time complexity of SilenceMap

The bottleneck of time complexity among the steps in our algorithm is the high-resolution convex optimization (see Eq. (36)). This is classified as a convex quadratically constrained quadratic program. However, the quadratic constraints in Eq. (36) are all scalar and each can be rewritten in the form of two linear constraints. This reduces the problem to a convex quadratic program, which can be solved either using semidefinite programming66 or second-order cone programming (SOCP)67. However, it is much more efficient to solve the QPs using SOCP rather than using the general solutions for SDPs68,69. Following the steps in ref. 69, we can write our problem in Eq. (36) as a SOCP with ν = 2p + 2ϕ + 1 constraints of dimension one, and one constraint of dimension p + 1, where p is the number of sources in the brain and ϕ is the number of selected electrodes in Eq. (33). Using the interior-point methods, the time complexity of each iteration is \({\mathcal{O}}({p}^{2}(\nu +p+1))\approx {\mathcal{O}}({p}^{3})\), where the number of iterations for the optimizer is upper bounded by \({\mathcal{O}}(\sqrt{\nu +1})={\mathcal{O}}(\sqrt{2p+2\phi +2})\)69. Therefore, the CSpeC framework for high resolution (see Eq. (36)) has the worst-case time complexity of \({\mathcal{O}}({p}^{3.5})\). Similarly, the low-resolution CSpeC framework (see Eq. (18)) has the same time complexity of \({\mathcal{O}}({p}^{3.5})\), since it only has 2ϕ fewer linear constraints in comparison with the quadratic program version of Eq. (36). It is important to mention that this time complexity is calculated without considering the sparsity of the graph Laplacian matrix (L) defined in Eq. (23). Exploiting such sparsity may reduce the computational complexity of solving the equivalent SOCP for our CSpeC framework70. The other steps of SilenceMap have lower degrees of polynomial time complexity (e.g., the least-squares solution in Eq. (34) with time complexity of \({\mathcal{O}}({2}^{2}\phi)\), where ϕ ≪ p).
Therefore, the general time complexity of SilenceMap is \({\mathcal{O}}({\mathrm{itr}}_{{\mathrm{ref}}}({p}^{3.5}+{\mathrm{it{r}}}_{{\mathrm{conv}}}.{\mathrm{it{r}}}_{k}.{\mathrm{it{r}}}_{\lambda }({p}^{3.5})))\approx {\mathcal{O}}({\mathrm{it{r}}}_{{\mathrm{ref}}}.{\mathrm{it{r}}}_{{\mathrm{conv}}}.{\mathrm{it{r}}}_{k}.{\mathrm{it{r}}}_{\lambda }({p}^{3.5}))\), including the number of iterations for finding the optimal regularization parameters (itrλ iterations for finding λ⋆ in Eq. (25), and itrk iterations for finding k⋆ in Eq. (26)), the required number of iterations for convergence of SilenceMap to a region of silence in the high-resolution step (itrconv), and the number of iterations to find the best reference electrode (itrref). It is worth mentioning that the time required to run SilenceMap depends on the resolution of the search grids for the parameters used in the algorithm (see Supplementary Table I in Supplementary Note A), the resolution of the cortical models used, and the convergence criterion defined (see Eq. (29)). We acknowledge that there is room for improvement of the implementation and the algorithm itself to obtain a faster silence localization tool, e.g., by exploiting the sparsity of the graph Laplacian matrix in solving Eq. (36), parallelizing the iterations of the algorithm, and exploring lower-cost clustering methods; this is left for future work.
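As a worked check of the \({\mathcal{O}}({p}^{3.5})\) bound for a single CSpeC solve, the per-iteration cost and the iteration bound quoted above can be multiplied out, using ν = 2p + 2ϕ + 1 and assuming ϕ ≪ p as stated:

\[
\nu +p+1=3p+2\phi +2={\mathcal{O}}(p)\;\Rightarrow \;{\mathcal{O}}({p}^{2}(\nu +p+1))={\mathcal{O}}({p}^{3}),\qquad {\mathcal{O}}(\sqrt{2p+2\phi +2})={\mathcal{O}}({p}^{0.5}),
\]

\[
{\mathcal{O}}({p}^{3})\cdot {\mathcal{O}}({p}^{0.5})={\mathcal{O}}({p}^{3.5}).
\]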

Modification of source localization algorithms for comparison with SilenceMap

To compare the performance of SilenceMap with the state-of-the-art source localization algorithms, namely, MNE, MUSIC, and sLORETA, we modified them for the silence localization task. These modifications largely consist of adding steps that select the silent sources based on the estimated source localization in each algorithm. They serve only to make the comparison fairer and to answer the question of whether small modifications to existing source localization algorithms suffice to localize silences. The details of these modifications are explained in this part.

Modified minimum norm estimation (MNE)

MNE is one of the most commonly used source localization algorithms15,21,22. In this algorithm, the brain source activities are estimated based on a minimal power assumption, and through the following regularization method:

where \(\widehat{{\bf{S}}}\) is the estimated matrix of source signals, \(\widetilde{{\bf{A}}}={\bf{MA}}\), Y is the matrix of scalp differential signals defined in Eq. (6), λ is the regularization parameter, and ∥.∥F denotes the Frobenius norm of a matrix. Equation (42) has the following closed-form solution:

where I is the identity matrix, and λ is obtained using a grid search based on the L-curve method71. The MNE algorithm is kept unchanged until this point. \(\widehat{{\bf{S}}}\), the estimated source activity across time, is then used to localize silences. For a fair comparison, we do so by using the two-step approach used in SilenceMap, i.e., we start from a low-resolution source grid and localize the region of silence, which is used as an initial guess for source localization in a high-resolution grid.
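For reference, the Tikhonov-regularized minimum-norm problem and its closed-form solution are commonly written as below. This is the textbook form, given on the assumption that it matches Eqs. (42) and (43) up to notation; the symbols are those defined in the surrounding text.

\[
\widehat{{\bf{S}}}=\mathop{\arg \min }\limits_{{\bf{S}}}\;\parallel {\bf{Y}}-\widetilde{{\bf{A}}}{\bf{S}}{\parallel }_{F}^{2}+\lambda \parallel {\bf{S}}{\parallel }_{F}^{2},\qquad \widehat{{\bf{S}}}={\widetilde{{\bf{A}}}}^{T}{(\widetilde{{\bf{A}}}{\widetilde{{\bf{A}}}}^{T}+\lambda {\bf{I}})}^{-1}{\bf{Y}}.
\]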

Low-resolution grid: In a low-resolution source grid, we localize the region of silence through the following steps: (i) We initialize the number of silent sources as \(\hat{k}={k}_{0}\); (ii) The squares of the elements in \(\widehat{{\bf{S}}}\) (\({\hat{s}}_{ij}^{2},\,\forall \,i=\{1,2,\cdots p\},\,\forall \,j=\{1,2,\cdots t\}\)) are calculated for source power comparison; (iii) For each time point j, we sort the estimated source powers \({\hat{s}}_{ij}^{2}\) in ascending order and choose the first \(\hat{k}\) corresponding sources, which are the sources with the minimum power at time j. We name the set of indices of these sources as \({{\mathcal{S}}}_{{\mathrm{MNE}}}^{j}\); (iv) Based on the repetition of sources in \({{\mathcal{S}}}_{{\mathrm{MNE}}}^{j}\), we calculate a histogram. Then this histogram is normalized and sorted in descending order (the source with the largest normalized population, equal to 1, has the first index). The normalized population of source q is shown as \({\tilde{\beta }}_{q}^{{\mathrm{MNE}}}\); (v) In this step, we find an estimate of the size of region of silence (\(\hat{k}\)). This is done by finding the knee point in the curve of \({\tilde{\beta }}_{q}^{{\mathrm{MNE}}}\) vs. q (see Fig. 6a). In a curve, the knee point is defined as the point where the curve has maximum curvature, i.e., the point where the curve is substantially different from a straight line72,73,74. To find the knee point in the curve of \({\tilde{\beta }}_{q}^{{\mathrm{MNE}}}\) vs. q, we define a measure of distance to the origin (\(q=0,{\,}{\tilde{\beta }}_{q}^{{\mathrm{MNE}}}=0\)) as follows:

where \({d}_{q}^{{\mathrm{origin}}}\) is the defined distance from the point (\(q,{\tilde{\beta }}_{q}^{{\mathrm{MNE}}}\)) on the curve to the origin (\(q=0,{\tilde{\beta }}_{q}^{{\mathrm{MNE}}}=0\)), and p is the total number of sources in the discretized brain model. Fig. 6b shows the calculated \({d}_{q}^{{\mathrm{origin}}}\) for the curve in Fig. 6a. We choose the closest point to the origin as the knee point (\(q=\hat{k}\)), where the index of this knee point \(\hat{k}\) is an estimate of k (see Fig. 6b).

a \({\tilde{\beta }}_{q}^{{\mathrm{MNE}}}\) is the normalized histogram of sources, sorted in descending order, which captures how frequently a source is among the \(\hat{k}\) sources with the minimum power over time. In this curve, the knee point is defined as the point with maximum curvature, i.e., the point where the curve is substantially different from a straight line; b Distances of points on the curve in a from the origin (\(q=0,{\tilde{\beta }}_{q}^{{\mathrm{MNE}}}=0\)). The index of the point with the minimum distance from the origin (\(\hat{k}\)) is chosen as an estimate of k.

(vi) In this step, we exploit the knowledge of contiguity of the region of silence and estimate the region based on the estimated number of silent sources \(\hat{k}\). First we choose the \(2\hat{k}\) sources with the minimum power over time, i.e., the \(2\hat{k}\) sources, which have maximum \({\tilde{\beta }}_{q}^{{\mathrm{MNE}}}\). Then we calculate the COM of the \(2\hat{k}\) selected sources in the low-resolution grid (\({{\bf{f}}}_{{\mathrm{MNE}}}^{{\mathrm{low}}}\)), and choose the \(\hat{k}\)-nearest neighbors of \({{\bf{f}}}_{{\mathrm{MNE}}}^{{\mathrm{low}}}\) as the estimated region of silence in the low-resolution grid.
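Steps (ii) to (v) above can be sketched in Python as follows. This is a minimal illustration, not the implementation used for the reported results: the function names are hypothetical, and the distance to the origin is assumed to be the Euclidean distance after scaling the index axis by p, i.e., \(\sqrt{{(q/p)}^{2}+{\tilde{\beta }}_{q}^{2}}\), which may differ from the exact definition of \({d}_{q}^{{\mathrm{origin}}}\).

```python
import math
from collections import Counter


def min_power_histogram(s_hat, k):
    """Steps (ii)-(iv): count how often each source is among the k weakest.

    `s_hat` is a list of p rows (sources), each a list of t time samples.
    Returns (source_index, normalized_count) pairs sorted in descending order.
    """
    p, t = len(s_hat), len(s_hat[0])
    counts = Counter()
    for j in range(t):
        # Sort sources by estimated power at time j, weakest first.
        by_power = sorted(range(p), key=lambda i: s_hat[i][j] ** 2)
        counts.update(by_power[:k])
    peak = max(counts.values())
    ranked = sorted(range(p), key=lambda i: counts[i], reverse=True)
    return [(i, counts[i] / peak) for i in ranked]


def knee_index(beta_sorted):
    """Step (v): 1-based index of the curve point closest to the origin.

    Assumed distance: sqrt((q / p)^2 + beta_q^2) for q = 1..p.
    """
    p = len(beta_sorted)
    dist = [math.hypot(q / p, b) for q, b in enumerate(beta_sorted, start=1)]
    return dist.index(min(dist)) + 1


# Toy estimate with p = 3 sources and t = 3 time points.
s_hat = [[0.1, 0.2, 0.1], [1.0, 0.05, 2.0], [3.0, 3.0, 3.0]]
print(min_power_histogram(s_hat, k=1))
print(knee_index([1.0, 0.9, 0.1, 0.05, 0.0]))
```

Scaling the index by p keeps both axes in [0, 1], so neither axis dominates the distance; without some such scaling the knee location would depend on the number of sources.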

High-resolution grid: We use the COM of the estimated region of silence in the low-resolution grid (\({{\bf{f}}}_{{\mathrm{MNE}}}^{{\mathrm{low}}}\)), as an initial guess and try to improve the localization performance in a high-resolution source grid. The steps are mainly the same as the steps used in the low-resolution grid, except in the last step (vi), where we use the COM of the estimated region of silence in the low-resolution grid, and choose the \(\hat{k}\)-nearest neighbors of \({{\bf{f}}}_{{\mathrm{MNE}}}^{{\mathrm{low}}}\) as the estimated region of silence in the high-resolution grid, where \(\hat{k}\) is the estimated size of region of silence in the high-resolution grid based on the knee point detection method in step (v).
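The final selection, computing the COM in the low-resolution grid and taking its \(\hat{k}\)-nearest neighbors in the high-resolution grid, can be sketched as below. The function names and coordinates are hypothetical, and plain Euclidean distance between 3D source locations is an assumption of this sketch.

```python
import math


def center_of_mass(points):
    """Mean position of a list of 3D source locations."""
    n = len(points)
    return tuple(sum(pt[d] for pt in points) / n for d in range(3))


def nearest_sources(grid, center, k):
    """Indices of the k grid sources closest to `center` (Euclidean distance)."""
    order = sorted(range(len(grid)), key=lambda i: math.dist(grid[i], center))
    return order[:k]


# Hypothetical low-resolution estimate and high-resolution grid (arbitrary units).
low_res_region = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
high_res_grid = [
    (0.9, 0.0, 0.0),
    (5.0, 5.0, 5.0),
    (1.2, 0.0, 0.0),
    (1.0, 0.5, 0.0),
    (-3.0, 0.0, 0.0),
]

f_low = center_of_mass(low_res_region)
print(nearest_sources(high_res_grid, f_low, k=3))
```

Because the region is rebuilt as a neighborhood of a single point, the result is contiguous by construction, which is how the contiguity assumption enters these modified baselines.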

Modified multiple signal classification (MUSIC)

MUSIC is a source localization algorithm, which is based on a sequential search of sources, rather than finding all sources at the same time19,20. In MUSIC, the singular value decomposition (SVD) of the matrix of scalp recording signals Y(n−1)×T (\(={{\bf{U}}}_{(n-1)\times (n-1)}{{\mathbf{\Sigma }}}_{(n-1)\times {\!}T}{{\bf{V}}}_{T\times {\!}T}^{T}\)) is used to reconstruct an orthogonal projection to the noise space of Y to quantify the contribution of each source in the recorded signal Y15. The MUSIC algorithm follows these steps for source localization: (i) We select the left singular vectors (columns of U), which correspond to the large singular values up to ρ% of the total energy of the matrix (\(\mathop{\sum }\nolimits_{i = 1}^{(n-1)}{{{\Sigma }}}_{ii}^{2}\)), where ρ is a constant. These selected singular vectors (Us) form a basis for the observation data; (ii) We construct an orthogonal projection matrix to the noise space of Y as \({{\bf{P}}}^{\perp }={{\bf{I}}}_{(n-1)\times (n-1)}-{{\bf{U}}}_{s}{{\bf{U}}}_{s}^{T}\). Using this matrix the MUSIC cost function is written as15:

where \({\widetilde{{\bf{a}}}}_{q}\) is the qth column of \(\widetilde{{\bf{A}}}\), and \({\beta }_{q}^{{\mathrm{MUSIC}}}\) is a measure of the contribution of source q in the noise space of the recorded scalp potentials in Y. The MUSIC algorithm is kept unchanged until this point. The next steps of the modified MUSIC algorithm, in both low-resolution and high-resolution grids, closely follow the last two steps in the modified MNE algorithm, and we use the measure of source contribution in MUSIC (\({\beta }_{q}^{{\mathrm{MUSIC}}}\)) instead of \({\widetilde{\beta }}_{q}^{{\mathrm{MNE}}}\), where a source with no contribution to the differential measured signal Y has \({\beta }_{q}^{{\mathrm{MUSIC}}}=1\). Therefore, the main difference between the MUSIC algorithm and the modified MUSIC is that in MUSIC, the contribution measure \({\beta }_{q}^{{\mathrm{MUSIC}}}\) is used to find the active sources, i.e., the sources with small \({\beta }_{q}^{{\mathrm{MUSIC}}}\) values, while for silence localization the sources with large \({\beta }_{q}^{{\mathrm{MUSIC}}}\) values are selected based on the contiguity assumption of the region of silence and using the knee point thresholding mechanism (step (v) in the modified MNE).
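The two MUSIC steps can be illustrated with a small Python sketch. It assumes the cost takes the common normalized form \(\parallel {{\bf{P}}}^{\perp }{\widetilde{{\bf{a}}}}_{q}{\parallel }^{2}/\parallel {\widetilde{{\bf{a}}}}_{q}{\parallel }^{2}\), which is consistent with a non-contributing source having a value of 1 but is not confirmed here as the exact cost function. It also takes the singular values and the selected left singular vectors as given rather than computing the SVD; the function names are hypothetical.

```python
def signal_rank(singular_values, rho):
    """Step (i): smallest number of leading singular values holding rho% of the energy."""
    energy = [s * s for s in singular_values]
    target = rho / 100.0 * sum(energy)
    running = 0.0
    for count, e in enumerate(energy, start=1):
        running += e
        if running >= target:
            return count
    return len(energy)


def noise_space_fraction(a_q, u_s):
    """Step (ii): fraction of the energy of column a_q lying outside span(u_s).

    `u_s` is a list of orthonormal vectors (the selected left singular vectors),
    so ||P_perp a||^2 = ||a||^2 - sum_i (u_i . a)^2.
    """
    total = sum(x * x for x in a_q)
    in_signal = sum(sum(u * x for u, x in zip(vec, a_q)) ** 2 for vec in u_s)
    return 1.0 - in_signal / total


# Toy example: the signal space is spanned by the first two coordinate axes.
u_s = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(signal_rank([3.0, 2.0, 1.0], rho=90))
print(noise_space_fraction([0.0, 0.0, 2.0], u_s))  # entirely in the noise space
print(noise_space_fraction([3.0, 0.0, 0.0], u_s))  # entirely in the signal space
```

In the modified algorithm, sources with values near 1 are the candidates for silence, whereas standard MUSIC looks for values near 0.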

Modified standardized low-resolution brain electromagnetic tomography (sLORETA)

We modify the sLORETA source localization algorithm, introduced in ref. 24, in the same way that we modified the MNE algorithm for silence localization. However, the minimum-norm solution requires an additional step of normalization by the estimated source variances. Since we assume that the orientations of dipoles in the brain are known, i.e., they are normal to the surface of the brain, following equation (22) in ref. 24, the estimated power of source activities in the brain based on the sLORETA algorithm is given by the following equation:

where \({\tilde{s}}_{it}\) is the ith element of the tth column in the minimum-norm solution \(\widetilde{{\bf{S}}}\), which is given by Eq. (43), and \({{\bf{C}}}_{s}^{{\mathrm{sLORETA}}}\) is defined as24: