Abstract

With the help of machine learning, electronic devices, including electronic gloves and electronic skins, can track the movement of human hands and perform tasks such as object and gesture recognition. However, such devices remain bulky and lack the ability to adapt to the curvature of the body. Furthermore, existing signal-processing models require large amounts of labelled data to recognize individual tasks for every user. Here we report a substrate-less nanomesh receptor that is coupled with an unsupervised meta-learning framework and can provide user-independent, data-efficient recognition of different hand tasks. The nanomesh, which is made from biocompatible materials and can be printed directly on a person’s hand, mimics human cutaneous receptors by translating electrical resistance changes from fine skin stretches into proprioception. A single nanomesh can simultaneously measure finger movements from multiple joints, providing a simple user implementation and low computational cost. We also develop a time-dependent contrastive learning algorithm that can differentiate between unlabelled motion signals. This meta-learned information is then used to rapidly adapt to various users and tasks, including command recognition, keyboard typing and object recognition.
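As a minimal sketch of the signal path described above, assuming a single-channel resistance stream: raw readings can be normalized to the relative resistance change (R - R0)/R0 against a resting baseline R0 and then cut into overlapping windows for a learning model. The baseline handling, window length, stride and the function names `relative_change` and `sliding_windows` are hypothetical illustrations, not the authors' preprocessing.

```python
from typing import List


def relative_change(resistance: List[float], r0: float) -> List[float]:
    """Convert raw resistance readings to relative change (R - R0) / R0.

    r0 is an assumed resting (unstretched) baseline resistance, in the same units.
    """
    if r0 <= 0:
        raise ValueError("baseline resistance r0 must be positive")
    return [(r - r0) / r0 for r in resistance]


def sliding_windows(signal: List[float], window: int, stride: int) -> List[List[float]]:
    """Split a 1-D signal into windows of `window` samples that start `stride` samples apart.

    Trailing samples that cannot fill a complete window are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, stride)]


if __name__ == "__main__":
    raw = [100.0, 101.0, 103.0, 106.0, 104.0, 100.0]  # hypothetical readings in ohms
    frames = sliding_windows(relative_change(raw, r0=100.0), window=4, stride=2)
    print(len(frames))  # 2
```

A stride smaller than the window yields overlapping frames, which draws more training samples from a single recording at the cost of correlated neighbours.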

Data availability

The collected finger datasets for various daily tasks performed in this study are available via GitHub at https://github.com/meta-skin/metaskin_natelec. Further data that support the plots within this paper and other findings of this study are available from the corresponding authors upon reasonable request.

Code availability

The source code used for time-dependent contrastive (TD-C) learning, rapid adaptation and the reported results is available via GitHub at https://github.com/meta-skin/metaskin_natelec.

Acknowledgements

Part of this work was performed at the Stanford Nano Shared Facilities (SNSF), supported by the National Science Foundation under award ECCS-2026822. K.K.K. acknowledges support from the National Research Foundation of Korea (NRF) for Post-Doctoral Overseas Training (2021R1A6A3A14039127). This work is partially supported by the NRF Grants (2016R1A5A1938472 and 2021R1A2B5B03001691).

Author information

Contributions

K.K.K., M.K., S.J., S.H.K. and Z.B. designed the study. K.K.K. and M.K. designed and performed the experiments. M.K. and K.K.K. developed the algorithms and analysed the data. B.T.N. and Y.N. conducted the experiments on the substrate’s properties. Jin Kim performed the biocompatibility tests. K.P. assisted with the sensor printing and setups. J.M., Jaewon Kim, S.H. and J.C. carried out the nanomesh synthesis. K.K.K., M.K., S.E.R., S.J., S.H.K., J.B.-H.T. and Z.B. wrote the paper and incorporated comments and edits from all the authors.

Ethics declarations

Competing interests

A US patent filing is in progress by Zhenan Bao and Kyun Kyu Kim.

Peer review

Peer review information

Nature Electronics thanks Nitish Thakor and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Soft sensors with intelligence.

Taxonomy of augmented soft sensors combined with machine intelligence.

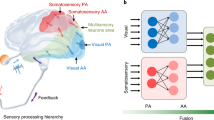

Extended Data Fig. 2 Learning robust motion representation from unlabeled data.

a, Schematic illustration of the wireless module that transfers multi-joint proprioceptive information. Random motions of the proximal interphalangeal (PIP), metacarpophalangeal (MCP) and wrist joints are collected. b, UMAP embeddings of the raw random finger motions and of the motion features extracted through TD-C learning.

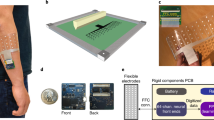

Extended Data Fig. 3 Wireless module for measuring resistance changes of the nanomesh.

a, Schematic illustration of the wireless module, which transfers proprioceptive information when simply attached above the printed nanomesh. An illustration and an image of the module are shown, with the flexible printed circuit board (FPCB), lithium polymer battery and connector indicated. The right image shows the back view of the module. A nanomesh connector (NC) is applied, and electrical contact is made simply by attaching the module to the printed nanomesh. b, Block diagram of the main components of the wireless module. The photograph shows real-time measurement through the module.

Extended Data Fig. 4 Model validation accuracies and transfer learning accuracies for sensor signal with and without substrate.

To investigate how the substrate-less property helps the model discriminate between subtle hand motions, the same amount of sensor signal is collected while a user types on numpad keys and interacts with six different objects. a, For conventional supervised learning, the collected dataset is divided into training and validation datasets at a ratio of 8:2. b, For transfer learning, we pretrain our learning model with TD-C learning on unlabelled random motion data and then use the first five-shot demonstrations for further transfer learning. Attached directly to the finger surface, the nanomesh without a substrate outperforms the sensor with a substrate across the different tasks and training conditions.
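The two training protocols in this caption can be sketched in a few lines, assuming each recording is a (signal, label) pair stored in recording order: a seeded shuffle followed by an 8:2 train/validation split for panel a, and selection of the first five demonstrations per class for the five-shot transfer set in panel b. The helper names and the seed are hypothetical; the released code in the GitHub repository may organize this differently.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_split(samples: Sequence[T], train_ratio: float = 0.8,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle with a fixed seed, then split train_ratio : (1 - train_ratio)."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    cut = int(round(len(items) * train_ratio))
    return items[:cut], items[cut:]


def first_k_shots(samples: Sequence[Tuple[T, str]], k: int = 5) -> List[Tuple[T, str]]:
    """Keep the first k (signal, label) pairs of every class, preserving recording order."""
    counts: Dict[str, int] = defaultdict(int)
    selected: List[Tuple[T, str]] = []
    for signal, label in samples:
        if counts[label] < k:
            selected.append((signal, label))
            counts[label] += 1
    return selected


if __name__ == "__main__":
    # Hypothetical recordings: (signal id, class label) in the order they were captured.
    recordings = [(i, "key_1" if i % 2 else "key_2") for i in range(40)]
    train, val = train_val_split(recordings)
    print(len(train), len(val))  # 32 8
    print(len(first_k_shots(recordings, k=5)))  # 10
```

Taking the first k examples in recording order, rather than a random k, mirrors a user giving a handful of demonstrations before the system is expected to work.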

Extended Data Fig. 5 Model performance analysis and ablation studies for components in our learning models.

a, Confusion matrix for numpad typing data for each typing stroke after 20 transfer-training epochs. b, Confusion matrix for object recognition tasks for individual signal frames after 20 transfer-training epochs. c, Detailed comparison between TD-C learning and supervised learning with last-layer modification. For a more precise comparison, we additionally trained a TD-C learning model on the labelled data used to train the supervised model, with the labels removed. Even with the same number of training samples, our learning framework significantly outperforms conventional supervised learning when the model is transferred to predict different tasks. When pretrained on a large set of random motion data, which is unlabelled and therefore easier to collect, the TD-C learning model shows higher accuracy than the other models at every transfer-training epoch. d, UMAP projection of latent vectors of labelled keypad typing data, projected by our model pretrained with the TD-C learning method. e, Ablation study comparing transfer accuracy with the time-wise dependency loss and with the original contrastive learning loss. f, Ablation study of the phase variable, comparing transfer-accuracy trends for models with and without phase discrimination when inferring different gestures in MFS.
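Panel e contrasts a time-wise dependency loss with the original contrastive learning loss. The exact TD-C objective is not given in this section, so the sketch below shows only a standard InfoNCE-style contrastive loss for one anchor, plus a hypothetical time-dependent variant in which the window immediately following the anchor in time is treated as its positive and all other windows in the batch as negatives. That positive-selection rule, the temperature of 0.1 and all function names are assumptions for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def info_nce(anchor: Vector, positive: Vector, negatives: List[Vector],
             temperature: float = 0.1) -> float:
    """InfoNCE loss for one anchor: -log of the softmax weight given to the positive."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[0]


def time_adjacent_loss(embeddings: List[Vector], temperature: float = 0.1) -> float:
    """Hypothetical time-dependent variant: the embedding of window t + 1 is the
    positive for window t, and every other window in the batch is a negative.
    Returns the mean loss over all anchors that have a successor."""
    if len(embeddings) < 2:
        raise ValueError("need at least two time-ordered embeddings")
    losses = []
    for t in range(len(embeddings) - 1):
        negatives = [e for j, e in enumerate(embeddings) if j not in (t, t + 1)]
        losses.append(info_nce(embeddings[t], embeddings[t + 1], negatives, temperature))
    return sum(losses) / len(losses)
```

The loss is small when an anchor is more similar to its positive than to any negative, and grows as a negative overtakes the positive, which is the property both the original and the time-wise variants rely on.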

Extended Data Fig. 6 Details of the learning model architecture.

a, Illustration of the detailed layer structure of the signal-encoding model. Temporal signal patterns are encoded through transformer encoders with the aid of an attention mechanism. The subsequent linear blocks, an MLP block and a phase block, use the encoded latent vectors to generate embedding vectors in our feature space and phase variables that distinguish active from inactive phases. b, Visualization of the positional embedding used to inform the model of the time-wise correlation between signal frames within a time window. Positional embedding allows the model to process temporal signal patterns in parallel using attention mechanisms, enabling fast encoding of complex signal patterns for real-time use.
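Panel b refers to a positional embedding that tells the encoder where each signal frame sits within the time window. Whether the authors use a fixed or a learned embedding is not stated here; as a sketch, the fixed sinusoidal encoding from the original transformer paper (Vaswani et al., 2017) can be computed and added to each embedded frame as follows. The function names and the frame representation are hypothetical.

```python
import math
from typing import List


def sinusoidal_positional_encoding(num_positions: int, dim: int) -> List[List[float]]:
    """Fixed sinusoidal encodings (Vaswani et al., 2017) as a num_positions x dim table.

    Column 2i holds sin(pos / 10000**(2i/dim)) and column 2i+1 the matching cos.
    """
    if dim <= 0 or dim % 2:
        raise ValueError("dim must be a positive even number")
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(0, dim, 2):  # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / dim))
            row.append(math.sin(angle))
            row.append(math.cos(angle))
        table.append(row)
    return table


def add_positions(frames: List[List[float]], table: List[List[float]]) -> List[List[float]]:
    """Add the encoding for time step t to the embedded signal frame at step t."""
    if len(frames) > len(table):
        raise ValueError("more frames than encoded positions")
    return [[x + p for x, p in zip(frame, table[t])] for t, frame in enumerate(frames)]
```

Because every position gets a distinct, deterministic pattern, attention can process all frames of a window at once and still recover their order, which is the parallelism the caption credits for fast real-time encoding.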

Extended Data Fig. 7 Ablation studies on different learning methods and different temporal signal data augmentations.

a, Cosine similarity for the supervised learning framework. b, Similarity based on TD-C learning. c, Examples of signal patterns before and after applying different data augmentations. d, Transfer accuracy comparison for learning models pretrained with different data augmentations, predicting user numpad typing data. Jittering, an augmentation that does not change signal amplitude or frequency, allows the model to generate more transferable feature spaces. e, Summary table of prediction accuracy for different data augmentations. The model trained with jittering shows on average 20% higher accuracy than models trained with the other data augmentations.
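Jittering, the augmentation favoured in panels d and e, is commonly implemented by adding small zero-mean Gaussian noise to every sample, which perturbs the waveform without rescaling it or shifting its frequency content. The sketch below assumes that definition and uses cosine similarity, as in panel a, to check that a jittered copy stays close to the original. The noise level `sigma` and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def jitter(signal: Sequence[float], sigma: float = 0.01, seed: int = 0) -> List[float]:
    """Jittering augmentation: add independent zero-mean Gaussian noise to each sample."""
    rng = random.Random(seed)
    return [x + rng.gauss(0.0, sigma) for x in signal]


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length signals (0.0 if either is all zeros)."""
    if len(u) != len(v):
        raise ValueError("signals must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    # Hypothetical smooth signal standing in for one window of nanomesh data.
    original = [math.sin(2 * math.pi * t / 50) for t in range(100)]
    augmented = jitter(original, sigma=0.02, seed=1)
    print(round(cosine_similarity(original, augmented), 3))
```

Seeding a private `random.Random` instance keeps augmentations reproducible without disturbing the global random state used elsewhere in training.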

Extended Data Fig. 8 Prediction of full keyboard.

a, Each hand is responsible for one half of the keyboard (left or right). b, Confusion matrix for the left side of the keyboard. c, Confusion matrix for the right side of the keyboard. (Transfer-training dataset: five-shot demonstrations for each key; accuracy: left 93.1%, right 93.1%.)
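The confusion matrices and accuracies reported for the keyboard halves can be computed from per-keystroke predictions as sketched below. Rows are true keys and columns predicted keys; overall accuracy is the diagonal sum divided by the total count. The key labels and predictions in the usage example are made up for illustration and are not the paper's data.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: Sequence[str]) -> List[List[int]]:
    """Count predictions: rows are true labels, columns predicted labels, ordered as `labels`."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix: List[List[int]]) -> float:
    """Fraction of predictions on the diagonal (correct) out of all predictions."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


def per_class_recall(matrix: List[List[int]]) -> List[float]:
    """Row-normalized diagonal: how often each true key was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


if __name__ == "__main__":
    keys = ["a", "s", "d"]  # hypothetical keys
    truth = ["a", "a", "s", "d"]
    preds = ["a", "s", "s", "d"]
    cm = confusion_matrix(truth, preds, keys)
    print(cm)  # [[1, 1, 0], [0, 1, 0], [0, 0, 1]]
    print(accuracy(cm))  # 0.75
```

Per-class recall exposes keys that are systematically confused with neighbours, which a single overall accuracy figure can hide.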

Supplementary information

Supplementary Information

Supplementary Figs. 1–26, Tables 1–3.

Supplementary Video 1

Learning of command signals with gesture accumulation.

Supplementary Video 2

Virtual keypad input recognition.

Supplementary Video 3

Virtual keyboard input recognition.

Supplementary Video 4

Virtual keyboard input recognition (sentence).

Supplementary Video 5

Object recognition by rubbing.

Supplementary Video 6

Direct nanomesh printing.

Supplementary Video 7

Nanomesh with wireless measuring module.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kim, K.K., Kim, M., Pyun, K. et al. A substrate-less nanomesh receptor with meta-learning for rapid hand task recognition. Nat Electron 6, 64–75 (2023). https://doi.org/10.1038/s41928-022-00888-7

DOI: https://doi.org/10.1038/s41928-022-00888-7

This article is cited by

- Variable selection for multivariate functional data via conditional correlation learning. Computational Statistics (2024)

- Wearable bioelectronics fabricated in situ on skins. npj Flexible Electronics (2023)