Abstract

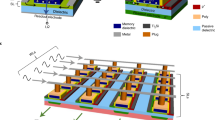

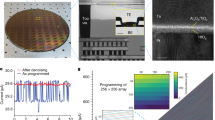

Memristors and memristor crossbar arrays have been widely studied for neuromorphic and other in-memory computing applications. To achieve optimal system performance, however, it is essential to integrate memristor crossbars with peripheral and control circuitry. Here, we report a fully functional, hybrid memristor chip in which a passive crossbar array is directly integrated with custom-designed circuits, including a full set of mixed-signal interface blocks and a digital processor for reprogrammable computing. The memristor crossbar array enables online learning and forward and backward vector-matrix operations, while the integrated interface and control circuitry allow mapping of different algorithms on chip. The system supports charge-domain operation to overcome the nonlinear I–V characteristics of memristor devices through pulse width modulation and custom analogue-to-digital converters. The integrated chip offers all the functions required for operational neuromorphic computing hardware. Accordingly, we demonstrate a perceptron network, sparse coding algorithm and principal component analysis with an integrated classification layer using the system.
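As a rough illustration of the charge-domain idea described above, the sketch below encodes each input as a pulse width at a fixed read voltage and sums the charge collected on each output line. Because the voltage never changes, only the device conductance at that one read voltage matters, which is how pulse width modulation can sidestep a nonlinear I–V curve. The function names, the 4-bit input resolution, the 1 ns unit pulse and the 0.3 V read voltage are illustrative assumptions, not values taken from the paper, and the model ignores wire resistance, sneak paths and ADC quantisation.

```python
# Minimal, idealised model of charge-domain vector-matrix multiplication
# on a crossbar. All names and default values are hypothetical.

def pulse_widths(x, bits=4, t_unit=1e-9):
    """Quantise inputs in [0, 1] to pulse widths in seconds."""
    levels = (1 << bits) - 1
    clipped = [min(max(v, 0.0), 1.0) for v in x]
    return [round(v * levels) * t_unit for v in clipped]


def forward_charge(G, x, v_read=0.3, bits=4, t_unit=1e-9):
    """Drive rows, integrate on columns: Q_j = v_read * sum_i G[i][j] * t_i."""
    t = pulse_widths(x, bits, t_unit)
    rows, cols = len(G), len(G[0])
    return [v_read * sum(G[i][j] * t[i] for i in range(rows))
            for j in range(cols)]


def backward_charge(G, y, v_read=0.3, bits=4, t_unit=1e-9):
    """Drive columns, integrate on rows: Q_i = v_read * sum_j G[i][j] * t_j."""
    t = pulse_widths(y, bits, t_unit)
    rows, cols = len(G), len(G[0])
    return [v_read * sum(G[i][j] * t[j] for j in range(cols))
            for i in range(rows)]


# Example: a 2x2 array of conductances in siemens (made-up values).
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
q_forward = forward_charge(G, [1.0, 0.0])    # uses G as stored
q_backward = backward_charge(G, [1.0, 0.0])  # uses the transpose of G
```

The same stored conductance matrix serves both directions: the forward pass reads it as is, and the backward pass reads its transpose simply by swapping which lines are driven and which are integrated.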

Data availability

The data that support the plots within this paper and other findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

The authors acknowledge inspiring discussions with C. Liu, T. Chou, P. Brown, M.A. Zidan and P.M. Sheridan. This work was supported in part by the Defense Advanced Research Projects Agency (DARPA) through award HR0011-13-2-0015, the National Science Foundation (NSF) through awards CCF-1617315 and 1734871, and the Applications Driving Architectures (ADA) Research Centre, a JUMP Centre co-sponsored by SRC and DARPA.

Author information

Contributions

F.C., J.M.C., S.H.L., Z.Z., M.P.F. and W.D.L. conceived the project and constructed the research frame. S.H.L. prepared the memristor arrays and performed device integration. J.M.C., Y.L., V.B., Z.Z. and M.P.F. designed the CMOS chip. F.C. and J.M.C. prepared the test hardware and software platform. F.C. and S.H.L. performed the network measurements and software simulations. W.D.L. directed the project. F.C., J.M.C., S.H.L., Z.Z., M.P.F. and W.D.L. analysed the experimental data and wrote the manuscript. All authors discussed the results and implications and commented on the manuscript at all stages.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–30, Supplementary notes 1–11

About this article

Cite this article

Cai, F., Correll, J.M., Lee, S.H. et al. A fully integrated reprogrammable memristor–CMOS system for efficient multiply–accumulate operations. Nat Electron 2, 290–299 (2019). https://doi.org/10.1038/s41928-019-0270-x

DOI: https://doi.org/10.1038/s41928-019-0270-x

This article is cited by

- Memristor-based storage system with convolutional autoencoder-based image compression network. Nature Communications (2024)

- Purely self-rectifying memristor-based passive crossbar array for artificial neural network accelerators. Nature Communications (2024)

- Memristive crossbar-based circuit design of back-propagation neural network with synchronous memristance adjustment. Complex & Intelligent Systems (2024)

- Hardware implementation of memristor-based artificial neural networks. Nature Communications (2024)

- Monolithic three-dimensional integration of RRAM-based hybrid memory architecture for one-shot learning. Nature Communications (2023)