Abstract

Reinforcement learning algorithms that use deep neural networks are a promising approach for developing machines that can acquire knowledge and solve problems without human input or supervision. At present, however, these algorithms are implemented in software running on relatively standard complementary metal–oxide–semiconductor digital platforms, where performance will be constrained by the limits of Moore’s law and the von Neumann architecture. Here, we report an experimental demonstration of reinforcement learning on a three-layer 1-transistor 1-memristor (1T1R) network using a modified learning algorithm tailored for our hybrid analogue–digital platform. To illustrate the capabilities of our approach for robust in situ training without the need for a model, we solved two classic control problems: the cart–pole and mountain car simulations. We also show that, compared with conventional digital systems in real-world reinforcement learning tasks, our hybrid analogue–digital computing system has the potential to achieve a significant boost in speed and energy efficiency.

Code availability

The computer code used in this study can be found at https://github.com/zhongruiwang/memristor_RL.

Data availability

The data that support the plots within this paper and other findings of this study are available from the corresponding authors upon request.

References

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction. Second Edition (MIT Press, Cambridge, 2018).

Lillicrap, T. P. et al. Continuous control with deep reinforcement learning. Preprint at https://arXiv.org/abs/1509.02971 (2015).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature. 518, 529–533 (2015).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Silver, D. et al. Mastering the game of Go without human knowledge. Nature 550, 354–359 (2017).

Chen, Y. et al. Dadiannao: A machine-learning supercomputer. In Proceedings of the 47th Annual IEEE/ACM International Symposium on Microarchitecture 609–622 (IEEE Computer Society, 2014).

Jouppi, N. P. et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 44th Annual International Symposium on Computer Architecture 1–12 (ACM, 2011).

Chen, Y.-H., Krishna, T., Emer, J. S. & Sze, V. Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE. J. Solid-St. Circ. 52, 127–138 (2017).

van de Burgt, Y. et al. A non-volatile organic electrochemical device as a low-voltage artificial synapse for neuromorphic computing. Nat. Mater. 16, 414–418 (2017).

Suri, M. et al. Phase change memory as synapse for ultra-dense neuromorphic systems: application to complex visual pattern extraction. In 2011 International Electron Devices Meeting (IEDM) 4.4.1–4.4.4 (IEEE, 2011).

Eryilmaz, S. B. et al. Brain-like associative learning using a nanoscale non-volatile phase change synaptic device array. Front. Neurosci. 8, 205 (2014).

Burr, G. W. et al. Experimental demonstration and tolerancing of a large-scale neural network (165,000 synapses) using phase-change memory as the synaptic weight element. IEEE Trans. Elect. Dev. 62, 3498–3507 (2015).

Ambrogio, S. et al. Unsupervised learning by spike timing dependent plasticity in phase change memory (PCM) synapses. Front. Neurosci. 10, 56 (2016).

Strukov, D. B., Snider, G. S., Stewart, D. R. & Williams, R. S. The missing memristor found. Nature 453, 80–83 (2008).

Jo, S. H. et al. Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 10, 1297–1301 (2010).

Yu, S., Wu, Y., Jeyasingh, R., Kuzum, D. & Wong, H. S. P. An electronic synapse device based on metal oxide resistive switching memory for neuromorphic computation. IEEE Trans. Elect. Dev 58, 2729–2737 (2011).

Ohno, T. et al. Short-term plasticity and long-term potentiation mimicked in single inorganic synapses. Nat. Mater. 10, 591–595 (2011).

Pershin, Y. V. & Di Ventra, M. Neuromorphic, digital, and quantum computation with memory circuit elements. Proc. IEEE 100, 2071–2080 (2012).

Lim, H., Kim, I., Kim, J. S., Hwang, C. S. & Jeong, D. S. Short-term memory of TiO2-based electrochemical capacitors: empirical analysis with adoption of a sliding threshold. Nanotechnology 24, 384005 (2013).

Sheridan, P., Ma, W. & Lu, W. Pattern recognition with memristor networks. In 2014 IEEE International Symposium on Circuits and Systems (ISCAS) 1078–1081 (IEEE, 2014).

La Barbera, S., Vuillaume, D. & Alibart, F. Filamentary switching: synaptic plasticity through device volatility. ACS Nano 9, 941–949 (2015).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal–oxide memristors. Nature 521, 61–64 (2015).

Hu, S. G. et al. Associative memory realized by a reconfigurable memristive Hopfield neural network. Nat. Commun. 6, 7522 (2015).

Serb, A. et al. Unsupervised learning in probabilistic neural networks with multi-state metal–oxide memristive synapses. Nat. Commun. 7, 12611 (2016).

Park, J. et al. TiOx-based RRAM synapse with 64-levels of conductance and symmetric conductance change by adopting a hybrid pulse scheme for neuromorphic computing. IEEE Elect. Dev. Lett. 37, 1559–1562 (2016).

Shulaker, M. M. et al. Three-dimensional integration of nanotechnologies for computing and data storage on a single chip. Nature 547, 74–78 (2017).

Hu, M. et al. Dot-product engine for neuromorphic computing: programming 1T1M crossbar to accelerate matrix-vector multiplication. In 53rd ACM/EDAC/IEEE Design Automation Conference (DAC) 1–6 (IEEE, 2016).

Hu, M. et al. Memristor-based analog computation and neural network classification with a dot product engine. Adv. Mater. 30, 1705914 (2018).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

Li, C. et al. Analogue signal and image processing with large memristor crossbars. Nat. Electron. 1, 52–59 (2018).

Le Gallo, M. et al. Mixed-precision in-memory computing. Nat. Electron. 1, 246–253 (2018).

Zidan, M. A. et al. A general memristor-based partial differential equation solver. Nat. Electron. 1, 411–420 (2018).

Nili, H. et al. Hardware-intrinsic security primitives enabled by analogue state and nonlinear conductance variations in integrated memristors. Nat. Electron. 1, 197–202 (2018).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Li, C. et al. Efficient and self-adaptive in-situ learning in multilayer memristor neural networks. Nat. Commun. 9, 2385 (2018).

Ambrogio, S. et al. Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67 (2018).

Bayat, F. M. et al. Implementation of multilayer perceptron network with highly uniform passive memristive crossbar circuits. Nat. Commun. 9, 2331 (2018).

Boybat, I. et al. Neuromorphic computing with multi-memristive synapses. Nat. Commun. 9, 2514 (2018).

Chen, W.-H. et al. A 65nm 1Mb nonvolatile computing-in-memory ReRAM macro with sub-16ns multiply-and-accumulate for binary DNN AI edge processors. In 2018 IEEE International Solid-State Circuits Conference (ISSCC) 494–496 (IEEE, 2018).

Jeong, Y., Lee, J., Moon, J., Shin, J. H. & Lu, W. D. K-means data clustering with memristor networks. Nano Lett. 18, 4447–4453 (2018).

Li, C. et al. Long short-term memory networks in memristor crossbar arrays. Nat. Mach. Intel. 1, 49–57 (2019).

Nandakumar, S. et al. Mixed-precision architecture based on computational memory for training deep neural networks. In 2018 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2018).

Wang, Z. et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 1, 137–145 (2018).

Choi, S., Shin, J. H., Lee, J., Sheridan, P. & Lu, W. D. Experimental demonstration of feature extraction and dimensionality reduction using memristor networks. Nano Lett. 17, 3113–3118 (2017).

Barto, A. G., Sutton, R. S. & Anderson, C. W. Neuronlike adaptive elements that can solve difficult learning control problems. IEEE Trans. Syst. Man Cybern. SMC-13, 834–846, (1983).

Sutton, R. S. Generalization in reinforcement learning: successful examples using sparse coarse coding. In Advances in Neural Information Processing Systems 8 1038–1044 (NIPS, 1996).

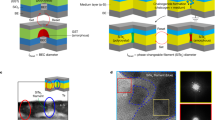

Jiang, H. et al. Sub-10 nm Ta channel responsible for superior performance of a HfO2 memristor. Sci. Rep. 6, 28525 (2016).

Tieleman, T. & Hinton, G. Lecture 6.5-rmsprop: divide the gradient by a running average of its recent magnitude. COURSERA: Neural Networks for Machine Learning 4, 26–31 (2012).

Choi, S. et al. SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations. Nat. Mater. 17, 335–340 (2018).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

LeCun, Y., Touresky, D., Hinton, G. & Sejnowski, T. A theoretical framework for back-propagation. In Proceedings of the 1988 Connectionist Models Summer School 21–28 (CMU, Pittsburgh, PA, Morgan Kaufmann, 1988).

Acknowledgements

This work was supported in part by the US Air Force Research Laboratory (AFRL) (Grant No. FA8750-15-2-0044), the Defense Advanced Research Projects Agency (DARPA) (Contract No. D17PC00304), the Intelligence Advanced Research Projects Activity (IARPA) (Contract 2014-14080800008) and the National Science Foundation (NSF) (ECCS-1253073). Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of AFRL. Part of the device fabrication was conducted in the clean room of the Centre for Hierarchical Manufacturing (CHM), an NSF Nanoscale Science and Engineering Centre (NSEC) located at the University of Massachusetts Amherst.

Author information

Contributions

J.J.Y. conceived the concept. J.J.Y., Q.X., Z.W. and C.L. designed the experiments. M.R. and P.Y. fabricated the devices. Z.W. and C.L. performed electrical measurements. W.S., D.B., Y.L., H.J., P.L., M.H., J.P.S., N.G., M.B., Q.W., A.G.B., Q.Q. and R.S.W. helped with experiments and data analysis. J.J.Y., Q.X., Z.W. and C.L. wrote the paper. All authors discussed the results and implications and commented on the manuscript at all stages.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figures 1–8, Supplementary Tables 1–7 and Supplementary Note 1.

Supplementary Video 1

The in situ online reinforcement learning process for the cart–pole environment with the 1T1R memristor crossbar array. The upper-left panel shows the evolution of the learning agent’s performance, measured as the summed rewards per game epoch. The performance clearly improved during the second half of the learning course. The middle-left panel shows the evolution of the loss function at each game step. The lower-left panel shows the game information, including the cart position and pole angle, at each time step. The remaining three panels of the first row show the real-time conductance maps of the memristor synapses of the three-layer neural network during learning. The differential memristor pair orientation is vertical in layers 1 and 2 and horizontal in layer 3 (that is, for layers 1 and 2, adjacent rows form differential pairs, while for layer 3, adjacent columns form differential pairs). The remaining three panels of the second row show the corresponding real-time weight matrices of the three-layer neural network based on the conductance readings of differential pairs. The remaining three panels of the last row show the gate voltage maps calculated by the RMSprop optimizer for the three-layer neural network at each time step.
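The differential-pair layout described above can be illustrated with a minimal Python sketch. It assumes that each weight is proportional to the difference between the two conductances of a pair, and that the first device of each pair is the positive one; the function name `weights_from_pairs`, the `scale` factor and the sign convention are hypothetical and are not taken from the authors' code.

```python
from typing import List

Matrix = List[List[float]]


def weights_from_pairs(g: Matrix, pair_axis: str, scale: float = 1.0) -> Matrix:
    """Collapse a conductance map into weights, w = scale * (G_plus - G_minus).

    pair_axis="rows": rows 2k and 2k+1 form a pair (layers 1 and 2 in the video).
    pair_axis="cols": columns 2k and 2k+1 form a pair (layer 3 in the video).
    Treating the first device of each pair as G_plus is an assumption.
    """
    if pair_axis == "rows":
        if len(g) % 2 != 0:
            raise ValueError("row pairing needs an even number of rows")
        # Subtract each odd-indexed row from the even-indexed row above it.
        return [
            [scale * (plus - minus) for plus, minus in zip(g[r], g[r + 1])]
            for r in range(0, len(g), 2)
        ]
    if pair_axis == "cols":
        if any(len(row) % 2 != 0 for row in g):
            raise ValueError("column pairing needs an even number of columns")
        # Subtract each odd-indexed column from the even-indexed column before it.
        return [
            [scale * (row[c] - row[c + 1]) for c in range(0, len(row), 2)]
            for row in g
        ]
    raise ValueError("pair_axis must be 'rows' or 'cols'")


# Toy 4 x 2 conductance map in arbitrary units (illustrative values only).
g_map = [[3.0, 1.0], [1.0, 4.0], [2.0, 2.0], [5.0, 0.0]]
w_rows = weights_from_pairs(g_map, "rows")  # 2 x 2 weight matrix
w_cols = weights_from_pairs(g_map, "cols")  # 4 x 1 weight matrix
```

Pairing along rows halves the number of rows, while pairing along columns halves the number of columns, which is why the weight matrices in the second row of panels are smaller than the conductance maps above them.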

Supplementary Video 2

The in situ online reinforcement learning process for the mountain car environment with the 1T1R memristor crossbar array. The upper-left panel shows the evolution of the learning agent’s performance, measured as the summed rewards per game epoch. The negative rewards clearly decreased in magnitude after the first epoch. The middle-left panel shows the evolution of the loss function at each game step. The lower-left panel shows the game information about the car position at each time step. The remaining three panels of the first row show the real-time conductance maps of the memristor synapses of the three-layer neural network during learning. The differential memristor pair orientation is vertical in layers 1 and 2 and horizontal in layer 3 (that is, for layers 1 and 2, adjacent rows form differential pairs, while for layer 3, adjacent columns form differential pairs). The remaining three panels of the second row show the corresponding real-time weight matrices of the three-layer neural network based on the conductance readings of differential pairs. The remaining three panels of the last row show the gate voltage maps calculated by the RMSprop optimizer for the three-layer neural network at each time step.
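For readers unfamiliar with the RMSprop optimizer mentioned in the video descriptions, the following is a minimal sketch of the textbook RMSprop update rule in plain Python. The hyperparameter values (`lr`, `decay`, `eps`) and the function name `rmsprop_step` are assumptions chosen for illustration; how the resulting weight update is converted into a transistor gate voltage is specific to the hardware and is not covered here.

```python
import math
from typing import List, Tuple


def rmsprop_step(
    w: List[float],
    grad: List[float],
    cache: List[float],
    lr: float = 0.01,     # assumed learning rate
    decay: float = 0.9,   # assumed decay of the running average
    eps: float = 1e-8,    # small constant to avoid division by zero
) -> Tuple[List[float], List[float]]:
    """One textbook RMSprop update; returns (new weights, new cache)."""
    # Running average of the squared gradient, kept per parameter.
    new_cache = [decay * c + (1.0 - decay) * g * g for c, g in zip(cache, grad)]
    # Divide each gradient by the root of its running average before stepping.
    new_w = [
        wi - lr * g / (math.sqrt(c) + eps)
        for wi, g, c in zip(w, grad, new_cache)
    ]
    return new_w, new_cache


# Two parameters whose gradients differ by a factor of 100.
weights, cache = rmsprop_step([1.0, 1.0], [2.0, 200.0], [0.0, 0.0])
```

Because each gradient is normalized by its own running magnitude, both parameters in the example move by almost the same amount on the first step even though their raw gradients differ by two orders of magnitude.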

About this article

Cite this article

Wang, Z., Li, C., Song, W. et al. Reinforcement learning with analogue memristor arrays. Nat Electron 2, 115–124 (2019). https://doi.org/10.1038/s41928-019-0221-6

DOI: https://doi.org/10.1038/s41928-019-0221-6
