Abstract

Infectious diseases are emerging globally at an unprecedented rate while global food demand is projected to increase sharply by 2100. Here, we synthesize the pathways by which projected agricultural expansion and intensification will influence human infectious diseases and how human infectious diseases might likewise affect food production and distribution. Feeding 11 billion people will require substantial increases in crop and animal production that will expand agricultural use of antibiotics, water, pesticides and fertilizer, and contact rates between humans and both wild and domestic animals, all with consequences for the emergence and spread of infectious agents. Indeed, our synthesis of the literature suggests that, since 1940, agricultural drivers were associated with >25% of all — and >50% of zoonotic — infectious diseases that emerged in humans, proportions that will likely increase as agriculture expands and intensifies. We identify agricultural and disease management and policy actions, and additional research, needed to address the public health challenge posed by feeding 11 billion people.


Main

Infectious diseases are emerging at an unprecedented rate with significant impacts on global economies and public health1. The social and environmental conditions that give rise to disease emergence are thus of particular interest, as are management approaches that might reduce the risk of emergence or re-emergence1. At the same time, undernutrition — the insufficient intake of one or more nutrients — remains a major source of global ill health2,3,4,5. Together, the unprecedented rate of infectious disease emergence and the need to sustainably feed the global population represent two of the most formidable ecological and public health challenges of the twenty-first century5,6,7,8,9 (Fig. 1), and they interact in complex ways (Fig. 2).

Fig. 1: Agricultural production can improve human health by reducing food prices and enhancing nutrition, which can increase resistance to infectious diseases. However, freshwater habitats established for irrigation, as well as other agricultural inputs, often increase the risk of vector-borne diseases, such as malaria and schistosomiasis. In general, rural residents are most vulnerable to these increases in infectious disease, whereas consumers some distance away derive most of the benefits from increased food production. To maximize human health given the roughly 11 billion people projected to inhabit the planet by 2100, society must minimize the adverse consequences of agricultural growth while maximizing the health benefits. Images by Kate Marx.

Although modern agricultural technologies have reduced hunger, improved nutrition and spared some natural ecosystems from conversion to agriculture, current global agricultural production and distribution infrastructure still leaves billions of people’s diets deficient in one or more crucial nutrients, with major consequences for global morbidity and mortality10, and these deficiencies are expected to worsen with climate change11,12,13,14. Credible estimates are available for specific nutrient shortfalls — for example, 1.6 billion people suffering iron- or vitamin B12-deficiency anemia15, 0.8 billion people with insufficient dietary energy (that is, calorie) intake16, 33% of pre-school age children and 15% of pregnant women at risk of vitamin A deficiency17 — but the world still lacks a rigorous, recent assessment of the population suffering shortfalls of any one or more nutrients, although the number is surely in the billions. By 2100, the United Nations projects that the global population will grow by nearly 4 billion, to exceed 11 billion people18. Meeting the United Nations’ Sustainable Development Goal, to “eradicate hunger” (https://sustainabledevelopment.un.org/) for this expanding human population will necessitate a large increase in food supplies, with major changes to agricultural production and distribution systems, infrastructure and social protection programmes6 (Fig. 3). Despite large and, in many cases, growing inequalities, the global population is on average expected to become richer, and historically, with greater affluence has come greater consumption of food products in general and more animal-sourced foods in particular, both of which further increase food demand and thus the requirement for agricultural expansion and/or intensification19. 

Agriculture already occupies about half of the world’s land and uses more than two-thirds of the world’s fresh water20, and recent studies have suggested that agricultural production might need to double or triple by 2100 to keep pace with projected population growth and food demand6,7,8,21 (Fig. 3). Meeting this demand using present agricultural production systems could require replacing >10⁹ hectares of natural ecosystems with agricultural production, approximately 7% of the global land area and an area larger than the continental United States, although continuous efficiency improvements in agriculture will compel re-evaluation of these estimates. In turn, this agricultural expansion could result in an estimated ~2-fold increase in irrigation, ~2.7-fold increase in fertilizer6,10,22 and 10-fold increase in pesticide use (Fig. 3).
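As a rough check on the land-area comparison above, 10⁹ hectares (one billion hectares) can be set against approximate reference areas. The two reference values in this sketch, about 149 million km² of global land and about 8.1 million km² for the contiguous United States, are standard approximations supplied here for illustration; they are not figures from this article.

```python
# Rough check of the land-area comparison in the text.
# ASSUMPTION: reference areas are approximate values supplied for
# illustration, not taken from the article.
HA_PER_KM2 = 100  # 1 km^2 = 100 hectares

projected_conversion_ha = 1e9            # ">10^9 hectares" from the text
global_land_ha = 149e6 * HA_PER_KM2      # ~1.49e10 ha of land (approximate)
contiguous_us_ha = 8.1e6 * HA_PER_KM2    # ~8.1e8 ha, contiguous US (approximate)

share_of_land = projected_conversion_ha / global_land_ha
ratio_to_us = projected_conversion_ha / contiguous_us_ha

print(f"share of global land area: {share_of_land:.1%}")
print(f"relative to contiguous US: {ratio_to_us:.2f}x")
```

Under these assumptions the conversion corresponds to about 6.7% of global land, in line with the "approximately 7%" quoted, and to roughly 1.2 times the area of the contiguous United States.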

Fig. 3: a–f, Past and projected increases in global human population (a), nitrogen (b), phosphorus (c), fertilizer and pesticide use (d), cropland area (e), and irrigated land area (f) and associated 95% prediction bands (light blue bands). ‘Nitrogen’ refers to the normalized estimated global amount of nitrogen nutrients in fertilizers produced (originally reported in thousands of tonnes, now in metric tonnes). ‘Phosphorus’ refers to the normalized estimated global amount of P₂O₅ nutrients in fertilizers produced (originally reported in thousands of tonnes, now in metric tonnes). For a, data were collected from the World Bank. For b–f, data were collected from the Food and Agriculture Organization of the United Nations and statistical models were developed based on the relationships between these variables and global human population density. After the best model was identified, year was substituted for human population density based on the fit in a. These projections should be evaluated with considerable caution because the models assume that human population size is the only factor that affects fertilizer and pesticide use and the amount of arable and irrigated cropland, when in reality, many factors affect these responses (for example, climate, diets, type of crops grown and so on). They merely illustrate that even an extremely parsimonious model seems to track past patterns tolerably well and thus could serve as a basis for coarse projections. See Supplementary Methods for details of the statistical models used to generate these figures and Supplementary Table 1 for coefficients and statistics for the best-fitting models.
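The Fig. 3 caption describes a two-stage projection: fit each response against global population, then substitute a population-versus-year fit so the response can be projected by year. The sketch below shows that substitution step under loud assumptions: both stages are simple straight-line least-squares fits, and the numbers are hypothetical toy values, not the FAO or World Bank series. The article's actual model forms are in its Supplementary Methods.

```python
# Two-stage projection sketch: response ~ population, population ~ year,
# then compose the fits. ASSUMPTIONS: linear model forms and toy data are
# hypothetical; they are not the article's models or data.

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

# Hypothetical toy series (NOT real data).
years = [1970, 1980, 1990, 2000, 2010]
population_bn = [3.7, 4.4, 5.3, 6.1, 6.9]
response = [30.0, 45.0, 60.0, 75.0, 95.0]  # e.g. a fertilizer index

# Stage 1: response as a function of population.
a_r, b_r = fit_line(population_bn, response)
# Stage 2: population as a function of year (the analogue of panel a).
a_p, b_p = fit_line(years, population_bn)

def project_response(year):
    """Substitute the year->population fit into the population->response fit."""
    population = a_p + b_p * year
    return a_r + b_r * population

for y in (2050, 2100):
    print(y, round(project_response(y), 1))
```

Because the population stage here is a straight line, long-range extrapolation is crude; the caption's own warning that population is not the only driver applies even more strongly to a toy model like this one.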

The challenges of feeding >11 billion people and managing infectious diseases intersect in several ways (Figs. 1 and 2). First, the expansion and intensification of agriculture are disproportionately occurring in tropical, developing countries6,22,23, where 75% of deaths are attributable to infectious diseases24, where the risk of disease emergence might be greatest, and where disease surveillance and access to health care, particularly for those infections that accompany extreme poverty, are most limited1,25. Second, agricultural expansion and intensification have historically come with massive habitat conversion, contamination with animal waste and increasing use of agricultural inputs, such as pesticides and antibiotic growth promoters. Beyond their direct adverse effects on human health26,27, agricultural biochemical inputs are known to have direct effects on emerging human infectious diseases, and can also serve as indirect drivers by contributing to the emergence of wildlife diseases28 that constitute important sources of emerging infections in humans1,29. However, the strengthening of agricultural production systems can also improve nutrition, which has pronounced benefits for combating many infectious diseases at the individual and population levels2,30.

Although concerns have been raised regarding the environmental impact of agricultural expansion and intensification6,8,9,10,22,31, their effects on infectious disease risk have not been synthesized. Here, we review both the beneficial and adverse effects of agricultural expansion and intensification on the transmission of human infectious diseases. We synthesize the pathways through which agricultural practices influence human infectious diseases, and vice versa, and identify opportunities to minimize the adverse consequences of agricultural growth while maximizing the human health benefits of agricultural development (Fig. 1).

Nutrition and infectious disease

Agricultural development can yield direct improvements to nutrition, and through several mechanisms, nutrition can be a critical determinant of infectious disease susceptibility and progression4,32 (Figs. 1 and 2). For example, immune responses are energetically costly33 and thus undernutrition often reduces the development and effectiveness of immune responses that can limit or clear infections. The relationship between infectious disease and nutritional status can function in reverse as well, because many parasitic infections place direct demands on host nutrition, causing undernutrition when food is limited34. In fact, some parasites, such as helminths, can even cause eating disorders, such as geophagy (desire to eat soil), bulimia and anorexia35. Tolerating an infection often requires rapid and effective repair of tissue damage caused by parasites, which is also costly. Hence, certain infections can cause undernutrition, which itself can compromise both resistance and tolerance to infections34,36.

By improving nutrition, agricultural development should facilitate combating many infectious diseases. For example, death rates from acute respiratory infections, diarrhoea, malaria and measles, diseases that on average kill roughly one child every 30 seconds (about 1 million per year)37, are much higher in children who suffer undernutrition than in those who do not3,4. In addition, poor maternal nutrition and associated impaired fetal growth are strongly associated with neonatal death from sepsis, pneumonia and diarrhoea4; undernourishment is a well-understood risk factor for tuberculosis38; and micronutrient deficiencies, such as vitamin A deficiency, have been linked to diarrhoea severity and malaria morbidity in some populations4. Although the traditional Green Revolution approach to food production has succeeded in reducing undernourishment arising from insufficient calorie and protein intake, it has been very slow in reducing micronutrient deficiencies39, which can have significant effects on defence against disease. To make matters worse, where violence, unrest and terrorism impede access to food, such as in parts of Africa and central Asia, morbidity and mortality attributed to communicable diseases can be further exacerbated2.
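The 30-second figure and the annual total quoted for these childhood deaths can be cross-checked with simple unit arithmetic. The sketch assumes a non-leap 365-day year.

```python
# Convert between "deaths per year" and "seconds between deaths".
# ASSUMPTION: 365-day (non-leap) year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s

deaths_per_year = 1_000_000
seconds_between_deaths = SECONDS_PER_YEAR / deaths_per_year

# Annual deaths implied by exactly one death every 30 seconds.
deaths_per_year_at_30s = SECONDS_PER_YEAR / 30

print(f"1 million/yr -> one death every {seconds_between_deaths:.1f} s")
print(f"one death every 30 s -> {deaths_per_year_at_30s:,.0f} deaths/yr")
```

One million deaths per year works out to one death about every 31.5 seconds, and one death every 30 seconds to about 1.05 million per year, so the two expressions agree to within roughly 5%.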

Although research suggests that improving nutrition will generally improve responses to infectious diseases, there may be important exceptions. For certain diseases, such as schistosomiasis and many respiratory infections, pathogenesis is a product of host immunity, often from hyperinflammation40,41. Thus, undernutrition can actually decrease symptoms by reducing the strength and pathogenicity of immune responses40. In addition, as nutrition improves, some parasites might proliferate faster than immunity can increase34, resulting in more, rather than less, morbidity; the classic example is that dietary iron intake can increase malaria-induced mortality42.

Rural economy, infrastructure and infectious disease

In this section, we review the links between rural economy, infrastructure and infectious disease.

Economic development

Economic development, especially agricultural development, has historically driven reductions in both infectious diseases and poverty across many settings. In fact, the poor benefit financially more from growth in the agricultural sector than from growth in the industrial or service sectors43,44. The early stages of economic development often involve the construction of infrastructure to facilitate food production and distribution, including roads, dams and irrigation networks43,44. More recently, the early stages of rural development often include rapid expansion of telecommunications and, to a lesser degree, electrification, both of which are promising but underutilized resources for disease monitoring and control in the developing world45. Other rural infrastructure that is more crucial to infectious disease prevention, such as safe water, sanitation and energy supplies, often follows or is developed concurrently43,44. In 43 developing countries, rural infrastructure that provided access to sanitation and safe water explained 20% and 37% of the difference in the prevalence of malnutrition and child mortality rates between the poorest and richest quintiles, respectively46. Moreover, clean water, sanitation and electricity can also facilitate the construction of schools and health clinics that can help to further reduce disease through education, prevention and treatment. Hence, if the history of the developed world repeats itself in the developing world, then it seems plausible that agricultural development necessary to feed 11 billion people might help to reduce infectious diseases by promoting economic development and rural infrastructure.

This hypothesis, however, rests on several important assumptions, including: (1) that it is possible to feed 11 billion people; (2) that the rate of economic development will match or exceed the pace of human population growth; and (3) that developing countries are not in an infectious disease-driven ‘poverty trap’, which is the notion that poverty increases the chances of acquiring and succumbing to disease, and chronic disease traps humans in poverty47,48,49,50,51. If agricultural development lags behind population growth or if clean water supplies, sanitation and electricity do not immediately follow road, dam and irrigation construction, then the prevalence of many infectious diseases could remain unchanged or even increase as human populations grow52.

Agricultural irrigation and freshwater redistribution

One major change in rural infrastructure that often accompanies agricultural development is the redistribution of fresh water, which has well-known consequences for the transmission of infectious diseases. Because 40% of crop production comes from only the 16% of agricultural land that is irrigated, and irrigated lands accounted for much of the increased yields experienced during the Green Revolution10, the potential for expanded irrigation infrastructure to exacerbate infectious diseases is a critical concern (Fig. 1). Importantly, agricultural development has caused both declines in certain types of freshwater habitat, such as wetlands, and increases in others, such as dams, reservoirs and irrigation schemes.
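The 40%/16% figures imply how much more crop output irrigated land yields per unit area than rainfed land. This sketch assumes that "crop production" is measured in a common unit across irrigated and rainfed land, an assumption the underlying statistic may only approximate.

```python
# Relative productivity of irrigated vs rainfed cropland implied by the text.
# ASSUMPTION: production on both land types is measured in a common unit.
irrigated_land_share = 0.16
irrigated_production_share = 0.40

# Share of production per share of land, for each land type.
irrigated_productivity = irrigated_production_share / irrigated_land_share
rainfed_productivity = (1 - irrigated_production_share) / (1 - irrigated_land_share)
relative_productivity = irrigated_productivity / rainfed_productivity

print(f"irrigated land is ~{relative_productivity:.1f}x as productive per hectare")
```

Under this assumption, irrigated land is about 3.5 times as productive per hectare as rainfed land, which is why expanding irrigation is such an attractive, and epidemiologically consequential, route to higher yields.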

One of the largest drivers of global wetland losses has been conversion to agriculture53, which has led to declines in diseases that rely on wetland habitats. For example, Japan’s successful eradication of schistosomiasis during the mid-twentieth century relied in part on conversion of wetlands to orchards in schistosomiasis-endemic areas54, although replacing oxen with horses as draft animals (horses are less susceptible to Schistosoma japonicum) is also thought to have contributed substantially54. Similarly, some of the earliest successes in malaria control at the turn of the twentieth century occurred in the southern United States, where wetland drainage, including for agricultural conversion, was one of a suite of anti-transmission strategies used by the Rockefeller Foundation in its landmark malaria control efforts55.

Although wetlands often decline with agriculture, dams, reservoirs and irrigation networks often increase, and this redistribution of fresh water has been widely associated with increases in some vectors and hosts of human pathogens56. For example, construction of the Aswan High Dam in Egypt and the accompanying irrigation network was associated with a rise in mosquito vectors and the disfiguring mosquito-borne disease lymphatic filariasis, commonly known as human elephantiasis57. Likewise, dam and irrigation construction resulted in increases in malaria in Sri Lanka and India56,58, and a sevenfold increase in malaria in Ethiopia59. A meta-analysis of 58 studies revealed that people living near irrigation schemes or in close proximity to large dam reservoirs had a significantly higher risk of schistosome infection than those who did not live near these water resources60, at least partly because of increased freshwater habitat for intermediate snail hosts. Similarly, a recent analysis of schistosomiasis case data from the past 70 years across sub-Saharan Africa showed far-reaching effects of dams and irrigation schemes, with increases in schistosomiasis risk extending up to hundreds of kilometres upstream from the dams themselves61. Hence, dams, reservoirs and irrigation networks related to agricultural expansion are likely to increase vector-borne and waterborne diseases unless water resources are developed in deliberate and coordinated ways to mitigate these risks62.

Urbanization, globalization and the movement of agricultural products

The world’s population became majority urban in 2007, and urbanization continues to outpace population growth, especially in developing countries. Urbanization necessarily extends food supply chains, as consumers reside further from farms and fisheries. Although only 23% of the food produced globally for human consumption is traded across international borders63, globalization is likewise elongating food supply chains. Furthermore, as the costs of international travel have declined over time, the movement of people across borders has increased. The expanded spatial scope and increased frequency, speed and volume of people and agricultural products moving within and among countries necessarily facilitate the spread of pathogens. For example, in Ecuador, there is evidence that the construction of new roads has affected the epidemiology of diarrheal diseases by changing contact rates among people as well as between people and contaminated water sources64. Each year in the United States, an estimated 48 million people already fall ill from food-borne infections, 128,000 are hospitalized and 3,000 die65, and imported foods (especially those from developing countries with poor sanitation infrastructure and weak food safety enforcement) have been associated with a rise in food-borne illness66. In addition, globalization is already thought to be a factor in the spread of human influenza viruses that spill over from poultry and swine67,68. Modernization of food supply chains, especially those linking farms in developing countries to high-income consumers in cities and high-income countries, has typically accelerated the diffusion of stricter food safety and quality standards69,70; nevertheless, as urbanization and globalization further extend food supply chains, enhanced monitoring for the spread of pathogens across transportation networks and strengthened food safety regulations will be needed.

Drug use, agrochemicals and infectious disease

In this section, we review the effects of agricultural industrialization.

Anti-parasitic and antibiotic drug use

As the global human population increases, there will almost certainly be an increase in high-density, industrialized livestock and aquaculture operations. Currently, such livestock operations are vulnerable to devastating losses of animals to disease. For instance, in just the last 25 years, an influenza A (H5N1) outbreak and a foot-and-mouth disease outbreak led to the destruction of more than 1.2 million chickens10 and 6 million livestock in China and Great Britain71, respectively, and a ‘mad cow disease’ epizootic led to the slaughter of 11 million cattle worldwide10. Similar scenarios have occurred in aquaculture. In the past three decades, there has been more than fourfold growth in industrial aquaculture worldwide72, a trend that should continue as food demand grows and wild fish and shellfish captures push the limits of renewable production73. Bacterial outbreaks in aquaculture are common, especially in developing countries where there are sanitary shortcomings72. In an effort to prevent these catastrophic disease-associated losses and improve animal growth, the agricultural industry uses a larger proportion of global antibiotic and anthelmintic production than human medicine, and most of the antibiotics are provided at non-therapeutic doses in the absence of any known disease74,75. In fact, although estimates are lacking for most countries in the world, in the United States, nearly nine times more antibiotics are given to animals than humans and, of the antibiotics given to animals, more than 12 times as many are used non-therapeutically as therapeutically74.
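The two US ratios above combine into overall shares. This sketch treats "nearly nine times" and "more than 12 times" as exactly 9:1 and 12:1, an assumption made only for the arithmetic, so the true shares may differ somewhat.

```python
# Combine the two US antibiotic-use ratios quoted in the text.
# ASSUMPTION: "nearly nine" and "more than 12" are treated as exact values.
animal_to_human = 9                  # antibiotics to animals : to humans
nontherapeutic_to_therapeutic = 12   # among antibiotics given to animals

animal_share = animal_to_human / (animal_to_human + 1)
nontherapeutic_share_in_animals = (
    nontherapeutic_to_therapeutic / (nontherapeutic_to_therapeutic + 1)
)
# Share of ALL antibiotics that go to animals for non-therapeutic use.
nontherapeutic_share_of_total = animal_share * nontherapeutic_share_in_animals

print(f"animal share of antibiotics: {animal_share:.0%}")
print(f"non-therapeutic share within animal use: {nontherapeutic_share_in_animals:.0%}")
print(f"non-therapeutic animal use, share of all antibiotics: {nontherapeutic_share_of_total:.0%}")
```

Under these assumptions, about 90% of antibiotics go to animals, about 92% of those are used non-therapeutically, and therefore roughly 83% of all antibiotics in this setting would be given to animals in the absence of diagnosed disease.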

This widespread use of antibiotics and anti-parasitic drugs (for example, anthelmintics) in industrialized agriculture and aquaculture could have important implications for human infectious diseases because it seems to be driving microbial resistance to these drugs, some of which are also used in human medicine72,74,76. For example, livestock are a primary source of antibiotic-resistant Salmonella, Campylobacter and Escherichia coli strains that are pathogenic to humans74. There is evidence that antibiotic-resistance genes acquired from aquaculture are being transferred to human systems and these pathogens have subsequently caused outbreaks72. Anthelmintic resistance is also rife among parasitic worms of livestock and is strongly suspected in parasitic worms of humans77. As livestock and aquaculture production expand to address growing food demands, it is likely that current antibiotics and anthelmintics will become less effective because of evolved resistance, and thus infectious diseases of domesticated animals and humans will be more difficult to treat75.

Pesticides, fertilizers and disease

Pesticides, particularly insecticides, have shared value for suppression of agricultural pest populations and disease-carrying insect vectors, such as mosquitoes and other flies. Because of the anticipated sharp increase in pesticide use by 2100 (Fig. 3), insecticide resistance is also expected to increase, with important implications for the control of diseases carried by insect vectors. Insecticides with widespread shared use for both crop protection and vector control include pyrethroid, organophosphate and organochlorine insecticides78. Several mosquito vectors of human and livestock pathogens have already evolved some resistance to these compounds78. If agricultural expansion and intensification are accompanied by an increased use of insecticides, vector resistance may become more common and the control of vector-borne diseases more challenging.
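Why greater insecticide exposure should speed the spread of resistance can be illustrated with a textbook single-locus selection recursion. This is a deliberately simplified haploid model, not a model from this article: mosquitoes are diploid, resistance often involves several genes and fitness costs, and the parameter names (`exposure`, `kill_rate`) and values are hypothetical.

```python
# Haploid selection sketch: resistant allele frequency over generations.
# ASSUMPTIONS (hypothetical, for illustration only): resistant insects survive
# exposure; susceptible insects die with probability `kill_rate` when exposed;
# `exposure` is the fraction of each generation that meets the insecticide;
# no fitness cost of resistance, no migration.

def resistance_trajectory(p0, exposure, kill_rate, generations):
    """Return resistant-allele frequencies for generations 0..generations."""
    w_resistant = 1.0
    w_susceptible = 1.0 - exposure * kill_rate
    p = p0
    freqs = [p]
    for _ in range(generations):
        mean_fitness = p * w_resistant + (1 - p) * w_susceptible
        p = p * w_resistant / mean_fitness
        freqs.append(p)
    return freqs

low_use = resistance_trajectory(p0=0.001, exposure=0.2, kill_rate=0.9, generations=30)
high_use = resistance_trajectory(p0=0.001, exposure=0.6, kill_rate=0.9, generations=30)
print(f"after 30 generations: low use {low_use[-1]:.3f}, high use {high_use[-1]:.3f}")
```

Even in this minimal model, tripling the exposed fraction moves resistance from a minority allele to near fixation over the same number of generations, which is the qualitative concern raised by shared agricultural and public-health use of the same insecticide classes.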

In addition to potentially driving vector resistance, increased pesticide use is likely to cause numerous other effects on host–parasite interactions. Pesticides can alter disease risk by modifying host susceptibility to parasites79,80,81. For example, many pesticides are immunomodulators that can increase infectious diseases of wildlife and humans82,83, or are endocrine disruptors of humans with potential downstream effects on immunity84. Even if pesticides do not directly affect immunity, detoxification of pesticides is energetically ‘expensive’ for the host, and thus pesticide exposure can reduce available energy resources for humans and zoonotic hosts to invest into parasite defences85,86.

Pesticides can also affect human disease risk by altering the densities of hosts or parasites or their natural enemies or mutualists87,88. Several pesticides can alter host behaviours or be directly toxic to hosts and parasites, which in turn can modify contact rates between parasites and human hosts. In addition, pesticides can alter community composition, which can indirectly affect behaviours or densities of intermediate and zoonotic hosts and parasites. Although chemical contaminants can be deadly to many free-living stages of parasites, both empirical trends and theoretical models suggest that stress associated with pesticide exposure can increase non-specific or generalist pathogens79,89,90. In addition to the impacts that pesticides can have on infectious diseases, they can also contribute to non-infectious diseases, such as cancers, birth defects, miscarriages and impaired childhood development91, which can further strengthen the poverty–infectious disease trap. Indeed, among African agricultural households, there is evidence of an association between increased pesticide use and increased time lost from work due to sickness27.

In addition to an increase in pesticides, the use of nitrogen- and phosphorus-based fertilizers is expected to increase threefold by 2050 to boost food production, and most of this increase will occur in tropical regions already rich with pathogens6,10,22. Although the effects of environmental nutrient enrichment on disease are indirect and complex, with some infections increasing and others decreasing, two reviews on the subject suggest that elevated nutrient levels more often than not exacerbate the impact of infectious disease92,93. For example, phosphorus enrichment can benefit mosquitoes that transmit malaria and West Nile virus92,93. In addition, nitrogen- and phosphorus-based fertilizer use can increase the number of snails that transmit flatworms that cause human schistosomiasis92,93.

Agriculture, biodiversity, habitat and infectious disease

Conversion of natural habitat to agriculture can increase the abundance of ecotones (boundaries between ecological systems), change species composition and reduce native biodiversity94,95. Ecotones play an important role in a number of emerging infectious diseases96,97, and reduced biodiversity that accompanies agricultural intensification can increase zoonotic disease emergence and can worsen already endemic diseases98,99,100. A recent global meta-analysis suggests that, based on the available literature, such biodiversity losses generally increase infections of wildlife and zoonotic infections of humans101. However, studies of the relationship between biodiversity change and infectious disease risk tend to focus on a single parasite or disease, often of non-human animals, which limits the ability to determine more broadly the effects of agricultural intensification on the overall burden of infectious disease in human populations102. Recent studies have found that the local loss of dense forests, largely from agricultural expansion, affected diarrheal diseases, acute respiratory infections and general fever in Cambodian children103 and infectious disease incidence in Nigerian children over a decade104,105. Proximate causes probably included reduced regulation of microbial contaminants in surface and ground waters, increased smoke from biomass burning, shifts in the ranges of insect vectors and decreased access to forest ecosystem services.

A major concern is the potential for positive feedbacks between poverty, biodiversity loss, soil degradation and infectious disease106,107. Several mechanisms can underpin these reinforcing relationships. The first arises from the reliance of poor rural people on limited biophysical assets for their livelihoods. As households clear forests, deplete soils or overharvest biota to meet near-term consumption needs, this resource degradation can compromise future economic productivity and increase disease risk. In addition, poverty, environmental degradation, biodiversity loss and disease can quickly become mutually reinforcing responses to natural shocks, such as droughts or floods, or to protracted conflict in a region. There are also more direct examples. For instance, global net losses of tropical forests remained roughly constant during the 1990s and 2000s (at approximately 6 million ha yr⁻¹, or 0.38% annually108; also see https://glad.umd.edu/projects/global-forest-watch and https://www.globalforestwatch.org), and forest clearing can lead to transmission of zoonotic disease by increasing contact with wild animals. Conversely, encroachment of people and domesticated animals into natural areas can introduce diseases to wildlife that can devastate wild populations and create reservoirs for the disease to be transmitted back to domesticated animals109 (see next section for further discussion and citations). Especially as the agricultural frontier expands, considerable attention must be paid to monitoring and managing these biodiversity–poverty–disease feedbacks106.
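The two forms in which the tropical forest loss rate is quoted (about 6 million ha per year and 0.38% per year) together imply a baseline forest area, and a constant rate implies a cumulative loss over the two decades. The sketch assumes both figures refer to the same baseline and that the absolute loss is constant across the 20 years; the baseline it prints is back-calculated here, not a figure reported in the article.

```python
# Back-calculate the forest area implied by the two quoted loss figures,
# and the cumulative loss over two decades at a constant absolute rate.
# ASSUMPTIONS: both figures share one baseline; constant absolute loss.
annual_loss_ha = 6e6       # ~6 million ha per year (from the text)
annual_loss_rate = 0.0038  # 0.38% per year (from the text)

implied_forest_area_ha = annual_loss_ha / annual_loss_rate
years = 20  # the 1990s and 2000s
cumulative_loss_ha = annual_loss_ha * years
cumulative_share = cumulative_loss_ha / implied_forest_area_ha

print(f"implied tropical forest area: {implied_forest_area_ha / 1e9:.2f} billion ha")
print(f"loss over {years} years: {cumulative_loss_ha / 1e6:.0f} million ha "
      f"({cumulative_share:.1%} of the implied baseline)")
```

The two figures are mutually consistent with a baseline of roughly 1.6 billion ha, and two decades at that pace amount to about 120 million ha, or roughly 7.6% of that baseline.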

Human diseases from domestic and wild animals

A central tenet of epidemiology is that the incidence of many infectious diseases should increase proportionally with host density because of increased contact rates and thus transmission among hosts110. Hence, increasing human and livestock densities could cause increases in infectious diseases unless investments in disease prevention are sufficient to prevent these increases. Given that most of the increase in human and livestock densities is expected to occur in developing countries where disease surveillance, pest control, sanitation, and medical and veterinary care are limited, there is little reason to expect that control efforts will keep up with the expected increases in infectious diseases associated with increasing densities of these hosts.
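The density tenet above corresponds to density-dependent (mass-action) transmission in a standard SIR model: new infections arise at rate βSI, so early incidence and the basic reproduction number R0 = βN/γ both scale with host density N. The sketch below computes R0 and the classic SIR final-size attack rate for two densities. The parameter values are hypothetical, and the scaling would not hold under frequency-dependent transmission, which many sexually transmitted and vector-borne infections follow more closely.

```python
import math

# SIR sketch with density-dependent (mass-action) transmission.
# ASSUMPTIONS: closed, well-mixed population; hypothetical beta and gamma.

def r0_mass_action(beta, density, gamma):
    """Basic reproduction number under mass action: beta * N / gamma."""
    return beta * density / gamma

def final_size(r0, tol=1e-12, max_iter=100_000):
    """Attack rate z solving z = 1 - exp(-R0 * z); 0.0 when R0 <= 1."""
    if r0 <= 1:
        return 0.0
    z = 1.0  # start above the root; the iteration then decreases to it
    for _ in range(max_iter):
        z_next = 1 - math.exp(-r0 * z)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z

beta, gamma = 0.0005, 0.1  # hypothetical transmission and recovery rates
for density in (300, 600):
    r0 = r0_mass_action(beta, density, gamma)
    print(f"density {density}: R0 = {r0:.2f}, attack rate = {final_size(r0):.3f}")
```

Doubling density doubles R0 here (from about 1.5 to 3), and the expected fraction of hosts eventually infected rises from under 60% to over 90%.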

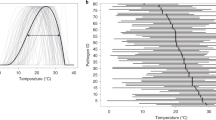

As host densities and thus transmission increase, theory suggests that parasite virulence should also increase under some circumstances111. When virulence of a pathogen is tied to its propagule generation within the body (such as occurs with many viral infections), the intermediate virulence hypothesis posits that an intermediate level of virulence maximizes parasite transmission because it balances producing many parasite offspring (increasing parasite fitness) with detriments to host survival due to pathology (decreasing parasite fitness). The balance in this trade-off determines parasite persistence. Hence, as host densities increase and transmission becomes more frequent, the cost of increased virulence declines, shifting the optimum towards higher virulence111. Consequently, for pathogens that experience this virulence trade-off, increases in human, crop and livestock densities have the potential to augment both the incidence and severity of infectious diseases.
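One simple way to see how higher host density can shift the optimum towards higher virulence is sketched below. The text does not specify a model, so the following are assumptions: selection favours the strain with the highest initial epidemic growth rate r(α) = β(α)·N − (μ + α), which is most appropriate for an expanding rather than endemic infection, and transmission follows a hypothetical concave trade-off β(α) = b√α. Setting dr/dα = 0 gives α* = (bN/2)², an intermediate optimum that rises with density N. If selection instead maximizes R0 = β(α)N/(μ + α) under mass action, N cancels and the optimum does not depend on density, consistent with the text's qualification that virulence should increase only under some circumstances.

```python
import math

# Virulence trade-off sketch. ASSUMPTIONS (hypothetical): selection maximizes
# the initial growth rate r = beta(alpha) * N - (mu + alpha), with a concave
# trade-off beta(alpha) = b * sqrt(alpha); b and mu values are illustrative.

def growth_rate(alpha, density, b=0.1, mu=0.02):
    """Initial epidemic growth rate of a strain with virulence alpha."""
    return b * math.sqrt(alpha) * density - (mu + alpha)

def optimal_virulence(density, b=0.1):
    """Analytic optimum from dr/dalpha = b*N / (2*sqrt(alpha)) - 1 = 0."""
    return (b * density / 2) ** 2

def grid_optimum(density, b=0.1, mu=0.02, step=1e-3, alpha_max=5.0):
    """Brute-force check of the analytic optimum over a grid of alpha values."""
    alphas = [i * step for i in range(1, int(alpha_max / step) + 1)]
    return max(alphas, key=lambda a: growth_rate(a, density, b, mu))

for density in (10, 20):
    print(f"N = {density}: analytic alpha* = {optimal_virulence(density):.3f}, "
          f"grid alpha* = {grid_optimum(density):.3f}")
```

With these illustrative values, doubling density raises the optimal virulence fourfold (from 0.25 to 1.0), and the grid search confirms that the optimum is intermediate rather than at either extreme.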

Feeding 11 billion people — and the associated increase of land converted to agricultural production and livestock grazing — is expected to cause a surge in human–livestock, human–wild animal and livestock–wild animal contact rates, increasing the likelihood of ‘spillover’ events, which are defined as pathogen transmission from a reservoir host population to a novel host population112,113. As natural ecosystems are converted to crop land or range land, interactions among humans, and domesticated and wild animals, could increase66. Furthermore, if developing countries follow a trajectory similar to developed countries, then their demand for meat will increase, further increasing human–livestock, human–wild animal and livestock–wild animal contact rates114. These interactions are crucial because 77% of livestock pathogens are capable of infecting multiple host species, including wildlife and humans115, and based on published estimates from the 2000s, over half of all recognized human pathogens are currently or originally zoonotic29,116,117, as are 60–76% of recent emerging infectious disease events1,29,117 (Fig. 4). Examples of recent zoonotic disease emergences with enormous impacts on either livestock, humans or both, many of which might have agricultural drivers, include avian influenza, salmonellosis (poultry and humans), Newcastle disease (poultry), swine flu, Nipah virus (pigs and humans), Middle East respiratory syndrome (camels and humans), bovine tuberculosis, brucellosis (mostly cattle and humans), rabies (dogs and humans), West Nile virus, severe acute respiratory syndrome (SARS) and Ebola (humans)1,113.

To quantify the relationship between agricultural factors and disease emergence in humans through time, we used the human disease emergence database of Jones et al.1. We classified land-use change, food industry and agricultural industry as agricultural drivers of human disease emergence (see Supplementary Methods). These analyses revealed that agricultural drivers were associated with 25% of all diseases and nearly 50% of zoonotic diseases that emerged in humans since 1940 (Fig. 5). These values would be even higher if the use of antimicrobial agents were also counted as an agricultural driver of human disease emergence, given that agricultural uses of antibiotics outpace medical uses in the developed world by nearly nine to one74,118.

a,b, Agricultural drivers were associated with 25% of all (a) and nearly 50% of zoonotic (b) diseases that emerged in humans since 1940. For both panels, we use the definition of a zoonotic EID provided by Jones et al.1: a disease that emerged via transmission from non-human animals (other than vectors) to humans. See Supplementary Methods for the methods used to develop this figure.
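Proportions like those in Fig. 5 come from a simple tally once each emergence event is tagged with its drivers. The following sketch assumes a hypothetical record layout (an event name, a zoonotic flag and a set of driver labels) and uses invented toy records. It is not the Jones et al.1 database or the authors' Supplementary Methods code. An event counts as agriculturally driven if any of its drivers falls in the agricultural set, and the share is computed both over all events and over zoonotic events only.

```python
from dataclasses import dataclass

# Driver labels the text treats as agricultural (label strings are hypothetical).
AGRICULTURAL_DRIVERS = frozenset({"land-use change", "food industry", "agricultural industry"})

@dataclass(frozen=True)
class EmergenceEvent:
    name: str             # hypothetical event identifier
    zoonotic: bool        # emerged via transmission from non-human animals
    drivers: frozenset    # driver labels attributed to the event

def agricultural_share(events, drivers=AGRICULTURAL_DRIVERS):
    """Return (share of all events, share of zoonotic events) with >= 1 driver in `drivers`."""
    def share(subset):
        if not subset:
            return float("nan")  # undefined for an empty subset
        hits = sum(1 for event in subset if event.drivers & drivers)
        return hits / len(subset)

    zoonotic_events = [event for event in events if event.zoonotic]
    return share(events), share(zoonotic_events)

# Invented toy records for illustration only; not data from Jones et al.
events = [
    EmergenceEvent("A", True, frozenset({"land-use change"})),
    EmergenceEvent("B", True, frozenset({"international travel"})),
    EmergenceEvent("C", True, frozenset({"food industry", "human behaviour"})),
    EmergenceEvent("D", False, frozenset({"antimicrobial use"})),
    EmergenceEvent("E", False, frozenset({"human behaviour"})),
]

overall, zoonotic = agricultural_share(events)
with_antimicrobials, _ = agricultural_share(events, AGRICULTURAL_DRIVERS | {"antimicrobial use"})
print(overall, zoonotic, with_antimicrobials)
```

For the five toy records, the agricultural share is 2/5 overall and 2/3 among zoonoses. Passing a driver set that also includes "antimicrobial use" raises the overall share to 3/5. This matches the direction of change described for counting antimicrobial use as an agricultural driver.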

Several factors that facilitate spillover events associated with disease emergence have been identified113. Spillover appears to be a function of the frequency, duration and intimacy of interactions between a reservoir and a novel host population112. For example, influenza is believed to have jumped from horses to humans soon after humans domesticated horses. It then made additional jumps to humans from other domesticated animals, such as poultry and swine119. Similarly, influenza incidence dropped when free-range turkeys were prevented from interacting with wild birds, and Nipah virus incidence dropped when interactions between domesticated pigs and wild fruit bats were reduced, suggesting wild-animal sources of these infections120,121. In sub-Saharan Africa, the high frequency and duration of environmental interactions between different species of Schistosoma worms infecting humans and cattle has undoubtedly facilitated their hybridization, and these hybrid schistosomes are more virulent to humans than their non-hybrid counterparts122. These factors associated with spillover and disease emergence could be targeted to reduce transmission potential as human populations and agricultural productivity increase.
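One way to see how frequency, duration and intimacy combine is a simple independent-contacts calculation. This is a sketch under strong assumptions: contacts are independent, and each carries a constant per-hour transmission hazard that stands in for intimacy. The parameter values and function names are hypothetical, and the model is not taken from refs 112 or 113. Under these assumptions, the probability of at least one spillover after n contacts of duration d with hazard h is 1 − exp(−h·d·n). Halving contact frequency or contact duration therefore has the same effect.

```python
import math

def per_contact_probability(duration_h, hazard_per_h):
    """Chance that one contact transmits, assuming a constant hazard over its duration."""
    return -math.expm1(-hazard_per_h * duration_h)  # 1 - exp(-h*d), accurate for small h*d

def spillover_probability(n_contacts, duration_h, hazard_per_h):
    """Chance of at least one spillover across independent contacts."""
    p = per_contact_probability(duration_h, hazard_per_h)
    return 1.0 - (1.0 - p) ** n_contacts

# Hypothetical scenario: 100 one-hour contacts versus 10 (e.g. after separating animals).
baseline = spillover_probability(100, 1.0, 1e-3)
separated = spillover_probability(10, 1.0, 1e-3)
print(f"baseline {baseline:.4f}, separated {separated:.4f}")
```

Reducing contacts tenfold, as a stand-in for separating domestic and wild animals, cuts the spillover probability roughly tenfold when that probability is small, because 1 − exp(−x) ≈ x for small x.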

Exploitation of natural resources and infectious disease

Given that most human population growth is expected to occur in developing, tropical countries, hunting, fishing and gathering pressures will almost certainly rise in subsistence economies13,123. These pressures might initially increase contact rates between humans and wildlife. This could increase disease risk, because bushmeat hunting is already thought to be responsible for several emerging human infectious diseases116. To make matters worse, bushmeat hunting is the second biggest threat to biodiversity after habitat loss, and biodiversity losses can contribute to disease emergence101. However, if humans overexploit these natural resources, then human–wildlife interactions could eventually decline as species go extinct, which could reduce spillover events.

Changes in the composition of biodiversity associated with overhunting and overfishing can also have complex indirect effects on disease risk mediated by species interactions. For example, empirical evidence supports the notion that defaunation of large vertebrates in Africa can increase zoonotic disease risk by removing the predators and competitors (large herbivores) of rodents, a common reservoir of human pathogens124. Similarly, overfishing of snail-eating cichlids in Lake Malawi seems to have been a causal factor in the increases in snail populations and human schistosomiasis there125. Investigations of the effects of overexploitation on infectious diseases remain in their infancy, but the consequences for human populations could be profound.

Effects of human infectious diseases on food production

Up to this point, we have focused on the effects of feeding 11 billion people on infectious diseases, but the relationship is bidirectional. Human infectious diseases can also impede the agricultural and economic development necessary to feed the growing human population (Fig. 2). For example, the overuse of antibiotics, anthelmintics and pesticides to prevent diseases is driving resistance to these chemicals, which will compromise future crop, livestock and aquaculture production. In sub-Saharan Africa, some areas had a higher historical abundance of tsetse flies, the vectors of the Trypanosoma parasites (including Trypanosoma brucei) that cause African trypanosomiasis in humans (sleeping sickness) and in cattle. These areas experienced greater lags in adopting animal husbandry practices (using animals for fertilizer and labour in agricultural enterprise). These lags hindered agricultural development and prosperity in Africa both long before and long after European colonization126. In rural subsistence communities, any source of ill health can significantly affect people’s productivity, yields and agricultural output47,48,49. Human immunodeficiency virus (HIV)/AIDS, for instance, has reduced average life expectancy in sub-Saharan Africa by 5 years since 1997. In addition, a Kenyan study found that crop production by rural subsistence-farming families dropped 57% after the death of a male head of household127. Many neglected tropical diseases (NTDs) impose devastating productivity losses on affected people that can impede agricultural development from local to national to regional scales49. Some low-income communities appear mired in a poverty–disease trap, in which ill health depresses productivity and income while poverty in turn sustains exposure to infection. These communities thus might require substantial investment in health systems to promote the agricultural and economic growth necessary to pull them out of the poverty–disease cycle47,48,49,50,51.
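The self-reinforcing logic of a poverty–disease trap can be illustrated with a deliberately minimal toy map. It is far simpler than the models in refs 47 and 48 and is not taken from them. The map assumes, hypothetically, that next season's output equals a maximum attainable output multiplied by the share of the workforce healthy enough to farm. It further assumes that this share rises steeply (sigmoidally) with current output, for example through access to treatment. With the invented parameters below, the map has two stable equilibria. Households starting just below the threshold slide towards low output, while those just above it climb towards high output.

```python
def healthy_share(output, h_min=0.05, half_point=5.0, steepness=4):
    """Hypothetical share of the workforce free of debilitating infection.

    Rises sigmoidally with current output (e.g. via access to treatment).
    """
    x = output ** steepness
    return h_min + (1.0 - h_min) * x / (half_point ** steepness + x)

def next_output(output, max_output=10.0):
    """Next season's output: attainable maximum scaled by workforce health."""
    return max_output * healthy_share(output)

def long_run_output(start, seasons=200):
    """Iterate the map for many seasons from a starting output level."""
    output = start
    for _ in range(seasons):
        output = next_output(output)
    return output

for start in (4.0, 5.5):
    print(f"start {start}: long-run output {long_run_output(start):.2f}")
```

Starting values of 4.0 and 5.5 straddle the unstable threshold (near 4.7 with these parameters) and settle near 0.5 and 9.25, respectively. In a model of this kind, investments that raise the healthy share at low output can move a community past the threshold.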

Advances and outlooks

The goal of identifying potential changes to infectious disease risk associated with feeding the growing human population is not only to draw attention to this important current and future problem. It is also to encourage preparation for these changes by stimulating agricultural- and disease-related research, management and policy that could maximize the human health benefits of agricultural development128. One urgent challenge is the problem of antibiotic, anthelmintic and pesticide resistance. Analyses demonstrate that, in some cases, improvements in growth and feed consumption can be achieved by improved hygiene instead of antibiotics76. Indeed, the use of antibiotics as growth promoters was banned in the European Union in 2006129. To curb antimicrobial consumption in food animal production, Van Boeckel et al.75 suggest: (1) enforcing global regulations to cap antimicrobial use, (2) adhering to nutritional guidelines, leading to reduced meat consumption, and (3) imposing a global user fee on veterinary antimicrobial use. In addition, national and international policies based on best management practices should be developed and implemented that document when and how antibiotics should be used, as recommended by the World Health Organization, the World Organisation for Animal Health (OIE) and the Food and Agriculture Organization. Agencies should also be established to monitor antibiotic use, mandate reporting and enforce these policies76. Finally, given that host genetic variability can reduce disease risk110, large-scale industrial livestock operations could introduce more genetic variability into their artificially selected food animals in an effort to reduce epidemics and epizootics. Together, these changes could help secure the long-term efficacy of antibiotics and anthelmintics for curing diseases of humans and non-human animals.

Another challenge that seems surmountable is enhancing education and health literacy. Not surprisingly, education has been documented as a major contributing factor to reducing infectious diseases, especially NTDs. In turn, reducing NTDs can have reinforcing positive effects on the ability of humans to fight more deadly diseases, such as AIDS, malaria and tuberculosis49. Limiting NTDs could also have knock-on benefits for education and health literacy, because NTDs impede cognition, learning and school attendance49. Indeed, an investment of just US$3.50 per child for NTD control can yield the equivalent of an extra school year of education49. This is likely an underestimate because it does not account for indirect effects of deworming on learning. A recent study revealed large cognitive gains among children who were not dewormed themselves but had older siblings who were130. Thus, enhanced education and NTD control have the potential to synergistically fuel agricultural and economic development and facilitate escape from the poverty–disease trap.

National and international shifts in investments could also pay large dividends for nutrition, infectious disease control and poverty reduction. There is considerable evidence that the poverty trap of infectious disease will make it difficult for the developing world to feed its growing human population49. However, evidence also suggests that this trap might be broken through investments in health infrastructure and preventive chemotherapy47,48,49. Curing worm infections has the added potential benefit of reducing the nutritional needs of cured individuals, who no longer have to feed their parasites. By reducing food demand, these drugs could also protect the environment by slowing the conversion of natural areas to agriculture. This, in turn, might help to curb climate change, which is one of the greatest threats to global food security11 and might also increase disease risk131,132. Understanding the economic relationships among infectious disease treatment and prevention, food production, poverty and climate change in the developing world is an important area for future research.

As human populations increase, there might be more pathogen spillover events that could result in new emerging human infectious diseases. Historically, humans have combated emerging diseases through early detection followed by coordinated quarantine, as demonstrated by the SARS outbreak in 2003 and the Ebola outbreak in 2014. We recommend continued and improved coordinated international disease surveillance, but this approach reacts to rather than prevents disease. We recommend a shift in both research and disease management efforts towards proactive management approaches. One proactive approach that has promise for preventing zoonotic disease emergence is biodiversity conservation98,101, but more research is needed to evaluate its effectiveness across various types of zoonotic diseases and its costs and benefits relative to more proven reactive public health interventions. Ultimately, science needs a better understanding of pathogen spillover, the origins of disease emergence and post-spillover evolution so that human disease emergence can be better predicted and prevented112,113,133.

Improved and diversified plant and animal genetic material could also help to simultaneously reduce hunger and disease. Many efforts are already well underway. Examples include introducing C4 photosynthetic traits into rice to increase photosynthetic capacity in a warming world, and breeding drought- and flood-tolerant cereals, pulses and vegetables. These tolerant crops are better suited to more frequent extreme weather events and so can help sustainably enhance productivity. Crops and livestock genetically adapted to resist more pests could reduce pesticide and antibiotic use. In addition, given that micronutrient deficiencies are now the most widespread source of undernutrition globally, commodity research must diversify beyond merely boosting the productivity of staple cereals. Far greater attention is needed on approaches that increase the mineral and vitamin content of foods39.

Other, perhaps more challenging, interventions also merit attention. Several measures could together potentially double global food supplies6,114,134,135:

- closing ‘yield gaps’ on underperforming lands;
- increasing cropping efficiency;
- reducing meat consumption and excess per capita food consumption, both of which also contribute to non-infectious diseases such as high blood pressure, diabetes and heart disease;
- reducing food waste and loss.

At the same time, these measures could allow financial savings to be redirected towards health infrastructure to control disease. In addition, family planning, promoting female education and job market prospects, and enhancing early childhood survival have proved very effective at reducing human population growth136. Finally, producing food in urban and suburban environments through vertical farming (the practice of growing produce in vertically stacked layers) could also enhance food production locally. It might also reduce agrochemical and transportation costs and non-target effects relative to more traditional farming135.

Investments in predictive models could also pay dividends. Agricultural environments are complicated, multi-species systems that exhibit interactions at many levels. The tensions that arise in balancing expanding agricultural systems, social benefits and infectious disease risk add further complexity. This complexity can overwhelm many analytical tools and experiments, which makes advances in mathematical modelling especially crucial to addressing these issues. Box 1 describes several examples of advances in mathematical modelling that illustrate their promise in four areas:

- analysing the links between agricultural practices and the dynamics of infectious diseases;
- projecting risks to future decades;
- influencing risks through either intentional (policies and interventions) or unintentional (continued habitat conversion and antibiotic use) actions;
- incorporating the economic and social costs of various public health or agricultural interventions.

These models should help direct field experiments, which are desperately needed to parameterize and validate these models and to quantify key sources of uncertainty.
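As a minimal illustration of scenario projection with such models, the sketch below links a hypothetical agricultural driver to transmission in a simple closed SIR (susceptible–infected–recovered) model. It then uses the classic final-size relation z = 1 − exp(−R0·z) to report the fraction ever infected. The linear link between irrigated land share and transmission, the parameter values and the function names are all assumptions for illustration. Real vector-borne systems such as malaria or schistosomiasis would need vector or intermediate-host compartments and endemic rather than single-epidemic dynamics.

```python
import math

def basic_reproduction_number(beta0, gamma, irrigated_share, sensitivity):
    """R0 of a simple SIR model whose transmission rate grows with irrigated land.

    Hypothetical link: beta = beta0 * (1 + sensitivity * irrigated_share).
    """
    beta = beta0 * (1.0 + sensitivity * irrigated_share)
    return beta / gamma

def final_size(r0, tol=1e-12, max_iter=10_000):
    """Fraction of a closed population ever infected: root of z = 1 - exp(-r0 * z)."""
    if r0 <= 1.0:
        return 0.0  # no major outbreak below the epidemic threshold
    z = 1.0  # iterating from 1 converges to the positive root when r0 > 1
    for _ in range(max_iter):
        z_next = 1.0 - math.exp(-r0 * z)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z

# Scenario sweep over a hypothetical share of land under irrigation.
for share in (0.0, 0.2, 0.4):
    r0 = basic_reproduction_number(beta0=0.15, gamma=0.1, irrigated_share=share, sensitivity=2.0)
    print(f"irrigated share {share:.1f}: R0 = {r0:.2f}, final size = {final_size(r0):.3f}")
```

Because R0 enters the final-size relation nonlinearly, a modest increase in transmission near the epidemic threshold can produce a large change in the fraction infected. Field-estimated parameters would be needed before any such sweep could inform policy.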

Ultimately, we believe ‘win–win’ scenarios for controlling infectious diseases, increasing agricultural productivity and improving nutrition are attainable. This sort of integrative thinking has historically been rare, because agriculture and public health have been perceived as disparate disciplines. However, it is slowly beginning to penetrate these distinct fields of study. For example, several agrochemicals seem to increase the risk of human schistosomiasis and agriculturally derived zoonotic pathogens. Researchers are therefore actively attempting to identify agrochemicals that might ‘kill two birds with one stone’: reducing crop pests, and thus increasing food production, without increasing (or even while decreasing) human pathogens137,138,139. Similarly, researchers are beginning to consider the introduction of prawns as biological control agents of schistosomiasis. This could simultaneously decrease disease and increase nutrition, because restored prawns can be fished, harvested and consumed without compromising their disease-controlling benefits61,140. We believe that more resourceful and creative thinking holds great promise for sustainably and simultaneously improving human nutrition while controlling infectious diseases. The same is true of interdisciplinary interactions among medical, public health and agricultural professionals, economists, anthropologists, sociologists, modellers, empiricists and wildlife biologists, in both the developed and developing world.

In conclusion, we hope that by synthesizing the complex intersection of food production and human health, we have highlighted the value of increasing agricultural- and disease-related research, management and policy, maximizing the benefits of agricultural development while minimizing its adverse effects on human health and the environment, and preparing for the imminent changes driven by the 11 billion people expected to inhabit Earth by 2100.

References

Jones, K. E. et al. Global trends in emerging infectious diseases. Nature 451, 990–993 (2008).

Roberts, L. Nigeria’s invisible crisis. Science 356, 18–23 (2017).

Sanchez, P. A. & Swaminathan, M. Hunger in Africa: the link between unhealthy people and unhealthy soils. Lancet 365, 442–444 (2005).

Black, R. E. et al. Maternal and child undernutrition: global and regional exposures and health consequences. Lancet 371, 243–260 (2008).

Naylor, R. The Evolving Sphere of Food Security (Oxford Univ. Press, 2014).

Foley, J. A. et al. Solutions for a cultivated planet. Nature 478, 337–342 (2011).

Godfray, H. C. J. et al. Food security: the challenge of feeding 9 billion people. Science 327, 812–818 (2010).

Kearney, J. Food consumption trends and drivers. Phil. Trans. R. Soc. Lond. B 365, 2793–2807 (2010).

Matson, P. A., Parton, W. J., Power, A. G. & Swift, M. J. Agricultural intensification and ecosystem properties. Science 277, 504–509 (1997).

Tilman, D., Cassman, K. G., Matson, P. A., Naylor, R. & Polasky, S. Agricultural sustainability and intensive production practices. Nature 418, 671–677 (2002).

Springmann, M. et al. Global and regional health effects of future food production under climate change: a modelling study. Lancet 387, 1937–1946 (2016).

Myers, S. S., Wessells, K. R., Kloog, I., Zanobetti, A. & Schwartz, J. Effect of increased concentrations of atmospheric carbon dioxide on the global threat of zinc deficiency: a modelling study. Lancet Glob. Health 3, e639–e645 (2015).

Myers, S. S., Wessells, K. R., Kloog, I., Zanobetti, A. & Schwartz, J. Rising atmospheric CO2 increases global threat of zinc deficiency. Lancet Glob. Health 3, e639–e645 (2015).

Golden, C. et al. Fall in fish catch threatens human health. Nature 534, 317–320 (2016).

de Benoist, B., McLean, E., Egli, I. & Cogswell, M. Worldwide Prevalence of Anaemia 1993–2005: WHO Global Database on Anaemia (WHO, 2008).

The State of Food Security and Nutrition in the World 2017: Building Resilience for Peace and Food Security (FAO, 2017).

Global Prevalence of Vitamin A Deficiency in Populations at Risk 1995–2005: WHO Global Database on Vitamin A Deficiency (WHO, 2009).

World Population Prospects: 2017 Revision (United Nations, 2017); http://esa.un.org/unpd/wpp/

Godfray, H. C. J. & Garnett, T. Food security and sustainable intensification. Phil. Trans. R. Soc. Lond. B 369, 20120273 (2014).

Alexandratos, N. & Bruinsma, J. World Agriculture Towards 2030/2050: 2012 Revision ESA Working Paper (FAO, 2012).

How to Feed the World: Global Agriculture Towards 2050 (FAO, 2009); www.fao.org/fileadmin/templates/wsfs/docs/Issues_papers/HLEF2050_Global_Agriculture.pdf

Tilman, D. et al. Forecasting agriculturally driven global environmental change. Science 292, 281–284 (2001).

Gibbs, H. K. et al. Tropical forests were the primary sources of new agricultural land in the 1980s and 1990s. Proc. Natl Acad. Sci. USA 107, 16732–16737 (2010).

Lozano, R. et al. Global and regional mortality from 235 causes of death for 20 age groups in 1990 and 2010: a systematic analysis for the Global Burden of Disease Study 2010. Lancet 380, 2095–2128 (2012).

Lustigman, S. et al. A research agenda for helminth diseases of humans: the problem of helminthiases. PLoS Negl. Trop. Dis. 6, e1582 (2012).

Pingali, P. L. & Roger, P. A. Impact of Pesticides on Farmer Health and the Rice Environment Vol. 7 (Springer Science & Business Media, 2012).

Sheahan, M., Barrett, C. B. & Goldvale, C. Human health and pesticide use in sub‐Saharan Africa. Agric. Econ. 48, 27–41 (2017).

Dobson, A. & Foufopoulos, J. Emerging infectious pathogens of wildlife. Phil. Trans. R. Soc. Lond. B 356, 1001–1012 (2001).

Taylor, L. H., Latham, S. M. & Woolhouse, M. E. J. Risk factors for human disease emergence. Phil. Trans. R. Soc. Lond. B 356, 983–989 (2001).

Evenson, R. E. & Gollin, D. Assessing the impact of the Green Revolution, 1960 to 2000. Science 300, 758–762 (2003).

Tilman, D. Global environmental impacts of agricultural expansion: the need for sustainable and efficient practices. Proc. Natl Acad. Sci. USA 96, 5995–6000 (1999).

Civitello, D. J., Allman, B. E., Morozumi, C. & Rohr, J. R. Assessing the direct and indirect effects of food provisioning and nutrient enrichment on wildlife infectious disease dynamics. Phil. Trans. R. Soc. Lond. B 373, 20170101 (2018).

Lochmiller, R. L. & Deerenberg, C. Trade-offs in evolutionary immunology: just what is the cost of immunity? Oikos 88, 87–98 (2000).

Knutie, S. A., Wilkinson, C. L., Wu, Q. C., Ortega, C. N. & Rohr, J. R. Host resistance and tolerance of parasitic gut worms depend on resource availability. Oecologia 183, 1031–1040 (2017).

Young, S. L., Sherman, P. W., Lucks, J. B. & Pelto, G. H. Why on earth? Evaluating hypotheses about the physiological functions of human geophagy. Q. Rev. Biol. 86, 97–120 (2011).

Rohr, J. R., Raffel, T. R. & Hall, C. A. Developmental variation in resistance and tolerance in a multi-host-parasite system. Funct. Ecol. 24, 1110–1121 (2010).

Webster, D. Malaria kills one child every 30 seconds. J. Public Health Policy 22, 23–33 (2001).

Odone, A., Houben, R. M., White, R. G. & Lönnroth, K. The effect of diabetes and undernutrition trends on reaching 2035 global tuberculosis targets. Lancet Diabetes Endocrinol. 2, 754–764 (2014).

Gómez, M. I. et al. Post-green revolution food systems and the triple burden of malnutrition. Food Policy 42, 129–138 (2013).

Akpom, C. A. Schistosomiasis: nutritional implications. Rev. Infect. Dis. 4, 776–782 (1982).

Sears, B. F., Rohr, J. R., Allen, J. E. & Martin, L. B. The economy of inflammation: when is less more? Trends Parasitol. 27, 382–387 (2011).

Sazawal, S. et al. Effects of routine prophylactic supplementation with iron and folic acid on admission to hospital and mortality in preschool children in a high malaria transmission setting: community-based, randomised, placebo-controlled trial. Lancet 367, 133–143 (2006).

Pinstrup-Andersen, P. & Shimokawa, S. in Rethinking Infrastructure for Development (eds Bourguignon, F. & Pleskovic, B.) 175–204 (World Bank, 2008).

Christiaensen, L., Demery, L. & Kuhl, J. The (evolving) role of agriculture in poverty reduction — an empirical perspective. J. Dev. Econ. 96, 239–254 (2011).

Déglise, C., Suggs, L. S. & Odermatt, P. SMS for disease control in developing countries: a systematic review of mobile health applications. J. Telemed. Telecare 18, 273–281 (2012).

Fay, M., Leipziger, D., Wodon, Q. & Yepes, T. Achieving child-health-related Millennium Development Goals: the role of infrastructure. World Dev. 33, 1267–1284 (2005).

Bonds, M. H., Keenan, D. C., Rohani, P. & Sachs, J. D. Poverty trap formed by the ecology of infectious diseases. Proc. R. Soc. Lond. B 277, 1185–1192 (2010).

Ngonghala, C. N. et al. General ecological models for human subsistence, health and poverty. Nat. Ecol. Evol. 1, 1153–1159 (2017).

Hotez, P. J., Fenwick, A., Savioli, L. & Molyneux, D. H. Rescuing the bottom billion through control of neglected tropical diseases. Lancet 373, 1570–1575 (2009).

Carter, M. R. & Barrett, C. B. The economics of poverty traps and persistent poverty: an asset-based approach. J. Dev. Stud. 42, 178–199 (2006).

Barrett, C. B., Carter, M. R. & Chavas, J. The Economics of Poverty Traps (Univ. Chicago Press, 2019).

Sokolow, S. H. et al. Nearly 400 million people are at higher risk of schistosomiasis because dams block the migration of snail-eating river prawns. Phil. Trans. R. Soc. Lond. B 372, 20160127 (2017).

Van Asselen, S., Verburg, P. H., Vermaat, J. E. & Janse, J. H. Drivers of wetland conversion: a global meta-analysis. PLoS ONE 8, e81292 (2013).

Tanaka, H. & Tsuji, M. From discovery to eradication of schistosomiasis in Japan: 1847–1996. Int. J. Parasitol. 27, 1465–1480 (1997).

Stapleton, D. H. Lessons of history? Anti-malaria strategies of the International Health Board and the Rockefeller Foundation from the 1920s to the era of DDT. Public Health Rep. 119, 206–215 (2004).

Amerasinghe, F. & Indrajith, N. Postirrigation breeding patterns of surface water mosquitoes in the Mahaweli Project, Sri Lanka, and comparisons with preceding developmental phases. J. Med. Entomol. 31, 516–523 (1994).

Harb, M. et al. The resurgence of lymphatic filariasis in the Nile delta. Bull. World Health Organ. 71, 49–54 (1993).

Tyagi, B. & Chaudhary, R. Outbreak of falciparum malaria in the Thar Desert (India), with particular emphasis on physiographic changes brought about by extensive canalization and their impact on vector density and dissemination. J. Arid Environ. 36, 541–555 (1997).

Ghebreyesus, T. A. et al. Incidence of malaria among children living near dams in northern Ethiopia: community based incidence survey. BMJ 319, 663–666 (1999).

Steinmann, P., Keiser, J., Bos, R., Tanner, M. & Utzinger, J. Schistosomiasis and water resources development: systematic review, meta-analysis, and estimates of people at risk. Lancet Infect. Dis. 6, 411–425 (2006).

Sokolow, S. H. et al. Nearly 400 million people are at higher risk of schistosomiasis because dams block the migration of snail-eating river prawns. Phil. Trans. R. Soc. Lond. B 372, 20160127 (2017).

Jobin, W. Dams and Disease: Ecological Design and Health Impacts of Large Dams, Canals and Irrigation Systems (CRC Press, 1999).

D’Odorico, P., Carr, J. A., Laio, F., Ridolfi, L. & Vandoni, S. Feeding humanity through global food trade. Earths Future 2, 458–469 (2014).

Eisenberg, J. N. et al. Environmental change and infectious disease: how new roads affect the transmission of diarrheal pathogens in rural Ecuador. Proc. Natl Acad. Sci. USA 103, 19460–19465 (2006).

Estimates of Foodborne Illness in the United States (CDC, 2011); https://www.cdc.gov/foodborneburden/index.html

Patz, J. A. et al. Unhealthy landscapes: policy recommendations on land use change and infectious disease emergence. Environ. Health Perspect. 112, 1092–1098 (2004).

Hosseini, P., Sokolow, S. H., Vandegrift, K. J., Kilpatrick, A. M. & Daszak, P. Predictive power of air travel and socio-economic data for early pandemic spread. PLoS ONE 5, e12763 (2010).

Vandegrift, K. J., Sokolow, S. H., Daszak, P. & Kilpatrick, A. M. in Year in Ecology and Conservation Biology 2010 (eds Ostfeld, R. S. & Schlesinger, W. H.) 113–128 (Wiley-Blackwell, 2010).

Swinnen, J. F. Global Supply Chains, Standards and The Poor: How the Globalization of Food Systems and Standards Affects Rural Development and Poverty (CABI, 2007).

Reardon, T., Barrett, C. B., Berdegué, J. A. & Swinnen, J. F. Agrifood industry transformation and small farmers in developing countries. World Dev. 37, 1717–1727 (2009).

Foot and mouth disease. DEFRA http://footandmouth.fera.defra.gov.uk/index.cfm (2004).

Cabello, F. C. Heavy use of prophylactic antibiotics in aquaculture: a growing problem for human and animal health and for the environment. Environ. Microbiol. 8, 1137–1144 (2006).

Naylor, R. & Burke, M. Aquaculture and ocean resources: raising tigers of the sea. Annu. Rev. Environ. Resour. 30, 185–218 (2005).

Gorbach, S. L. Antimicrobial use in animal feed — time to stop. N. Engl. J. Med. 345, 1202–1203 (2001).

Van Boeckel, T. P. et al. Reducing antimicrobial use in food animals. Science 357, 1350–1352 (2017).

Silbergeld, E. K., Graham, J. & Price, L. B. Industrial food animal production, antimicrobial resistance, and human health. Annu. Rev. Public Health 29, 151–169 (2008).

Geerts, S. & Gryseels, B. Drug resistance in human helminths: current situation and lessons from livestock. Clin. Microbiol. Rev. 13, 207–222 (2000).

Hemingway, J., Hawkes, N. J., McCarroll, L. & Ranson, H. The molecular basis of insecticide resistance in mosquitoes. Insect Biochem. Mol. Biol. 34, 653–665 (2004).

Rohr, J. R., Raffel, T. R., Sessions, S. K. & Hudson, P. J. Understanding the net effects of pesticides on amphibian trematode infections. Ecol. Appl. 18, 1743–1753 (2008).

Rohr, J. R. et al. Agrochemicals increase trematode infections in a declining amphibian species. Nature 455, 1235–1239 (2008).

Rohr, J. R. et al. Predator diversity, intraguild predation, and indirect effects drive parasite transmission. Proc. Natl Acad. Sci. USA 112, 3008–3013 (2015).

Voccia, I., Blakley, B., Brousseau, P. & Fournier, M. Immunotoxicity of pesticides: a review. Toxicol. Ind. Health 15, 119–132 (1999).

Banerjee, B. D. The influence of various factors on immune toxicity assessment of pesticide chemicals. Toxicol. Lett. 107, 21–31 (1999).

Martin, L. B., Hopkins, W. A., Mydlarz, L. D. & Rohr, J. R. in Year in Ecology and Conservation Biology 2010 (eds Ostfeld, R. S. & Schlesinger, W. H.) 129–148 (Wiley-Blackwell, 2010).

Rohr, J. R. & McCoy, K. A. A qualitative meta-analysis reveals consistent effects of atrazine on freshwater fish and amphibians. Environ. Health Perspect. 118, 20–32 (2010).

Rohr, J. R. et al. Early-life exposure to a herbicide has enduring effects on pathogen-induced mortality. Proc. R. Soc. Lond. B 280, 20131502 (2013).

Rohr, J. R., Kerby, J. L. & Sih, A. Community ecology as a framework for predicting contaminant effects. Trends Ecol. Evol. 21, 606–613 (2006).

Clements, W. H. & Rohr, J. R. Community responses to contaminants: using basic ecological principles to predict ecotoxicological effects. Environ. Toxicol. Chem. 28, 1789–1800 (2009).

Lafferty, K. D. & Holt, R. D. How should environmental stress affect the population dynamics of disease? Ecol. Lett. 6, 654–664 (2003).

Jayawardena, U. A., Rohr, J. R., Navaratne, A. N., Amerasinghe, P. H. & Rajakaruna, R. S. Combined effects of pesticides and trematode infections on hourglass tree frog Polypedates cruciger. EcoHealth 13, 111–122 (2016).

Walker, S. P. et al. Child development: risk factors for adverse outcomes in developing countries. Lancet 369, 145–157 (2007).

Johnson, P. T. J. et al. Linking environmental nutrient enrichment and disease emergence in humans and wildlife. Ecol. Appl. 20, 16–29 (2010).

McKenzie, V. J. & Townsend, A. R. Parasitic and infectious disease responses to changing global nutrient cycles. EcoHealth 4, 384–396 (2007).

Green, R. E., Cornell, S. J., Scharlemann, J. P. & Balmford, A. Farming and the fate of wild nature. Science 307, 550–555 (2005).

Crist, E., Mora, C. & Engelman, R. The interaction of human population, food production, and biodiversity protection. Science 356, 260–264 (2017).

Despommier, D., Ellis, B. R. & Wilcox, B. A. The role of ecotones in emerging infectious diseases. EcoHealth 3, 281–289 (2006).

Borremans, B., Faust, C. L., Manlove, K., Sokolow, S. H. & Lloyd-Smith, J. Cross-species pathogen spillover across ecosystem boundaries: mechanisms and theory. Phil. Trans. R. Soc. Lond. B https://doi.org/10.1098/rstb.2018.0344 (in the press).

Jones, B. A. et al. Zoonosis emergence linked to agricultural intensification and environmental change. Proc. Natl Acad. Sci. USA 110, 8399–8404 (2013).

Cohen, J. M. et al. Spatial scale modulates the strength of ecological processes driving disease distributions. Proc. Natl Acad. Sci. USA 113, E3359–E3364 (2016).

Dobson, A. et al. Sacred cows and sympathetic squirrels: the importance of biological diversity to human health. PLoS Med. 3, 714–718 (2006).

Civitello, D. J. et al. Biodiversity inhibits parasites: broad evidence for the dilution effect. Proc. Natl Acad. Sci. USA 112, 8667–8671 (2015).

Myers, S. S. et al. Human health impacts of ecosystem alteration. Proc. Natl Acad. Sci. USA 110, 18753–18760 (2013).

Pienkowski, T., Dickens, B. L., Sun, H. & Carrasco, L. R. Empirical evidence of the public health benefits of tropical forest conservation in Cambodia: a generalised linear mixed-effects model analysis. Lancet Planet. Health 1, e180–e187 (2017).

Berazneva, J. & Byker, T. S. Does forest loss increase human disease? Evidence from Nigeria. Am. Econ. Rev. 107, 516–521 (2017).

Herrera, D. et al. Upstream watershed condition predicts rural children's health across 35 developing countries. Nat. Commun. 8, 811 (2017).

Barrett, C. B., Travis, A. J. & Dasgupta, P. On biodiversity conservation and poverty traps. Proc. Natl Acad. Sci. USA 108, 13907–13912 (2011).

Barrett, C. B. & Bevis, L. E. The self-reinforcing feedback between low soil fertility and chronic poverty. Nat. Geosci. 8, 907–912 (2015).

Achard, F. et al. Determination of tropical deforestation rates and related carbon losses from 1990 to 2010. Glob. Change Biol. 20, 2540–2554 (2014).

Despommier, D., Griffin, D., Gwadz, R. G., Hotez, P. & Knirsch, C. Parasitic Diseases 6th edn (Parasites Without Borders, 2018).

Parker, I. M. et al. Phylogenetic structure and host abundance drive disease pressure in communities. Nature 520, 542–544 (2015).

Lenski, R. E. & May, R. M. The evolution of virulence in parasites and pathogens: reconciliation between two competing hypotheses. J. Theor. Biol. 169, 253–265 (1994).

Lloyd-Smith, J. O. et al. Epidemic dynamics at the human–animal interface. Science 326, 1362–1367 (2009).

Plowright, R. K. et al. Pathways to zoonotic spillover. Nat. Rev. Microbiol. 15, 502–510 (2017).

Tilman, D. & Clark, M. Global diets link environmental sustainability and human health. Nature 515, 518–522 (2014).

Wiethoelter, A. K., Beltrán-Alcrudo, D., Kock, R. & Mor, S. M. Global trends in infectious diseases at the wildlife–livestock interface. Proc. Natl Acad. Sci. USA 112, 9662–9667 (2015).

Wolfe, N. D., Dunavan, C. P. & Diamond, J. Origins of major human infectious diseases. Nature 447, 279–283 (2007).

Grace, D. et al. Zoonoses — From Panic to Planning IDS Rapid Response Briefing (Institute of Development Studies, 2013).

Landers, T. F., Cohen, B., Wittum, T. E. & Larson, E. L. A review of antibiotic use in food animals: perspective, policy, and potential. Public Health Rep. 127, 4–22 (2012).

Worobey, M., Han, G.-Z. & Rambaut, A. A synchronized global sweep of the internal genes of modern avian influenza virus. Nature 508, 254–257 (2014).

Pulliam, J. R. et al. Agricultural intensification, priming for persistence and the emergence of Nipah virus: a lethal bat-borne zoonosis. J. R. Soc. Interface 9, 89–101 (2011).

Alexander, D. J. A review of avian influenza in different bird species. Vet. Microbiol. 74, 3–13 (2000).

Huyse, T. et al. Bidirectional introgressive hybridization between a cattle and human schistosome species. PLoS Pathog. 5, e1000571 (2009).

Brashares, J. S., Golden, C. D., Weinbaum, K. Z., Barrett, C. B. & Okello, G. V. Economic and geographic drivers of wildlife consumption in rural Africa. Proc. Natl Acad. Sci. USA 108, 13931–13936 (2011).

Keesing, F. & Young, T. P. Cascading consequences of the loss of large mammals in an African savanna. Bioscience 64, 487–495 (2014).

Allan, J. D. et al. Overfishing of inland waters. Bioscience 55, 1041–1051 (2005).

Alsan, M. The effect of the tsetse fly on African development. Am. Econ. Rev. 105, 382–410 (2015).

Yamano, T. & Jayne, T. S. Measuring the impacts of working-age adult mortality on small-scale farm households in Kenya. World Dev. 32, 91–119 (2004).

Lambin, E. F. & Meyfroidt, P. Global land use change, economic globalization, and the looming land scarcity. Proc. Natl Acad. Sci. USA 108, 3465–3472 (2011).

Thornton, P. K. Livestock production: recent trends, future prospects. Philos. Trans. R. Soc. Lond. B 365, 2853–2867 (2010).

Ozier, O. Exploiting externalities to estimate the long-term effects of early childhood deworming. Am. Econ. J. Appl. Econ. 10, 235–262 (2018).

Raffel, T. R. et al. Disease and thermal acclimation in a more variable and unpredictable climate. Nat. Clim. Change 3, 146–151 (2013).

Rohr, J. R. et al. Frontiers in climate change-disease research. Trends Ecol. Evol. 26, 270–277 (2011).

Olival, K. J. et al. Host and viral traits predict zoonotic spillover from mammals. Nature 546, 646–650 (2017).

Tilman, D., Balzer, C., Hill, J. & Befort, B. L. Global food demand and the sustainable intensification of agriculture. Proc. Natl Acad. Sci. USA 108, 20260–20264 (2011).

Despommier, D. The Vertical Farm: Feeding the World in the 21st Century (Macmillan, 2010).

Barro, R. J. Democracy and growth. J. Econ. Growth 1, 1–27 (1996).

Staley, Z. R., Rohr, J. R., Senkbeil, J. K. & Harwood, V. J. Agrochemicals indirectly increase survival of E. coli O157:H7 and indicator bacteria by reducing ecosystem services. Ecol. Appl. 24, 1945–1953 (2014).

Staley, Z. R., Senkbeil, J. K., Rohr, J. R. & Harwood, V. J. Lack of direct effects of agrochemicals on zoonotic pathogens and fecal indicator bacteria. Appl. Environ. Microbiol. 78, 8146–8150 (2012).

Halstead, N. T. et al. Agrochemicals increase risk of human schistosomiasis by supporting higher densities of intermediate hosts. Nat. Commun. 9, 837 (2018).

Hoover, C. M. et al. Modelled effects of prawn aquaculture on poverty alleviation and schistosomiasis control. Nat. Sustain. https://doi.org/10.1038/s41893-019-0301-7 (2019).

Beltran, S., Cézilly, F. & Boissier, J. Genetic dissimilarity between mates, but not male heterozygosity, influences divorce in schistosomes. PLoS ONE 3, e3328 (2008).

Garchitorena, A. et al. Disease ecology, health and the environment: a framework to account for ecological and socio-economic drivers in the control of neglected tropical diseases. Philos. Trans. R. Soc. Lond. B 372, 20160128 (2017).

Baeza, A., Santos-Vega, M., Dobson, A. P. & Pascual, M. The rise and fall of malaria under land-use change in frontier regions. Nat. Ecol. Evol. 1, 0108 (2017).

Acknowledgements

Funds were provided by grants to J.R.R. from the National Science Foundation (EF-1241889, DEB-1518681, IOS-1754868), the National Institutes of Health (R01GM109499, R01TW010286-01), the US Department of Agriculture (2009-35102-0543) and the US Environmental Protection Agency (CAREER 83518801). G.A.D. and S.H.S. were supported by the National Science Foundation (CNH grant no. 1414102), the NIH (R01TW010286-01), the Bill and Melinda Gates Foundation, Stanford GDP SEED (grant no. 1183573-100-GDPAO) and the SNAP-NCEAS-supported working group ‘Ecological levers for health: advancing a priority agenda for Disease Ecology and Planetary Health in the 21st century’. J.V.R. was supported by the National Science Foundation Water, Sustainability, and Climate program (awards 1360330 and 1646708), the National Institutes of Health (R01AI125842 and R01TW010286) and the University of California Multicampus Research Programs and Initiatives (award no. 17-446315). M.E.C. was funded by the National Science Foundation (DEB-1413925 and 1654609) and UMN’s CVM Research Office Ag Experiment Station General Ag Research Funds. We thank K. Marx for contributing the artwork in Fig. 1.

Author information

Contributions

J.R.R. developed the idea and wrote most of the paper. Figure 1 was developed by S.H.S. and G.A.D.; Figs. 2 and 4 by J.R.R.; Fig. 3 by K.H.N. and B.D.; Fig. 5 by D.J.C., M.E.C. and R.S.O.; and Box 1 by D.J.C., M.E.C. and J.V.R. All authors contributed substantially to ideas, revisions and edits.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Methods and Supplementary Table 1.

About this article

Cite this article

Rohr, J.R., Barrett, C.B., Civitello, D.J. et al. Emerging human infectious diseases and the links to global food production. Nat Sustain 2, 445–456 (2019). https://doi.org/10.1038/s41893-019-0293-3

DOI: https://doi.org/10.1038/s41893-019-0293-3
