Abstract

Sample size estimation is a crucial step in experimental design but is understudied in the context of deep learning. Currently, estimating the quantity of labeled data needed to train a classifier to a desired performance, is largely based on prior experience with similar models and problems or on untested heuristics. In many supervised machine learning applications, data labeling can be expensive and time-consuming and would benefit from a more rigorous means of estimating labeling requirements. Here, we study the problem of estimating the minimum sample size of labeled training data necessary for training computer vision models as an exemplar for other deep learning problems. We consider the problem of identifying the minimal number of labeled data points to achieve a generalizable representation of the data, a minimum converging sample (MCS). We use autoencoder loss to estimate the MCS for fully connected neural network classifiers. At sample sizes smaller than the MCS estimate, fully connected networks fail to distinguish classes, and at sample sizes above the MCS estimate, generalizability strongly correlates with the loss function of the autoencoder. We provide an easily accessible, code-free, and dataset-agnostic tool to estimate sample sizes for fully connected networks. Taken together, our findings suggest that MCS and convergence estimation are promising methods to guide sample size estimates for data collection and labeling prior to training deep learning models in computer vision.

Similar content being viewed by others

Introduction

Supervised learning with deep neural networks has achieved state of the art performance in a diverse range of applications. An adequate number of labeled samples is essential for training these systems but most real-world data is unlabeled. Label generation can be cumbersome, expensive and is a major barrier to the development and testing of such systems [1].

Ideally, when confronted with a task and unlabeled data, one would like to estimate how many examples need to be labeled to train a neural network for that task. In this paper, we take a step towards addressing this problem.

Consider a fully connected neural network f of pre-specified dimensions and a dataset X, which is initially unlabeled, but for which labels y can be obtained when needed. We define the minimum convergence size (MCS) for f on X to be the smallest number n such that a subset Xn of n examples drawn at random from X can be labeled and used to train f as a non-trivial classifier, that is, one whose area-under-the-curve (AUC) on a held-out test set is greater than 0.5:

Given that outcomes are balanced, an AUC > 0.5 implies that a model is able to identify some signal in the underlying data, and if that AUC is on the test dataset, this means that the signal identified by the model can generalize to unseen data. In this scenario, below the MCS, we would expect to see little or no correlation between sample size and model performance measured by AUC, whereas above the MCS we would expect to see a positive correlation.

We propose a method for empirically determining the MCS for f on X using only unlabeled data, and we call this estimate the Minimum Convergence Sample Estimate (MCSE). We do this by first constructing an autoencoder g [2], wherein the encoder part has a similar number of parameters and hidden layers as f. We train g on increasingly larger (unlabeled) subsets Xi of X. This may permit similarities in layer-wise learning between f and g. Under these circumstances, we empirically show that, at each step i, the reconstruction loss L of g is related to the generalization performance of f trained on a similarly sized sample. We also demonstrate how this can be used to determine the MCSE for f on X (Fig. 1).

a A fully connected network is trained on labeled data, and tested on a unlabeled data. Generalizability Performance is measured via AUC. Minimum convergence sample (MCS) reflects the minimum number of labeled samples required for a fully connected network to start generalizing. b An autoencoder with a similar structure as the fully connected network is trained on unlabeled data and the loss function measures how generalizable the FCN is. Minimum convergence sample estimate (MCSE) approximates the minimum convergence sample (MCS).

As an example, consider classification of the MNIST [3] dataset with a fully connected neural network (Fig. 2). A comparison of the test set AUC curve of f and the loss curve of autoencoder g shows that their inflection points occur at similar sample sizes. We then define the MCSE for f on MNIST as the sample size corresponding to the inflection point in the loss function of g:

f is a fully connected network with input dimension of 784 and output dimension 10, and g an autoencoder with an input dimension of 784 and a latent space of dimension 3. a The loss of the autoencoder displays a curve split into two phases: the quick phase and the slow phase. b The first derivative of the autoencoder loss function displays a decay phase and a growth phase. c The second derivative reveals a sharp inflection point where the slope changes from sharply decreasing to sharply increasing. d The area-under-the-curve metric on the test set displays a biphasic structure: a rapid growth phase and a slow growth phase. e The first derivative of the AUC curve reveals a rapidly increasing phase followed by a decreasing phase. f The second derivative of the AUC curve reveals an inflection point as a mirror image of the autoencoder loss curve.

With sample sizes above MCSE, the learnability of the dataset on f may be approximated by the ease with which g is able to embed a latent space that fully represents the data. We hypothesized the following relationship between generalization power of a classifier with respect to learnability of the dataset by the corresponding autoencoder:

β is a scaling constant. We tested this hypothesis by calculating the correlation coefficient below the MCSE and above the MCSE, and results are reported in Table 1. A significant R2 indicates a linear correlation between loss and power. We used eight different standard computer vision datasets to demonstrate our method. MNIST, EMNIST [4], QMNIST [5], KMINST [6] are character-recognition datasets composed of 28x28 pixel grayscale images. FMNIST[7] contains 28x28 pixel grayscale images of objects. CIFAR-10 [8] contains 32x32 pixel color images. STL-10 [9] contains 96x96 pixel color images with fewer labeled images than CIFAR-10, and many unlabeled images. FAKE is a dataset of randomly generated images in PyTorch. We also extended our study to synthetic data and a publicly available medical imaging dataset.

Results

Image data results

Our autoencoder-based method estimates the inflection point of the fully connected network’s loss function, and therefore provides an effective measure of the minimum sample size required for the network to learn meaningful representations and become statistically powerful for the FMNIST, EMNIST, QMNIST, CIFAR10, and STL10 datasets (Fig. 3). This technique has the potential to be highly useful for providing a priori estimates of the amount of labeled data that must be gathered at the onset of a project. In medical, biological, military intelligence, and other applications where substantial and costly domain expertise is required for labeling data, our empirical method can provide a valuable alternative to relying on subjective experience—the current state of the art for ML sample size estimation.

The top and middle rows are the results of individual datasets, while the bottom row is the combination of all eight tested datasets. The black striped line represents the autoencoder loss at the point of the inflection. The shaded region represents the error bars, with error determined as the autoencoder loss at ± ln(n), where n is the sample size at which the inflection point of the autoencoder loss occurs. The points are shaded by sample size. For each of these datasets, the autoencoder loss method appears to provide an unbiased estimate of the minimum convergence sample. The top row demonstrates appropriately sampled data while the middle row shows statistical power estimation on oversampled and undersampled data. The bottom row shows that linear interpolation using auto-encoder loss function generally works well in estimating learnability. a Test Area-Under-the-Curve Metric as a function of autoencoder loss. b Test Area-Under-the-Curve Metric as a function of the derivative of the autoencoder loss. c Test Area-Under-the-Curve metric as a function of the double derivative of the autoencoder loss. d Linear interpolation of autoencoder loss with respect to Test Area-Under-the-Curve metric.

The two main variants from our experiment were CIFAR-10, which has a larger input dimension, and STL-10, which has a smaller sample size. Although the CIFAR-10 dataset had a larger input dimension compared to the other datasets, the results were valid and interpretable, which suggests that this autoencoder method can be generalized across input sizes. Furthermore, we held the number of classes constant at ten for ease of interpretation, but the observed results hold for different label sets. Second, STL-10 had far fewer samples than the NIST datasets, suggesting that this method for estimating the minimum learnable sample size may be used on datasets with small sample sizes.

The fully connected network demonstrates a growth in statistical power above the compressibility on the MNIST dataset (Fig. 3). This may be due to the unknown and arbitrary data pre-processing steps conducted on the MNIST dataset [10], which allow a fewer number of samples for classification than compression, biasing the autoencoder’s learnable sample size estimate downward. However, it is reassuring that the fake data leads to autoencoder loss instability, resulting in error bars that exist out of the bounds of the estimate. This estimate suggests that the provided fully connected network would not be able to decipher a distribution from the fake data, which places a bound on the learnability of the dataset with respect to the network.

Through non-parametric correlation tests, we showed that loss and power are uncorrelated before the minimum convergence sample estimate, and that they are correlated above the minimum convergence sample estimate (Table 1). The high R2 values on datasets such as FMNIST and KMNIST validate the linear relationship between classifier power and autoencoder loss in Eq. (3). However, the higher values of Kendall’s τ and Spearman’s ρ, which both measure non-linear correlation, suggest that in some cases there is potentially a third stage of learning beyond compression. This may be related to the regularization of parameter weights or fine tuning of the network to ensure labels are semantically linked to their ground truth representation. Nevertheless, Kendall’s τ and Spearman’s ρ are able to fully capture these non-linear trends, even with extremely small sample sizes, as demonstrated with the STL-10 dataset, or with larger networks, as demonstrated with the CIFAR-10 dataset.

Finally, we demonstrate that this method works specifically for medical imaging datasets, where labeling is especially time-intensive and costly, and more generally on a synthetic datasets (Fig. 4a). We use a medical dataset consisting of X-ray images for pneumonia detection and show that the AE accurately predicts a minimum convergence sample at n = 1024 samples. We anticipate sample size estimation will reduce the dataset burden for medical imaging tasks.

a Minimum convergence sample estimation on a medical imaging dataset for pneumonia detection on Chest X-rays. b Minimum convergence sample estimation on a synthetic dataset (×10 bootstrapped). Boxes represent the 25th and 75th percentiles, and the whiskers and the whiskers represent the minimum and maximum values.

Synthetic data

To generalize our work more broadly to generic clustered representations, we demonstrate that this method works on synthetic data. We randomly sampled data-points from an n-dimensional hyper-cube with side-lengths equal to the class separation to train the fully connected network (FCN) classifier and autoencoder (Fig. 4b). The minimum convergence sample estimate, which is the sample size at which the autoencoder loss (red) first shows a significant improvement, occurs at n = 512 samples. Examination of the FCN classifier AUCs shows that no significant learning occurs until the sample size reaches the minimum convergence sample estimate of n = 512, at which point a significant improvement in AUC is observed.

We run the minimum convergence sample estimator across synthetic data, varying the number of input features, the number of classes, the number of informative features, and the number of hidden units. We find that MCSE invariantly captures the number of samples required for the fully connected network to generalize to an unseen dataset (Table 2).

Because the model works on synthetic data, we are able to provide a code-free, dataset-agnostic tool for researchers to a priori estimate the minimum number of labeled samples they need to train an effective fully connected network classifier. We deploy the application using Flask and PyTorch on a publicly accessible server. We ask for inputs such as priors on the minimum sample size estimate, the number of total features, informative features and classes. The algorithm can be bootstrapped to reduce error, and the size of each hidden layer in a 3-layer fully connected network can be modified as well, to accommodate for dataset complexity. This tool can be easily accessed at samplesizeforml.com. This tool is intended to be used a priori to data collection. Estimating minimum convergence sample on a synthetic dataset can provide a lower bound on the number of samples to collect. After collecting the minimum convergence sample, MCSE can be more precisely estimated and extrapolated via Eq. (3) to obtain an estimate of the number of samples required to achieve a desired performance (Fig. 5).

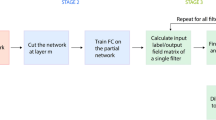

Stage 1 of the pipeline is to use hyper-parameters to estimate a minimum convergence sample. Stage 2 is to collect the number of samples estimated by the MCSE, and use that to determine the sample size required for a desired performance via MCSE and Eq. (3). Stage 3 is to collect the number of samples required, label those samples and train on the FCN to achieve the desired performance.

Limitations on label quality

Minimum convergence sample estimation, by design, does not account for label quality. Label quality is an essential part of experimental design, and past work has shown that label errors can destabilize bench-marking [11]. For minimum convergence sample estimation to provide a reasonable estimate of minimum convergence sample, it relies on labeled data providing semantically meaningful information.

In situations where label quality does not semantically capture meaningful information in the data, the minimum convergence sample estimate may not provide an accurate estimate of minimum convergence sample. Running the method on fake data generates non-interpretable results (Fig. 3d). The converse is also true, if an autoencoder converges while the fully connected network does not, this may serve as a warning on label and sample quality. To further quantitatively evaluate how label quality affects the minimum convergence sample estimate, we evaluate the minimum convergence sample estimate on synthetic data mislabeled a certain percentage of the time ranging from 0.1 to 50% (Table 3). We demonstrate that as label quality decreases, the MCSE becomes a worse estimator of MCS.

While most standard imaging data-sets have reasonable connections between label and data, more complex data-sets may have a weaker link. This is, therefore, a limitation of present work. A joint pipeline with other methods to ensure label quality provides a direction for future work [12, 13].

Discussion

Data collection is a universal step in the development of machine learning algorithms. The question of how much labeled data is needed to train a generalizable classifier is one that every data scientist working in supervised or semi-supervised learning must grapple with. The paradigm of big data answers with ‘as much data as we can get’. For many tasks, however, this convention is highly problematic. A priori sample size determination is a common practice for almost every field to mitigate some of these issues, and here we introduce a variant on sample size determination—the minimum convergence sample for machine learning. We additionally propose and validate a method to estimate a minimum convergence sample for deep learning algorithms with a focus on fully connected networks. This study makes several contributions to the field. The first is a simple method for estimating dataset learnability by a given model f. While prior work has characterized different methodologies by which learnability can be characterized, these works have focused on characterizing the expressivity of models relative to data rather than the learnability of data relative to a model[14, 15]. Du and colleagues [16, 17] have adapted tools from empirical process theory [18] for the estimation of sample complexity of CNNs and RNNs. However, empirical process theory may not extend well to networks which include nonlinear activation methods, like Rectified Linear Units.

In this work, we primarily focus on estimating the number of labeled samples needed to train fully connected networks. Many developments in deep learning have focused on reducing the number of samples. These approaches fall into two categories: (a) adding structural information about the data into the model or (b) having the model assign labels through semi-supervised learning approaches. An improvement in sample efficiency does not void sample determination, but rather increases the potential gap between the minimum convergence sample and number of collected labeled samples.

In the first category, improvements neural network architectures that take advantage of domain-specific and data-specific knowledge can reduce the minimum convergence samples. For example, neural network layers like convolutions takes advantage of spatial relationships of the pixels in an image to better learn representations of an image [19]. Convolutions improve sample efficiency and achieve generalizable performance at smaller samples than fully connected networks. The technique of a priori minimum convergence sample estimation we present should be easily adaptable to architectures like convolutional neural networks.

In the second category, semi-supervised learning methods can learn labels given a large set of unlabeled samples and smaller subset of labeled samples [20]. Semi-supervised learning relies on a few key assumptions, the most relevant of which is the low-density assumption. The low-density assumption in semi-supervised learning is that the decision boundary of a classifier should preferably pass through low-density regions in the input space. When a dataset is not representative of the population due to systemic inequities as seen in healthcare, this may result in the classifier providing biased labels for underrepresented classes. For example, there may be biases in data collection based upon race and ethnicity because of inequitable access to care [21]. A minimum convergence sample estimate may help guide data collection to adequately represent low-density subsets of the data.

Sample size determination and statistical power remain closely related for many important studies, including medical trials and social and psychological studies [22, 23]. Not having a priori sample size estimation has been recognized as a common mistake in the design of clinical trials and occurs more often when statisticians are not involved early in the trial design process [24]. Under-powered studies in neuroscience, and more specifically in Alzheimer’s disease have led to routine failures in replication [25,26,27]. Given the increasing presence of artificial intelligence in medicine and clinical trials [28], sample size determination for neural networks represents an important opportunity to advance the utility of artificial intelligence in these domains by increasing trial efficiency, efficacy, and power. Most grant applications in medicine require an estimate of sample sizes, and now an estimate can be reasonably provided for machine-learning based grant applications via the deployed Flask application. A sound method for conducting sample size estimation for machine learning models can ensure proper experiment design.

Some past work has surveyed the use of sample size determination in machine learning with respect to medical imaging applications [29]. These methods are split into Pre-Hoc and Post-Hoc methods. However, pre-hoc methods were not robust in the high-dimensional setting with large intraclass variability [30]. Other pre-hoc methods such as empirical process theory did not extend well to non-linear methods [16]. Post-hoc methods usually involve fitting a learning curve, but fitting a learning curve is trivial for minimum convergence sample estimation because any amount of data should result in a non-zero increase in performance on a training data-set. Moreover, these methods are task-specific, data-specific, and model-specific, as one learning curve has no relevance outside that specific task, model and data-set. Nevertheless, while our experiments validate minimum convergence sample estimation on toy data-sets, synthetic data, and one real-world example of medical imaging due to data availability, future work should further validate this method on more across different tasks and imaging types in the healthcare context.

Our second contribution is the proposal of a method to empirically estimate MCSE for a given fully connected neural network f. This function allows users to predict statistical power of a model without needing to train on the entire training set during every trial. It also includes an uncertainty on the estimate, in which the variance is inversely correlated to how structured the underlying data is. Our third contribution is a publicly available tool for minimum sample size estimation for fully connected neural networks.

Importantly, there are several natural opportunities to extend our work to more complex models, as discussed below. First, our paper only considered a fully connected network with a relatively simple architecture. One natural question that might extend from this work involves assessing how this method fares in estimating the statistical power of convolutional or recurrent neural networks. While adding convolutions would be relatively easy to do via the addition of another layer, adding attention mechanisms may require additional structural modifications to fully approximate the statistical power of recurrent neural network or transformers. For our method to be applicable to medical imaging tasks, we anticipate that extending this work to convolutional neural networks remains an important next step. Future work can validate MCSE on more complex architectures utilizing pre-trained networks and skip connections. Second, the loss function that was utilized in this current analysis was the reconstruction loss, which is a relatively simple choice of loss function. For variational autoencoders, the loss function changes to instead use a KL-divergence, while GANs use JS-divergence and WGANs use Wasserstein divergence [31,32,33]. Therefore, different autoencoders with various structural representations can also be used to represent a fully connected network with distinct losses and structural features. Future work should examine different reconstruction frameworks to approximate the statistical power of increasingly complex network architectures. Third, we have not explored the utility of a similar approach to aid in architecture search and identification of an optimal set of cases for labeling.

In summary, we present a novel method of estimating the minimum sample size required to train a fully connected neural network for a classification task. The distinguishing feature of our approach is that this estimate can be obtained prior to labeling any data, which can be advantageous in real-world settings where labeling is expensive or time-consuming.

Methods

Main

For the six MNIST variants and FAKE, we let f be a fully connected network with input dimension of 784 and output dimension 10, and we let g be an autoencoder with an input dimension of 784 and a latent space of dimension 3. The latent space dimension of 3 was chosen in the hope of avoiding over- or under-compression of the latent space. The only differences for CIFAR-10 and STL-10 were input dimensions of 1024 and 9216, respectively. We train both f and g on 13 different sample sizes at factors of ×2: 16, 32, 64, 128, 256, 512, 1024, 2048, 4096, 8192, 16,384, 32,768, and 50,000. Training was performed by bootstrapping 50 times on two NVIDIA GeForce GTX 1080 Ti GPUs using PyTorch. We tested all 50 bootstrapped models of f at different sample sizes on the test-size of 10,000 to find the test AUC. This protocol was completed on all eight datasets.

Then, we smoothed both the autoencoder loss function of g and the area-under-the-curve of f using a natural spline. Next, we obtained the second derivative of the spline on the autoencoder (Fig. 3).

After taking the second derivative of the loss function, we located the inflection point and its respective sample size as well as the value of the autoencoder loss at that value. Figure 2 was generated when we plotted the autoencoder loss of g and the AUC of f against the sample size. MCSE was drawn as a vertical line in Fig. 3, coinciding with the inflection point on the autoencoder loss function and provides a lower bound on the sample size required to improve model performance. The shaded area bars represent the error, determined as the autoencoder loss at the log(MCSE ± 1) sample sizes.

Third, we determined the correlation between autoencoder loss and area-under-the-curve using R2, Kendall’s τ and Spearman’s ρ (Table 1). These values demonstrated a significant coorelation above, but not below, the MCSE (inflection point of the autoencoder loss curve). To better demonstrate this finding, we plotted out the results for Eq. (3) for values above MCSE (Fig. 3).

Finally, we generalize these results to an n-dimensional hyper-cube and validate them on a medical imaging dataset. To generate the synthetic data set, we randomly sampled data-points from an n-dimensional hyper-cube with side-lengths equal to the class separation to generate the fully connected network classifier and an autoencoder. For the medical dataset, we use the publicly available Kaggle Chest X-ray dataset [34], and accurately predict the minimum number of labeled samples required to learn a meaningful classifier using a fully connected network.

Analysis of the publicly available NIH CXR dataset was carried out with approval of the Institutional Review Board at Icahn School of Medicine at Mount Sinai, New York, NY 10019. The requirement for informed consent was waived as the dataset was completely de-identified.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The image datasets can be accessed via the torchvision library https://pytorch.org/vision/stable/datasets.html. The synthetic dataset was generated via the sklearn library https://scikit-learn.org/stable/index.html. The de-identified X-ray dataset is publicly available https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia.

References

Sambasivan, N. et al. "everyone wants to do the model work, not the data work”: Data cascades in high-stakes ai. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI ’21 (Association for Computing Machinery, New York, NY, USA, 2021).

Goodfellow, I., Bengio, Y. & Courville, A.Deep Learning, chap. 14 Autoencoders (MIT Press, 2016).

Deng, L. The mnist database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Process. Mag. 29, 141–142 (2012).

Cohen, G., Afshar, S., Tapson, J. & Van Schaik, A. Emnist: Extending mnist to handwritten letters. In 2017 International Joint Conference on Neural Networks (IJCNN), 2921-2926 (IEEE, 2017).

Yadav, C. & Bottou, L. Cold case: The lost mnist digits.

Uday Prabhu, V. Kannada-mnist: A new handwritten digits dataset for the kannada language. Preprint at https://arxiv.org/abs/1908.01242 (2019).

Xiao, H., Rasul, K. & Vollgraf, R. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. Preprint at https://arxiv.org/abs/1708.07747 (2017).

Krizhevsky, A. Learning multiple layers of features from tiny images. Tech. Rep. (2009).

Coates, A., Ng, A. & Lee, H. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, 215-223 (2011).

Yadav, C. & Bottou, L. Cold case: The lost mnist digits. Advances in neural information processing systems 32 (2019).

Northcutt, C. G., Athalye, A. & Mueller, J. Pervasive label errors in test sets destabilize machine learning benchmarks. In Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1) (2021).

Northcutt, C., Jiang, L. & Chuang, I. Confident learning: Estimating uncertainty in dataset labels. J. Artif. Intell. Res. 70, 1373–1411 (2021).

Jain, S. et al. Visualchexbert: addressing the discrepancy between radiology report labels and image labels. In Proceedings of the Conference on Health, Inference, and Learning, 105-115 (2021).

Guss, W. H. & Salakhutdinov, R. On characterizing the capacity of neural networks using algebraic topology. Preprint at https://arxiv.org/abs/1802.04443 (2018).

Goldfarb, D. Understanding deep neural networks using topological data analysis. Preprint at https://arxiv.org/abs/1811.00852 (2018).

Du, S. et al. How many samples are needed to estimate a convolutional or recurrent neural network? stat 1050, 30 (2019).

Du, S. & Lee, J. On the power of over-parametrization in neural networks with quadratic activation. In International conference on machine learning, 1329-1338 (PMLR, 2018).

Van de Geer, S. A.Applications of empirical process theory, vol. 91 (Cambridge University Press Cambridge, 2000).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012).

Van Engelen, J. E. & Hoos, H. H. A survey on semi-supervised learning. Mach. Learn. 109, 373–440 (2020).

Chen, I. Y. et al. Ethical machine learning in healthcare. Ann Rev. Biomed. Data Sci. 4, 123–144 (2021).

Heo, M. & Leon, A. C. Statistical power and sample size requirements for three level hierarchical cluster randomized trials. Biometrics 64, 1256–1262 (2008).

Röhmel, J. Statistical considerations of fda and cpmp rules for the investigation of new anti-bacterial products. Stat. Med. 20, 2561–2571 (2001).

Strasak, A. M., Zaman, Q., Pfeiffer, K. P., Göbel, G. & Ulmer, H. Statistical errors in medical research-a review of common pitfalls. Swiss Med. Wkly. 137, 44–49 (2007).

Button, K. S. et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376 (2013).

Carneiro, C. F., Moulin, T. C., Macleod, M. R. & Amaral, O. B. Effect size and statistical power in the rodent fear conditioning literature–a systematic review. PloS one 13, e0196258 (2018).

Amanatkar, H. R., Papagiannopoulos, B. & Grossberg, G. T. Analysis of recent failures of disease modifying therapies in alzheimer’s disease suggesting a new methodology for future studies. Expert Rev. Neurother. 17, 7–16 (2017).

He, J. et al. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 25, 30–36 (2019).

Balki, I. et al. Sample-size determination methodologies for machine learning in medical imaging research: a systematic review. Can. Assoc. Radiologists J. 70, 344–353 (2019).

Dobbin, K. K. & Simon, R. M. Sample size planning for developing classifiers using high-dimensional dna microarray data. Biostatistics 8, 101–117 (2007).

DOERSCH, C. Tutorial on variational autoencoders. Stat 1050, 13 (2016).

Jolicoeur-Martineau, A. Gans beyond divergence minimization. arXiv preprint arXiv:1809.02145 (2018).

Arjovsky, M., Chintala, S. & Bottou, L. Wasserstein generative adversarial networks. In International conference on machine learning, 214-223 (PMLR, 2017).

Kermany, D. S. et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172, 1122–1131 (2018).

Acknowledgements

This work was supported in part through the computational resources and staff expertise provided by Scientific Computing at the Icahn School of Medicine at Mount Sinai. Research reported in this paper was supported by the Office of Research Infrastructure of the National Institutes of Health under award number S10OD026880 and S10OD030463. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Authors and Affiliations

Contributions

Conception and design: F.F.G., A.S.S., G.N.N. and E.K.O. Funding obtainment: G.N.N. and E.K.O. Provision of study data: F.F.G. Collection and assembly of data: F.F.G. and A.S.S. Data analysis and interpretation: all authors. Manuscript writing: all authors. Final approval of the manuscript: all authors. G.N.N. and E.K.O. are joint senior authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gulamali, F.F., Sawant, A.S., Kovatch, P. et al. Autoencoders for sample size estimation for fully connected neural network classifiers. npj Digit. Med. 5, 180 (2022). https://doi.org/10.1038/s41746-022-00728-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-022-00728-0