Abstract

Randomized controlled trials (RCTs) are regarded as the most reputable source of evidence. In some studies, factors beyond the intervention itself may contribute to the measured effect, a phenomenon known as heterogeneity of treatment effect (HTE). If the RCT population differs from the real-world population on factors that induce HTE, the trial's effect will not replicate. An RCT's eligibility criteria should identify the sub-population in which its evidence will replicate. However, the extent to which eligibility criteria identify the appropriate population is unknown, which raises concerns about generalizability. We compared reported data from RCTs with real-world data from the electronic health records (EHR) of a large academic medical center, curated according to RCT eligibility criteria. Our results show fundamental differences between the RCT populations and our observational cohorts, which suggests that eligibility criteria may be insufficient for identifying the applicable real-world population in which RCT evidence will replicate.


Introduction

Generalizability closes the gap between biomedical research and clinical practice1. When research is translated into the healthcare setting, the application of biomedical evidence to clinical care is known as evidence-based medicine (EBM). Since its inception in the 1990s, EBM has become the standard of practice for many clinicians2,3,4,5. EBM encourages clinicians to seek the most reputable evidence for any patient according to a hierarchy of study quality in which randomized controlled trials (RCTs) are the best single study design5. RCTs are most often used to obtain an unbiased estimate of the effect of an intervention, such as a drug or procedure, on an outcome.

Although EBM may be employed successfully for many different clinical decisions, challenges remain. Underlying EBM's success is the assumption that the effect shown in RCTs will replicate in real-world populations6,7. However, research has shown that factors beyond the intervention itself, such as age, sex, or medical history, may modify the measured effect, a phenomenon known as heterogeneity of treatment effect (HTE)8. If the RCT population differs from the real-world population on factors that induce HTE, RCT results will not be replicated in real-world application. Realistically, clinicians cannot evaluate HTE on a case-by-case basis and must assume that HTE is not a significant factor. However, when applying evidence from RCTs, this assumption is likely unmet: research has shown that HTE is often present9,10. This raises concerns about the reproducibility of studies in the presence of the additional heterogeneity found in real-world populations.

The RCT is well regarded for many reasons, but randomization is the most important. Randomization ensures the highest possible internal validity, which speaks to whether the estimated effect is biased by systematic error11,12. Internal validity does not speak to how well the causal relationship will generalize, only to how unbiased it is for the study population. The patients for whom the effect estimate is internally valid are nominally defined by eligibility criteria. These criteria both stipulate the characteristics that all study patients must share and nominally identify the real-world population for which the effect is internally valid. When operationalized, the eligibility criteria are represented as inclusion and exclusion criteria13,14,15, and with every criterion added to a study population, a different sub-population is identified under increasingly controlled conditions16. If HTE exists, then applying eligibility criteria to a population may identify a sub-population of patients in whom the effect of the intervention is more homogeneous.

RCTs often employ very restrictive eligibility criteria and are often cited as poorly representative of the real world, as many subpopulations may be excluded. This may result in poor external validity. External validity refers to the extent to which the treatment effect estimate applies to those outside of the study, who may have different patient and treatment setting characteristics11. External validity is always a concern, except when HTE is known to be absent.

With poor external validity, replication of the study effect can be challenging17,18,19,20,21. Replication of trial evidence with real-world data, ideally, requires that the right persons, in the right treatment setting, exist in the right proportions. In the context of treating a population that differs significantly from the clinical trial population, it can be unclear how appropriate the evidence is for this new population. Presumably, the eligibility criteria of a study should be sufficient to identify the population in which the effect will replicate, which we call the applicable population.

To address this knowledge gap, we leverage observational data to assess whether RCT populations differ from real-world populations after application of eligibility criteria. If the populations differ, the evidence may not apply because of HTE. If HTE exists in observational populations, it may impede the replication of RCT effect estimates. These methods contribute (i) a means to determine whether the eligibility criteria are adequate for identifying the applicable population; (ii) a framework for evaluating the external validity of studies; and (iii) an illustration of the tensions between the assumptions of EBM practice and the qualities of reputable evidence.

This research may encourage clinicians to reconsider the assumptions made when practicing EBM, and whether these assumptions are valid. Furthermore, the empirical evidence put forth by this study highlights the limitations of the current system of clinical knowledge generation. Through the selection of the experimental population, the current system sacrifices external validity in favor of internal validity. This choice impedes the ability of experimental evidence to translate to the general population, resulting in suboptimal or even harmful clinical care. This problem motivates the use of study populations that are more representative of the real world, a goal that is only fully achieved when the study population and the population targeted for treatment are one and the same. Such an analysis is called real-world evidence (RWE) generation, in which clinical knowledge is learned from the analysis of routinely collected, real-world data22. The results of this research identify the need for RWE in clinical medicine and underscore how RWE may improve the practice of EBM.

Results

Experimental vs. observational populations

The results of this study are presented in Tables 1–4 and Fig. 1.

Fig. 1 panels: a ACCOMPLISH trial; b NCT01189890 trial (sitagliptin vs. glimepiride); c PROVE-IT trial; d RENAAL trial. The shape of the marker corresponds to the data type. Circles (●) denote the standardized difference in the means of continuous data. Pluses (+) denote the difference in percentage points of discrete data.

Sitagliptin vs. glimepiride

The sitagliptin vs. glimepiride trial in elderly patients with Type 2 Diabetes Mellitus is summarized in Table 1. Application of eligibility criteria to the Indication Only cohort identified an Indication + Eligibility Criteria cohort that was more similar to the RCT with regard to BMI, fasting plasma glucose, and mean HbA1c (%), and less similar to the RCT with regard to age, years since diabetes diagnosis, gender, HbA1c > 8%, race/ethnicity, and weight. Indication + Eligibility Criteria patients did not differ significantly from the trial with regard to BMI, weight, and mean HbA1c (%); all other baseline characteristics differed significantly.

These results highlight that both the indicated real-world population and the real-world population that meets the stringent eligibility criteria generally have less advanced diabetes than the patients in the trial. This is exemplified by (i) years since diabetes diagnosis, which is 3.97 in the Indication Only cohort and 3.30 in the Indication + Eligibility Criteria cohort, but 8.69 in the trial (p = 0.007), and (ii) fasting plasma glucose, which is 140.35 in the Indication Only cohort and 141.55 in the Indication + Eligibility Criteria cohort, but 169.04 in the trial (p = 0.007). For these two baseline characteristics, applying the eligibility criteria to the Indication Only cohort identified a subset of patients whose fasting plasma glucose was more similar to the trial but whose years since diabetes diagnosis was less similar.

PROVE-IT

The atorvastatin vs. pravastatin trial in patients with a history of acute coronary syndrome (ACS) (PROVE-IT Trial) is summarized in Table 2. Application of eligibility criteria to the Indication Only cohort identified an Indication + Eligibility Criteria cohort that was more similar to the RCT with regard to age, race/ethnicity, diabetes, hypertension, prior MI, peripheral artery disease, and prior statin therapy, and less similar to the RCT with regard to sex, current smoking, percutaneous coronary intervention, index event, and median lipid values. Indication + Eligibility Criteria patients differed significantly from the trial on all baseline characteristics.

The results for this trial show that patients who meet either the indication alone or the indication subject to all eligibility criteria have less severe lipid profiles than patients in the trial. This is demonstrated by the median lipid values: total cholesterol, LDL, HDL, and triglycerides are 171.67, 100.41, 45.07, and 141.95, respectively, in the Indication Only cohort and 169.55, 99.19, 45.07, and 138.00, respectively, in the Indication + Eligibility Criteria cohort, compared with 180.50, 106.00, 38.50, and 156.02, respectively, reported in the trial.

RENAAL

The losartan vs. placebo trial in patients with diabetic nephropathy (RENAAL Trial) is summarized in Table 3. Application of eligibility criteria to the Indication Only cohort identified an Indication + Eligibility Criteria cohort that was more similar to the RCT with regard to age, pulse, angina pectoris, coronary revascularization, stroke, lipid disorder, total cholesterol, serum triglycerides, hemoglobin, and glycosylated hemoglobin, and less similar to the RCT with regard to sex, race/ethnicity, blood pressure measurements, use of antihypertensive drugs, myocardial infarction, amputation, neuropathy, retinopathy, current smoking, laboratory values, LDL, and HDL. Indication + Eligibility Criteria patients did not differ significantly from the trial with regard to angina pectoris, stroke, amputation, lipid disorder, and glycosylated hemoglobin (%); all other baseline characteristics differed significantly. The significance of differences in median urinary albumin:creatinine ratio could not be assessed because of insufficient reporting in the EHR.

As in the trials discussed above, patients enrolled in the RCT demonstrate hallmarks of advanced disease. A greater proportion of trial patients had a medical history of amputation (8.86%), neuropathy (51.02%), and retinopathy (63.71%) than either the Indication Only cohort (1.60%, 19.83%, and 5.40%, respectively) or the Indication + Eligibility Criteria cohort (0.00%, 11.11%, and 4.17%, respectively).

ACCOMPLISH

The benazepril-amlodipine vs. benazepril-hydrochlorothiazide trial in patients with systolic hypertension (ACCOMPLISH Trial) is summarized in Table 4. Application of eligibility criteria to the Indication Only cohort identified an Indication + Eligibility Criteria cohort that was more similar to the RCT with regard to age, potassium, lipid-lowering agents, beta blockers, and antiplatelet agents; history of MI, stroke, hospitalization for unstable angina, diabetes mellitus, eGFR < 60, coronary revascularization, CABG, PCI, left ventricular hypertrophy, current smoking, dyslipidemia, and AFib; and less similar to the RCT with regard to sex, race/ethnicity, weight, blood pressure measurements, pulse, creatinine, glucose, total cholesterol, HDL, and history of renal disease. Indication + Eligibility Criteria patients differed significantly from the trial on all baseline characteristics except history of hospitalization for unstable angina. The significance of differences in waist circumference and eGFR could not be assessed because of limited data availability and insufficient reporting in the EHR.

The results of the four trials are summarized in Fig. 1, in which each quadrant of the plot corresponds to a trial. For each trial, the ΔRCT values of the baseline characteristics are plotted for Indication Only vs. RCT and Indication + Eligibility Criteria vs. RCT. The minimum and maximum HbA1c measurements for the NCT01189890 trial were excluded from this plot because they were biologically implausible values that were likely transcription errors.

Discussion

This research suggests that eligibility criteria are insufficient for identifying the applicable real-world population in which experimental treatment effects will replicate with confidence. The comparison between the trial and the Indication + Eligibility Criteria cohorts highlights that the RCT and real-world cohorts are not similar. This result suggests that the eligibility criteria may not identify the applicable patients if HTE exists.

In some cases, applying the eligibility criteria to the Indication Only cohort brought the mean of a feature closer to that of the RCT, as with the distribution of gender in the PROVE-IT trial. Much more commonly, however, applying the eligibility criteria to the Indication Only cohort resulted in either (i) an exacerbation of the difference between the Indication Only cohort and the RCT, as was seen with gender in the RENAAL trial, or (ii) an over-correction of the bias between the Indication Only cohort and the RCT, as was seen with gender in the ACCOMPLISH trial.

This evaluation, with something as fundamental as gender, demonstrates that the eligibility criteria do not consistently make the observational data more like the RCT baseline characteristics. Often, the eligibility criteria identified a subset of patients that was less like the trial on certain baseline characteristics. This suggests that eligibility criteria applied in a different setting may actually increase confounding and introduce new biases into such an analysis. This assertion is further supported by the summary of results in Fig. 1. A clustering of points near the center of the plot (0.0, 0.0) indicates that the observational cohorts differ very little from the RCT. Points that lie on the 45-degree line correspond to baseline characteristics that are unaffected by adding the eligibility criteria to the Indication Only cohort. Deviations from this line show the extent to which applying the eligibility criteria improves or worsens representativeness relative to the RCT. The sitagliptin vs. glimepiride trial (NCT01189890) and PROVE-IT show the least impact of the RCT criteria: the high linearity of points in these plots suggests that the eligibility criteria do not identify a subset of patients that is meaningfully different from the Indication Only cohort. In contrast, the ACCOMPLISH and RENAAL plots show more scatter about the 45-degree line, with some features becoming more representative and others less.
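The reading of Fig. 1 described above can be expressed as a small rule applied to each baseline characteristic, given its ΔRCT in the Indication Only cohort and in the Indication + Eligibility Criteria cohort. The sketch below is illustrative only and is not the authors' code: the function name `classify_shift` is hypothetical, "over-corrected" is assumed here to mean that the sign of ΔRCT flips (the gap to the RCT is crossed), and the usage example uses raw mean differences from the sitagliptin vs. glimepiride results because standard deviations are not restated in this text.

```python
def classify_shift(delta_indication: float, delta_eligible: float, tol: float = 1e-9) -> str:
    """Classify how eligibility criteria moved one baseline characteristic.

    delta_indication: ΔRCT for the Indication Only cohort (x position in Fig. 1).
    delta_eligible: ΔRCT for the Indication + Eligibility Criteria cohort (y position).
    Assumption: a sign flip in ΔRCT is treated as an over-correction.
    """
    if abs(delta_eligible - delta_indication) <= tol:
        return "unchanged"        # point lies on the 45-degree line
    if delta_indication * delta_eligible < 0:
        return "over-corrected"   # cohort crossed to the other side of the RCT value
    if abs(delta_eligible) < abs(delta_indication):
        return "more similar"     # point moved toward the origin (the RCT)
    return "less similar"         # point moved away from the origin


# Raw mean differences (observational minus trial), used only for illustration.
years_since_dx = classify_shift(3.97 - 8.69, 3.30 - 8.69)
fasting_glucose = classify_shift(140.35 - 169.04, 141.55 - 169.04)
print(years_since_dx, "|", fasting_glucose)
```

Both results agree with the text: eligibility criteria moved years since diagnosis away from the trial and fasting plasma glucose toward it.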

The creation of these observational cohorts permits the comparison of real-world populations with summaries of clinical trials. A number of studies have previously examined the misalignment between experimental and real-world populations by quantifying the discrepancy between these two data sources23,24,25,26,27,28,29,30,31,32,33,34. Despite this ongoing conversation regarding the lack of representativeness and generalizability of clinical trials, the relationship between eligibility criteria and HTE, and how the two may contribute to poor external validity, remains poorly addressed. This research assesses these two populations thoroughly by comparing experimental cohorts with observational cohorts curated through carefully operationalized eligibility criteria; this rigor supports confidence in our assessment of the inconsistencies between the trials and the real world.

We believe our approach is limited in practice because the task is complex and requires many components to line up. Our methods require not only a substantial amount of observational data, but also the standardization of that data into a common data model. The use of the Observational Health Data Sciences and Informatics' common data model (OHDSI CDM) in this research facilitated the normalization of medical concepts to a single code and simplified the definition of the cohorts.

This study contributes a systematic evaluation of cohort characteristics under eligibility criteria. We show that discrepancies may appear as differences in aggregate features in the baseline characteristics. If these features have a meaningful effect on the outcome, the differences or imbalance between the cohorts may result in confounding of the observational treatment effect. In this circumstance, the RCT evidence would not be applicable to this real-world cohort. Computational methods could assist in identifying patients who match the RCT cohort for applicability, and the results shown here suggest that such methods may be warranted.

Experimental trial participants are not only an inherently poor representation of the target population; this research also suggests that factors beyond eligibility criteria may introduce new hidden bias. Furthermore, the importance of HTE and the potential for feature imbalance, even under careful cohort curation, highlight the current methodological gap in trial replication. Through our replication efforts, we were also able to articulate a framework for external validity. As noted earlier, external validity refers to the extent to which trial results can be applied outside of the experimental setting13.

Underlying the results of this research is the inherent tension between the practice of EBM and what is regarded as credible evidence. The RCT, the most reputable source of biomedical knowledge, employs highly discriminative eligibility criteria that serve to identify a targeted effect of the intervention. This research uses real-world evidence to demonstrate that EBM practitioners cannot reasonably assume that trial participants are representative of real-world eligible patients. This raises the question as to whether the RCT evidence is, therefore, applicable. The question is complicated by the inability of clinicians to determine who the applicable patients are, despite the publication of eligibility criteria, which are often incompletely or insufficiently reported in the forms of evidence most often consumed by clinicians35.

This research does have limitations. Most importantly, the trials presented in this research were selected according to a set of criteria that enabled their analysis using the tools described. These criteria included an active intervention and comparator, published eligibility criteria, and ease of operationalization of concepts. The trials that were investigated as part of this research represent common indications. It is possible that the results presented here are specific to trials of common conditions and may not be representative of rare condition trials.

The translation of clinical trial eligibility criteria into operationalized, computable queries may be prone to subjectivity. Although we sought to represent the criteria as faithfully as possible and consulted with clinicians to ensure accuracy, there is inherent ambiguity in the criteria themselves, which makes perfect representation of the RCT impossible. Furthermore, information relevant to the eligibility criteria may be found within clinical notes, which were not used when constructing the cohorts in this research. Additionally, when an observational cohort is subjected to many criteria, the resulting cohort may become very small, leading to a lack of power for detecting relevant differences. In our evaluation of the external validity of trials, we compare aggregate metrics rather than full distributions of features, which would be preferable. This comparison is the best we can do with the data available to us. However, such a comparison may fail to capture meaningful differences between the trial and real-world populations, as distributions with greatly differing functional forms may still have similar means.
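The last limitation above, that similar means can hide very different distributions, can be made concrete with a toy example. The two cohorts below are hypothetical values invented for illustration (not study data) for a single continuous baseline characteristic such as age; the threshold of 75 and the helper name `summarize` are likewise assumptions.

```python
import statistics


def summarize(values: list[float], threshold: float) -> dict[str, float]:
    """Mean, sample SD, and the share of patients at or above a threshold."""
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),
        "share_at_or_above": sum(v >= threshold for v in values) / len(values),
    }


# Hypothetical cohorts: same mean (64), very different spread and shape.
cohort_a = [60, 62, 64, 66, 68]   # tightly clustered
cohort_b = [40, 44, 64, 84, 88]   # two clusters far from the mean

a = summarize(cohort_a, threshold=75)
b = summarize(cohort_b, threshold=75)
print(a["mean"] == b["mean"])                            # True
print(a["share_at_or_above"], b["share_at_or_above"])    # 0.0 0.4
```

A comparison of means alone would report no difference here, even though 40% of one cohort and none of the other lies above the threshold; this is the kind of difference an aggregate Table 1 comparison can miss.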

Lastly, and most notably, experimental data and EHR data are fundamentally different, which makes comparison between these two sources difficult. Though differences from experimental data may be inherent, the EHR houses the information that is available to clinicians at the time treatment decisions are made. Furthermore, it is a valuable resource for identifying the applicable patients to support the practice of EBM. We believe that discrepancies between experimental data and EHR data must be studied so that we may develop methodologies that ensure appropriate applicability at the point of care.

Based on the results presented, the eligibility criteria, which nominally should be sufficient for effect replication, may not actually be sufficient if HTE exists. If HTE exists and the differences we observed in our cohorts are common, factors beyond eligibility criteria may be necessary to identify applicable patients. This finding has significant implications for how we create and apply biomedical evidence.

The expectation of EBM is that the population of patients a single clinician sees is an applicable population and will mirror the population in the RCT in all ways, including the distribution of the treatment effect. This assumption does not take into account variation in undocumented factors that affect HTE. If factors that induce HTE exist but are not accounted for in the eligibility criteria, a clinician cannot reasonably assume that the treatment effect will be seen in their treated patient population. The discrepancies between experimental and real-world populations presented here may arise from a number of sources, including overly restrictive eligibility criteria, insufficient documentation of eligibility criteria, or the self-selection of trial participants. To close this gap and improve the generalizability of RCT findings, these issues may be addressed by relaxing trial eligibility criteria, describing eligibility criteria thoroughly and accurately (perhaps in a codified form), or actively recruiting a representative experimental population. Regardless of the source of the discrepancy, until it is addressed, careful consideration beyond who is eligible for the trial is necessary to determine whether the results of a given RCT are an appropriate source of evidence for the care of a given patient.

Methods

The comparison of experimental and observational populations

We hypothesized that significant baseline characteristic differences exist between clinical trial populations and observational cohorts that meet all eligibility criteria. Such differences could be the source of poor external validity in the presence of HTE. The presence of differences could be confirmed by comparing empirical distributions of features between the RCT data and real-world observational data. However, patient-level RCT data are rarely released, so such an assessment is infeasible for most published RCTs. The best available proxy is to compare the real-world observational cohort with the summary of baseline characteristics of RCTs, as commonly presented in Table 1 of RCT publications. We refer to these summary statistics as baseline characteristics. The baseline characteristics summarize the baseline demographic and clinical characteristics of each arm of the study36. The intent of publishing this table is to describe the clinical trial population in detail and to report the similarity of the arms after randomization. These data can also be used to evaluate external validity and, by association, replicability37. To examine how potential differences between experimental and observational cohorts may contribute to poor replicability, we compared RCT baseline characteristics with the same metrics computed from observational EHR data.

Data

Observational clinical data were obtained from the Columbia University Irving Medical Center (CUIMC) clinical data warehouse (CDW). Data elements evaluated in this study include laboratory measurements, diagnosis codes, and medications. This database comprises predominantly emergency and inpatient visits, with a smaller number of outpatient visits at the hospital's teaching clinics. The data used for this research were formatted according to the OHDSI (http://www.ohdsi.org) CDM to support downstream interoperability within the OHDSI community and to support replication and extension by OHDSI collaborators. This research was approved by the Columbia University Institutional Review Board. Informed consent was waived because the research could not practicably be carried out without the waiver. The code to collect and query the data is freely available at https://github.com/ameliaaveritt/Translating_Evidence_Into_Practice.

Cohort creation

Corresponding to each RCT, observational cohorts were curated from EHR data according to two approaches. The first approach curated based on only the indication of the drug (Indication Only), e.g., diabetes or heart failure. This cohort represents the most basic assessment that clinicians can make when considering a treatment for a patient, per EBM. The second approach curated based on both the indication of the drug and all published eligibility criteria (Indication + Eligibility Criteria). This cohort represents the most thorough assessment that clinicians can make under EBM.
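The two curation approaches can be sketched as filters over patient records: the Indication Only cohort applies a single predicate, while the Indication + Eligibility Criteria cohort additionally requires every eligibility criterion to hold. This is a simplified illustration, not the ATLAS cohort logic the study used. The `Patient` record, its fields, and the criteria (age of at least 55 years, a hypothetical "no dialysis" exclusion) are assumptions for demonstration; exclusion criteria are written as negated predicates so that one `all()` covers inclusion and exclusion alike.

```python
from dataclasses import dataclass, field
from typing import Callable, Sequence


@dataclass
class Patient:
    person_id: int
    age: int
    conditions: set[str] = field(default_factory=set)


Criterion = Callable[[Patient], bool]


def build_cohort(patients: Sequence[Patient], indication: Criterion,
                 criteria: Sequence[Criterion] = ()) -> list[Patient]:
    """Keep patients who meet the indication and every eligibility criterion."""
    return [p for p in patients if indication(p) and all(c(p) for c in criteria)]


def has_t2dm(p: Patient) -> bool:            # indication (hypothetical)
    return "type 2 diabetes" in p.conditions


def age_at_least_55(p: Patient) -> bool:     # hard inclusion criterion
    return p.age >= 55


def no_dialysis(p: Patient) -> bool:         # exclusion, written as a negation
    return "dialysis" not in p.conditions


patients = [
    Patient(1, 72, {"type 2 diabetes"}),
    Patient(2, 48, {"type 2 diabetes"}),
    Patient(3, 66, {"type 2 diabetes", "dialysis"}),
    Patient(4, 70, {"hypertension"}),
]
indication_only = build_cohort(patients, has_t2dm)
indication_plus_eligibility = build_cohort(patients, has_t2dm, [age_at_least_55, no_dialysis])
print([p.person_id for p in indication_only])              # [1, 2, 3]
print([p.person_id for p in indication_plus_eligibility])  # [1]
```

Because each criterion further restricts the indicated cohort, the second cohort is always a subset of the first, which mirrors how adding criteria identifies successively narrower sub-populations.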

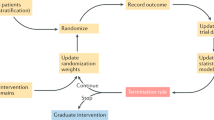

Both the Indication Only and Indication + Eligibility Criteria cohorts were constructed using OHDSI's ATLAS tool. ATLAS is an analytics platform that supports the design and execution of observational analyses. Part of this platform is the ability to create cohort definitions, which identify a set of patients who satisfy one or more criteria for a duration of time. The indication and eligibility criteria extracted from published RCT documentation were operationalized using the Observational Medical Outcomes Partnership (OMOP) CDM and served as the criteria for the cohort definitions. This procedure was rigorous: medical doctors were consulted to ensure the accuracy of the operationalization and its faithfulness to the original criteria. To operationalize the criteria, we created concept sets, which both enumerate the medical concepts that should be included in the definition of a criterion and exclude the concepts that should not be included. This procedure often used the hierarchical relationships in the OMOP CDM ontology, wherein all descendants of a single concept could be included in a concept set and then selectively removed, if needed. This procedure is outlined in Fig. 2.

The process begins by identifying the resources (e.g., an RCT protocol) that detail the eligibility criteria of a trial. Each criterion is then extracted and mapped to codified concepts in a controlled vocabulary. The concept is then mapped to the OHDSI common data model (CDM), which aggregates the same concept from different vocabularies into a single standardized concept. This concept is then refined to best define the eligibility criterion.
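The concept-set step described above (select a concept, optionally pull in all of its descendants, then remove specific concepts) can be sketched over a toy hierarchy. This is a simplified illustration rather than ATLAS or the OMOP vocabulary tables: the concept names and the `HIERARCHY` mapping are hypothetical stand-ins, and the `include_descendants`/`is_excluded` flags only loosely mirror the include-descendants and exclude options ATLAS offers on concept set items.

```python
from collections import deque
from dataclasses import dataclass

# Toy parent -> children hierarchy; names are hypothetical, not OMOP concept IDs.
HIERARCHY: dict[str, list[str]] = {
    "diabetes mellitus": ["type 1 diabetes", "type 2 diabetes"],
    "type 2 diabetes": ["type 2 diabetes with nephropathy",
                        "type 2 diabetes with retinopathy"],
}


@dataclass(frozen=True)
class ConceptSetItem:
    concept: str
    include_descendants: bool = False
    is_excluded: bool = False


def descendants(concept: str) -> set[str]:
    """All concepts below `concept` in the toy hierarchy (breadth-first walk)."""
    found: set[str] = set()
    queue = deque(HIERARCHY.get(concept, []))
    while queue:
        child = queue.popleft()
        if child not in found:
            found.add(child)
            queue.extend(HIERARCHY.get(child, []))
    return found


def resolve(item: ConceptSetItem) -> set[str]:
    """The concept itself, plus its descendants if requested."""
    codes = {item.concept}
    if item.include_descendants:
        codes |= descendants(item.concept)
    return codes


def expand(items: list[ConceptSetItem]) -> set[str]:
    """Union of included items minus the union of excluded items."""
    included = set().union(*(resolve(i) for i in items if not i.is_excluded))
    excluded = set().union(*(resolve(i) for i in items if i.is_excluded))
    return included - excluded


concept_set = [
    ConceptSetItem("type 2 diabetes", include_descendants=True),
    ConceptSetItem("type 2 diabetes with retinopathy", is_excluded=True),
]
print(sorted(expand(concept_set)))
# ['type 2 diabetes', 'type 2 diabetes with nephropathy']
```

Applying exclusions after the descendant expansion is what allows a broad parent concept to be selected once and then trimmed, as described for the study's concept sets.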

Cohort comparisons

For each RCT under study, we calculated pooled baseline characteristics from the metrics reported for both the intervention and comparator arms. Counts for discrete variables were summed across both arms and are presented as a percentage of the pooled population. Continuous variables were taken as the average of each arm's reported value, weighted by the proportion of patients in that arm.
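The pooling rules above translate directly into two small functions. This is a sketch, not the study's code: the function names are hypothetical, and the arm sizes, counts, and means in the usage lines are invented numbers chosen only to make the arithmetic easy to follow.

```python
def pooled_percent(counts: list[int], arm_sizes: list[int]) -> float:
    """Discrete characteristic: sum counts across arms, as a percent of all patients."""
    return 100 * sum(counts) / sum(arm_sizes)


def pooled_mean(arm_means: list[float], arm_sizes: list[int]) -> float:
    """Continuous characteristic: arm means weighted by each arm's share of patients."""
    total = sum(arm_sizes)
    return sum(m * n / total for m, n in zip(arm_means, arm_sizes))


# Hypothetical two-arm trial: 300 patients in the intervention arm, 200 in the comparator.
sizes = [300, 200]
print(pooled_percent([150, 90], sizes))   # 48.0  (240 of 500 patients)
print(pooled_mean([70.0, 72.5], sizes))   # 71.0  (0.6 * 70.0 + 0.4 * 72.5)
```

Weighting by arm size matters only when the arms are unequal; with equal arms, the pooled mean reduces to the simple average of the two arm means.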

The Indication Only and Indication + Eligibility Criteria cohorts were queried to obtain metrics that correspond to the RCT baseline characteristics. To explore the differences between the observational patient cohorts and the RCT patient cohort, we calculated (i) the standardized difference in means for continuous variables and (ii) the percentage point difference for discrete variables (ΔRCT). A ΔRCT of zero indicates that the observational cohort does not differ from the trial cohort. A nonzero ΔRCT indicates that the observational and trial cohorts differ, with greater magnitudes corresponding to greater discrepancies between the cohorts.
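A minimal sketch of the two ΔRCT measures follows. The text does not specify the denominator of the standardized difference, so this sketch assumes the common pooled form, the difference in means divided by the square root of the average of the two variances; the sign convention (observational minus RCT) and the function names are also assumptions. The discrete example reuses the RENAAL retinopathy percentages reported above; the continuous inputs are hypothetical.

```python
import math


def standardized_difference(mean_obs: float, sd_obs: float,
                            mean_rct: float, sd_rct: float) -> float:
    """Continuous ΔRCT: difference in means divided by a pooled SD (assumed form)."""
    pooled_sd = math.sqrt((sd_obs ** 2 + sd_rct ** 2) / 2)
    if pooled_sd == 0:
        raise ValueError("standardized difference is undefined when both SDs are zero")
    return (mean_obs - mean_rct) / pooled_sd


def percentage_point_difference(pct_obs: float, pct_rct: float) -> float:
    """Discrete ΔRCT: observational percentage minus RCT percentage."""
    return pct_obs - pct_rct


# Retinopathy in RENAAL: Indication Only cohort 5.40% vs. trial 63.71%.
print(round(percentage_point_difference(5.40, 63.71), 2))        # -58.31
# Hypothetical continuous characteristic: means 65 vs. 70, SDs 8 vs. 6.
print(round(standardized_difference(65.0, 8.0, 70.0, 6.0), 3))   # -0.707
```

Standardizing continuous differences by the SD puts characteristics measured in different units on a comparable scale, which is what allows them to share the axes of a single plot.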

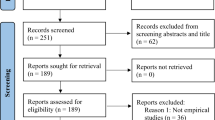

Trial selection

For this research, we purposefully selected landmark clinical trials, which are highly influential studies recognized for changing the practice of medicine. We began with a list of landmark trials and, after applying the criteria outlined below, selected a small number. Our primary focus was landmark trials, but to increase the diversity of studies and to demonstrate applicability outside of efficacy trials, we also evaluated a safety trial that met our criteria.

When selecting candidate trials for this research, practical considerations informed our choice38. The RCT must have an active intervention and comparator drug, as we would be unable to sufficiently codify a cohort exposed to a placebo. Additionally, the intervention cannot be a new investigational drug, as it would not exist in our EHR. The eligibility criteria for the RCT must be published and accessible, and most of the eligibility criteria must be hard criteria that are easily operationalized into concept codes (e.g., "age of at least 55 years"). While most trials have unavoidable soft criteria that are not easily operationalized (e.g., "no contraindications" or "no current participation in another clinical trial"), it is important that our chosen trials have few of these. Consider, for example, the soft criterion "expected survival of at least 2 years", which embodies a judgment call by a healthcare practitioner that cannot reasonably be replicated with data. Finally, we sought trials describing a patient population that exists within the CUIMC EHR, so that a sufficient number of patients would remain in our cohorts after application of the eligibility criteria. Because we compare the RCT Table 1 metrics with the same metrics from our observational cohorts, it is important that our observational data contain as many patients as possible, as a greater number of patients increases confidence that our reported data are truly representative of the CUIMC population.

To that end, we investigated four trials: (1) the RENAAL Trial39, which compared the effect of losartan and placebo on diabetic nephropathy; (2) the ACCOMPLISH Trial40, which compared benazepril-amlodipine with benazepril and hydrochlorothiazide on cardiovascular (CV)-related mortality; (3) the PROVE-IT Trial41, which compared atorvastatin and pravastatin in patients with a history of acute coronary syndrome (ACS); and (4) the sitagliptin and glimepiride trial42, which compared sitagliptin and glimepiride in elderly patients with diabetes. RENAAL, ACCOMPLISH, and PROVE-IT are landmark RCTs with efficacy endpoints, and the sitagliptin vs. glimepiride trial is a smaller trial with a safety endpoint. Details on how the Indication Only and Indication + Eligibility Criteria cohorts were created can be found in Supplementary Information Tables 1–10.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

Because the data contain protected health information, they are not publicly available. However, because our data are standardized and coded with a common vocabulary, external researchers can replicate our work through a network study in the OHDSI consortium, for which Columbia University serves as the coordinating center.

Code availability

The custom code used to analyze the data presented in this study is freely available at https://github.com/ameliaaveritt/Translating_Evidence_Into_Practice

References

Wong, V. C. & Steiner, P. M. Replication designs for causal inference. EdPolicyWorks Working Paper Series (2018). http://curry.virginia.edu/uploads/epw/62_Replication_Designs.pdf (accessed 26 March 2019).

Djulbegovic, B. & Guyatt, G. H. Progress in evidence-based medicine: a quarter century on. Lancet 390, 415–423 (2017).

Djulbegovic, B. & Guyatt, G. in Users' Guides to the Medical Literature. 3rd edn. (McGraw-Hill Education, 2015).

Djulbegovic, B., Guyatt, G. H. & Ashcroft, R. E. Epistemologic inquiries in evidence-based medicine. Cancer Control 16, 158–168 (2009).

Sackett, D. L., Rosenberg, W. M., Gray, J. A., Haynes, R. B. & Richardson, W. S. Evidence based medicine: what it is and what it isn’t. BMJ 312, 71–72 (1996).

Ioannidis, J. P. A. How to make more published research true. PLoS Med. 11, e1001747 (2014).

Contopoulos-Ioannidis, D. G., Alexiou, G. A., Gouvias, T. C. & Ioannidis, J. P. A. Life cycle of translational research for medical interventions. Science 321, 1298–1299 (2008).

Kent, D. M. et al. Risk and treatment effect heterogeneity: re-analysis of individual participant data from 32 large clinical trials. Int. J. Epidemiol. 45, dyw118 (2016).

Fredriksson, P. & Johansson, P. Dynamic treatment assignment. J. Bus. Econ. Stat. 26, 435–445 (2008).

Xie, Y., Brand, J. E. & Jann, B. Estimating heterogeneous treatment effects with observational data. Sociol. Methodol. 42, 314–347 (2012).

Campbell, D. T. & Stanley, J. C. Experimental and Quasi-experimental Designs for Research. (Houghton Mifflin Company, Boston, 1963).

Burns, P. B., Rohrich, R. J. & Chung, K. C. The levels of evidence and their role in evidence-based medicine. Plast. Reconstr. Surg. 128, 305–310 (2011).

Campbell, D. T. & Stanley, J. C. Handbook of Research on Teaching. 1–84 (Houghton Mifflin Company, Boston, 1963).

Hyman, R. Quasi-Experimentation: Design and Analysis Issues for Field Settings. Vol. 46, 96–97 (Houghton Mifflin, 1982).

Anderson-Cook, C. M. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Vol. 100 (Wiley, 2005).

Velasco, E. in Encyclopedia of Research Design (ed. Salkind, N.) (SAGE Publications, Thousand Oaks, 2010).

Wales, J. A., Palmer, R. L. & Fairburn, C. G. Can treatment trial samples be representative? Behav. Res. Ther. 47, 893–896 (2009).

Moher, D., Jadad, A. R. & Tugwell, P. Assessing the quality of randomized controlled trials. Current issues and future directions. Int. J. Technol. Assess. Health Care. 12, 195–208 (1996).

Britton, A. et al. Threats to applicability of randomised trials: exclusions and selective participation. J. Health Serv. Res. Policy 4, 112–121 (1999).

Karanis, Y. B., Canta, F. A. B., Mitrofan, L., Mistry, H. & Anger, C. ‘Research’ vs ‘real world’ patients: the representativeness of clinical trial participants. Ann. Oncol. (2016). https://doi.org/10.1093/annonc/mdw392.51

Stuart, E. A., Bradshaw, C. P. & Leaf, P. J. Assessing the generalizability of randomized trial results to target populations. Prev. Sci. 16, 475–485 (2015).

Sherman, R. E. et al. Real-world evidence — what is it and what can it tell us? N. Engl. J. Med. 375, 2293–2297 (2016).

Kennedy-Martin, T., Curtis, S., Faries, D., Robinson, S. & Johnston, J. A literature review on the representativeness of randomized controlled trial samples and implications for the external validity of trial results. Trials 16, 495 (2015).

Badano, L. P. et al. Patients with chronic heart failure encountered in daily clinical practice are different from the “typical” patient enrolled in therapeutic trials. Ital. Heart J. 41, 84–91 (2003).

Bosch, X. et al. Causes of ineligibility in randomized controlled trials and long-term mortality in patients with non-ST-segment elevation acute coronary syndromes. Int. J. Cardiol. 124, 86–91 (2008).

Collet, J. P. et al. Enoxaparin in unstable angina patients who would have been excluded from randomized pivotal trials. J. Am. Coll. Cardiol. 41, 8–14 (2003).

Costantino, G. et al. Eligibility criteria in heart failure randomized controlled trials: a gap between evidence and clinical practice. Intern. Emerg. Med. 4, 117–122 (2009).

Dhruva, S. S. & Redberg, R. F. Variations between clinical trial participants and medicare beneficiaries in evidence used for medicare national coverage decisions. Arch. Intern. Med. 168, 136 (2008).

Ezekowitz, J. A. et al. Acute heart failure. Circ. Heart Fail. 5, 735–741 (2012).

Golomb, B. A. et al. The older the better: are elderly study participants more non-representative? A cross-sectional analysis of clinical trial and observational study samples. BMJ Open. 2, e000833 (2012).

Hutchinson-Jaffe, A. B. et al. Comparison of baseline characteristics, management and outcome of patients with non–ST-segment elevation acute coronary syndrome in versus not in clinical trials. Am. J. Cardiol. 106, 1389–1396 (2010).

Melloni, C. et al. Representation of women in randomized clinical trials of cardiovascular disease prevention. Circ. Cardiovasc. Qual. Outcomes 3, 135–142 (2010).

Steinberg, B. A. et al. Global outcomes of ST-elevation myocardial infarction: comparisons of the enoxaparin and thrombolysis reperfusion for acute myocardial infarction treatment-thrombolysis in myocardial infarction study 25 (ExTRACT-TIMI 25) registry and trial. Am. Heart J. 154, 54–61 (2007).

Uijen, A. A., Bakx, J. C., Mokkink, H. G. A. & van Weel, C. Hypertension patients participating in trials differ in many aspects from patients treated in general practices. J. Clin. Epidemiol. 60, 330–335 (2007).

Van Spall, H. G. C., Toren, A., Kiss, A. & Fowler, R. A. Eligibility criteria of randomized controlled trials published in high-impact general medical journals. JAMA 297, 1233 (2007).

Schulz, K. F., Altman, D. G. & Moher, D. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. BMJ 340, c332 (2010).

Furler, J., Magin, P., Pirotta, M. & van Driel, M. Participant demographics reported in “Table 1” of randomised controlled trials: a case of “inverse evidence”? Int. J. Equity Health. 11, 14 (2012).

Bartlett, V. L., Dhruva, S. S., Shah, N. D., Ryan, P. & Ross, J. S. Feasibility of using real-world data to replicate clinical trial evidence. JAMA Netw. Open. 2, e1912869 (2019).

Brenner, B. M. et al. Effects of losartan on renal and cardiovascular outcomes in patients with type 2 diabetes and nephropathy. N. Engl. J. Med. 345, 861–869 (2001).

Jamerson, K. et al. Benazepril plus amlodipine or hydrochlorothiazide for hypertension in high-risk patients. N. Engl. J. Med. 359, 2417–2428 (2008). https://doi.org/10.1056/NEJMoa0806182

Cannon, C. P. et al. Intensive versus moderate lipid lowering with statins after acute coronary syndromes. N. Engl. J. Med. 350, 1495–1504 (2004).

Hartley, P. Efficacy and tolerability of sitagliptin compared with glimepiride in elderly patients with type 2 diabetes mellitus and inadequate glycemic control: a randomized, double-blind, non-inferiority trial. Drugs Aging 32, 469–476 (2015).

Acknowledgements

This research is supported by grants R01LM009886-10 and T15LM007079 from the National Library of Medicine and by grant CU15-2317 from Janssen Inc.

Author information


Contributions

A.P. and P.R. designed the study. A.A. created the cohorts, performed the analysis, drafted the manuscript, and designed the figures. C.W., A.P., and P.R. provided critical feedback and helped shape the research, analysis, and manuscript.


Ethics declarations

Competing interests

P.R. is an employee of Janssen Research and Development and a shareholder of Johnson & Johnson. All other authors have no relevant conflicts of interest.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Averitt, A.J., Weng, C., Ryan, P. et al. Translating evidence into practice: eligibility criteria fail to eliminate clinically significant differences between real-world and study populations. npj Digit. Med. 3, 67 (2020). https://doi.org/10.1038/s41746-020-0277-8


DOI: https://doi.org/10.1038/s41746-020-0277-8
