Abstract

Digital health interventions (DHIs) have frequently been highlighted as one way to respond to increasing levels of mental health problems in children and young people. Whilst many are developed to address existing mental health problems, there is also potential for DHIs to address prevention and early intervention. However, there are currently limitations in the design and reporting of the development, evaluation and implementation of preventive DHIs that can limit their adoption into real-world practice. This scoping review aimed to examine existing evidence-based DHIs and review how well the research literature described factors that researchers need to include in their study designs and reports to support real-world implementation. A search was conducted for relevant publications published from 2013 onwards. Twenty-one different interventions were identified from 30 publications, which took a universal (n = 12), selective (n = 3) and indicated (n = 15) approach to preventing poor mental health. Most interventions targeted adolescents, with only two studies including children aged ≤10 years. There was limited reporting of user co-design involvement in intervention development. Barriers and facilitators to implementation varied across the delivery settings, and only a minority reported financial costs involved in delivering the intervention. This review found that while there are continued attempts to design and evaluate DHIs for children and young people, there are several points of concern. More research is needed with younger children and those from poorer and underserved backgrounds. Co-design processes with children and young people should be recognised and reported as a necessary component within DHI research as they are an important factor in the design and development of interventions, and underpin successful adoption and implementation. Reporting the type and level of human support provided as part of the intervention is also important in enabling the sustained use and implementation of DHIs.

Introduction

In the UK, 25% of 17–19 year-olds experience significant levels of mental illness, often accompanied by self-harm and sometimes escalating to suicide1. As 50% of mental health problems are established by the age of 14 and 75% by the age of 24 (ref. 2), prevention and early intervention in children and young people are of critical importance3. Preventive interventions include those that support children and young people to develop skills to maintain mental health, that target pre-clinical risk factors, or that respond to early signs of distress4. This review applies the Gordon framework of prevention to mental health problems: universal (targeting whole populations, regardless of current mental health status), selective (targeting specified risk factors) and indicated (targeting early sub-clinical signs and symptoms) levels5. Tertiary prevention, targeting existing mental health disorders, is not within the scope of this review.

The rapid growth of digital technologies (e.g. smartphones, wearables) has created the potential for predictive prevention: the use of data to personalise preventive interventions6. The ubiquity of digital technologies offers an opportunity to support increased access to mental health interventions for children and young people7. To date, there is little evidence to demonstrate the successful implementation and subsequent impact of evidence-based digital mental health interventions for children and young people at scale8. This scoping review aims to further our understanding of the challenges to implementation faced by digital health interventions (DHIs) addressing the prevention and early intervention of mental health problems in children and young people by examining the reporting of factors that improve opportunities for successful adoption into real-world contexts. These include features related to the development, evaluation and implementation of DHIs9,10.

Previous studies have identified several challenges to the use of DHIs in routine service delivery, including technical difficulties and low awareness of data standards and privacy11, as well as low engagement and retention rates amongst users12. Moreover, several gaps have been identified in research, such as the lack of economic evaluations and implementation studies11,13. A scoping review of mental health apps for young people documented several advantages of apps, including ubiquity, flexibility and timely communication, but these were offset by technical difficulties and poor adherence, and few studies addressed privacy or conducted an economic evaluation14. The large number of publicly available digital mental health interventions (over 10,000; ref. 8) highlights the growing gap between research and evaluation on the one hand and practice and implementation on the other. For instance, a recent review found that only 2 of 15 evidence-based mental health apps were available to download despite their acceptability15 and the clear need for effective DHIs in routine care16.

Efficacious DHIs for mental health have been reviewed across the age-span of children and young people7, including university students17,18, and in relation to those with anxiety disorders19 and/or depression17,19,20,21,22,23,24. However, generalising efficacy of DHIs outside research settings is constrained if interventions are not sufficiently appropriate or appealing, challenging not only the engagement of children and young people25 but potentially also those who support them (e.g. parents and teachers). Engaging stakeholders in the development, implementation and evaluation of technologies is one of the pillars for responsible research and innovation (RRI) and a crucial element to develop new digital innovations in a socially desirable and acceptable way26. For example, researchers must consider the wider societal implications (e.g., workforce issues, training/skills) as well as consider how real-world uptake might differ from usage within a trial (e.g., limited mobile data) as engagement is a key barrier to the effectiveness of DHIs in mental healthcare8. Co-production with young people, and where relevant their parents25, as well as specialist technical and psychological input20, is important in identifying and potentially mitigating these problems.

There is clearly potential for DHIs to be effective in prevention and early intervention for children and young people, but there is little research addressing the opportunities and challenges of their adoption into real-world contexts. This review examines factors related to successfully sustained DHIs9,10 and applies these to evidence-based interventions designed to prevent mental health disorders in children and young people. This includes mapping the characteristics of studies, their participants, design elements, and features related to implementation. It aims to identify potential influences on successful adoption within the existing evidence base and highlights factors that should be addressed by researchers in the design and reporting of the development, evaluation and implementation of DHIs. A scoping review approach was chosen as it is more suitable than a systematic review where the purpose is to identify knowledge gaps and to scope a body of literature in order to investigate the adequacy of research design and reporting27.

Methods and analysis

This scoping review uses the framework proposed by Arksey and O’Malley28 and is informed by PRISMA guidelines29.

Search strategy

Five electronic databases (ACMDL, PubMed, PsycInfo, Embase and Cochrane Library) were searched in June 2019 for all full-text publications in English published from 2013 to 19/06/2019. Reviews were searched when identified and any relevant articles included. A previous systematic review by Clarke et al.30 reported on 28 studies conducted between 2000 and early 2013. These studies were followed up so as to map whether these interventions had been subject to further research.

Study screening

Eligibility criteria were as follows:

- peer-reviewed studies
- DHIs for mental health disorders
- children and young people aged 0–25 years old
- universal, selective or indicated prevention
- participants were not included in studies on the basis of a clinical diagnosis (e.g. depression or anxiety)
- DHI delivered in any location

Screening was conducted by four researchers (AB, EBD, EPV and MK).
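
The eligibility criteria listed above amount to a conjunction of checks on each candidate publication. The sketch below is illustrative only: the `Record` fields and the `is_eligible` helper are hypothetical names introduced here, and the age check assumes that a study's whole sample must fall within 0–25 years. It does not describe the screening procedure actually used, which was carried out by the researchers named above.

```python
from dataclasses import dataclass

# The three prevention levels of the Gordon framework used in this review.
PREVENTION_LEVELS = {"universal", "selective", "indicated"}


@dataclass
class Record:
    """Hypothetical summary of one candidate publication."""
    peer_reviewed: bool
    is_mental_health_dhi: bool
    min_age: int                  # youngest participant age, in years
    max_age: int                  # oldest participant age, in years
    prevention_level: str
    recruited_by_diagnosis: bool  # True if a clinical diagnosis was an inclusion criterion


def is_eligible(record: Record) -> bool:
    """Return True when a record meets every listed criterion.

    Delivery location is not checked because any location was acceptable.
    """
    return (
        record.peer_reviewed
        and record.is_mental_health_dhi
        and 0 <= record.min_age <= record.max_age <= 25
        and record.prevention_level in PREVENTION_LEVELS
        and not record.recruited_by_diagnosis
    )
```

Under these assumptions, a peer-reviewed universal programme for 12–16 year-olds would pass, whereas the same programme recruiting only participants with a depression diagnosis would not.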

Data extraction

The data extraction chart was developed iteratively using the Joanna Briggs Institute template31 by three researchers (AB, EPV and EBD), with input from other authors, to reflect categories identified within the previous review30 and the literature surrounding digital therapies and prevention for children and young people. Thirty-four categories were identified ranging from demographic information to key elements of prevention, implementation and involvement. Three researchers co-ordinated to ensure that the coding framework was appropriate and consistently applied. Four main categories were identified – study characteristics, user characteristics, usability and engagement, and implementation – from the data extraction chart.

Results

Study selection

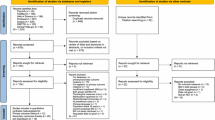

Thirty studies were identified from 791 studies (Fig. 1).

Study characteristics

The 30 included studies relate to 21 different interventions. Of these studies, 12 addressed universal prevention (Table 1), 3 selective prevention (Table 2) and 15 indicated prevention (Table 3). The majority of studies reported on interventions accessed via the internet (n = 21), while a small number used instant messaging (n = 2) or social media elements (n = 1). Some used an app (universal = 2, selective = 1), whilst those available only on a desktop or laptop computer (n = 8) mainly used game elements (n = 5).

The majority of participants were recruited from secondary education (n = 19; universal = 9, selective = 1, and indicated = 9). This was followed by primary healthcare (n = 4; selective = 1, and indicated = 3), universities (n = 3; universal = 2, and indicated = 1) and via the media (n = 3; universal = 1, and indicated = 2). One selective study identified potential participants through court documents.
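
The counts reported in the two paragraphs above can be cross-checked against one another. The following sketch simply transcribes those figures and verifies that the breakdowns add up to the 30 included studies; the variable and function names are illustrative and not part of the original analysis.

```python
# Counts transcribed from the text above.
TOTAL_STUDIES = 30

prevention_level = {"universal": 12, "selective": 3, "indicated": 15}

recruitment_source = {
    "secondary education": 19,
    "primary healthcare": 4,
    "universities": 3,
    "media": 3,
    "court documents": 1,
}

recruitment_by_level = {
    "secondary education": {"universal": 9, "selective": 1, "indicated": 9},
    "primary healthcare": {"selective": 1, "indicated": 3},
    "universities": {"universal": 2, "indicated": 1},
    "media": {"universal": 1, "indicated": 2},
    "court documents": {"selective": 1},
}


def check_totals() -> list[str]:
    """Return a description of every inconsistency found (empty if none)."""
    problems = []
    if sum(prevention_level.values()) != TOTAL_STUDIES:
        problems.append("prevention levels do not sum to the total")
    if sum(recruitment_source.values()) != TOTAL_STUDIES:
        problems.append("recruitment sources do not sum to the total")
    # Each source's per-level breakdown should match its headline count.
    for source, breakdown in recruitment_by_level.items():
        if sum(breakdown.values()) != recruitment_source[source]:
            problems.append(f"breakdown for {source} does not match its count")
    # Summing each level across sources should recover the level totals.
    for level, expected in prevention_level.items():
        observed = sum(b.get(level, 0) for b in recruitment_by_level.values())
        if observed != expected:
            problems.append(f"{level} studies across sources: {observed} != {expected}")
    return problems


print(check_totals())  # prints [] because the reported counts are consistent
```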

User characteristics

The majority of studies did not report participant characteristics that are known risk factors for mental health disorders (n = 16). These included living status, education of participants and/or parents, technology ownership, and rural-urban classification (Tables 4–6). Selective studies identified those with parents who were divorced, or had addictions or mental illness, and those at risk of depression. Half of the studies did not report information about participants’ ethnicity (n = 15). Those that did were inconsistent in how this was measured, with seven simply identifying ‘local’ and ‘non-local’ participants.

Universal interventions were aimed at young people aged 9 to 25 years (mean = 17.35, SD = 3.97), whilst selective interventions were aimed at those aged 11 to 25 years (mean = 17.47, SD = 3.75). Indicated interventions covered the broadest age range, from 7 to 25 years (mean = 16.15, SD = 3.27). The majority of interventions targeted participants aged 15–16 years (n = 22, mean = 16.73, SD = 3.59). No interventions were aimed at children aged ≤6 years. Universal and selective primary prevention interventions were also not available to children aged <9 years.

Usability and engagement

User experience was most often captured through questionnaires (n = 9), which ranged from a series of questions around helpfulness and acceptability to a single question asking whether the user was satisfied. Only one study reported using multiple validated measures: the System Usability Scale and the Usefulness, Satisfaction and Ease of use (USE) questionnaire32. Other methods included interviews (n = 3), focus groups (n = 2) and surveys (n = 1). Only six interventions reported on user involvement in the development of interventions. This included collaborative working on the content and design of the intervention with young people and other users, involvement in usability testing, and engagement of young people with lived experience to give feedback on materials.

Only eight studies identified completion criteria. Some used automated methods, such as the number of logins, to assess engagement (n = 7). Others used self-report (n = 2) or relied on attendance (e.g. interventions delivered within schools). Because most studies did not report how much of an intervention needed to be completed, it was difficult to identify whether participants had been sufficiently engaged. Reasons provided for dropout were linked to setting (e.g. school absence) and technical issues (e.g. difficulties logging in). Other factors included older age, higher and lower levels of anxiety, speaking a language other than English, feeling better or too unwell, being busy, or responding badly to the intervention.

Implementation

Most universal studies (n = 11/12) recruited all those that chose to participate who were within the age range. Only one study screened participants using a clinical interview for depression within the general school population, for the purpose of excluding those in the clinical range and identifying changes within a clinical score. Universal studies were delivered at home (n = 7), within schools (n = 4), and a lab (n = 1). Selective studies targeted specific at-risk populations (e.g. children of divorced parents) and were all delivered within a home setting (n = 3). Six of the fifteen indicated studies used clinical assessments to identify participants and these interventions were delivered in school (n = 1), multiple settings (n = 3) and home (n = 2). Nine used self-reported assessments and were delivered within the home (n = 5), multiple settings (n = 3) and schools (n = 1).

All indicated (n = 15) and one selective study included participants with a score indicating mental health symptomology. Studies variously excluded those with a mental health diagnosis, low levels of comprehension, those currently in treatment, those with scores that were too high or low on mental health symptomology, those not exclusively attracted to the opposite sex, and those with current suicidality.

The barriers and facilitators to implementation differed between programmes delivered within schools, at home and across multiple settings. Within schools, completion was often challenged by absences. Relevance was a key issue across all settings: the stakeholders involved needed to understand the programme and its outcomes, and to feel that it was relevant to their experience. It was also necessary for the preventive DHI to be provided through an easily accessible and appropriate device. For instance, within schools it may be more difficult to implement app-based interventions because of restrictions on students’ smartphone use, and doing so may create inequalities for students without access to a smartphone. Technical issues challenged use across all settings; these were overcome when researchers provided support to those delivering the intervention (e.g. teachers). Much of the implementation data concerned the acceptability, usability and content of the programme, rather than challenges and opportunities in real-world dissemination. Consent was challenging in three studies: two in which parental consent was needed for those disclosing their sexuality, and another, delivered remotely, which noted that some participants did not realise they were engaged in a research study. Despite the potential for DHIs to be delivered without clinical oversight, only a small number of studies (n = 3) reported adverse events or potential negative impact in detail.

The research was funded by fellowships granted to researchers and by national health research grants. Funding was also received from accelerator programmes and charitable organisations. Overall, funding came mainly from research, private and health sources, with a small amount from business. Some studies received funding for developing the intervention and others for delivering the research, but in most cases it was unclear what the funding was provided for. Although the reports include statements that there were no conflicts of interest, the founders and developers of interventions were at times involved in the research.

Only six studies reported the cost of their intervention within the study. The current availability of interventions was difficult to assess, as many were hard to search for because they had names associated with other websites (e.g. ‘stressbusters’) or were not named within the research. Only 38% (n = 8/21) were publicly available. Of those accessible online, some were only available in certain countries (e.g. SPARX is currently not available in the United Kingdom) or specific languages (e.g. Kopstoring is only available in Dutch). Intervention costs were at times reported online (e.g. MoodGYM), but it was difficult to ascertain how to access these interventions as an individual because they were often country-specific or required a significant amount of personal information (e.g. e-Couch).

Discussion

DHIs offer the opportunity to provide children and young people with evidence-based interventions that prevent mental health disorders at an early stage. However, our scoping review suggests that DHIs are not yet meeting their potential and that the design and reporting of research does not generally support real-world implementation. Whilst they are being delivered within several settings, studies most frequently recruited from secondary schools, colleges or universities. Younger children, and those who do not engage in school or are often absent, are not being included within research designs. Other known mental health risk factors, such as socio-economic status and ethnicity, are not reported, and this limits the generalisability of the findings to populations where there is most need and potential benefit. This is particularly significant as ethnic minorities are less likely to be referred to or access formal mental health services33,34,35. Reporting participants’ demographic characteristics is essential to know whether research is engaging with seldom-reached groups (e.g., sexual and gender minorities), who are often at increased risk for mental health problems, including suicide36. Demographic information can also signal whether equality, diversity and inclusion strategies have been applied, and helps in understanding how results may generalise to different groups, thereby supporting adoption and implementation.

DHIs are designed mainly to deliver cognitive behavioural therapy (CBT) for indicated prevention to target depression and/or anxiety symptoms. This is unsurprising considering CBT is the recommended treatment for depression37 and anxiety38 in children and young people and the most studied intervention offline for anxiety and depression39. Fewer interventions aimed to improve general wellbeing, which suggests researchers may be more likely to develop interventions based on existing clinical guidelines despite the effectiveness of tackling general wellbeing in non-digital prevention interventions24.

Preventive DHIs can be flexible in how they are offered, whether in terms of when, where or how they are accessed. However, different settings may present unique challenges and opportunities that prevent individuals, or those delivering the intervention, from benefiting. Our review found that few studies reported on factors related to implementation, and this represents an important gap in understanding how DHIs are best adopted to support the prevention of mental health disorders in children and young people. Our findings suggest there are two key areas that must be addressed: a systemic understanding of how technical issues can be solved or supported, and ensuring that the programme content is relevant not only to children and young people but also to those supporting its use (e.g. parents and teachers). It is also important to consider the real-world contexts into which these DHIs might be implemented. For instance, when delivered remotely it is not possible to apply consistent eligibility criteria, and yet only three studies addressed adverse events or the negative impact of the DHI. Co-production of content, design and usability can increase the likelihood of successful implementation of DHIs, and RRI frameworks recommend user involvement in the design of new technologies. However, within this review, only 10 interventions reported on user experience and five interventions reported that users had been involved in the research. It is clear that there is a significant lack of consensus as to how the user experience should be captured or involvement reported.

Regarding sustainability, there is a need to design, develop and test interventions within an implementation framework; i.e. with the pipeline of adoption firmly sitting as the foundation of the work. Real-world accessibility of interventions was difficult to ascertain as many are only available in specific countries or languages and the costs of access are not always clear. While these DHIs hold great potential to be disseminated and used widely, our review indicates that too often software is not updated, and existing interventions do not take advantage of newer developments that have the potential to improve the predictive capacity of preventive interventions, such as sensors and wearables, machine learning or natural language processing methodologies40.

Another issue highlighted is that programme completion and reasons for dropout need to be addressed more clearly, as it is difficult to assess how many modules a user needs to complete, or how engaged a user needs to be, for an intervention to be considered complete. Outcomes are potentially affected by the type of technology used and the way in which data are collected. Many DHIs still rely on self-report, which is less objective and considered scientifically less rigorous, despite the potential for automated measurements. One study demonstrated high rates of follow-up (MEMO: 98% post-intervention and 92.5% at 12 months) that were attributed to strategies the authors developed to reduce dropout41. Another (MoodGYM, delivered nationally) had extremely low rates of engagement (8.5% logged into the first module)42. However, this contrast may reflect differences in how engagement was measured and in the method of delivery: the latter study logged access to the website automatically, whilst the former relied on self-report. Whether either can accurately capture engagement remains to be seen.

Strengths and limitations

This scoping review examined 30 studies representing 21 different DHIs for the prevention of mental health disorders amongst children and young people delivered in various settings. Our results provide an updated summary of factors related to the adoption of interventions into real-world contexts, reporting on stakeholder involvement, engagement and users’ experience. We have also expanded on past reviews by identifying the potential for these prevention interventions to be disseminated and used widely. This review has identified tools currently used to address user experience and engagement within these interventions and highlighted the gaps in the design and reporting of research (e.g., reporting risk factors, gaps in age range covered by interventions). We have mapped barriers and facilitators to real-world implementation including differences between research and practice (e.g., exclusion criteria, implementation, funding and costs). The findings of this review highlight that there is more work needed to address better research design and reporting of development, evaluation and implementation of DHIs for the prevention of mental health disorders in children and young people if their potential is to be fully realised.

The strength of this scoping review is that it looked broadly at mental health prevention across multiple settings, including user experience and involvement, implementation and the real-world uptake of interventions. However, it did not include grey literature, and there may have been co-design and involvement within studies that went unreported in publications. An agreed DHI taxonomy would be beneficial to identify common core components between interventions. Their clinical, technical and evaluative mechanisms are reported in several ways, which limits the clarity of this review.

Recommendations

More research is needed examining factors related to the successful adoption of preventive DHIs for children and young people within mental health and how they can be encouraged; addressing risk factors, ethical issues including consent processes for remote delivery, and younger age groups. We recommend that researchers report the amount of their programme that must be completed (minimum dose) and identify the availability and accessibility metrics of their intervention including costs (e.g. through an economic evaluation). Real-world implementation is imperative to consider8, and more research should address this within different settings and technologies. DHI research would also benefit from an agreed taxonomy for reporting the clinical, technical and evaluative components. The impact of these interventions, including negative reactions or the exclusion of certain populations, must also be addressed in future research. Finally, we recommend that funding is provided that ensures the sustainability of research-based DHIs from development through to real-world dissemination.
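
To illustrate what reporting a minimum dose could look like in practice, the sketch below applies a pre-specified completion criterion to automatically logged usage data. It is a hypothetical example only: the `UsageLog` structure, the function names and the threshold values are assumptions made for illustration and are not drawn from any study included in this review.

```python
from dataclasses import dataclass


@dataclass
class UsageLog:
    """Hypothetical automated usage record for one participant."""
    participant_id: str
    modules_completed: int


def met_minimum_dose(log: UsageLog, min_modules: int) -> bool:
    """True if the participant completed at least the pre-specified number of modules."""
    if min_modules < 1:
        raise ValueError("min_modules must be at least 1")
    return log.modules_completed >= min_modules


def completion_rate(logs: list[UsageLog], min_modules: int) -> float:
    """Proportion of participants meeting the minimum dose (0.0 for an empty sample)."""
    if not logs:
        return 0.0
    completers = sum(met_minimum_dose(log, min_modules) for log in logs)
    return completers / len(logs)


# Illustrative data: four participants, with a minimum dose set at 3 modules.
example_logs = [
    UsageLog("p1", 5),
    UsageLog("p2", 3),
    UsageLog("p3", 1),
    UsageLog("p4", 0),
]
print(completion_rate(example_logs, min_modules=3))  # prints 0.5
```

Stating the threshold (here, `min_modules`) alongside the resulting completion rate would allow readers to compare engagement across studies that define completion differently.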

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

NHS Digital. Mental Health of Children and Young People in England. Available at: https://digital.nhs.uk/data-and-information/publications/statistical/mental-health-of-children-and-young-people-in-england/2017/2017 (2018).

Kessler, R. C. et al. Lifetime prevalence and age-of-onset distributions of mental disorders in the World Health Organization’s World Mental Health Survey Initiative. World Psychiatry 6, 168–76 (2007).

Public Health England. Universal approaches to improving children and young people’s mental health and wellbeing. Report of the findings of a Special Interest Group. Available at: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/842176/SIG_report.pdf (2019).

National Public Health Partnership. Preventing Chronic Disease: A strategic framework. 1–55. Available at: https://commed.vcu.edu/Chronic_Disease/2015/NPHPProject.pdf (2001).

Gordon, R. An operational classification of disease prevention. Public Health Rep. 98, 107–109 (1983).

Department of Health and Social Care. Advancing our health: prevention in the 2020s. Available at: https://www.gov.uk/government/consultations/advancing-our-health-prevention-in-the-2020s (2019).

Hollis, C. et al. Annual research review: digital health interventions for children and young people with mental health problems—a systematic and meta-review. J. Child Psychol. Psychiatry 58, 474–503 (2017).

Fleming, T. et al. Beyond the trial: Systematic review of real-world uptake and engagement with digital self-help interventions for depression, low mood, or anxiety. J. Med. Internet Res. 20, e199 (2018).

Blandford, A. et al. Seven lessons for interdisciplinary research on interactive digital health interventions. Digit. Health 4, 2055207618770325 (2018).

Mohr, D. C., Lyon, A. R., Lattie, E. G., Reddy, M. & Schueller, S. M. Accelerating digital mental health research from early design and creation to successful implementation and sustainment. J. Med. Internet Res. 19, 153 (2017).

Boydell, K. M. et al. Using technology to deliver mental health services to children and youth: a scoping review. J. Can. Acad. Child Adolesc. Psychiatry 23, 87–99 (2014).

Baumel, A., Muench, F., Edan, S. & Kane, J. M. Objective user engagement with mental health apps: systematic search and panel-based usage analysis. J. Med. Internet Res. 21, e14567 (2019).

Välimäki, M., Anttila, K., Anttila, M. & Lahti, M. Web-based interventions supporting adolescents and young people with depressive symptoms: systematic review and meta-analysis. JMIR mHealth uHealth 5, e180 (2017).

Seko, Y., Kidd, S., Wiljer, D. & McKenzie, K. Youth mental health interventions via mobile phones: a scoping review. Cyberpsychol Behav Soc Netw 17, 591–602 (2014).

Grist, R., Porter, J. & Stallard, P. Mental health mobile apps for preadolescents and adolescents: a systematic review. J. Med. Internet Res. 19, e176 (2017).

Bergin, A. & Davies, E. B. Technology matters: mental health apps—separating the wheat from the chaff. Child Adolesc. Ment. Health 25, 51–53 (2020).

Harrer, M. et al. Internet interventions for mental health in university students: A systematic review and meta-analysis. Int. J. Methods Psychiatr. Res. 28, 1–18 (2019).

Rith-Najarian, L. R., Boustani, M. M. & Chorpita, B. F. A systematic review of prevention programs targeting depression, anxiety, and stress in university students. J. Affect. Disord. 257, 568–584 (2019).

Rooksby, M., Elouafkaoui, P., Humphris, G., Clarkson, J. & Freeman, R. Internet-assisted delivery of cognitive behavioural therapy (CBT) for childhood anxiety: Systematic review and meta-analysis. J. Anxiety Disord. 29, 83–92 (2015).

Pennant, M. E. et al. Computerised therapies for anxiety and depression in children and young people: a systematic review and meta-analysis. Behav. Res. Ther. 67, 1–18 (2015).

Ebert, D. D. et al. Internet and computer-based cognitive behavioral therapy for anxiety and depression in youth: a meta-analysis of randomized controlled outcome trials. PLoS ONE 10, e0119895 (2015).

Rice, S. M. et al. Online and social networking interventions for the treatment of depression in young people: a systematic review. JMIR 16, e206 (2014).

Reyes-Portillo, J. A. et al. Web-based interventions for youth internalizing problems: a systematic review. J. Am. Acad. Child Adolesc. Psychiatry 53, 1254–1270.e5 (2014).

Caldwell, D. M. et al. School-based interventions to prevent anxiety and depression in children and young people: a systematic review and network meta-analysis. Lancet Psychiatry 0366, 1–10 (2019).

Stasiak, K. et al. Computer-based and online therapy for depression and anxiety in children and adolescents. J. Child Adolesc. Psychopharmacol. 26, 235–245 (2016).

Jirotka, M., Grimpe, B., Stahl, B., Eden, G. & Hartswood, M. Responsible research and innovation in the digital age. Commun. ACM. https://doi.org/10.1145/3064940 (2016).

Munn, Z. et al. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med. Res. Methodol. 18, 143 (2018).

Arksey, H. & O’Malley, L. Scoping studies: towards a methodological framework. Int. J. Soc. Res. Methodol. 8, 19–32 (2005).

Moher, D., Liberati, A., Tetzlaff, J. & Altman, D. G. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ 62, 1006–1012 (2009).

Clarke, A. M., Kuosmanen, T. & Barry, M. M. A systematic review of online youth mental health promotion and prevention interventions. J. Youth Adolesc. 44, 90–113 (2014).

Peters, M. D. J. et al. Scoping reviews. JBI Reviewer’s Manual. https://doi.org/10.46658/JBIMES-20-12 (2019).

Lattie, E. G. et al. Teens engaged in collaborative health: the feasibility and acceptability of an online skill-building intervention for adolescents at risk for depression. Internet Interv. 8, 15–26 (2017).

Duncan, C., Rayment, B., Kenrick, J. & Cooper, M. Counselling for young people and young adults in the voluntary and community sector: an overview of the demographic profile of clients and outcomes. Psychol. Psychother. Theory, Res. Pract. 93, 36–53 (2020).

Yeh, M. et al. Referral sources, diagnoses, and service types of youth in public outpatient mental health care: a focus on ethnic minorities. J. Behav. Health Serv. Res. 29, 45–60 (2002).

Edbrooke-Childs, J. & Patalay, P. Ethnic differences in referral routes to youth mental health services. J. Am. Acad. Child Adolesc. Psychiatry 58, 368–375.e1 (2019).

Bostwick, W. B. et al. Mental health and suicidality among racially/ethnically diverse sexual minority youths. Am. J. Public Health 104, 1129–1136 (2014).

National Institute for Health and Care Excellence. Depression in children and young people: identification and management (NICE Guideline 134). Available at: https://www.nice.org.uk/guidance/ng134 (2019).

NICE. Social Anxiety Disorder: The NICE Guidelines on Recognition, Assessment and Treatment. (2013). Available at: http://www.nice.org.uk/guidance/cg159/resources/cg159-social-anxiety-disorder-full-guideline3.

Callahan, P., Liu, P., Purcell, R., Parker, A. G. & Hetrick, S. E. Evidence map of prevention and treatment interventions for depression in young people. Depress Res. Treat. 2012, 14–16 (2012).

Naslund, J. A. et al. Digital innovations for global mental health: opportunities for data science, task sharing, and early intervention. Curr. Treat. Options Psychiatry 6, 337–351 (2019).

Whittaker, R. et al. MEMO: an mHealth intervention to prevent the onset of depression in adolescents: a double-blind, randomised, placebo-controlled trial. J. Child Psychol. Psychiatry Allied Discip. 58, 1014–1022 (2017).

Lillevoll, K. R., Vangberg, H. C. B., Griffiths, K. M., Waterloo, K. & Eisemann, M. R. Uptake and adherence of a self-directed internet-based mental health intervention with tailored e-mail reminders in senior high schools in Norway. BMC Psychiatry 14, 14 (2014).

Perry, Y. et al. Preventing depression in final year secondary students: School-based randomized controlled trial. J. Med. Internet Res. 19, e369 (2017).

Kuosmanen, T., Fleming, T. M. & Barry, M. M. The implementation of SPARX-R computerized mental health program in alternative education: Exploring the factors contributing to engagement and dropout. Child. Youth Serv. Rev. 84, 176–184 (2018).

Calear, A. L., Christensen, H., Brewer, J., Mackinnon, A. & Griffiths, K. M. A pilot randomized controlled trial of the e-couch anxiety and worry program in schools. Internet Interv. 6, 1–5 (2016).

Calear, A. L. et al. Cluster randomised controlled trial of the e-couch Anxiety and Worry program in schools. J. Affect. Disord. 196, 210–217 (2016).

Burckhardt, R. et al. A web-based adolescent positive psychology program in schools: Randomized controlled trial. J. Med. Internet Res. 17, e187 (2015).

Bannink, R. et al. Effectiveness of a web-based tailored intervention (E-health4Uth) and consultation to promote adolescents' health: Randomized controlled trial. J. Med. Internet Res. 16, e143 (2014).

Woolderink, M. et al. An online health prevention intervention for youth with addicted or mentally ill parents: Experiences and perspectives of participants and providers from a randomized controlled trial. J. Med. Internet Res. 17, e274 (2015).

Boring, J. L., Sandler, I. N., Tein, J. Y., Horan, J. J. & Velez, C. E. Children of divorce-coping with divorce: a randomized control trial of an online prevention program for youth experiencing parental divorce. J. Consult. Clin. Psychol. 83, 999–1005 (2015).

Sethi, S. Treating youth depression and anxiety: a randomised controlled trial examining the efficacy of computerised versus face-to-face cognitive behaviour therapy. Aust. Psychol. 48, 249–257 (2013).

Robinson, J. et al. Can an Internet-based intervention reduce suicidal ideation, depression and hopelessness among secondary school students: Results from a pilot study. Early Interv. Psychiatry 10, 28–35 (2016).

Robinson, J. et al. The safety and acceptability of delivering an online intervention to secondary students at risk of suicide: findings from a pilot study. Early Interv. Psychiatry 9, 498–506 (2015).

Hetrick, S. E. et al. Internet-based cognitive behavioural therapy for young people with suicide-related behaviour (Reframe-IT): a randomised controlled trial. Evid. Based Ment. Health 20, 76–85 (2017).

Stasiak, K., Hatcher, S., Frampton, C. & Merry, S. N. A pilot double blind randomized placebo controlled trial of a prototype computer-based cognitive behavioural therapy program for adolescents with symptoms of depression. Behav. Cogn. Psychother. 42, 385–401 (2014).

Bidargaddi, N. et al. Efficacy of a web-based guided recommendation service for a curated list of readily available mental health and well-being mobile apps for young people: randomized controlled trial. J. Med. Internet Res. 19, e141 (2017).

Taylor-Rodgers, E. & Batterham, P. J. Evaluation of an online psychoeducation intervention to promote mental health help seeking attitudes and intentions among young adults: randomised controlled trial. J. Affect. Disord. 168, 65–71 (2014).

Levin, M. E., Pistorello, J., Seeley, J. R. & Hayes, S. C. Feasibility of a prototype web-based acceptance and commitment therapy prevention program for college students. J. Am. Coll. Health 62, 20–30 (2014).

Rodriguez, A. et al. A VR-based serious game for studying emotional regulation in adolescents. IEEE Comput. Graph. Appl. 35, 65–73 (2015).

Poppelaars, M. et al. A randomized controlled trial comparing two cognitive-behavioral programs for adolescent girls with subclinical depression: A school-based program (Op Volle Kracht) and a computerized program (SPARX). Behav. Res. Ther. 80, 33–42 (2016).

Lucassen, M. F. G., Merry, S. N., Hatcher, S. & Frampton, C. M. A. Rainbow SPARX: a novel approach to addressing depression in sexual minority youth. Cogn. Behav. Pract. 22, 203–216 (2015).

Smith, P. et al. Computerised CBT for depressed adolescents: Randomised controlled trial. Behav. Res. Ther. 73, 104–110 (2015).

March, S., Spence, S. H., Donovan, C. L. & Kenardy, J. A. Large-scale dissemination of internet-based cognitive behavioral therapy for youth anxiety: Feasibility and acceptability study. J. Med. Internet Res. 20, (2018).

Eisen, J. C. et al. Pilot study of implementation of an Internet-based depression prevention intervention (CATCH-IT) for adolescents in 12 US primary care practices. Prim. Care Companion CNS Disord. 15, e6 (2013).

Gladstone, T. et al. Understanding adolescent response to a technology-based depression prevention program. J. Clin. Child Adolesc. Psychol. 43, 102–114 (2014).

Ip, P. et al. Effectiveness of a culturally attuned Internet-based depression prevention program for Chinese adolescents: a randomized controlled trial. Depress Anxiety 33, 1123–1131 (2016).

Kramer, J., Conijn, B., Oijevaar, P. & Riper, H. Effectiveness of a web-based solution-focused brief chat treatment for depressed adolescents and young adults: Randomized controlled trial. J. Med. Internet Res. 16, e141 (2014).

Rickhi, B. et al. Evaluation of a spirituality informed e-mental health tool as an intervention for major depressive disorder in adolescents and young adults—a randomized controlled pilot trial. BMC Complement. Altern. Med. 15, 1–14 (2015).

Sportel, B. E., de Hullu, E., de Jong, P. J. & Nauta, M. H. Cognitive bias modification versus CBT in reducing adolescent social anxiety: a randomized controlled trial. PLoS ONE 8, e64355 (2013).

Acknowledgements

A.B., E.B.D., E.P.V., J.M., D.D., R.M. and C.H. receive financial support from the NIHR MindTech MedTech Co-operative and the NIHR Nottingham Biomedical Research Centre. This work has been funded by the National Institute for Health Research. The views represented are the views of the authors alone and do not necessarily represent the views of the Department of Health and Social Care in England, NHS, or the National Institute for Health Research. S.H. is funded by an Auckland Medical Research Foundation Douglas Goodfellow Repatriation Fellowship, A Better Start National Science Challenge (UOAX190), Cure Kids Research Fellow. J.R. is supported by a National Health and Medical Council Research Career Development Fellowship ID 1142348. S.M. is supported by Cure Kids, a charitable organisation in New Zealand. J.T. receives unrelated research support from Otsuka. E.S-B’s contribution was, in part, supported by funds from the NIHR Maudsley Biomedical Research Centre and e-Nurture Network (ES/5004467/1). T.F. accepted an honorarium from Shire/Takeda to discuss transition for young adults with ADHD at the Nurses ADHD Forum and is a research consultant to Place2Be.

Author information

Contributions

Conception/design—A.B., E.B.D., E.P.V., C.H., data collection—A.B., E.B.D., E.P.V., M.K., data analysis and interpretation—all authors, paper preparation—A.B., C.H., final approval—all authors.

Ethics declarations

Competing interests

J.R., S.H. and S.M. were contributors to studies included in the review. D.D. reports grants, personal fees, non-financial support and other from Shire/Takeda, personal fees and non-financial support from Eli Lilly, personal fees and non-financial support from Medice, non-financial support from Qb Tech, other from Hachette, other from New Forest Parent Training Programme, outside the submitted work. All other authors have declared that they have no competing or potential conflicts of interest.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bergin, A.D., Vallejos, E.P., Davies, E.B. et al. Preventive digital mental health interventions for children and young people: a review of the design and reporting of research. npj Digit. Med. 3, 133 (2020). https://doi.org/10.1038/s41746-020-00339-7
