Abstract

We investigated how intelligent virtual assistants (IVAs), including Amazon’s Alexa, Apple’s Siri, Google Assistant, Microsoft’s Cortana, and Samsung’s Bixby, responded to addiction help-seeking queries. We recorded whether IVAs provided a singular response and, if so, whether they linked users to treatment or treatment referral services. Only 4 of the 70 help-seeking queries presented to the five IVAs returned singular responses, with the remainder prompting confusion (e.g., “did I say something wrong?”). When asked “help me quit drugs,” Alexa responded with a definition of the word drugs. “Help me quit…smoking” or “…tobacco” on Google Assistant returned Dr. QuitNow (a cessation app), while on Siri “help me quit pot” promoted a marijuana retailer. IVAs should be revised to promote free, remote, federally sponsored addiction services, such as SAMHSA’s 1-800-662-HELP helpline. This would benefit millions of current IVA users, and more to come as IVAs displace existing information-seeking engines.

Introduction

Intelligent virtual assistants (IVAs), such as Apple’s Siri, are transforming how the public seeks and finds information.1 IVAs are interfaces that let users interact with smart devices through natural spoken language and that provide a singular response to a query, much as a person would in conversation.2 In contrast, traditional search engines return millions of relevant results for a query, relying on the user to collate them and reach a conclusion. For example, querying “what’s the weather?” on a search engine returns links to multiple weather services, whereas an IVA is designed to return a singular result: the current local forecast.

Nearly half of US adults (46%) already use IVAs,3 and companies have invested substantially in their proliferation. Yet public health has done little to harness or study these technologies.4,5 One study4 found that smartphone IVAs inconsistently recognized suicide-related queries, with responses failing to direct users to the National Suicide Prevention Lifeline. Another study6 found that when asked a variety of health-related queries (both queries originated by study participants and standard queries about medications and emergency scenarios developed by researchers), IVAs directed users to take actions that could result in harm or death.

One of America’s most pressing public health emergencies is substance use disorder. Over 47,000 people died from an opioid overdose in 2017.7 Given the stigmatized nature of substance use disorder, IVAs could confidentially direct those in need to remote substance use resources. We performed an analysis of IVA responses to addiction help-seeking queries related to drugs and alcohol to assess what treatment resources, if any, are being promoted.

Results

Responses to help-seeking queries

When presented with the query “help me quit drugs”, only Amazon Alexa provided a singular response, defining the term drugs: “A drug is any substance that when inhaled, injected, smoked, consumed… causes a physiological change in the body…” No other IVA provided a singular response, including Apple Siri, Google Assistant, Microsoft Cortana, and Samsung Bixby. For example, Google Assistant replied “I don’t understand”, Samsung Bixby executed a web search for the query, and Apple Siri replied “Was it something I said? I’ll go away if you say ‘goodbye’”.

The results were similar regardless of the substance cited in our queries (Table 1). No query about alcohol or opioids returned a singular result on any IVA; instead, the IVAs responded with confusion (e.g., Microsoft Cortana: “I’m sorry. I couldn’t find that skill”). For marijuana, all IVAs failed to return a singular result except for the query “help me quit pot”, to which Apple Siri replied “one possibility nearby is CalMed 420. Want to try that one?”, directing users to a local marijuana retailer.8 Only 2 of the 20 queries for tobacco returned singular results, with Google Assistant linking users to Dr. QuitNow (a mobile cessation app) for “help me quit…smoking” and “help me quit…tobacco”.

Discussion

Among 70 addiction help-seeking queries presented to the five leading IVAs, only four queries elicited singular responses (one promoting a marijuana retailer) and only two queries linked to remote treatment or treatment referral programs. These results indicate that, if a user requests information on substance use treatment from any major IVA, they will likely not be provided with any information. Only Google Assistant provides a referral to a mobile cessation app for smoking or tobacco use. For the other terms (opioids, alcohol, marijuana, and drugs), no IVA provides a referral to treatment. Indeed, Siri’s referral to a marijuana retailer demonstrates that IVAs could be detrimental rather than helpful. Altogether, the IVAs’ responses to substance use help-seeking requests are a missed opportunity for promoting referrals to substance use treatment.

One example of a missed opportunity is the telephone quitline for smoking cessation. Telephone counseling has been extensively tested and is recommended by the Clinical Practice Guideline.9 A network of state quitlines is reachable through a national portal, the toll-free number 1-800-QUIT-NOW, which has been promoted since 2004.10 Moreover, since 2012 the U.S. Centers for Disease Control and Prevention (CDC) has run an aggressive and sustained nationwide media campaign encouraging smokers to call this number for help.11 If an IVA responded with “Do you want to call 1-800-QUIT-NOW?” when prompted with “help me quit smoking,” the user could connect with a trained counselor. Promoting free government resources such as the quitline on IVAs is a logical extension of search engines’ recent adoption of OneBox strategies that prioritize free government resources in response to health queries. The missed opportunity is equally clear for other substances (e.g., 1-800-662-HELP for other addiction treatment referrals, including opioids).12 How, then, do these gaps in health promotion persist two decades into the digital revolution,13 and can they be closed?
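As a purely hypothetical illustration of the behavior proposed above, the Python sketch below maps a “help me quit…” utterance to the 1-800-QUIT-NOW quitline for tobacco terms and to SAMHSA’s 1-800-662-HELP for the other substances queried in this study. It assumes simple keyword matching on the transcribed utterance; commercial IVAs use their own intent-recognition pipelines, and `helpline_response` is an invented name, not any vendor’s API. Routing “vaping” to the quitline follows this study’s grouping of vaping under tobacco and is itself an assumption.

```python
# Hypothetical sketch of a helpline override for help-seeking queries.
# Keyword matching is an assumption for illustration; it is not how any
# commercial IVA is known to work.
import re

# Tobacco terms route to the national quitline portal; all other
# substances queried in this study route to SAMHSA's referral helpline.
TOBACCO_TERMS = {"smoking", "cigarettes", "tobacco", "vaping"}
OTHER_TERMS = {"drugs", "alcohol", "drinking", "marijuana", "pot", "weed",
               "fentanyl", "heroin", "opioids", "painkillers"}

QUITLINE = "1-800-QUIT-NOW"
SAMHSA_HELPLINE = "1-800-662-HELP"

# "help me quit <one word>", optionally ending in . ! or ?
PATTERN = re.compile(r"^\s*help me quit\s+(\w+)\s*[.!?]?\s*$", re.IGNORECASE)


def helpline_response(utterance):
    """Return a singular call-offer response, or None when the utterance
    is not a recognized addiction help-seeking query."""
    match = PATTERN.match(utterance)
    if not match:
        return None
    term = match.group(1).lower()
    if term in TOBACCO_TERMS:
        number = QUITLINE
    elif term in OTHER_TERMS:
        number = SAMHSA_HELPLINE
    else:
        return None
    return f"Do you want to call {number}?"


if __name__ == "__main__":
    for query in ["Help me quit smoking", "help me quit pot", "what's the weather?"]:
        print(query, "->", helpline_response(query))
```

Returning `None` for anything unrecognized leaves the assistant’s default behavior untouched, so an override of this kind would intervene only on explicit help-seeking requests.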

First, health promotion may fall outside the profit-driven mission of technology companies. Nevertheless, these companies are already involved in health promotion because of how the public uses their services. Health agencies could treat technology companies as another channel for disseminating their services. Given the public’s use of IVAs now and in the years to come,2 this may yield a larger return on investment for health agencies than their traditional paid campaigns.

Second, overriding algorithms represents a potential liability for technology companies, which lack health subject matter expertise. These companies may therefore be reluctant to steer users to specific services, for fear of public backlash that might shrink their user base or of legal action when users are referred to dubious services selected by non-health experts. The liabilities that hinder action can be reduced by providing subject matter expertise to technology companies, publicly celebrating their cooperation with public health through social good campaigns aimed at their users, and using regulation to limit potential litigation against companies that promote the best available evidence-based resources.

Third, public health is doing a poor job of prioritizing technology companies as partners. Public health can be more proactive and invest in identifying gaps between consumers’ help-seeking and the results that information retrieval systems return. The return on investment in this area of research is high, given that one study of IVAs has already changed the information consumers receive on several IVA-enabled devices.4 A larger investment in applied research could yield even greater returns.

Our study was limited to a few substance help-seeking queries that may not reflect common queries, but the consistency of the negative results suggests that omitted queries might yield similar findings. Because our study was restricted to San Diego, CA, the singular response linking to a local marijuana retailer may not be replicated in other settings. Still, our results are a strong call to action for this new area of research.

To ensure that IVAs promote the best free, public addiction services, research addressing the systemic shortcomings we discovered can begin now. Such efforts would galvanize collaboration between technology companies and public health professionals to give the public the information they need to take charge of their health.

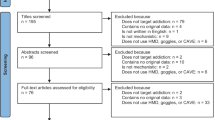

Methods

Procedure for help-seeking queries

We investigated five IVAs representing 99% of the smartphone and stand-alone IVA marketplace: Amazon Alexa (Echo Dot home device), Apple Siri (iPhone 7 smartphone), Google Assistant (ZTE Blade Spark (Z971) smartphone), Microsoft Cortana (Samsung Galaxy S8 smartphone), and Samsung Bixby (Samsung Galaxy S9 smartphone).14 The software for each device was up-to-date at the time of the study (January 2019) and the language was set to US English.

We mimicked addiction help-seeking queries on these IVAs in January 2019 in San Diego, CA. The common stem for all prompts was “help me quit…”, completed with either a generic term (“drugs”) or a substance-specific term (e.g., “drinking”) for the most used substances: alcohol (140.6M users), tobacco (48.7M), marijuana (26.0M), and opioids (11.4M).15 This yielded 14 query terms: “drugs” (for drugs); “alcohol” and “drinking” (for alcohol); “marijuana,” “pot,” and “weed” (for marijuana); “fentanyl,” “heroin,” “opioids,” and “painkillers” (for opioids); and “cigarettes,” “vaping,” “smoking,” and “tobacco” (for tobacco). Each term was presented on all five devices, for a total of 70 queries.
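The query design above is a cross product of one stem, 14 terms, and five devices. A minimal Python sketch of that enumeration follows; the grouping dictionary and the `build_queries` helper are illustrative names, not part of the study materials.

```python
# Illustrative reconstruction of the query design: one stem, 14 terms
# grouped by substance, each presented to 5 IVAs. Names are hypothetical.
from itertools import product

STEM = "help me quit"

# Terms as listed in the Methods, grouped by substance category.
TERMS_BY_SUBSTANCE = {
    "drugs": ["drugs"],
    "alcohol": ["alcohol", "drinking"],
    "marijuana": ["marijuana", "pot", "weed"],
    "opioids": ["fentanyl", "heroin", "opioids", "painkillers"],
    "tobacco": ["cigarettes", "vaping", "smoking", "tobacco"],
}

IVAS = ["Amazon Alexa", "Apple Siri", "Google Assistant",
        "Microsoft Cortana", "Samsung Bixby"]


def build_queries():
    """Return (iva, substance, query_text) tuples for every IVA x term pair."""
    terms = [(substance, term)
             for substance, group in TERMS_BY_SUBSTANCE.items()
             for term in group]
    return [(iva, substance, f"{STEM} {term}")
            for iva, (substance, term) in product(IVAS, terms)]


if __name__ == "__main__":
    queries = build_queries()
    print(len(queries))  # → 70 (14 terms x 5 IVAs)
```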

Recent research indicates that IVAs may struggle to comprehend medical terms.16 Consequently, each addiction help-seeking query was repeated by two different authors, each of whom independently recorded the response verbatim. The queries were spoken live (i.e., the voices were not recorded and then replayed) by two native English speakers. The responses recorded by the two authors were consistent for every query. All IVAs with graphical user interfaces (Alexa is the only IVA without one) transcribe the spoken query into text displayed on the screen, and the authors used this transcription to confirm that each query was dictated correctly. We assessed responses along two dimensions: (A) Did IVAs provide a singular response to addiction help-seeking queries (Yes/No)? (B) Among the singular responses from IVAs, what proportion linked to an available treatment or treatment referral service (Yes/No)? The former assesses whether IVAs recognize the query; the latter assesses whether IVAs operate as they ideally would, by providing a referral to treatment.17 The proportions of singular responses and of treatment or treatment referral responses were calculated across IVAs and substances.
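To make the two proportions concrete, the sketch below codes each query on dimensions (A) and (B) and tabulates both by IVA or by substance. It is a minimal Python sketch, assuming the codings are exactly those described in the Results (four singular responses, two of which linked to a cessation app); `code_responses` and `summarize` are hypothetical helper names, not the authors’ analysis code. Following dimension (B), the referral proportion uses singular responses as its denominator and is left undefined (`None`) for groups with no singular response.

```python
# Minimal sketch of the two-dimension coding scheme.
# ASSUMPTION: codings are transcribed from the Results text; helper names
# are hypothetical and not the authors' analysis code.
from collections import defaultdict, namedtuple
from fractions import Fraction

TERMS_BY_SUBSTANCE = {
    "drugs": ["drugs"],
    "alcohol": ["alcohol", "drinking"],
    "marijuana": ["marijuana", "pot", "weed"],
    "opioids": ["fentanyl", "heroin", "opioids", "painkillers"],
    "tobacco": ["cigarettes", "vaping", "smoking", "tobacco"],
}
IVAS = ["Amazon Alexa", "Apple Siri", "Google Assistant",
        "Microsoft Cortana", "Samsung Bixby"]

# (IVA, term) pairs that returned a singular response, mapped to whether
# that response linked to treatment or a treatment referral service.
SINGULAR = {
    ("Amazon Alexa", "drugs"): False,       # definition of the word "drug"
    ("Apple Siri", "pot"): False,           # local marijuana retailer
    ("Google Assistant", "smoking"): True,  # Dr. QuitNow cessation app
    ("Google Assistant", "tobacco"): True,  # Dr. QuitNow cessation app
}

Coded = namedtuple("Coded", "iva substance term singular referral")


def code_responses():
    """Return one coded record per IVA x term query (70 in total)."""
    records = []
    for iva in IVAS:
        for substance, terms in TERMS_BY_SUBSTANCE.items():
            for term in terms:
                singular = (iva, term) in SINGULAR           # dimension (A)
                referral = SINGULAR.get((iva, term), False)  # dimension (B)
                records.append(Coded(iva, substance, term, singular, referral))
    return records


def summarize(records, field):
    """Group records by `field` ("iva" or "substance") and return
    {group: (share singular among all queries,
             share with referral among singular responses, or None)}."""
    counts = defaultdict(lambda: [0, 0, 0])  # [queries, singular, referral]
    for rec in records:
        tally = counts[getattr(rec, field)]
        tally[0] += 1
        tally[1] += int(rec.singular)
        tally[2] += int(rec.referral)
    summary = {}
    for group, (n, singular, referral) in counts.items():
        share_singular = Fraction(singular, n)
        # Dimension (B) is undefined when a group has no singular responses.
        share_referral = Fraction(referral, singular) if singular else None
        summary[group] = (share_singular, share_referral)
    return summary


if __name__ == "__main__":
    records = code_responses()
    for substance, (a, b) in summarize(records, "substance").items():
        print(substance, a, b)
```

Grouping by substance reproduces the Results: 2 of 20 tobacco queries returned a singular response, both with a referral, while 1 of 15 marijuana queries did, with none linking to treatment.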

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The data used in the study are public in nature, describing IVA responses to spoken queries. A listing of queries, responses, and our final codings are available upon request.

References

1. Miner, A. S., Milstein, A. & Hancock, J. T. Talking to machines about personal mental health problems. JAMA 318, 1217–1218 (2017).

2. McTear, M., Callejas, Z. & Griol, D. The Conversational Interface: Talking to Smart Devices (Springer, 2016).

3. Pew Research Center. Nearly half of Americans use digital voice assistants, mostly on their smartphones. http://www.pewresearch.org/fact-tank/2017/12/12/nearly-half-of-americans-use-digital-voice-assistants-mostly-on-their-smartphones/. Accessed 2 Feb 2019.

4. Miner, A. S. et al. Smartphone-based conversational agents and responses to questions about mental health, interpersonal violence, and physical health. JAMA Intern. Med. 176, 619–625 (2016).

5. Boyd, M. & Wilson, N. Just ask Siri? A pilot study comparing smartphone digital assistants and laptop Google searches for smoking cessation advice. PLOS ONE 13, e0194811 (2018).

6. Bickmore, T. W. et al. Patient and consumer safety risks when using conversational assistants for medical information: an observational study of Siri, Alexa, and Google Assistant. J. Med. Internet Res. 20, e11510 (2018).

7. US Department of Health and Human Services. About the Epidemic. https://www.hhs.gov/opioids/about-the-epidemic/index.html. Accessed 20 Sep 2019.

8. Caputi, T. L., Leas, E. C., Dredze, M. & Ayers, J. W. Online sales of marijuana: an unrecognized public health dilemma. Am. J. Prev. Med. 54, 719–721 (2018).

9. Fiore, M. C. et al. Treating tobacco use and dependence: 2008 update US Public Health Service Clinical Practice Guideline executive summary. Respir. Care 53, 1217–1222 (2008).

10. Anderson, C. M. & Zhu, S.-H. Tobacco quitlines: looking back and looking ahead. Tob. Control 16, i81–i86 (2007).

11. Ayers, J. W., Althouse, B. M. & Emery, S. Changes in Internet searches associated with the “Tips from Former Smokers” campaign. Am. J. Prev. Med. 48, e27–e29 (2015).

12. Ayers, J. W., Nobles, A. L. & Dredze, M. Media trends for the Substance Abuse and Mental Health Services Administration 800-662-HELP addiction treatment referral services after a celebrity overdose. JAMA Intern. Med. 179, 441–442 (2019).

13. Ayers, J. W., Althouse, B. M. & Dredze, M. Could behavioral medicine lead the web data revolution? JAMA 311, 1399–1400 (2014).

14. Voicebot. Smart Speaker Consumer Adoption Report 2018. https://voicebot.ai/download-smart-speaker-consumer-adoption-report-2018/. Accessed 20 Sep 2019.

15. US Department of Health and Human Services. 2017 NSDUH Annual National Report. CBHSQ. https://www.samhsa.gov/data/report/2017-nsduh-annual-national-report. Accessed 20 Sep 2019.

16. Palanica, A., Thommandram, A., Lee, A., Li, M. & Fossat, Y. Do you understand the words that are coming outta my mouth? Voice assistant comprehension of medication names. npj Digital Med. 2, 55 (2019).

17. Gotham, H. J. Diffusion of mental health and substance abuse treatments: development, dissemination, and implementation. Clin. Psychol. Sci. Pract. 11, 160–176 (2004).

Acknowledgements

This work was supported by the Tobacco-Related Disease Research Program 587873. A.L.N. acknowledges support from NIDA through a T32 grant (T32 DA023356). E.C.L. acknowledges salary support from grant 1R01CA234539-01 from the National Cancer Institute at the National Institutes of Health and grant 28IR-0066. The funders played no role in the decision to publish, nor the conception, preparation, or revision of this work.

Author information

Contributions

J.W.A. initiated the project and the collaboration. A.L.N., E.C.L., and J.W.A. led the design, data collection, and data analysis. All authors participated in drafting and revising the manuscript, and all read and approved the final submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nobles, A.L., Leas, E.C., Caputi, T.L. et al. Responses to addiction help-seeking from Alexa, Siri, Google Assistant, Cortana, and Bixby intelligent virtual assistants. npj Digit. Med. 3, 11 (2020). https://doi.org/10.1038/s41746-019-0215-9

DOI: https://doi.org/10.1038/s41746-019-0215-9
