Abstract

Global warming exerts a strong impact on the Earth system. Despite recent progress, Earth System Models still project a large range of possible warming levels. Here we employ a generalized stochastic climate model to derive a response operator which computes the global mean surface temperature given specific forcing scenarios to quantify the impact of past emissions on current warming. This approach enables us to systematically separate the “forcing-induced direct” and the “memory-induced indirect” trends. Based on historical records, we find that the direct-forcing-response is weak, while we attribute the major portion of the observed global warming trend to the indirect-memory-responses that are accumulated from past emissions. Compared to CMIP6 simulations, our data-driven approach projects lower global warming levels over the next few decades. Our results suggest that CMIP6 models may have a higher transient climate sensitivity than warranted by the observational record, because they have larger long-term memory than observed.


Introduction

The climate crisis is of utmost concern to both the climate science community and the public1. The 2015 Paris Agreement has set a target of limiting the global temperature increase in this century to 2.0 °C, while trying at the same time to pursue a more ambitious target to limit the increase to 1.5 °C. Substantially different impacts between the warming of 1.5 and 2.0 °C have been widely reported2,3. To cope with this unprecedented challenge, effective actions are urgently needed4,5, and reliable estimations of climate response to anthropogenic greenhouse gas emissions are required.

The global climate system has a huge inertia6, delaying the temperature response to changes in greenhouse gas concentrations. Of the heat resulting from anthropogenic greenhouse gas emissions, more than 90% is stored in the ocean7. The ocean can in turn affect the air temperatures as well as the entire climate system in a slow and persistent way. Accordingly, even if no more carbon dioxide were emitted to the atmosphere now, or more realistically speaking, if the target of carbon neutralization were achieved, the global temperature may still rise for decades to reach an equilibrium state. For instance, for the estimation of the Equilibrium Climate Sensitivity (ECS), thousands of years of model simulations are needed to obtain the ECS8, which requires massive computational resources, but the estimate would still have a large uncertainty attached to it due to structural model issues9,10. Note that a more popular way, compared to the way of running models for thousands of years, is to estimate the ECS from a short, e.g., 4 × CO2 climate model experiment assuming the relationship between the top-of-atmosphere (TOA) radiative imbalance N and the global mean surface temperature change ΔT is linear as N = F − λΔT11. However, the facts that (i) the climate response is nonlinear12 and (ii) the feedback parameter λ varies over time scales13 make the estimation still challenging. In order to better design adaptation strategies, understanding how the global temperature reacts to the anthropogenic radiative forcing is an urgent issue.

Ever since the middle of the last century, when the “Hurst Phenomenon” was discovered14,15, it has been recognized that many climate variables are characterized by long-term climate memory14,16,17,18,19, which is relevant for understanding the inertia in the climate system. Different from the short-term memory, which describes the persistence of a given process on weather scales (i.e., a few days to around two weeks), long-term climate memory depicts the scaling behavior of multiple processes of different time scales. This cascade represents how multi-scale processes affect each other, i.e., fast processes may force slow processes to change, while slow processes may modulate the variations of fast processes on a longer scale20. Consequently, time series with scaling behaviors are characterized by the persistence of much longer time scales. This property is called long-term memory14. Based on the classical Brownian Motion and Random Walk theories, various approaches such as the Fluctuation Analysis (FA)21, the Detrended Fluctuation Analysis (DFA)22, have been developed and widely used over the past few decades to quantify the strength of the long-term climate memory in different climatic variables ranging from surface air temperatures19,23, sea surface temperatures24 and precipitation18, to relative humidity16, sea level17, and the atmospheric general circulation25. A physically understandable finding was that the air temperatures of islands have stronger long-term climate memory than those from inner continents, thus highlighting the impacts of the ocean heat capacity24,26. If large-scale spatially averaged temperatures (e.g., global mean surface temperature) are considered, strong climate memory has been reported27, which is in line with the fact that the ocean may store the heat and release it back to the atmosphere slowly, hence, producing a delayed response.

Over the past years, much effort has been devoted to studying the climate memory effects26,28,29,30,31,32,33,34,35,36,37,38,39,40,41. Beyond the classical energy-balance model (EBM) of a finite number of boxes26,28,29,30,31,32, recent studies have introduced the concept of scaling among multiple time scales to lift the restrictions of a single e-folding time in a box model33,34. By further exploiting the large memory characterized by scaling behavior, several advanced models such as the fractional energy balance equation (FEBE)35 have been developed to simulate and predict global temperatures35,36,37,38,39. In these models, there are usually 2–3 parameters that need to be fitted using both the historical temperature records and the forcing data. By further feeding radiative forcing (RF) data into the model, one can thus estimate external forcing-induced trends and extract the residual internal variability. However, with the current models it is still not clear how much of the warming is directly induced by the external forcings and how much is due to the climate memory effects, which is a crucial question for Detection and Attribution (D & A) studies. According to the concept of stochastic climate models (SCM) that was first proposed by Hasselmann in 197642, the slow processes in the climate system can be regarded as accumulative responses to continual excitations. If the continual excitations are considered as the fast external forcing, and the accumulative responses as the long-term internal memory, a straightforward question is: can we study global warming by separately considering the “forcing-induced direct trend” and the “memory-induced indirect trend”?

To address this question, we use the Fractional Integral Statistical Model (FISM)20,43. The FISM is a generalized version of the classic SCM. It incorporates fractional integral techniques and has been shown to be able to decompose a given climatic time series x(t) into two components,

\[x(t)=M(t)+\varepsilon (t),\qquad (1)\]

where ε(t) represents the continual excitations (hereafter the direct-forcing-response), and M(t) the accumulated responses to the historical ε (hereafter the indirect-memory-response). Both ε(t) and M(t) have the same unit as the variable x(t), i.e., when studying temperatures, the unit is degree Celsius. One advantage of the FISM is that only one parameter (the integral order q, see the “Methods” section) is required to extract the direct-forcing-response and the indirect-memory-response from x(t), and the parameter can be objectively measured from the climatic variable of interest. Accordingly, the FISM has been successfully applied for various aspects, e.g., estimating climate predictability with climate memory effects properly considered44, correcting tree-ring width based paleo-reconstructions with non-climatic persistence reasonably removed45, among others. Here in this study, we focus on global mean surface temperature anomalies (GMTA). By employing FISM, we aim to (i) detect the “direct responses” of the GMTA to radiative forcings (RFs) and the “indirect responses” of the GMTA accumulated from climate memory, and (ii) depict a new picture of how the GMTA changes under the combined effects of external forcings and internal memory. Moreover, a new method for projecting the future warming trend is discussed.

Results

The “direct-forcing-response” and “indirect-memory-response”

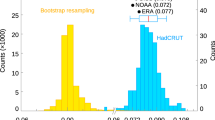

In order to extract the “direct-forcing-response” and the “indirect-memory-response” from the GMTA, we first need to measure the strength of the long-term climate memory in GMTA. Here, we apply the Detrended Fluctuation Analysis of second order (DFA2)46 to the monthly GMTA obtained from the Met Office Hadley Centre (HadCRUT5.0.1, anomaly data from 1850–2020, relative to the reference period 1850–1900). DFA2 calculates the fluctuation function F(s). If F(s) increases with the time scale s as a power law, F(s) ~ s^α with α > 0.5, then long-term climate memory is present in the time series. As shown in Fig. 1a, a clear power law relation between F(s) and s is found with a slope of 0.90. This means that the GMTA is characterized by strong long-term climate memory. Based on this result, we further apply the Fractional Integral Statistical Model (FISM) to the GMTA with a proper fractional integral order q = 0.4 (see the “Methods” section, q = α − 0.5). After estimating the historical \(\varepsilon ({t}^{\prime})\) (see Eq. (4) in the “Methods” section, \({t}^{\prime} \,<\, t\), \({t}^{\prime}\) represents the historical time point and t is the considered present time point), we calculated the corresponding indirect-memory-response accumulated at t, M(t) (see Eq. (3) in the “Methods” section). From Fig. 1b, one can see a clear warming trend of the GMTA, especially during the past half century. We attribute most of the warming trend to the indirect-memory-response, as the direct-forcing-response (the blue curve) provides only a small contribution. Taking the global mean temperatures during 1850–1900 as a reference, the GMTA has increased by about 1.2 °C, and the climate memory-induced “indirect” trend accounts for more than 90% of the total warming trend. 
This is reasonable given the fact that more than 90% of the heat resulting from anthropogenic greenhouse gas emissions is stored in the ocean, and the surface temperature would respond to external forcings on long-time scales32. Regarding the forcing-induced “direct” trend (denoted as εd), although it is weak, one should note that it is actually the source of the warming trend in GMTA (see Eq. (3) in the “Methods” section). For instance, if the weak trend εd (i.e., see the inserted sub-figure in Fig. 1b) is removed from ε, by putting the detrended part (denoted as εs) into the FISM model (Eq. (4)), only natural variabilities of the GMTA are reproduced (the red curve in Fig. 1c). Therefore, although the trend of ε(t) is weak, it is only a “direct” response to the increased radiative forcing. Since the GMTA is characterized by strong long-term climate memory, after the direct response, the climate system (especially the ocean) may store the heat and release it slowly back to the atmosphere: this is what causes the climate memory, and here it is quantified as the indirect-memory-response M(t).

a The DFA result of the historical global mean surface temperature anomalies (GMTA), and b the extracted direct-forcing-response. In the inserted sub-figure, the trend of the direct-forcing-response (denoted as εd) detected from the Ensemble Empirical Mode Decomposition (EEMD) analysis is shown. By feeding the extracted direct-forcing-response back to the FISM model, the historical GMTA is fully reproduced, see the black curve in (c). If εd is removed, however, by feeding the detrended direct-forcing-response to FISM, only natural variabilities of the GMTA are reproduced without long-term warming trend, see the red curve in (c).

To better demonstrate the meaning of ε(t), we further investigated the relations between the ε(t) extracted from the GMTA and the historical effective radiative forcing (ERF) data. According to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (IPCC AR5), the ERF is defined as the change in net top-of-the-atmosphere downward radiative flux after allowing rapid adjustments including changes to atmospheric temperatures, water vapor and clouds47. In this study, the historical annual ERF estimates are from the IPCC AR547, and they are further extended to 2017 by Dessler and Foster48. These data are available at the KNMI Climate Explorer. Figure 2a shows the trend of the ε(t) (εd(t), the red curve) from 1850 to 2020 and the total ERF (black curve) from 1850 to 2017. In order to avoid a priori restrictions of the trend type, the Ensemble Empirical Mode Decomposition analysis (EEMD)49 was employed to estimate the trend of the ε(t). There seems to be a good linear relationship between the trend of the ε(t) and the trend of the ERF, despite the sharp downturns in the total ERF due to volcanic activities. Note that the units and the y-axes of the two variables in Fig. 2a are different. To confirm the linear relationship between the ε(t) and the ERF, we compared in detail the trend of the ε(t) with the trend of the anthropogenic ERF data (Fig. 2b). The EEMD analysis was employed again to estimate the trends. In view of (i) the poor data coverage for the calculation of GMTA in the 19th century (Supplementary Fig. 1) and (ii) the boundary effects of the EEMD analysis, we only consider the trends in the 20th century (1901–2000). As shown in Fig. 2b, the trend of the ε(t) (red curve) agrees very well with the trend of the anthropogenic ERF (asterisk-dashed curve). From this linear relationship, one can easily obtain an empirical formula that connects the trends of the ε(t) and the anthropogenic ERF,

\[{\varepsilon }_{{{{\rm{d}}}}}(t)=a\cdot {{{\rm{Anth}}}}\_{{{{\rm{ERF}}}}}_{{{{\rm{d}}}}}(t)+b,\qquad (2)\]

where the subscript d denotes “trend” of the corresponding variable, a = 0.037, and b = −0.0043. Note the parameter “a” has units of “Celsius ⋅ Square meter/Watts”, while “b” has units of “Celsius”. Apparently, εd(t) and \({{{\rm{Anth}}}}\_{{{{\rm{ERF}}}}}_{{{{\rm{d}}}}}\) are almost proportional, and “a” determines the instantaneous responses of the GMTA to the changes of \({{{\rm{Anth}}}}\_{{{{\rm{ERF}}}}}_{{{{\rm{d}}}}}\). Since in ε(t) the indirect-memory-responses have been removed, this parameter “a” might be considered as an intrinsic measure of the instantaneous sensitivity. In addition to the analyses using observational data, to better understand the meaning of the ε(t), we also examined historical CMIP model simulations that were forced by different forcings (i.e., greenhouse-gas-only (Hist-GHG), anthropogenic-aerosol-only (Hist-AA), Solar-only (Hist-SI), volcanic-only (Hist-VI)). For this examination we consider a CMIP5 model (the Community Climate System Model version 4, CCSM4) and a CMIP6 model (the Community Earth System Model version 2, CESM2). Based on the simulated global mean surface temperatures, we repeated the above calculations and extracted ε(t) from the simulated GMTA under different forcings. Supplementary Fig. 2 shows the relationships between the extracted ε(t) (black curves) and the corresponding ERF data (red dashed lines). The ε(t) captures very well the long-term trends of the greenhouse gas forcing and the anthropogenic aerosol forcing (Supplementary Fig. 2a, b, e, f), as well as some inter-annual variations of the volcanic forcing and the solar forcing (Supplementary Fig. 2c, d, 3). Recall the definition of ε(t) in the FISM, this term extracted from the GMTA indeed is closely related to the natural and anthropogenic external forcings. It varies instantaneously with the changes of the external forcings and, thus, represents the “direct” responses of the GMTA to the external forcings. 
The memory term, on the other hand, is driven by the ε(t) (see Eq. (3) in the “Methods” section) and exhibits the responses in a much slower way (indirect-memory-responses). It is worth noting that we also examined the simulated relation between the trend of ε(t) and the trend of the ERF. Using historical simulations with all forcings included, we repeated the above calculations and found much smaller a and b parameters in CESM2 (aALL = 0.018 °C m²/W, bALL = −0.001 °C). A small “a” indicates a weak instantaneous sensitivity in the model. More specifically, the direct responses of the GMTA to the increase of the anthropogenic ERF are weaker in the model than in the observations. To reproduce the observed warming trend in the GMTA, the indirect memory response from the model simulations thus has to contribute more to the warming trend, in which case the long-term climate memory strength would need to be stronger (i.e., with larger q, see the next section). Besides the estimation of a using simulations with all forcings, we also examined the results from different forcing experiments, i.e., Hist-GHG and Hist-AA. It is well known that the greenhouse-gas forcing is the main contributor to global warming, while after removing the long-term climate memory impacts, the instantaneous response parameter aGHG (0.019 °C m²/W) is found to be nearly identical to aALL (0.018 °C m²/W) if simulations from the same model (i.e., CESM2) are analyzed. As for the CESM2 simulation forced by Hist-AA, a remarkable GMTA cooling is revealed in the second half of the 20th century (−0.09 °C/decade). After separating the GMTA into the “direct-forcing-response” and the “indirect-memory-response”, more than 90% of the cooling in this period is attributed to the memory term, and the instantaneous response parameter aAA is estimated to be 0.026 °C m²/W, which is only slightly higher than aALL. 
These similar a values from the same model (under different forcings) again support the physical meaning of a as an intrinsic measure of the instantaneous sensitivity. Moreover, by comparing the parameter a from model simulations with that from observations, it may also be used as a new test-bed for model evaluations.
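As a rough illustration of how the empirical relation of Eq. (2) could be applied, the sketch below assumes the linear form described in the text (trend of the direct-forcing-response equals a times the anthropogenic ERF trend plus b) and uses the parameter values quoted above for the observations and for CESM2. The function name `direct_trend` and the sample ERF values are hypothetical and serve only as an example.

```python
import numpy as np

# Parameter values quoted in the text (observations, HadCRUT5-based).
A_OBS = 0.037     # degC m^2 / W
B_OBS = -0.0043   # degC

def direct_trend(anth_erf_trend, a=A_OBS, b=B_OBS):
    """Trend of the direct-forcing-response (degC) for a given
    anthropogenic ERF trend (W/m^2), assuming a linear relation."""
    return a * np.asarray(anth_erf_trend, dtype=float) + b

# Hypothetical ERF trend values in W/m^2, for illustration only.
erf = np.array([0.0, 1.0, 2.0])

eps_d_obs = direct_trend(erf)                        # observation-based parameters
eps_d_cesm2 = direct_trend(erf, a=0.018, b=-0.001)   # CESM2 all-forcing parameters

print(eps_d_obs)
print(eps_d_cesm2)
```

With the same ERF trend, the smaller a in CESM2 yields a weaker direct trend than the observation-based value, which is the contrast the paragraph above describes.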

The ERF estimates are from the IPCC AR547, and they are further extended to 2017 by Dessler and Foster47,48. a a rough comparison of the direct-forcing-response trend with the historical ERF. Using the EEMD method, the trend of the direct-forcing-response is detected as the residual component of the EEMD analysis (see also Fig. 1e) and shown here as the red curve. The two variables have different units, but from this rough comparison, a close relationship between the trend of the ε(t) and the trend of the ERF can be observed. b a detailed comparison of the trend of the ε(t) (the red curve) with the trend of the historical anthropogenic ERF data (the black asterisk-dashed curve). Again, the EEMD method is used to detect the trend of the historical anthropogenic ERF data. In view of the poor data coverage for the calculation of GMTA in the 19th century and the boundary effects of the EEMD analysis, the comparison is made over the time of the 20th century (the blue box). A close relationship between the two trends can be observed.

A new method for future GMTA projection

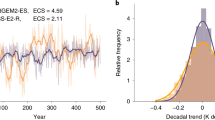

Based on the above findings, the observed warming trend in GMTA is explained by considering both the “direct” trend from the direct-forcing-response ε(t) and the “indirect” trend from the indirect-memory-response M(t). If the memory strength (i.e., the DFA exponent α) is known, the GMTA can be simulated by substituting the ε(t) into the FISM model. For example, the observed GMTA is well reproduced (see the black curve in Fig. 1c) by conducting a fractional integration (q = 0.4) on the direct-forcing response shown in Fig. 1b (the blue curve). Considering that the direct-forcing-response is closely related to the ERF data, using their relationship (e.g., Eq. (2)), the future GMTA trend under a given scenario (e.g., RCPs, SSPs) may be projected assuming the memory strength in GMTA remains unchanged. Following this idea, we conducted a projection of the future GMTA trend under the Shared Socioeconomic Pathways (SSP)50, scenario SSP2-4.5 (for details of the approach, please refer to the “Methods” section). This scenario is an update of RCP4.5 in CMIP5 and combines intermediate societal vulnerability51. It has been widely used by several CMIP6 Model Intercomparison Projects (MIPs), such as the Decadal Climate Prediction Project (DCPP)52, and the Detection and Attribution MIP (DAMIP)53. In this study, we used the radiative forcing data under SSP2-4.5 calculated by the MESSAGE-GLOBIOM model54 (Supplementary Fig. 4). By estimating the corresponding trend of the direct-forcing-response using Eq. (2), we are able to project the future GMTA trend. Note that besides the future projection, similarly we could also perform a historical simulation based on the trend of the direct-forcing-response that is estimated from the historical radiative forcing data. By considering the long-term climate memory impacts from the past 100 years, Fig. 3 shows the GMTA simulation from 1951 and the projection from 2015. 
The blue and yellow areas indicate the 95% boundaries of 1000 realizations, while the thicker blue and yellow lines are the simulation and projection means, respectively. The simulations capture well the historical warming trend from 1951 to 2014, and the uncertainty interval covers nearly all the fluctuations around the warming trend. This indicates that the new approach can accurately project the GMTA trend, as long as the radiative forcing data and the memory strength are known. It is worth noting that here each realization was calculated by feeding the estimated trend of the direct-forcing-response (\(\varepsilon ^{\prime}_d\)) as well as a randomly shuffled fluctuation (\(\varepsilon ^{\prime}_s\)) into the FISM model (see the “Methods” section). The fluctuation \(\varepsilon ^{\prime}_s\) is shuffled from the detrended historical direct-forcing-response εs; thus, by shuffling 1000 times, one can simulate a possible uncertainty interval of the natural variability around the trend. For the future projections, the calculations in this study are based on a given radiative forcing pathway (SSP2-4.5) provided by the MESSAGE-GLOBIOM model54, and the uncertainty around the projected future warming trend is estimated in the same way as that in the historical simulation. One should note that here the uncertainty interval does not include uncertainty in the underlying radiative forcing series. Accordingly, in this study we mainly focus on the projected mean trend (see Supplementary Fig. 4, however, for the projection of a single run). From Fig. 3, we found that the future GMTA may rise by around 2.6 °C by the end of the 21st century under the SSP2-4.5 scenario, and it crosses 1.5 °C and 2.0 °C in the late 2030s and early 2060s, respectively. Compared to CMIP6 model simulations, this projection is more optimistic. As shown in Fig. 
4a, 16 CMIP6 models (see Supplementary Table 1) project a mean warming of around 3 °C by the end of the 21st century under SSP2-4.5, indicating stronger climate sensitivities. This discrepancy may be attributed to the issue that coupled dynamical models usually have too large a long-term climate memory in their simulated GMTA27. As shown in Fig. 4b and Supplementary Table 1, where the climate memory strengths calculated from the historical simulations of the CMIP6 models are presented, 15 of the 16 CMIP6 models have significantly larger α values in their GMTA simulations. Only one model (MRI-ESM2-0) shows the same climate memory strength as the HadCRUT data. This overestimated climate memory indicates more persistent warming signals and, thus, may induce stronger warming trends. As shown in Supplementary Fig. 5, the transient climate response (TCR) estimated by our method tends to increase with the enhancement of climate memory. Of course, one should also note that the simulated relations between εd(t) and \({{{\rm{Anth}}}}\_{{{{\rm{ERF}}}}}_{{{{\rm{d}}}}}\) (i.e., Eq. (2)) may be different (see the previous section). Since the “indirect-memory-response” is an integral of ε(t), the estimated parameter “a” is not only relevant for the instantaneous responses of the GMTA as indicated in Eq. (2), it also affects the simulated warming trend (see Supplementary Fig. 5a for the proportional dependence of the TCR on different parameter values “a”).
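The shuffling procedure described above can be sketched as follows. This is only a minimal illustration under stated assumptions: Eqs. (3) and (4) of the “Methods” section are not reproduced in this section, so the memory term is approximated here by a discrete Riemann–Liouville-type fractional sum of order q over a finite window, and the 95% boundaries are taken as the 2.5th and 97.5th percentiles across realizations. The function names `memory_response` and `shuffled_ensemble` are hypothetical, and the fluctuation series is assumed to be at least as long as the trend series.

```python
import numpy as np
from math import gamma

def memory_response(eps, q, window):
    """Accumulated response to past eps values (assumed power-law kernel).

    M[t] = sum_{k=1..window} k**(q-1) / Gamma(q) * eps[t-k]
    """
    eps = np.asarray(eps, dtype=float)
    lags = np.arange(1, window + 1)
    weights = lags ** (q - 1.0) / gamma(q)   # weights for lag 1, 2, ..., window
    m = np.zeros_like(eps)
    for t in range(eps.size):
        k = min(t, window)                   # number of past steps available
        if k:
            past = eps[t - k:t][::-1]        # eps[t-1], eps[t-2], ..., eps[t-k]
            m[t] = np.dot(weights[:k], past)
    return m

def shuffled_ensemble(eps_trend, eps_fluct, q, window, n_real=1000, seed=0):
    """Mean and 2.5/97.5 percentile bounds over shuffled realizations."""
    rng = np.random.default_rng(seed)
    eps_trend = np.asarray(eps_trend, dtype=float)
    n = eps_trend.size
    runs = np.empty((n_real, n))
    for i in range(n_real):
        # Trend plus a random reordering of the detrended fluctuations.
        eps = eps_trend + rng.permutation(eps_fluct)[:n]
        # Simulated series = direct response + accumulated memory response.
        runs[i] = eps + memory_response(eps, q, window)
    lower, upper = np.percentile(runs, [2.5, 97.5], axis=0)
    return runs.mean(axis=0), lower, upper
```

In this sketch, `window` plays the role of the 100-year memory horizon mentioned in the text, expressed in time steps of the input series. Setting q = 1 reduces the kernel to a plain running sum of past values, which is a convenient sanity check.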

Using the linear (nearly proportional) relations as shown in Eq. (2), the trend of the direct-forcing-response from 1951 to 2014 is calculated from the historical anthropogenic ERF data, and the trend of the direct-forcing-response from 2015 to 2100 is estimated from SSP2-4.5 data. The simulated historical GMTAs are shown in blue color, where the blue area indicates the 95% boundaries of 1000 realizations and the thicker blue line represents the mean simulation. The projected future GMTAs are shown in yellow color. The black curve represents the HadCRUT5 GMTA. It is worth noting that the simulation and projection are made by considering the climate memory impacts from the past 100 years, i.e., for the simulation of GMTA in 2001, climate memory impacts from 1901 to 2000 are taken into account.

In sub-figure (a), the thicker blue and yellow curves are respectively the multi-model-mean (16 CMIP6 models) historical GMTA simulation and future projection under the SSP2-4.5 scenario. The blue and yellow areas represent the uncertainties among the 16 CMIP6 models. The yellow dashed line shows the mean projection by the FISM-based response model (see also Fig. 3). The projected GMTA warming trend is faster in the CMIP6 models, which may be associated with their too large long-term climate memory strength. As shown in (b), the simulated GMTAs from 15 (out of 16) models show significantly stronger memory strength, with a larger DFA exponent α. The red dashed line indicates the 95% upper bound of the uncertainty of the α value obtained from the HadCRUT data (based on a Monte-Carlo test).

Discussion

In this study, using a generalized stochastic climate model we derived a response operator that can be used to quantify the impact of climate memory. By decomposing the temperature records (i.e., the GMTA) into the “direct-forcing-response” and the “indirect-memory-response”, one advantage of our approach is that it allows us to distinguish the forcing-induced instantaneous trend and the memory-induced indirect trend. This trend detection considers the long-lasting impacts of external forcings, thus may support a proper attribution of external forcings to global warming, which is a key issue in the D & A studies. Moreover, compared to the widely used “optimal fingerprinting (OFP)” method55,56 that relies on model simulations, our approach is data-driven. In addition, the linear relationship between εd(t) and \({{{\rm{Anth}}}}\_{{{{\rm{ERF}}}}}_{{{{\rm{d}}}}}\) (Eq. (2)) allows us to simulate temperature responses to a given radiative forcing data. Using this relationship, we provide a new, computationally efficient, way of projecting future climate change. Compared to widely used dynamical models (e.g., CMIP6 models) or the existing temperature response models such as multi-box EBMs, our approach only requires three parameters (q in FISM, a and b in Eq. (2)), which is comparable to the recently proposed scaling based models (e.g., FEBE)35,39. Our observational data-driven projections show a lower warming trend compared to CMIP6 simulations, which may be associated with the too large long-term climate memory simulated by CMIP6 models. This is consistent with previous studies30, which showed that most climate models are too sluggish in their response to climate forcing. It is worth noting that one may also estimate the radiative forcings using Eq. (2), assuming the temperature response is known. This is actually a widely used idea to infer historical radiative forcings. 
Compared to existing methods that rely on model step-change experiments13 or on model-calibrated k-box EBMs57, etc., the advantages of (i) being data-driven and (ii) requiring only three parameters may make our approach a potential new way of estimating radiative forcing, but great efforts are still needed in this direction.

Here, long-term climate memory is suggested as an important feature to consider for a better understanding of global warming as well as future projections. However, a further relevant question arises from this perspective: how long can the distant past affect today’s climate? Since the DFA can reliably detect long-term climate memory on time scales up to a quarter of the data length58, we can infer from the current instrumental data (~170 years) that long-term memory is present on time scales up to roughly 40 years. Moreover, insights from paleoclimate studies have suggested that the long-term memory in temperature may still be present on centennial time scales59. Hence, considering the historical influences over the past hundred years seems to be reasonable. However, we cannot rule out the possibility that the historical influences from more than 100 years ago may still have a slight impact on the current state: this might be due to the deep ocean. If the long-term memory measured from the instrumental data also holds on longer (>100 years) time scales, then taking the longest possible historical impacts into account (i.e., from 1850) changes the results only slightly (i.e., the global warming by 2100 is 0.15 °C higher than the projection that only considers the memory effects of the past 100 years, see Supplementary Fig. 6a), and the conclusion that the projected warming trend is weaker than those from CMIP6 simulations remains unchanged. In addition, it is worth noting that if we only consider the historical impacts since 1900 (Supplementary Fig. 6b), the projected warming trend is nearly identical to that when longer historical impacts are included (Supplementary Fig. 6a). This is attributed to the very weak trend of the ε(t) before the 20th century, which has nearly no contribution to the warming trend in the years afterwards. In other words, the projection with the longest possible historical impacts taken into account (Supplementary Fig. 
6a) can be considered as an upper bound of the projected warming trend. A more accurate projection of the future warming relies on how long the long-term climate memory effects can last, which is still an open and vital question that deserves more attention in the future.

Finally, we would like to point out that although our approach has shown a good ability in projecting future warming trends, there is still room for further improvement. For example, the simulation and projection in Fig. 3 do not take the ERF-related uncertainties into account. Considering that the historical radiative forcing is still largely uncertain (particularly due to the aerosols)35,39, taking this uncertainty into account may lead to uncertainties in the parameter a, which may further affect the projected warming trend. In addition, to measure the parameter a we estimated the trends in ε(t) and the ERF data using the EEMD analysis (i.e., Fig. 2), which has the advantage of not preselecting the trend form, but follows the Definition of Trend as an intrinsically fitted monotonic function or a function in which there can be at most one extremum within a given data span60. This allows objective analyses of the trends, but may also bring difficulties for parameter estimation. For example, in the case of CCSM4 (Supplementary Fig. 7), the EEMD analysis of the anthropogenic ERF data gives a long-term monotonic trend in the 20th century, while for ε(t) the EEMD residual carries an oscillation that appears to correspond to some extent with the multi-decadal variation of the anthropogenic ERF. Since this curve satisfies the Definition of Trend in the EEMD method, the EEMD calculation stops here, but the extracted trend cannot support a reliable parameter estimation in Eq. (2). Accordingly, although we have revealed a close relationship between the radiative forcings and the ε(t) as shown in Fig. 2, more detailed work, such as including the uncertainties of radiative forcings in the approach and properly extracting signals from the ε(t), is still required in the future.

Methods

Quantification of the memory strength

The Detrended Fluctuation Analysis of second order (DFA2)46 is used to quantify the memory strength in the global mean surface temperature anomalies (GMTA). Suppose we have a time series x(t), t = 1, 2, ⋯ , N. In DFA2, one first calculates the cumulative sum (profile) \(Y(t)=\mathop{\sum }\nolimits_{k = 1}^{t}x(k)\), and divides the profile into non-overlapping windows of size s. In each window ν, the variance of Y(t) around the best second-order polynomial fit is determined as \({F}_{\nu }^{2}(s)\), and the average over all windows gives \({F}^{2}(s)\). If its square root, F(s), increases with s as a power law, \(F(s) \sim {s}^{\alpha }\), and the exponent α is larger than 0.5, long-term climate memory is detected. The larger α is, the stronger the long-term climate memory. Note that the order of the DFA is the order of the polynomial fitted to Y(t) in each window. As shown in previous studies46, removing the nth order polynomial fit of Y(t) eliminates the effects of (n − 1)th order trends in the original time series x(t) on the estimated memory strength. This is the main advantage of the DFA over other approaches such as the Fluctuation Analysis (FA) or the widely used multitaper spectrum method. Since the main target of this work is to distinguish between the “forcing-induced direct trend” and the “memory-induced indirect trend” in the GMTA time series, we do not remove the warming trend before the analysis. We therefore use a DFA of higher order than DFA1 to remove the potential impacts of trends on the estimated memory strength. Because the results from DFA2 and DFA3 are nearly identical, we use DFA2 in this work.
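
As an illustration of the procedure described above, a minimal Python sketch of DFA of a given order follows. It is not the code used in this study. The function names `dfa_fluctuation` and `dfa_exponent`, the example window sizes, and the least-squares fit in log-log space used to estimate α are assumptions made for this example.

```python
import numpy as np

def dfa_fluctuation(x, scales, order=2):
    """Return F(s) for each window size s in `scales` (DFA of the given order)."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x)                 # Y(t) = sum_{k<=t} x(k)
    n = profile.size
    fluct = []
    for s in scales:
        n_win = n // s                     # number of non-overlapping windows
        t = np.arange(s)
        win_var = []
        for v in range(n_win):
            seg = profile[v * s:(v + 1) * s]
            coef = np.polyfit(t, seg, order)          # local polynomial fit
            resid = seg - np.polyval(coef, t)
            win_var.append(np.mean(resid ** 2))       # F_v^2(s)
        fluct.append(np.sqrt(np.mean(win_var)))       # F(s)
    return np.array(fluct)

def dfa_exponent(x, scales, order=2):
    """Estimate alpha as the slope of log F(s) against log s."""
    f = dfa_fluctuation(x, scales, order)
    slope, _ = np.polyfit(np.log(scales), np.log(f), 1)
    return slope

# Usage with synthetic data (white noise has no long-term memory).
rng = np.random.default_rng(0)
example_scales = [16, 32, 64, 128, 256]
print(dfa_exponent(rng.standard_normal(4096), example_scales))
```

In practice the fitting range of s should be chosen with care, since very small windows bias the estimate and very large windows leave too few segments to average over.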

Extracting the “forcing-induced direct response” and the “memory-induced indirect response”

We employed the Fractional Integral Statistical Model (FISM)43 to extract the “direct-forcing-response” and the “indirect-memory-response” in GMTA. FISM is a generalized version of the classical SCM. It also considers the slowly varying processes in the climate system as accumulative responses to continual excitations. Compared to the classical SCM, however, fractional integral techniques are introduced in the FISM to better simulate how the indirect-memory-response arises20. For instance, suppose we know a priori the direct-forcing-response, then the indirect-memory-response can be estimated via a fractional integral of a proper order q,

\[M(t)=\frac{1}{\Gamma (q)}\int_{{t}^{\prime}=0}^{t-\delta }\frac{\varepsilon ({t}^{\prime})}{{(t-{t}^{\prime})}^{1-q}}\,{\rm{d}}{t}^{\prime},\qquad (3)\]

where the Riemann-Liouville fractional integral formula is used, and the integral order q is related to the DFA exponent α through the affine function q(α) = α − 0.5 (ref. 20). In this equation, \(\varepsilon ({t}^{\prime})\) represents the direct-forcing-response before the present time t, \(t-{t}^{\prime}\) represents the distance between the historical time point \({t}^{\prime}\) and the present time t, δ is the sampling time interval (e.g., monthly), and Γ denotes the gamma function. According to Eq. (1), the considered time series x(t) can thus be written as

\[x(t)=M(t)+\varepsilon (t)=\frac{1}{\Gamma (q)}\int_{{t}^{\prime}=0}^{t-\delta }\frac{\varepsilon ({t}^{\prime})}{{(t-{t}^{\prime})}^{1-q}}\,{\rm{d}}{t}^{\prime}+\varepsilon (t).\qquad (4)\]

Clearly, the magnitude of the historical influences accumulated from the past ε depends on the integral order q. For q = 0, no integration is conducted (M(t) = 0) and x(t) is simply equal to the direct-forcing-response ε(t). If q = 1, on the other hand, the FISM is identical to the classical SCM by Hasselmann42, where a Brownian motion is simulated. In practice, for a given time series x(t), one first determines the fractional integral order q from the DFA exponent α. With q and x(t), the historical direct-forcing-response \(\varepsilon ({t}^{\prime})\) (\({t}^{\prime} \,<\, t\)) can then be estimated by inverting Eq. (4). Note that in this way the long-term memory effects are removed and \(\varepsilon ({t}^{\prime})\) has no long-term memory (Supplementary Fig. 8). After substituting \(\varepsilon ({t}^{\prime})\) into Eq. (3), the indirect-memory-response at the present time t, M(t), can be calculated. In this way, we can further decompose the variable x at the present time t into the indirect-memory-response and the direct-forcing-response, according to Eq. (1). For details of how to invert Eq. (4), as well as how to separate the indirect-memory-response and the direct-forcing-response, please refer to the “Method” section in ref. 43.
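
The decomposition described in this subsection can be illustrated with a minimal Python sketch. It is not the implementation of ref. 43. It assumes a simple rectangle-rule discretization of the fractional integral with δ = 1 time step, a kernel weight of (t − t′)^(q−1)/Γ(q) for each past sample, and no memory carried in from before the first sample; the function names `memory_kernel`, `fism_forward`, and `fism_inverse` are hypothetical.

```python
import math
import numpy as np

def memory_kernel(n, q):
    """Weights h[k] = k**(q-1) / Gamma(q) for lags k = 1..n-1, with h[0] = 0."""
    h = np.zeros(n)
    if q > 0 and n > 1:
        k = np.arange(1, n, dtype=float)
        h[1:] = k ** (q - 1.0) / math.gamma(q)
    return h  # q = 0 leaves the kernel at zero because 1/Gamma(0) = 0

def fism_forward(eps, q):
    """Given eps(t), return x(t) = eps(t) + M(t) and the memory term M(t)."""
    eps = np.asarray(eps, dtype=float)
    h = memory_kernel(eps.size, q)
    mem = np.convolve(eps, h)[:eps.size]   # M(t) = sum_{t'<t} h[t-t'] * eps(t')
    return eps + mem, mem

def fism_inverse(x, q):
    """Given x(t), recover eps(t) and M(t) by stepping forward in time."""
    x = np.asarray(x, dtype=float)
    h = memory_kernel(x.size, q)
    eps = np.zeros(x.size)
    mem = np.zeros(x.size)
    for t in range(x.size):
        # lags t, t-1, ..., 1 are paired with eps(0), ..., eps(t-1)
        mem[t] = np.dot(h[t:0:-1], eps[:t])
        eps[t] = x[t] - mem[t]             # direct response = total - memory
    return eps, mem

# Usage: generate a series with q = 0.4 and split it again.
rng = np.random.default_rng(0)
x_demo, _ = fism_forward(rng.standard_normal(300), q=0.4)
eps_demo, mem_demo = fism_inverse(x_demo, q=0.4)
```

Because M(t) depends only on earlier values of ε, the inversion can proceed one time step at a time: each new ε(t) is the difference between x(t) and the memory accumulated so far. In this discretization q = 0 returns x(t) = ε(t) and q = 1 returns the cumulative sum of ε, matching the two limiting cases discussed above.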

FISM-based projection approach

Given radiative forcing data, e.g., under a given scenario, the GMTA trend can be projected with the FISM-based approach through the following steps.

1. Estimate the trend of the direct-forcing-response (\(\varepsilon ^{\prime}_d\)) from the radiative forcing data (under a given scenario) using Eq. (2).

2. Determine surrogate fluctuations (\(\varepsilon ^{\prime}_s\)) around \(\varepsilon ^{\prime}_d\) by shuffling the detrended historical direct-forcing-response εs. Obtain a “future” direct-forcing-response time series as \(\varepsilon ^{\prime} =\varepsilon ^{\prime}_d +\varepsilon ^{\prime}_s\).

3. Substitute the “future” \(\varepsilon ^{\prime}\) into the FISM. With the fractional integral order q set to 0.4, compute a “future” projection.

4. Repeat steps 2 and 3 in a large number of Monte Carlo simulations (e.g., 1000 times), then take the mean of all realizations as the projected future GMTA trend and the ensemble spread as an estimate of the uncertainty.
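
Steps 2 to 4 can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the code used in this study. Step 1 is not reproduced: the historical and future trends of the direct-forcing-response are taken as given inputs (`trend_hist`, `trend_future`), since they follow from Eq. (2). The function names, the rectangle-rule discretization with δ = 1 time step, the use of the standard deviation as the ensemble spread, and the restriction that the projection is no longer than the historical record are all assumptions of this example.

```python
import math
import numpy as np

def memory_kernel(n, q):
    """Weights h[k] = k**(q-1) / Gamma(q) for lags k = 1..n-1, with h[0] = 0."""
    h = np.zeros(n)
    if q > 0 and n > 1:
        k = np.arange(1, n, dtype=float)
        h[1:] = k ** (q - 1.0) / math.gamma(q)
    return h

def project_gmta(eps_hist, trend_hist, trend_future, q=0.4, n_real=1000, seed=0):
    """Return the ensemble mean and spread of the projected series."""
    eps_hist = np.asarray(eps_hist, dtype=float)
    trend_hist = np.asarray(trend_hist, dtype=float)
    trend_future = np.asarray(trend_future, dtype=float)
    n_hist, n_fut = eps_hist.size, trend_future.size
    if n_fut > n_hist:
        raise ValueError("this sketch shuffles without replacement: need n_fut <= n_hist")
    fluct_hist = eps_hist - trend_hist            # detrended historical response
    h = memory_kernel(n_hist + n_fut, q)
    rng = np.random.default_rng(seed)
    runs = np.empty((n_real, n_fut))
    for i in range(n_real):
        # Step 2: surrogate fluctuations from shuffled historical ones.
        fluct_future = rng.permutation(fluct_hist)[:n_fut]
        eps_all = np.concatenate([eps_hist, trend_future + fluct_future])
        # Step 3: add the memory accumulated from all earlier responses.
        mem = np.convolve(eps_all, h)[:eps_all.size]
        runs[i] = (eps_all + mem)[n_hist:]
    # Step 4: ensemble mean as projection, standard deviation as spread.
    return runs.mean(axis=0), runs.std(axis=0)

# Usage with synthetic inputs (values are placeholders, not data from the study).
rng_demo = np.random.default_rng(1)
trend_h = np.linspace(0.0, 0.1, 240)
eps_h = trend_h + 0.05 * rng_demo.standard_normal(240)
mean_demo, spread_demo = project_gmta(eps_h, trend_h, np.full(120, 0.1), n_real=100)
```

The historical direct-forcing-response is kept in every realization so that the projected values include the memory accumulated from the past, which is the quantity the approach is designed to capture.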

It is worth noting that since the temporal resolution of the historical GMTA data is monthly, the detrended historical direct-forcing-response εs is also monthly. We therefore make simulations/projections at monthly resolution. The radiative forcing data have coarser temporal resolutions; since we mainly focus on the simulated/projected trends, we linearly interpolate between every two adjacent points before the analysis. In addition, the core of this projection approach is Eq. (2), which describes the relation between the direct-forcing-response ε and the radiative forcing data. Since only the long-term trends of these two quantities enter Eq. (2), whether the radiative forcing data themselves have memory or not does not have a large impact on the approach.
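
The linear interpolation of coarser forcing data onto a monthly grid can be sketched as below. The function name `to_monthly` and the convention of expressing time in decimal years are assumptions of this example, and the numbers in the usage lines are placeholders rather than forcing values from the study.

```python
import numpy as np

def to_monthly(years, values):
    """Linearly interpolate values given at `years` onto a monthly time axis."""
    years = np.asarray(years, dtype=float)
    values = np.asarray(values, dtype=float)
    n_months = int(round((years[-1] - years[0]) * 12)) + 1
    t_monthly = years[0] + np.arange(n_months) / 12.0   # decimal years
    return t_monthly, np.interp(t_monthly, years, values)

# Usage with placeholder values given every 10 years.
t_m, f_m = to_monthly([2020, 2030, 2040], [2.0, 2.5, 3.5])
```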

Data availability

Data related to this paper can be downloaded from the following sources. The HadCRUT5.0.1 monthly global mean surface temperature anomalies are available from the Met Office Hadley Centre, https://www.metoffice.gov.uk/hadobs/hadcrut5/data/current/download.html. The historical effective radiative forcings (ERF) are obtained from the KNMI Climate Explorer, https://climexp.knmi.nl/selectindex.cgi?id=someone@someone. The CMIP5 and CMIP6 model outputs are downloaded from the Earth System Grid Federation (ESGF) at the addresses https://esgf-node.llnl.gov/search/cmip5/ and https://esgf-node.llnl.gov/search/cmip6/, respectively. The forcing data under SSP2-4.5 by the MESSAGE-GLOBIOM model are downloaded from the SSP Public Database version 2.0, International Institute for Applied Systems Analysis, https://secure.iiasa.ac.at/web-apps/ene/SspDb/. Derived data supporting the research in this work are available from the first author on reasonable request.

Code availability

The code that supports the findings of this study is available from the first author on request.

References

IPCC. Climate Change 2021: The Physical Science Basis. Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (eds Masson-Delmotte, V. et al.) (Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, In Press, 2021).

King, A. D., Karoly, D. J. & Henley, B. J. Australian climate extremes at 1.5 °C and 2 °C of global warming. Nat. Clim. Change 7, 412–416 (2017).

Seneviratne, S. I., Donat, M. G., Pitman, A. J., Knutti, R. & Wilby, R. L. Allowable CO2 emissions based on regional and impact-related climate targets. Nature 529, 477–483 (2016).

Drouet, L. et al. Net zero-emission pathways reduce the physical and economic risks of climate change. Nat. Clim. Change 11, 1070–1076 (2021).

Höhne, N. et al. Wave of net zero emission targets opens window to meeting the Paris Agreement. Nat. Clim. Change 11, 820–822 (2021).

Hansen, J. et al. Earth’s energy imbalance: confirmation and implications. Science 308, 1431–1435 (2005).

von Schuckmann, K. et al. An imperative to monitor Earth’s energy imbalance. Nat. Clim. Change 6, 138–144 (2016).

Li, C., von Storch, J.-S. & Marotzke, J. Deep-ocean heat uptake and equilibrium climate response. Clim. Dyn. 40, 1071–1086 (2013).

Meehl, G. A. et al. Context for interpreting equilibrium climate sensitivity and transient climate response from the CMIP6 Earth system models. Sci. Adv. 6, eaba1981 (2020).

Zelinka, M. D. et al. Causes of higher climate sensitivity in CMIP6 Models. Geophys. Res. Lett. 47, e2019GL085782 (2020).

Gregory, J. M. et al. A new method for diagnosing radiative forcing and climate sensitivity. Geophys. Res. Lett. 31, L03205 (2004).

Boer, G. J. & Yu, B. Climate sensitivity and climate state. Clim. Dyn. 21, 167–176 (2003).

Larson, E. J. L. & Portmann, R. W. A temporal kernel method to compute effective radiative forcing in CMIP5 transient simulations. J. Climate 29, 1497–1509 (2016).

Franzke, C. L. E. et al. The structure of climate variability across scales. Rev. Geophys. 58, e2019RG000657 (2020).

Hurst, H. E. Long-term storage capacity of reservoirs. Trans. Am. Soc. Civil Eng. 116, 770–808 (1951).

Chen, X., Lin, G. X. & Fu, Z. Long-range correlations in daily relative humidity fluctuations: a new index to characterize the climate regions over China. Geophys. Res. Lett. 34, L07804 (2007).

Dangendorf, S. et al. Evidence for long-term memory in sea level. Geophys. Res. Lett. 41, 5530–5537 (2014).

Jiang, L., Li, N. & Zhao, X. Scaling behaviors of precipitation over China. Theor. Appl. Climatol. 128, 63–70 (2017).

Koscielny-Bunde, E. et al. Indication of a universal persistence law governing atmospheric variability. Phys. Rev. Lett. 81, 729–732 (1998).

Yuan, N., Fu, Z. & Liu, S. Long-term memory in climate variability: a new look based on fractional integral techniques. J. Geophys. Res. 118, 12962–12969 (2013).

Peng, C.-K. et al. Long-range correlations in nucleotide sequences. Nature 356, 168–170 (1992).

Peng, C.-K. et al. Mosaic organization of DNA nucleotides. Phys. Rev. E 49, 1685–1689 (1994).

Ludescher, J., Bunde, A., Franzke, C. L. E. & Schellnhuber, H. J. Long-term persistence enhances uncertainty about anthropogenic warming of Antarctica. Clim. Dyn. 46, 263–271 (2016).

Fraedrich, K. & Blender, R. Scaling of atmosphere and ocean temperature correlations in observations and climate models. Phys. Rev. Lett. 90, 108501 (2003).

Vyushin, D. I. & Kushner, P. J. Power-law and long-memory characteristics of the atmospheric general circulation. J. Climate 22, 2890–2904 (2009).

Fredriksen, H.-B. & Rypdal, M. Long-range persistence in global surface temperatures explained by linear multibox energy balance models. J. Climate 30, 7157–7168 (2017).

Qiu, M., Yuan, N. & Yuan, S. Understanding long-term memory in global mean temperature: An attribution study based on model simulations. Atmospheric Sci. Lett. 13, 485–492 (2020).

Schwartz, S. E. Reply to comments by G. Foster et al., R. Knutti et al., and N. Scafetta on “Heat capacity, time constant, and sensitivity of Earth’s climate system”. J. Geophys. Res. 113, D15105 (2008).

Held, I. M. et al. Probing the fast and slow components of global warming by returning abruptly to preindustrial forcing. J. Climate 23, 2418–2427 (2010).

Hansen, J., Sato, M., Kharecha, P. & von Schuckmann, K. Earth’s energy imbalance and implications. Atmos. Chem. Phys. 11, 13421–13449 (2011).

Rypdal, K. Global temperature response to radiative forcing: solar cycle versus volcanic eruptions. J. Geophys. Res. 117, D06115 (2012).

Geoffroy, O. et al. Transient climate response in a two-layer energy-balance model. Part I: analytical solution and parameter calibration using CMIP5 AOGCM experiments. J. Climate 26, 1841–1857 (2013).

van Hateren, J. H. A fractal climate response function can simulate global average temperature trends of the modern era and the past millennium. Clim. Dyn. 40, 2651–2670 (2013).

Rypdal, M. & Rypdal, K. Long-memory effects in linear response models of earth’s temperature and implications for future global warming. J. Climate 27, 5240–5258 (2014).

Procyk, R., Lovejoy, S. & Hébert, R. The fractional energy balance equation for climate projections through 2100. Earth Syst. Dynam. 13, 81–107 (2022).

Lovejoy, S., del Rio Amador, L. & Hébert, R. The ScaLIng Macroweather Model (SLIMM): using scaling to forecast global-scale macroweather from months to decades. Earth Syst. Dynam. 6, 637–658 (2015).

Rypdal, K. Global warming projections derived from an observation-based minimal model. Earth Syst. Dynam. 7, 51–70 (2016).

Hébert, R. & Lovejoy, S. Interactive comment on “Global warming projections derived from an observation-based minimal model” by K. Rypdal. Earth Syst. Dynam. Discuss. 6, C944–C953 (2015).

Hébert, R., Lovejoy, S. & Tremblay, B. An observation-based scaling model for climate sensitivity estimates and global projections to 2100. Clim. Dyn. 56, 1105–1129 (2021).

Del Rio Amador, L. & Lovejoy, S. Using regional scaling for temperature forecasts with the Stochastic Seasonal to Interannual Prediction System (StocSIPS). Clim. Dyn. 57, 727–756 (2021).

Del Rio Amador, L. & Lovejoy, S. Long-range forecasting as a past value problem: untangling correlations and causality with scaling. Geophys. Res. Lett. 48, e2020GL092147 (2021).

Hasselmann, K. Stochastic climate models Part I. Theory. Tellus 28, 473–485 (1976).

Yuan, N., Fu, Z. & Liu, S. Extracting climate memory using Fractional Integrated Statistical Model: a new perspective on climate prediction. Sci. Rep. 4, 6577 (2014).

Nian, D. et al. Identifying the sources of seasonal predictability based on climate memory analysis and variance decomposition. Clim. Dyn. 55, 3239–3252 (2020).

Yuan, N., Xiong, F., Xoplaki, E., He, W. & Luterbacher, J. A new approach to correct the overestimated persistence in tree-ring width based precipitation reconstructions. Clim. Dyn. 58, 2681–2692 (2022).

Kantelhardt, J. W., Koscielny-Bunde, E., Rego, H. H. A., Havlin, S. & Bunde, A. Detecting long-range correlations with detrended fluctuation analysis. Physica A 295, 441–454 (2001).

Myhre, G. et al. Anthropogenic and Natural Radiative Forcing. In: Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (eds Stocker, T. F. et al.). (Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, 2013).

Dessler, A. E. & Forster, P. M. An estimate of equilibrium climate sensitivity from interannual variability. J. Geophys. Res: Atmospheres 123, 8634–8645 (2018).

Huang, N. E. & Wu, Z. A review on Hilbert-Huang transform: method and its applications to geophysical studies. Rev. Geophys. 46, RG2006 (2008).

Riahi, K. et al. The shared socioeconomic pathways and their energy, land use, and greenhouse gas emissions implications: an overview. Global Environ. Change 42, 153–168 (2017).

O’Neill, B. C. et al. The scenario model intercomparison project (ScenarioMIP) for CMIP6. Geosci. Model Dev. 9, 3461–3482 (2016).

Boer, G. J. et al. The decadal climate prediction project (DCPP) contribution to CMIP6. Geosci. Model Dev. 9, 3751–3777 (2016).

Gillett, N. P. et al. The detection and attribution model intercomparison project (DAMIP v1.0) contribution to CMIP6. Geosci. Model Dev. 9, 3685–3697 (2016).

Fricko, O. et al. The marker quantification of the shared socioeconomic pathway 2: a middle-of-the-road scenario for the 21st century. Global Environ. Change 42, 251–267 (2017).

Hasselmann, K. Multi-pattern fingerprint method for detection and attribution of climate change. Clim. Dyn. 13, 601–611 (1997).

Allen, M. R. & Tett, S. F. B. Checking for model consistency in optimal fingerprinting. Clim. Dyn. 15, 419–434 (1999).

Cummins, D. P., Stephenson, D. B. & Stott, P. A. A new energy-balance approach to linear filtering for estimating effective radiative forcing from temperature time series. Adv. Stat. Clim. Meteorol. Oceanogr. 6, 91–102 (2020).

Bashan, A., Bartsch, R., Kantelhardt, J. W. & Havlin, S. Comparison of detrended methods for fluctuation analysis. Physica A 387, 5080–5090 (2008).

Ludescher, J., Bunde, A., Büntgen, U. & Schellnhuber, H. J. Setting the tree-ring record straight. Clim. Dyn. 55, 3017–3024 (2020).

Wu, Z., Huang, N. E., Long, S. R. & Peng, C.-K. On the trend, detrending, and variability of nonlinear and nonstationary time series. Proc. Natl Acad. Sci. USA 104, 14889–14894 (2007).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 42175068). C.F. was supported by the Institute for Basic Science (IBS), Republic of Korea, under IBS-R028-D1 and Pusan National University grant 2021. N.Y. also acknowledges support from the Guangdong Basic and Applied Basic Research Foundation (2022A1515011569) and the Fundamental Research Funds for the Central Universities, Sun Yat-sen University (22lgqb10). F.X. also acknowledges support from the Special Project for Innovation and development of the China Meteorological Administration (CXFZ2021J046).

Author information

Contributions

N.Y. and C.F. designed the study. N.Y. and F.X. performed the calculations. N.Y., C.F., and W.D. analyzed the results. All authors participated in discussions of the results during the study and contributed to interpreting the results. N.Y. wrote the main manuscript; C.F., W.D., Z.F., and F.X. revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yuan, N., Franzke, C.L.E., Xiong, F. et al. The impact of long-term memory on the climate response to greenhouse gas emissions. npj Clim Atmos Sci 5, 70 (2022). https://doi.org/10.1038/s41612-022-00298-8
