Abstract

Early warning recommendation is crucial for tracking learning behavior and is a significant issue in interactive learning environments. However, a learning process supported by an interactive learning environment does not always achieve its expected goals; it can lead to inefficient or ineffective learning behavior and negative emotions, and many learners consequently fail assessments. To address this problem, this study formulates relevant test problems for interpretable early warning recommendations based on massive learning behavior instances and the potential relationships among them. We design an applicable learning analysis model, namely a deep-neural network based on a knowledge graph of learning behavior, and verify its feasibility and reliability through extensive experiments and data analysis. Our results indicate that the interactive learning process must be matched with multi-factor analysis at different temporal sequences to determine key temporal sequences or intervals. This analysis is constrained by the classification of learning contents and interpretable concepts, which in turn provide an effective reference for subsequent learning content with similar concept classes and knowledge structures. Our approach recommends effective learning behavior at appropriate temporal sequences as early as possible, or constructs feasible intervention measures to improve learners’ participation. This research deepens and extends work on early warning by proposing a feasible new method and obtaining key conclusions of vital practical significance.


Introduction

The broad adoption of online interactive learning environments has revolutionized traditional learning approaches and catalyzed new learning behaviors. Such environments are important platforms for current and future learning methods and constitute a crucial trend in educational reform and development (Oppermann et al., 2021). Interactive learning environments make full use of online and data technologies, eliminating the constraints of time and space (Xia, 2020a). Furthermore, they provide more reliable and holistic modes of cooperation and means of communication, describe the data structures and relationships of learning behavior, and enable early warning and intervention mechanisms (Tian et al., 2021). Mining valuable information and interpretable semantics from massive learning behavior instances is therefore pivotal for the interactive learning process (Song et al., 2021; Xia, 2021a).

Interactive learning environments foster the improvement of online education (Huang et al., 2021), effectively expanding learning contents and deepening knowledge to enhance practical innovation (Aguilar et al., 2021). The integration of various software and hardware resources contributes to the integration of data and processes across online and offline learning. This improves the efficiency of the learning process and promotes personalized learning behaviors, ultimately enhancing learning effectiveness (Xia, 2021b). However, a learning process supported by an interactive learning environment may also yield large numbers of inefficient or ineffective learning behavior patterns, which result in negative emotions and can even cause considerable failure rates in assessments (Silvola et al., 2021).

These problems can mainly be attributed to three causes: (1) The interactive learning environment fails to meet learners’ demands, leading to an exponential increase in learners’ learning behavior patterns and consequently to confusion. Accurately mining applicable behavior patterns and resources has thus become the main research direction for current interactive learning environments. (2) No temporal sequence exists among learning contents, and the correlations between different learning contents are unclear because interpretable descriptions of interactive learning environments are lacking; this makes it difficult to provide guidance on, and recommendations for, effective learning behavior. (3) Constraints on learners’ cognitive ability and knowledge structure, relative to the massive information in interactive learning environments, make it difficult to construct effective learning behavior patterns in a short time. Effectiveness-oriented learning behavior patterns should be made properly interpretable (Xia & Qi, 2022), which is an initial requirement for the construction of learning behavior. Interactive learning environments should therefore provide interpretable early warning and feedback (Jovanović et al., 2021).

Since current research has yet to yield key, effective results, this study analyzes and designs new methods for interpretable early warning recommendations over large-scale learning behavior instances, incorporating complete temporal sequences of the learning process (Cerezo et al., 2020). Our aim is to enhance learners’ ability to self-organize and construct feasible learning behavior patterns, thereby making the learning process both more adaptive and more interpretable, which contributes to innovation and practicality. We analyze and design an interpretable early warning recommendation mechanism for the interactive learning process and, based on existing massive learning behavior instances, explore feasible methods to improve its availability and reliability. First, we mine sufficient learning behavior instances, construct interpretable early warning requirements, and formulate the corresponding problems. Second, we construct interpretable knowledge graphs between temporal sequences and key features, design a deep-neural network model based on these graphs, train it fully on the data, optimize the corresponding parameters, and track the entire learning process. Third, we conduct comparative experiments against related approximate methods, determine a feasible interpretable early warning recommendation mechanism, and discuss the underlying laws of the interactive learning process.

Related work

Interpretability requirements underpin the construction and correlation of learning behavior. It is essential to realize or improve the interpretability of interactive learning environments and to attain both self-interpretation and interactive interpretation (Li et al., 2021). A key requirement is to predict or provide feedback on both historical and potential data. Relevant research (Dolatsara et al., 2022; Jose & Shetty, 2022; Novello et al., 2023; El Zini & Awad, 2022) mainly focuses on the following aspects:

Interpretable methods

Interpretable methods comprise two dimensions: interpretable models and the associated interpretation methods. Interpretable models are divided into two branches: model-agnostic and model-specific. Model-agnostic methods can explain all types of models and are highly adaptable; they can be deployed as a business layer to implement interpretable scheduling. Model-specific methods explain particular types of models, and the relevant methods depend on the business and analyzable characteristics of each model. The associated interpretation methods relate directly to the visibility of interpretation and include public interpretation and private interpretation. Public interpretation explains the overall logic and process mode, and its content applies to any learning behavior instance. Private interpretation provides a specific interpretation for a single learning behavior instance; it constitutes the decision analysis and feedback of the intelligent learning mode (Pachamanova et al., 2021).

Model-specific tools are easier to apply, whereas model-agnostic methods have stronger interpretation abilities covering features, relationships, and scenes. They can even integrate different interactive learning environments, which makes them a crucial direction in interpretation.

Interpretable tools

Interpretable tools are applications of interpretable methods, each with relatively specific modes and fields of application (Mihaljević et al., 2021). The interpretability of a tool depends on the analysis and design of its intelligent algorithms and models, and problem-oriented functions can also be realized with interpretable intelligent tools. These tools are under continuous development and widely used; common examples include LIME, SHAP, PDP, InterpretML, Alibi, and H2O, whose functions are implemented in Python and R. Their visibility likewise includes public interpretation and private interpretation.

These tools differ in interpretation approach, applicable data types, stability, and operability, and their principles and interface standards are not unified. Some tools are complex to use, and the interpretation process involves characteristics and relationships specific to each tool, placing higher demands on researchers. Because of the massive data involved, data analysis remains complicated, making it unrealistic to apply these tools directly.

Interpretable recommendation mechanism

The recommendation mechanism provides users with personalized services, effectively reducing information overload and inapplicable information. A key principle is to design the recommendation algorithm based on deep learning, which improves accuracy and reliability. Accurate recommendation services can motivate learners, improve their enthusiasm and autonomy, and make the process behind the recommended content interpretable (Jya & Jcabc, 2021).

An interpretable recommendation mechanism establishes an optimal decision-feedback mechanism for the learning process, enhancing credibility and reliability. It stimulates interaction and cooperation among learners, improves their satisfaction with the recommended content, and guides and helps learners construct appropriate learning behaviors as early as possible. Such a recommendation mechanism significantly improves the feasibility and dependability of the early warning mechanism.

Based on these three aspects of interpretability, the early warning mechanism should be built on a knowledge graph of learning behavior and integrate an interpretable recommendation mechanism, so as to realize feasible early warning recommendation and feedback and to comprehend the learning process. It provides interpretable analysis and prediction, improving the flexibility and adaptability of learning behavior.

Methods

To establish an interpretable early warning recommendation mechanism for interactive learning environments, it is essential to construct an interpretable knowledge graph that reflects the relationships between learning behavior scenarios and key features (Nadaf et al., 2021). Based on this graph, a deep-neural network model can be designed to process and interpret massive sets of learning behavior data.

Related definition

(1) Knowledge graph

A knowledge graph (KG) is a semantic network composed of entities and relationships. It is a directed heterogeneous network in which entities are represented as nodes and relationships as directed edges. A knowledge graph is typically defined formally as \(\mathrm{KG} = \left\{ (h,r,t) \mid h,t \in E,\ r \in R \right\}\), where h and t denote the source (head) and target (tail) nodes, respectively; r denotes the relationship between them; E is the set of entities; and R is the set of relationships. \(\mathrm{Learner} = \{ l_t \}_{t=1}^{M}\) denotes the set of learners and \(\mathrm{LC} = \{ c_t \}_{t=1}^{N}\) the set of learning contents, where M and N are the sizes of these two sets. An interaction between a learner and a learning content is represented as the triple \(IR = (l, \mathrm{Interaction}, c)\). When \(\mathrm{LC} \subseteq E\), each learning content is matched with an entity, and the resulting interaction data are integrated into the knowledge graph. That is, with \(E' = E \cup \mathrm{Learner}\) and \(R' = R \cup \{\mathrm{Interaction}\}\), the extended knowledge graph is \(\mathrm{KG}' = \left\{ (h,r,t) \mid h,t \in E',\ r \in R' \right\}\).
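The extension from KG to KG′ can be illustrated with a minimal sketch. It assumes that triples are stored as a Python set, that E and R are taken to be the entities and relations appearing in at least one triple, and that interactions arrive as (learner, content) pairs; the names `Triple` and `extend_kg` are hypothetical and not part of the authors' implementation.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """A knowledge-graph triple (h, r, t)."""
    head: str
    relation: str
    tail: str


def extend_kg(kg, learners, interactions):
    """Extend KG with learner entities and Interaction triples (illustrative sketch).

    kg: set of Triple; E and R are assumed to be the entities/relations that
        appear in some triple of kg.
    learners: iterable of learner ids (the set Learner).
    interactions: iterable of (learner, content) pairs, each giving
        IR = (l, Interaction, c).
    Returns (E', R', KG').
    """
    entities = {t.head for t in kg} | {t.tail for t in kg}
    relations = {t.relation for t in kg}
    learner_set = set(learners)

    new_triples = set()
    for learner, content in interactions:
        # Keep only interactions whose learning content is already an entity (LC ⊆ E)
        if learner in learner_set and content in entities:
            new_triples.add(Triple(learner, "Interaction", content))

    entities_ext = entities | learner_set        # E' = E ∪ Learner
    relations_ext = relations | {"Interaction"}  # R' = R ∪ {Interaction}
    kg_ext = set(kg) | new_triples               # every triple now lies in E' × R' × E'
    return entities_ext, relations_ext, kg_ext
```

Interactions that point to a content not yet present in the graph are dropped in this sketch; a real pipeline might instead add such contents as new entities.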

(2) Vector decomposition of deep-neural networks

Let \(f(x) \in \mathbb{R}^K\) be the K-dimensional output of a deep-neural network for input x, and let \(f_k(x)\) denote the probability that sample x belongs to class k; for an input whose true label is c, \(f_k(x)\) with \(k \ne c\) is therefore the probability that x is misclassified as k. The network can be written as \(f(x) = h(g(x))\), where \(a = g(x) \in \mathbb{R}^D\) is an intermediate result (a point in the D-dimensional feature space) and h(a) is the top layer of the network. In designing the vector decomposition algorithm for convolutional neural networks (CNNs), the CNN output is computed from a = g(x), and h is a simple linear fitting layer. Specifically, \(h(a) = W^{(h)}a + b^{(h)}\), and the score of class k is the linear combination \(h_k(a) = w_k^{T}a + b_k\), where \(w_k\) is the k-th row of \(W^{(h)}\).

Suppose there exists a series of vectors \(q_{c_i} \in \mathbb{R}^D\), where each \(c_i\) denotes a concept feature and the corresponding vector \(q_{c_i}\) explains that concept class. It is then possible to decompose \(w_k\) through vector decomposition into several parts, \(w_k \approx s_{c_1}q_{c_1} + s_{c_2}q_{c_2} + \cdots + s_{c_n}q_{c_n}\), where the vectors \(q_{c_i}\) serve as the orthogonal basis of the concept features.

If the vectors \(q_{c_i}\) are stacked as the columns of a matrix C and the decomposition of \(w_k\) is treated as a least-squares problem, we obtain \(w_k = s_{c_1}q_{c_1} + s_{c_2}q_{c_2} + \cdots + s_{c_n}q_{c_n} + r = Cs + r\), where \(s = (s_{c_1}, \ldots, s_{c_n})^{T}\) and r is a residual vector. The coefficients that minimize \(\lVert r \rVert\) are given by the optimal solution \(s = C^{+}w_k\), where \(C^{+}\) is the (Moore–Penrose) pseudo-inverse of C.
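The least-squares step \(s = C^{+}w_k\) can be sketched with NumPy as follows. The concept vectors and weight vector here are random placeholders rather than values from the study, and `decompose_weight` is a hypothetical helper name.

```python
import numpy as np


def decompose_weight(w_k, C):
    """Decompose a class weight vector w_k onto concept basis vectors.

    C: array of shape (D, n) whose columns are the concept vectors q_{c_i}.
    Returns (s, r) with w_k = C @ s + r, where s minimizes ||r|| (least squares).
    """
    s = np.linalg.pinv(C) @ w_k  # s = C^+ w_k via the Moore-Penrose pseudo-inverse
    r = w_k - C @ s              # residual: the part of w_k the concepts cannot explain
    return s, r
```

A useful sanity check is that the least-squares residual is orthogonal to every concept vector (\(C^{T}r \approx 0\)), and that the pseudo-inverse solution agrees with `np.linalg.lstsq` when C has full column rank.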

(3) Concept features

Although the concept labels available to the deep-neural network are rich, they may not cover all the features extracted by the convolutional neural network (CNN). Therefore, this study adds the residual vector \(r = w_k - C_k s\) to the candidate basis \(C_k\), yielding an augmented basis denoted \(C_k^{\ast}\). Because \(C_k^{\ast}\) includes the residual, it captures all the features mined by the CNN, ensuring a more comprehensive representation of the data.

The score for class k in the last layer of the CNN is then \(h_k(a) = w_k^{T}a + b_k = (C_k s + r)^{T}a + b_k = s_1 q_{c_1}^{T}a + s_2 q_{c_2}^{T}a + \cdots + s_n q_{c_n}^{T}a + r^{T}a + b_k\), where \(s_i q_{c_i}^{T}a\) is the contribution of concept label \(c_i\) and \(r^{T}a\) is the contribution of the residual vector r associated with class k.
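The score decomposition above can be checked numerically. This is a sketch, assuming the activation a, basis \(C_k\), coefficients s, residual r, and bias \(b_k\) are already available as NumPy arrays; `concept_contributions` is a hypothetical name.

```python
import numpy as np


def concept_contributions(a, C, s, r, b_k):
    """Split the class-k score h_k(a) into per-concept terms, residual term, and bias.

    a: activation vector g(x), shape (D,).
    C: concept basis C_k, shape (D, n); s: coefficients, shape (n,);
    r: residual vector, shape (D,); b_k: scalar bias.
    Returns (per_concept, residual_term, score) with per_concept[i] = s_i * q_{c_i}^T a.
    """
    per_concept = s * (C.T @ a)   # s_i q_{c_i}^T a for every concept i
    residual_term = float(r @ a)  # r^T a
    score = float(per_concept.sum() + residual_term + b_k)
    return per_concept, residual_term, score
```

Because \(w_k = C_k s + r\) holds exactly, the reconstructed score always equals \(w_k^{T}a + b_k\); the per-concept terms show how much of that score each concept explains.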

Algorithm design

The deep-neural network relies on learning behavior feature vectors and knowledge graphs to describe interactive learning processes. During algorithm design, feature vectors are generated from the training sets of learning behavior, while the knowledge graphs help explain the multi-class predictions on the test sets. Since traditional deep-neural networks require datasets with different labels, the dataset must contain at least two categories of labels: classes and features. In this study, the five features with the highest average contribution were selected to construct the knowledge graph, and both features and classes were defined as concepts.
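Selecting the five features with the highest average contribution can be sketched as below. It assumes the per-sample contributions (for example, the \(s_i q_{c_i}^{T}a\) terms) have been collected into a matrix of shape (samples, features); the function name and feature names are hypothetical.

```python
import numpy as np


def top_features_by_mean_contribution(contributions, names, k=5):
    """Return the k feature names with the highest mean contribution, highest first.

    contributions: array of shape (num_samples, num_features), assumed to hold
        per-sample concept contributions collected over a training set.
    names: list of feature names of length num_features.
    """
    mean_contrib = contributions.mean(axis=0)      # average contribution per feature
    order = np.argsort(mean_contrib)[::-1][:k]     # indices sorted by descending mean
    return [names[i] for i in order]
```

Whether to rank by the signed mean (as here) or by the mean absolute contribution is a design choice; the text only states "highest average contribution".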

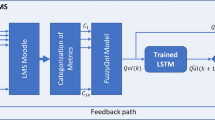

The core steps of the deep-neural network based on interpretable vectors and knowledge graphs are described by the DNNA algorithm, which can be divided into three main parts: visualization of the deep-neural network based on decomposed interpretable vectors, construction of the knowledge graph, and testing of the knowledge graph, as shown in Fig. 1.

Algorithm DNNA

Step 1. Learning behavior sequence recognition: The temporal sequence of learning behavior is fed into a convolutional neural network (CNN) to obtain the weight vector \(w_k\), score1, and confidence1.

Step 2. Interpretable weight vector decomposition: The weight vector obtained from CNN is decomposed into several feature vectors, depending on the training set.

Step 3. Output feature visualization: To verify the accuracy of the vector decomposition, the results of Step 2 are visualized by activating the last layer of the CNN and performing back-propagation.

Step 4. Interpret “features-temporal sequence”: The importance of features is determined by their average contribution to the vector decomposition, and the top 5 features are dynamically interpreted by tracking the temporal sequences of learning behavior.

Step 5. Calculate the recognition score of temporal sequence: The interpretable “feature-temporal sequence” is used as the input of the CNN, and score2 and confidence2 are calculated again to determine which part of the CNN’s identification of learning behavior has been optimized. Accuracy, recall, precision, F1 score, and related indexes are also calculated.

Step 6. Evaluate the reliability of interpretable vector decomposition: score1 and score2, as well as confidence1 and confidence2, are analyzed and compared to obtain quantitative results of interpretable vector decomposition.

Step 7. Construct the knowledge graph of temporal sequence: A knowledge graph is constructed based on the interpretative relationships among feature vectors obtained from Step 2.

Step 8. Calculate the similarity of temporal sequence: The similarity of temporal sequences is calculated based on the Jaccard coefficient.

Step 9. Mine the discriminant features: Based on Step 2, interpretable results are extended to mine discriminant features of vector decomposition.

Step 10. Test the learner’s credibility: The learner’s credibility is tested on the discriminant features obtained in Step 9, and the mean squared error (MSE) is calculated based on the term frequency-inverse document frequency (TF-IDF) of the discriminant features to quantify the learner’s reliability.
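Step 8 computes the similarity of temporal sequences with the Jaccard coefficient \(J(A,B) = |A \cap B| / |A \cup B|\). The sketch below assumes each temporal sequence (for example, one week) is represented as a set of observed behavior features; the helper names are hypothetical, and treating two empty sequences as identical is a convention chosen here, not one stated in the text.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard coefficient |A ∩ B| / |A ∪ B| between two sets of behavior features."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        return 1.0  # assumed convention: two empty sequences count as identical
    return len(a & b) / len(union)


def pairwise_sequence_similarity(sequences):
    """Return {(i, j): J(S_i, S_j)} for every pair i < j of temporal sequences."""
    return {(i, j): jaccard(sequences[i], sequences[j])
            for i, j in combinations(range(len(sequences)), 2)}
```

For example, weeks containing {quiz, upload, resource} and {quiz, resource} share two of three distinct features, giving a similarity of 2/3.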

Data processing and problem description

This study aims to develop an interpretable early warning recommendation mechanism for learning behavior using data from an AI-enabled online learning platform. The dataset is very large (about 1.3 PB) but has two main problems: data sparsity and uncertainty in learning behavior.

To address data sparsity, we propose introducing more characteristics into the recommendation process (Sailer et al., 2021), as traditional assessment results alone cannot provide a strong basis. Additionally, knowledge graphs of the relevant learning contents should be analyzed and the feasible requirements integrated into the early warning recommendation mechanism, improving its effectiveness in identifying and addressing potential learning difficulties.

The uncertainty in learning behavior makes it challenging to model learners and to recommend personalized content effectively. To overcome this challenge, we propose modeling the interpretable early warning mechanism on temporal sequences of data and associating learning behavior features to represent dynamic and continuous changes (Eberle & Hobrecht, 2021). This approach captures the complexity of learning behavior more comprehensively and can improve the accuracy and effectiveness of the early warning mechanism.

These two problems must be resolved before interpretability of learning behavior can be achieved: it is difficult to obtain effective recommendations when features are scarce or relationships are fuzzy, which in turn makes interpretability hard to realize. Effective features and more comprehensive relationships are required to build an adequate knowledge graph that captures the semantics of learning content. Better capturing these semantics is key to enhancing the recommendation effect and can also provide reasonable early warning (Xia, 2020b). Therefore, creating an interpretable early warning recommendation mechanism requires modeling the temporal sequence of learning behavior and then mining its critical paths.

Building on this analysis and design of the datasets, we further mine applicable data and relationships and achieve interpretable early warning recommendation driven by learning content (Er et al., 2021). We construct a directory of learning contents from the dataset, define interpretable concepts and knowledge association rules, and form an entity set comprising learning contents and concepts, from which the knowledge graph is built. After data screening, merging, and association, we obtained 97 learning contents involving 28,707 learners and 1,204 concepts. The association rules fall into three categories: learning content <inclusion> concept, concept m <order> concept n, and concept m <level> concept n. Two key entities are needed in the dataset: learners and learning contents. Their key descriptions are provided in Tables 1 and 2.

Tables 1 and 2 list some common attributes of the learning contents. The attributes in Table 1 describe the distribution of learners and learning contents, and the corresponding data are specific instances relating directly to learners. Table 2 describes the fundamental attributes of the learning contents. Some features become statistically interpretable only after learners produce learning behavior instances. In this way, correlations can be created between learners and learning contents, enabling more descriptive features to be derived from learners’ participation.

Based on Tables 1 and 2, the entity relationships and knowledge graphs were analyzed and counted; the results are shown in Table 3. The knowledge graphs consist of numerous triples, but the types of entities and relationships are fixed.

Figure 2 displays a knowledge graph comprising learning contents and related concepts. In the graph, circles represent learning contents, rectangles represent concepts, and directed arcs represent the relationships between them. Different learning contents establish interpretable relationships with the concepts they represent. There are three main types of relationships: “contains,” “isUpperTo,” and “isPrerequisiteTo.” “A isUpperTo B” means that A is at a higher level than B, and “A isPrerequisiteTo B” means that A is a prerequisite for B. Relationships between learning contents and concepts are mainly of the “contains” type, whereas all three types may occur between concepts. Although there is no direct relationship among learning contents, potential correlations among them can arise indirectly through related concepts.

The circle represents the learning content, the rectangle represents the concept, and the directed arc represents the relationship. Different learning contents form interpretable relationships with the concepts. There are three types of relationships: “contains,” “isUpperTo,” and “isPrerequisiteTo.”
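The indirect correlation between learning contents can be illustrated in its simplest form: two contents are linked when they "contain" at least one shared concept. This sketch assumes triples are (head, relation, tail) string tuples and that learning contents are exactly the heads of "contains" triples; it ignores longer paths through "isUpperTo" or "isPrerequisiteTo", and the function name is hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def indirectly_related_contents(triples):
    """Find pairs of learning contents linked through at least one shared concept.

    triples: iterable of (head, relation, tail) tuples.
    Returns {(lc_a, lc_b): set_of_shared_concepts} with lc_a < lc_b.
    """
    concepts_of = defaultdict(set)
    for head, rel, tail in triples:
        if rel == "contains":          # learning content -> concept edges
            concepts_of[head].add(tail)

    related = {}
    for lc_a, lc_b in combinations(sorted(concepts_of), 2):
        shared = concepts_of[lc_a] & concepts_of[lc_b]
        if shared:                     # indirect link via common concepts
            related[(lc_a, lc_b)] = shared
    return related
```

Extending this to multi-hop links would mean following concept-to-concept edges before intersecting the concept sets.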

Figure 2 illustrates the relatively complex interpretable context of the interactive learning environment, which requires extensive data analysis. The dataset involves a large volume of learners and learning contents, which leads to complex relationships and conditions among entities, concepts, and knowledge graphs (Xia & Qi, 2023). Although these data provide a more substantial basis for the interpretability of early warning recommendations, they also hinder accurate correlation. Based on the statistical results in Tables 1–3 and the complex structure in Fig. 2, we propose key test questions for interpretable early warning recommendations, as laid out in Fig. 3.

Q1: conceptual correlation and classification of learning contents;

Q2: according to the classification of different learning contents, the correlation of the learning behavior paths of learners who pass the assessment;

Q3: according to the classification of different learning contents, the key early warning sequences of learners who fail to pass the assessment;

Q4: according to the classification of different learning contents, the significance of the knowledge graph of the learners who pass the assessment;

Q5: according to the classification of different learning contents, the significance of the knowledge graph of the learners who fail to pass the assessment;

Q6: the interpretable early warning recommendation based on the fusion of Q2 and Q4;

Q7: the interpretable early warning recommendation based on the fusion of Q3 and Q5.

Experiment

The experiment tests Algorithm DNNA on the full dataset of the interactive learning environment. To test its feasibility and reliability, several indexes were selected: (1) Area under the curve (AUC), used to evaluate the ranking quality of the algorithms, calculated as \(AUC = \frac{1}{n_{pos}\, n_{neg}} \sum_{i=1}^{n_{pos}} \sum_{j=1}^{n_{neg}} \mathbb{I}\left( y_{pos}^{(i)} > y_{neg}^{(j)} \right)\), where \(y_{pos}\) and \(y_{neg}\) are the predicted values for positive and negative samples, respectively, \(n_{pos}\) and \(n_{neg}\) are the numbers of positive and negative samples, and \(\mathbb{I}(\cdot)\) is the indicator function; (2) Relative improvement (RI), used to measure the improvement of DNNA relative to each comparison algorithm, calculated as \(RI = \left( \frac{AUC_{DNNA} - \alpha}{AUC_{otherModel} - \alpha} - 1 \right) \times 100\%\), where α = 0.5 is the AUC of a random classifier; (3) F1, used to measure the performance of multi-class prediction, calculated as \(F_1 = 2 \times \frac{Recall \times Precision}{Recall + Precision}\); (4) Multi-task learning gain (MTL-Gain), used to measure the benefit of multi-feature learning over single-feature learning, calculated as \(\text{MTL-Gain} = M_{MTL} - M_{single}\), where \(M_{MTL}\) and \(M_{single}\) are the index values for multi-feature and single-feature learning, respectively. We calculate these indexes by training on a large number of learning behavior instances to test the effectiveness of DNNA. At the same time, by comparing DNNA with approximate methods, we further test its reliability and accuracy for analyzing and predicting learning behavior instances.
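The four indexes can be computed directly from their definitions. This is a plain-Python sketch with hypothetical function names; the AUC counts ties as one half, a common convention that reduces to the indicator-based formula when no predicted values are tied, and it assumes both sample lists are non-empty.

```python
def auc(y_pos, y_neg):
    """Pairwise AUC: share of (positive, negative) pairs ranked correctly.

    Ties count as 1/2 (common convention). Assumes both lists are non-empty.
    """
    correct = 0.0
    for p in y_pos:
        for n in y_neg:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(y_pos) * len(y_neg))


def relative_improvement(auc_model, auc_other, alpha=0.5):
    """RI = ((AUC_model - alpha) / (AUC_other - alpha) - 1) * 100, in percent."""
    return ((auc_model - alpha) / (auc_other - alpha) - 1.0) * 100.0


def f1(precision, recall):
    """F1 = 2 * precision * recall / (precision + recall); 0 if both are zero."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def mtl_gain(m_mtl, m_single):
    """MTL-Gain = M_MTL - M_single for any chosen index M."""
    return m_mtl - m_single
```

Note that `relative_improvement(x, x)` is exactly 0 for any AUC x other than α, which is why the RI of DNNA against itself is 0.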

Six comparable methods were chosen: (1) Logistic regression (LR), one of the most commonly used prediction and recommendation methods; (2) Factorization machines (FM), a classical feature-crossing method; (3) DNNFM, which combines FM with deep-neural networks (DNNs), using the DNN to capture high-order cross information between features and the FM to capture low-order cross information; (4) DNNCross, which uses fully connected DNNs with residual connections to learn nonlinear feature-cross information; (5) AutoInt, which uses multi-head self-attention instead of expert knowledge to automatically select and cross valuable features; (6) AFN, which uses a logarithmic transformation layer to adjust the order of feature combinations by converting the power of each feature into a learnable parameter.

The whole experimental process is divided into three steps:

Step 1: Overall recommendation effect testing

DNNA and the six comparison methods were applied to the dataset, and each algorithm was run ten times to obtain the average area under the curve (AUC) and relative improvement (RI). The results are shown in Table 4. The AUC of DNNA is higher than that of the other methods, suggesting that its enhanced cross-feature analysis is effective. For DNNA itself, RI equals \(\left( \frac{AUC_{DNNA} - \alpha}{AUC_{DNNA} - \alpha} - 1 \right) \times 100\% = 0\). The RI of DNNA relative to each of the other methods is positive, indicating that DNNA achieves a performance gain over these algorithms and improves the reliability and accuracy of data analysis.

Step 2: Interpretable feature recommendation test

Following the overall recommendation results, further tests examined the interpretable influence of multi-dimensional features. The results in Table 5 suggest that interpretability can enhance prediction efficacy and yield favorable gains. Across all four indexes, DNNA outperforms the other methods in analyzing interpretable features and produces better recommendation results.

Step 3: Test based on similarity

To evaluate the effectiveness of similarity-based sampling, feature similarity is used to compute the similarity between samples. Four sampling methods suited to interactive learning processes are compared with the DNNA-based mechanism, and each partitions the samples into positive and negative sets:

(1) R-method: a random sampling method that does not consider similarity. Positive samples are selected at random, and the remaining samples are treated as negative samples.

(2) FR-method: a sample generation approach based on ranking feature similarity. The top N samples by feature similarity are taken as positive samples and the rest as negative samples; N = 10 was found to be optimal.

(3) L-method: a sample generation approach based on the similarity of learner descriptions. The top N samples are taken as positive samples and the rest as negative samples; N = 8 was found to be relatively optimal for this method.

(4) FS-method: a random sampling method based on the similarity between different features, with the similarity threshold determined experimentally. The best results are obtained with a threshold of 0.569 for distinguishing positive from negative samples.

(5) Interpretable feature feedback mechanism based on DNNA: similarity is computed and recommended adaptively, and on this basis the dataset is divided into positive and negative samples.
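The two partitioning rules used above, top-N ranking (as in the FR- and L-methods) and a fixed threshold (as in the FS-method), can be sketched as follows. The input is assumed to be a dict mapping each candidate item to a precomputed similarity score; the function names are hypothetical, and treating a score equal to the threshold as positive is an assumption.

```python
def split_top_n(scores, n):
    """Top-N split (sketch): the n highest-similarity items are positive, the rest negative."""
    ranked = sorted(scores, key=scores.get, reverse=True)  # item ids by descending similarity
    return set(ranked[:n]), set(ranked[n:])


def split_by_threshold(scores, threshold=0.569):
    """Threshold split (sketch): items with similarity >= threshold are positive."""
    positive = {item for item, sim in scores.items() if sim >= threshold}
    return positive, set(scores) - positive
```

The top-N rule fixes the number of positives regardless of how similar they are, whereas the threshold rule fixes the similarity level and lets the number of positives vary.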

AUC and RI are chosen as the evaluation indexes for both positive and negative samples. The experimental results show no significant difference among the five methods on the positive samples, with differences ranging from 0.0000023 to 0.0000032. However, the indexes calculated on the negative samples differ notably, as shown in Table 6. These results suggest that, with the sampling strategy of the interpretable feature feedback mechanism, DNNA may be more effective in improving accuracy, reliability, and sensitivity, and may therefore be better suited to this type of analysis.

These experiments indicate that DNNA (Deep Neural Network Architecture) has significant advantages in interpretable recommendation for interactive learning processes and is more reliable and sensitive than the other methods in terms of accuracy and completeness. The interpretable recommendation approach of DNNA is suitable for temporal tracking of interactive learning processes, which enables early warning recommendations.

Results

Based on the three experimental steps described above, DNNA was used to conduct a comprehensive analysis and prediction of the dataset, and an interpretable early warning recommendation mechanism was designed from the results of this analysis.

We focus on the temporal sequences and vector decomposition features of learning behavior, construct the knowledge graphs, and use DNNA to mine the relevant discriminant features and relationships. This requires transforming the original data types and relationship types into relationships between temporal sequences and features. By definition, the relationships fall into two categories: (1) Interpretation relationship: a one-way relationship between temporal sequences and features, pointing from a feature to a temporal sequence; (2) Juxtaposition relationship: a two-way relationship between the interpretable features of the same temporal sequence.
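The two relationship types can be turned into graph edges as in the sketch below. It assumes each temporal sequence id maps to the set of interpretable features observed in it; the relation labels "interprets" and "juxtaposedWith" and the function name are hypothetical placeholders, not names used by the authors.

```python
from itertools import combinations


def build_relationship_edges(seq_features):
    """Build directed edges for interpretation and juxtaposition relationships.

    seq_features: dict mapping a temporal sequence id (e.g. "week3") to the set
        of interpretable features observed in that sequence.
    Interpretation: one-way edge feature -> temporal sequence.
    Juxtaposition: two-way edges between features of the same temporal sequence.
    Returns a set of (source, relation, target) triples.
    """
    edges = set()
    for seq, features in seq_features.items():
        for feat in features:
            edges.add((feat, "interprets", seq))       # one-way: feature -> sequence
        for fa, fb in combinations(sorted(features), 2):
            edges.add((fa, "juxtaposedWith", fb))      # two-way: store both directions
            edges.add((fb, "juxtaposedWith", fa))
    return edges
```

Storing the juxtaposition relationship as two directed edges keeps both relationship types in one directed graph, consistent with the directed-edge definition of the knowledge graph.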

To enhance accuracy and reliability, the features and concepts are cleaned and preprocessed as follows: (1) Because the features are numerous, prediction and recommendation operate on feature categories rather than on individual features. Categories such as resource, questionnaire, upload, download, quiz, data sampling, experiment, interaction, and cooperation are assigned to the features as labels, and feature clustering is achieved through this preprocessing. (2) Because learning content concepts are numerous and vary considerably, they are grouped into six categories to reduce clutter and support correlation analysis and statistics: Related Content, Theoretical Basis, Difficulties of LC, Key Points of LC, Application Background, and Context of LC.
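The labeling step amounts to mapping raw logged actions onto feature classes and restricting concept tags to the six concept classes. A minimal sketch, assuming a simple lookup table: the raw action names (`view_slides`, `attempt_quiz`, and so on) are hypothetical and only the class names come from the text above.

```python
from collections import Counter

# Hypothetical mapping from raw log actions to the feature classes listed above;
# the raw action names on the left are invented for illustration.
FEATURE_CLASS = {
    "view_slides": "resource",
    "submit_survey": "questionnaire",
    "upload_file": "upload",
    "download_file": "download",
    "attempt_quiz": "quiz",
    "collect_sample": "data sampling",
    "run_lab": "experiment",
    "post_message": "interaction",
    "group_edit": "cooperation",
}

# The six concept classes used to group learning content concepts.
CONCEPT_CLASSES = (
    "Related Content", "Theoretical Basis", "Difficulties of LC",
    "Key Points of LC", "Application Background", "Context of LC",
)


def label_events(actions):
    """Count events per feature class; actions without a label fall into 'other'."""
    return Counter(FEATURE_CLASS.get(a, "other") for a in actions)


def concept_profile(tags):
    """Count concept tags per concept class, rejecting tags outside the six classes."""
    unknown = set(tags) - set(CONCEPT_CLASSES)
    if unknown:
        raise ValueError(f"unknown concept classes: {sorted(unknown)}")
    return Counter(tags)


log = ["view_slides", "view_slides", "attempt_quiz", "post_message", "misc_click"]
print(label_events(log))
print(concept_profile(["Key Points of LC", "Difficulties of LC", "Key Points of LC"]))
```

Keeping unlabeled actions under `other` rather than dropping them makes it visible how much of a log falls outside the chosen classes.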

After preprocessing, the features and concepts are used to capture the temporal sequences in the interactive learning process. A complete learning period lasts 20 weeks, and each week forms a temporal sequence, from which the knowledge graph of Learning Content-Concept Class-Feature Class-Temporal Sequence is built. As shown in Fig. 3, the dataset exhibits high clustering during DNNA training, with a success rate of 94.85%; only five of the 92 learning contents became outliers. Statistical analysis of the data for these five learning contents revealed several issues: (1) fewer learners engaged with these contents, so completion rates were lower; (2) the purpose and key points of the learning contents were unclear, and the application background and context were not fully explained; (3) the course passing rate was low, and the learning behavior was too discrete, with sparse data, reflecting wasted online learning resources and an underused interactive learning process. Without complete and continuous data, it is difficult to analyze these contents and identify early warning measures.
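Splitting a 20-week learning period into weekly temporal sequences is a simple bucketing step. The sketch below assumes events arrive as `(date, feature_class)` pairs and that week 1 starts on a known term start date; both the input format and the date used are hypothetical.

```python
from datetime import date


def week_index(day, term_start, n_weeks=20):
    """1-based week (temporal sequence) of `day` within the learning period, or None if outside it."""
    offset = (day - term_start).days
    if offset < 0:
        return None
    week = offset // 7 + 1
    return week if week <= n_weeks else None


def split_into_sequences(events, term_start, n_weeks=20):
    """Group (date, feature_class) events into one list per week; out-of-period events are dropped."""
    seqs = {w: [] for w in range(1, n_weeks + 1)}
    for day, feature in events:
        w = week_index(day, term_start, n_weeks)
        if w is not None:
            seqs[w].append(feature)
    return seqs


start = date(2023, 2, 20)  # hypothetical term start
events = [(date(2023, 2, 21), "resource"), (date(2023, 3, 1), "quiz"), (date(2023, 8, 1), "forum")]
seqs = split_into_sequences(events, start)
print(seqs[1], seqs[2])  # ['resource'] ['quiz']
```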

Based on Figs. 4, 5, and 6, the questions posed in section “Data processing and problem description” are tested, with the following conclusions.

Q1: conceptual correlation and classification of learning contents.

By considering conceptual correlation, the learning contents are grouped into three categories: Content Layer, Feature Layer, and Temporal Sequence. In the concept layer, all contents follow a relatively unified pattern: the six concept classes correlate strongly around Difficulties of LC and Key Points of LC, with correlations in the interval [0.63, 0.92]. The correlation between Difficulties of LC and Key Points of LC is bidirectional, while the other four concept classes are correlated indirectly.

Q2: according to the classification of different learning contents, the correlation of the learning behavior paths of learners who pass the assessment.

Figures 4, 5, and 6 show the feature layers, which are composed of the key interactive feature classes formed by learners who participated in the interactive learning process and passed the assessment. Different interactive feature classes form a relatively stable learning behavior path, although the path changes over a complete learning period. The critical path of knowledge graph I includes three parts: interaction → (resource → (search → download), wiki), (questionnaire, Q&A) → quiz, resource → (Q&A → interaction, forum), download); the critical path of knowledge graph II includes two parts: interaction → (forum → quiz, upload → download), Q&A → (resource → download), interaction, forum); the critical path of knowledge graph III is more complex and also includes two parts: interaction → (data sampling → experience, experience, cooperation) → (cooperation, Q&A), experience → (Q&A → quiz, quiz). These paths play important roles in specific temporal sequences. Feature classes are strongly correlated, with correlations between the key features in the interval [0.59, 0.89]. Overall, this approach appears to offer a way to understand learning behavior paths and to identify important features that may be used to improve the learning process.
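One way to compare a learner's behavior against a critical path is to break the path into linear branches and check whether each branch occurs, in order, within the learner's event sequence for a week. The branches below are one possible reading of the nested notation for knowledge graph I and are hypothetical; the ordered-subsequence test is a simplification, not the matching rule used by DNNA.

```python
def follows_path(events, path):
    """True if the steps of `path` appear in `events` in order; other events may occur in between."""
    remaining = iter(events)
    # `step in remaining` consumes the iterator up to the first match, which enforces ordering.
    return all(step in remaining for step in path)


def path_coverage(events, branches):
    """Fraction of linear branches of a critical path that the learner's event sequence follows."""
    return sum(follows_path(events, b) for b in branches) / len(branches)


# Hypothetical linear branches read off the critical path of knowledge graph I.
BRANCHES_I = [
    ["interaction", "resource", "search", "download"],
    ["questionnaire", "quiz"],
    ["Q&A", "quiz"],
]

week_events = ["interaction", "forum", "resource", "search", "questionnaire", "download", "quiz"]
print(path_coverage(week_events, BRANCHES_I))  # two of three branches followed
```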

Q3: according to the classification of different learning contents, the key early warning sequences of learners who fail to pass the assessment.

When learners fail the assessment, the cause appears to be ineffective learning behavior, especially in the key temporal sequence intervals, together with a failure to form effective learning methods. For early warning analysis, the critical path constructed by learners who pass the assessment in the key temporal sequence intervals is an important reference. Knowledge graphs I, II, and III each appear to form two key early warning sequence intervals: 3 → 7 and 15 → 19 in knowledge graph I; 17 → 15 and 17 → 20 in knowledge graph II; and 2 → 9 and 17 → 20 in knowledge graph III. The early warning sequences may differ across learning content classes. This information may help educators and instructors identify potential learning difficulties and address them in a timely manner.
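The key intervals can drive a simple early warning rule: within each interval, measure how often the learner's weekly behavior matched the reference critical path and flag intervals where that rate is low. In the sketch below, the intervals are those reported for knowledge graph I; the per-week hit flags and the 0.5 threshold are hypothetical.

```python
# Key early warning intervals reported above for knowledge graph I (weeks, inclusive).
KEY_INTERVALS_I = [(3, 7), (15, 19)]


def weak_intervals(weekly_path_hits, intervals, threshold=0.5):
    """Return the key intervals where the learner followed the reference path in < threshold of weeks.

    weekly_path_hits maps week -> bool (True if that week's behavior followed the critical path);
    missing weeks count as misses.
    """
    flagged = []
    for start, end in intervals:
        weeks = range(start, end + 1)
        hit_rate = sum(weekly_path_hits.get(w, False) for w in weeks) / len(weeks)
        if hit_rate < threshold:
            flagged.append((start, end))
    return flagged


# Hypothetical learner: active early in the term, drifting off the critical path later.
hits = {3: True, 4: True, 5: True, 6: False, 7: False,
        15: False, 16: False, 17: True, 18: False, 19: False}
print(weak_intervals(hits, KEY_INTERVALS_I))  # [(15, 19)]
```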

Q4: according to the classification of different learning contents, the significance of the knowledge graph of the learners who pass the assessment.

The knowledge graph is built on the classification of learning contents, concepts, and features, as well as on the key temporal sequence intervals. The significance of knowledge graphs I, II, and III was calculated separately, and the results are presented in Table 7. Test questions were formed for the different temporal sequence intervals, and the assessment results were treated as test variables for the significance of the “LC → Concept Classes” and “Concept Classes → Feature Classes” paths. The test results were obtained by fitting a structural equation model. As shown in Table 7, each knowledge graph is significant in its respective temporal sequence intervals.

Q5: according to the classification of different learning contents, the significance of the knowledge graph of the learners who fail to pass the assessment.

The knowledge graph for learners who fail the assessment is still constructed using the “Learning Content Class-Concept Class-Feature Class” framework, with test questions formed from these three classes. The data from these classes are treated as independent variables, and the assessment results serve as the observed variable. As in Q4, a corresponding structural equation model is used to test the effect of the relevant data. After training, the test results in Table 8 indicate that learners who fail the assessment show no significance in the corresponding temporal sequence intervals. Individual features appear to be significant for assessment results but not for feature classes, and the significance may even be reversed. These findings may help educators and instructors identify where learners are struggling and provide targeted support to improve their learning outcomes.

Q6: the interpretable early warning recommendation about the fusion of Q2 and Q4.

Q2 and Q4 examine, respectively, the significance of the learning behavior path and of the knowledge graph for learners who pass the assessment. Figures 4, 5, and 6 show the key roles of the concept classes related to the learning contents. These roles enable the classification of learning contents, which in turn determines the features and relationships of learning behavior. For these learners, clear significance can be seen between certain specific temporal sequences. To improve assessment pass rates, an interpretable early warning mechanism should be based on concept classes, strengthen learners’ initiative, draw on the temporal sequence intervals of significant learning behavior, and proactively adjust learning behavior in ways that support relevant intervention and guidance.

Q7: the interpretable early warning recommendation about the fusion of Q3 and Q5.

Q3 and Q5 investigate the significance of the key early warning sequences and of the knowledge graph for learners who fail the assessment. Compared with the behavior topology of learners who passed, the test results for learners who failed show no significant learning behaviors that promote success. To reduce the failure rate, interpretable early warning strategies should also be based on concept classes. Early warning is not a one-time event but a continuous process that requires long-term tracking. By regularly monitoring changes in learning behavior, potential risks can be identified and addressed.

Figure 7 illustrates the temporal sequences of Q6 and Q7 in relation to Figs. 4, 5, and 6, showing the key early warning temporal sequences associated with knowledge graphs I, II, and III, respectively. For learners who have passed the assessment, the relationship between the relevant temporal sequences in the interpretable early warning mechanism is “OR”: the system can intervene selectively at any of the relevant temporal sequences. In contrast, in the interpretable early warning tracking strategy for learners who have failed the assessment, the relationship is “AND”: the system must continuously track and intervene across all relevant temporal sequences.
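The “OR” and “AND” relationships can be read as two selection rules over the key temporal sequences. The sketch below is one such interpretation, not the system's actual logic: under “OR” only the sequences where a risk is flagged are acted on, while under “AND” every key sequence is tracked. The intervals are those reported for knowledge graph I; the flagged set is hypothetical.

```python
def sequences_to_act_on(key_sequences, flagged, mode):
    """Select the key temporal sequences that trigger tracking or intervention.

    mode "OR": selective intervention; each flagged key sequence is acted on independently.
    mode "AND": continuous tracking; every key sequence is tracked, flagged or not.
    """
    if mode == "OR":
        return [s for s in key_sequences if s in flagged]
    if mode == "AND":
        return list(key_sequences)
    raise ValueError("mode must be 'OR' or 'AND'")


key_I = [(3, 7), (15, 19)]  # key intervals reported for knowledge graph I
flagged = {(15, 19)}        # hypothetical: risk detected only in the later interval
print(sequences_to_act_on(key_I, flagged, "OR"))   # [(15, 19)]
print(sequences_to_act_on(key_I, flagged, "AND"))  # [(3, 7), (15, 19)]
```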

Discussion

The interpretable early warning recommendation mechanism in interactive learning processes integrates relevant factors such as learning contents, concepts, features, attributes, and learners. The mechanism uses deep-neural network models for data training and prediction to obtain the test conclusions of interpretable early warning recommendations and to identify potential paths and trends. The classification of learning contents, concepts, and features helps identify key early warning recommendations and tracking intervals (Xia, 2021c).

A. Findings

One of the crucial problems for learners completing learning tasks in an interactive learning environment is the absence of direct supervision from instructors (Xia & Wang, 2022). Most learning time is spent on self-directed discovery and problem solving, and improving efficiency requires giving learners feasible explanations and feedback (Ansyari, 2020; Er et al., 2021). This calls for stronger interpretable descriptions of the relevant courses, concepts, and materials during the learning process, including guidance and strategy recommendations for key issues and learning content. Learners want to obtain the necessary content and have their learning problems answered or explained promptly, with effective early warning and timely intervention (Ansyari, 2020). Reliable interpretable early warning recommendation mechanisms are therefore integral to the interactive learning process: they motivate learner interaction and collaboration, increase satisfaction with recommended content, and guide and support suitable learning behaviors. Nonetheless, research on interpretable early warning recommendations for interactive learning processes is limited (Valle et al., 2021).

Although section “Related work” discusses relevant interpretable research, research that integrates interpretable self-organizing mechanisms into learning contents remains largely unexplored. Some studies focus on small-scale local learning cases, methods, and tools, while others remain at the level of applying traditional methods and do not design new methods for learning behavior instances in large-scale datasets. The method proposed in this study aims to fill this gap, and the experimental analysis supports its feasibility.

Through experimental analysis in section “Experiment” and results in section “Results”, DNNA has the following advantages:

(1) DNNA effectively mines relevant entities, features, and relationships during interactive learning and provides interpretable recommendations through key features. Comparisons with other approximate methods indicate DNNA’s efficiency and reliability for interpretable early warning recommendations in interactive learning processes.

(2) We constructed an interpretable knowledge graph relating learning behavior temporal sequences to key features and implemented a deep-neural network model for early warning based on this graph. Integrating the temporal sequences of the learning process into the data analysis helps identify accurate temporal sequence intervals and provides decision feedback for early warning and recommendation based on entity-content-concept interpretability.

(3) To improve learners’ probability of passing the assessment, we derived early warning and intervention temporal sequence intervals for those who did not pass, based on DNNA analysis and prediction results, and tested the significance of the knowledge graph of learners who passed the assessment.

Overall, this study offers implementable strategies for interpretable warning recommendations, tracking, and intervention of interactive learning processes.

B. Suggestions

Based on the methodological research and experimental design, this study identifies three key aspects of interpretable early warning recommendations:

(1) The concept classes of learning content are a key component in achieving interpretable early warning recommendations.

According to Muller and Mildenberger (2021), it is impractical to analyze the correlation between every concept in each learning content and the learning behavior instances. Nor is it feasible to interpret and describe every concept in every learning content on current learning platforms, which lack the technologies needed for precise semantic management of learning content concepts. Furthermore, concepts differ substantially across learning contents, making it difficult to analyze individual concepts or their relationships directly, and concepts are also influenced by other factors. Interpretability of the interactive learning process based on concept classes is therefore more valuable. Classifying common concepts and clustering related ones facilitates interpretability tracking, while analyzing associations between concept classes supports meaningful testing. The concept analysis shows that integrating “key points of LC” and “difficulties of LC” improves decision analysis and drives the classification and path distribution of learning behavior features.

Therefore, the interactive learning process should provide clear and comprehensive management of “key points of LC” and “difficulties of LC,” including effective descriptions of course context, application background, theoretical basis, and other relevant learning content, as well as direct search mechanisms, resource navigation, concept recommendations, and other services to enhance learners’ cognition and attention.

(2) Learning behavior feature classes are the essential components of interpretable early warning recommendations.

In a complete interactive learning process, learning behavior is influenced by both the learning content and its concept classes. Learning assessment drives the continuity, volatility, and uncertainty of learning behavior on learning platforms (Hew et al., 2021). Learning behavior features are diverse and discrete and often produce many outliers, but their effectiveness cannot be judged solely by whether the statistical results are normal or abnormal. Because each learning behavior feature is treated as a computing element, its significance cannot be captured by a single result. However, many learning behavior features serve similar functions, including resource and material provision, real-time communication, interaction and collaboration, testing and investigation, and search. These functions can serve as classification labels that divide the massive set of features into classes, facilitating the analysis of feature correlations and association paths.

Therefore, the interactive learning process must establish relevant learning behavior feature classes, classify and label features according to their purposes or requirements, provide learners with timely, effective, and comprehensive feature descriptions, and help them build personalized learning behavior independently. Combined with historical data, the underlying patterns of learning behavior can then be analyzed to provide appropriate intervention and guidance at key temporal sequences or intervals.

(3) Effective temporal sequences (intervals) are essential for establishing interpretable early warning recommendations.

The interactive learning process, whether online or offline, spans a relatively complete learning period made up of long, continuous temporal sequences rather than short-term activity. This requires deploying a knowledge system and organizing the corresponding learning behavior. Typically, the learning period is divided into temporal sequences that correspond to specific learning tasks and behaviors. These temporal sequences serve as important nodes for tracking changes in learning behavior, reflecting differences both in feature classes and in the relationships among them. Such changes are noticeable throughout the learning period and are directly related to the learning contents and concept classes. In addition, learning contents with similar concept classes tend to form more regular feature relationships. Temporal sequences matter not only for building effective and productive learning behavior but also for detecting and promptly intervening in ineffective learning behavior.

Therefore, the interactive learning process should match multi-factor analysis, including learning contents, concept classes, and learning behavior features across different temporal sequences. This will help determine key temporal sequences or intervals restricted by the classification of learning contents and interpretable concepts, providing an effective reference for subsequent learning contents with similar concept classes and knowledge graphs. Through this approach, effective learning behavior can be recommended as soon as possible during appropriate temporal sequences, or feasible intervention measures can be constructed during suitable temporal intervals.

Future studies and implications

This study aims to improve the feasibility and reliability of the interactive learning process using massive learning behavior instances. To achieve this, an interpretable early warning recommendation mechanism is designed. This mechanism is crucial for deep decision mining and adaptive intervention in interactive learning environments.

First, we formulate the relevant questions, establish the corresponding relationships, and define concepts, entities, and knowledge graphs. Second, we design a deep-neural network model based on the learning behavior knowledge graph for decision-making and prediction. To evaluate the model’s effectiveness and feasibility, we trained, tested, and verified it on the dataset and calculated the evaluation indexes alongside comparable approximation methods; the results show that the model is suitable for interpretable data analysis of learning behavior. Third, we present the test questions addressed by the interpretable early warning recommendation mechanism and discuss the underlying patterns and decisions. Through new method design and interpretable early warning recommendations, the study completes the data analysis and problem testing, providing a useful reference for similar topics.

However, because learning behavior is shaped by group dynamics as well as individual personality traits, and because the data keep growing dynamically, it is unrealistic to fully evaluate learners’ knowledge systems and interests. Analyzing such complex data presents additional challenges and constraints. Consequently, the interactive learning process requires flexible analysis and recommendation capabilities to support the needs, interactions, and cooperation of learners effectively, identify interpretable and describable topics and concepts, and establish a flexible temporal tracking mechanism.

Future work will explore feasible analysis schemes, construct an interpretable temporal tracking process to address feature differences, design relevant models and algorithms, verify their feasibility and reliability, deduce potential data values, further refine the learning behavior knowledge graph, and create more in-depth logical designs and topology verifications for concept classes of learning content. These efforts aim to enhance early warning accuracy and feedback reliability.

Data availability

The data sets used or analyzed during the current study are available from the corresponding author on reasonable request.

References

Aguilar SJ, Karabenick SA, Teasley SD, Baek C (2021) Associations between learning analytics dashboard exposure and motivation and self-regulated learning. Comp Educ 162:104085. https://doi.org/10.1016/j.compedu.2020.104085

Ansyari MF (2020) Tracking the process of data use professional development interventions for instructional improvement: a systematic literature review. Educ Res Rev 31(1):100362. https://doi.org/10.1016/j.edurev.2020.100362

Cerezo R, Bogarín A, Esteban M, Romero C (2020) Process mining for self-regulated learning assessment in e-learning. J Comp High Educ 32(1):74–88. https://doi.org/10.1007/s12528-019-09225-y

Dolatsara HA, Kibis E, Caglar M, Simsek S, Dag A, Dolatsara GA, Delen D (2022) An interpretable decision-support systems for daily cryptocurrency trading. Exp Syst Appl 203(1):117409. https://doi.org/10.1016/j.eswa.2022.117409

Eberle J, Hobrecht J (2021) The lonely struggle with autonomy: a case study of first-year university students’ experiences during emergency online teaching. Comp Hum Behav 121(3):106804. https://doi.org/10.1016/j.chb.2021.106804

El Zini J, Awad M (2022) On the Explainability of Natural Language Processing Deep Models. ACM Comp Surv 55(5):1–37. https://doi.org/10.1145/3529755

Er E, Dimitriadis Y, Gašević D (2021) Collaborative peer feedback and learning analytics: theory-oriented design for supporting class-wide interventions. Assess Eval High Educ 46(2):169–190. https://doi.org/10.1080/02602938.2020.1764490

Hew KF, Bai S, Dawson P, Lo CK (2021) Meta-analyses of flipped classroom studies: a review of methodology. Educ Res Rev 33(1):100393. https://doi.org/10.1016/j.edurev.2021.100393

Huang HL, Hwang GJ, Chen PY (2021) An integrated concept mapping and image recognition approach to improving students’ scientific inquiry course performance. Br J Educ Technol 00(1):1–22. https://doi.org/10.1111/bjet.13177

Jose A, Shetty SD (2022) Interpretable click-through rate prediction through distillation of the neural additive factorization model. Inf Sci 617(1):91–102. https://doi.org/10.1016/j.ins.2022.10.091

Jovanović J, Saqr M, Joksimović S, Gašević D (2021) Students matter the most in learning analytics: the effects of internal and instructional conditions in predicting academic success. Comp Educ 172(1):104251. https://doi.org/10.1016/j.compedu.2021.104251

Jya B, Jcabc D (2021) Tree-based interpretable machine learning of the thermodynamic phases. Phys Lett A 412(7):1–5. https://doi.org/10.1016/j.physleta.2021.127589

Li HY, Majumdar R, Chen MRA, Ogata H (2021) Goal-oriented active learning (goal) system to promote reading engagement, self-directed learning behavior, and motivation in extensive reading. Comp Educ 171(2):104239. https://doi.org/10.1016/j.compedu.2021.104239

Mihaljević B, Bielza C, Larrañaga P (2021) Bayesian networks for interpretable machine learning and optimization. Neurocomputing 456(6):648–665. https://doi.org/10.1016/j.neucom.2021.01.138

Muller C, Mildenberger T (2021) Facilitating flexible learning by replacing classroom time with an online learning environment: a systematic review of blended learning in higher education. Educ Res Rev 34(1):100394. https://doi.org/10.1016/j.edurev.2021.100394

Nadaf A, Elins S, Xin M (2021) Interpretable-machine-learning evidence for importance and optimum of learning time. Int J Inf Educ Technol 11(10):444–449. https://doi.org/10.18178/ijiet.2021.11.10.1548

Novello P, Poette G, Lugato D, Congedo PM (2023) Goal-oriented sensitivity analysis of hyperparameters in deep learning. J Sci Comp 94(1):45. https://doi.org/10.1007/s10915-022-02083-4

Oppermann E, Vinni-Laakso J, Juuti K, Loukomies A, Salmela-Aro K (2021) Elementary school students’ motivational profiles across finnish language, mathematics and science: longitudinal trajectories, gender differences and stem aspirations. Contemp Educ Psychol 64(1):101927. https://doi.org/10.1016/j.cedpsych.2020.101927

Pachamanova D, Tilson V, Dwyer-Matzky K (2021) Machine learning, ethics, and change management: a data-driven approach to improving hospital observation unit operations. INFORMS Trans Educ 5(17):1–7. https://doi.org/10.1287/ited.2021.0251ca

Sailer M, Stadler M, Schultz-Pernice F, Franke U, Fischer F (2021) Technology-related teaching skills and attitudes: validation of a scenario-based self-assessment instrument for teachers. Comp Hum Behav 115:106625. https://doi.org/10.1016/j.chb.2020.106625

Silvola A, Näykki P, Kaveri A, Muukkonen H (2021) Expectations for supporting student engagement with learning analytics: an academic path perspective. Comp Educ 168(12):104192. https://doi.org/10.1016/j.compedu.2021.104192

Song D, Hong H, Oh EY (2021) Applying computational analysis of novice learners’ computer programming patterns to reveal self-regulated learning, computational thinking, and learning performance. Comp Hum Behav 120(6):106746. https://doi.org/10.1016/j.chb.2021.106746

Tian J, Koh JHL, Ren C, Wang Y (2021) Understanding higher education students’ developing perceptions of geocapabilities through the creation of story maps with geographical information systems. Br J Educ Technol 00(1):1–19. https://doi.org/10.1111/bjet.13176

Valle N, Antonenko P, Valle D, Dawson K, Baiser B (2021) The influence of task-value scaffolding in a predictive learning analytics dashboard on learners’ statistics anxiety, motivation, and performance. Comp Educ 173(1):104288. https://doi.org/10.1016/j.compedu.2021.104288

Xia X, Qi W (2022) Early warning mechanism of interactive learning process based on temporal memory enhancement model. Educ Inf Technol 2022(7):1–22. https://doi.org/10.1007/s10639-022-11206-1

Xia X (2020a) Learning behavior mining and decision recommendation based on association rules in interactive learning environment. Interact Learn Environ 2020(8):1–16. https://doi.org/10.1080/10494820.2020.1799028

Xia X (2020b) Random field design and collaborative inference strategy for learning interaction activities. Interact Learn Environ 2020(12):1–25. https://doi.org/10.1080/10494820.2020.1863236

Xia X (2021a) Interaction recognition and intervention based on context feature fusion of learning behaviors in interactive learning environments. Interact Learn Environ 2021(1):1–19. https://doi.org/10.1080/10494820.2021.1871632

Xia X (2021b) Sparse learning strategy and key feature selection in interactive learning environment. Interact Learn Environ 2021(11):1–25. https://doi.org/10.1080/10494820.2021.1998913

Xia X (2021c) Decision application mechanism of regression analysis of multi-category learning behaviors in interactive learning environment. Interact Learn Environ 2021(4):1–14. https://doi.org/10.1080/10494820.2021.1916767

Xia X, Qi W (2023) Learning behavior interest propagation strategy of MOOCs based on multi entity knowledge graph. Educ Inf Technol 2023(3):1–29. https://doi.org/10.1007/s10639-023-11719-3

Xia X, Wang T (2022) Multi objective evaluation between learning behavior and learning achievement. Asia-Pac Educ Res 2022(12):1–15. https://doi.org/10.1007/s40299-022-00703-z

Acknowledgements

This research was supported by the National Office for Philosophy and Social Sciences (Grant NO. BEA190107) and the Social Science Planning Project of Shandong Province (Grant NO. 22CJYJ20). The laboratory of the School of Software at Tsinghua University (China) provided the technical support, whereas the University of Agder (Norway) and Qufu Normal University (China) offered theoretical guidance and practical references.


Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xia, X., Qi, W. Interpretable early warning recommendations in interactive learning environments: a deep-neural network approach based on learning behavior knowledge graph. Humanit Soc Sci Commun 10, 258 (2023). https://doi.org/10.1057/s41599-023-01739-2

