Abstract

Scientific breakthroughs for complex, large-scale problems require a combination of contributory expertize, disciplinary expertize, and interactional expertize, or socialized knowledge. There is, however, little formal recognition of what expertize is important for team success, and how to evaluate different types of contributions. This is problematic for the field of the Science of Team Science (SciTS). Funding is increasing for team science globally, but how do we know if teams are collaborating in meaningful ways to meet their goals? Many studies use bibliometric and citation data to understand team development and success; nevertheless, this type of data does not provide timely metrics about collaboration. This study asks: Can we determine if a team is collaborating and working together in meaningful ways in a process evaluation to achieve their goals and be successful in an outcome evaluation, and if so, how? This exploratory, longitudinal, mixed-methods, case-based study reports on eight interdisciplinary scientific teams that were studied from 2015 to 2017. The study used six different methods of data collection: a social network analysis at three time points, participant observation, interviews, focus groups, turn-taking data during team meetings, and outcome metrics (publications, award dollars, etc.). After collecting and analyzing the data, a Kendall rank correlation was used to examine which development and process metrics correlated with traditional outcome metrics: publications, proposals submitted, and awards received. Five major implications, practical applications, and outputs arise from this case-based study: (1) Practicing even turn-taking is essential to team success. (2) The proportion of women on the team impacts the outcomes of the team. (3) This study provides further evidence that successful team science is not about picking the right people, but about building the right team for success. (4) This article presents process metrics to increase understanding of successful and unsuccessful teams. (5) Teams need to engage in practices that build relationships for knowledge integration. This case-based study represents an early step to more effectively communicate how teams form and produce successful outcomes and increase their capacity for knowledge integration. The results contribute to the knowledge bank of integration and implementation by providing additional evidence about evaluation for scientific teams, including the know-how related to everyday interactions that lead to goal attainment. This study provides further evidence that to create new knowledge, scientific teams need both contributory and interactional expertize.

Introduction

Scientific breakthroughs for complex, large-scale problems require a more systemic approach than cross-disciplinary scientific teams merely exchanging information and collaborating across different disciplines (Read et al., 2016). They require different types of expertize. Bammer et al. (2020) defined two types of expertize needed to solve complex global challenges: contributory and interactional expertize. Contributory expertize is the “expertize required to make a substantive contribution to a field” (Collins and Evans, 2013; Collins and Evans, 2007). Interactional expertize is socialized knowledge about groups that is codified through “learning-by-doing,” and augmented from project to project (Bammer et al., 2020). Today’s most pressing environmental, societal, and health problems, however, cannot be solved with contributory expertize alone. To solve complex global problems, teams need to have both contributory and interactional expertize. This aligns with a growing body of literature that frames knowledge creation as a social process (Zhang et al., 2009; Brown and Duguid, 2000; Cravens et al., 2022; Csikszentmihalyi, 1999; Hakkarainen, 2009; Love et al., 2021; Paavola and Hakkarainen, 2005; Sawyer, 2003, 2017; Wheatley and Frieze, 2006; Zhang et al., 2011). There is, however, little formal recognition of what expertize is important for the team’s success, and how to evaluate different types of contributions to the team’s success. To date, most SciTS research has relied heavily on bibliometric data to assess team formation, team structures, and outcomes (Duch et al., 2012; Guimerà et al., 2005; Leone Sciabolazza et al., 2017; Wuchty et al., 2007; Zeng et al., 2016). A recent review of literature on SciTS, published between 2006 and 2016, found 109 articles that met the criteria for inclusion as specific studies of scientific teams (Hall et al., 2018). 
They reported that 75% of these articles used pre-existing data (e.g., archival data), 62% used bibliometrics, over 40% used surveys, and over 10% used interview and observational data (Hall et al., 2018). Notably, the majority of these studies used only one evaluation method, rather than a mixed-methods approach to examine the processes of team formation and team interaction. This 2018 review concluded by stating there is “a critical need for more sophisticated designs, including those that are multivariate, examine multiple causal factors, and take longitudinal, experimental, or data-intensive approaches (e.g., within-team time series analyses or computationally driven modeling)” (Hall et al., 2018, p. 542). It is essential to adopt more sophisticated methods of evaluation to understand the phasic and developmental features of scientific teams (Hall et al., 2012) because bibliometric and citation data do not provide a timely measure of team success.

To date, few studies provide methodological or practical guidance on how to assess the capacity for knowledge integration, or offer pragmatic and feasible methods to study knowledge integration (Hitziger et al., 2019). There is a lack of understanding across many disciplines, including One Health (Hitziger et al., 2018), sustainable agriculture (Ingram, 2018), ecosystem services (Dam Lam et al., 2019), sustainability science (Lang et al., 2012), and SciTS, about what makes some teams successful while others fail to launch. To obtain a more comprehensive understanding of the connections, networks, and outcomes of knowledge, more studies need to use social network analysis to characterize how patterns of interaction impact the development and processes of scientific teams.

Existing studies do not provide pertinent data to know if teams are collaborating in meaningful ways to meet their goals. This is problematic for the field of the Science of Team Science (SciTS). The National Science Foundation (NSF), National Institutes of Health (NIH), and other major research funders have recognized the necessity for support of scientific research teams; yet there is limited evidence about how scientific teams build their infrastructure, how to use the evidence from SciTS in impactful ways, and how funding organizations can measure the impact of their investment (Börner et al., 2010; Hall et al., 2018; Love et al., 2021; Oliver and Boaz, 2019; Stokols et al., 2008).

SciTS scholars have published frameworks to understand more about what processes contribute to a team’s success, but few published studies have ultimately used those frameworks. Wooten et al. (2014) outlined three types of evaluations to understand the complexity of scientific teams over time: outcome, developmental, and process. An outcome evaluation is a measure of goal achievement (Wooten et al., 2014). A developmental evaluation aims to answer questions such as: Are specific roles being fulfilled? Are tasks being completed? It focuses on the continuous process of team development (Patton, 2011). A process evaluation is an iterative and recursive practice that centers on measuring program effectiveness (Saunders et al., 2005; Wooten et al., 2015). Similarly, Börner et al. (2010) proposed a multi-level mixed-methods approach to study complexities, gain perspective, and create best practices for scientific teams. Studying a scientific team’s development, process, and outcomes at multiple levels presents many challenges, and few published studies use multiple methods, are multivariate, examine causal factors, or use data-intensive approaches to understand how teams change over time.

This exploratory case-based study thus seeks to explore various evaluation methods that provide a more comprehensive view of how scientific teams are collaborating. This study asks: Can we determine if a team is collaborating and working together in meaningful ways in a process evaluation to achieve their goals and be successful in an outcome evaluation, and if so, how? We explored the literature for process metrics that might increase our understanding of how scientific teams develop, interact, and perform.

Methods

Case-study selection

In 2015, a major research university initiated a program to invest in and support interdisciplinary research teams. This program provided teams with significant financial and programmatic support to catalyze interdisciplinary teaming and to increase proposal submissions and competitiveness for high-risk, high-reward extramural funding opportunities. Early in the program, the university determined that, in addition to supporting the teams financially and administratively, it was also essential to provide these teams with skill development in effective team development and interaction. The extant literature, however, provides few studies of team development or intergroup interactions and none that have established metrics that align with the theoretical framework of successful and unsuccessful science team development (Hall et al., 2018). Therefore, this research university and its program became the case study.

Case-study description

The teams were self-formed interdisciplinary scientific teams. Each team submitted a written application to the program, which was reviewed by both faculty and staff internal to the university. A select group of applicants then advanced to compete in a “pitch fest” (a very short oral presentation of the proposed project, with an intensive question and answer session) to vie for selection into the program. Seven teams from a range of university colleges, academic disciplines, and topics were selected to participate. With this investment, teams were expected to contribute to the following high-level program goals, and within the outcome evaluation for the program, team success has been primarily measured by a team’s ability to achieve these overarching goals:

1. Increase university interest in multi-dimensional, systems-based problems
2. Leverage the strengths and expertize of a range of disciplines and fields
3. Shift funding landscape towards investing in team science/collaborative endeavors
4. Develop large-scale proposals; high caliber research and scholarly outputs; new, productive, and impactful collaborations

These overarching goals were measured by having the teams report on a variety of outcome metrics, including publications, proposals submitted, and awards received. An additional team, which was not part of the program but volunteered to participate in the study, was also evaluated. This team was a multidisciplinary team that had already received a large grant from a federal agency. These eight teams were randomly assigned a number from 1 to 8 and are referred to by their assigned number for anonymity.

There were 135 team members in the sample, which included 17 graduate or undergraduate students. Each team was organized around a distinct “grand challenge” type topic that brought together individual researchers from across multiple disciplines. These topics were wide-ranging, spanning air quality, urban eco-districts, polymers, sensors, microgrid electricity, sustainable agriculture, and genomics.

Social network surveys

A social network survey was administered both to understand scientific collaborations and to identify what social relationships were forming (see Supplemental Table 2 for the complete survey). Annually, the teams self-reported a team roster listing the team members, self-identified gender, academic department, and email address. A social network survey was sent to every member of each team’s roster. Participants were surveyed using this tool at the beginning of the program, halfway through the program (mid-point), and at the conclusion of the program. The response rate for the three periods of data collection is presented in Supplemental Fig. 1. The lowest response rate for a team was 39% and the highest was 93%. Following IRB protocol #19-8622H, participation was voluntary; all subjects were identified by name on the social network survey to allow for construction of complete social networks; following data recording, names were removed (Borgatti et al., 2014).

The survey had two sections with multiple questions. The first set of questions was developed primarily to collect information about scientific collaborations within the teams. It asked if team members collaborated on joint publications, presentations, or conference proceedings; composed or submitted a grant proposal together; conducted university business, consulting, or technical support together; and/or served jointly on a student’s committee (or, for students, if a team member was a member of their thesis/dissertation committee). These questions were analyzed separately and were also combined to create a measure called ‘collaboration’ for the purposes of statistical analysis. The second set of questions focused on social relationships within the team, including mentor relationships; advice relationships (personal/professional); whom respondents would want to hang out with for fun; and whom they would consider a personal friend. Data from this set of survey questions were likewise analyzed both separately and in combination, and were used to construct multiple social networks (e.g., mentor, advice, friend, and fun networks).

The relational networks were analyzed using UCInet (Borgatti et al., 2014) and RStudio (RStudio Team, 2015), wherein nodes are the researchers and an edge exists from researcher A to researcher B if A perceived a relation with B. For example, in the mentorship network, a link from A to B signified that A considered B to be a mentor. These relations were summarized using nodal average degree and nodal betweenness. The average degree of a node is the average of its in-degree (how many links enter) and out-degree (how many links exit) (Giuffre, 2013). The average degree of a network is the average number of edges per node across all nodes in the network. The network average degree was selected because it can be used to compare networks of different sizes. We calculated the betweenness score for each member of the team for five social network diagrams: mentor, advice, friendship, fun, and collaboration (as noted above, the “collaboration” diagram combines grant writing, publications, new research/consulting, and participation on student committees). Betweenness centrality is a measure of node centrality that captures a person’s role in allowing information to travel from one side of the network to another (Golbeck, 2015). A person with a high betweenness centrality, or betweenness score, acts as a bridge to other nodes in the network. Given this, we hypothesize that betweenness scores help us understand how social support travels and is shared on teams involving multiple scientific disciplines.
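The network summaries described above can be illustrated with a short sketch. This is a minimal example in plain Python, not the UCInet or RStudio workflow used in the study. The function names, the small example roster, the handling of duplicate edges and self-loops, and the choice to normalize betweenness by (n − 1)(n − 2) are all assumptions made for illustration.

```python
from collections import deque


def _clean(edges):
    """Drop duplicate edges and self-loops (an assumption of this sketch)."""
    return {(a, b) for a, b in edges if a != b}


def average_degree(nodes, edges):
    """Network average degree: mean over nodes of (in-degree + out-degree) / 2."""
    in_deg = {n: 0 for n in nodes}
    out_deg = {n: 0 for n in nodes}
    for src, dst in _clean(edges):
        out_deg[src] += 1
        in_deg[dst] += 1
    return sum((in_deg[n] + out_deg[n]) / 2 for n in nodes) / len(nodes)


def isolates(nodes, edges):
    """Nodes with no incoming and no outgoing links."""
    connected = {n for edge in _clean(edges) for n in edge}
    return [n for n in nodes if n not in connected]


def betweenness(nodes, edges):
    """Directed betweenness centrality (Brandes' algorithm), normalized to 0..1."""
    adj = {n: [] for n in nodes}
    for src, dst in _clean(edges):
        adj[src].append(dst)
    score = {n: 0.0 for n in nodes}
    for s in nodes:
        # Breadth-first search from s, counting shortest paths (sigma).
        sigma = {n: 0 for n in nodes}
        dist = {n: -1 for n in nodes}
        preds = {n: [] for n in nodes}
        sigma[s], dist[s] = 1, 0
        order, queue = [], deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies from the farthest nodes back toward s.
        delta = {n: 0.0 for n in nodes}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    n = len(nodes)
    scale = (n - 1) * (n - 2)
    return {k: (v / scale if scale > 0 else 0.0) for k, v in score.items()}


# Hypothetical mentor network: an edge (A, B) means "A considers B a mentor".
team = ["A", "B", "C", "D"]
mentor_edges = [("A", "B"), ("B", "C")]
print(average_degree(team, mentor_edges))
print(isolates(team, mentor_edges))
print(betweenness(team, mentor_edges))
```

In this toy roster, B sits on the only path from A to C and so carries all of the betweenness, while D appears as an isolate. A dedicated network library would be a more practical choice for real survey data.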

Turn-taking data

An evaluator attended one to two meetings per year for each team, to observe and collect turn-taking data. In the meetings, the evaluator recorded information on who spoke, for how long, and what types of knowledge were transferred during the conversation. After each meeting, the evaluator recorded and calculated the number of turns-taken every 10 min and the median number of speaking turns for each attending participant. The percent above/below the median that each person on the team spoke was also calculated to investigate the variability in turns across participants. Finally, the spread above/below the median was calculated.
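The turn-taking measures described above can be sketched as follows. This is an illustrative reading of the description, not the evaluator's actual procedure: expressing each person's turns as a percentage of the median, and defining the spread as the highest percentage minus the lowest, are assumptions, as are the function name and the example counts.

```python
from statistics import median


def turn_taking_summary(turns_by_person, meeting_minutes):
    """Summarize speaking turns for one observed meeting.

    turns_by_person: dict mapping participant -> number of speaking turns.
    meeting_minutes: length of the observation in minutes.
    """
    if meeting_minutes <= 0:
        raise ValueError("meeting_minutes must be positive")
    total_turns = sum(turns_by_person.values())
    turns_per_10_min = total_turns / meeting_minutes * 10

    med = median(turns_by_person.values())
    if med == 0:
        raise ValueError("median number of turns is zero; percentages are undefined")

    # Percent above (+) or below (-) the median for each participant.
    pct_vs_median = {
        person: (turns - med) / med * 100
        for person, turns in turns_by_person.items()
    }
    # Spread between the most and least active speakers, in percentage points.
    spread = max(pct_vs_median.values()) - min(pct_vs_median.values())

    return {
        "turns_per_10_min": turns_per_10_min,
        "median_turns": med,
        "pct_vs_median": pct_vs_median,
        "spread": spread,
    }


# Hypothetical counts from a 30-minute observation.
summary = turn_taking_summary({"A": 10, "B": 5, "C": 15}, meeting_minutes=30)
print(summary)
```

Under these assumptions, a large spread signals that one participant took far more turns than the least active speaker, which is the pattern the field notes associate with a dominant turn-taker.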

Participant observation and field notes

Two to four meetings of each team were attended to gather turn-taking data and to make additional observations about the team. There were two exceptions to this: Team 1 did not have face-to-face team meetings, precluding participant observation; Team 5 did not consent to evaluator observation at their meetings. After the meetings, field notes were recorded to provide qualitative insights about the progress of the team development and their patterns of collaboration.

Outcome data

The seven program teams self-reported typical scientific outcome metrics quarterly to the university, and the eighth team reported its metrics to NSF. These metrics included total proposal dollars submitted, total award dollars received, and total publications. Additional outcome metrics include the average degree of the final publication and grant networks. Recognizing that team development takes time and occurs in stages, we excluded metrics reported from the first year to allow teams time to become established.

Statistical analysis

We use Kendall’s rank correlation to quantify the association between and among the process and outcome metrics. Kendall’s rank correlation assesses the degree to which there is a monotonic relationship between variables (e.g., do larger values of turn-taking correspond to larger numbers of publications?) but is invariant to the specific form of the relationship (e.g., linear, quadratic). Permutation-based p-values are calculated and used to assess the statistical significance of the estimated correlations. We describe p-values less than 0.10 as “marginally significant” and p-values less than 0.05 as “significant.”
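To make the procedure concrete, the sketch below computes Kendall's rank correlation and a two-sided permutation p-value in plain Python. It is an illustration under stated assumptions, not the analysis code used in the study: the tau-b variant (which adjusts for ties), the exhaustive enumeration of permutations, the two-sided test, the function names, and the example values are all assumptions.

```python
import math
from itertools import permutations


def kendall_tau_b(x, y):
    """Kendall's tau-b: (concordant - discordant) pairs, adjusted for ties."""
    concordant = discordant = tied_x_only = tied_y_only = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied on both variables: contributes to neither term
            if dx == 0:
                tied_x_only += 1
            elif dy == 0:
                tied_y_only += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    untied = concordant + discordant
    denom = math.sqrt((untied + tied_x_only) * (untied + tied_y_only))
    return (concordant - discordant) / denom if denom else float("nan")


def permutation_p_value(x, y):
    """Two-sided exact permutation p-value for tau-b.

    Enumerates every ordering of y, so it is only practical for small
    samples (eight teams give 8! = 40,320 orderings).
    """
    observed = kendall_tau_b(x, y)
    if math.isnan(observed):
        return observed, float("nan")
    extreme = total = 0
    for shuffled in permutations(y):
        total += 1
        # Small tolerance guards against floating-point noise in the comparison.
        if abs(kendall_tau_b(x, shuffled)) >= abs(observed) - 1e-12:
            extreme += 1
    return observed, extreme / total


if __name__ == "__main__":
    # Hypothetical values for eight teams (not data from the study).
    turns_per_10_min = [12, 30, 25, 9, 18, 27, 7, 33]
    award_dollars = [1.0, 4.2, 3.1, 0.5, 2.0, 2.8, 0.9, 5.0]
    tau, p = permutation_p_value(turns_per_10_min, award_dollars)
    print(f"tau = {tau:.2f}, p = {p:.4f}")
```

Because the test only reorders the observed values, it makes no distributional assumptions, which suits a sample of eight teams. For larger samples, drawing random permutations would replace the exhaustive enumeration.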

Process, development, and outcome data

This article uses a combination of process and development data, as well as outcome data, to understand which process measures correlated with positive outcome measures. A complete table of metric descriptions can be found in Supplemental Table 3. These data provide insights into the development and processes of teams. Table 1 lists current SciTS literature measures and the measures used in this study to extend those literature measures. Also listed are team development and process data and outcome metrics that align with the literature measures. Ultimately, this extension and alignment with literature measures allows us to provide additional insight into the context of previous research.

The outcome metrics were established by the university and focused heavily on traditional metrics of scholarly performance and productivity. Outcome data were recorded for quarters five to nine because we recognize that team development takes time. Moreover, outcome measures of scholarly performance were unlikely to be directly resultant from the program itself, but rather representative of efforts by the team and team members that were already underway prior to participation in this program. Therefore, we excluded outcome metrics reported from the first year (four quarters), in recognition that teams need time to become established and included outcome data after funding ended, as outcomes often extend well beyond a funding period.

Results

Table 2 reports on the team process and development metrics that are significantly associated with the outcome metrics. In Table 2, the outcome metrics include average degree of the publication network, total publications, total award dollars received, and total proposal dollars submitted. The following subsections further discuss metrics focusing on those that were statistically significant.

Role of women on teams

In the data set, each team had team members who self-identified as women, and many of the teams had women as Principal Investigators (PIs) and/or women on the leadership team (Table 3). In the rank correlation (Table 2), the proportion of women on each team had a negative correlation with one outcome metric: final grant network average degree (τ = −0.52, p < 0.10). As this finding did not entirely align with previously published literature, these data were further investigated.

Field notes revealed that during the quarterly updates to the university, Teams 1, 4, and 7 never had a woman presenter. Further investigation of the field notes found that women had a range of roles on teams, from PI or member of a leadership/executive group to simply being present on the team roster. Having a woman as PI or as a member of the leadership team was positively correlated with total proposal dollars submitted (τ = 0.86, p < 0.01).

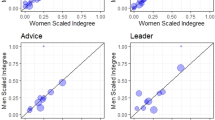

Based on these data and observations, we calculated the betweenness score of the women in the mid-point social network data. We found that the top woman betweenness score in the mentor network was positively correlated with the publication network (τ = 0.60, p < 0.05), total proposal dollars submitted (τ = 0.52, p < 0.10), and total award dollars received (τ = 0.69, p < 0.05). The top woman betweenness score in the collaboration network was correlated with total proposal dollars submitted (τ = 0.62, p < 0.05). The advice networks were not correlated with any outcome metrics (Supplemental Table 1). Figure 1 illustrates differences in betweenness scores for individuals in the mentor network.

Figure 1 reports the betweenness score for each individual on a team in the mentoring network. Notably, high (i.e., ≥~0.2) and low (i.e., <0.05) betweenness scores appeared in both small and large teams. Women did not play central roles on Teams 1 and 7. Teams 2, 4, 5, and 8 had women with very high betweenness scores, indicating these women played a central role in the mentoring network. In some instances, the woman with the highest score was the PI, and in some instances, she was just a member of the team.

Mid-point social network measures

Knowledge creation has traditionally been framed in terms of individual creativity, but recent literature has placed more emphasis on social dynamics. First, on a team with a high average degree in the fun or friend network, members hang out with more team members for fun and/or consider more team members friends (Supplemental Fig. 2). The average degree of the fun network was correlated with the publications network average degree (τ = 0.60, p < 0.1). The average degree of the friend network was correlated not only with publications network average degree (τ = 0.63, p < 0.1), but also with total proposal dollars submitted (τ = 0.60, p < 0.05) and total award dollars received (τ = 0.78, p < 0.01). The friend and fun networks were also highly correlated with each other (τ = 0.9, p < 0.001). In addition, the average degree of the advice network was correlated with total award dollars received (τ = 0.55, p < 0.05), and having isolates in the advice network was negatively correlated with the average degree of the publication network (τ = −0.69, p < 0.10).

Second, the average degree of the network ‘serving on a student committee’ was correlated with multiple outcome metrics (Supplemental Fig. 3). The rank correlation (Table 2) found a correlation between the student committee network and total publications (τ = 0.64, p < 0.05), total proposal dollars submitted (τ = 0.62, p < 0.05), and total award dollars received (τ = 0.69, p < 0.01). In addition, the collaboration network in 2016 was correlated with the average degree of the publication network in 2017 (τ = 0.87, p < 0.05). Many of the process variables used to measure scientific collaboration (grants average degree, publication average degree, collaboration network, expertize, contribute) were not significantly correlated with the outcome measures or were significantly correlated with only one metric (Supplemental Table 1).

Turn-taking

Based on field notes, a team with a high number of turns in 10 min typically had multiple members sharing ideas and no dominant turn-takers. In the rank correlation (Table 2), turns-taken in 10 min was positively correlated with total award dollars received (τ = 0.80, p < 0.05) and total proposal dollars submitted (τ = 0.80, p < 0.05). Figure 2 illustrates two turn-taking measures: (1) number of turns-taken in 10-min intervals and (2) number of turns-taken over the observation time.

To measure uneven turn-taking for the rank correlation (Table 2), we calculated the spread between the person on the team who had the highest number of turns above the median and the person who had the lowest number below the median. Field notes revealed that uneven turn-taking occurred when one person was monopolizing the time and number of turns. We found a negative correlation between this measure of uneven turn-taking and total proposal dollars submitted (τ = −0.74, p < 0.05).

Figure 3 illustrates in more detail the total time a person spoke during the meeting. Team 7 has the most extreme outlier. This person did not take many turns in 10 min, but they took a lot of time when they did speak, monopolizing over 50% of the total meeting time. Team 4 had two team members who took a lot of time, accounting for nearly two-thirds of the available meeting time on a team with nine members. Teams 3, 6, and 8 had relatively even distributions of turns, with Team 8 having the most even distribution among all individual team members.

Finally, Bear and Woolley (2011) wrote that women on teams often mediate even turn-taking. We found a correlation of −0.9 between the proportion of women on teams and turns above the median (p ≤ 0.001), indicating that teams with low proportions of women also tended to have a dominant speaker. This is consistent with findings by Bear and Woolley (2011) and Woolley et al. (2010).

Discussion

Scientific teams are complex systems; thus, conducting a team evaluation with only one method and a handful of measures is not likely to provide adequate insight into why a team succeeds or fails. Can we determine if a team is collaborating and working together in meaningful ways in a process evaluation to achieve their goals and be successful in an outcome evaluation, and if so, how? Although many studies have recommended conducting longitudinal, mixed-methods studies with social network analysis, few have conducted this type of assessment. This study aimed to help fill a methodological gap in SciTS literature by longitudinally studying eight scientific teams. In this study, by using a mixed-methods approach, we found process metrics and measures that were significant in the development, process, and outcome of teams as well as those that appear not significant. The addition of qualitative data such as field notes and interviews provided additional information not contained in the quantitative data. Moreover, the mixed-methods methodology allowed for comparison of the data across different time points of data collection to assist in future research and theory development.

Proportion of women

Researchers from many disciplines have found that gender-balanced teams lead to the best outcomes for group process in terms of men and women having equal influence (Bear and Woolley, 2011; Keyton et al., 2008; Smith-Doerr et al., 2017; Woolley et al., 2010). Fewer studies offer explanations for why gender balance (or the proportion of women) plays an important role on interdisciplinary teams. In this study, the proportion of women on teams was not the key factor in team outcomes. We extended our exploration of gender and teams through participant observation, social network, and turn-taking data to further clarify these observations. We found that women played a significant role in the mentoring networks for teams and that the proportion of women was correlated with turn-taking in team meetings. We also found that having a woman in a PI or leadership position was positively correlated with the outcome metric of team total proposal dollars submitted. The question of how or why gender balance on teams affects team performance remains a complex issue, and additional work on this question must continue to address the myriad ways that team members interact.

Mid-point social network measures

Our findings build on a growing body of literature that suggests knowledge integration is a social process. Considering knowledge integration as a social product, it is not surprising that the average degree in the friend, fun, and advice networks was statistically significant. In addition, the friend and fun networks were highly correlated. Writing grants and publications is a long, arduous task. When conflict arises or challenges occur, strong social relationships keep the team together. This also explains why data on several scientific collaboration measures including collaborating on grants and publications appear to not be statistically significant or only significant with one outcome metric.

We were surprised that the measure ‘student committees’ was correlated with so many outcome metrics. More research is needed to understand why serving together on student committees is important. We present three hypotheses. First, this measure is perhaps a proxy for the strength of ties, where faculty who collaborate more frequently tend to sit on committees of student members of their teams. However, of the 135 team members in the sample, only 17 were graduate or undergraduate students. A second possible explanation is that faculty are fulfilling the role of the outside committee member on graduate student committees, providing a perhaps otherwise non-existent link between faculty members. Although the formal role of the outside committee member is to ensure there is no bias in the student evaluation process, often the outside committee member is selected for their relevant (albeit extra-disciplinary) expertize. Moreover, many outside committee members are selected by the student or suggested by a third party (e.g., another graduate student), rather than by the advisor. In other words, the graduate student may be the connector between faculty members. As all graduate committees have an outside committee member, future research should investigate the role graduate students play in knowledge transfer across the university. A third possible explanation is that when team members have served on a student committee together, it is more likely they have had additional opportunities to discuss terminology, create a shared language, and build trust. Participating in student committees thus creates additional opportunities for faculty to get to know each other’s perspectives and collaboratively explore scientific questions, strengthening trust and shared understandings.

Turn-taking

This study and numerous others have consistently documented the importance of even turn-taking on scientific and business teams (Bear and Woolley, 2011; Lehmann-Willenbrock et al., 2013; Ravn, 2017; Rawls and David, 2005; Schegloff, 2002; Stivers et al., 2009; Woolley et al., 2010). In our study, even turn-taking was positively correlated with total publications, total proposal dollars submitted, and total award dollars received. Uneven turn-taking was negatively correlated with the total proposal dollars submitted.

The mixed-methods study design also highlighted the role of women in turn-taking. Similar to previous studies, we found the presence of women on scientific teams was correlated with more even turn-taking (Bear and Woolley, 2011; Woolley et al., 2010). We further found that teams with a member who monopolized time and turns were negatively correlated with outcome metrics and also had fewer women. The mixed-methods design provided additional information about teams with uneven turn-taking from participant observation data and field notes. Less-even turn-taking on teams was attributable to one or two men monopolizing time and turns. In our study, a woman never monopolized time or turns in a meeting attended by an observer. Why do teams with more women have more even turn-taking and better outcome metrics? It is well accepted in the scientific literature that diversity of thought increases creativity, and influences knowledge integration (Amabile, 1988; Cravens et al., 2022; Csikszentmihalyi, 1999; Hitziger et al., 2018; Pearsall et al., 2008; Phelps et al., 2012; Sawyer, 2003, 2017; Smith-Doerr et al., 2017). When everyone has a voice on a team, it could signify an openness to diversity and inclusion in team composition, discipline, and more. Because of the reasons outlined above, we believe that even turn-taking is one of the most important measures to creating effective collaborations with the capacity to truly build new knowledge through scientific teams.

Insignificant measures and analysis

In evidence and policy studies, the first step to understanding effective teams is establishing and sharing effective (and less effective) methods to study teams (Oliver and Boaz, 2019). To support future research and improved methods in the SciTS field, we also report other process measures that were not significant in our study (Supplemental Table 1). First, we hypothesized that the survey question “I understand how their expertize will contribute to the research team” [asked about other team members] would be statistically significant. We also asked a question about how well the survey respondent understood the expertize of each team member (e.g., “I could not describe their area of expertize at all,” “I could vaguely describe this person’s expertize,” “I can explain the general field of this person’s expertize,” “I can explain this person’s unique expertize in their field,” and “I understand this person’s expertize very well because it overlaps with some of my expertize.”) Neither question was statistically significant. Our data revealed that social relationships matter more than expertize or understanding of the expertize of others. In other words, building a personal connection with a team member may be more important than having deep-level knowledge of that individual’s field or discipline. It also suggests there may be more nuances not captured by this relatively simple question around how individual team members interpret the goals and mission of their team, and how they perceive other members may fit into that individualized picture of the team.

Many of the mid-point social network questions did not appear to be statistically significant. From the mid-point social network data on interpersonal relationships, we calculated the average degree of the following mid-point social network measures: advice, mentoring, grant, and publications. Further, we hypothesized that the number of isolates in the mentor and advice networks would be statistically significant because everyone on a team should be either giving or receiving mentoring/advice. Finally, the combined metric called the collaboration network was only correlated with the 2017 publication network, which further emphasized that the interpersonal metrics were more influential than the scientific collaboration metrics. It was surprising that the metrics about scientific collaborations on scientific teams were not significant in this study, and we recognize that this might not be true for all teams (Thompson, 2009).
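As a rough illustration of the two network measures named above, the sketch below computes average degree and isolates for a directed advice or mentoring network. It assumes ties are stored as (source, target) pairs and that average degree means the number of distinct ties divided by the number of team members; the software used in the study may define these quantities differently, and the roster and ties are hypothetical.

```python
def average_degree(members, ties):
    """Average out-degree (equal to average in-degree) of a directed network."""
    # Ignore self-ties and duplicate reports of the same tie.
    unique_ties = {(a, b) for a, b in ties if a != b}
    return len(unique_ties) / len(members)


def isolates(members, ties):
    """Members who neither give nor receive any tie in this network."""
    connected = {person for a, b in ties if a != b for person in (a, b)}
    return [m for m in members if m not in connected]


# Hypothetical team roster and mentoring ties (illustration only).
members = ["A", "B", "C", "D", "E"]
mentoring = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "A")]

print(average_degree(members, mentoring))
print(isolates(members, mentoring))
```

Under this reading, an isolate is someone who is neither giving nor receiving mentoring or advice, which is the condition the hypothesis above concerns.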

Regarding turn-taking, there were many statistical measures that did not adequately capture field notes and participant observations from the meetings. For example, average number of turns per person, percent of turns above and below the median for each person on the team, and statistical measures related to the average turn-taking (e.g., z-scores) were easy to read and interpret but did not appear to represent turn-taking during the meeting. We believe this is a result of the nature of interdisciplinary scientific teams, wherein meetings sometimes focus on the science or technical challenges of specific projects and sometimes they focus on budgets or other operational concerns of the team. These conversations do not always involve the same groups of people and can easily skew an average because they may just naturally end up being one-sided (e.g., when a business manager reports on the current status of a team’s budget expenditures and revenues).
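To make these summary statistics concrete, the following sketch computes turns per person, each person's share of turns, and z-scores for a single meeting. It is one possible reading of the measures described above, not the study's procedure; the function name `turn_summary` and the two speaker logs are hypothetical. The second log mimics a one-sided budget report to show how such a meeting can skew the numbers.

```python
from collections import Counter
from statistics import mean, median, pstdev


def turn_summary(speaker_sequence, attendees):
    """Summarize one meeting's turns for every attendee, including silent ones."""
    counts = Counter(speaker_sequence)
    turns = {person: counts.get(person, 0) for person in attendees}
    values = list(turns.values())
    total, avg, spread = sum(values), mean(values), pstdev(values)
    return {
        "turns": turns,
        "median": median(values),
        # Share of all turns taken by each person (0.0 if nobody spoke).
        "share": {p: (t / total if total else 0.0) for p, t in turns.items()},
        # z-score of each person's turn count relative to the meeting average.
        "z": {p: ((t - avg) / spread if spread else 0.0) for p, t in turns.items()},
    }


# Hypothetical speaker logs, one entry per turn (not data from the study).
attendees = ["A", "B", "C", "D"]
science_meeting = ["A", "B", "A", "C", "A", "B"]
budget_report = ["D", "D", "D", "D", "A", "D"]

for label, log in [("science", science_meeting), ("budget", budget_report)]:
    summary = turn_summary(log, attendees)
    print(label, summary["turns"], {p: round(z, 2) for p, z in summary["z"].items()})
```

Pooling both logs into one average would hide the fact that the imbalance in the second meeting comes from its agenda rather than from a habit of monopolizing turns.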

Limitations

The current work reports on the results from an exploratory study on real-world academic scientific teams. Thus, the data presented herein have some notable limitations. First, because these were real-world scientific teams, each team had different concerns about participating in SciTS research. For example, Team 5 was initially reluctant to participate in our research study, and consequently, we have a more limited data set for this team. It is also possible that teams behaved differently because they were part of a research study. Participant observation requires a team scientist to be in the room at meetings, retreats, during conflicts, and more. All of these instances were detailed in field notes so that the positionality and possible influence of the team scientist were well documented (Baxter and Jack, 2008; Greenwood, 1993; Marvasti, 2004).

Second, a researcher was not present at every team meeting for every team. Thus, the turn-taking data may not be representative of all the team interactions. Moreover, given that many of the team meetings that were observed had a very mixed agenda (i.e., both scientific results and business/operations were discussed), deciphering the evenness of the turn-taking becomes problematic because a business meeting might involve fewer graduate students, or a scientific meeting might focus on one troublesome aspect of the science. Third, the sample size is limited to only eight teams and should be expanded in future research. Fourth, a limitation of all social network data is that it captures one time point (the time of the survey). For example, teams were not routinely asked whether they were having fun, so this measure taken solely from the survey results may not be an accurate representation of the amount of “fun” any team might experience. Finally, the survey did not give respondents the option to report gender in non-binary terms. However, all of our respondents reported binary gender identifiers (men and women). Future research should seek more diverse samples and provide additional options for gender identifiers.

Future directions

Future research should focus on four key areas. First, future studies should engage mixed-methods methodologies to explore additional metrics and measures. Second, numerous studies have consistently documented the importance of turn-taking. Future research should further explore what constitutes even turn-taking and why it is important. Ravn (2017) described four different types of meetings: the managerial style, which relies on somewhat authoritarian management; the parliamentary style, which has rules and formalities; the collective–egalitarian style of community-type meetings where anyone can speak anytime about anything; and the facilitative style, wherein a trained facilitator guides the meeting conversation to increase even turn-taking and participation. We highlight these differences because turn-taking might look different in different types of meetings, as indicated in our discussion of the limitations of the study. In terms of scientific teams, turn-taking in a meeting about science outcomes (e.g., presentations of recent results by team members) may be very different from turn-taking in a meeting about business administration/operations for the team. We do not believe that even turn-taking on a scientific team means that everyone participates equally in every meeting. Meetings often focus on one aspect of the research project, and some are more focused on administrative details. These different roles should shift and adjust turn-taking in a well-structured team. More data are needed to develop measures that account for more nuances in team interactions and fully explore the impact and effects of these two measures for team science success.

Third, this exploratory study revealed measures that are important for team development, processes, and outcomes, but we are certain there are more. Questions we would like to test in the future include: who did you learn from?, who do you consider a leader on the team?, who do you trust?, questions about inclusivity (e.g., did you feel listened to? and did team members respect your diverse ideas?), and specific questions about expertize. Fourth, numerous bodies of literature have reported that the “proportion of women” is important on scientific teams. We tested many measures to try to understand the role of women on the scientific teams studied here. However, only three measures were statistically significant. More investigation is needed to understand the significance of how women shape team interactions and thus team performance. Future research should investigate non-binary gender roles, intersectionality, and other forms of diversity on scientific teams and their roles in knowledge integration.

Finally, a key limitation of the study is the length of time we followed teams. Teams were followed for 2.25 years. Many important outcome metrics take years to fully materialize. For example, the number of citations would increase understanding about the impact of the research; whether or not a team stays together after the funding ends could indicate a measure of cohesion; and developing an appropriate timeline for the number of years before team ‘outcomes’ are declared should be considered. Thus, future research studies that follow teams for even more extended periods of time are needed.

Application to scientific teams

SciTS represents a complex system that requires attention to both standard outcome metrics and more nuanced interpersonal interactions to develop robust measures of team success and promote the creation of truly effective teams. Although there is no silver bullet for creating the perfect team that meets its goals, there are five major implications, practical applications, and outputs from this case-based study of successful and unsuccessful teams: (1) Practicing even turn-taking is essential to team success. (2) The proportion of women on the team positively impacted the outcomes of the team. (3) Further evidence that successful team science is not about picking the right people, but about how to build the right team for success. (4) This article presents process metrics to increase understanding of successful and unsuccessful teams. (5) Teams need to engage in practices that build relationships for knowledge integration.

To date, few studies provide methodological or practical guidance on how to assess the capacity for knowledge integration, or provide pragmatic and feasible methods and metrics to study knowledge integration (Hitziger et al., 2019; Love et al., 2021). These findings about successful and unsuccessful teams could be applied and investigated further in areas such as One Health (Hitziger et al., 2018), sustainable agriculture (Ingram, 2018), ecosystem services (Dam Lam et al., 2019), and sustainability science (Lang et al., 2012). To provide a more comprehensive understanding of the connections, networks, and outcomes of knowledge, more studies need to engage social network analysis to understand the patterns of interaction.

This case-based study provides additional evidence for the knowledge bank on how both contributory and interactional expertize contribute to scientific innovation. It advances claims about how teams form and produce successful outcomes. The mixed-methods evaluation builds on a growing body of literature in SciTS studies showing that team science is not just about the science, but also about building relationships, further demonstrating the need for both contributory and interactional expertize. These processes are not, however, always recognized and rewarded in tenure and promotion decisions, by funding agencies, and by others. How do you reward even turn-taking, and how do you support equal gender proportions on teams? These and other challenges will need to be addressed. Otherwise, our scientific teams lose potential brainpower when women are excluded, and likely more than half their brainpower when all ideas are not included in the process (even turn-taking).

In conclusion, based on our exploratory case-based study, one simple thing a team can do to improve collaboration is to practice even turn-taking. Furthermore, the next time the question, “How do we pick the right people for the team?” arises, scientists should additionally be asking, “How can we build the right relationships for a successful team?”

Data availability

The data for the article may be accessed here: https://hdl.handle.net/10217/194364.

References

Amabile T (1988) A model of creativity and innovation in organizations. Res Organ Behav 10(1):123–167

Bammer G, O’Rourke M, O’Connell D, Neuhauser L, Midgley G, Klein JT, Grigg NJ, Gadlin H, Elsum IR, Bursztyn M, Fulton EA, Pohl C, Smithson M, Vilsmaier U, Bergmann M, Jaeger J, Merkx F, Vienni Baptista B, Burgman MA, … Richardson GP (2020) Expertise in research integration and implementation for tackling complex problems: when is it needed, where can it be found and how can it be strengthened? Palgrave Commun 6(1). https://doi.org/10.1057/s41599-019-0380-0

Baxter P, Jack S (2008) Qualitative case study methodology: study design and implementation for novice researchers. Qual Rep 13(4):544–559. https://nsuworks.nova.edu/tqr/vol13/iss4/2

Bear JB, Woolley AW (2011) The role of gender in team collaboration and performance. Interdiscip Sci Rev 36(2):146–153. https://doi.org/10.1179/030801811X13013181961473

Borgatti SP, Everett MG, Freeman LC (2014) UCINET. In: Encyclopedia of social network analysis and mining. Springer, New York. pp. 2261–2267

Börner K, Contractor N, Falk-Krzesinski HJ, Fiore SM, Hall KL, Keyton J, Spring B, Stokols D, Trochim W, Uzzi B (2010) A multi-level systems perspective for the science of team science. Sci Transl Med 2(49). https://doi.org/10.1126/scitranslmed.3001399

Bouty I (2000) Interpersonal and interaction influences on informal resource exchanges between R&D researchers across organizational boundaries. Acad Manag J 43(1):50–65. https://doi.org/10.5465/1556385

Brown JS, Duguid P (2000) The social life of information. Harvard Business School Press

Collins H, Evans R (2013) Rethinking expertise. University of Chicago Press. https://doi.org/10.7208/chicago/9780226113623.001.0001

Collins H, Evans R (2007) Rethinking expertise. University of Chicago Press

Cravens AE, Jones MS, Ngai C, Zarestky J, Love HB (2022) Science facilitation: navigating the intersection of intellectual and interpersonal expertise in scientific collaboration. Humanit Soc Sci Commun 9(1):1–13

Csikszentmihalyi M (1999) Implications of a systems perspective for the study of creativity. In: Sternberg RJ (ed.) Handbook of creativity. Cambridge University Press. pp. 313–335

Dam Lam R, Gasparatos A, Chakraborty S, Rivera H, Stanley T (2019) Multiple values and knowledge integration in indigenous coastal and marine social-ecological systems research: a systematic review. Ecosyst Serv 37:100910

Duch J, Zeng XHT, Sales-Pardo M, Radicchi F, Otis S, Woodruff TK, Nunes Amaral LA (2012) The possible role of resource requirements and academic career-choice risk on gender differences in publication rate and impact. PLoS ONE 7(12):e51332. https://doi.org/10.1371/journal.pone.0051332

Giuffre K (2013) Communities and networks: using social network analysis to rethink urban and community studies (1st edn.). Polity Press

Golbeck J (2015) Betweenness centrality. In: Introduction to social media investigation: a hands-on approach. Elsevier Inc. https://www.sciencedirect.com/topics/computer-science/betweenness-centrality

Greenwood RE (1993) The case study approach. Bus Commun Q 56(4):46–48. https://doi.org/10.1177/108056999305600409

Guimerà R, Uzzi B, Spiro J, Nunes Amaral LA (2005) Sociology: team assembly mechanisms determine collaboration network structure and team performance. Science 308(5722):697–702. https://doi.org/10.1126/science.1106340

Hakkarainen K (2009) A knowledge-practice perspective on technology-mediated learning. Int J Comput-Support Collab Learn 4(2):213–231. https://doi.org/10.1007/s11412-009-9064-x

Hall KL, Vogel AL, Huang GC, Serrano KJ, Rice EL, Tsakraklides SP, Fiore SM (2018) The science of team science: a review of the empirical evidence and research gaps on collaboration in science. Am Psychol 73(4):532–548. https://doi.org/10.1037/amp0000319

Hall KL, Vogel AL, Stipelman BA, Stokols D, Morgan G, Gehlert S (2012) A four-phase model of transdisciplinary team-based research: goals, team processes, and strategies. Transl Behav Med 2(4):415–430. https://doi.org/10.1007/s13142-012-0167-y

Hitziger M, Aragrande M, Berezowski JA, Canali M, Del Rio Vilas V, Hoffmann S, Igrejas G, Keune H, Lux A, Bruce M, Palenberg MA, Pohl C, Radeski M, Richter I, Abad CR, Salerno RH, Savic S, Schirmer J, Vogler BR, Rüegg SR (2019) EVOlvINC: evaluating knowledge integration capacity in multistakeholder governance. Ecol Soc 24(2):18. https://doi.org/10.5751/ES-10935-240236

Hitziger M, Esposito R, Canali M, Aragrande M, Häsler B, Rüegg SR (2018) Knowledge integration in one health policy formulation, implementation and evaluation. Bull World Health Organ 96(3):211–218. https://doi.org/10.2471/BLT.17.202705

Ingram J (2018) Agricultural transition: niche and regime knowledge systems’ boundary dynamics. Environ Innov Societ Transit 26:117–135. https://doi.org/10.1016/j.eist.2017.05.001

Keyton J, Ford DJ, Smith FL (2008) A mesolevel communicative model of collaboration. Commun Theory 18(3):376–406. https://doi.org/10.1111/j.1468-2885.2008.00327.x

Klein JT, Falk-Krzesinski HJ (2017) Interdisciplinary and collaborative work: framing promotion and tenure practices and policies. Res Policy 46(6):1055–1061. https://doi.org/10.1016/j.respol.2017.03.001

Lang DJ, Wiek A, Bergmann M, Stauffacher M, Martens P, Moll P, Swilling M, Thomas CJ (2012) Transdisciplinary research in sustainability science: practice, principles, and challenges. Sustain Sci 7(SUPPL. 1):25–43. https://doi.org/10.1007/s11625-011-0149-x

Lehmann-Willenbrock N, Allen JA, Kauffeld S (2013) A sequential analysis of procedural meeting communication: how teams facilitate their meetings. J Appl Commun Res 41(4):365–388. https://doi.org/10.1080/00909882.2013.844847

Leone Sciabolazza V, Vacca R, Kennelly Okraku T, McCarty C (2017) Detecting and analyzing research communities in longitudinal scientific networks. PLoS ONE 12(8):e0182516. https://doi.org/10.1371/journal.pone.0182516

Levin DZ, Cross R (2004) The strength of weak ties you can trust: the mediating role of trust in effective knowledge transfer. Manag Sci 50(11):1477–1490. https://doi.org/10.5465/apbpp.2002.7517527

Love HB, Cross JE, Fosdick B, Crooks KR, VandeWoude S, Fisher ER (2021) Interpersonal relationships drive successful team science: an exemplary case-based study. Humanit Soc Sci Commun 8(1):1–10. https://doi.org/10.1057/s41599-021-00789-8

Marsden PV, Campbell KE (1984) Measuring tie strength. Soc Forces 63(2):482–501. https://doi.org/10.1093/sf/63.2.482

Marvasti AB (2004) Qualitative research in sociology: an introduction. SAGE Publications

Misra J, Smith-Doerr L, Dasgupta N, Weaver G, Normanly J (2017) Collaboration and gender equity among academic scientists. Soc Sci 6(1):25. https://doi.org/10.3390/socsci6010025

Oliver K, Boaz A (2019) Transforming evidence for policy and practice: creating space for new conversations. Palgrave Commun 5(1):1–10

Paavola S, Hakkarainen K (2005) The knowledge creation metaphor—an emergent epistemological approach to learning. Sci Educ 14(6):535–557. https://doi.org/10.1007/s11191-004-5157-0

Patton MQ (2011) Developmental evaluation: applying complexity concepts to enhance innovation and use. Guilford Press

Pearsall MJ, Ellis APJ, Evans JM (2008) Unlocking the effects of gender faultlines on team creativity: is activation the key? J Appl Psychol 93(1):225–234. https://doi.org/10.1037/0021-9010.93.1.225

Phelps C, Heidl R, Wadhwa A (2012) Knowledge, networks, and knowledge networks: a review and research agenda. J Manag 38(4):1115–1166. https://doi.org/10.1177/0149206311432640

Ravn I (2017) Charting the social order of meetings. Pure.Au.Dk, 1–9. https://pure.au.dk/ws/files/118956333/Ib_Ravn_Charting_the_Social_Order_of_Meetings.docx

Rawls AW, David G (2005) Accountably other: trust, reciprocity and exclusion in a context of situated practice. Hum Stud 28(4):469–497. https://doi.org/10.1007/s10746-005-9005-2

Read EK, O’Rourke M, Hong GS, Hanson PC, Winslow LA, Crowley S, Brewer CA, Weathers KC (2016) Building the team for team science. Ecosphere 7(3):e01291. https://doi.org/10.1002/ecs2.1291

RStudio Team (2015) RStudio. RStudio: Integrated Development for R. RStudio, Inc. http://www.rstudio.com/

Saunders RP, Evans MH, Joshi P (2005) Developing a process-evaluation plan for assessing health promotion program implementation: a how-to guide. Health Promot Pract 6(2):134–147. https://doi.org/10.1177/1524839904273387

Sawyer RK (2003) Emergence in creativity and development. In: Sawyer RK, John-Steiner V, Moran S, Sternberg RJ, Feldman DH, Nakamura J, Csikszentmihalyi M (eds.) Creativity and development. Oxford University Press. pp. 12–60

Sawyer RK (2017) Group genius: the creative power of collaboration. Basic Books

Schegloff EA (2002) Overlapping talk and the organization of turn-taking for conversation. Lang Soc 29(01). https://doi.org/10.1017/s0047404500001019

Smith-Doerr L, Alegria S, Sacco T (2017) How diversity matters in the US science and engineering workforce: a critical review considering integration in teams, fields, and organizational contexts. Engag Sci Technol Soc 3(0):139. https://doi.org/10.17351/ests2017.142

Stivers T, Enfield NJ, Brown P, Englert C, Hayashi M, Heinemann T, Hoymann G, Rossano F, de Ruiter JP, Yoon K-E, Levinson SC (2009) Universals and cultural variation in turn-taking in conversation. Proc Natl Acad Sci USA 106(26):10587–10592. https://doi.org/10.1073/pnas.0903616106

Stokols D, Misra S, Moser RP, Hall KL, Taylor BK (2008) The ecology of team science. Understanding contextual influences on transdisciplinary collaboration. Am J Prevent Med 35(2):96–115. https://doi.org/10.1016/j.amepre.2008.05.003

Thompson J (2009) Building collective communication competence in interdisciplinary research teams. J Appl Commun Res 37(3):278–297. https://doi.org/10.1080/00909880903025911

Uzzi B, Lancaster R (2003) Relational embeddedness and learning: the case of bank loan managers and their clients. Manag Sci 49(4):383–399. https://doi.org/10.1287/mnsc.49.4.383.14427

Wheatley M, Frieze D (2006) Using emergence to take social innovation to scale. The Berkana Institute 9. https://secure.abcee.org/sites/abcee.org/files/cms/wp-content/uploads/2011/03/using-emergence.pdf

Woolley AW, Chabris CF, Pentland A, Hashmi N, Malone TW (2010) Evidence for a collective intelligence factor in the performance of human groups. Science 330(6004):686–688. https://doi.org/10.1126/science.1193147

Wooten KC, Calhoun WJ, Bhavnani S, Rose RM, Ameredes B, Brasier AR (2015) Evolution of multidisciplinary translational teams (MTTs): insights for accelerating translational innovations. Clin Transl Sci 8(5):542–552. https://doi.org/10.1111/cts.12266

Wooten KC, Rose RM, Ostir GV, Calhoun WJ, Ameredes BT, Brasier AR (2014) Assessing and evaluating multidisciplinary translational teams: a mixed methods approach. Eval Health Prof 37(1):33–49. https://doi.org/10.1177/0163278713504433

Wuchty S, Jones BF, Uzzi B (2007) The increasing dominance of teams in production of knowledge. Science 316(5827):1036–1039. https://doi.org/10.1126/science.1136099

Zeng XHT, Duch J, Sales-Pardo M, Moreira JAG, Radicchi F, Ribeiro HV, Woodruff TK, Amaral LAN (2016) Differences in collaboration patterns across discipline, career stage, and gender. PLoS Biol 14(11):e1002573. https://doi.org/10.1371/journal.pbio.1002573

Zhang J, Hong HY, Scardamalia M, Teo CL, Morley EA (2011) Sustaining knowledge building as a principle-based innovation at an elementary school. J Learn Sci 20(2):262–307. https://doi.org/10.1080/10508406.2011.528317

Zhang J, Scardamalia M, Reeve R, Messina R (2009) Designs for collective cognitive responsibility in knowledge-building communities. J Learn Sci 18(1):7–44. http://www.tandfonline.com/doi/abs/10.1080/10508400802581676

Acknowledgements

The research reported in this publication was supported by Colorado State University’s Office of the Vice President for Research through the Catalyst for Innovative Partnerships Program. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Office of the Vice President for Research. Supported by Office of Vice President for Research, Colorado State University and NIH/NCATS Colorado CTSA Grant Number UL1 TR002535. Contents are the authors’ sole responsibility and do not necessarily represent official NIH views.

Ethics declarations

Competing interests

HBL, BF, and ET declare no competing interests. ERF and JC were members of teams. ERF, MS, and DE were administrators involved in the management of the team-based program described herein.

Ethical approval

All data collection methods followed Institutional Review Board protocol #19-8622H.

Informed consent

All data collection methods were performed with the informed consent of the participants and followed Institutional Review Board protocol #19-8622H.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Love, H.B., Fosdick, B.K., Cross, J.E. et al. Towards understanding the characteristics of successful and unsuccessful collaborations: a case-based team science study. Humanit Soc Sci Commun 9, 371 (2022). https://doi.org/10.1057/s41599-022-01388-x

DOI: https://doi.org/10.1057/s41599-022-01388-x
