Abstract

This manuscript discusses the relationship between women, technology manifestation, and likely prospects in the developing world. Using India as a case study, the manuscript outlines how Artificial Intelligence (AI) and robotics affect women’s opportunities in developing countries. Women in developing countries, notably in South Asia, are stereotyped as doing domestic work and are underrepresented in high-level professions. They are disproportionately underemployed and face prejudice in the workplace. The purpose of this study is to determine whether the introduction of AI would exacerbate the already precarious situation of women in the developing world or serve as a liberating force. While studies on the impact of AI on women have been undertaken in developed countries, there has been less research in developing countries. This manuscript attempts to fill that gap.

Introduction

Women in some South Asian countries, such as India, Pakistan, Bangladesh, and Afghanistan, face significant hardships, ranging from human trafficking to gender discrimination. Compared to their counterparts in developed countries, women in the developing world encounter a more biased environment. Many South Asian countries have a patriarchal, male-dominated society, and their culture has a strong preference for male offspring (Kristof, 1993; Bhalotra et al., 2020; Oomman and Ganatra, 2002). In these countries, particularly India, there have been instances where technology has been used to reinforce gender bias (Guilmoto, 2015). Sonography, or ultrasound, is one technique that has been widely misused. Intended to assess the health of the unborn child, it was misappropriated to determine the sex of the fetus and to perform an abortion if the fetus was female (Alkaabi et al., 2007; Akbulut-Yuksel and Rosenblum, 2012; Bowman-Smart et al., 2020). Many Indian states, particularly in the north, have seen a significant drop in the sex ratio due to this misuse of technology. India’s unbalanced child sex ratio has rapidly deteriorated. In 1981, there were 962 girls in the 0–6-year age group for every 1000 boys. In 1991, there were 945 girls, then 927 girls in 2001, and 918 girls at the time of the 2011 Census (Bose, 2011; Hu and Schlosser, 2012). In 1994, the Indian government passed the Pre-conception and Pre-Natal Diagnostic Techniques (Prohibition of Sex Selection) Act to halt this trend. Because of worries that ultrasound technology was being used to detect the sex of the unborn child and terminate female fetuses, the law made it unlawful for medical practitioners to divulge the sex of a fetus (Nidadavolu and Bracken, 2006; Ahankari et al., 2015). However, as Indian Census data over the years demonstrate, the law did not operate as well as intended. According to public health campaigners, this is because the Indian government has failed to enforce the law effectively; sex determination and female feticide continue because medical practitioners are inadequately overseen. Radiologists and gynecologists, on the other hand, argue that the law was misguided from the outset, holding the medical profession responsible for a societal problem (Tripathi, 2016).

In the realm of artificial intelligence, gender imbalance is a critical issue. Gender bias can enter AI through the way AI systems are developed. Algorithm developers may be unaware of their own prejudices and implicit biases and may unknowingly pass their socially rooted gender prejudices on to robots. This is evident in present trends in machine learning, which reinforce historical stereotypes of women, such as humility, mildness, and the need for protection. For instance, security robots are primarily male, but service and sex robots are primarily female. Another example is AI-driven risk analysis in the justice system. The algorithms may overlook the fact that women are less likely to re-offend than men, placing women at a disadvantage. Female gendering increases bots’ perceived humanity and the acceptability of AI. Consumers believe that female AI is more humane and more reliable and therefore better able to meet users’ particular demands. Because the feminization of robots boosts their marketability, AI developers are attempting to humanize robots by embedding feminine characteristics, striving for acceptability in a male-dominated robotic society. There is a risk that machine learning technologies will end up with encoded biases if women do not make a significant contribution. Diverse teams made up of both men and women are not just better at recognizing skewed data, but they are also more likely to spot issues that could have dire societal consequences. While women’s characteristics are highly valued in AI robots, the jobs of protein-based women (see Notes) are in jeopardy. Presently, women hold only 22% of worldwide AI positions while men hold 78% (World Economic Forum Report, 2018). According to a report in Wired, only 12% of machine learning researchers are women, a concerning ratio for a field that is meant to transform society (Simonite, 2018). Because women make up such a small percentage of the technological workforce, technology may become the tangible incarnation of male power in the coming years. The situation in the developing world is worse; a cautious approach is therefore required to ensure that male-dominated societies in some developing countries do not abuse AI’s power and use it to worsen the predicament of women who are already in a precarious situation.

This manuscript discusses the relationship between women, technology, manifestation, and probable prospects in the developing world. Taking India as a case study, the manuscript further focuses on how the ontological and epistemological perspectives used in the fields of AI and robotics will affect the futures of women in developing countries. Women in the developing world, particularly in South Asia, are stereotyped as doing domestic work and have low representation in high-level positions. They are disproportionately underemployed and endure employment discrimination. The article explores whether the introduction of AI would exacerbate the already fragile position of women in South Asia or serve as a liberating force for them. While studies on the influence of AI on women have been conducted in industrialized countries, research in developing countries has been minimal. This manuscript aims to bridge this gap.

The study tests the following hypotheses:

H01: The changing lifestyle, growing challenges, and increasing use of AI robots in daily life may not be a threat to existing human relationships.

HA1: The changing lifestyle, growing challenges, and increasing use of AI robots in daily life may be a threat to existing human relationships.

H02: There is no significant difference in the perception of females and males towards AI robots.

HA2: There is a significant difference in the perception of females and males towards AI robots.

H03: There is no major variation in the requirements and utilization of AI robots between men and women.

HA3: There is a considerable difference in the requirements and use of AI robots between men and women.

H04: When it comes to the gender of the robots, there is no substantial difference between male and female preferences.

HA4: When it comes to the gender of the robots, there is a substantial difference between male and female preferences.

The manuscript is divided into five sections. The first section is this introduction, followed by a literature review, the methodology, the results and discussion, and finally a conclusion.

Literature review

AI’s basic assumption is that human intellect can be studied and simulated so that computers can be programmed to perform tasks that people can do (Guo, 2015). Alan Turing, the pioneer of AI, felt that to develop intelligent things like Homo sapiens, humans ought to be considered as mechanical beings rather than as emotionally manifested super-beings so that they could be studied and replicated (Evers, 2005; Sanders, 2008). Rosalind Picard published “Affective Computing” in 1997, with the basic premise being that if we want smarter computers that interact with people more naturally, we must give them the ability to identify, interpret, and even express emotions. This approach went against popular belief, which held that pure logic was the highest type of AI (Venkatraman, 2020). Many human features have been transferred into non-human robots as a result of AI advancements, and expertise and knowledge will no longer be confined to humans (Lloyd, 1985). Researchers like Lucy Suchman look at how agencies are now arranged at the human-machine interface and how they might be reimagined creatively and materially (Suchman, 2006). Weber believes that recent advancements in robotics and AI are moving towards social robotics, in contrast to past work in robotics and AI, which was fairly strict and rule-oriented and expected humans to adapt their behavior to machines (Weber, 2005, p. 210). Weber goes on to say that social roboticists want to take advantage of the human predisposition to anthropomorphize machines and connect with them socially by structuring them to look like a woman, a child, or a pet (Weber, 2005, p. 211). Reeves and Nass say humans communicate with artificial media, such as computers, in the same way that they connect with other humans (Reeves and Nass, 1996). 
Studies further indicate that humans are increasingly relying on robots, and with the advancement of social artificially intelligent bots such as Alexa and Google Home, companionship between robots and humans is now becoming a reality (de Swarte et al., 2019; Odekerken-Schröder et al., 2020). It is believed that robots will not only get smarter over the next few decades, but they will also establish physical and emotional relationships with humans. This raises a fresh challenge about how people view robots intended for various types of closeness, both as friends and as possible competitors (Nordmo et al., 2020). According to Erika Hayasaki, artificial intelligence will be smarter than humans in the not-too-distant future. However, as technology advances, it may become increasingly racist, misogynistic, and inhospitable to women as it absorbs cultural norms from its designers and the internet (Hayasaki, 2017). The rapid advancement in robot and AI technology has highlighted important problems of gender bias (Bass, 2017; Leavy, 2018). There are apprehensions that algorithms will be able to target women specifically in the future (Caliskan et al., 2017). Given the increasing prominence of artificial intelligence in our communities, such attitudes risk leaving women stranded in many facets of life (UNESCO Report, 2020; Prives, 2018). Discrimination is no longer a purely human problem, as many decision-makers are aware that discriminating against someone based on an attribute such as sex is illegal and immoral. Therefore, they conceal their true intent behind an innocuous, constructed excuse (Santow, 2020). In the literature of Critical Algorithm Studies, gender bias is a commonly debated topic (Pillinger, 2019). Donna Haraway published her famous essay, “A Cyborg Manifesto”, in Socialist Review in 1985. 
In the essay, she criticized the traditional concepts of feminism as they mainly focus on identity politics. Haraway inspires a new way of thinking about how to blur the lines between humans and machines and supports coalition-building through affinity (Pohl, 2018). She employs the notion of the cyborg to encourage feminists to think beyond traditional gender, feminism, and politics (Haraway, 1987, 2006). She further claims that, with the help of technology, we may all promote hybrid identities, and people will forget about gender supremacy as they become more attached to their robots (Haraway, 2008). To date, however, this has not been the case. In recent times, growing machine prejudice has sparked great concern in academia, industry research laboratories, and the mainstream commercial media, as trained statistical models, unknown to their developers, have come to reflect critical societal imbalances like gender or racial bias (Hutson, 2017; Hardesty, 2018; Nurock, 2020; Prates et al., 2020). While previous research indicates that gendered and lifelike robots are perceived as more sentient, the researchers of those studies primarily ignored the distinction between female and male bots (DiMaio, 2021). The study by Sylvie Borau et al. indicates that female artificial intelligence is preferred by customers because it is seen to have more positive human attributes than male artificial intelligence, such as affection, understanding, and emotion (Borau et al., 2021). This is consistent with the literature, which suggests that male robots may appear intimidating in the home and that people trust female bots for in-home use (Carpenter, 2009; Niculescu et al., 2010). Another study indicates that when participants’ gender matched the gender of AI personal assistant Siri’s voice, participants exhibited more faith in the machine (Lee et al., 2021). 
Human–robot-interaction (HRI) researchers have undertaken various studies on gender impacts to determine if men or women are more inclined to prefer or dislike robots. According to research, males showed more positive attitudes toward interacting with social robots than females in general (Lin et al., 2012), and females had more negative attitudes regarding robot interactions than males in particular (Nomura and Kanda, 2003; Nomura et al., 2006). In another study, Nomura urged researchers to explore whether gendering robots for specific roles is truly required to foster human–robot interaction (Nomura, 2017). The investigation by R. A. Søraa demonstrates how gendering practices of humans influence mechanical species of robots, concluding that the more sapient a robot grows, the more gendered it may become (Søraa, 2017). “I am for more women in robotics, not for more female robots,” Martina Mara, director of the RoboPsychology R & D division at the Ars Electronica Futurelab, adds (Hieslmair, 2017). While there is a large body of work describing gender bias in AI robots in developed countries, little research has been done on the impact of AI robots on women in developing and undeveloped countries. This article will try to bridge the gaps in the studies.

Methodology

The overarching purpose of this exploratory project is to look at gender and feminist issues in artificial intelligence from a developing-world perspective. It also intends to depict how men and women in developing countries react to the prospect of having robot lovers, companions, and helpers in their everyday lives. Because such robots are not yet commercially available, a vignette experiment was conducted with 125 female and 100 male volunteers. The survey was distributed online, which aided in the recruitment of volunteers. The vast majority of those who responded (76%) were university students from India. Because the study was conducted during the COVID-19 pandemic, the questionnaire was prepared in Google Forms and distributed to participants via WhatsApp groups and email. The participants ranged from 16 to 60 years old, with a mean age of 27.25 years (SD = 7.722). The vignette was created to portray a futuristic human existence as well as a variety of futuristic robots and their influence on people. Extracts from the vignette follow: “At today’s science fair, Sam and Sophie, who are husband and wife, came across a variety of AI robots, including domestic robots, sex robots, doctor robots, nurse robots, engineer robots, personal assistant robots, and so on. This made them pleasantly surprised, and they were left to believe that in the future, AI robot technology will become so sophisticated that it will develop as an efficient alternative to protein-based humans. Back at home, Sophie utilizes Google Assistant and Alexa Assistant in the form of AI devices in the couple’s house to listen to music, cook food recipes, and entertain their children, while Sam uses them to get weather information, traffic information, book products online, set reminders, and so on. 
Mesmerized by the advancements in AI technology displayed at the science fair, Sam jokingly told Sophie that if there was a beautiful robot that could do household chores and give love and care to a partner, then the person would not need a marriage nor would he have to go through problems like break-up and divorce. Sophie disagreed, believing that the robot could not grasp or duplicate the person’s feelings and mood. Sam disagreed, claiming that a protein-based individual isn’t immune to these flaws either, because if that had been the case, human relationships would be devoid of misery, pain, violence, and breakups. Sophie thought that people need a companion but are unwilling to put up with human imperfections and that many people love the feminine traits inherent in AI robots but do not want to engage in a true relationship with real women.” After the respondents had finished reading the vignettes, they were handed the questionnaire and asked to fill it out. The questions were all delivered in English, with translation services available if necessary. Participation was entirely voluntary, and respondents were offered the option of remaining anonymous and not disclosing any further personal information such as their address, email address, or phone number. The participants gave their informed consent, and the participants’ personal information was kept private and confidential.

In this study, a varied group of respondents completed demographic data sheets as well as a self-developed questionnaire. The respondents differed in sex, age, educational level, and marital status. The purposive (simple random) sampling technique was used to attain the objectives.

The research was conducted using a self-developed questionnaire. All the items on the scale were analyzed using IBM SPSS® 23. Mean, standard deviation, item-total correlation, regression, and reliability analyses were performed on all the items. The questionnaire measured attitudes towards AI on four scales: perception, gender, requirements/use, and the threat of using AI. The significance level (alpha) was set at the conventional 0.05.
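
The reliability analysis mentioned above is commonly reported as Cronbach’s alpha. The manuscript does not state which reliability coefficient was requested from SPSS, so the following Python sketch is only an illustration under that assumption. The function name `cronbach_alpha` and the response matrix are hypothetical and are not taken from the study’s dataset.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert answers: 5 respondents x 3 items (illustrative only).
demo = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 2],
]
print(round(cronbach_alpha(demo), 3))
```

Values closer to 1 indicate that the items of a scale vary together, which is what one would hope to see for each of the four scales before comparing groups on them.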

Results and discussion

Table 1 shows positive and significant intercorrelations among the factors: perceptions towards AI robots, AI robot use and requirements, robot gender, and the threat from AI robots.

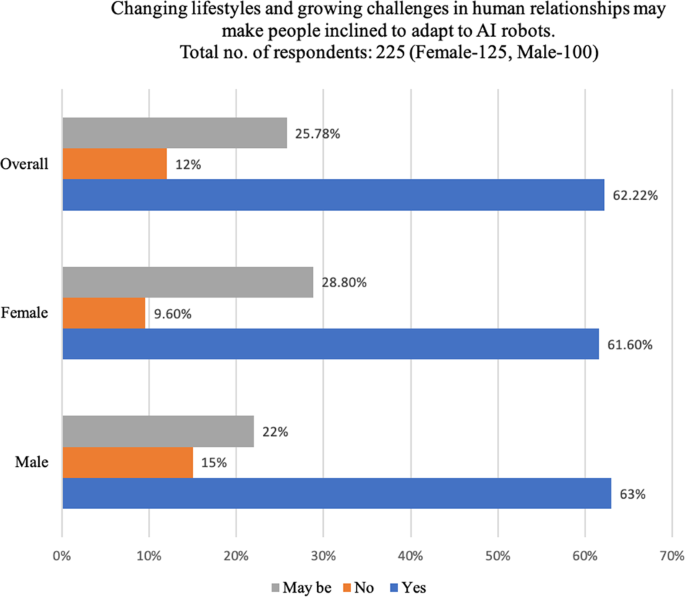

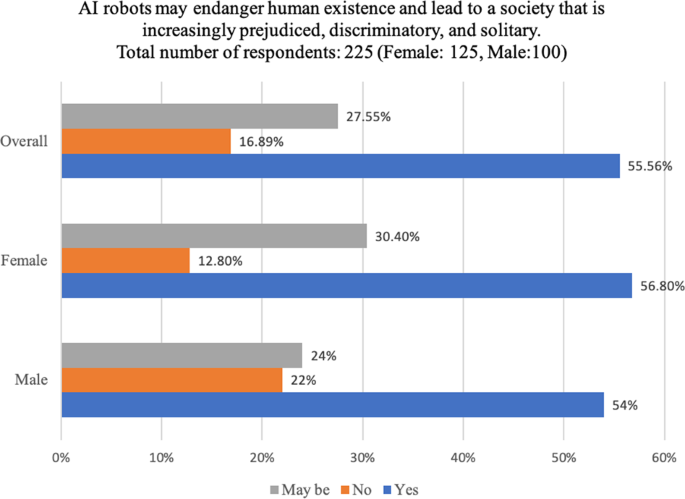

Table 2 shows a regression analysis identifying the factors that predict the perceived threat from AI robots. The threat posed by AI robots is predicted by people’s perception of AI robots and by the gender of AI robots. As a result, the alternative hypothesis (HA1), that the changing lifestyle, growing challenges, and increasing use of AI robots in daily life may be a threat to existing human relationships, is accepted. It could be inferred that people may be more motivated to live with AI robots due to changing lifestyles and increasing challenges and complications in human relationships (see Fig. 1). The majority of the respondents believe that greater use of and reliance on AI robot technology will jeopardize the existence of protein-based people and lead to more prejudiced, discriminatory, and solitary societies. This supports the finding of the study that people have an innate dread that robots will one day outwit them to the point that they will start exploiting humans (see Fig. 2). Moreover, the majority of respondents feel that AI may have a significant impact on gender balance in countries like India and that it may also affect gender balance in the subcontinent. This is in line with past concerns in India about the misuse of sonography technology (see Fig. 3).

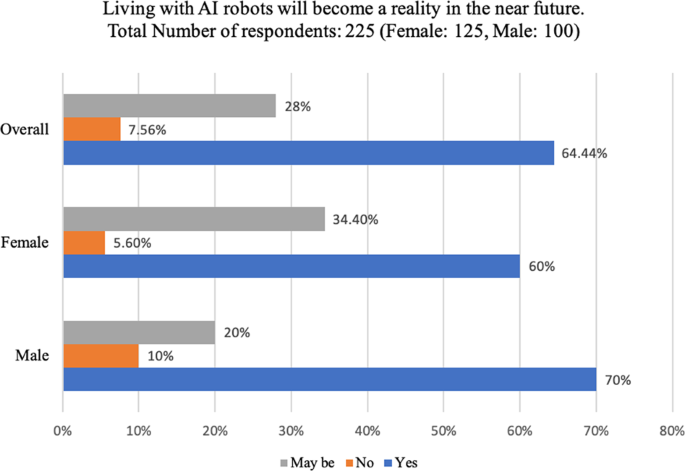

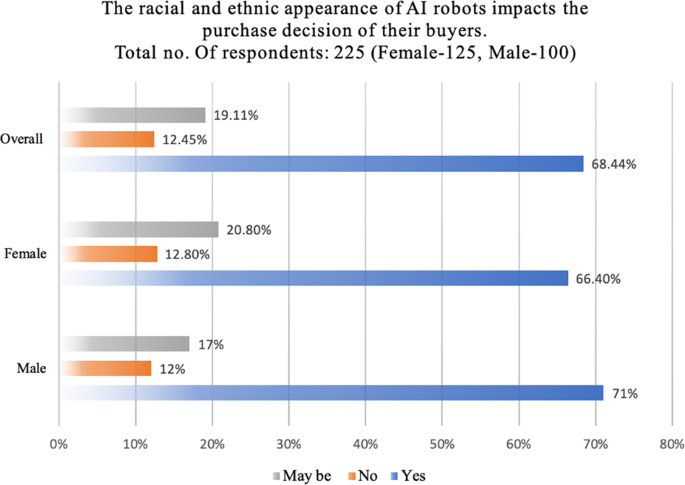

Table 3 presents the results of the t-test. The results show a significant difference between male and female respondents in their perception of AI robots. Therefore, the null hypothesis (H02) is rejected, and the alternative hypothesis (HA2), that there is a significant difference in the perception of females and males towards AI robots, is accepted. The t-test further indicates no significant difference between males and females in the requirements and use of AI robots, so the null hypothesis H03 cannot be rejected. The majority of respondents believe that living with AI robots will become a reality soon (see Fig. 4) and that the AI robots’ ethnic and racial appearance may have a significant impact on consumers’ AI robot purchasing preferences (see Figs. 5 and 6). The respondents also advocated different ethical programming for female and male robots (see Fig. 7). However, as far as the use of robots is concerned, all categories of robots showed roughly equal demand from males and females except sex and love robots, for which the share of males was more than twice that of females: 24% of males and 8.8% of females indicated a need for sex and love robots (see Fig. 8). Assistant robots, teaching robots, entertainment robots, robots that do household tasks and errands, and robots that offer care were among the most popular. Females showed the least interest in sex and love robots, while males showed the least interest in companion and friendship robots. Overall, 15.56% of respondents said they were open to having sex and love robots. This demonstrates a general concern about, and reluctance to engage in, sexual or romantic relationships with AI robots. Females, however, appear to be more hesitant and averse to engaging in intimacy with robots than males. The key reasons for this are sentiments of envy and insecurity. 
The study’s findings also show that males in developing countries have a more favorable attitude toward sex robots than females. This is consistent with the existence of gender differences in emotional intimacy and sex preferences (Johnson, 2004). Sex robots have the potential to reduce or eliminate prostitution, human trafficking, and sex tourism involving women in developing countries (Yeoman and Mars, 2012). According to data from the Indian government’s National Crime Records Bureau (NCRB), 95% of trafficked people in India are forced into prostitution (Divya, 2020; Munshi, 2020).
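
The overall figure of 15.56% follows from the two gender-specific percentages and the group sizes given in the Methodology (100 males, 125 females). A short Python check of that arithmetic is sketched below; the function name `pooled_percentage` is introduced here purely for illustration.

```python
def pooled_percentage(groups):
    """Combine per-group percentages into one overall percentage.

    groups: list of (group_size, percent_in_group) pairs.
    """
    # Convert each percentage back to a whole number of respondents.
    counts = [round(size * pct / 100) for size, pct in groups]
    total = sum(size for size, _ in groups)
    return 100 * sum(counts) / total


# Figures reported in the text: 24% of 100 males, 8.8% of 125 females.
overall = pooled_percentage([(100, 24.0), (125, 8.8)])
print(f"{overall:.2f}%")  # 35 of 225 respondents -> 15.56%

# Ratio of the male share to the female share.
print(round(24.0 / 8.8, 2))  # about 2.73, i.e. more than twice
```

The pooled value is weighted by group size, which is why it sits closer to the female percentage than a simple average of 24% and 8.8% would.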

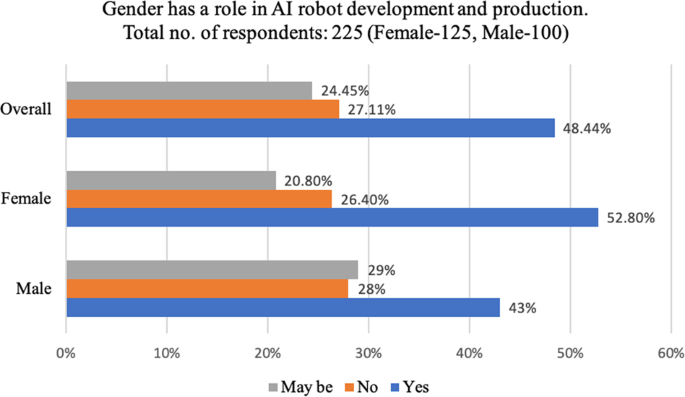

The t-test further indicates no significant difference between male and female respondents regarding the gender of the robots, so the null hypothesis H04 cannot be rejected. However, the analysis of mean scores indicates that the majority of respondents believe that gender has a role in AI robot development and production (see Fig. 9) and that AI will have a greater impact on both men and women (see Fig. 10); yet when asked which gender AI will affect most, respondents of both genders answer that AI will have the greatest impact on their own gender (see Fig. 11). While most respondents disagreed with the premise that female robots are more humane (see Fig. 12), they did agree that female robots are regarded differently (see Fig. 13). They further agreed that feminizing robots improves their marketability and acceptance (see Fig. 14), and that corporations are using femininity to humanize non-human things like AI robots (see Fig. 15). The responses also indicate that a majority of the respondents (55.11%) believe that the use of feminine features in robots increases the risk of females being stereotyped (see Fig. 16).
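
The gender comparisons above rest on independent-samples t-tests. The manuscript does not report whether equal variances were assumed, so the Python sketch below shows the pooled-variance (Student) form as one possibility. The function name `student_t` and the two score lists are hypothetical and are not study data.

```python
from math import sqrt
from statistics import mean, variance


def student_t(sample_a, sample_b):
    """Return (t statistic, degrees of freedom) for a pooled-variance t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pooled estimate of the common variance.
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_err, df


# Hypothetical scale scores for two groups (illustrative only).
male_scores = [1, 2, 3, 4, 5]
female_scores = [2, 3, 4, 5, 6]
t_stat, df = student_t(male_scores, female_scores)
print(t_stat, df)
```

The resulting statistic would then be compared with the critical value of the t distribution for `df` degrees of freedom at the 0.05 level set in the Methodology (about 2.31 for 8 degrees of freedom, two-tailed); a smaller absolute value, as in this toy example, means the null hypothesis is not rejected.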

Conclusion

The gendering of robots in AI is problematic and does not always lead to the desired improvement in acceptability, as gender makes no difference to robot functionality and performance. The study contradicts the widely held belief that women in developing nations such as India are wary of living with AI robots in the near future. It also shows that both men and women feel AI will have a stronger impact on both males and females. The majority of respondents disagreed that female robots are more humane than male robots, but they agreed that female robots are perceived differently. The majority of respondents feel that AI will have a significant impact on gender balance in nations like India, just as the sonography technique was misused for sex selection. However, they generally agree that people may be motivated to live with AI robots due to changing lifestyles and increasing challenges and complications in human relationships. Due to the rising cost of maintaining a protein-based lifestyle, humans may turn to robots to avoid the issues that come with it. The study also found that people in developing countries have an innate dread that robots will one day outwit them.

Data availability

The data underpinning the study includes a dataset that has been deposited in the Harvard Dataverse repository. Please refer to Kumar, Shailendra; Choudhury, Sanghamitra, 2022, “Gender and Feminist Considerations in Artificial Intelligence from a Developing-World Perspective, with India as a Case Study”, https://doi.org/10.7910/DVN/0T3P1E, Harvard Dataverse, V1, UNF:6:oecwn9YFMHRv369S0mDW5w== [fileUNF].

Notes

The term “protein-based” has been used in the manuscript as a synonym for “humans”, with the understanding that, except for water and fat, the human body is virtually entirely made up of protein. Muscles, bones, organs, skin, and nails are all made up of protein. Muscles are made up of around 80% protein, excluding water.

References

Ahankari AS, Myles P, Tata LJ, Fogarty AW (2015) Banning of fetal sex determination and changes in sex ratio in India. Lancet Global Health. https://doi.org/10.1016/S2214-109X(15)00053-4

Akbulut-Yuksel M, Rosenblum D (2012) The Indian Ultrasound Paradox. IZA Discussion Paper (6273).

Alkaabi JM, Ghazal-Aswad S, Sagle M (2007) Babies as desired: ethical arguments about gender selection. Emir Med J. 25(1):1–5

Bass D (2017) Researchers combat gender and racial bias in artificial intelligence. Bloomberg 1–9. https://www.bloomberg.com/news/articles/2017-12-04/researchers-combat-gender-and-racial-bias-in-artificial-intelligence

Bhalotra S, Brulé R, Roy S (2020) Women’s inheritance rights reform and the preference for sons in India. J Dev Econ 146. https://doi.org/10.1016/j.jdeveco.2018.08.001

Borau S, Otterbring T, Laporte S, Fosso Wamba S (2021) The most human bot: female gendering increases humanness perceptions of bots and acceptance of AI. Psychol Mark 38(7):1052–1068. https://doi.org/10.1002/mar.21480

Bose A (2011) Census of India, 2011. Econ Political Weekly

Bowman-Smart H, Savulescu J, Gyngell C, Mand C, Delatycki MB (2020) Sex selection and non-invasive prenatal testing: a review of current practices, evidence, and ethical issues. Prenatal Diagnosis. John Wiley and Sons Ltd.

Caliskan A, Bryson JJ, Narayanan A (2017) Semantics derived automatically from language corpora contain human-like biases. Science 356(6334):183–186. https://doi.org/10.1126/science.aal4230

Carpenter J, Davis JM, Erwin-Stewart N, Lee TR, Bransford JD, Vye N (2009) Gender representation and humanoid robots designed for domestic use. Int J Soc Robot 1(3):261–265. https://doi.org/10.1007/s12369-009-0016-4

de Swarte T, Boufous O, Escalle P (2019) Artificial intelligence, ethics and human values: the cases of military drones and companion robots. Artif Life Robot 24(3):291–296. https://doi.org/10.1007/s10015-019-00525-1

DiMaio T (2021) Women are perceived differently from men—even when they’re robots. Acad Times. https://academictimes.com/women-are-perceived-differently-from-men-even-when-theyre-robots/

Divya A (2020) Sex workers in India on the verge of debt bondage and slavery, says a study. Indian Express. https://indianexpress.com/article/lifestyle/life-style/sex-workers-in-india-on-the-verge-of-debt-bondage-and-slavery-says-a-study-7117938/

Evers D (2005) The human being as a Turing machine? The question about artificial intelligence in philosophical and theological perspectives. N Z Syst Theol Relig Philos. https://doi.org/10.1515/nzst.2005.47.1.101

Guilmoto CZ (2015) Missing girls: a globalizing issue. In: Wright JD (ed.) International encyclopedia of the social & behavioral sciences, 2nd edn. Elsevier Inc., pp. 608–613. https://doi.org/10.1016/B978-0-08-097086-8.64065-5

Guo T (2015) Alan Turing: artificial intelligence as human self-knowledge. Anthropol Today 31(6):3–7. https://doi.org/10.1111/1467-8322.12209

Haraway D (1987) A manifesto for Cyborgs: science, technology, and socialist feminism in the 1980s. Aust Fem Stud 2(4):1–42. https://doi.org/10.1080/08164649.1987.9961538

Haraway D (2006) A Cyborg Manifesto: science, technology, and socialist-feminism in the late 20th century. In: Weiss J, Nolan J, Hunsinger J, Trifonas P (eds) The International handbook of virtual learning environments. Springer, Dordrecht

Haraway DJ (2008) When species meet. University of Minnesota Press, Minneapolis

Hardesty L (2018) Study finds gender and skin-type bias in commercial artificial-intelligence systems. MIT News 1–17. https://news.mit.edu/2018/study-finds-gender-skin-type-bias-artificial-intelligence-systems-0212 (Accessed on 12 Jun 2021)

Hayasaki E (2017) Is AI sexist? Foreign Policy. https://foreignpolicy.com/2017/01/16/women-vs-the-machine/

Hieslmair M (2017) Martina Mara: “more women in robotics!”. Ars Electronica Blog. https://ars.electronica.art/aeblog/en/2017/03/08/women-robotics/

Hu L, Schlosser A (2012) Trends in prenatal sex selection and girls’ nutritional status in India. CESifo Econ Stud 58(2):348–372. https://doi.org/10.1093/cesifo/ifs022

Hutson M (2017) Even artificial intelligence can acquire biases against race and gender. Science. https://doi.org/10.1126/science.aal1053

Johnson HD (2004) Gender, grade, and relationship differences in emotional closeness within adolescent friendships. Adolescence 39(154):243–255

Kristof ND (1993) China: ultrasound abuse in sex selection. Women’s Health J/Isis Int Latin Am Caribbean Women’s Health Netw 4:16–17

Leavy S (2018) Gender bias in artificial intelligence: The need for diversity and gender theory in machine learning. In: Proceedings—international conference on software engineering. IEEE Computer Society, pp. 14–16

Lee SK, Kavya P, Lasser SC (2021) Social interactions and relationships with an intelligent virtual agent. Int J Hum Comput Stud 150. https://doi.org/10.1016/j.ijhcs.2021.102608

Lin CH, Liu EZF, Huang YY (2012) Exploring parents’ perceptions towards educational robots: Gender and socio-economic differences. Br J Educ Technol 43(1). https://doi.org/10.1111/j.1467-8535.2011.01258.x

Lloyd D (1985) Frankenstein’s children: artificial intelligence and human value. Metaphilosophy 16(4):307–318. https://doi.org/10.1111/j.1467-9973.1985.tb00177.x

Munshi S (2020) Human trafficking hit three-year high in 2019 as maha tops list of cases followed by Delhi, Shows NCRB Data. News18 Networks. https://www.news18.com/news/india/human-trafficking-hit-three-year-high-in-2019-as-maha-tops-list-of-cases-followed-by-delhi-shows-ncrb-data-2944085.html. Accessed 15 Aug 2021

Niculescu A, Hofs D, Van Dijk B, Nijholt A (2010) How the agent’s gender influence users’ evaluation of a QA system. In: Fauzi Mohd Saman et al. (eds) Proceedings—2010 International Conference on User Science and Engineering, i-USEr 2010. pp. 16–20

Nidadavolu V, Bracken H (2006) Abortion and sex determination: conflicting messages in information materials in a District of Rajasthan, India. Reprod Health Matters 14(27):160–171. https://doi.org/10.1016/S0968-8080(06)27228-8

Nomura T, Kanda T (2003) On proposing the concept of robot anxiety and considering measurement of it. In: Proceedings—IEEE international workshop on robot and human interactive communication. pp. 373–378

Nomura T, Kanda T, Suzuki T (2006) Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI Soc 20(2):138–150. https://doi.org/10.1007/s00146-005-0012-7

Nomura T (2017) Robots and gender. Gend Genome 1(1):18–26. https://doi.org/10.1089/gg.2016.29002.nom

Nordmo M, Næss JØ, Husøy MF, Arnestad MN (2020) Friends, lovers or nothing: men and women differ in their perceptions of sex robots and platonic love robots. Front Psychol 11. https://doi.org/10.3389/fpsyg.2020.00355

Nurock V (2020) Can AI care? Cuad Relac Lab 38(2):217–229. https://doi.org/10.5209/CRLA.70880

Odekerken-Schröder G, Mele C, Russo-Spena T, Mahr D, Ruggiero A (2020) Mitigating loneliness with companion robots in the COVID-19 pandemic and beyond: an integrative framework and research agenda. J Serv Manag 31(6):1149–1162. https://doi.org/10.1108/JOSM-05-2020-0148

Oomman N, Ganatra BR (2002) Sex selection: the systematic elimination of girls. Reprod Health Matters 10(19):184–188. https://doi.org/10.1016/S0968-8080(02)00029-0

Pillinger A (2019) Gender and feminist aspects in robotics. GEECCO Project, European Union. http://www.geecco-project.eu/fileadmin/t/geecco/FemRob_Final_plus_Deckblatt.pdf

Pohl R (2018) An analysis of Donna Haraway’s A cyborg manifesto: science, technology, and socialist-feminism in the late twentieth century. Routledge, London

Prates MOR, Avelar PH, Lamb LC (2020) Assessing gender bias in machine translation: a case study with Google Translate. Neural Comput Appl 32(10):6363–6381. https://doi.org/10.1007/s00521-019-04144-6

Prives L (2018) AI for all: drawing women into the artificial intelligence field. IEEE Women Eng Mag 12(2):30–32. https://doi.org/10.1109/MWIE.2018.2866890

Reeves B, Nass C (1996) The media equation: how people treat computers, television, and new media like real people and places. Cambridge University Press

Sanders D (2008) Progress in machine intelligence. Ind Robot 35(6). https://doi.org/10.1108/ir.2008.04935faa.002

Santow E (2020) Can artificial intelligence be trusted with our human rights? Aust Q 91(4):10–17. https://www.jstor.org/stable/26931483

Simonite T (2018) AI is the future-But where are the women? WIRED.COM. https://www.wired.com/story/artificial-intelligence-researchers-gender-imbalance/

Søraa RA (2017) Mechanical genders: how do humans gender robots? Gend Technol Dev 21(1–2):99–115. https://doi.org/10.1080/09718524.2017.1385320

Suchman L (2006) Human-machine reconfigurations: plans and situated actions, 2nd edn. Cambridge University Press, pp. 1–314

Tripathi A (2016) Sex determination in India: doctors tell their side of the story. Scroll.in. https://scroll.in/article/805064/sex-determination-in-india-doctors-tell-their-side-of-the-story

UNESCO Report (2020) Artificial intelligence and gender equality. Division for Gender Equality, UNESCO. https://en.unesco.org/system/files/artificial_intelligence_and_gender_equality.pdf

Venkatraman V (2020) Where logic meets emotion. Science 368(6495):1072–1072. https://doi.org/10.1126/science.abc3555

Weber J (2005) Helpless machines and true loving care givers: a feminist critique of recent trends in human–robot interaction. J Inf Commun Ethics Soc. https://doi.org/10.1108/14779960580000274

World Economic Forum Report (2018) Assessing gender gaps in artificial intelligence. https://reports.weforum.org/global-gender-gap-report-2018/assessing-gender-gaps-in-artificial-intelligence/

Yeoman I, Mars M (2012) Robots, men and sex tourism. Futures 44(4):365–371. https://doi.org/10.1016/j.futures.2011.11.004

Acknowledgements

The authors are grateful to everyone who volunteered to take part in this study and helped to create this body of knowledge. The authors thank Professor Lashiram Laddu Singh, Vice-Chancellor, Bodoland University, India, for his unwavering support and direction throughout the study, and Bishal Bhuyan and Kinnori Kashyap of Sikkim University, India, for their help with data testing using SPSS software.

Author information

Contributions

Conceptualization, SK and SC; methodology, SK and SC; software, SK; validation, SK and SC; formal analysis, SC; investigation, SK; resources, SC; data curation, SK; writing—original draft preparation, SK; writing—review and editing, SK and SC. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

Ethical approval was obtained from Bodoland University’s Authorities and Research Ethics Committee. This study was conducted in compliance with the Charter of Fundamental Rights of the EU (2010/C 83/02), the European Charter for Researchers, and the General Data Protection Regulation (GDPR).

Informed consent

Informed consent was obtained from all participants and/or their legal guardians. Every participant gave their consent before questionnaires were administered or interviews were conducted. The online survey opened only after the participant had read, understood, and agreed to what participation in the study would entail, and each participant had the option to discontinue their participation at any time.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kumar, S., Choudhury, S. Gender and feminist considerations in artificial intelligence from a developing-world perspective, with India as a case study. Humanit Soc Sci Commun 9, 31 (2022). https://doi.org/10.1057/s41599-022-01043-5

DOI: https://doi.org/10.1057/s41599-022-01043-5