Abstract

This study investigates the effect of competitive project funding on researchers’ publication outputs. Using detailed information on applicants at the Swiss National Science Foundation and their proposal evaluations, we employ a case-control design that accounts for individual heterogeneity of researchers and selection into treatment (i.e., funding). We estimate the impact of the grant award on a set of output indicators measuring the creation of new research results (the number of peer-reviewed articles), their relevance (number of citations and relative citation ratios), as well as their accessibility and dissemination as measured by the publication of preprints and by altmetrics. The results show that the funding program facilitates the publication and dissemination of additional research amounting to about one additional article in each of the three years following the funding. The higher citation metrics and altmetrics of funded researchers suggest that the impact goes beyond quantity and that funding fosters dissemination and quality.

Introduction

Scientific research generated at universities and research organizations plays an important role in knowledge-based societies (Fleming et al., 2019; Poege et al., 2019). The created knowledge drives scientific and technological progress and spills over to the broader economy and society (Hausman, 2021; Jaffe, 1989; Stephan, 2012). The growing importance of science-based industries puts additional emphasis on the question of how scientific knowledge is generated and whether public funding can accelerate knowledge creation and its diffusion. In an effort to promote scientific research, grant competitions as a means of allocating public research funding have become an important policy tool (Froumin and Lisyutkin, 2015; Oancea, 2016). The goal is to incentivize the generation of ideas and to allocate funding such that it is most likely to deliver scientific progress and eventually economic and social returns (Footnote 1). In light of these developments, it is important to understand whether research grants indeed facilitate additional, relevant research outputs and whether these are accessible to the public.

In particular individual-level analyses are highly interesting since most grants are awarded to individual researchers or to small teams of researchers. The estimation of the effect that a grant has on research outputs is, however, challenging. The main difficulties are the availability of information on all applicants (not only winners) as well as detailed information about the individual researchers (demographic information). Moreover, the non-randomness of the award of a grant through the selection of the most able researchers into the funding program results in the non-comparability of funded and non-funded researchers. The fact that researchers can receive multiple grants at the same time as well as several consecutive grants further challenges the estimation of effects from funding (Jaffe, 2002). Another difficulty stems from finding appropriate measures for research output (Oancea, 2016). Publications and citations are easy to count, but likely draw an incomplete picture of research impact, its dissemination and the extent to which funded research contributes to public debates. Moreover, both publication and citation patterns as well as funding requirements are highly field-dependent which makes output analyses in mixed samples or inter-disciplinary programs difficult.

In this study, we aim to quantify the effect of the Swiss National Science Foundation’s (SNSF) (Footnote 2) project funding (PF) grants on the individual researcher in terms of future scientific publications and their dissemination. Our analysis is based on detailed information on both grants and awardees covering 20,476 research project grant applications submitted between 2005 and 2019. This study adds to previous work in several dimensions. By focusing on the population of applicants, which constitutes a more homogeneous set of researchers than a comparison of grant winners with non-applicants, and by accounting for individual characteristics of the applicants, our study is less prone to overlooking confounding factors that affect both the likelihood of winning a grant and research outputs. Information on the evaluation scores submitted in the peer-review process of the grant proposals allows us to compare researchers with similarly rated proposals. In other words, by comparing winning applicants to non-winners and by taking into account the evaluation scores that their applications receive, we can estimate the causal effect of the grant on output while considering that both research ideas and grant writing efforts (and skills) are required for winning a grant. By studying a long time period and accounting for the timing of research grants and outcomes, we can further take into account that there are learning effects from the grant writing itself even for unsuccessful applicants (Ayoubi et al., 2019). To benchmark our results against previous studies, we first investigate the impact of grants on publication outputs. In addition, we consider preprints, which have become an important mode of disseminating research results quickly but have so far received no attention in research on funding effects. Preprints do not undergo peer review (Berg et al., 2016; Serghiou and Ioannidis, 2018), but help researchers to communicate their results to their community and to secure priority of discovery.

This study goes beyond previous work that mainly considered citation-weighted publication counts, by measuring impact in a researcher’s field of study by relative citation ratios (RCR) and field citation ratios (FCR). These metrics account for field-specific citation patterns. Additionally, we explicitly explore researchers’ altmetric scores as a measure of attention, research visibility, and accessibility of research outcomes beyond academia. Altmetrics reflect media coverage, citations on Wikipedia and in public policy documents, on research blogs and in bookmarks of reference managers like Mendeley, as well as mentions on social networks such as Twitter. While altmetrics may reflect fashionable or provocative research, they may indicate accessible insights disseminated through the increasingly important online discussion of research and may therefore measure the general outreach of research (Warren et al., 2017). Although they are a potentially important measure of dissemination to the wider public and therefore of research impact in the age of digital communication (Bornmann, 2014; Konkiel, 2016; Lăzăroiu, 2017), the effect of funding on altmetrics has not been investigated so far.

Finally, by explicitly investigating outputs over several years after funding, our study contributes new insights on the persistence of effects. A large share of project funding typically goes into the wages of doctoral and post-doctoral researchers, who require training and learning on the job. There may therefore be a considerable time lag between the start of the project and the publication of any research results, so that considering only immediate outcomes would underestimate output effects.

The results from our analysis based on different estimation methods show that grant-winning researchers publish about one additional peer-reviewed publication per year in the 3 years following funding compared with comparable but unsuccessful applicants. Moreover, these publications are also influential as measured by the number of citations that they receive later on. SNSF PF seems to promote timely dissemination, as indicated by the higher number of published preprints and researchers’ higher altmetric scores. The funding impact is particularly high for young(er) researchers as well as for researchers at a very late career stage, for whom funding keeps output levels high. These results add new insights to the international study of funding effects, which has provided partially ambiguous findings, as our review in the next section illustrates. In summary, the results presented in the following stress the important role played by project funding for research outcomes and hence for scientific progress. Institutional funding alone does not appear to facilitate successful research to the same extent as targeted grants which complement institutional core funds.

The impact of funding on research outcomes

The impact of competitive research funding on knowledge generation (typically proxied by scientific publications) has been studied in different contexts and at multiple levels: the institutional level, the research group or laboratory, and the level of the individual researcher. At the level of the university, Adams and Griliches (1998) find a positive elasticity of scientific publications to university funding. Payne (2002) and Payne and Siow (2003), using congressional earmarks and appropriation committees as instruments for research funding, present similar results. They show that a $1 million increase in funding yields 10–16 additional scientific articles. Wahls (2018) analyses the impact of project grants from the National Institutes of Health (NIH) in the United States and finds positive institution-level returns (in terms of publications and citation) to funding which, however, diminish at higher levels of funding.

At the laboratory level, the results are rather inconclusive so far which is likely due to heterogeneity in unobserved lab characteristics and the variety of grants and resources that typically fund lab-level research. An analysis of an Italian biotechnology funding program by Arora et al. (1998) finds a positive average elasticity of research output to funding, but with a stronger impact on the highest quality research groups. These findings, however, seem to be specific to engineering and biotechnology. Carayol and Matt (2004) included a broader set of fields and did not find a strong link between competitive research funding and lab-level outputs.

At the level of the individual researcher, Arora and Gambardella (2005) find that research funding from the United States National Science Foundation (NSF) in the field of Economics has a positive effect on publication outcomes (in terms of publication success in highly ranked journals) for younger researchers. For more advanced principal investigators (PIs between 5 and 15 years since PhD), however, they do not find a significant effect of NSF funding when taking the project evaluation into account. Jacob and Lefgren (2011) study personal research funding from the NIH and find that grants resulted in about one additional publication over the next 5 years. These results are close to the estimated effect from public grants of about one additional publication in a fixed post-grant window in a sample of Engineering professors in Germany (Hottenrott and Thorwarth, 2011). Likewise, a study on Canadian researchers in nanotechnology (Beaudry and Allaoui, 2012) documents a significant positive relationship between public grants and the number of subsequently published articles.

More recent studies considered output effects both in terms of quantity and quality or impact. Evaluating the impact of funding by the Chilean National Science and Technology Research Fund on research outputs by the PIs, Benavente et al. (2012) find a positive impact on the number of publications of about two additional publications, but no impact in terms of citations to these publications. In contrast to this, Tahmooresnejad and Beaudry (2019) show that there is also an influence of public grants (unlike private sector funding) on the number of citations for nanotechnology researchers in Canada. In addition, Hottenrott and Lawson (2017) find that grants from public research funders in the United Kingdom contribute to publication numbers (about one additional publication per year) as well as to research impact (measured by citations to these publications) even when grants from other private sector sources are accounted for. Results for a sample of Slovenian researchers analyzed by Mali et al. (2017), however, suggest that public grants result in ‘excellent publications’ (Footnote 3) only if researchers’ funding comes mostly from one source.

Explicitly looking at research novelty (Footnote 4), Wang et al. (2018) find that projects funded by competitive funds in Japan have on average higher novelty than projects funded through institutional funding. However, this only holds for senior and male researchers. For junior female researchers, competitive project funding has a negative relation to novelty.

In a study on Switzerland-based researchers, Ayoubi et al. (2019) find, in a sample of 775 grant applications for special collaborative, multi-disciplinary and long-term projects, that participating in the funding competition does indeed foster collaborative research with co-applicants. For grant-winners, they observe a lower average number of citations received per paper compared to non-winners (not controlling for other sources of funding that the non-winners receive). The authors relate this finding to the complexity of such interdisciplinary projects, the cost of collaboration, and the fact that also applicants who do not eventually win this particular type of grant publish more as a result of learning from grant writing or through funding obtained from alternative sources.

By studying grants distributed via the main Swiss research funding agency, we are capturing the vast majority of competitive research grants in the country. The Swiss research funding system is characterized by a relatively strong centralization of research funding distribution, with the SNSF accounting by far for the largest share of the external research funding of universities (Jonkers and Zacharewicz, 2016; Schmidt, 2008) (Footnote 5). To account for major sources outside of Switzerland such as the European Research Council (ERC), we collected information on Swiss-based researchers who received such funding during our period of analysis.

Empirical model of funding and research outputs

All of the following is based on the assumption that academic researchers strive to make tangible contributions to their fields of research. The motivations for doing so can be diverse and heterogeneous, ranging from career incentives to peer recognition (Franzoni et al., 2011). We also assume that producing these outputs requires resources (personnel, materials, equipment) and hence researchers have incentives to apply for grants to fund their research. However, research output, that is, the success of a researcher in producing results and the frequency with which this happens, also depends on researcher characteristics, characteristics of the research field and the home institution. Research success is also typically path-dependent, following a success-breeds-success pattern. Thus, we build on the assumption that a researcher who generates an idea for a research project files a grant application to obtain funding to pursue the project. If the application succeeds, the researcher will spend the grant money and may or may not produce research outputs. This uncertainty is inherent to the research process. The funding agency screens funding proposals and commissions expert reviews to assess the funding worthiness of the application. If the submitted proposal receives an evaluation that is sufficiently good in comparison to the other proposals, funding is granted in accordance with the available funding amount. This implies that even in the case of a rejected grant proposal the researcher may pursue the project idea, but without these dedicated resources available. In many instances, funding decisions are made at the margin, with some winning projects being only marginally better than non-winning projects (Fang and Casadevall, 2016; Graves et al., 2011; Neufeld et al., 2013). If the funding itself indeed has an effect on research outcomes, we would expect the funded researcher to be more successful in generating outputs both in terms of quantity and quality.

In addition to resource-driven effects, there may also be direct dissemination incentives related to public project funding. On the one hand, funding agencies may encourage or even require the dissemination of any results from the funded project. On the other hand, the researchers may have incentives to publish research outcomes to signal project success to the funding agency and win reputation gains valuable for future proposal assessments.

While estimating the contribution of funding to research outputs measured by different indicators, we have to take into consideration that the estimation of the funding effect requires assumptions about output generation by researchers. The extent to which the output produced can be attributed to the funding itself also depends on the econometric model used (Silberzahn et al., 2018). We, therefore, take a quantitative multi-method approach taking up and adding to methods applied in previous related studies. Comparing the results from different estimation methods also allows an assessment of the sensitivity of our conclusions to specific modeling assumptions. In particular, we estimate longitudinal regression models which aim to account for unobserved heterogeneity between researchers. In addition, we use non-parametric matching methods to explicitly model the selectivity in the grant awarding process.

Mixed effects models

We define \({P}_{it}\) as the research output of researcher i in year t and \({F}_{it-1}\) as a binary variable indicating whether this same researcher i had access to SNSF funding in year t−1. Note that this indicator takes the value one for the entire duration of the granted project. The funding information is lagged by one year as an immediate effect of funding on output is unlikely. Note that we will differentiate between funding as PI and as co-PI (only). The general empirical model can then be expressed as

\[{P}_{it}=f\left({F}_{it-1},{X}_{it},{T}_{t},{v}_{i},{\epsilon }_{it};\phi \right)\]

with ϕ being the vector of parameters. \({X}_{it}\) represents a vector with explanatory factors at t including observed characteristics of the researcher and the average quality of the grant applications as reflected in the average evaluation score. Further, \({T}_{t}\) captures the overall time trend, \({v}_{i}\) is the unobserved individual heterogeneity, and \({\epsilon }_{it}\) is the error term.

The specification above describes a production function for discrete outcomes following Blundell et al. (1995). As a first estimation strategy, count data models will be used to estimate research outputs such as the number of peer-reviewed articles or preprints. Moreover, these models account for unobserved individual characteristics, \({v}_{i}\), which likely predict research outputs besides observable characteristics and are independent of project funding. One way to estimate this unobserved heterogeneity is to use random intercepts for the individuals (Footnote 6), here the researchers, and account for the hierarchical structure of the information (i.e., panel data). Thus, we estimate mixed count models to capture \({v}_{i}\) (Footnote 7). The mixed regression models for count data take the following form:

In addition to count-type outputs, we estimate the effect of funding on continuous output variables such as the average number of yearly citations per article or the researcher’s average yearly altmetric score. For these output types we estimate linear regression models based on a comparable model specification with regard to \({F}_{it-1}\), \({X}_{it}\), \({T}_{t}\) and \({v}_{i}\).
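
The count and linear specifications described above can be sketched as follows. This is an illustrative form under stated assumptions, not necessarily the authors' exact equations: the Poisson distribution with log link, the normally distributed random intercept, and the coefficient names β and γ (taken to be elements of ϕ) are all assumed here.

\[{P}_{it}\mid {v}_{i} \sim \mathrm{Poisson}({\lambda }_{it}),\qquad \log {\lambda }_{it}=\beta {F}_{it-1}+{X}_{it}^{\prime }\gamma +{T}_{t}+{v}_{i},\qquad {v}_{i} \sim N(0,{\sigma }_{v}^{2})\]

For continuous outputs, the analogous linear mixed model would be

\[{P}_{it}=\beta {F}_{it-1}+{X}_{it}^{\prime }\gamma +{T}_{t}+{v}_{i}+{\epsilon }_{it}\]

In both forms β is the coefficient of interest. In the count model, exp(β) is the multiplicative change in expected output associated with having had funding in the previous year.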

Non-parametric treatment effect estimation

In an alternative estimation approach, we apply a non-parametric technique: The average treatment effect of project funding on scientific outcomes is estimated by an econometric matching estimator which addresses the question of “How much would a funded researcher have published (or how much attention in terms of altmetrics or citations would her research have received) if she had not received the grant?”. This implies comparing the actually observed outcomes to the counterfactual ones to derive an estimate for the funding effect. Given that the counterfactual situation is not observable, it has to be estimated.

To do so, we employ nearest-neighbor propensity score matching. That is, we pair each grant recipient with a non-recipient by choosing the nearest ‘twin’ based on the similarity in the estimated probability of receiving a grant and the average score that the submitted applications received. Note that we select the twin researcher from the sample of unsuccessful applicants. Matching on both the general propensity to win (which includes personal and institutional characteristics) and the proposal’s evaluation score allows us to match on individual as well as on proposal (or project idea) characteristics and thus to find the most comparable individuals.

The estimated propensity to win a grant is obtained from a probit estimation on a binary treatment indicator which takes the value of one for each researcher-year combination in which an individual had received project funding. The advantage of propensity score matching compared to exact matching is that it allows combining a larger set of characteristics into a single indicator, avoiding the curse of dimensionality. Nevertheless, introducing exact matching for some key indicators can improve the balancing of the control variables after matching. In particular, we match exactly on the year of the funding round, as this gives treated and control individuals the same post-treatment time window and also captures time trends in outputs which could affect the estimated treatment effect. In addition, we match only within a research field so as not to confound the treatment effect with heterogeneity in resource requirements and discipline differences in output patterns. We follow a matching protocol as suggested by Gerfin and Lechner (2002) and calculate the Mahalanobis distance between a treatment and a control observation as

\[{MD}_{ij}={({Z}_{j}-{Z}_{i})}^{\prime }{\Omega }^{-1}({Z}_{j}-{Z}_{i})\]

where Ω is the empirical covariance matrix of the matching arguments (propensity score and evaluation score). We employ a caliper to avoid bad matches by imposing a threshold on the maximum distance allowed between a treated observation and its control. That is, a match for researcher i is only chosen if \(| {Z}_{j}-{Z}_{i}| < \epsilon\), where ϵ is a pre-specified tolerance. After having paired each researcher with the most similar non-treated one, any remaining differences in observed outcomes can be attributed to the funding effect. The resulting estimate of the treatment effect is unbiased under the conditional independence assumption (Rubin, 1977). In other words, in order to overcome the selection problem, participation and potential outcome have to be independent for individuals with the same set of characteristics \({X}_{it}\) (Footnote 8). Note that by matching on the evaluation score in addition to the propensity score, our approach is similar to the idea of a regression discontinuity design (RDD). The advantage of the selected approach is, however, that it allows us to draw causal conclusions for a more representative set of individuals. While RDDs have the advantage of high internal consistency, this comes at the price of deriving effect estimates only for researchers around the cut-off (de la Cuesta and Imai, 2016). Yet, in our case, this threshold is not constant but depends on the pool of submitted proposals, and there is considerable variation in the evaluation scores that winning proposals receive. In our application, we also expect heterogeneous impacts across researchers, so that a local effect might be very different from the effect for researchers away from the threshold for selection (Battistin and Rettore, 2008).
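
The matching step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Applicant` record, its field names, and the example values are hypothetical; controls are drawn with replacement; the covariance matrix is computed over all supplied rows; and the caliper is applied to the quadratic-form (squared Mahalanobis) distance.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Applicant:
    rid: str           # hypothetical researcher identifier
    year: int          # funding round (matched exactly)
    field: str         # research field (matched exactly)
    pscore: float      # estimated propensity to win a grant
    eval_score: float  # average evaluation score of the proposals
    funded: bool


def inverse_covariance(rows):
    """Inverse of the 2x2 empirical covariance of (pscore, eval_score)."""
    xs = [r.pscore for r in rows]
    ys = [r.eval_score for r in rows]
    mx, my = mean(xs), mean(ys)
    n = len(rows) - 1
    sxx = sum((x - mx) ** 2 for x in xs) / n
    syy = sum((y - my) ** 2 for y in ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    det = sxx * syy - sxy ** 2
    return ((syy / det, -sxy / det), (-sxy / det, sxx / det))


def distance(a, b, inv):
    """Quadratic form (Z_b - Z_a)' Omega^{-1} (Z_b - Z_a)."""
    dx, dy = b.pscore - a.pscore, b.eval_score - a.eval_score
    return (dx * (inv[0][0] * dx + inv[0][1] * dy)
            + dy * (inv[1][0] * dx + inv[1][1] * dy))


def match(rows, caliper):
    """Map each funded applicant to its nearest unfunded twin, if any."""
    inv = inverse_covariance(rows)
    controls = [r for r in rows if not r.funded]
    pairs = {}
    for t in (r for r in rows if r.funded):
        # Exact matching on funding round and research field.
        pool = [c for c in controls if c.year == t.year and c.field == t.field]
        if not pool:
            continue
        best = min(pool, key=lambda c: distance(t, c, inv))
        # The caliper discards matches that are too far away.
        if distance(t, best, inv) < caliper:
            pairs[t.rid] = best.rid
    return pairs


applicants = [
    Applicant("t1", 2012, "STEM", 0.70, 5.0, True),
    Applicant("c1", 2012, "STEM", 0.68, 4.9, False),
    Applicant("c2", 2012, "STEM", 0.30, 3.0, False),
    Applicant("c3", 2012, "SSH", 0.70, 5.0, False),
    Applicant("t2", 2013, "LS", 0.55, 4.0, True),
]
pairs = match(applicants, caliper=1.0)
```

In this toy data, `c3` has scores identical to `t1` but is excluded because it belongs to a different field, and `t2` stays unmatched because no control shares its funding round and field.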

Using the matched comparison group, the average effect on the treated can thus be calculated as the mean difference of the matched samples:

\[\widehat{\mathrm{ATT}}=\frac{1}{{n}^{T}}\sum _{i=1}^{{n}^{T}}\left({P}_{i}^{T}-{P}_{j}^{{\rm {C}}}\right)\]

with \({P}_{i}^{T}\) being the outcome variable in the treated group, \({P}_{j}^{{\rm {C}}}\) being the counterfactual for i, and \({n}^{T}\) being the sample size (of treated researchers) (Footnote 9).
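
As a small numerical illustration of this mean difference, the sketch below averages outcome gaps over matched pairs. The function name, the dictionary layout, and the example values are hypothetical and chosen only for illustration.

```python
from statistics import mean


def att(treated_outcomes, control_outcomes, pairs):
    """Mean outcome difference over matched (treated, control) pairs.

    pairs maps each treated researcher id to the id of its matched control.
    """
    diffs = [treated_outcomes[t] - control_outcomes[c] for t, c in pairs.items()]
    if not diffs:
        raise ValueError("no matched pairs")
    return mean(diffs)


# Hypothetical yearly article counts for two matched pairs.
treated = {"t1": 6, "t2": 4}
control = {"c1": 5, "c2": 2}
effect = att(treated, control, {"t1": "c1", "t2": "c2"})
```

Treated researchers without a match simply do not enter `pairs`, so the average is taken over the matched treated sample only.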

Data and descriptive analysis

Data provided by the SNSF have been used to retrieve a set of researchers of interest. These researchers have applied to the SNSF funding instrument project funding (PF) or Sinergia (Footnote 10) as main applicant (i.e., PI) or co-applicant (Footnote 11) (i.e., co-PI). The PF scheme follows a bottom-up approach: it funds the costs of research projects on a topic of the applicant’s own choice.

The study period is dynamic and researcher-specific: it starts with the year in which the SNSF observes the researcher for the first time as (co-)PI in PF or as a career funding grant holder (after the postdoctoral level), that is, the year in which the independent research career starts. However, this study period has its lower bound in 2005. The period ends in 2019 for everyone, so some researchers are observed for a longer period than others. For each researcher, a pre-sample period is defined, comprising the 5 years before the observation started. Pre-sample information on all outcome variables of interest is needed to account for heterogeneity between the individuals in the way that they enter the study in linear feedback models and for matching on ex-ante performance in the non-parametric estimation approach. Further, only researchers who applied at least once after 2010 to the SNSF are included to ensure a minimum research activity. In a next step, we retrieve a unique Dimensions identifier (Dim-ID) from the Dimensions database (Digital Science, 2018) using a person’s name, research field, age and information about past and current affiliations (Footnote 12). The Dim-ID enables us to collect disambiguated publication information for these researchers to be used in the empirical analysis.
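
The researcher-specific windows described above can be expressed as a short sketch. The function and constant names are hypothetical, and the code assumes that the pre-sample period is the five calendar years immediately preceding the first observed study year.

```python
STUDY_START = 2005      # lower bound of the study period
STUDY_END = 2019        # common end of the study period
PRE_SAMPLE_YEARS = 5    # length of the pre-sample period


def study_window(first_observed_year):
    """Return (observed_years, pre_sample_years) for one researcher."""
    start = max(first_observed_year, STUDY_START)
    if start > STUDY_END:
        raise ValueError("researcher first observed after the study period")
    observed = list(range(start, STUDY_END + 1))
    pre_sample = list(range(start - PRE_SAMPLE_YEARS, start))
    return observed, pre_sample


def is_included(application_years):
    """Keep only researchers with at least one application after 2010."""
    return any(year > 2010 for year in application_years)


# A researcher first seen in 1998 is observed over the full 2005-2019 period.
observed, pre_sample = study_window(1998)
```

A researcher first observed in 2012 would instead be followed for eight years, with 2007 to 2011 as the pre-sample period.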

Variables and descriptive statistics

The original data set comprised 11,228 eligible researchers. Of these, 10% (1,143) could not be identified in the Dimensions database. Among the researchers found using their name, the supplementary information from the SNSF database (country, ORCID, institution, etc.) did not match in 1% of the cases, so that we could not be sure that we had found the correct researcher. For 12% of the researchers found in Dimensions, no unique ID could be retrieved. After removing these observations, we observe a total of 8,793 distinct researchers (78% of the eligible researchers; Footnote 13) and the final data set is composed of 82,249 researcher-year observations. On average, researchers are observed for 9.35 years. The maximum observation length, from 2005 to 2019, is 15 years, and 2,319 researchers are observed over this maximal study period. All the publication data were retrieved in September 2020.
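
The headline shares in this paragraph follow directly from the reported counts, as the following quick check shows. Only figures stated in the text are used; the variable names are ours.

```python
eligible = 11_228          # eligible researchers in the original data set
not_identified = 1_143     # not found in the Dimensions database
retained = 8_793           # distinct researchers in the final data set
researcher_years = 82_249  # researcher-year observations

not_identified_share = not_identified / eligible   # about 0.10
retained_share = retained / eligible               # about 0.78
mean_years_observed = researcher_years / retained  # about 9.35
```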

Research funding

The central interest of the study is the effect competitive project funding has on a researcher’s subsequent research outputs. The information on SNSF funding indicates whether a researcher had access to SNSF funding as a PI and/or co-PI in a certain year. We differentiate between PIs and co-PIs to test whether the funding effect differs depending on the role in the project. On average the researchers in our data set are funded by the SNSF for 4.6 years during the observation period; for 3.3 years as PI of a project (see Table 1). In total 20,476 distinct project applications (not necessarily funded) are included in the data. On average a PI is involved in a total of 3.7 project applications (as PI or co-PI); in 3.1 submissions as PI, and in 2.3 submissions as co-PI. About 66% of all projects in the data have one sole PI applying for funding, 22% have a PI and a co-PI, 8% a PI and two co-PIs, and 4% are submitted by a PI together with three or more co-PIs. Note that the percentage of successful applications in our data set is 48% over the whole study period (the success rate is ~60% for STEM applications, ~44% in SSH, and lowest in LS at ~40%).
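
To illustrate how yearly funding information of this kind can be turned into the lagged, role-specific funding indicator used in the regression models, here is a minimal sketch. The tuple layout `(start_year, end_year, role)` and the function names are assumptions made for illustration.

```python
def funded_years(grants, role):
    """Calendar years covered by at least one grant held in the given role."""
    return {
        year
        for start, end, grant_role in grants
        if grant_role == role
        for year in range(start, end + 1)
    }


def lagged_indicator(grants, role, years):
    """For each year t, 1 if a grant in `role` was running in t-1, else 0."""
    active = funded_years(grants, role)
    return {t: int(t - 1 in active) for t in years}


# Hypothetical researcher: PI on a 2010-2012 project, co-PI in 2015-2016.
grants = [(2010, 2012, "PI"), (2015, 2016, "coPI")]
pi_lagged = lagged_indicator(grants, "PI", range(2010, 2015))
```

The indicator stays at one for every year of the project's duration and switches on one year after the project starts, reflecting the one-year lag.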

These numbers reflect that in the Swiss research funding system, project funding does play an important role, but that institutional core funding is also relatively generous. The latter accounts, on average, for more than 70% of overall university funding (Reale, 2017; Schmidt, 2008). This allows researchers to remain in the system without project funding. While institutional funding is overall quite homogeneous across similar research organizations in the country, it differs between institution types. It is therefore important to account for institutional funding in the following analyses as it provides important complementary resources to researchers (Jonkers and Zacharewicz, 2016). Moreover, within the different institution types, we also account for the research field and the career stage of researchers as this may also capture individual differences in core budgets. We present sample characteristics in terms of these variables in the subsection “Confounding variables”. Another important aspect to consider when analyzing the effect of research funding is funding from sources other than institutional funding (Hottenrott and Lawson, 2017). In all European countries the ERC plays an important role. Hence, we collected data on Swiss-based researchers who received ERC funding and matched them to our sample. Of all the researchers considered in this study, only a small fraction (4.2%) ever received funding from the ERC. Most of these researchers (87%) had a PF grant running at the same time. Fig. S.3 in the supplementary material shows the count of observations in the different funding groups in more detail (Footnote 14).

Research outputs

Table 1 summarizes the output measures as well as the funding length. The most straightforward research output measure is the number of (peer-reviewed) articles. On average, a researcher in our data publishes 4.9 articles each year. The annual number of articles is higher in STEM (5.7) and the life sciences (LS) (6.5) than in the Social Sciences and Humanities (SSH), where researchers published about 1.5 articles per year, on average. See Table S.1 in the supplementary material for differences in all output variables (as well as funding and researcher information) by field. In some disciplines, such as biomedical research, physics, or economics, preprints of articles are widely used and accepted (Berg et al., 2016; Serghiou and Ioannidis, 2018). As preliminary outputs they are made available early and are thus an interesting additional output, potentially indicating the dissemination and accessibility of research results. The average yearly number of preprints (0.4) is much lower than that of articles, because preprints emerged as a research output only rather recently and are more common in STEM fields than in others (see Table S.1 in the supplementary material). Another output measure is the number of yearly citations per researcher. This is the sum of all citations received during a specific year by all of a researcher’s peer-reviewed articles published since the start of the observation period. Citations to articles published before the start of the observation period are not taken into account. On average a researcher’s work in the study period is cited 132.9 times per year. This variable is, however, substantially skewed, with 6.8% of researchers accounting for 50% of all citations, and highly correlated with the overall number of articles that a researcher published. There are also field differences: the average citation numbers in the Life Sciences (185.2) and the STEM fields (157.7) differ, but both are substantially higher than in the SSH (25.6). The average number of citations per (peer-reviewed) article of a researcher is informative about the average relevance of a researcher’s article portfolio. The articles in our sample are cited on average 4.2 times per year.
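
A concentration figure of the kind quoted above (a small share of researchers accounting for half of all citations) can be computed as in the following sketch. The function name and the toy citation counts are hypothetical; the paper's own figure comes from the authors' data, not from this code.

```python
def share_accounting_for(citations_per_researcher, fraction=0.5):
    """Smallest share of researchers whose citations reach `fraction` of the total."""
    counts = sorted(citations_per_researcher, reverse=True)
    total = sum(counts)
    if total == 0:
        raise ValueError("no citations in the sample")
    running = 0
    for k, count in enumerate(counts, start=1):
        running += count
        if running >= fraction * total:
            return k / len(counts)


# Toy example: one highly cited researcher among five.
top_share = share_accounting_for([60, 10, 10, 10, 10])
```

The more skewed the distribution, the smaller the returned share; with perfectly equal citation counts it equals the chosen fraction (rounded up to whole researchers).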

The altmetric score of each article is retrieved as an attention or accessibility measure of published research. Following the recommendation by Konkiel (2016), we employ a ‘basket of metrics’ rather than single components of the altmetric score. This score is a product of Digital Science and represents a weighted count of the amount of attention that is picked up for a certain research output (Footnote 15). Note that the average altmetric score for a researcher at t is the mean of the altmetrics of all articles published in the year t (Footnote 16). On average a researcher in our sample achieves an altmetric score of 13. Similar to citation counts, this variable is heavily skewed. The differences in altmetrics across disciplines are rather small (see Table S.1 in the supplementary material).

When using simple output metrics like citation counts, it is important to account for field-specific citation patterns. In order to do so, we collect the relative citation ratio (RCR) and the field citation ratio (FCR). The RCR was developed by the NIH (Hutchins et al., 2016). As described by Surkis and Spore (2018), the RCR evaluates an article’s citation count normalized to the citations received by NIH-funded publications in the same area of research and year. Calculating the RCR requires dynamically determining the field of an article based on its co-citation network, that is, all articles that have been cited by articles citing the target article. The advantage of the RCR is that it field- and time-normalizes the number of citations that an article received. A paper that is cited exactly as often as one would expect based on the NIH norm receives an RCR of 1, and an RCR larger than one indicates that an article is cited more than expected given the field and year. The RCR is only calculated for articles that are present on PubMed, have at least one citation and are older than two years. Thus, when analyzing this output metric, we focus on researchers in the life sciences only. The FCR is calculated by dividing the number of citations a paper has received by the average number received by publications published in the same year and in the same fields of research (FoR) category. The FCR therefore depends strongly on the definition of the FoR. Dimensions uses FoR that are closest to the Australian and New Zealand Standard Research Classification (ANZSRC, 2019). For the calculation of the FCR a paper has to be older than two years. Similar to the RCR, the FCR is normalized to one and an article with zero citations has an FCR of zero. Like the altmetric, the RCR and FCR cannot be retrieved time-dependently but are snapshots at the day of retrieval.
We will refer to the average FCR/RCR at t as the average of the FCRs/RCRs of the papers published in t. According to Hutchins et al. (2016), articles in high-profile journals have average RCRs of ~3. The key difference between the RCR and the FCR is that the FCR uses a fixed definition of the research field, while for the RCR a field is relative to each publication considered. Table S.1 in the supplementary material shows that the average rates are comparable across fields.
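The FCR definition given above (citations of a paper divided by the average citations of publications from the same year and FoR category) can be sketched as follows. This is a simplified illustration, not the Dimensions implementation: the function name, the record layout and the example papers are assumptions, and the reference average is computed only over the papers passed in rather than over a full database.

```python
from collections import defaultdict


def field_citation_ratios(papers):
    """Return {paper id: citations / mean citations in the same field and year}.

    papers: list of dicts with keys 'id', 'field', 'year', 'citations'
    (a hypothetical layout chosen for this sketch).
    """
    totals = defaultdict(lambda: [0, 0])  # (field, year) -> [citation sum, paper count]
    for p in papers:
        cell = totals[(p["field"], p["year"])]
        cell[0] += p["citations"]
        cell[1] += 1

    ratios = {}
    for p in papers:
        citation_sum, n_papers = totals[(p["field"], p["year"])]
        mean = citation_sum / n_papers
        # A paper with zero citations gets a ratio of zero.
        ratios[p["id"]] = p["citations"] / mean if mean > 0 else 0.0
    return ratios


# Hypothetical papers (not study data).
papers = [
    {"id": "p1", "field": "Economics", "year": 2015, "citations": 0},
    {"id": "p2", "field": "Economics", "year": 2015, "citations": 10},
    {"id": "p3", "field": "Economics", "year": 2015, "citations": 20},
    {"id": "p4", "field": "Physics", "year": 2015, "citations": 4},
]
print(field_citation_ratios(papers))
```

A paper cited exactly as often as the field-year average receives a ratio of 1, which matches the normalization described in the text.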

Figure 1 represents the evolution of the yearly average number of articles, preprints and the altmetric score per researcher depending on the funding status of the year before (as co- and/or PI). The number of articles published each year has been rather constant or only slightly increasing, while the preprint count increased substantially over the past years. Recent papers also have higher altmetric scores than older publications, even though they had less time to attract attention. It is important to note, however, that since we do not account for any researcher characteristics here, the differences between funded and unfunded researchers cannot be interpreted as being the result of funding. Yet, the increasing prevalence of preprints and altmetrics suggests that they should be taken into account in funding evaluations.

Confounding variables

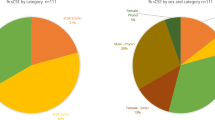

Table 1 further shows descriptive statistics for the gender of the researchers, their biological age, as well as their field of research and institution type. These variables capture drivers of researcher outputs and are therefore taken into account in all our analyses. Almost 77% of the researchers are male and about 60% are employed at cantonal universities, 24% at technical universities (ETH Domain) and about 17% at Universities of Applied Sciences (UAS) and Universities of Teacher Education (UTE). The research field and institution type are defined as the area or the type the researcher applies most often to or from. The field of life sciences has the largest proposal share in the data with about 39%. These variables serve as confounders together with the pre-sample information on the outcome variables since they may explain differences in output and therefore need to be accounted for. Note that 1615 researchers in our data did not publish any peer-reviewed papers in the five-year pre-sample period. Table S.1 in the supplementary material shows how the confounding variables vary between the research fields.

The submitted project proposals are graded on a six-point scale: 1 = D, 2 = C, 3 = BC, 4 = B, 5 = AB, 6 = A. We use the information on project evaluation to control for (or match on) average project quality following the approach by Arora and Gambardella (2005). We construct the evaluation score as a rolling average over the last four years of all the grades a researcher ‘collected’ in submitted proposals as PI and co-PI (if no grade was available over the last four years for a certain researcher, we use her all-time average). We do so because future research is also impacted by the quality of past and co-occurring projects. The funding decision is, however, not exclusively based on those grades. It has to take the amount of funding available to the specific call into account. Therefore, the ranking of an application among the other competing applications plays an important role and even highly rated projects may be rejected if the budget constraint is reached. Projects graded A or AB have good chances of being funded, while projects graded D are never funded; see Fig. S.2 in the supplementary material for the distribution of the grades among rejected and accepted projects.
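The construction of the evaluation score can be sketched as below. The grade-to-points mapping is taken from the text; everything else is an assumption made for illustration: the function name, the input layout, the choice to include the current year in the four-year window, and the choice to compute the all-time fallback only from grades up to the current year.

```python
# Six-point scale as stated in the text.
GRADE_POINTS = {"D": 1, "C": 2, "BC": 3, "B": 4, "AB": 5, "A": 6}


def evaluation_score(proposals, year, window=4):
    """Rolling mean grade for one researcher.

    proposals: list of (submission_year, grade_letter) tuples (hypothetical layout).
    Uses grades from the last `window` years up to and including `year` (assumption);
    falls back to the mean of all grades up to `year` if the window is empty.
    Returns None if the researcher has no graded proposal yet.
    """
    past = [(y, GRADE_POINTS[g]) for y, g in proposals if y <= year]
    recent = [points for y, points in past if y > year - window]
    pool = recent if recent else [points for _, points in past]
    return sum(pool) / len(pool) if pool else None


# Hypothetical proposal history (not study data).
history = [(2008, "A"), (2009, "BC"), (2015, "AB")]
print(evaluation_score(history, 2010))  # window covers 2007-2010: mean of A and BC
print(evaluation_score(history, 2014))  # window 2011-2014 is empty: all-time mean
print(evaluation_score(history, 2016))  # window covers 2013-2016: only the AB grade
```

Restricting the fallback to grades already observed avoids using information from future proposals, which seems the natural reading for a control variable, but the paper does not spell this out.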

Note that researchers with missing age were deleted since this is an important control variable; missing institution types were regrouped into an ‘unclassified’ category. Additionally, for the analyses, the funding information will be used with a lag of one (or more) years, so that at least one year of observation is lost per researcher. The final sample used for the analyses consists of 72,738 complete observations from 8,282 unique researchers.

Results

Mixed effects model—longitudinal regression models

Table 2 summarizes the results of both negative binomial mixed models for the count outcomes (yearly numbers of publications and preprints). The incidence rate ratios (IRR) inform us on the multiplicative change of the baseline count depending on funding status. The model for the publication count was fitted on the whole data set, while the model for the preprint count was fitted on data since 2010, because the number of preprints was generally rather small before then. SNSF funding seems to have a significant positive effect on research productivity, regarding yearly publication counts (1.21 times higher for PIs than for researchers without SNSF funding) as well as yearly preprint counts (1.30 times higher for PIs than for researchers without SNSF funding) (Footnote 17). An ‘average’ researcher without SNSF funding in t−1 publishes on average 4.64 articles in t. A similar researcher (with all confounding variables kept constant) with SNSF funding as PI in t−1 would publish 5.6 articles in t. PIs on an SNSF project thus publish more. The same is true for male researchers and, for preprints, for younger researchers. Researchers from the ETH Domain publish more than those from cantonal universities. Researchers publish more in recent years. Researchers in the LS publish more peer-reviewed articles than researchers in other research areas. Regarding preprints, we observe a different picture: here, STEM researchers publish more than researchers in LS.
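The step from the incidence rate ratio to the predicted article count quoted above is a simple multiplication, sketched here. The two numbers are those reported in the text; the function name is hypothetical and the sketch ignores random effects and any rounding in the reported figures.

```python
def predicted_count(baseline_count, irr):
    """Expected count for the funded group: baseline count times the incidence rate ratio."""
    return baseline_count * irr


baseline_articles = 4.64  # 'average' researcher without SNSF funding in t-1 (from the text)
irr_pi = 1.21             # IRR for funding as PI (from the text)

print(round(predicted_count(baseline_articles, irr_pi), 1))  # 5.6
```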

Table 3 summarizes the results of the four linear mixed models for the continuous outcomes: the average yearly number of citations per publication, the yearly average altmetric, the yearly average RCR and the yearly average FCR. Regarding the citation patterns, there is strong evidence that SNSF funding has a positive effect; especially PIs on SNSF projects have their articles cited more frequently (increase in average yearly citations of 0.33 per article for the PIs). Articles by LS researchers are cited more than those by researchers from other fields. The same holds for researchers from the ETH Domain and for older researchers. For altmetrics and citation ratios, we employ a logarithmic scale to account for the fact that their distributions are highly skewed; we can then interpret the coefficients as percentage changes. Regarding altmetrics, research funded by the SNSF gets an attention score that is 5.1% higher (by September 2020) compared to other researchers. Researchers in LS have by far the highest altmetrics, followed by researchers in the SSH. There is no strong evidence for an effect of the funding on the average yearly RCR. This implies that, in the short run, research outcomes of SNSF-funded researchers are cited as often as a mixed average of articles funded by the NIH or other important research funded worldwide, but not significantly more than that. Younger researchers and researchers from the ETH Domain have higher RCRs. The results also suggest a positive relation between SNSF funding and a researcher’s FCR.

Non-parametric estimation

While the previous estimation approaches modeled unobserved heterogeneity across individuals, the non-parametric matching approach addresses the selection into the treatment explicitly. It accounts for selection on observable factors which may—if not accounted for—lead to wrongly attributing the funding effect to the selectivity of the grant-awarding process. We model a researcher’s funding success as a function of researcher characteristics. In particular, this includes their previous research track record (publication experience and citations) and the average of all evaluation scores for submitted proposals (PI or co-PI) received by the researcher. In addition, we include age, gender, research field and institution type. We obtain the propensity score to be used in the matching process as described in the section “Non-parametric treatment estimation”.

The results from the probit estimation on the funding outcome (success vs. rejection) are presented in Table 4. The table first shows the model for the full sample, which provides the propensity score for the estimation of treatment effects on articles and citations to these articles, and on preprints. The second model is estimated on the sub-sample of researchers in the LS and is used for estimating treatment effects on the RCR. The third model is estimated on the full sample, but accounts for pre-sample FCR, and provides the propensity score for the estimation of the treatment effect on the FCR. The fourth model controls for pre-sample altmetrics values and serves the estimation of the treatment effect on future altmetrics scores. Consistently across all specifications, the results show that the evaluation score is a key predictor of grant success. The higher the score, the more likely it is that a proposal gets approved. The grant likelihood is higher for male researchers than for female researchers, as well as for older researchers. The latter result can have various reasons, which are outside the scope of this paper and are being discussed elsewhere (Footnote 18). As expected, past research performance is another strong predictor of grant success, where peer-reviewed articles matter more than preprints. In addition to quantity, past research quality (as measured by citations) increases the probability of a proposal being granted. Interestingly, in more recent years (as shown in model 4), quality rather than quantity appears to predict grant success, as it is the average number of citations to pre-period publications rather than their number that explains funding success.

The comparison of the distribution of the propensity score and the evaluation score before and after matching shows that the nearest neighbor matching procedure was successful in balancing the sample in terms of the grant likelihood and—importantly—also the average scores (see Fig. S.1 in the supplementary material). This ensures that we are comparing researchers with funding to researchers without funding that have similarly good ideas (the scores are the same, on average) and are also otherwise comparable in their characteristics predicting a positive application outcome. The balancing of the propensity scores and the evaluation scores in both groups (grant winners and unsuccessful applicants) after each matching are shown in Tables 5 and 6. Note that we draw matches for each grant-winner from the control group with replacement and that hence some observations from researchers in the control group are used several times as ‘twins’. Table S.5 in the supplementary material shows that across the different matched samples <10% of control researcher–year observations are used only once and about 60% up to 25 times. About 10% of control group researchers are used very frequently, i.e. more than 160 times.
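The idea of drawing ‘twins’ with replacement, combined with the exact matching on field and year mentioned in the notes, can be sketched as follows. This is a deliberately simplified stand-in: it uses the absolute difference in propensity scores as the distance, whereas the paper refers to a distance measure abbreviated as MD, and all names and values are hypothetical.

```python
from collections import Counter


def match_with_replacement(treated, controls):
    """Nearest-neighbor matching on the propensity score, with replacement.

    treated / controls: lists of dicts with keys 'id', 'field', 'year', 'pscore'
    (hypothetical layout). Candidates must share field and year with the treated
    unit; treated units without any eligible control are skipped.
    """
    pairs = []
    for t in treated:
        eligible = [c for c in controls
                    if c["field"] == t["field"] and c["year"] == t["year"]]
        if not eligible:
            continue
        # Controls stay in the pool, so the same control can be picked repeatedly.
        best = min(eligible, key=lambda c: abs(c["pscore"] - t["pscore"]))
        pairs.append((t["id"], best["id"]))
    return pairs


# Hypothetical researcher-year observations (not study data).
treated = [
    {"id": "T1", "field": "LS", "year": 2012, "pscore": 0.70},
    {"id": "T2", "field": "LS", "year": 2012, "pscore": 0.65},
    {"id": "T3", "field": "SSH", "year": 2012, "pscore": 0.40},
]
controls = [
    {"id": "C1", "field": "LS", "year": 2012, "pscore": 0.68},
    {"id": "C2", "field": "LS", "year": 2012, "pscore": 0.30},
    {"id": "C3", "field": "SSH", "year": 2012, "pscore": 0.45},
]

pairs = match_with_replacement(treated, controls)
usage = Counter(control_id for _, control_id in pairs)
print(pairs)
print(usage)
```

Counting how often each control is reused, as in `usage`, is the kind of tabulation reported in Table S.5; repeated use is also why ordinary standard errors need a correction after matching.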

Tables 5 and 6 show the estimated treatment effects after matching, i.e. the test for the magnitude and significance of mean differences across groups. Note that the number of matched pairs differs depending on the sample used and that log values of output variables were used to account for the impact of skewness of the raw variable distribution in the mean comparison test. The magnitude of the estimated effects is comparable to those of the parametric estimation models. Researchers with a successful grant publish on average 1.2 articles (exp[0.188]) and about one additional preprint (exp[0.053]) more in the following year, and their articles receive 1.7 citations (exp[0.532]) more than articles from the control group. In terms of altmetrics we also see a significant difference in means, which is 1.15 (exp[0.138]) points higher in the group of grant receivers. Also, in terms of the FCR and the RCR, there are significant effects on the treatment group. The probability to be among the ‘highly cited researchers’ (as measured by an FCR > 3) is 5.5 (αTT = 0.055) percentage points higher in the group of funded researchers. This means publications in t + 1 are cited at least three times as much as the average in the field.
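The back-transformation of the log-scale mean differences quoted above can be reproduced with a few lines. The four coefficients are those given in the text; the dictionary keys are labels chosen for this sketch. Note that exponentiating a difference in logs yields, strictly speaking, a multiplicative factor, and how the paper maps these factors to additional articles is not spelled out in this section.

```python
import math

# Mean differences on the log scale, as reported in the text.
log_differences = {
    "articles": 0.188,
    "preprints": 0.053,
    "citations": 0.532,
    "altmetric": 0.138,
}

# Exponentiate to return to the original scale.
ratios = {name: round(math.exp(diff), 2) for name, diff in log_differences.items()}
print(ratios)
```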

Persistency of treatment effects

In addition to the effect in the year after funding (t + 1), we are interested in the persistency of the effect in the following years up to (t + 3). It is likely that any output effects occur with a considerable time lag after funding is received. The start-up of the research project, including the training of new researchers and the set-up of equipment, may take some time before the actual research starts. In principle, we could of course expect the effect to also last longer than three to four years. However, after 4 years, the treatment effect of one project grant may become confounded by one (or several) follow-up grants. Tables 5 and 6 show the results for the different outcome variables also for different time horizons.

The results suggest that the funding has a persistent output effect amounting to about one additional article in each of the 3 years following the year of funding. The effect on preprints is already significant in the first year and is also sustained in later years, suggesting that research results from the project are probably circulated via this channel. In contrast to these results, we find for altmetrics that they are significantly higher early on, but not in the medium run. When looking at citation-based measures as indicators for impact and relevance, we see that the number of citations stays significantly higher in the medium run, but the effect size declines somewhat, indicating that researchers publish the most important results earlier after funding. This is also reflected in the results for the average number of citations and the probability to be highly cited. For the FCR, the effect is less persistent as the difference between groups fades after the first year. For the RCR the difference in means is strongest in the first year after the grant and only significant at the 10% level in t + 3.

Impact heterogeneity over the academic life-cycle and research fields

For most outcomes, we find a significant and persistent difference between funded and unfunded researchers, while controlling for other drivers of research outcomes. As shown in earlier studies (Arora and Gambardella, 2005; Jacob and Lefgren, 2011), a grant’s impact may depend on the career stage of a researcher. As a proxy for career stage, we use the biological age of the researchers. Additionally, there might be heterogeneity in the funding effect depending on the research fields. We perform interaction tests (i) between the age and the funding and (ii) between the research field and the funding. More specifically, we employ a categorical variable for age and allow for an interaction term with the funding variable in the mixed models presented in the section “Mixed effects model—longitudinal regression models”. The same procedure is repeated with research field. The interaction tests indeed suggest that there is evidence for a difference in the effect of funding on the article and preprint count depending on the age group (p-value < 0.001 for both outcomes) and the research field (p-value < 0.001 for articles and p-value of 0.0045 for preprints). When we test for those same interaction effects in the continuous outcome models, the results suggest that there is a difference in the funding effect on the average number of citations per article depending on the age group (p-value < 0.001) and the research field (p-value = 0.0242). For altmetrics and the citation ratios, we see no evidence for major differences across age groups (p-value of 0.328 for the altmetric, 0.802 for the RCR and 0.873 for the FCR) nor research fields (p-value of 0.2296 for altmetric and p-value of 0.5124 for FCR; see Footnote 19).

To better understand those differences in funding effect, we refer to Fig. 2 for the article counts and Fig. 3 for the average number of citations per article. Those figures show the predicted article or citation count depending on the funding group (in t−1) and the age group or the research field. For all those subgroups, SNSF funding (as PI) in t−1 has a positive effect on the outcome. However, the size of this effect differs substantially. The youngest age group (<45) seems to benefit considerably from the funding in terms of the predicted difference between treatment and control researchers in article count, but also in citations per article (the confidence intervals of funded as PI and no funding do not overlap). More senior funded researchers (45–54 and 55–65 years of age) perform similarly well compared to researchers with the same characteristics but no funding. It is noteworthy that for older researchers (65+) the difference between groups is again higher, indicating that funding helps to keep productivity up. We obtain very similar results based on post-estimations with interaction effects in the matched samples from the propensity score matching approach (see Fig. S.7 in the supplementary material).

To predict the article count, the baseline confounding variables were fixed to Year 2015–19, Male, Evaluation Score AB-A, University and LS in the age interaction model, and to age below 45 in the field interaction model. We see a significant positive percentage change of 18% for the youngest age group among PIs (<45) and 115% for the most senior researchers (>65) compared to no SNSF funding. Additionally, the effect of funding is largest for STEM researchers (23% more articles as PI compared to unfunded researchers). The effect in LS and SSH is less prominent, +15% and +12%, respectively.

For the predictions, the baseline confounding variables were fixed to Year 2010–14, Male, Evaluation Score AB-A, University and LS in the age interaction model, and to age below 45 in the field interaction model. A significant positive percentage change of 10% for the youngest age group among PIs (<45) compared to no SNSF funding can be observed for the average number of citations. The remaining changes in citation number are not significant. The effect of funding is largest for SSH researchers (15% more citations per article as PI compared to unfunded researchers). The effect in LS (+6%) and STEM (+8%) is less prominent. Note, however, that the intervals all overlap.

For all research areas, SNSF funding has a positive effect on article count and number of citations. STEM researchers, however, benefit most with a percentage change of 23% more articles as funded PI compared to no funding; funded (PI) researchers from the LS publish 15% more articles and the SSH researchers 12%. This could reflect that in STEM and LS the extent to which research can be successfully conducted is highly funding-dependent, while this is not necessarily the case in the SSH. Yet regarding the number of citations per article, the SSH researchers benefit most (14% more citations for SSH, 8% for STEM and 6% for LS). This suggests that funding may support the quality of research and hence its impact more in the SSH field. Thus, it should be noted that even though SSH researchers publish and are cited less in absolute numbers, we still see a substantial positive effect of SNSF funding on the outcomes. The respective figures for the remaining outcomes can be found in the supplementary material: Fig. S.5 for the altmetric score, Fig. S.4 for the preprint count and Fig. S.6 for the FCR.

Conclusions

Understanding the role played by competitive research funding is crucial for designing research funding policies that best foster knowledge generation and diffusion. By investigating the impact of project funding on scientific output, its relevance and accessibility, this study contributes to research on the effects of research funding at the level of the individual researcher.

Using detailed information on the population of all project funding applicants at the SNSF during the 2005–2019 period, including personal characteristics and the evaluation scores that their submitted projects received from peers, we estimate the impact of receiving project funding on publication outcomes and their relevance. The strengths of this study lie in the detailed information on both researchers and grant proposals. First, the sample consists of both successful and unsuccessful applicants. Therefore, researchers who also had a research idea to submit are part of the control group. Second, information on the proposal evaluation scores allows us to compare researchers who have submitted project ideas of comparable quality, on average. The estimated treatment effects therefore take into account that all applicants may benefit from the competition for funding through participation effects (Ayoubi et al., 2019).

Besides these methodological aspects, a key contribution of this study is that—in addition to articles in scientific journals—it is the first to include preprints. Preprints are an increasingly important means of disseminating research results early and without access restrictions (Berg et al., 2016; Serghiou and Ioannidis, 2018). Besides this, we investigate relevance and impact in terms of absolute and relative citation measures. In the analysis of citations that published research receives, it is important to account for field-specific citation patterns. We do so by including the RCR and the FCR as measures for relative research impact in a researcher’s own field of study as additional outcome measures. Finally, this is the first study to investigate the link between funding and researchers’ altmetrics scores which mirror the attention paid to research outcomes in the wider public (Bornmann, 2014; Lăzăroiu, 2017; Warren et al., 2017).

The results show a similar pattern across all estimation methods indicating an effect size of about one additional article in each of the 3 years following the funding. In addition, we find a similarly sized effect on the number of preprints. The comparison across methods suggests that if accounting for important observable researcher characteristics (e.g. age, field, gender and experience) as well as proposal quality (as reflected in evaluation scores) parametric regression results and non-parametric models lead to similar conclusions with regard to publication outputs. Importantly, a significant effect on the number of citations to articles could be observed indicating that funding does not merely translate into more, but only marginally relevant research. Funded research also appears to reach the general public more than other research as indicated by higher average altmetrics in the group of grant-winners. In terms of the RCR and FCR the results indicate that there might be an effect on the funded researchers’ overall visibility in the research community. However, the effects on the RCR are not robust to the estimation method used.

The funding program analyzed in this study is open to all researchers in Switzerland affiliated with institutions eligible to receive SNSF funding. This allows us to study treatment effect heterogeneity over researchers’ life cycle and research field. The results suggest that funding is particularly important at earlier career stages, where PF facilitates research that would not have been pursued without funding. With regard to treatment effect heterogeneity across fields, we find the highest effect of funding on the article count for STEM researchers and the highest funding effect on citations in SSH.

While the insights on a positive effect of funding on the number of subsequent scientific articles are in line with previous studies, the effects that we document here are larger than previous results. The reason may be that the SNSF is the main source of research funding in Switzerland, so that we can identify researchers for the control group who really had no other project grant in the period for which they are considered a control. We also observe co-PIs, who in other studies may be assigned to the control group due to a focus on PIs or a lack of information. Both may lead to an under-estimation of funding effects in previous studies. Moreover, by counting all publications of these researchers, we take into account not only articles directly related to the project, but also learning spillovers and synergies beyond the project that improve a researcher’s overall research performance.

Despite all efforts, this study is not without limitations. First, we do not observe industry funding for research projects, which may be important in the engineering sciences (Hottenrott and Lawson, 2017; Hottenrott and Thorwarth, 2011). Moreover, the fact that researchers receive grants repeatedly and may switch between treatment and control group over time makes a simple difference-in-difference analysis difficult. These factors further complicate the assessment of the long-term impact of the research outcomes that we observe. The methods presented here aim to account for the non-randomness of the funding award and the underlying data structure. While we find that the main results are robust to the estimation method used, the reader should keep in mind that time-varying unobserved factors that affect an individual’s publication outcomes, such as family or health status, involvement in professional services or administrative roles and duties (Fudickar et al., 2016), may not be sufficiently accounted for. Moreover, we do not have detailed information on the involved research teams and individual responsibilities within the projects. Therefore, we do not investigate the role of team characteristics for any outcome effects. In such an analysis, it would be desirable to study whether and how sole-PI and multiple-PI projects differ and which role different PI profiles play for project success. A more detailed analysis of teams would also be interesting in order to differentiate between group and individual effort. Third, we used preprints and altmetrics as output measures, which is novel compared to previous research on funding effects. Since we cannot compare our results to previous ones, we encourage future research on the effects of funding on early publishing and science communication more directly.
It should be kept in mind that altmetrics may measure popularity in addition to efforts at dissemination as well as the extent to which authors are embedded in a network, but not the quality of individual research outcomes. Probably more than publications in peer-reviewed journals, preprints and altmetrics may be gamed—for example by repeated sharing of own articles or by ‘Salami slicing’ research outcomes into several preprints. Finally, it should be noted that we did not investigate several aspects that might be important in impact evaluation in this study. This list includes the role of the funding amount, the degree of novelty of the produced research, as well as treatment effect heterogeneity in terms of individual characteristics other than age.

Data availability

An anonymized and aggregated data set can be found on Zenodo (https://doi.org/10.5281/zenodo.5011201). In order to anonymize the data we only provide applicants’ age as categorical variable.

Notes

The importance of competitive research funding has increased substantially over the past three decades. The basic idea of promoting such science policy goes back to New Public Management reforms which aimed to increase the returns to public science funding through the selective provision of more funding to the most able researchers, groups and universities (winners in funding competitions), and to create performance incentives at all levels of the university system (Gläser and Serrano-Velarde, 2018; Krücken and Meier, 2006).

The SNSF is Switzerland’s main research funding agency. The SNSF is mandated by the Swiss confederation to allocate research funding to eligible researchers at universities, (technical) colleges and research organizations.

Excellent publications in this study were for instance papers in the upper quarter of journals included in the Science Citation Index (SCI).

Novelty is measured by the extent to which a published paper makes first time ever combinations of referenced journals while taking into account the difficulty of making such combinations.

An alternative approach is to employ pre-sample information of the researcher as a proxy for unobservable characteristics, such as a researcher’s ability or writing talent which impact research output in the (later) sample period. We conducted such linear feedback models (LFM) as robustness tests and present them in Supplement S.2.1.

We use the lmer package in R and a negative binomial family.

In addition to the closeness on MD, we use elements of exact matching by requiring that selected control researchers belong to exactly the same subject field and are observed in the same year as the researchers in the treatment group. This allows us to account for different publication patterns across disciplines and also for time trends in funding likelihood and in the outcome variables.

As we perform sampling with replacement to estimate the counterfactual situation, an ordinary t-statistic on mean differences after matching is biased, because it does not take the appearance of repeated observations into account. Therefore, we have to correct the standard errors in order to draw conclusions on statistical inference, following Lechner (2001).

The Sinergia scheme is closely linked to PF, so that we will not differentiate between them in the following.

If granted, a co-applicant is entitled to parts of the funding.

If Dimensions found more than one ID for a certain name, we used further information on the researcher available to the SNSF to narrow the ID options down. This supplementary information was, if present, the ORCID, the current and previous research institution(s), country and birth year. Only researchers with a unique ID could be used in the following. See Table S2 in the supplementary material for a comparison of the researchers that were found and not found.

Some characteristics of the researchers without a unique ID can be found in Table S2 in the supplementary material.

Since only a few researchers were identified as holding major international grants but no SNSF funding, we do not differentiate between these groups in the following. Note that the data retrieved from the ERC Funded Projects Database included only grants acquired since 2007.

Unfortunately, the altmetric cannot be retrieved from Dimensions as a time-dependent variable, but only as its value at the time of data retrieval (September 2020). The altmetric therefore reflects the cumulative importance that an article published at t attained up to September 2020.

Note that we also tested the robustness of this result by focusing on PF as the treatment and adding the researchers with a funded Sinergia project to the control group, while adjusting for a Sinergia dummy variable. The sizes of the effects of funding as PI and as co-PI, and their confidence intervals, were comparable.

Severin et al. (2020), for example, discuss gender biases in reviewer scores that lead to a lower grant likelihood for female researchers.

Note that we did not test the interaction for the RCR outcome, as this analysis was done only for the LS field.

References

Adams JD, Griliches Z (1998) Research productivity in a system of universities. Ann Écon Stat 49–50:127–162

ANZSRC (2019) Outcomes paper: Australian and New Zealand Standard Research Classification Review 2019. Ministry of Business, Innovation & Employment.

Arora A, David P, Gambardella A (1998) Reputation and competence in publicly funded science: estimating the effects on research group productivity. Ann Écon Stat 49–50:163–198

Arora A, Gambardella A (2005) The impact of NSF support for basic research in economics. Ann Écon Stat 79–80:91–117

Ayoubi C, Pezzoni M, Visentin F (2019) The important thing is not to win, it is to take part: what if scientists benefit from participating in research grant competitions? Res Policy 48:84–97

Battistin E, Rettore E (2008) Ineligibles and eligible non-participants as a double comparison group in regression-discontinuity designs. J Econom 142:715–730

Beaudry C, Allaoui S (2012) Impact of public and private research funding on scientific production: the case of nanotechnology. Res Policy 41:1589–1606

Benavente JM, Crespi G, Figal Garone L, Maffioli A (2012) The impact of national research funds: a regression discontinuity approach to the Chilean FONDECYT. Res Policy 41:1461–1475

Berg JM, Bhalla N, Bourne PE, Chalfie M, Drubin DG, Fraser JS, Greider CW, Hendricks M, Jones C, Kiley R, King S, Kirschner MW, Krumholz HM, Lehmann R, Leptin M, Pulverer B, Rosenzweig B, Spiro JE, Stebbins M, Strasser C, Swaminathan S, Turner P, Vale RD, VijayRaghavan K, Wolberger C (2016) Preprints for the life sciences. Science 352:899–901

Blundell R, Griffith R, Windmeijer F (1995) Dynamics and correlated responses in longitudinal count data models. In: Seeber GUH, Francis BJ, Hatzinger R, Steckel-Berger G (eds) Statistical modelling. Springer New York, New York, pp. 35–42.

Bornmann L (2014) Do altmetrics point to the broader impact of research? An overview of benefits and disadvantages of altmetrics. J Informetr 8:895–903

Carayol N, Matt M (2004) Does research organization influence academic production? Laboratory level evidence from a large European university. Res Policy 33:1081–1102

de la Cuesta B, Imai K (2016) Misunderstandings about the regression discontinuity design in the study of close elections. Annu Rev Political Sci 19:375–396

Digital Science (2018) Dimensions [software] available from https://app.dimensions.ai. Accessed Sept 2020, under licence agreement.

Fang F, Casadevall A (2016) Research funding: the case for a modified lottery. mBio 7(2):e00422-16.

Fleming L, Greene H, Li G, Marx M, Yao D (2019) Government-funded research increasingly fuels innovation. Science 364:1139–1141

Franzoni C, Scellato G, Stephan P (2011) Changing incentives to publish. Science 333:702–703

Froumin I, Lisyutkin M (2015) Excellence-driven policies and initiatives in the context of Bologna Process: rationale, design, implementation and outcomes. In: Curaj A, Matei L, Pricopie R, Salmi J, Scott P (eds) The European higher education area. Springer.

Fudickar R, Hottenrott H, Lawson C (2016) What’s the price of academic consulting? effects of public and private sector consulting on academic research. Ind Corp Change 27:699–722

Gerfin M, Lechner M (2002) A microeconometric evaluation of the active labour market policy in Switzerland. Econ J 112:854–893

Gläser J, Serrano-Velarde K (2018) Changing funding arrangements and the production of scientific knowledge: introduction to the special issue. Minerva 56:1–10

Graves N, Barnett AG, Clarke P (2011) Funding grant proposals for scientific research: retrospective analysis of scores by members of grant review panel. BMJ 343:d4797.

Hausman N (2021) University innovation and local economic growth. Rev Econ Stat (forthcoming). https://doi.org/10.1162/rest_a_01027.

Hottenrott H, Lawson C (2017) Fishing for complementarities: research grants and research productivity. Int J Ind Organ 51:1–38

Hottenrott H, Thorwarth S (2011) Industry funding of university research and scientific productivity. Kyklos 64:534–555

Hutchins BI, Yuan X, Anderson JM, Santangelo GM (2016) Relative citation ratio (RCR): a new metric that uses citation rates to measure influence at the article level. PLoS Biol 14:1–25

Jacob BA, Lefgren L (2011) The impact of research grant funding on scientific productivity. J Public Econ 95:1168–1177

Jaffe AB (1989) Real effects of academic research. Am Econ Rev 79:957–970

Jaffe AB (2002) Building programme evaluation into the design of public research support programmes. Oxf Rev Econ Policy 18:22–34

Jonkers K, Zacharewicz T (2016) Research performance based funding systems: a comparative assessment. Technical Report JRC101043, Publications Office of the European Union.

Konkiel S (2016) Altmetrics: diversifying the understanding of influential scholarship. Palgrave Commun 2:16057

Krücken G, Meier F (2006) Turning the university into an organizational actor. In: Drori GS, Meyer JW, Hwang H (eds) Globalization and organization: world society and organizational change, vol 18. pp. 241–257.

Lechner M (2001) Identification and estimation of causal effects of multiple treatments under the conditional independence assumption. In: Lechner M, Pfeiffer F (eds) Econometric evaluation of labour market policies. Physica-Verlag HD, Heidelberg, pp. 43–58.

Lăzăroiu G (2017) What do altmetrics measure? Maybe the broader impact of research on society. Educ Philos Theory 49:309–311

Mali F, Pustovrh T, Platinovšek R, Kronegger L, Ferligoj A (2017) The effects of funding and co-authorship on research performance in a small scientific community. Sci Public Policy 44:486–496

Neufeld J, Huber N, Wegner A (2013) Peer review-based selection decisions in individual research funding, applicants’ publication strategies and performance: the case of the ERC starting grants. Res Eval 22:237–247

Oancea A (2016) Research governance and the future(s) of research assessment. Palgrave Commun 5:27

Payne A (2002) Do US congressional earmarks increase research output at universities? Sci Public Policy 29:314–330

Payne A, Siow A (2003) Does federal research funding increase university research output? Adv Econ Anal Policy 3:1018–1018

Poege F, Harhoff D, Gaessler F, Baruffaldi S (2019) Science quality and the value of inventions. Sci Adv 5(12):eaay7323.

Reale E (2017) Analysis of national public research funding (PREF)—final report, Technical Report JRC107599, Publications Office of the European Union.

Rubin DB (1977) Assignment to treatment group on the basis of a covariate. J Educ Stat 2:1–26

Schmidt J (2008) Das Hochschulsystem der Schweiz: Aufbau, Steuerung und Finanzierung der schweizerischen Hochschulen. Beitr Hochschulforsch 30:114–147

Serghiou S, Ioannidis JPA (2018) Altmetric scores, citations, and publication of studies posted as preprints. JAMA 319:402–404

Severin A, Martins J, Heyard R, Delavy F, Jorstad A, Egger M (2020) Gender and other potential biases in peer review: cross-sectional analysis of 38 250 external peer review reports. BMJ Open 10:e035058

Silberzahn R, Uhlmann EL, Martin DP, Anselmi P, Aust F, Awtrey E, Bahník Š, Bai F, Bannard C, Bonnier E, Carlsson R, Cheung F, Christensen G, Clay R, Craig MA, Rosa AD, Dam L, Evans MH, Cervantes IF, Fong N, Gamez-Djokic M, Glenz A, Gordon-McKeon S, Heaton TJ, Hederos K, Heene M, Mohr AJH, Högden F, Hui K, Johannesson M, Kalodimos J, Kaszubowski E, Kennedy DM, Lei R, Lindsay TA, Liverani S, Madan CR, Molden D, Molleman E, Morey RD, Mulder LB, Nijstad BR, Pope NG, Pope B, Prenoveau JM, Rink F, Robusto E, Roderique H, Sandberg A, Schlüter E, Schönbrodt FD, Sherman MF, Sommer SA, Sotak K, Spain S, Spörlein C, Stafford T, Stefanutti L, Tauber S, Ullrich J, Vianello M, Wagenmakers E-J, Witkowiak M, Yoon S, Nosek BA (2018) Many analysts, one data set: making transparent how variations in analytic choices affect results. Adv Methods Pract Psychol Sci 1:337–356

Stephan PE (2012) How economics shapes science. Harvard University Press, Cambridge.

Surkis A, Spore S (2018) The relative citation ratio: what is it and why should medical librarians care? J Med Libr Assoc 106:508–513

Tahmooresnejad L, Beaudry C (2019) Citation impact of public and private funding on nanotechnology-related publications. Int J Technol Manag 79:21–59

Wahls WP (2018) High cost of bias: diminishing marginal returns on NIH grant funding to institutions. Preprint at https://doi.org/10.1101/367847.

Wang J, Lee Y-N, Walsh JP (2018) Funding model and creativity in science: competitive versus block funding and status contingency effects. Res Policy 47:1070–1083

Warren HR, Raison N, Dasgupta P (2017) The rise of altmetrics. JAMA 317:131–132

Acknowledgements

We are grateful to Tobias Phillip for helpful comments on the study design and on previous versions of this manuscript and to Matthias Egger for an additional careful review of the manuscript prior to submission. This work was supported by the SNSF (internal funds).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heyard, R., Hottenrott, H. The value of research funding for knowledge creation and dissemination: A study of SNSF Research Grants. Humanit Soc Sci Commun 8, 217 (2021). https://doi.org/10.1057/s41599-021-00891-x

DOI: https://doi.org/10.1057/s41599-021-00891-x
