Abstract

This study reveals a shift in gun-related narratives created by two ideological groups during three high-profile mass shootings in the United States between 2016 and 2018. It utilizes large-scale, longitudinal social media traces from over 155,000 ideology-identifiable Twitter users. The study design leverages both a linguistic dictionary approach and thematic coding inspired by the Narrative Policy Framework, which allows for statistical and qualitative comparison. We found several distinctive narrative characteristics between the two ideological groups in response to the shooting events: the groups differed in how they incorporated linguistic and narrative features in their tweets in terms of policy stance, attribution (what one believed to be the problem, the cause or blame, and the solution), the rhetoric employed, and emotion throughout the incidents. The findings suggest how shooting events may penetrate public discursive processes that had previously been dominated by existing ideological references and may facilitate discussions beyond ideological identities. Overall, in the wake of mass shooting events, the tweets adhering to the majority policy stance within a camp declined, whereas the proportion of mixed or flipped stance tweets increased. Meanwhile, more tweets were observed to express causal reasoning for a held policy stance, and a different pattern in the use of rhetoric schemes, such as the decline of provocative ridicule, emerged. The shifting patterns in users’ narratives coincide with the two groups’ distinctive emotional responses revealed in text. These findings offer insights into the opportunity to reconcile conflicts and the potential for creating civic technologies to improve the interpretability of linguistic and narrative signals and to support diverse narratives and framing.

Introduction

Gun policy has been one of the most perennial, contentious issues in the United States. The shooting at an Orlando, Florida, nightclub on June 12, 2016, which left 49 dead and many wounded, was the deadliest mass shooting in modern US history until October 1, 2017, when a shooting at the Route 91 Harvest music festival in Las Vegas killed at least 58 and injured 527. Of the 20 deadliest mass shootings, more than half have occurred in the past 10 years. With every mass shooting came shock and tears, and each restarted a divisive debate about how guns should be regulated, what is driving the tragedy, and what can be done to prevent it. Despite the many statistics published, the two opposing sides (people who favor and people who oppose gun control) seem to believe in distinct answers. The differing opinions on guns have plagued society for more than four decades, resulting in very little legislative success.

Have people’s beliefs about guns changed across multiple tragic shooting events in recent years? This study utilizes social media as a lens to answer this question by examining how people constructed their narratives about gun issues when talking on Twitter. Social media have been considered places that support everyday socio-political talk (Jackson et al., 2013; Graham et al., 2015; Highfield, 2017). Though researchers have argued that platforms like Twitter can hardly be used equally effectively by every citizen (Rasmussen, 2014), no outlet for opinion has been equally accessible, and social media could be the most accessible outlet that has ever existed. Social media could be useful to support a “talkative electorate”, where people become aware of other opinions and develop or clarify their positions about an issue, offering citizens opportunities to achieve mutual understanding (Graham et al., 2015; Jackson et al., 2013; Gamson and Gamson, 1992). Nevertheless, most literature has focused on how social media communications mirror or predict offline opinion polls or the outcome of related social events (Tumasjan et al., 2010; Benton et al., 2016; De Choudhury et al., 2016); less explored is the heterogeneity in the ways people express their opinions, and how the various talks that translate individuals’ underlying beliefs evolve (Anstead and O’Loughlin, 2015; Braman and Kahan, 2006). Also, an increasing amount of work has explored the role of social media and other information communication technologies (ICTs) in collective actions, including offering mobilizing structures (Starbird and Palen, 2012; Tufekci and Wilson, 2012), providing opportunity structures (McAdam et al., 1996; Flores-Saviaga et al., 2018), and facilitating framing processes (Snow et al., 1986, 2014; Porter and Hellsten, 2014; Dimond et al., 2013; Stewart et al., 2017).
Much attention has been paid to the frames or discourses driven by elites, social movement organizations, and media (Merry, 2016; Starbird, 2017; Guggenheim et al., 2015), while less is known about how non-opinion leaders discuss and frame political ideas. We argue that understanding the discursive processes—how average people develop alternatives in their everyday social media talk—is particularly important while seeking opportunities to bridge contested opinion clusters.

The present work

In this study, we utilize a large panel of more than 155,000 ideology-identifiable users and their complete timelines of tweets over 3 years. Twitter users’ expressive support for presidential candidates was used as a proxy for either a liberal-leaning or conservative-leaning ideological preference, referred to as Lib and Con, respectively. By examining these users’ everyday talk surrounding gun issues and three major mass shooting events that occurred in the past 3 years (the 2016 Orlando nightclub shooting, the 2017 Las Vegas shooting, and the 2018 Parkland High School shooting; Table 1), we examine how Twitter users’ narratives about gun issues changed at the group level. More specifically, we seek to explore how the narratives, carried in a public space (Twitter), reflect “what they believe to be the problem, the cause or blame, and the solution”, a stock of ideas referred to as causal attribution.

By comparing and contrasting how users’ narratives reflecting such attribution differ across ideological camps and change through events, we seek opportunities that can potentially reconcile the conflicts. However, retrieving users’ discourse to make a meaningful comparison is nontrivial. To do so, we employ a mixed-methods approach combining quantitative and qualitative analyses to characterize both the linguistic signals and the narrative structures, from which we derive novel analyses of the variation of narratives about gun-related issues.

Background

Gun debate: rights vs. control

Americans have the highest per capita gun ownership rate in the world. In 2018, the Small Arms Survey reported that U.S. civilians alone account for 393 million (roughly 46%) of the worldwide total of civilian-held firearms, which is more than those held by civilians in the other top 25 countries combined (Small Arms Survey, 2018). Recent surveys showed that about 30% of US adults report they personally own a gun, and over 43% report living in a gun household (Saad, 2019; Gramlich and Schaeffer, 2019). Whether more guns make society less safe or more safe has been at the center of debates for decades in the United States (Braman and Kahan, 2006).

Supporters of gun regulation believe that the availability of guns diminishes public safety as it facilitates violent crimes. Opponents, rather, often believe that carrying a gun is a constitutional right to self-defense, which would enhance public safety (Haider-Markel and Joslyn, 2001; Braman and Kahan, 2006). Prior work argued that most individuals’ positions on gun policy are hardly moved by justifications presented by the opposing side, but are inextricably shaped by their beliefs about guns and safety rooted in distinct cultural values (Braman and Kahan, 2006). The debate has been repeatedly intensified by high-profile mass shootings. The broader literature on gun issues has examined various aspects of responses following mass shootings, ranging from the impact on affected communities and public opinion (Newman and Hartman, 2017), to the framing of media (Guggenheim et al., 2015), to the tactics and discourse of movement organizations or interest groups (Smith-Walter et al., 2016; Merry, 2016). Although recent surveys have shown growing support for more restrictive gun policies (Poll, 2019; Inc, 2017; Sanger-Katz, 2018), the support for a legislative change seemed to be softer (Sanger-Katz, 2018) and more divided along partisan lines (Inc, 2017). High-profile shooting events seemingly played a role in changing public sentiment, but did they shake people’s beliefs on gun issues? How would a change manifest itself in different ideological camps over time? Our motivation is to unpack the change by probing the narrative variations from diverse individuals who were likely to have different ideological preferences.

Collective action and framing

Related to our research, much work has been done in the studies of collective action and particularly social movements (organized groups that act consciously to promote or resist change through collective action). Social media and other ICTs have been demonstrated to play a significant role in social movements such as “Arab Spring”, “Occupy”, and “#BlackLivesMatter” (De Choudhury et al., 2016; Chung, Lin, et al., 2018; Borge-Holthoefer et al., 2015; Starbird and Palen, 2012; Tufekci and Wilson, 2012; Stewart et al., 2017). A growing number of empirical analyses have made connections between social media and movement theories, including mobilizing structures, opportunity structures, and framing processes. For example, in terms of mobilizing structures, social media facilitated street protests by providing wide-reaching communication channels (De Choudhury et al., 2016; Starbird and Palen, 2012; Tufekci and Wilson, 2012). When considering opportunity structures, ICTs create opportunities for people to more easily connect and coordinate (McAdam et al., 1996; Shklovski et al., 2008; Starbird and Palen, 2011; Flores-Saviaga et al., 2018). With respect to framing processes, social media and other ICTs provide structures for sharing experiences surrounding a collective effort (Snow et al., 1986, 2014; Porter and Hellsten, 2014; Dimond et al., 2013). The concept of “frame” has been studied within social movements and in political discourse and communications more broadly. Goffman defined frames as “schemata of interpretation” that help people to make sense of the world: to interpret and organize individual experiences, render events or occurrences meaningful, and guide actions (McAdam et al., 1996).

To date, considerable empirical work has focused on identifying various types of frames and their resonance through strategic and diffusion processes (Benford and Snow, 2000), where new capacities have been afforded by social media and other ICTs as cases of mobilizing or opportunity structures (De Choudhury et al., 2016; Starbird and Palen, 2012; Tufekci and Wilson, 2012; Dimond et al., 2013). However, little is known about the discursive process, especially the variation of frames that emerge from, are employed in, and change through ordinary people’s discussions, which is in part due to the difficulty of retrieving discourse from individuals’ talk and conversations. One close attempt is Stewart et al.’s study of networked frame contests on Twitter (Stewart et al., 2017), where the highly cited tweets from different networked clusters were selected and interpreted qualitatively.

Another aspect that is also less explored is the discovery of opportunity structures reflecting resources and climates that movements can take advantage of (McAdam et al., 1996). Outside of social movements, for example in natural disasters and societal events, ICTs have been used to gauge particular sentiments and climates, as well as to garner resources (Gilbert and Karahalios, 2010; Shklovski et al., 2008; Starbird and Palen, 2012). However, seeking opportunity structures is especially challenging in a highly polarized environment where already populated frames seem to tap into existing cultural values. In the case of the gun debate, it is unclear whether mass shooting events represent a kind of opportunity structure, how exactly such an opportunity unfolds in social media talk, and how it may suggest a potential to reconcile the conflict.

Social media and public deliberation

Social media have become an important source for studying public opinion on social events and political issues including gun control, same-sex marriage, racial inequality, immigration, and abortion (Benton et al., 2016; Brooker et al., 2015; De Choudhury et al., 2016; Zhang and Counts, 2015; Chung, Wei, et al., 2016). While many of these studies relied on quantitative analyses of social media content, characterized by, for example, psycholinguistic and textual features, others suggested that social media enable studies beyond quantitative opinion polling by looking into the richer social conversation and political deliberation process (Anstead and O’Loughlin, 2015). To study discourse on controversial topics, some prior works have also employed content or qualitative analyses (Sharma et al., 2017; Starbird, 2017). Nevertheless, none has shown whether and how social media can be used to study users’ causal attributions on a contentious issue. We are particularly interested in the extent to which social media users’ narratives about gun-related issues vary, contingent on the event happening and cultural mindsets. In this work, we make the first attempt to examine users’ narratives reflecting causal attribution and how such narratives changed across gun violence events in terms of their choice of vocabulary and narrative structure.

Narrative analysis of policy discussion

Prior research on gun policy has focused largely on identifying the major gun issue framings/paradigms that have been adopted in campaigns/debates and the distinct emphases of opposing campaign organizations (Merry, 2018). However, less explored is how the gun narratives themselves are conceived. By gun narrative we refer to a gun-relevant narrated story that a person may conceive to communicate with others about their perceived realities pertaining to guns. Humans utilize narrative thinking (Polkinghorne, 1988) to configure stories that present their subjective understanding of how things happen; for example, after a shooting incident, a conceived narrative could reveal the narrator’s ideas regarding how the problem was defined, its cause, and the solutions. The same happenings may be narrated into distinct perceived realities because any derived meaning is influenced by the particular narrator’s held views and beliefs. Our key idea is to identify the causal-attributional mechanism in Twitter users’ narratives by how the narratives cast narrative components.

To do so, we adapted the Narrative Policy Framework (NPF) (Jones and McBeth, 2010), an established methodological framework (Shanahan et al., 2018) that draws upon the characteristics of narrative to analyze policy narratives by explicating the narrated components. In NPF, a narrative is a story that can be decomposed into components: for example, a temporal order of events unfolding along a plot with cast characters (primarily villain, victim, and hero), which reveals how the narrators interpret the cause and consequence of their own or others’ lived experiences and which culminates in a moral (e.g., a suggestion of right or wrong, or a conclusive message). More specifically, a villain may be cast in a narrative to indicate who causes the damage to the hurt victims, and a hero may be cast to indicate who may resolve the problem; the particular causal relationships among the characters and their actions are revealed throughout the course of events in the conceived plot. Finally, a moral, often referring to a policy solution in NPF, is presented to persuade or make a call to action. We adapted the framework and extended the elements that best help us focus on the causal inference in a narrative construction.

Rhetoric with emotion

Prior studies on policy persuasion have investigated how emotion can be employed in rhetoric to argue for or frame a particular policy stance (Nabi, 2003; McClurg, 1992). In gun debates, rhetoric with certain emotions, such as fear and national pride, has been argued to hinder constructive communication between people who hold distinct stances on gun issues or to contribute particularly to a certain stance (Holbert et al., 2004; McClurg, 1992). While the existing literature primarily emphasized elite arguments (made by those with power, e.g., debates in Congress, newspaper editorials, or opinion leaders), our study is instead interested in expanding the understanding of emotions conveyed in gun-related narratives among the public, and how the negative emotions experienced in the context of mass shooting events interact with people’s causal attribution.

This study focuses on the extent to which social media users’ narratives about gun-related issues vary, contingent on event happenings and cultural mindsets. In terms of narrative variations, we are interested in capturing quantifiable indicators, including linguistic signals and narrative structures. Our study is guided by the following analytical questions:

RQ1 How did the two ideological camps differ in their construction of causal narratives about guns? And how did the causal-attributional discussions on gun issues change in the context of a mass shooting event?

RQ2 How did the two ideological camps differ in (1) their stance on gun policy, (2) their calls to act upon a stance, and (3) the rhetoric schemes they employed to convey a stance, solution, or action, in the context of a mass shooting event? And how did the stances and rhetoric schemes shift after mass shooting incidents?

RQ3 How were the causal narratives associated with emotions? And how did the ideological camps differ in these associations?

Results

A large-scale shift in linguistic patterns relevant to the causal-attributional discussions on gun issues was observed after a mass shooting event

First, overall, we observe a large-scale shift in the linguistic patterns after a mass shooting event. The posted tweets were more likely to involve words relevant to gun issues and words that support causal-attributional discussions and emotional expressions. In particular, words in the LIWC cause category that signify causal connectives (e.g., “because”, “thus”) or that suggest cause/effect (e.g., “result”, “effect”, “reason”), as well as words in the three negative affective categories (sad, anxiety, and anger), were much more likely to appear in both groups’ gun-related tweets compared with their pre-event occurrences. We estimated the before–after rate ratios for the occurrence of the linguistic features (before: 2-week pre-event window; after: 2-week post-event window). Table 2 shows the ratios of the number of gun-relevant tweets (#Relevant) and the linguistic feature categories used for all three events. The #Relevant and linguistic features appear to have a large and significant rate change after a major shooting incident. For example, after an incident, the relevant tweets are 12.99 times more likely to appear than those before an incident (significantly >1, p < 0.001). While a rate increase in #Relevant is not surprising, we observe a change of greater than an order of magnitude in the linguistic features among the relevant tweets. Compared with the pre-event occurrence, the tweets with cause words are 16.42 times more likely to appear, and the negative affective features are 15–16.21 times more likely to appear. In Table 2, the ratio differences between event and control indicate that most linguistic features occur at least 10 times more than their base rates, and the increase is attributable to the shooting events.
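
The before–after rate ratio described above can be illustrated with a short sketch. It assumes Poisson-like counts and uses a Wald interval on the log scale, which is a common textbook choice and not necessarily the estimator used in this study. The function name `rate_ratio` and all counts are hypothetical.

```python
import math

def rate_ratio(before_count, after_count, before_days=14, after_days=14, z=1.96):
    """Before-after rate ratio with an approximate 95% Wald interval.

    Illustrative estimator only (assumes Poisson-like counts); the window
    lengths default to the 2-week windows mentioned in the text.
    """
    if before_count <= 0 or after_count <= 0:
        raise ValueError("both counts must be positive")
    ratio = (after_count / after_days) / (before_count / before_days)
    # Standard error of log(ratio) for two independent Poisson counts
    se_log = math.sqrt(1 / before_count + 1 / after_count)
    lower = math.exp(math.log(ratio) - z * se_log)
    upper = math.exp(math.log(ratio) + z * se_log)
    return ratio, lower, upper

# Hypothetical counts of gun-relevant tweets containing a "cause" word.
event = rate_ratio(before_count=200, after_count=3284)
control = rate_ratio(before_count=210, after_count=205)

# Ratio difference between an event period and a control period
difference = event[0] - control[0]
```

An interval whose lower bound stays above 1 corresponds to a ratio that is "significantly >1" in the sense used above; the control period gives a reference for how much the rate fluctuates without an event.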

Second, while the overall linguistic shifts in the two groups seem quite similar, words in some categories (e.g., cause and sad) were used more frequently and consistently in one group than in the other. Figure 1 shows the before–after rate ratios by group. Overall, the two groups exhibit a similar change pattern with respect to their own base rates. Lib has a slightly larger rate increase than Con in categories including cause and sad, while Con has a slightly larger rate increase in anxiety. The nuances of these categorical differences will be further discussed in later sections.

Third, significant linguistic shifts recurred across events, and the shifts can last for at least 2 weeks. The shift in linguistic feature occurrences relative to the base rates is larger in the later events. For example (see Fig. 2, top row), the before–after ratios of tweets including cause words are 36.38 (Con) and 43.17 (Lib) during Event-3, 16.58 (Con) and 15.88 (Lib) during Event-2, and 8.99 (Con) and 10.21 (Lib) during Event-1 (all estimated values are significantly >1 with p < 0.001). Figure 2 (bottom row) shows, for all events combined, that the most drastic change in linguistic feature occurrences came in the immediate 48 h after incidents, when the features were at least 30 times more likely to appear compared with their pre-event base rates. During the first week (day 2–7), the features still have a large rate increase, showing 12–29 times more than their base rates. In the second week, most of the features have around 10 times the rate increase over the base rates.
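
Because the post-event stages have different lengths (48 h, day 2–7, and the second week), comparing them to a 2-week base rate requires normalizing counts by window length. The sketch below assumes a simple per-day normalization, which may differ from the procedure actually used here; the function name, stage labels, and counts are hypothetical.

```python
def stage_rate_ratios(pre_count, pre_days, stage_counts, stage_days):
    """Per-day rate in each post-event stage divided by the pre-event per-day rate."""
    base_rate = pre_count / pre_days
    return {
        stage: (stage_counts[stage] / stage_days[stage]) / base_rate
        for stage in stage_counts
    }

# Stage lengths in days: first 48 h, day 2-7, and the second week.
STAGE_DAYS = {"first_48h": 2, "day_2_7": 5, "week_2": 7}

# Hypothetical tweet counts per stage and for the 14-day pre-event window.
counts = {"first_48h": 600, "day_2_7": 1000, "week_2": 700}
ratios = stage_rate_ratios(
    pre_count=140, pre_days=14, stage_counts=counts, stage_days=STAGE_DAYS
)
```

With these made-up counts the per-day ratios fall from stage to stage, which is the qualitative decay pattern described above.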

These large-scale linguistic pattern shifts reveal how a mass shooting event may penetrate social media users’ causal-attributional talks on gun issues. The following analyses examine the qualitative nature of the groups’ narrative changes in response to the shooting events.

Two ideological camps differ by their constructed causal narratives in response to events and across time

Through thematic coding, we identified three types of attributional elements in tweets (see details in the “Methodology” section). This section presents the three elements and the observed shifts of incorporating these elements in tweets.

(1) The three attributional elements emerging from analysis (see Table 3 for illustrated examples): (i) Problem Nature has to do with an attempt to dig into the nature of the problem, e.g., a tweet may discuss a root cause of a recurring problem. (ii) Blame Target is about accountability or responsibility; a tweet may talk about who/what should be accountable or responsible for the problem and solution. Usually, it is a human agent (a person/group) who should have done something to prevent harm. In some cases, a policy/thing is narrated as if it were an agent that should take responsibility/blame because it is ineffective or should have been removed or reformed. Blame Target is often a believed cause of the issue/event. (iii) Character refers to whether a tweet involves casting any of the three specific roles, Villain, Victim, and Hero. Real people are cast into these roles and understood as positive or negative contributors (Hero or Villain), or the immoral consequence (Victim) of the gun issue/event. We refer to the use of any of these elements in a narrative as attributional composition. These elements are not exclusive but distinguishable. Tweets may consist of one or more of such attributional elements. Take this tweet as an example, “Libs just don’t understand that criminals will always break the law with guns—hence being a criminal. Gun laws prevent citizens 2 defend”: “Gun laws” are considered to be the problem; “criminals” are villains, while “citizens” who cannot defend themselves with guns are the victims (the user/narrator is one of them) and “Libs” are the blame target who are responsible for such a consequence because they endorse gun laws.

Table 3 Attributional elements identified from a causal-attributional narrative.

(2) Tweets involving Problem Nature and Blame Target increased after shooting incidents, and this shift was more prominent in Con.

Figure 3a shows that, after the shooting incidents, there was an increase in the proportion of tweets involving Problem Nature in Con. The proportion was considerably higher in Lib during the pre-event time (37.8% in Lib versus 9.4% in Con, with a significant difference according to Fisher’s exact test, p = 0.01). However, a sharper increase was observed in Con (from 9.4% in pre-event time to 39.0% within the immediate 48 h after an incident; significant difference based on Fisher’s exact test, p = 0.003), and the rates stayed higher than in pre-event time throughout the post-event time. Similarly, tweets talking about Blame Target intensified from pre-event time to the immediate 48 h after the incidents. The increases were found in both Con (from 25.0% to 55.9%) and Lib (from 29.7% to 47.4%), but were statistically significant only in Con (p = 0.008 and p = 0.132 for Con and Lib, respectively, Fisher’s exact tests). With both camps combined, more tweets overall involved Blame Target in the post-event time (from 27.5% to 45.8%, p = 0.005).

(3) The shooting incidents also boosted the discussion about Villain and Victim, with a gradual shifting focus to Hero. Before a mass shooting incident, the two camps paid attention to different Characters. In pre-event time, tweets talking about Hero were more likely to appear in Lib than in Con (14.5% versus 1.4%, respectively, p = 0.008, Fisher’s exact test); tweets talking about Villain were more likely to appear in Con than in Lib (20.3% versus 5.8%, respectively, p = 0.002, Fisher’s exact test), and both talked about Victim at similar rates (11.6% versus 13.0%, for Con and Lib, respectively, p = 1.000, Fisher’s exact test). However, within the immediate 48 h after the shooting incidents, Villain and Victim became the center of attention in both camps, and more so in Lib. Although not immediately after the shooting incidents, during the later stages of post-event time there was an increase in the discussions about Hero (from 3.4% to 10.3% in Con and 5.3% to 20.0% in Lib, in the immediate 48 h and the second week, respectively) that concerned who should be the ones to take action and prevent similar tragedies.
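
The group and before/after comparisons above rely on Fisher’s exact test on 2×2 tables of coded tweets. As an illustration only, the following standard-library sketch computes a two-sided p-value by summing the probabilities of all tables (with the same margins) that are no more likely than the observed one. The counts are hypothetical, since this section reports proportions but not the underlying sample sizes, and the function name is ours.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo, hi = max(0, col1 - row2), min(row1, col1)
    p_obs = prob(a)
    # Sum over tables that are no more likely than the observed table;
    # the small tolerance guards against floating-point ties.
    total = sum(
        prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9)
    )
    return min(1.0, total)

# Hypothetical counts: tweets with / without Problem Nature in each camp.
#            with  without
# Lib         14     23
# Con          3     29
p_value = fisher_exact_two_sided(14, 23, 3, 29)
```

An exact test is a natural fit when cell counts are small, as is typical for hand-coded samples, because it does not depend on the large-sample approximation behind a chi-square test.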

Ideological camps differ by their majority stance about gun policy; however, tweets adhering to the majority position declined after an event, while the proportion of mixed or flipped stance tweets increased

The policy stance of each tweet was obtained by human coding (see details in the “Methodology” section). Overall, 89.6% of the human-coded tweets relevant to guns conveyed a stance (89.4% in Lib and 89.9% in Con). Unsurprisingly, the two groups’ majorities held opposite stances: opposing gun control prevailed in Con, and supporting it prevailed in Lib. This opposition in majority stance stayed consistent across the events over the years. However, overall, tweets with a stance in favor of gun control increased after shooting events, and the trend continued over the years. We highlight three observations:

(1) Shift in stance happened in the wake of a shooting event: In pre-event time, the proportion of tweets opposing gun control in Con and the proportion supporting gun control in Lib were 96.9% and 86.5%, respectively; in post-event time, the proportions dropped to 69.1% and 84.1%, respectively. Noticeably, the drop in Con is 4.3 times that in Lib. In contrast, the proportions of minority stances in both Con and Lib rose. In Con, the proportion supporting gun control increased by 17.3% (from 0.0% to 17.0%), and in Lib, the proportion against control increased by 2.6% (from 2.7% to 5.3%).

(2) Mixed stance was observed in Con immediately after the event: Most of the tweets involving a policy stance either support gun control or oppose it. However, during post-event time, 2.3% of the tweets in Con suggest a need for a certain type of gun control while also asserting support for gun rights. Such tweets only appeared in two post-event stages, within the immediate 48 h and the first week (day 2–7). For example, this tweet, “[@mention] [Be] more difficult to acquire a gun. But getting rid of guns altogether is a recipe for disaster since criminals will acquire”, suggests partial gun regulation. These tweets also tend to propose a specialized regulation that seemed most directly relevant to preventing similar killings; for example, “[@mention] Why they don’t change the laws and have restrictions on how many guns a person can buy?” No such mixed stance tweets were found in Lib.

(3) Across events over years, pro-control stance rose to above 50% while pro-gun stayed around 40%: With both camps combined, the tweets supportive of gun regulations increased from 48.3% and 44.3% in 2016 and 2017, respectively, to 55.7% in 2018. Opposing tweets, however, remained at similar levels: 37.8%, 42.0%, and 37.9% in 2016, 2017, and 2018, respectively. We also observed that, in 2018, there were fewer tweets that did not suggest a stance: 12.6%, 13.0%, and 5.7% in 2016, 2017, and 2018, respectively.

Overall, after the shooting incidents, a tweet was less likely to express a particular policy stance, especially in Con. In order to understand how tweets conveying a policy stance were coupled with causal-attributional narratives, we further analyzed the interaction between stance and attributional composition in tweets. We observed that, for example, in Con, after the shooting event, those tweets with a policy stance were more likely to incorporate a discussion of problem nature and blame target than those not (from 9.7% to 33.5%, p = 0.009, and from 25.8% to 48.4%, p = 0.029, respectively, Fisher’s exact test). Such patterns were not found in Lib. These results suggest that after the shooting events, a tweet from Con with a policy stance was more likely to explicate the reason for the held stance. Such tweets may be more likely to invite and facilitate understanding and discussion across camps than those that simply express a stance.
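
The stance proportions reported in this section are shares of human-coded tweets within a camp and period. A minimal sketch of that tabulation follows; the record layout, label names, and counts are hypothetical and are not drawn from the study’s data.

```python
from collections import Counter

def stance_shares(coded_tweets):
    """Return {(camp, period): {stance: share in percent}} from coded records."""
    totals = Counter()
    counts = Counter()
    for camp, period, stance in coded_tweets:
        totals[(camp, period)] += 1
        counts[(camp, period, stance)] += 1
    shares = {}
    for (camp, period, stance), n in counts.items():
        shares.setdefault((camp, period), {})[stance] = 100 * n / totals[(camp, period)]
    return shares

# Hypothetical coded records: (camp, period, stance)
coded = (
    [("Con", "pre", "oppose")] * 9
    + [("Con", "pre", "none")] * 1
    + [("Con", "post", "oppose")] * 7
    + [("Con", "post", "support")] * 2
    + [("Con", "post", "mixed")] * 1
)
shares = stance_shares(coded)

# Change in the majority (oppose) share, in percentage points
drop = shares[("Con", "pre")]["oppose"] - shares[("Con", "post")]["oppose"]
```

Computing the change as a difference of shares gives a value in percentage points, which is distinct from a relative change in the share.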

Ideological camps differ in whether their narratives involve a solution and in when they call to action after the shooting incidents

Through human coding (see “Methodology” section), we identify tweets talking about a solution and/or call to action, referred to as Moral Solution. Overall, 28.3% of the tweets talk about Moral Solution. Such tweets are more likely to be found in Lib: nearly one out of every three tweets (30.4%) in Lib, and one out of every four tweets (26.1%) in Con. We highlight two observations:

(1) More calls to action after shooting incidents; two camps differ by the timing: Figure 4 shows the group differences regarding when a call to action was made. Among Con, such tweets increased primarily in the immediate 48 h after the incidents, and the calls then dropped after 48 h; among Lib, such tweets started increasing only after the first 48 h, and the rate kept rising until the end of the second week.

(2) Alternative direction appeared in Con’s action-calls: In Lib, almost all of the tweets talking about Moral Solution (96.8%) supported gun control. In Con, by contrast, the patterns of stance varied over time. In pre-event time, all of the Con tweets that called to action advocated against gun control. After the shooting events, the stance in the calls split. Within the immediate 48 h after the incidents, the opposing rate dropped from 100.0% to 52.4%, and it then rose somewhat to 70.0% and 68.8% in the first and second week, respectively. In contrast, in Lib, tweets opposing gun control only appeared in the second week, with a rate of 9.5%. In post-event time, 3.0% of Moral Solution tweets conveyed mixed stances.

A tweet with Moral Solution has a stronger intent than a tweet with only a Stance (without Moral Solution) to push actual action upon/against a solution/policy. In our analyzed tweets, only 31.5% of the Stance tweets also called to action. For Con, in post-event time, there appeared tweets with a Stance supportive of gun control that called to action as well. This observation suggests not only that more tweets after the shooting incidents expressed the alternative stance, but also that more tweets moved forward to call others to act upon/against the Twitter users’ personally favored/disfavored stance.

Shooting incidents triggered a different use pattern in rhetoric schemes, where Argumentative Assertion and Sarcasm were especially dominant after an event, and the use of Argumentative Assertion expanded

A variety of rhetoric schemes was observed; through thematic coding (see the section “Methodology”), we identified seven distinct Rhetoric Schemes (see Table 4 for description and illustrative examples). A rhetoric scheme is to persuade; in our studied context, the schemes were employed to persuade why a stance is valid/invalid, why a suggested policy solution will work or not, and why a change or call to action is needed. These seven schemes are: (1) Argumentation with plot, in which there are five specific plots: Story of Decline, Stymied Progress, Change Illusion, Story of Helpless & Control, and Conspiracy; (2) Argumentative Assertion; (3) Evidence-based Argumentation; (4) Sarcasm; (5) Rhetorical Question/Imperative mood; (6) Personal/Community Relatedness; and (7) Scripted Message/Petition.

Overall, 86.5% of the analyzed tweets employed rhetoric schemes. Among the seven schemes, two were used more often than the others. Nearly half of the analyzed tweets involve either Argumentative Assertion (28.0%) or Sarcasm (20.8%), which are the first and second most used schemes in both camps (combining pre-event and post-event times). The former scheme develops a logically sound argument and asserts it without referring to evidence. The latter involves people saying the opposite of what they mean in order to ridicule a stance or view they disagree with. The two schemes embody distinct persuasive strategies: the former builds a path for one to understand or develop alternative views and beliefs, which need not involve negativity; the latter challenges or attacks one’s views and beliefs directly by ridiculing them.

The patterns of employing a specific scheme changed throughout a shooting event, across multiple events, and differed between two groups. Figure 5 shows the distributions and differences, and the changes are summarized below.

(1) The use of schemes was less diverse after an event in both camps: Figure 5a shows the proportions of tweets involving these schemes over the four stages of the shooting events. Within the immediate 48 h after the incidents, the employed schemes concentrated heavily on two, Argumentative Assertion and Sarcasm. After the 48 h, in both camps, the use of rhetoric schemes gradually became diverse again, although Argumentative Assertion and Sarcasm remained the most used.

(2) The two camps differed in when they used specific schemes: In pre-event time, Sarcasm was the scheme Con used most (34.4%); within the 48 h after the incidents its share decreased yet remained the second highest (20.3%). Meanwhile, their use of Argumentative Assertion increased significantly from 15.6% to 33.9%, and this scheme became the most dominant one. Lib employed most of the schemes and employed them more evenly than Con before the incidents; however, within the 48 h after the incidents, Argumentative Assertion and Sarcasm both increased and became the most dominant schemes (both 28.1%).

(3) The yearly trend exhibits increasing use of Argumentative Assertion and decreasing use of Sarcasm: Overall, across the events over the years, Argumentative Assertion and Sarcasm remained the two most employed schemes. However, there was an increase in Argumentative Assertion and a decrease in Sarcasm; the proportions for the former were 22.4%, 27.5%, and 34.3% in 2016, 2017, and 2018, respectively, and the proportions for the latter were 22.4%, 21.4%, and 18.6%, respectively.

Shooting incidents triggered negative emotions; two camps differ by the coupled associations between emotions and attributional compositions, which reflects the group difference in the believed cause and the perceived control over the believed cause

We now unpack the associations between the emotional psycholinguistic features and the narrative elements. After the shooting incidents, more tweets were associated with negative emotions, as can be observed by comparing the probabilities before and after the incidents (see Table 2). These emotions appear more likely to co-occur with certain attributional compositions, and there exist group differences:

(1) The dominant emotions in Con associated with attributional compositions are anxiety and sadness: Anxiety is more likely to be found in the Con tweets casting Villain and Victim as well as ascribing Blame Target than in those that do not (8.5% versus 0.7%, p = 0.008; 11.1% versus 1.2%, p = 0.009; and 7.0% versus 0.0%, p = 0.005, respectively; Fisher’s exact tests). These associations were not found in Lib.

(2) The dominant emotion in Lib associated with attributional compositions is anger: Anger is more likely to be found in the Lib tweets casting Villain and Victim than in those that do not (37.8% versus 10.5%, p < 0.001, and 32.1% versus 14.0%, p = 0.026, respectively; Fisher’s exact tests). These associations were not found in Con.

We further inquired into the patterns of association that differentiate the two camps: Why did Con associate anxiety with villain and victim, but Lib anger? And why did Con feel sad about the problem nature of gun issues? Our analysis was motivated by appraisal theories of emotion (Hareli, 2014; Lazarus, 1991), which consider emotions as contingent on cognitive evaluation; specific to our study context is the role of perceived control. To understand emotions over an undesirable occurrence or outcome, we analyzed the cases where the Twitter authors expressed a sense of control over the ascribed problem nature, causes, and solutions. We found that the quality of perceived control differs between camps, as explicated below.

In Con, the prevailing anxiety often has to do with tweets casting villains as unpredictable people who use guns to kill; these people, regarded as out of control, are also viewed as the causes of harm (blame target). For example, the tweet “It was done because a crazy person did it. Without a gun he would use something else. That is why he had explosives. Killers kill.” expresses anxiety concerning a “crazy” person or “killers”, who are seen as the causes of harm and who cannot be controlled because their unpredictable nature makes it hard to anticipate what they may do. And the tweet “[@mention] gun laws only hurt honest gun owners. Maybe the libtards can start allowing the crazies to be locked up instead of ’rehabing’ them” further contrasts “honest gun owners” as victims with “the crazies” as villains. As for sadness, it is often observed when the Twitter authors ascribed the root problem of mass killings to the gun laws: there is a belief that the existing gun laws or ongoing attempts to push gun reforms make the victims even more powerless. The tweet “Sadly these incidents justify why the right people need to have guns to prevent tragedies like this #savethesecondamendment” not only referred to the shooting event as sad (“tragedies”), but also suggested a potential problem (guns may be taken away from the “right people”) that was considered to contribute to such a tragedy. The low sense of control in sadness is not centered on unpredictability; instead, it is about feeling powerless or hopeless in getting rid of a cause (e.g., gun laws) that is believed to make the victims more vulnerable and the villain more powerful. It is a powerless emotion associated with a perceived low control over the gun laws or potentially coming gun reforms. Such associations were not found in Lib. Among Lib, the majority policy stance on guns is the opposite; gun control is desired, and the believed cause of mass shootings is precisely the lack of gun reforms.

In Lib, the prevailing emotion is anger, which usually has to do with a sense of grievance about the cause, accompanied by a felt moral obligation to bring the uncontrolled under control or to gain more autonomy in a powerless situation. For example, gun issues could and should have been brought under control if the politicians who should be accountable had done something, or if certain regulations had been passed in Congress; however, none of these happened earlier, so the mass shootings went out of control. The tweet “People this young and talented shouldn’t be dying because idiots get a hold of guns. Is this what we needed to finally realize what guns +” suggests that the death of the “young and talented” (victim) is unjust and that this shooting event should finally lead to a gun-law solution. The tweet “@realDonaldTrump But, of course, no efforts at gun control will be made as you suck the dicks of the right wing.” expresses anger while accusing a leader who is seen as unaccountable. Also, in the tweet “As a gay American, do not tell me I am not allowed to talk about gun control, hours after my community was the target of terror attack”, the Twitter user perceived himself as a victim and expressed anger at being denied a say on behalf of the community he belongs to. In brief, anger is neither about unpredictability nor about low control over the undesired; it is about wanting more control over the desired because the low control is unjust. Such associations were not found in Con.

Discussion

Finding common ground across camps in the context of polarized politics is difficult. In the US, the partisan stances on gun issues have seemed to lead to a policy deadlock. Over the past two decades since the Columbine High School massacre in 1999, there has been speculation and skepticism about whether the non-stop incidents of mass shootings could have any implications or actual impacts on politics and policies. Our study provides empirical evidence that, in the wake of shooting events, public discussions could turn less polarized. The changes of narratives before and after the events suggest that the events may provide crucial conditions that could facilitate progress in reaching consensus between camps.

While the tragic sorrow and shock in extraordinary events may likely reinforce or even push a person more strongly to advocate their held beliefs, our findings suggest that such moments could otherwise open a person’s mind and eyes. Prior studies have suggested gun shooting events may change people’s behaviors in information seeking, such as increasing the visits of more balanced and factual webpages regarding gun control (Koutra et al., 2015). In seeking the understanding of the psychological process of how such events shift or transform a person’s view, a plausible explanation is that in these moments, unusual incidents may draw people in a highly shared experience that could enable them to focus on needs and wants (Atkeson and Maestas, 2012), rather than ideologies—e.g., everyone loves their children, and no one may disagree with being more cautious about the access to firearms by people with mental illness.

The present study provides several research and practical implications for public policy making. The shifting voices and the emerging narrative complexity, as characterized in our study, can be seen as an opportunity for policy makers to seek common ground and constructive movements. For policy makers who often act along partisan lines, which is partly, if not primarily, due to a concern about losing their electorate in the future, our findings offer insights as to how the different stages of an event may provide conditions that could allow them to better navigate solutions across partisan lines. Below, we discuss the specific implications of our findings in detail.

(1) The same shooting incident meant different things to the two ideological camps; this complexity could be uncovered by coupling stance, attribution, and emotion.

Negotiating with people who hold the opposite stances requires the understanding of the minds engaged in the transitions. Our findings, especially the coupling of the policy stance with cognitive and emotional elements, provide rich interpretations and implications.

First, our findings uncover what specific negative emotions were dominant in the two camps (in Con, anxiety and sadness; in Lib, anger). Moreover, we investigated how the patterns associating emotion with attribution and stance could help reveal the distinct nature of the perceived sense of control between camps over the problem, cause, and solution in a shooting event. Prior studies have indicated that negative emotions could jeopardize constructive communication; in order to mitigate and manage the effects, it is critical to have self-awareness of the specific emotions involved and to understand specifically where the emotions come from (Adler et al., 1998). Our observations provide an overall picture of the emotions engaged and the potential reasons behind them.

Second, the coupled information may be leveraged to predict a person’s or a group’s stance, and its change, from the particular patterns of emotion and causal narratives observed. Ideological identity may be useful in predicting a person’s policy stance or what a person may argue and say, but it may have less explanatory and even predictive power, especially in a social context where a policy stance is shifting within an ideological camp.

Third, while emotion can be contingent on cognitive appraisal, the relation between emotion and cognition is reciprocal (Lazarus, 1991). Once a particular emotion is induced, it may be associated with a certain behavioral tendency (e.g., anxiety and anger suggest rather distinct thinking and behavioral consequences). Further investigation is needed to identify the exact implications of how the already incited emotions may affect arguing and negotiating about gun issues.

(2) The more persistent change observed in narratives was consistent with recent polls; while still partisan, the discussions were more assertive than antagonistic: With a trend of stances consistent with recent surveys (Poll, 2019; Inc, 2017; Sanger-Katz, 2018), both camps are still dominated by a single policy stance, opposing each other. While this may suggest unchanged polarized politics, we found that the ways of communicating alternative views have undergone a shift. Overall, across the events, tweets with sarcasm shrank and tweets with argumentative assertion increased in both camps. Considering the nature of these two rhetoric schemes (the former centers on negatively ridiculing an opposite view, and the latter may be used without hostility), such a shift may indicate that the conversation is moving away from attacking the opposite side toward a mode that can be used constructively to argue a view or to compare and contrast distinct views.

(3) The immediate and short-term shift in narratives after shooting incidents may point to an opportunity for reconciling conflicts: Within the immediate 48 h after the shooting incidents, the emerging tweets put less emphasis on the predominant policy stance within ideological camps, e.g., fewer tweets referred to a particular policy stance; mixed-stance tweets emerged; stance flips were found; and there appeared more calls to action with mixed stances. Moreover, among the tweets that did express a stance, after the shooting events more tweets engaged in causal-attributional discussion, including making explicit the nature of problems and inquiring into the causes, instead of simply conveying a stance without causal reasons. We consider that a tweet that discusses causal attributions along with a stance, compared with a tweet that simply claims or advocates a stance without causal reasons, could provide a more promising and constructive basis for people to understand where the stances come from and to further exchange ideas or discuss alternatives. These momentary and drastic changes suggest the uniqueness of the time right after the shooting events, when the talk was more likely to deviate from the extant ideological preferences and issue framing, to be open to alternative views, and to communicate the underlying causal beliefs regarding why a stance or a solution is believed justifiable or not. This can be seen as a kind of “opportunity structure” (McAdam et al., 1996) in the collective action literature, which represents resources or climates that movement campaigners and policy makers may utilize for resolving contentious debates.

(4) Design implication for making policy progress: A need for social media and ICTs to support diverse narratives, especially those beyond existing or dominant framing around a contentious issue: Social media has been found effective in discovering allies and enabling positive feedback loops that reinforce shared beliefs (Althoff et al., 2014; Dimond et al., 2013), which makes it valuable in supporting collective action. However, the same capacity has also been criticized as a catalyst for “echo chambers” or “filter bubbles” (Pariser, 2011; Sunstein, 2018). This capacity, together with the capacity for discovering opinion leaders (e.g., rank-ordering most retweeted tweets or most followed users), can be destructive when seeking resolution in a heated debate, because it exposes users to an even stronger adherence to the extant ideological preferences rather than to narratives crossing the group/ideology boundary. In terms of framing processes, such capacities may support frame diffusion and alignment but are inadequate for frame bridging (Benford and Snow, 2000). For polarized topics, there is a need to develop information technologies that provide users (including policy makers and mainstream media, who often turn to social media as a source for uncovering public opinion) with diverse narratives and alternative views.

Study limitations

There are several limitations in this work. First, our analysis of narrative variation relied on a stratified sample of users accounting for different levels of social media activity (see the section “Methodology”). While this ensured that the retrieved narratives came from a more diverse set of users, we did not consider the mobilizing or diffusion structures, e.g., users may simply adopt extant frames originated by opinion leaders or movement organizations, or adapt extant frames in their own talk. This is likely to influence the originality and diversity of the retrieved narratives. It is possible to combine a network-based sampling strategy (Stewart et al., 2017) with stratified sampling, but how to deal with mobilizing or diffusion structures outside of the social media data remains a challenging task for future research. In addition, we acknowledge that gun-relevant issues may have a differential impact on communities; for example, prior studies showed a disproportionate number of gun violence victims in African–American communities (Papachristos and Wildeman, 2014; Beckett and Sasson, 2003). Due to the difficulty of obtaining reliable demographic information about social media users, empirical analysis along this line has been limited. Future work that combines online surveys and self-reported demographic information with stratified sampling of social media conversations across diverse communities will offer insights with respect to this equity aspect.

Second, while our study has purposefully focused on the deadliest mass shooting events from 2016 to 2018, it is challenging to distinguish whether the observed changes were due to long-term temporal trends or the differences in the event nature. This limitation may not be easily addressed, due to the heterogeneity in events, e.g., event scope and motive for the shooting.

Third, when extracting linguistic features based on lexicons, certain lexicon words might correspond to the context of an event, e.g., “kill*” may be used to refer to the event happenings with less connotative meanings associated with emotions than usual. While we carefully excluded such lexicon words manually to reduce the false positive possibility, future work may explore a more efficient context-sensitive linguistic approach to deal with the precision-recall tradeoff.

Lastly, the narrative components were identified by human coding on a smaller, randomly sampled set of data from our entire data corpus; therefore, the patterns of narrative structures may be prominent only within the selected tweets. While we employed stratified random sampling to mitigate this issue, there may be other narrative patterns beyond what we have identified. Our purpose in employing mixed methods was to leverage their complementary strengths. Specifically, our quantitative analysis based on simple lexicon matching has the benefit of extracting word-level linguistic patterns from big data sets and efficiently discovering statistically significant shifts in such patterns. However, it is limited in interpretability. We carefully examined its applicability and limitations in our studied context, and further incorporated qualitative human coding to gain an in-depth understanding of how language associated with causal attribution changed over time.

Conclusion

Social media have been used to gauge sentiments and climates around political issues, but less to reveal the conflict and shift in people’s narratives on a contentious topic. This study utilized large-scale longitudinal social media traces from over 155,000 properly sampled, ideology-identifiable Twitter users to probe deeply into the recent years’ gun debate in the US. We introduced a mixed-methods approach in order to extract and analyze the linguistic and attributional signals in users’ narratives. We presented novel analyses that capture the quantitative and qualitative differences in the sampled narratives.

This research makes three contributions. First, we presented a case study of how social media users’ narratives around a polarized topic change in response to relevant events. We identified measurable shifts, not only in linguistic features but also in narrative structures indicative of causal attribution, over the course of high-profile shooting events. Through retrieving the narrative components, we empirically showed how events penetrated the public discursive processes that have been dominated by the extant ideological preferences. Second, our study design demonstrates how to retrieve and contrast individuals’ narratives from large-scale longitudinal social media data. By leveraging a human coding scheme drawn from the Narrative Policy Framework, we were able to retrieve the narrative elements for qualitative and statistical comparison. Our method suggests a potential direction for conducting narrative research at scale. Third, our findings have implications for discovering the opportunity to reconcile conflicts, for understanding the utility of different rhetoric schemes in context, for improving interpretability from linguistic signals, and finally, for designing more inclusive civic media technologies that support diverse narratives and framing.

Methodology

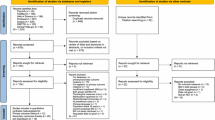

Data

We choose Twitter as our data source, which allows us to observe users’ daily conversations as well as authentic reactions to significant events. We adopt a quasi-experiment design (Lin et al., 2017) that allows us to trace groups of focal users and compare the language and narrative characteristics in their tweets before, during, and after various events.

In our study, we first identify two groups of users from Twitter. Our data collection extends a Twitter corpus collected in prior work (Yang et al., 2017), where the authors identified two groups of users, Trump-supporters and Clinton-supporters, who are likely to have distinct political and ideological positions. The two groups consist of “exclusive followers”, i.e., Twitter users who followed only one presidential election candidate but not the other. Whom the users supported was validated by statistically testing the extent to which the exclusive followers posted tweets containing support or disapproval hashtags, either taken from the candidates’ campaign slogans or simply stating support, e.g., #MakeAmericaGreatAgain, #ImWithHer, #DumpTrump, #NeverHillary, etc. In this work, we use the two groups of supporters as a proxy for two ideological camps. Gun-related debate has been highly political in the US, and for years there has been a sharp partisan divide over gun issues, which suggests that the majority of the supporters of each party’s candidate hold distinctive ideas or beliefs (ideological basis) on this issue. We refer to Trump-supporters and Clinton-supporters in this study as “Con” and “Lib”, respectively, to denote these two camps’ ideologies.

The original collection includes user IDs and their timeline tweets in a four-month period starting in August 2016. We expand the data collection using a stratified sampling procedure that accounts for different levels of user activity based on tweeting frequency, and we collect the users’ tweets for a longer period. (See the Supplementary Information for the details of the sampling and data collection procedure.) This procedure initially resulted in over 214,000 users. When analyzing the users’ contributions in terms of the percentage of total non-retweeted tweets posted by each user, we found that the top 10% most active users contributed 70% of the collected tweets (see the Supplementary Information). As our research aims to study the variation of narratives from a diverse sample of users, we decided to focus on non-retweeted tweets. Further, to avoid the analysis outcome being dominated by only a small set of users, we exclude the top 10% of users (referred to as “super users”), who account for a disproportionately high number of tweets, and focus our study on the bottom 90% of active users, who account for 30% of the tweets. While this significantly reduces the number of tweets for further analysis, we argue that this bottom 90% of users represents more diverse and typical users in the groups. The data were collected from publicly posted tweets using the official Twitter REST APIs. We also excluded the automated accounts potentially connected to foreign interference in political conversations on Twitter, including the Internet Research Agency (IRA) associated accounts (Gadde and Roth, n.d.).
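The exclusion of “super users” can be illustrated with a minimal sketch. It assumes a hypothetical mapping from user ID to the number of non-retweeted tweets; the function names and the tie-breaking rule are illustrative assumptions, not the procedure documented in the Supplementary Information.

```python
from typing import Dict, List, Tuple


def split_super_users(
    tweet_counts: Dict[str, int], top_fraction: float = 0.10
) -> Tuple[List[str], List[str]]:
    """Split users into (super_users, remaining_users) by tweet volume.

    Users are ranked by descending tweet count; ties are broken by user ID
    so that the result is deterministic (an assumption made for this sketch).
    """
    if not 0.0 <= top_fraction <= 1.0:
        raise ValueError("top_fraction must lie in [0, 1]")
    ranked = sorted(tweet_counts, key=lambda user: (-tweet_counts[user], user))
    n_super = int(len(ranked) * top_fraction)
    return ranked[:n_super], ranked[n_super:]


def tweet_share(tweet_counts: Dict[str, int], users: List[str]) -> float:
    """Fraction of all tweets contributed by the given users."""
    total = sum(tweet_counts.values())
    return sum(tweet_counts[user] for user in users) / total if total else 0.0


# Hypothetical toy data: one very active account among ten users.
counts = {f"user{i}": 1 for i in range(9)}
counts["very_active"] = 21
super_users, remaining = split_super_users(counts)
print(super_users, round(tweet_share(counts, super_users), 2))
```

In this toy data set, the single most active account contributes 70% of the tweets, mirroring the skew reported above; the analysis would then proceed on `remaining` only.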

In total, there are over 66 million non-retweet tweets, produced by users for whom we have their complete timeline tweets for more than 30 months, including 77,954 users in the Con group and 77,355 users in the Lib group. All retweets were excluded in this study for the reason described earlier.

Event-related tweets and control set

We compile tweets from the two groups of users for the three selected mass shooting events. Each event set includes users’ tweets within a 4-week time window centered on the time of the incident, referred to as the Event period. To examine whether any measured differences in users’ tweeting language are attributable to the incidents, we identify an equivalent period of 4 weeks for each event during one of its preceding or succeeding years (see Footnote 1), referred to as the Control period. This design is similar to related work (Saha and De Choudhury, 2017) and other causal inference literature. A comparison between the event and control sets is likely to rule out measurement confounding effects triggered by unobserved factors such as seasonal and periodic events. Table 1 summarizes the data for the event/control periods.
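The construction of the Event and Control periods can be sketched as follows. This is an illustration under stated assumptions: the function names are hypothetical, the window is taken as 14 days on either side of the incident, and the control period is obtained by shifting the incident date by whole years. The actual control years used in the study are given in Table 1 and the footnote, not here.

```python
from datetime import datetime, timedelta
from typing import Tuple

Window = Tuple[datetime, datetime]


def event_window(incident: datetime, half_width_days: int = 14) -> Window:
    """4-week window centered on the incident (2 weeks before, 2 weeks after)."""
    half = timedelta(days=half_width_days)
    return incident - half, incident + half


def control_window(
    incident: datetime, year_offset: int = -1, half_width_days: int = 14
) -> Window:
    """Equivalent window in a preceding (negative) or succeeding (positive) year.

    Note: datetime.replace raises ValueError for 29 February when the target
    year is not a leap year; this sketch does not handle that case.
    """
    shifted = incident.replace(year=incident.year + year_offset)
    return event_window(shifted, half_width_days)


def in_window(timestamp: datetime, window: Window) -> bool:
    """True if the timestamp falls inside the half-open window [start, end)."""
    start, end = window
    return start <= timestamp < end


# Example with the Orlando incident date (12 June 2016); time of day omitted.
orlando = datetime(2016, 6, 12)
print(event_window(orlando))
print(control_window(orlando, year_offset=-1))
```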

Filtering tweets related to gun violence

To distinguish tweets relevant to gun violence or policy issues from others in our data collection, we adopt the list of keywords, phrases, and hashtags used in Benton et al. (2016). The authors used several keywords likely to be associated with guns or gun control in the US, and a set of hashtags indicative of support for or opposition to gun control (e.g., second amendment, #guncontrolnow, #gunrights, #protect2a, etc.). Using keyword matching to retrieve relevant tweets can be problematic and may lead to selection bias (King et al., 2017). To mitigate this issue, we consider three subsets of keywords (for, against, or ambiguous regarding gun control) from the original list (Benton et al., 2016) as independent seed sets and iteratively extend the list by adding the keywords and hashtags most likely to co-occur with the chosen words, so as to capture the related tweets on either side to a great extent while avoiding bias toward a particular stance. We then chose keywords/hashtags that consistently appeared in our dataset across events. (However, we acknowledge that the natural decline in the use of some hashtags was not accounted for in this study.) Next, we manually examined the chosen keywords/hashtags to exclude those that have been largely used in advertising (e.g., #pewpew, #ak47, #glock). Our extension includes hashtags used for supporting control (e.g., #disarmhate, #wearorange), gun rights (e.g., #2a), and for either side (e.g., #SecondAmendment, #2ndamendmend, #nra). Finally, relevant tweets were extracted through keyword matching.
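The iterative extension of a seed set by co-occurrence can be sketched as below. The text does not specify the number of rounds, the number of terms added per round, or the co-occurrence threshold, so `rounds`, `top_k`, and `min_count` are hypothetical parameters; tweets are assumed to be already tokenized into sets of lowercase keywords and hashtags. The manual screening steps described above (consistency across events, removal of advertising hashtags) are not automated here.

```python
from collections import Counter
from typing import Iterable, List, Set


def expand_keywords(
    tweets: List[Set[str]],
    seeds: Iterable[str],
    rounds: int = 2,
    top_k: int = 5,
    min_count: int = 2,
) -> Set[str]:
    """Grow a keyword set by adding terms that co-occur with current keywords.

    In each round, count the non-keyword terms that appear in tweets matching
    at least one current keyword, then add up to top_k of the most frequent
    terms that reach min_count.
    """
    keywords = set(seeds)
    for _ in range(rounds):
        cooccurrence: Counter = Counter()
        for terms in tweets:
            if terms & keywords:
                cooccurrence.update(terms - keywords)
        new_terms = [
            term
            for term, count in cooccurrence.most_common(top_k)
            if count >= min_count
        ]
        if not new_terms:
            break  # nothing left to add
        keywords.update(new_terms)
    return keywords


# Hypothetical toy corpus; one seed set would be expanded per stance subset.
toy_tweets = [
    {"#guncontrolnow", "#disarmhate"},
    {"#guncontrolnow", "#disarmhate", "lunch"},
    {"#disarmhate", "#wearorange"},
    {"#disarmhate", "#wearorange"},
    {"lunch"},
]
print(sorted(expand_keywords(toy_tweets, {"#guncontrolnow"})))
```

Running the expansion separately for each of the three seed subsets (for, against, ambiguous) keeps one stance from dominating the candidate list, which is the motivation given in the text.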

The presence of bots and elites, as shown to be influential in prior work (Varol et al., 2017; Rizoiu et al., 2018), can be a concern in our analysis as we aim to study the variation of narratives from a diverse set of users. To examine the impact of likely bots and elites in the set of relevant tweets, we estimate the extent to which these tweets are contributed by the likely bot accounts and the elites. The proportion of likely bots was estimated using the Botometer API (Botometer, 2018), and that of elite or highly popular users was measured based on users’ follower counts and the number of retweets received per tweet sent. We found that the contribution of these relevant tweets from likely bot accounts is negligible, and the contribution of highly popular users in this relevant tweet set is no more than that of the average users (see the Supplementary Information).

Table 1 lists the number of relevant non-retweet tweets (i.e., #Relevant) extracted from our dataset for the corresponding event and control periods. Figure 6 shows the temporal variations of the relevant tweets within a 4-week period for the events and controls. Unsurprisingly, the numbers of relevant tweets, aggregated on a daily basis, exhibit a sudden increase immediately after the incidents of all three events. There appeared to be an observable second spike after the first peak in both Event-1 and Event-3, due to the subsequent development following the mass shooting events. The former corresponds to the House Democrats’ day-long sit-in protest over gun control, and the latter is associated with President Trump’s meeting with the students and teachers affected by the school shooting.

Quantifying linguistic dynamics

Extracting linguistic features

Prior studies have shown that different social groups, when faced with social issues, can respond differently in terms of various moral value principles and emotions. To quantitatively analyze such variation of language use across events, we first employ the Linguistic Inquiry and Word Count (LIWC) (Tausczik and Pennebaker, 2010), a psycholinguistic lexicon-based measuring method that has been widely adopted to analyze the use of linguistic markers in large text datasets. LIWC relies on a human-validated word list associated with various linguistic and affective categories. Our study focuses on two key aspects of how users communicate their thoughts and causal stories in tweets: (1) Causal attribution (category: cause), and (2) Affective attributes (categories: sad, anxiety, and anger).

We extract tweets associated with the aforementioned categories (see Table 2). We further conduct a manual verification step on a random sample set of tweets to validate whether the extracted tweets can be reasonably measured based on the lexicon. (See the Supplementary Information for the verification details.) Overall, most of the examined categories have a high precision above 90%, with the lowest being 73.3% (anger), suggesting a reasonable applicability of these linguistic measurements in our studied context. The implication of some of the false positive cases will be discussed in a later section.
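Lexicon-based category matching of this kind can be sketched as follows. The word lists below are a tiny hypothetical stand-in, since the LIWC dictionary itself is not reproduced here; a trailing `*` is treated as a prefix wildcard (as in the “kill*” example mentioned under the study limitations), and `excluded` models the manual removal of entries that mostly describe the event itself. All function names are illustrative.

```python
import re
from typing import Dict, Iterable, List

# Hypothetical toy lexicon, NOT the actual LIWC word lists.
TOY_LEXICON: Dict[str, List[str]] = {
    "anger": ["hate*", "furious", "kill*"],
    "sad": ["sad*", "grief"],
    "cause": ["because", "why"],
}


def compile_entries(entries: Iterable[str]) -> re.Pattern:
    """Compile lexicon entries into one case-insensitive whole-word pattern."""
    parts = []
    for entry in entries:
        if entry.endswith("*"):
            # Prefix wildcard: "kill*" matches "kill", "killers", ...
            parts.append(re.escape(entry[:-1]) + r"\w*")
        else:
            parts.append(re.escape(entry))
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def build_patterns(
    lexicon: Dict[str, List[str]], excluded: Iterable[str] = ()
) -> Dict[str, re.Pattern]:
    """Compile one pattern per category, skipping manually excluded entries."""
    skip = set(excluded)
    patterns = {}
    for category, entries in lexicon.items():
        kept = [entry for entry in entries if entry not in skip]
        if kept:  # a category with no remaining entries is dropped
            patterns[category] = compile_entries(kept)
    return patterns


def tag_categories(text: str, patterns: Dict[str, re.Pattern]) -> List[str]:
    """Return the sorted list of categories with at least one match in text."""
    return sorted(cat for cat, pattern in patterns.items() if pattern.search(text))


patterns = build_patterns(TOY_LEXICON, excluded={"kill*"})
print(tag_categories("Killers kill.", patterns))
print(tag_categories("Sadly these incidents justify why ...", patterns))
```

With “kill*” excluded, a tweet that merely describes the event is no longer counted under anger, which illustrates the false-positive concern raised in the limitations.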

Analysis of change and variation

We present our analysis approach for examining the change and variation of linguistic features in groups’ tweets. Most prior studies focus either on comparison across time (e.g., using time-series analysis (Saha and De Choudhury, 2017)) or on comparison across groups (e.g., using odds ratios (Chung, Wei, et al., 2016)). Neither approach allows for a statistical comparison of groups’ changes while taking into account the baseline temporal variation within individual groups. We propose an analysis that satisfies these requirements simultaneously: it compares the differences in the before–after change (i) against controls and (ii) between groups, while (iii) accounting for the baseline temporal variation within groups and (iv) enabling analysis of short-term and longer-term changes.

Our analysis is based on Poisson rate estimation. The Poisson model has been widely used for modeling event occurrence in a given time interval. Let λ be the mean rate of event occurrence. The number of occurrences X can then be modeled by a Poisson distribution characterized by the single rate parameter λ, as X ~ Pois(nλ), where n represents the time interval (assumed known) of the Poisson process with rate λ. To compare occurrence rates from two groups of events, \(X \sim \mathrm{Pois}(n_x\lambda_x)\) and \(Y \sim \mathrm{Pois}(n_y\lambda_y)\), one can test whether the rate ratio \(\frac{\lambda_y}{\lambda_x}\) is significantly different from, greater than, or less than a specified value. We use the probability-based method (Hirji, 2005) to estimate the p-value and confidence interval.
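One common way to test a ratio of two Poisson rates exactly is to condition on the total count: given X + Y = m, Y follows a binomial distribution with success probability n_y r / (n_x + n_y r) under the null hypothesis that the rate ratio equals r. The sketch below uses this conditioning together with a two-sided p-value that sums the probabilities of all outcomes no more likely than the observed one. It is an illustration in the spirit of a probability-based exact test, not a reproduction of the procedure in Hirji (2005); the function names, the default null ratio, and the numerical tolerance are assumptions of this sketch.

```python
import math


def binom_logpmf(k, n, p):
    """Log of the Binomial(n, p) probability mass at k, for 0 < p < 1."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log1p(-p))


def rate_ratio_test(x, n_x, y, n_y, ratio0=1.0):
    """Exact conditional test of H0: lambda_y / lambda_x == ratio0.

    x, y     : observed counts in the two samples
    n_x, n_y : exposure (e.g., number of days) behind each count
    Returns (estimated rate ratio, two-sided p-value).
    """
    total = x + y
    # Under H0, Y | (X + Y = total) ~ Binomial(total, p0).
    p0 = n_y * ratio0 / (n_x + n_y * ratio0)
    log_obs = binom_logpmf(y, total, p0)
    p_value = 0.0
    for k in range(total + 1):
        lp = binom_logpmf(k, total, p0)
        # Sum outcomes that are no more probable than the observed one;
        # the small tolerance (an assumption) absorbs floating-point ties.
        if lp <= log_obs + 1e-9:
            p_value += math.exp(lp)
    estimate = (y / n_y) / (x / n_x) if x > 0 else math.inf
    return estimate, min(1.0, p_value)
```

For example, `rate_ratio_test(5, 14, 50, 14)` compares 50 occurrences against 5 over equal 14-day exposures and returns an estimated ratio of about 10 with a very small p-value. A confidence interval could be obtained by inverting the same test over a grid of `ratio0` values, which is omitted here for brevity.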

In our analysis, we assume that the occurrence of a linguistic feature in tweets within a given time window (e.g., the number of tweets containing words in the linguistic category “anger” in the first week) can be modeled by a Poisson distribution. We first examine the change in the occurrence of a linguistic feature relative to its base rate, denoted \(\lambda_{\mathrm{b}}\), which is estimated as the mean feature occurrence rate within the 2-week window prior to the incident (before-event rate). We then quantify the change for a time window T after the incident with respect to the base rate by testing the before–after rate ratio, i.e., the ratio of two Poisson rates \(\theta = \frac{\lambda_{\mathrm{a}}}{\lambda_{\mathrm{b}}}\), where \(\lambda_{\mathrm{a}}\) is the mean feature occurrence rate within the time interval T (after-event rate). The observation window T can be chosen as the 2-week period following the incident. To further compare the immediate and slightly longer-term changes, we examine the feature occurrence rates for three periods following the incident: within the first 48 h, between day 2 and day 7, and within the second week.
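To make the windowing concrete, the following sketch computes the before–after rate ratio for each post-incident window from a list of daily feature counts. The daily counts are invented for illustration, and the exact window boundaries (the first 48 h as the first two daily bins, day 2 to day 7 as the next five, and week 2 as the last seven) are one possible reading and an assumption of this sketch.

```python
BASE_DAYS = 14  # length of the before-event window used for the base rate

# (start, end) indices into the post-incident daily counts; end is exclusive.
# These boundaries are an assumed interpretation of the three periods.
WINDOWS = {
    "first_48h": (0, 2),
    "day_2_to_7": (2, 7),
    "week_2": (7, 14),
    "two_weeks": (0, 14),
}


def mean_rate(counts):
    """Mean number of feature occurrences per day."""
    return sum(counts) / len(counts)


def before_after_ratios(daily):
    """daily: 28 daily counts, 14 before the incident followed by 14 after."""
    before, after = daily[:BASE_DAYS], daily[BASE_DAYS:]
    lam_b = mean_rate(before)  # base (before-event) rate
    return {
        name: mean_rate(after[start:end]) / lam_b  # theta = lambda_a / lambda_b
        for name, (start, end) in WINDOWS.items()
    }


# Hypothetical counts: a flat baseline, a sharp spike, then a gradual decay.
toy_daily = [10] * 14 + [60, 40] + [20] * 5 + [12] * 7
ratios = before_after_ratios(toy_daily)
```

With these toy counts, the ratio is largest in the first 48 h and moves back toward 1 by the second week, which is the kind of short-term versus longer-term contrast the separate windows are meant to expose.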

To examine the change of feature occurrence in an event period against its control, we first quantify the change for the equivalent time interval T during the control period by estimating the before–after rate ratio, that is, the rate in T over the base rate within the 2 weeks prior to the control time. Let \(\theta_{\mathrm{event}}\) and \(\theta_{\mathrm{control}}\) be the before–after rate ratios for a pair of event and control; their difference in change is then quantified as the ratio difference \(\Delta = \theta_{\mathrm{event}} - \theta_{\mathrm{control}}\). Likewise, to compare the difference in changes between two groups, we first compute the change within an equivalent time interval T for each group, and then examine the difference between the before–after ratios of the two groups.
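The point estimate of the ratio difference can be sketched as follows. All counts are hypothetical, the helper names are assumptions of this sketch, and the example covers only the arithmetic of the estimate, not the significance test or confidence interval.

```python
def rate_ratio(after_count, after_days, before_count, before_days):
    """Before-after rate ratio: (after rate per day) / (before rate per day)."""
    return (after_count / after_days) / (before_count / before_days)


def ratio_difference(theta_a, theta_b):
    """Difference between two before-after rate ratios."""
    return theta_a - theta_b


# Hypothetical counts for the first 48 h against a 14-day baseline.
theta_event = rate_ratio(300, 2, 280, 14)    # 150 per day vs 20 per day
theta_control = rate_ratio(44, 2, 280, 14)   # 22 per day vs 20 per day
delta_vs_control = ratio_difference(theta_event, theta_control)

# The same helper compares two groups over an equivalent interval.
theta_group_1 = rate_ratio(300, 2, 280, 14)
theta_group_2 = rate_ratio(120, 2, 240, 14)
delta_between_groups = ratio_difference(theta_group_1, theta_group_2)
```

Subtracting the control ratio removes the share of the change that would be expected from ordinary temporal variation in the same calendar window, so a difference near zero indicates no event-specific change.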

Human coding on narrative patterns

Extracting narrative components

To identify whether and what narrative components were employed in the gun-related tweets, we developed a coding scheme through a three-stage iterative human coding process: (1) an exploratory phase, in which we examined the applicability of prior coding schemes from the NPF (Shanahan et al., 2018; Merry, 2016) and determined a taxonomy suitable for our data; (2) a pilot phase, in which we tested the coding scheme that emerged from the exploratory phase and conducted reliability testing; and (3) a coding phase, in which we coded data following the resulting coding scheme. We first present the final classification taxonomy derived from our coding process and then discuss the details of the coding procedure.

Classification taxonomy

Our final classification scheme includes a relevancy indicator and four narrative components. The binary relevancy indicator indicates whether the content of a tweet involves a narrative about gun-related issues (0: irrelevant, e.g., gun users discussing a gun model; 1: relevant). The four narrative components are: (1) Stance: whether the narrative involves a policy stance and in what way (0: no; 1: support gun control; −1: oppose gun control; 0.5: mixed, e.g., support both gun control and gun rights); (2) Moral Solution: whether the narrative involves a call to action, advocating for or against a particular policy solution (0: no; 1: yes); (3) Rhetoric Scheme: the rhetoric strategy used to promote a stance or advocate a policy solution (0: no rhetoric scheme identified; 1–7: types of rhetoric schemes; see Table 4 for definitions and illustrative examples); (4) Attributional Element: five elements, each with a binary code (0: no; 1: yes) indicating whether the element appears in the narrative: (i) Hero: a person or group who acts to resolve the problem, (ii) Victim: a harmed person or group, (iii) Villain: a person or group who does harm, (iv) Blame Target: who or what is held responsible or blamed for the problem, and (v) Nature of Problem: whether the narrative involves an effort to communicate what exactly the nature of the problem is or what the focal issue should be.
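For readers who want to work with coded tweets programmatically, the taxonomy can be represented as a small validated record. The sketch below is only one possible encoding: the class name, field names, and default values are assumptions of this example, and the seven rhetoric scheme types are left as integer codes because their definitions are given in Table 4.

```python
from dataclasses import dataclass

VALID_STANCE = {0, 1, -1, 0.5}   # none, support control, oppose control, mixed
VALID_RHETORIC = set(range(8))   # 0 = none identified, 1-7 = scheme types (Table 4)


@dataclass(frozen=True)
class CodedTweet:
    """One human-coded tweet; field names are hypothetical."""
    relevant: int
    stance: float = 0
    moral_solution: int = 0
    rhetoric_scheme: int = 0
    hero: int = 0
    victim: int = 0
    villain: int = 0
    blame_target: int = 0
    nature_of_problem: int = 0

    def __post_init__(self):
        # Relevancy, Moral Solution, and the five attributional elements are binary.
        binary = (self.relevant, self.moral_solution, self.hero, self.victim,
                  self.villain, self.blame_target, self.nature_of_problem)
        if any(v not in (0, 1) for v in binary):
            raise ValueError("binary codes must be 0 or 1")
        if self.stance not in VALID_STANCE:
            raise ValueError("stance must be one of 0, 1, -1, 0.5")
        if self.rhetoric_scheme not in VALID_RHETORIC:
            raise ValueError("rhetoric scheme must be an integer from 0 to 7")


# Example: a relevant tweet with a mixed stance that names a villain.
example = CodedTweet(relevant=1, stance=0.5, rhetoric_scheme=3, villain=1)
```

Validating codes at construction time catches data-entry errors early, such as a stance of 2 or a rhetoric code outside 0–7.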

Sample tweets for coding

We sampled tweets to be coded from all the gun-issue-relevant tweets posted by the two groups, and further restricted the samples to tweets with cause keywords in order to better capture tweets involving causal attribution. Throughout the coding process, we created multiple stratified sample sets by randomly sampling an equal number of tweets for each group, each event, and each stage (before, within 48 h, day 2–7, and week 2).
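The stratified sampling step can be sketched as follows. The record layout (dictionaries with `group`, `event`, and `stage` keys), the function name, the per-stratum sample size, and the fixed seed are all assumptions of this example rather than details reported in the study.

```python
import random
from collections import defaultdict


def stratified_sample(tweets, per_stratum, seed=0):
    """Draw an equal number of tweets from every (group, event, stage) stratum.

    tweets: iterable of dicts with the keys 'group', 'event', and 'stage'.
    """
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    strata = defaultdict(list)
    for tweet in tweets:
        strata[(tweet["group"], tweet["event"], tweet["stage"])].append(tweet)

    sample = []
    for key in sorted(strata):  # deterministic order across runs
        pool = strata[key]
        if len(pool) < per_stratum:
            raise ValueError(f"stratum {key} has only {len(pool)} tweets")
        sample.extend(rng.sample(pool, per_stratum))  # without replacement
    return sample
```

Sampling equally per stratum prevents the high-volume periods right after an incident from dominating the coded set, at the cost of the sample no longer reflecting the raw volume of each period.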

Coding procedure

We summarize the three phases below.

(1) Exploratory phase: A set of 50 randomly sampled tweets (25 for each camp) was used. The two authors conducted a preliminary coding together, starting from the NPF coding guidance suggested in the literature (Shanahan et al., 2018); the coders thought aloud to each other and deliberated on the taxonomy system. Through this process, the two coders agreed on the narrative components and codes, which were modified or extended from the NPF scheme, and a new scheme, scheme-beta, was created.

(2) Pilot phase: A new set of 160 randomly sampled tweets (80 for each group) was used to test the reliability of scheme-beta. The two authors coded separately; each coded all 160 tweets. Inter-rater reliability, measured by Cohen’s kappa, was mostly moderate to substantial (Relevancy indicator: 0.55; Stance: 0.70; Moral: 0.41; Rhetoric Scheme: 0.40; Hero: 0.47; Blame Target: 0.40; Problem Nature: 0.43). Victim had fair agreement (0.32), and Villain had the lowest agreement (0.14). Discussion revealed that most disagreements arose because the second phase contained new narrative scenarios that had not appeared in the first phase, leaving the coders uncertain about which code to assign. The coders then reorganized the categories to include the new themes. Through this process, the coders resolved the issues and agreed upon 99% of the codes by the end of this phase. The coding scheme was revised and finalized as scheme-final. Then, another set of 160 randomly sampled tweets was used to test the reliability of scheme-final. The two coders coded separately, and the values of Cohen’s kappa for the relevancy indicator and all narrative components ranged from 0.61 to 0.79, suggesting substantial agreement. The two coders again discussed the disagreements. As before, inconsistencies occurred when tweet contents did not fit well into the existing categories. The coders again revised the codes to cover all the tweets and finally agreed upon 99% of the codes.

(3) Final coding: An additional set of 160 tweets was coded following scheme-final, with each tweet coded independently by one of the two authors.
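For reference, Cohen’s kappa compares the observed agreement between two coders with the agreement expected by chance from each coder’s marginal code frequencies. The following is a minimal sketch of the standard unweighted statistic; the function name and the toy codes are assumptions of this example, and the values reported above were not produced with this code.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Unweighted Cohen's kappa for two coders' labels on the same items."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must label the same non-empty item set")
    n = len(codes_a)

    # Observed agreement: share of items given the same code by both coders.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n

    # Chance agreement: product of the coders' marginal proportions per code.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Toy example with four tweets and a binary code (e.g., Villain: 0 or 1).
kappa = cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0])
```

In this toy example the coders agree on three of four tweets (0.75), chance agreement is 0.5, and kappa is therefore 0.5. Because kappa corrects for chance, rarely used codes can yield low values even when raw agreement is high.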

Analysis of change

Through the coding process, we obtained a total of 480 tweets with human-coded results. The raw frequencies of narrative components are 414, 117, 358, 370, 64, 104, 44, 121, and 177 for Relevancy, Moral Solution, Rhetoric Scheme, Stance, Victim, Villain, Hero, Problem Nature, and Blame Target, respectively. We further analyzed the frequencies by stage, event, and group in order to identify group differences and changes over time.

Data availability

The dataset analyzed in this study is available at: https://github.com/picsolab/gun-narrative-tweets.

Notes

Due to the high frequency of mass shooting events in the US, we had to carefully identify an equivalent period in one of the preceding or succeeding years that did not cover a major mass shooting event. For example, for Event-1 (incident on 2016-06-12), mass shooting events occurred within the equivalent 4-week period in both 2015 and 2017. We therefore chose the control period in 2018.

References

Adler RS, Rosen B, Silverstein EM (1998) Emotions in negotiation: how to manage fear and anger. Negot J 14:161–179

Althoff T, Danescu-Niculescu-Mizil C, Jurafsky D (2014) How to ask for a favor: a case study on the success of altruistic requests. In: Proceedings of the eighth international AAAI conference on weblogs and social media. AAAI

Anstead N, O’Loughlin B (2015) Social media analysis and public opinion: the 2010 UK general election. JCMC 2:204–220

Atkeson LR, Maestas CD (2012) Catastrophic politics: how extraordinary events redefine perceptions of government. Cambridge University Press

Beckett K, Sasson T (2003) The politics of injustice: crime and punishment in America. Sage Publications

Benford RD, Snow DA (2000) Framing processes and social movements: an overview and assessment. Annu Rev Sociol 26:611–639

Benton A, Hancock B, Coppersmith G, Ayers JW, Dredze M (2016) After Sandy Hook Elementary: a year in the gun control debate on Twitter. Preprint at arXiv:1610.02060

Borge-Holthoefer J, Magdy W, Darwish K, Weber I (2015) Content and network dynamics behind Egyptian political polarization on Twitter. In: Proceedings of the 18th ACM conference on computer supported cooperative work & social computing. ACM, pp. 700–711

Botometer (2018). https://botometer.iuni.iu.edu/. Accessed 12 Jan 2018

Braman D, Kahan DM (2006) Overcoming the fear of guns, the fear of gun control, and the fear of cultural politics: constructing a better gun debate. Emory Law J 55:569

Brooker P, Vines J, Sutton S, Barnett J, Feltwell T, Lawson S (2015) Debating poverty porn on twitter: social media as a place for everyday socio-political talk. In: Proceedings of the SIGCHI. ACM, pp. 3177–3186

Chung WT, Lin YR, Li A, Ertugrul AM, Yan M (2018) March with and without feet: the talking about protests and beyond. In: Staab S, Dmitry OK, Ignatov I (eds) International conference on social informatics. Springer, pp. 134–150

Chung WT, Wei K, Lin YR, Wen X (2016) The dynamics of group risk perception in the US after Paris attacks. In: Spiro E, Ahn Y-Y (eds) International conference on social informatics. Springer, pp. 168–184

De Choudhury M, Jhaver S, Sugar B, Weber I (2016) Social media participation in an activist movement for racial equality. In: ICWSM. AAAI, pp. 92–101

Dimond JP, Dye M, LaRose D, Bruckman AS (2013) Hollaback!: the role of storytelling online in a social movement organization. In: Proceedings of the 2013 conference on computer supported cooperative work. ACM, pp. 477–490

Flores-Saviaga CI, Keegan BC, Savage S (2018) Mobilizing the trump train: understanding collective action in a political trolling community. In: Twelfth international AAAI conference on web and social media. AAAI

Gadde V, Roth Y (n.d.) Enabling further research of information operations on twitter. https://blog.twitter.com/en_us/topics/company/2018/enabling-further-research-of-information-operations-on-twitter.html. Accessed 12 Jan 2018

Gamson WA (1992) Talking politics. Cambridge University Press

Gilbert E, Karahalios K (2010) Widespread worry and the stock market. In: Fourth international AAAI conference on weblogs and social media. AAAI

Graham T, Jackson D, Wright S (2015) From everyday conversation to political action: talking austerity in online ‘third spaces’. Eur J Commun 30:648–665

Gramlich J, Schaeffer K (2019) Facts on U.S. gun ownership and gun policy views|Pew Research Center. https://www.pewresearch.org/fact-tank/2019/10/22/facts-about-guns-in-united-states/. Accessed 8 Apr 2020

Guggenheim L, Jang SM, Bae SY, Neuman WR (2015) The dynamics of issue frame competition in traditional and social media. Ann AAPSS 659:207–224

Haider-Markel DP, Joslyn MR (2001) Gun policy, opinion, tragedy, and blame attribution: the conditional influence of issue frames. JOP 63:520–543

Hareli S (2014) Making sense of the social world and influencing it by using a naive attribution theory of emotions. Emotion Rev 6:336–343

Highfield T (2017) Social media and everyday politics. John Wiley & Sons

Hirji KF (2005) Exact analysis of discrete data. CRC Press

Holbert RL, Shah DV, Kwak N (2004) Fear, authority, and justice: crime-related TV viewing and endorsements of capital punishment and gun ownership. Journal Mass Commun Q 81:343–363

Gallup Inc (2017) Direction from the American public on gun policy. https://news.gallup.com/opinion/polling-matters/221117/direction-american-public-gun-policy.aspx. Accessed 26 Jun 2019

Jackson D, Scullion R, Molesworth M (2013) “Ooh, politics. You’re brave”. Politics in everyday talk. In: Scullion R, Gerodimos R, Jackson D, Lilleker D (eds) The media, political participation and empowerment. Routledge, p. 205

Jones MD, McBeth MK (2010) A narrative policy framework: clear enough to be wrong? Policy Stud J 38:329–353