Abstract

The intensity and character of concerns about antibiotic resistance, over the past nearly seventy-five years, have depended on a series of linked factors: the evolution and distribution of resistant microbes themselves; our differential capacity and efforts to detect such microbes; evolving models of antibiotic resistance and the related projected impact of antibiotic resistance on medical, social, and economic futures; the linkages of antibiotic prescribing and usage to the prevailing practice and identities of the medical and veterinary professions, and to the practice of agribusiness; the projected capacity of biomedicine (and especially the pharmaceutical industry) to stay ahead of such developing resistance; the perceived global context in which such resistance has developed; and the coordination of efforts and the development of infrastructure and funding to draw attention to and confront such microbial resistance. This paper traces the evolving response to antibiotic resistance through what at this point appear to be five eras: that between 1945 and 1963, a relatively optimistic period during which time the pharmaceutical industry appeared to most as capable of keeping up with antibiotic resistance; that between 1963 and 1981, characterized by increasing concern (though limited reformist activity) in the wake of the discovery of the transmission of antibiotic resistance via extrachromosomal plasmids; that between 1981 and 1992, notable for the explicit framing of antibiotic resistance as a shared global problem by such reformers as Stuart Levy and the Alliance for the Prudent Use of Antibiotics; that from 1992 through 2013, during which time antibiotic resistance became linked to larger concerns regarding emerging infections and received increasing collective attention and funding; and that from 2013 through to the present, with antibiotic resistance still more palpably and publicly embedded within larger concerns over global emerging infections, social justice, and development goals, attracting interest from a diverse cohort of actors.

Introduction

The intensity and character of concerns about antibiotic resistance, over the past nearly seventy-five years, have depended on a series of linked factors: the evolution and distribution of resistant microbes themselves; our differential capacity and efforts to detect such microbes; evolving models of antibiotic resistance and the related projected impact of antibiotic resistance on medical, social, and economic futures; the linkages of antibiotic prescribing and usage to the prevailing practice and identities of the medical and veterinary professions, and to the practice of agribusiness; the projected capacity of biomedicine (and especially the pharmaceutical industry) to stay ahead of such developing resistance; the perceived global context in which such resistance has developed; and the coordination of efforts and the development of infrastructure and funding to draw attention to and confront such microbial resistance.

Previous work had posited four eras of the history, especially in the United States, of the surfacing of attention to antibiotic resistance, characterized by ever-increasing attention to the problem: that between 1945 and 1963, a relatively optimistic period during which time the pharmaceutical industry appeared to most as capable of keeping up with antibiotic resistance; that between 1963 and 1981, characterized by increasing concern (though limited reformist activity) in the wake of the discovery of the transmission of antibiotic resistance (including across species) via extrachromosomal plasmids; that between 1981 and 1992, notable for the explicit framing of antibiotic resistance as a shared global problem by such reformers as Stuart Levy and the Alliance for the Prudent Use of Antibiotics; and that from 1992 through the present, during which time antibiotic resistance became linked to larger concerns regarding emerging infections and received increasing collective attention and funding (Podolsky, 2015). This paper advances upon this existing framework and extends it, when possible, beyond the United States, while further arguing that since 2013, when Dame Sally Davies stated that antibiotic resistance is as large a threat to human health as climate change, we have been in a fifth era of attention to what has been increasingly described as the global problem of antibiotic resistance. In this era, antibiotic resistance, while framed at times in pessimistic, apocalyptic terms, has become still more palpably and publicly embedded within larger concerns over global emerging infections, social justice, and development goals, attracting ever-increasing interest from an expanding cohort of actors.

This is not a comprehensive history of antibiotic resistance. Rather, this paper focuses on key events and the evolving and linked factors described above to frame the response to antibiotic resistance over nearly the last seventy-five years. The periodization chosen will of course obscure certain continuities, but it serves to highlight the shifting context of debate and action regarding antibiotic resistance over this time. Our present situation is in part a product of such actions taken or not taken, and our present response exhibits both important continuities with and discontinuities from prior responses.

Era I: Staph concerns and persisting optimism, 1945–1963

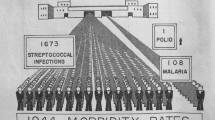

The potential for clinically relevant antibiotic resistance had been recognized as early as Alexander Fleming’s 1945 Nobel Prize acceptance speech (Fleming, 1999). Indeed, the existence of sulfa drug resistance during World War II had placed clinicians on high alert for subsequent antibiotic resistance, and they were not to be disappointed (Lesch, 2007). If any resistant bacterium was to serve as the model type for clinically concerning resistance in the late 1940s and 1950s, it was Staphylococcus aureus, quickly found to be resistant by Mary Barber in Great Britain by the late 1940s, and implicated as a source of hospital outbreaks and deaths worldwide throughout the 1950s (Barber, 1947; Podolsky, 2015). The implications were apparent to infectious disease specialists and general practitioners alike. As British general practitioner Lindsay Batten stated before the Royal Society of Medicine in 1954: “Those deadly staphylococci… are not pirates or privateers accidentally encountered, they are detachments of an army. They are also portents… We should study the balance of Nature in field and hedgerow, nose and throat and gut before we seriously disturb it. Again, we may come to the end of antibiotics. We may run clean out of effective ammunition and then how the bacteria and moulds will lord it” (Batten, 1955, p. 360).

Buttressed by the advent of phage typing, enabling bacteriologists to track the clonal spread of particular strains (and to support the earliest efforts at hospital infection control), a model of vertical, neo-Darwinian, genetic transmission of bacterial resistance and spread of resistant bugs informed contemporary concerns (Dowling et al., 1955; Hillier, 2006; Condrau, 2009). And such concerns led to moralizing in both Great Britain and the United States over the manner by which the medical profession was squandering its newly acquired resources and perhaps its very credibility. As Mary Barber commented concerning penicillin as early as 1948:

“Penicillin has been discussed so much in both lay and medical circles that the subject seems hackneyed. Nevertheless, a precise understanding of just what it will and still more important what it will not do is often lacking. The drug received such acclaim in the early forties that it came to be regarded by many in the nature of a charm, the mere sight of which was sufficient to make all bacteria tremble. Nothing, of course, could be further from the truth and the present widespread and often indiscriminate use of penicillin, particularly as a preventive measure is seriously menacing its future reputation” (Barber, 1948, p. 162).

Across the Atlantic six years later, Allen Hussar and Howard Holley deflected blame from putative demanding patients and laid it squarely at the feet of the medical profession, reminding clinicians that the part in the antibiotic requesting/receiving chain in which “the patient or his family demands ‘a shot of penicillin’ is not the cause but the product of indiscriminate antibiotic administration” (Hussar and Holley, 1954, p. 44).

However, while there was seemingly enough blame to go around, there was little coordinated effort to combat antibiotic resistance on anything more than a local scale. The World Health Organization (WHO) did convene a small meeting of experts (including Selman Waksman and Max Finland from the United States, and Lawrence Garrod from Great Britain) in 1959 on antibiotics and antibiotic resistance, but became bogged down on the very laboratory definition of “resistance” and refrained from taking a coordinating, international role concerning surveillance or appropriate use for several decades (Gradmann, 2013; Podolsky, 2015). Moreover, such seeming complacency (at least compared to later concerns) was underpinned by a persisting optimism regarding the pharmaceutical industry’s ability to keep up in the microbial arms race, with such early/mid-1940s wonder drugs as penicillin and streptomycin joined by the late 1940s and early 1950s by such “broad spectrum” antibiotics as the tetracyclines and chloramphenicol, by the mid-1950s by macrolides and fixed-dose combination antibiotics, and by the early 1960s by chemically modified agents like methicillin (Bud, 2007a; Bud, 2007b; Podolsky, 2015). Indeed, at worst, antibiotic resistance could be construed as a market rationale for the introduction and uptake of such emerging antibiotics as erythromycin, the fixed-dose combination antibiotics, and methicillin themselves (Gradmann, 2016).

Era II: plasmids and the specter of “Superbugs”, 1963–1981

Such optimism, however, would be partially punctured by the early 1960s by the demonstration in Japan that bacterial resistance could be spread not only vertically to descendant bacteria, but horizontally across strains and even species, by mobile genetic elements that would eventually come to be known as plasmids (Watanabe, 1963; Summers, 2008). This seemingly and dramatically increased the prospects of widespread antibiotic resistance. At the same time, with antibiotics having been used since the early 1950s for growth promotion in farm animals, researchers like E.S. “Andy” Anderson at the Enteric Reference Laboratory in Colindale, England, began to report on the putative spread of resistant bugs (like Salmonella typhimurium) from animals to humans (Bud, 2007a). Such medical ecological findings were further paralleled by the increasing prevalence of “gram-negative” infections in hospitalized patients, at the same time that the prestige of medicine was becoming increasingly linked to such heroic, invasive, antibiotic-warranting interventions as chemotherapy, organ transplantation, and intensive care (Finland, 1970). Appearing during the very decade of Rachel Carson and Silent Spring, such resistance and seeming microbial ecological shifts could be construed in the medical literature as “environmental pollution with resistant microbes” (Gleckman and Madoff, 1969). And in articles with titles like “Infectious Drug Resistance,” authors could publicly declare that “unless drastic measures are taken very soon, physicians may find themselves back in the preantibiotic Middle Ages in the treatment of infectious diseases” (1966, p. 277). Such heightened rhetoric was not limited to academic journals.
In what appears to be the first invocation of the term “superbug” to refer to resistant microbes, appearing in a 1966 Look magazine article concerning the work of Anderson on plasmid-mediated “Superbug” resistance to multiple antibacterial agents, John Osmundsen reported: “What causes bacteriologists great concern is the prospect that such a bug might get loose and create an epidemic of invulnerability to drugs among germs throughout the world. This could conceivably happen if the spread of these multiple-resistant germs is not checked… Suppose that a few of Dr. Anderson’s Superbugs were lurking in a mass of bacteria sensitive to tetracycline. Administration of that antibiotic would kill the sensitive germs all right, but a host of Superbugs would be spawned as a consequence” (Osmundsen, 1966, p. 141).

Despite such rhetoric, actual responses to antibiotic resistance remained muted throughout the 1960s and 1970s, with antibiotic resistance rarely portrayed as a shared, global concern. In Great Britain, much fanfare was given to the 1969 Swann report, which banned therapeutically relevant antibiotics such as penicillin and tetracyclines for agricultural growth promotion (Bud, 2007a). Yet the impact of the Swann report was limited (Thoms, 2012; Kirchhelle, forthcoming). In Britain and other European nations that adopted the Swann recommendations, while non-therapeutic antibiotic usage could be banned, veterinarians could simply switch to therapeutic overprescribing. In the United States, scientific uncertainty concerning the relationship of agricultural antibiotics to antibiotic resistance in humans enabled agribusiness concerns over the economic impact of withdrawing antibiotics to override those of the Food and Drug Administration (FDA) and preclude Swann-inspired bans throughout the 1960s and 1970s (McKenna, 2017; Kirchhelle, forthcoming).

Plenty of attention would be devoted, in the United States, to seemingly “irrational” prescribing of antibiotics throughout the late 1960s and 1970s, in an era increasingly focused on the need for “rational” prescribing and the many costs of irrational prescribing more generally (U.S. Task Force on Prescription Drugs, 1969; Roberts and Visconti, 1972; Gibbs et al., 1973; Simmons and Stolley, 1974). But in a manner that would be alien to today’s clinical literature, we see the American medical literature (especially the throwaway medical journal literature) awash with protestations by general practitioners against the advice of seemingly “ivory tower,” would-be reformers asking such practitioners to reconsider their (over-)prescribing habits (D’Amelio, 1974; Lasagna, 1976). Not only did national concern in the U.S. remain relatively muted (Podolsky, 2015), but little attention was directed, there or elsewhere, to framing antibiotic resistance as a global, shared concern, mandating globally collaborative intervention.

Era III: APUA and the shared global problem of antibiotic resistance, 1981–1992

Nevertheless, concerns over plasmid-mediated resistance respecting neither species nor national boundaries persisted throughout the 1970s, as researchers continued to describe plasmid-mediated resistant infections (ranging from dysentery to typhoid fever) around the world. The WHO did begin to convene working groups and meetings devoted to antibiotic resistance (World Health Organization, 1976; World Health Organization, 1978). But the primary and most influential effort to frame antibiotic resistance as a shared global concern came from elsewhere. In January of 1981, Tufts University clinician-scientist Stuart Levy convened a conference in Santo Domingo, Dominican Republic, on the “Molecular Biology, Pathogenicity, and Ecology of Bacterial Plasmids.” At an evening session of the meeting, 147 scientists from 27 countries (though over half were from the United States) signed a joint “Statement Regarding Worldwide Antibiotic Misuse.” The collaborative statement pointed to the “worldwide public health problem” posed by antibiotic resistance, mandating increased “awareness of the dangerous consequences of antibiotic misuse at all levels of usage: consumers, prescribers, dispensers, manufacturers, and government regulatory agencies” (1981, p. 679). Along these lines, Levy and his colleagues assembled a diverse array of concerns—antibiotic use without prescription, use as agribusiness growth promoters, usage when not effective or required, antibiotic over-promotion as “wonder drugs,” and the marketing of particular antibiotics differently in different parts of the world—mandating a coordinated, diverse array of responses by the global community.

Levy would use the statement as a launching-point from which to form the Alliance for the Prudent Use of Antibiotics (APUA), an explicitly international group dedicated to educating the medical profession and public alike about appropriate antibiotic use, promoting the global uniformity of regulation regarding antibiotic sales and labeling, and turning attention to the need for more standardized and coordinated surveillance of antibiotic resistance (Levy, 1983; Koenig, 2005). But despite the considerable efforts of Levy and his colleagues to quantify antibiotic sales, use, and resistance, and to draw the attention of clinicians, the pharmaceutical industry, and global foundations and public health groups to the issue, the conservative era of 1980s America and Great Britain saw little federal attention in those influential nations paid to the problem of antibiotic resistance (Beardsley, 1986; Podolsky, 2015). Indeed, between 1985 and 1988, at the height of Levy’s efforts, the number of personnel at the United States’ National Center for Infectious Diseases at the Centers for Disease Control (CDC) was reduced by 15 percent (Berkeleman and Freeman, 2004).

Era IV: emerging infections and the “End of antibiotics”, 1992–2013

By the late 1980s, however, Levy would receive a major boost in the global surfacing of attention to antimicrobial resistance from Joshua Lederberg, ushering in a demonstrable increase of attention to antimicrobial resistance in the academic and lay literatures alike (Bud, 2007a; Podolsky, 2015). Lederberg had won the Nobel Prize in 1958 for his studies of bacterial genetic exchange, and his interest in coming plagues would be further honed during his time as the head of the Rockefeller University in the 1980s, at the height of the AIDS epidemic in the U.S. Speaking in 1988 at a conference of Nobelists on “Facing the 21st Century: Threats and Promises,” Lederberg spoke on “Medical Science, Infectious Disease, and the Unity of Humankind,” describing the linked pragmatic and moral necessity of confronting infectious diseases as a shared global concern (Lederberg, 1988). Further inspired by virologist Stephen Morse, Lederberg would soon thereafter push his nation’s Institute of Medicine (IOM) to convene a committee on “emerging microbial threats.”

The ensuing volume, Emerging Infections: Microbial Threats to Health in the United States, would serve as a crucial inflection point in turning attention to the multifactorial problem of antibiotic resistance. While the volume focused on viral pathogens, antibiotic resistance would be transposed into the report as well, as U.S. clinicians, industry, and governmental bodies alike were asked to “introduce measures to ensure the availability and usefulness of antimicrobials and to prevent the emergence of resistance.” As the report continued: “These measures should include the education of health care personnel, veterinarians, and users in the agricultural sector regarding the importance of rational use of antimicrobials (to preclude their unwarranted use), a peer review process to monitor the use of antimicrobials, and surveillance of newly resistant organisms. Where required, there should be a commitment to publicly financed rapid development and expedited approval of new antimicrobials” (Lederberg and Oaks, 1992, p. 160). The IOM report had a catalytic effect, setting off a series of governmental and expert policy reports, including those by the Office of Technology Assessment and the American Society for Microbiology (U.S. Congress, Office of Technology Assessment, 1995; American Society for Microbiology, 1995). It also set in motion the enduring presence of antibiotic resistance as a trope in academic and popular literatures alike, whether evidenced by Science’s 1992 themed issue on “The Microbial Wars” (featuring articles with titles like the “Crisis in Antibiotic Resistance”) or Newsweek’s story two years later on “The End of Antibiotics” (Neu, 1992; Begley et al., 1994). 
In such popular books as Laurie Garrett’s 1994 volume, The Coming Plague, methicillin-resistant staphylococcal infections (MRSA), along with resistant gonorrhea and pneumococcal pneumonia, came to stand as emblematic representatives of the “revenge of the bugs,” accompanied by such “global” concerns as resistant malaria and tuberculosis, which for decades had generally been approached and discussed separately from the forms of antibiotic resistance confronted in Western hospitals and clinics (Garrett, 1994).

While the U.S. would indeed increase governmental agency funding for emerging infections throughout the decade, leadership regarding antibiotic resistance shifted to Europe by the mid-1990s. In Great Britain, further shaken by the bovine spongiform encephalopathy (BSE, or “mad cow disease”) scare, a series of expert panels, culminating in a 1998 report by the House of Lords, ultimately led to the establishment of the European Antimicrobial Resistance Surveillance System (EARSS, subsequently EARS-Net) in 2001, nine years after the formal advent of the European Union itself (1998; Greenwood, 1998; Bud, 2007a). Scandinavia came to serve as a global model for antibiotic stewardship, reflecting longstanding attention to antibiotics as precious resources. In Norway, the drug regulatory “clause of need” had been in force from the 1940s onward to maintain a low number of antibiotics on the market for clinical use (Podolsky et al., 2015). Regarding antibiotics already on the market, by the mid-1990s, Denmark, Finland, and Sweden had mounted national educational campaigns to preserve the clinical utility of such drugs (Sørensen and Monnet, 2000; Mölstad et al., 2008; Seppälä et al., 1997). And Sweden (which first eliminated the usage of antibiotics for growth promotion in food-producing animals in 1986) and Denmark (which followed suit in 1999) would take the global lead in reducing the usage of antibiotics in agribusiness, presaging the European Union’s 2006 ban on antibiotics for growth promotion, as well as increasing attention to “One Health” approaches to antibiotic usage in human and veterinary medicine in the decades to follow (with the term coming into formal use by 2004; see Gibbs, 2014; World Health Organization, 2018).

The WHO fully re-engaged with the issue of antibiotic resistance by the 1990s, convening a series of working groups and meetings on the “major public health problem in both developed and developing countries” throughout the decade (World Health Organization, 1994, p. 1; World Health Organization, 1997; World Health Organization, 1998). In 2001, it released its “Global Strategy for Containment of Antimicrobial Resistance” (note the telling yet relative shift in language around this time from “antibiotic” to “antimicrobial,” with the terms at times used interchangeably, at others to emphasize antibiotics specifically or antimicrobials more broadly), not only noting the direct health effects of antimicrobial resistance, but also beginning to draw explicit attention to the broader economic and national security implications of widespread resistance (World Health Organization, 2001). Within a quarter century after the release of the first “worldwide” statement on antibiotic misuse in Santo Domingo, the need for a shared, global approach to antibiotic resistance had become one of the central tenets of pronouncements by the WHO and others (like ReAct, founded in 2005 and continually calling for sustained, global, collaborative attention to antibiotic resistance).

In the U.S., the Infectious Diseases Society of America (IDSA) took the lead in drawing attention to the seeming inability of the pharmaceutical industry – having turned its attention from antibiotics to seemingly more profitable drugs for chronic diseases – to keep up in the arms race between drugs and bugs, releasing its 2004 report, Bad Bugs, No Drugs: As Antibiotic Discovery Stagnates … A Public Health Crisis Brews (IDSA, 2004). It would be joined in these efforts by such allies as the Pew Charitable Trusts (Pew Charitable Trusts, 2018), advocating for legislation (such as the 2012 Generating Antibiotic Incentives Now (GAIN) Act, adding five years of market exclusivity to certain newly approved products) to encourage industry to re-engage with antibiotic development.

Antibiotic resistance had thus been framed not only as a shared, global problem, but as one mandating collaborative, multi-sectoral interventions. And yet, despite such seemingly dire need and such consequent multi-sectoral enthusiasm, reformers still considered existing funding and measures insufficient to forestall the end of antibiotics. In 2013, ReAct’s guiding force, Otto Cars, along with Ramanan Laxminarayan (of the Center for Disease Dynamics, Economics, and Policy, or CDDEP) and other leading reformers, still lamented:

“Many efforts have been made to describe the many different facets of antibiotic resistance and the interventions needed to meet the challenge. However, coordinated action is largely absent, especially at the political level, both nationally and internationally. Antibiotics paved the way for unprecedented medical and societal developments, and are today indispensible in all health systems. Achievements in modern medicine, such as major surgery, organ transplantation, treatment of preterm babies, and cancer chemotherapy, which we today take for granted, would not be possible without access to effective treatment for bacterial infections. Within just a few years, we might be faced with dire setbacks, medically, socially, and economically, unless real and unprecedented global coordinated actions are immediately taken” (Laxminarayan et al., 2013, p. 1057).

Era V: “As important as climate change”, 2013–present

That same year, as though directly addressing such concerns regarding the need for coordinated political action, Dame Sally Davies, the Chief Medical Officer of Great Britain, released a pivotal report on “Infections and the Rise of Antimicrobial Resistance,” stating that “[t]here is a need in the UK to prioritise antimicrobial resistance as a major area of concern, including it on the national risk register… and pushing for action internationally, as well as in local health care services” (Davies, 2013, p. 16 [boldfaced in the original]). Buttressing such a request was her more colorful (and frequently cited) subsequent warning: “Antimicrobial resistance is a ticking time bomb not only for the UK but also for the world. We need to work with everyone to ensure the apocalyptic scenario of widespread antimicrobial resistance does not become a reality. This is a threat arguably as important as climate change for the world” (ibid). Acknowledging both the uses and potential drawbacks to such apocalyptic rhetoric, Davies and her colleagues later predicted that if antimicrobial resistance continued unchecked, “infection-related mortality rates may increase to a level comparable with those in the Victorian era” (Fowler et al., 2014; Shallcross et al., 2015).

Antimicrobial resistance was thus framed less around the present burden of disease than within an anticipatory logic of an emergency to come, a disastrous future of our own potential making or un-making, depending on our present choices. It is no accident that Davies chose to compare antimicrobial resistance to climate change, and such projected futures are both performative and generative in their construction and deployment (Brown and Michael, 2003; Podolsky and Lie, 2016). Indeed, Davies’ efforts marked another inflection point in raising global concern about (and engendering responses to) resistance. The same year that she released her report, the U.S. Centers for Disease Control released its own report on “Antibiotic Resistance Threats in the United States, 2013,” drawing attention to such newly prioritized pathogens as carbapenem-resistant Enterobacteriaceae (CRE) and multidrug-resistant Acinetobacter, which accompanied such well-known pathogens as MRSA, drug-resistant gonococci, and multidrug-resistant tuberculosis to become the new face of antimicrobial resistance in technical and popular reports (Centers for Disease Control, 2013). By 2015, the U.S. would release its own National Action Plan for Combating Antibiotic-Resistant Bacteria, signaling the need for increased coordination among U.S. agencies and for the increasing collaboration of such agencies with industry and international counterparts alike (2015).

Groups like the CDDEP drew ongoing attention to the linked, global ecology of antibiotic consumption and resistance, noting a 35% increase in worldwide antibiotic consumption between 2000 and 2010, with 76% of the increase accounted for by Brazil, Russia, India, China, and South Africa (Van Boeckel et al., 2014). At the same time, the CDDEP and others drew attention to the need to preserve or improve access to antibiotics in the developing world, even as inappropriate or excessive use was to be avoided (Årdal et al., 2016). In this context, in 2015, the same year that the United Nations (U.N.) announced its Sustainable Development Goals (including the end of poverty and hunger) to be achieved by 2030, the WHO released its own “Global Action Plan on Antimicrobial Resistance,” one year after it had released its “Global Report on [Antimicrobial Resistance] Surveillance” (WHO, 2014; idem, 2015). As WHO Director-General Margaret Chan declared in the foreword to the Global Action Plan: “Antimicrobial Resistance is a crisis that must be managed with the utmost urgency. As the world enters the ambitious new era of sustainable development, we cannot allow hard-won gains for health to be eroded by the failure of our mainstay medicines” (WHO, 2015, foreword). Antimicrobial resistance was to be approached as a shared problem of the WHO’s many member states, entailing both individual state action and more coordinated efforts with respect to improved education and surveillance, improved sanitation and infection prevention, reduction in unnecessary antimicrobial usage in both human and agricultural sectors, and sustainable investment in novel diagnostics and therapeutics. At the same time, acknowledging the need for a “one health” approach to antimicrobial resistance, the WHO cited its intention to collaborate in ongoing fashion with both the Food and Agriculture Organization (FAO) and the World Organization for Animal Health (OIE) to achieve such objectives.

This focus on collaboration and shared responsibility was soon accompanied by an increasing focus in global forecasting on the economic consequences of antimicrobial resistance (AMR). While not an entirely new concern (as noted above, it had been briefly noted in the WHO’s own 2001 Global Strategy report, and before that in its 1994 “Working Document,” and was emphasized in the World Economic Forum’s 2013 report on “The Dangers of Hubris on Human Health”; see World Economic Forum 2013), such consequences acquired additional prominence when presented alongside the U.N.’s Sustainable Development Goals. They served as a powerful economic and security rationale for confronting antimicrobial resistance for those policymakers not already sufficiently concerned about a potential return to Victorian era medicine alone. Once again, Great Britain took the lead, with its 2016 “Review on Antimicrobial Resistance,” chaired by economist Jim O’Neill, predicting that unless action were taken to forestall the trajectory of antimicrobial resistance, the global economic cost by 2050 would reach 100 trillion USD, accompanied by the loss of 10 million lives per year (O’Neill et al., 2016). The O’Neill report would be followed in early 2017 by the World Bank’s own report on “Drug Resistant Infections: A Threat to our Economic Future,” which complemented the O’Neill report by positing that unchecked antimicrobial resistance could lead to an annual 3.8% global loss of GDP by 2050, equating to 3.4 trillion dollars annually by 2030, with the poorest countries hit hardest, and representing a direct threat to the achievement of global development goals (Jones et al. 2017). Once again, an anticipatory logic of global economic slowdown helped to further heighten concerns over the impact of unchecked antimicrobial resistance.

In this context of increased attention to the linked health and economic consequences of antimicrobial resistance, the U.N., in September of 2016, convened a high-level meeting of its General Assembly devoted to antimicrobial resistance, only the fourth time such a meeting had focused explicitly on a health concern. The U.N.’s consequent “Political Declaration” affirmed the goals of the WHO’s global action plan and reaffirmed both the need for coordination among the WHO, FAO, and OIE and the relationship of antimicrobial resistance to its own sustainable development goals (President of the General Assembly, 2016). The U.N. established an Ad Hoc Interagency Coordination Group (IACG) on Antimicrobial Resistance with high-level representation; and although the U.N.’s actions to this point have not had the teeth of an international treaty or even a direct coordinating body (for such aspirations, see Årdal et al., 2016), they have seemed to fulfill the IACG’s objective “to champion and advocate for action against AMR at the highest political level” and to draw attention and funding to those with the stated mission to forestall a post-antibiotic era (Interagency Coordination Group on Antimicrobial Resistance, 2017).

Such “actions against AMR,” following the taxonomy of nearly every major report on antimicrobial resistance, have fallen into three broad categories: increasing surveillance and the actual enumeration of antimicrobial usage and resistance, reducing the incidence of antimicrobial resistance, and augmenting the supply of new antimicrobials. With respect to surveillance, long hampered by insufficient financial backing, standardization, and coordination, funding has been increased through such philanthropic groups as the Wellcome Trust and the Bill and Melinda Gates Foundation, while coordinating efforts have been led by the WHO’s Global Antimicrobial Resistance Surveillance System (GLASS), launched in 2015 (Wellcome Trust, 2015; Wellcome Trust, 2017; World Health Organization, 2017; Mayor, 2018). While fewer than a third of the WHO’s member states participate in the GLASS program to this point, the WHO appears to consider the surveillance glass half-full and the program to be in its “early, implementation phase.”

Efforts to reduce antimicrobial demand have built upon a broad range of efforts devoted to reducing antimicrobial use and overuse, ranging from sanitary and vaccination measures to decrease the incidence of infection itself, through the education and regulation of the public and the medical and veterinary professions with respect to antimicrobial prescribing (and related efforts to develop improved diagnostics to underpin a more rational therapeutics), to the further attempted reduction of antibiotic usage in agribusiness worldwide (Laxminarayan et al., 2016; Pulcini et al., 2018; World Health Organization, 2018). This remains a broad series of measures across a heterogeneous array of global actors, drawing additional attention to the very structures of daily living and medical care alike and their relationships to antimicrobial resistance (Laxminarayan and Chaudhury, 2016; Alvarez-Uria et al., 2016).

In contrast to the attention devoted to such diffuse measures to reduce antibiotic demand and usage, perhaps the most attention, and funding, has been directed to increasing the future supply of antibiotics. Measures advocated for the incentivization of industry to re-engage with antibiotic production have been described as “push” or “pull” mechanisms. “Push” mechanisms entail governmental or philanthropic support of early stage antibiotic development, and extend from such relatively longstanding support as that provided by the U.S.’s Biomedical Advanced Research and Development Authority (BARDA) to such more recent initiatives as the Combating Antibiotic-Resistant Bacteria Biopharmaceutical Accelerator (CARB-X, funded by BARDA and the Wellcome Trust) and the Global Antibiotic Research and Development Partnership (GARDP, a joint initiative of the Drugs for Neglected Diseases Initiative and the WHO). “Pull” mechanisms entail a more radical reconceptualization of the very reimbursement model for antibiotics, traditionally marketed (indeed, in the lucrative 1950s, setting the very foundation for modern pharmaceutical marketing) and reimbursed based on sales volume. Groups like DRIVE-AB (funded by the Innovative Medicines Initiative) and the Duke-Margolis Center for Health Policy, with overlapping key members, have drawn attention to the need for new funding models, with remuneration de-linked (to varying extents) from antibiotic sales volume, and incorporating various elements of entry awards (ideally, proportionate to the value of the drug) and mandated stewardship efforts (Årdal et al., 2018; Daniel et al., 2017). Such models, while still reflecting an underlying optimism in the capacity of the pharmaceutical industry to keep up in the arms race with bugs that dates back to the 1950s, nevertheless bear a sense of heightened urgency and the imprint of more recent concerns with stewardship that were generally absent six decades prior.

Conclusion

Over the past nearly seventy-five years, evolving responses to antibiotic resistance have depended on the series of linked biological and social factors described in the introduction; but the degree of attention over time has not been directly proportionate to any single factor in particular. For example, there appears to be no direct proportion between the burden (itself rarely measured) of treatable infectious disease seemingly threatened by existing resistance and the intensity of collective concern expressed at a given time. Indeed, the increasing funding and attention given to antibiotic resistance in recent years appear to have dramatically outpaced the burden of antibiotic resistance itself, and point us to other factors responsible for such increasing attention and funding: attention to emerging infections and the global movement of people and pathogens more generally, the perceived alternative priorities of the pharmaceutical industry, and the impressive momentum developed by an increasing number of actors engaged with (and framing futures around) the problem of antibiotic resistance.

This is not to state that antimicrobial resistance is not worth confronting. It is, rather, to state that such increased attention is not inevitable. Whether this momentum will be sustained in the absence of the international treaty or supranational directing group envisioned by some would-be reformers remains to be seen. Historical review has shown that the escalation of attention to antibiotic resistance necessitated a dedication to collaboration and coordination by a number of key actors extending from Stuart Levy onward, but has likewise been subject to larger political forces promoting or forestalling such attention and international collaboration. From where I write in the U.S. in 2018, this seems a less promising moment for such global engagement and collaboration than in years past.

Finally, in contemplating the measures to be taken to forestall a possible post-antibiotic era, it is instructive to see that the most funding has been directed to expanding the antibiotic pipeline, important as that may be. In many ways we share continuities from the 1950s, relying primarily on the pharmaceutical industry to keep up with resistant bugs, even if the overt optimism of that era has been replaced by an implicit optimism (if only the incentives are aligned properly) lying beneath a surface of apocalyptic warnings of post-antibiotic dystopia. Concern over antimicrobial resistance has the potential to catalyze efforts to focus our attention on sanitation and the structures of daily living, the need for global surveillance against emerging infections more generally, and the processes underlying or preventing “rational” medical and veterinary practice in both the developing and the developed world. Much as novel antibiotic classes and compounds are to be wished for, it would be unfortunate if their successful development led to a decline in attention to such larger structural factors.

Data availability

Data sharing is not applicable to this paper as no datasets were generated or analysed.

References

(1966) Infectious drug resistance. New Engl J Med 275:277

(1981) Statement Regarding Worldwide Antibiotic Misuse. In: Levy SB, Clowes RC, Koenig EL (eds) Biology, Pathogenicity, and Ecology of Bacterial Plasmids. Plenum, New York, pp 679–681

(1998) Report of the Select Committee on Science and Technology of the House of Lords. Resistance to Antibiotics and Other Antimicrobial Agents. Stationery Office, London

(2015) National Action Plan for Combating Antibiotic-Resistant Bacteria. https://obamawhitehouse.archives.gov/sites/default/files/docs/national_action_plan_for_combating_antibotic-resistant_bacteria.pdf Accessed on August 19, 2018

Alvarez-Uria G, Gandra S, Laxminarayan R (2016) Poverty and prevalence of antimicrobial resistance in invasive isolates. Int J Infect Dis 52:59–61

American Society for Microbiology (1995) Report of the ASM Task Force on Antibiotic Resistance. [Supplement to Antimicrobial Agents and Chemotherapy] www.asm.org/images/PSAB/ar-report.pdf Accessed on August 19, 2018

Årdal C et al. (2016) Antimicrobials: access and sustainable effectiveness 5: international cooperation to improve access to and sustain effectiveness of antimicrobials. Lancet 387:296–307

Årdal C et al. (2018) DRIVE-AB Report: revitalizing the antibiotic pipeline–stimulating innovation while driving sustainable use and global access. http://drive-ab.eu/wp-content/uploads/2018/01/CHHJ5467-Drive-AB-Main-Report-180319-WEB.pdf Accessed on August 19, 2018

Barber M (1947) Staphylococcal infection due to penicillin-resistant strains. Br Med J 2:863–865

Barber M (1948) The present status of penicillin. St Thomas Hosp Gaz 46:162–163

Batten LW (1955) Discussion on the use and abuse of antibiotics. Proc R Soc Med 48:355–364

Beardsley T (1986) NIH retreat from controversy. Nature 319:611

Begley S, Brant M, Wingert P, Hager M (1994, March 28) The end of antibiotics. Newsweek. pp 47–51

Berkelman RL, Freeman P (2004) Emerging infections and the CDC Response. In: Packard R, et al. (eds) Emerging Illnesses and Society: Negotiating the Public Health Agenda. Johns Hopkins University Press, Baltimore, pp 350–387

Brown N, Michael M (2003) A sociology of expectations: Retrospecting prospects and prospecting retrospects. Technol Anal Strateg Manag 15:3–18

Bud R (2007a) Penicillin: Triumph and tragedy. Oxford University Press, New York

Bud R (2007b) From germophobia to the carefree life and back again: The lifecycle of the antibiotic brand. In: Tone A, Watkins ES (eds) Medicating Modern America. New York University Press, New York, pp 17–41

Centers for Disease Control and Prevention (2013) Antibiotic resistance threats in the United States, 2013. https://www.cdc.gov/drugresistance/pdf/ar-threats-2013-508.pdf Accessed on August 19, 2018

Condrau F (2009) Standardising infection control: antibiotics and hospital governance in Britain, 1948-1960. In: Bonah C, Rasmussen A, Masutti C (eds) Harmonizing Drugs: Standards in 20th Century Pharmaceutical History. Editions Glyph, Paris, pp 327–339

Daniel et al. (2017) Value-based strategies for encouraging new development of antimicrobial drugs. https://healthpolicy.duke.edu/sites/default/files/atoms/files/value-based_strategies_for_encouraging_new_development_of_antimicrobial_drugs.pdf Accessed on August 19, 2018

Davies SC (2013) Annual report of the chief medical officer, Vol 2. Department of Health, London

D'Amelio N (1974, January) Are family doctors prescribing too many antibiotics? Med Times 102:53–61

Dowling HF, Lepper MH, Jackson GG (1955) Clinical significance of antibiotic-resistant bacteria. J Am Med Assoc 157:327–330

Finland M (1970) Changing ecology of bacterial infections as related to antibacterial therapy. J Infect Dis 122:419–431

Fleming A (1999) Penicillin [Nobel Prize Lecture, December 11, 1945]. Nobel lectures in physiology or medicine, 1942-1962. World Scientific Publishing Company, Singapore

Fowler T, Walker D, Davies SC (2014) The risk/benefit of predicting a post-antibiotic era: is the alarm working? Ann N Y Acad Sci 1323:1–10

Garrett L (1994) The coming plague: newly emerging diseases in a world out of balance. Farrar, Straus and Giroux, New York

Gibbs CW, Gibson JT, Newton DS (1973) Drug utilization review of actual versus preferred pediatric antibiotic therapy. Am J Hosp Pharm 39:892–7

Gibbs EPJ (2014) The evolution of one health: A decade of progress and challenges for the future. Vet Rec 174:85–91

Gleckman R, Madoff MA (1969) Environmental pollution with resistant microbes. New Engl J Med 281:677–8

Gradmann C (2013) Sensitive matters: The World Health Organization and antibiotic resistance testing, 1945–1975. Soc Hist Med 26(3):555–74

Gradmann C (2016) Reinventing infectious disease: Antibiotic resistance and drug development at the Bayer company, 1940-1980. Med Hist 60:155–80

Greenwood D (1998) Lords-a-leaping: The Soulsby report on antimicrobial drug resistance. J Med Microbiol 47:749–50

Hillier K (2006) Babies and bacteria: Phage typing, bacteriologists, and the birth of infection control. Bull Hist Med 80:733–61

Hussar AE, Holley HL (1954) Antibiotics and antibiotic therapy. The Macmillan Company, New York

Infectious Diseases Society of America (2004) Bad bugs, no drugs: as antibiotic discovery stagnates… a public health crisis brews. Infectious Diseases Society of America, Alexandria

Interagency Coordination Group on Antimicrobial Resistance (2017) Work plan of the ad-hoc interagency coordination group on antimicrobial resistance. http://www.who.int/antimicrobial-resistance/interagency-coordination-group/IACG-AMR_workplan.pdf Accessed on August 19, 2018

Jones O et al. (2017) Drug resistant infections: a threat to our economic future. http://pubdocs.worldbank.org/en/527731474225046104/AMR-Discussion-Draft-Sept18updated.pdf Accessed on August 19, 2018

Kirchhelle C (forthcoming) Pyrrhic progress: antibiotics in western food production, 1949–2015. Rutgers University Press, New Brunswick

Koenig E (2005) Commentary: the birth of the Alliance for the Prudent Use of Antibiotics (APUA). In: White DG, Alekshun MN, McDermott PF (eds) Frontiers in antimicrobial resistance: a tribute to Stuart Levy. American Society of Microbiology, Washington, D.C., pp 517–518

Lasagna L (1976, November 17) An open letter on colds and antibiotics, Part II. Med Trib 17:1

Laxminarayan R, Chaudhury RR (2016) Antibiotic resistance in India: Drivers and opportunities for action. PLoS Med 13(3):e1001974

Laxminarayan R et al. (2013) Antibiotic resistance–the need for global solutions. Lancet Infect Dis 13:1057–98

Laxminarayan R et al. (2016) Access to effective antimicrobials: A worldwide challenge. Lancet 387:168–75

Lederberg J (1988) Medical science, infectious disease, and the unity of humankind. J Am Med Assoc 260:684–685

Lederberg J, Oaks SC Jr. (eds) (1992) Emerging infections: Microbial threats to health in the United States. National Academy Press, Washington, D.C.

Lesch JE (2007) The first miracle drugs: how the sulfa drugs transformed medicine. Oxford University Press, New York

Levy SB (1983) APUA: history and goals. APUA Newsl 1:1

McKenna M (2017) Big chicken: the incredible story of how antibiotics created modern agriculture and changed the way the world eats. National Geographic Partners, LLC, Washington, D.C.

Mayor S (2018) First WHO antimicrobial surveillance data reveal high levels of resistance globally. BMJ 360:k462

Mölstad S et al. (2008) Sustained reduction of antibiotic use and low bacterial resistance: 10-year follow-up of the Swedish Strama program. Lancet Infect Dis 8:125–32

Neu HC (1992) The crisis in antibiotic resistance. Science 257:1064–72

O’Neill J et al. (2016) Tackling drug-resistant infections globally: final report and recommendations (The Review on Antimicrobial Resistance). https://amr-review.org/sites/default/files/160518_Final%20paper_with%20cover.pdf Accessed on August 19, 2018

Osmundsen JA (1966, October 18) Are germs winning the war against people? Look. pp 140–141

Pew Charitable Trusts (2018) Antibiotic resistance project. http://www.pewtrusts.org/en/projects/antibiotic-resistance-project Accessed on August 19, 2018

Podolsky SH (2015) The antibiotic era: reform, resistance, and the pursuit of a rational therapeutics. Johns Hopkins University Press, Baltimore

Podolsky SH et al. (2015) History teaches us that confronting antibiotic resistance requires stronger global collective action. J Law Med Ethics 43: (Suppl 3) 27–32

Podolsky SH, Lie AK (2016) Futures and their uses: Antibiotics and therapeutic revolutions. In: Greene JA, Condrau F, Watkins ES (eds) Therapeutic revolutions: pharmaceuticals and social change in the twentieth century. University of Chicago Press, Chicago, pp 18–42

President of the [United Nations] General Assembly (2016) Political declaration of the high-level meeting of the general assembly on antimicrobial resistance. https://digitallibrary.un.org/record/842813/files/A_71_L-2-EN.pdf Accessed on August 19, 2018

Pulcini C et al. (2018) Developing core elements and checklist items for global hospital antimicrobial stewardship programmes: a consensus approach. Clin Microbiol Infection https://doi.org/10.1016/j.cmi.2018.03.033 Accessed on August 19, 2018

Roberts AW, Visconti J (1972) The rational and irrational use of systemic antimicrobial drugs. Am J Hosp Pharm 29:828–34

Seppälä H et al. (1997) The effect of changes in the consumption of macrolide antibiotics on erythromycin resistance in group A Streptococci in Finland. New Engl J Med 337:441–6

Shallcross LJ, Howard SJ, Fowler T, Davies SC (2015) Tackling the threat of antimicrobial resistance: From policy to sustainable action. Philos Trans R Soc B 370:20140082

Simmons HE, Stolley PD (1974) This is medical progress? Trends and consequences of antibiotic use in the United States. JAMA 227:1023–28

Sørensen TL, Monnet D (2000) Control of antibiotic use in the community: the Danish experience. Infect Control Hosp Epidemiol 21:387–9

Summers WC (2008) Microbial drug resistance: a historical perspective. In: Wax RG, Lewis K, Salyers AA, Taber H (eds) Bacterial resistance to antimicrobials, 2nd edn. CRC Press, Boca Raton, pp 1–9

Thoms U (2012) Between promise and threat: antibiotics in food in Germany, 1950–1980. Ntm Z für Gesch der Wiss, Tech und Med 20:181–214

U.S. Congress, Office of Technology Assessment (1995) Impacts of antibiotic-resistant bacteria. U.S. Government Printing Office, Washington, D.C.

U.S. Task Force on Prescription Drugs (1969) Final report. U.S. Government Printing Office, Washington, D.C.

Van Boeckel TP et al. (2014) Global antibiotic consumption 2000 to 2010: an analysis of national pharmaceutical sales data. Lancet Infect Dis 14:742–50

Watanabe T (1963) Infective heredity of multiple drug resistance in bacteria. Bacteriol Rev 27:87–115

Wellcome Trust (2015) Fleming fund launched to tackle global problem of drug-resistant infection. https://wellcome.ac.uk/press-release/fleming-fund-launched-tackle-global-problem-drug-resistant-infection Accessed on August 19, 2018

Wellcome Trust (2017) Global pledges to speed up action on superbugs. https://wellcome.ac.uk/news/global-pledges-speed-action-superbugs Accessed on August 19, 2018

World Health Organization (1976) Public health aspects of antibiotic-resistant bacteria in the environment: report on a consultation meeting, Brussels, 9–12 December 1975. WHO Regional Office for Europe, Copenhagen

World Health Organization (1978) Surveillance for the prevention and control of health hazards due to antibiotic-resistant enterobacteria: report of a WHO meeting. World Health Organization, Geneva

World Health Organization (1994) WHO scientific working group on monitoring and management of bacterial resistance to antimicrobial agents. World Health Organization, Geneva

World Health Organization (1997) The medical impact of the use of antimicrobials in food animals: Report of a WHO Meeting. World Health Organization, Geneva

World Health Organization (1998) The current status of antimicrobial resistance in Europe: report of a WHO workshop held in collaboration with the Italian Associazione Culturale Microbiologia Medica. World Health Organization, Geneva

World Health Organization (2001) WHO global strategy for containment of antimicrobial resistance. World Health Organization, Geneva

World Health Organization (2014) Antimicrobial resistance global report on surveillance. World Health Organization, Geneva

World Health Organization (2015) Global action plan on antimicrobial resistance. World Health Organization, Geneva

World Health Organization (2017) Global Antimicrobial Resistance Surveillance System (GLASS) Report: early implementation, 2016–2017. http://apps.who.int/iris/bitstream/handle/10665/259744/9789241513449-eng.pdf?sequence=1 Accessed on August 19, 2018

World Health Organization (2018) WHO guidelines on use of medically important antimicrobials in food-producing animals. http://apps.who.int/iris/bitstream/handle/10665/258970/9789241550130-eng.pdf?sequence=1 Accessed on August 19, 2018

Ethics declarations

Competing interests

The author declares no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Podolsky, S.H. The evolving response to antibiotic resistance (1945–2018). Palgrave Commun 4, 124 (2018). https://doi.org/10.1057/s41599-018-0181-x
