Abstract

A number of barriers have been identified to getting evidence into policy. In particular, a lack of policy relevance and a lack of timeliness have been identified as causing tension between researchers and policy makers. Rapid reviews are increasingly used to address timeliness; however, there is a lack of consensus on the most effective review methods and they do not necessarily address the needs of policy makers. In the course of our work with the Scottish Government’s Review of Maternity and Neonatal Services we developed a new approach to evidence synthesis, which this paper describes. We developed a standardised approach to produce collaborative, targeted and efficient evidence reviews for policy making. This approach aimed to ensure that the reviews were policy relevant, high quality and up-to-date, and that they were presented in a consistent, transparent and easy to access format. The approach involved the following stages: 1) establishing a review team with expertise both in the topic and in systematic reviewing, 2) clarifying the review questions with policy makers and subject experts (i.e., health professionals, service user representatives, researchers) who acted as review sponsors, 3) developing review protocols to systematically identify quantitative and qualitative review-level evidence on effectiveness, sustainability and acceptability; if review level evidence was not available, primary studies were sought, 4) agreeing a framework to structure the analysis of the reviews around a consistent set of key concepts and outcomes; in this case a published framework for maternal and newborn care was used, 5) developing an iterative process between policy makers, reviewers and review sponsors, 6) rapid searches and retrieval of literature, 7) analysis of identified literature which was mapped to the framework and included review sponsor input, 8) production of recommendations mapped to the agreed framework and presented as ‘summary topsheets’ in a consistent and easy to read format. Our approach has drawn on different components of pre-existing rapid review methodology to provide a rigorous and pragmatic approach to rapid evidence synthesis. Additionally, the use of a framework to map the evidence helped structure the review questions, expedited the analysis and provided a consistent template for recommendations, which took into account the policy context. We therefore propose that the approach described in this paper produces collaborative, targeted and efficient evidence reviews for policy makers.

Background

Since the late 1990s there has been a growing acknowledgement by practitioners and policy analysts that policy should be evidence-based (Black, 2001). However, gaps between evidence and policy have been documented across diverse areas including early years education (Parker, 2013), healthcare services (Knight et al., 2016; Dickinson et al., 2011) and climate change (Tompkins et al., 2010). Moves have been made to improve this state of affairs, and a number of “supply” and “demand” barriers have been identified.

Barriers to evidence-based policymaking

First, with regard to the supply of evidence, Rutter (2012) reported that a key issue is that research is not always timely enough in providing answers to relevant and pressing policy questions. Academic research by its nature works on very different timescales to governments and policy makers. For instance, large-scale trials will generally take 5–10 years to develop, implement, interpret and publish, whilst governments work on timescales defined by a term of office which is unlikely to be longer than five years, which may result in a focus on ‘quick fixes’ (Head, 2010). In addition, many of the issues with which government is concerned are not necessarily amenable to rigorous testing, for example through a randomised controlled trial (RCT), and the emphasis on the hierarchy of evidence and the use of RCTs in policy-making has been the subject of criticism within the literature (Parkhurst and Abeysinghe, 2016). The assumption that RCTs are never feasible in a policy context is questioned by La Caze and Colyvan (2016), who note that, in the right circumstances, RCTs can be useful in determining the effectiveness of a policy; their use has been recommended in policy evaluations wherever possible (Oliver et al., 2010). A ‘plurality’ of evidence is necessary, however, to combine evidence on effectiveness with additional information on acceptability, context and feasibility (Pearce and Raman, 2014). Other supply side barriers can include a lack of effective engagement with the policy process by researchers and funding bodies, and differences in approaches to defining and answering questions by policy makers and researchers (Rutter, 2012).

In addition to these supply problems, there may also be some problems related to the lack of demand for evidence (McCrae et al., 2012; Rutter, 2012). One key factor that may inhibit the demand for evidence is the perception of a mismatch between the timetable of political activity and timelines of evidence production (Rutter, 2012). Other beliefs of policy makers that can act as a barrier include: that political decisions can be influenced by perceived values rather than outcomes; that research conducted in a different (albeit relevant) setting is not applicable locally (Black, 2001); that the wider societal environment may not be responsive to change; that there is no consensus view on the research findings (e.g., due to different interpretations of results, incomplete or inconsistent evidence); that other forms of evidence are preferable, including the opinions of eminent professionals, surveys of people’s views and local information on services (Black, 2001); and that the evidence is not presented in a way that civil servants can understand or use (Harvey, 2013).

Approaches to evidence synthesis

One approach to evidence synthesis is a systematic review. Systematic review methods, which include robust approaches to searching, appraisal and synthesis of the literature, are increasingly being used in preference to traditional literature reviews, due to their ability to reduce bias, provide more reliable results and synthesise complex information in an understandable manner (Dixon-Woods et al., 2006). More specifically, systematic reviews aim to identify all the available empirical evidence that meets pre-specified inclusion/exclusion criteria to address a specific question (Higgins and Green, 2011). Different approaches to systematic reviewing exist (e.g., the Cochrane Collaboration, EPPI Centre and Joanna Briggs Institute) and while there are some differences in the specifics of the methods and the nature of the evidence included (i.e., RCTs, mixed methods, qualitative methods), all of these approaches to systematic reviewing are explicit and take steps to minimise bias within the review process. Together with an assessment of the risk of bias within the included studies, conclusions can be drawn regarding the strength of the evidence. Finally, results are either pooled together statistically (i.e., meta-analysis) or synthesised using a range of qualitative approaches (e.g., thematic analysis, meta-synthesis, meta-ethnography) to provide a summary of the studies. Whilst systematic review methodology was originally developed in the healthcare field to enable practitioners and policy makers to make evidence-informed decisions, the approach is now widely considered to be the gold-standard for evidence-based policy and practice and is increasingly used in fields beyond healthcare (Haddaway and Bilotta, 2016).

Systematic reviews do face a number of criticisms, however. First, it is not uncommon for systematic reviews to conclude that there is either limited or poor quality evidence upon which to make a decision (Petticrew, 2003). This can be particularly problematic in reviews which only aim to include randomised controlled trials (RCTs). In some fields RCTs are not feasible or possible and observational studies may be the only form of evidence. Moreover, the use of qualitative studies may also be of importance to try and identify the acceptability of services and facilitate the distillation of not only what works but also why and for whom, information that may arguably be relevant and important for policy makers (Rycroft‐Malone et al., 2004). Indeed, mixed-methods approaches which combine quantitative and qualitative findings are being increasingly recognised by programme evaluators who aim to assess complex problems and interventions (Head, 2010) and systematic review methods now exist for the synthesis of observational studies (Stroup et al., 2000), qualitative studies (Dixon-Woods et al., 2006; Thomas and Harden, 2008) and mixed-methods studies (Thomas et al., 2004).

Whilst alternative approaches to systematic reviews exist that aim to combine different forms of evidence, they are also not without issue. For instance, realist reviews, which are rooted in the philosophical principle of realism and have the laudable aim of understanding the mechanism of action in why an intervention may succeed or fail (Pawson et al., 2005), do have limitations that are particularly relevant to evidence-based policy-making. First, there are a limited number of researchers with expertise in realist reviews (Pawson et al., 2005) and few published examples of how to conduct such reviews (Rycroft–Malone et al., 2012). Secondly, as the realist review aims to identify what works within specific contexts, the results may not be generalisable outwith these contexts (Rycroft–Malone et al.). Thirdly, the process of conducting a review is complex and therefore can be time and resource intensive, which has cost implications (Rycroft–Malone et al.). Although attempts have been made to streamline the process, in the form of rapid realist reviews which incorporate the use of expert groups to expedite the review, these necessitate considerable methodological compromises such as a non-exhaustive literature search which relies on articles known to the authors (Saul et al., 2013).

Another criticism of systematic reviews, as with many forms of research, is the time they take to complete and the resources required. For instance, by reviewing the PROSPERO database of systematic reviews, Borah et al. (2017) estimated that the mean project length of a systematic review was 67.3 weeks. Whilst this is arguably a relatively crude estimate, as it will be influenced by journal publishing times and does not take into account the time spent developing the protocol, it does suggest that the production of a systematic review is a lengthy process. As discussed, lengthy duration of research can act as a barrier (Petticrew et al., 2004) and there is a need to balance the timeliness of the evidence review process with the maintenance of rigour.

Whilst a number of approaches to addressing this timeliness have been developed, as yet there is not one universally recognised approach. Solutions to the problem of timeliness tend to adopt approaches that streamline the systematic review process. Rapid reviews have used a range of techniques to streamline the review process, including: using highly focused questions; less sophisticated search strategies; using only highly processed evidence (i.e., reviews of reviews); restricting or omitting grey literature searching; limiting the number of variables assessed; and omitting or conducting only a basic quality assessment (Grant and Booth, 2009). There is, however, a lack of consensus in the literature regarding both nomenclature and methodology (Ganann et al., 2010). Indeed, Khangura et al. (2012) note that there is “no universally accepted definition of a rapid review” (p 2). Crucially, in a systematic evaluation of rapid review methods, Ganann et al. reported that very few of the 70 identified rapid reviews explicitly addressed the issue of what was omitted from the review process and how bias could have been introduced. Nevertheless, Watt et al. (2008) compared differences in the methodologies and essential conclusions between full reviews and rapid reviews on the same topic and concluded that whilst there were differences in the methods used and the scope of the rapid reviews was narrower, the core conclusions did not differ extensively. Attempts have also been made to streamline the realist review process, in the form of rapid realist reviews which incorporate the use of expert groups to expedite the review.

Another approach to evidence synthesis that also aims to provide results in a relatively short time-frame with limited resources is reviews of reviews (Caird et al., 2015). The approach described by Caird et al. is particularly well suited when broad questions are being asked that necessitate the inclusion of different forms of evidence (e.g., reviews of trials as well as meta-synthesis of views and experiences). However, such an approach is of limited value when review level evidence does not exist or is of a poor quality and would necessitate alternative means of identifying primary studies.

Evidence briefs, which have been used by the World Health Organization, combine systematic review evidence with local data (Moat et al., 2014). The findings are presented in a summary document that forms the basis of ‘deliberative dialogues’ between researchers, stakeholders and policy makers. Whilst this approach has been well received by those involved (Moat et al., 2014), it does have some limitations. First, the full report does not describe the methods used to identify, select, appraise or extract data from the studies. Secondly, recommendations are not provided; instead, different policy options are described. Thirdly, stakeholder involvement occurs after the evidence brief has been developed.

In summary, it is evident that this gap between evidence and policy is maintained by a wide range of barriers. These include: 1) lack of relevance of evidence to policy, 2) policy questions that cannot always be appropriately answered using traditional experimental methodologies, 3) poor question definition which does not allow for the breadth of scope necessary for policy makers, 4) lengthy duration of studies or reviews of studies, 5) an outcomes driven approach versus a values driven approach, 6) mismatch between the context of research and the policy context, 7) unclear or difficult to interpret research findings, 8) lack of consideration of information obtained from other potentially relevant sources that would consider how policy may be received publicly, and 9) lack of skills in the interpretation and collation of evidence within the civil service. Although approaches to synthesise evidence that can be used in a policy making context do exist, they all have limitations and there is still no consensus on the most effective approach. In order to balance the needs of policy makers whilst maintaining rigour, a pragmatic approach is necessary. To address this in our work to inform the Scottish Government’s Review of Maternity and Neonatal Services, we developed an approach to rapid evidence synthesis that draws upon existing approaches and addresses their limitations. This paper will now detail our approach, entitled ‘Collaborative targeted efficient evidence reviews for policy makers’.

Developing methodology for evidence-informed policy: the example of the Scottish Government’s Review of Maternity and Neonatal Services

This national Review of Maternity and Neonatal Services was intended to inform service provision over the next decade and beyond, but it had to conduct its work within a one-year timescale. The main Review group consisted of healthcare professionals, representatives from the Scottish Government, third sector representatives and academics. The main Review group then developed the following four working sub-groups: maternity models of care, neonatal models of care, evidence and data, and workforce planning and development. These sub-groups consisted of over 100 individuals also working in maternity and neonatal services (frontline staff and managers) as well as academics, third sector workers and service user representatives. Information gathered to inform the main Review group’s recommendations included a national stakeholder engagement exercise with staff, service users and third sector organisations, the production of reports and recommendations from the sub-groups, and a series of evidence reviews, all of which had to be completed within a six-month period.

Collaborative targeted efficient evidence review methods

Given the lack of consensus on rapid review methods and the longstanding difficulties of getting evidence into practice, when invited to provide this series of rapid reviews for the Scottish Government’s Review of Maternity and Neonatal Services we developed a pragmatic approach to fast evidence reviews for policymaking that utilised components of rapid reviews, evidence briefs, reviews of reviews and rapid realist reviews. Like rapid realist reviews (Saul et al., 2013), our approach involved a high level of stakeholder engagement throughout the process, which helped to address the barriers to getting evidence into policy. We also attempted to address some of the limitations of rapid realist reviews (see Saul et al., 2013), which have previously made compromises on rigour (i.e., not conducting a literature search) or timeliness (i.e., requiring a six-month timescale), by utilising high quality processed evidence in the form of reviews of reviews (Caird et al., 2015; Moat et al., 2014). In addition, we used components of rapid reviews to identify primary studies (where systematic reviews were absent), such as highly focused database searching and single person study selection and data extraction (Grant and Booth, 2009). By doing this we ensured that the evidence reviews were policy relevant, high quality, up-to-date and delivered in a timely manner. Moreover, as the vast majority of approaches to rapid evidence synthesis do not explicitly state what compromises in methodological rigour were made (Ganann et al., 2010), our reviews were presented in a transparent manner which clearly outlined any compromises. Finally, to aid policy makers’ use of the reviews, the review conclusions and recommendations were presented in a consistent and easy to access format alongside a technical report. In order to achieve this an eight-stage approach was developed (see Table 1).

Stage 1: Establishing a review team

Crucial to the project’s success was establishing a core review team that was experienced in working with policy makers and included both subject experts and experts in systematic review methodology. Additionally, the systematic review experts also had some subject knowledge of maternity and neonatal services organisation and the subject experts had experience and knowledge of conducting systematic reviews. Importantly, as the two review experts had never worked in a maternity services context, it was believed that they could challenge any possibility of bias in the selection or interpretation of findings. Specifically, the team contained a Professor in mother and infant health who was also a member of the Evidence and Data sub-group and the main Review group, a Consultant Midwife who worked as an Educational Projects Manager at NHS Education for Scotland and was also a member of the maternity sub-group, and a Senior Lecturer and post-doctoral researcher in evidence synthesis. In addition, the review team had support from very engaged civil servants who were embedded in the whole review process. More specifically, they managed the fast pace of the review, were part of the discussions on the evidence reviews and were involved in the writing of the Government report. This good working relationship was crucial in bridging the gap between evidence and policy.

Stage 2: Clarifying the review questions

The first stage of the review process was identifying the questions for the evidence reviews. Each of the three working sub-groups was asked to provide a list of questions they believed required evidence to help them develop recommendations for the review. This was very much an iterative process in which the scope of questions was refined by the review team (i.e., some questions were lumped together and others were split) and then presented to the sub-groups for consultation before being finalised by the review team. This process ensured that questions were relevant to policy and clearly defined.

The aim of the government Review was to provide guidance on developing a model of care for maternity and neonatal services. However, it became apparent early on that different members of the sub-groups had different definitions of ‘model of care’ and this was reflected in the review questions put forward. In order to ensure consistency, a literature search of definitions for a model of care was conducted. Only one generic definition was identified, and it was adopted for use within the reviews. Specifically, Davidson et al. (2006) define a model of care as “an overarching design for the provision of a particular type of health care service that is shaped by a theoretic basis, EBP (evidence-based practice) and defined standard” (p 49).

The broad focus of the reviews was around models of maternity and neonatal care that would be applicable in different contexts to take into account geographical and population differences within Scotland. Each of the evidence reviews therefore aimed to distil core principles and practice recommendations that could lead to improved care, services, outcomes and experiences across the continuum for all childbearing women, babies and families. Questions on highly specific aspects of clinical care which would require individual systematic reviews were considered beyond the scope of these reviews. We therefore asked three generic questions in each of the evidence reviews:

- What is the optimal model of care (i.e., the overarching design and values of the service)?
- How should care be organised?
- What are the characteristics of care providers that lead to improved outcomes and experiences?

These questions were then applied to the following topic areas at the request of the sub-groups:

- Models of care for women requiring maternity critical care
- Models of care for infants requiring neonatal services and their families
- Improving care, services, and outcomes for women and babies from vulnerable population groups
- Continuity models of care
- Place of maternity care, including place of birth
- Organisation of services for childbearing women across the continuum (including methods for assessment/triage in early labour)

The one exception to this was a review requested by the workforce sub-group on strategies to improve interprofessional working, which by its nature did not fit this approach and was amended to focus on identifying barriers to interprofessional working and strategies to improve it.

Once the questions had been finalised, each sub-group was asked to nominate members of their group to be review sponsors to act as subject experts and provide input into the development of each of the reviews.

Stage 3: Development of a generic review protocol

In order to ensure consistency and efficiency, a generic review protocol which outlined the review methods was developed by the review team (see additional file 1 for an example review). In addition to obtaining traditional evidence on outcomes in the form of RCTs and well-conducted observational studies, the protocol also outlined strategies to obtain different forms of research that explored opinions and experiences of stakeholders (e.g., focus groups, in-depth or semi-structured interviews, ethnographic studies). The evidence on outcomes and experiences was considered together when making recommendations. For instance, if a specific approach was found to be effective in changing outcomes but not acceptable to stakeholders, this would be made explicit.

Given the short time frame, the aim was to quickly identify the best available highly processed evidence and we used a hierarchical approach to do this (see stage 6). A pre-existing generic review protocol produced by NICE (2014) was used to document the methods as it was concise and transparent. The protocol included information that would also be found in a standard systematic review: inclusion/exclusion criteria, search strategy and approach to critical appraisal and synthesis.

Stage 4: Agreeing a framework

Whilst developing the protocol it quickly became apparent that having a framework would be necessary to help structure the analysis/synthesis of the included studies and provide consistency between the evidence reviews. To the best of our knowledge, this is a novel aspect of our approach. Given that the policy focus was on the needs of women and babies, the framework had to reflect this. A pre-existing framework was available; specifically, we used the framework for quality maternal and newborn care which was informed by a synthesis of findings from systematic reviews of women’s views and experiences, effective practices, and maternal and newborn care providers (Renfrew et al., 2014). The core components of this framework were effective practices, organisation of care, values, philosophy and characteristics of care providers. It therefore included consideration not only of what should be done (practices), but also how it should be provided in regard to organisation and to values and philosophy, and who should provide the care (see Fig. 1).

Framework for Quality Maternal and Newborn Care. This figure is not covered by the Creative Commons Attribution 4.0 International License. Reprinted from The Lancet, Vol. 384, Renfrew MJ, Mcfadden A, Bastos MH, Campbell J, Channon AA, Cheung NF, Silva DRAD, Downe S, Kennedy HP, Malata A., Mccormick F, Wick L, Declercq E, Midwifery and quality care: findings from a new evidence-informed framework for maternal and newborn care, p 1132, Copyright (2014), with permission from Elsevier

Stage 5: Individual review protocols

The generic protocol was then tailored for each individual review question identified in Stage 2. These were developed and agreed upon in conjunction with the review sponsors who provided guidance on the inclusion/exclusion criteria, grey literature searching and sources of primary literature if needed (see supplementary material for example protocol). This contrasts with the approach detailed by Moat et al. (2014), whereby stakeholders were only consulted at the end of the process. This also ensured that the evidence reviews met the sub-group’s needs and were relevant to policy.

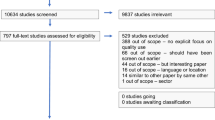

Stage 6: Rapid searches and retrieval of the literature

A hierarchical approach to searching the literature was utilised to identify the best available evidence as efficiently as possible. Given that Cochrane reviews are traditionally considered one of the most robust forms of systematic reviews of trials of interventions (Smith, 2013), we first searched the Cochrane Library to identify any reviews that could answer questions of effectiveness. If none were available we then did a highly focused search for relevant systematic reviews in core databases (i.e., MEDLINE, CINAHL). As the review recommendations also had to take into account the views and experiences of women and their families, the databases were searched for meta-syntheses which would address qualitative questions. In order to guard against obtaining large volumes of records that would need to be screened, we applied systematic review search filters which limited the results to systematic reviews and meta-syntheses only. To preserve efficiency, one reviewer was responsible for study selection (i.e., screening of titles and retrieval of texts); however, regular consultation with other reviewers took place to discuss the application of the inclusion criteria.

In addition, relevant clinical guidelines published by the National Institute for Health and Care Excellence (NICE) and the Scottish Intercollegiate Guidelines Network (SIGN) and any guidance/reports provided by professional organisations (e.g., the Royal College of Obstetricians and Gynaecologists, Royal College of Midwives) were also included. Unlike other approaches to rapid reviews which only include review level evidence (Caird et al., 2015), we had a system in place to identify pertinent primary literature where no highly processed evidence was available. Such literature was identified by searching the reference lists of non-systematic reviews found through the search strategy outlined above and through the recommendations of review sponsors. This approach also ensured that our reviews were up-to-date with any new studies that would not have been captured in the highly processed evidence. However, as this approach does have the potential to introduce bias, we were explicit as to how any included primary studies were identified (i.e., from reference lists or through sponsors).
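The tiered search logic described in this stage can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the tooling used in the Review: the `Record` type, the `hierarchical_search` function, the tier labels and the stub search functions are all hypothetical, and the sketch simplifies the process (for example, it does not model the separate inclusion of clinical guidelines or the parallel search for meta-syntheses on qualitative questions). It shows two ideas from the text: lower tiers are consulted only when higher tiers return nothing, and every record carries a label stating how it was identified.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Record:
    title: str
    identified_via: str  # provenance label, reported alongside the record


# A source pairs a provenance label with a search function (both hypothetical).
Source = Tuple[str, Callable[[str], List[str]]]


def hierarchical_search(question: str, tiers: Sequence[Sequence[Source]]) -> List[Record]:
    """Search tiers in order and return the results of the first tier that finds anything."""
    for tier in tiers:
        found = [
            Record(title, label)
            for label, search in tier
            for title in search(question)
        ]
        if found:
            return found  # lower tiers are consulted only when higher ones are empty
    return []


# Stub searches standing in for real database queries (assumed for illustration).
demo_tiers = [
    [("cochrane_review", lambda q: [])],
    [("systematic_review", lambda q: []), ("meta_synthesis", lambda q: [])],
    [("reference_list", lambda q: ["Study A"]), ("review_sponsor", lambda q: ["Study B"])],
]
results = hierarchical_search("example question", demo_tiers)
for record in results:
    print(record.title, "identified via", record.identified_via)
```

Keeping the provenance label on each record mirrors the commitment above to be explicit about whether a primary study came from a reference list or from a review sponsor.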

Stage 7: Analysis

Data were extracted using standardised data extraction forms produced by the Joanna Briggs Institute (2014). To maintain efficiency, extraction was conducted by one reviewer who consulted the other reviewers for discussion as needed. In order to establish the quality of the evidence that any recommendations were based upon, critical appraisal was conducted using the NICE quality assessment checklists which enabled us to make a relatively quick but robust assessment.

The results of the included reviews and studies were then mapped to the core components of the framework for quality maternal and newborn care (Renfrew et al., 2014) and consideration was given to their relevance to the policy context. As primary studies were only included when review level evidence was not available, these different forms of evidence were analysed separately. At this point the review sponsors were provided with a draft of the review and were asked to identify any key studies that had been omitted and to consider whether they agreed with our interpretation of the results. This work was then presented back to the relevant sub-group in draft form for scrutiny to ensure the work was reflective of a wider range of stakeholders. Once this was finalised we continued to work with review sponsors to develop recommendations which were policy relevant and aligned to the components of the framework under the following headings:

- Practices
- Values and Philosophy
- Organisation of care
- Characteristics of care providers

Stage 8: Production of top sheets

Given the breadth of evidence reviewed, the fully completed reviews were lengthy and thus it would not be feasible for policy makers to read the entire document. We therefore developed top sheets to accompany the full reviews. The template for these top sheets was developed by the core review team, who agreed that the following information would both help policy makers make an evidence informed decision and be quick to read: the amount and nature of the evidence identified (i.e., type of review, primary studies) as well as the strength of that evidence, key findings, and recommendations. The recommendations were developed using the evidence identified, taking into account effect sizes, the views of women, families and healthcare professionals, and study quality. Recommendations were all mapped to the framework as outlined in stage 7 and initially developed by the review team. They were then reviewed by the review sponsors and sub-groups, who provided guidance on the feasibility and acceptability of the recommendations. This approach provided a consistent structure in 2–3 clearly-written pages (see supplementary material for example review). These top sheets were used along with the results of the engagement exercise and reports of the sub-groups by the Review chair, deputy chairs and civil servants to produce the report for government Ministers.

Discussion

Strengths of the approach

This approach enabled us to address many of the previously identified barriers to getting evidence into policy by drawing on the strengths of different pre-existing approaches to evidence synthesis. A key strength of our efficient evidence reviews was working with civil servants who were embedded in all stages of the review process. This facilitated timely working between the core review team and sub-groups and ensured the civil servants had a knowledge and understanding of the findings of the evidence reviews which they could apply to writing up the Government report. A further key strength was the involvement of policy makers, service users, and NHS staff and managers, both in developing the review questions to ensure policy relevance and in interpreting the evidence to produce realistic, clear and context-sensitive recommendations. The high level of involvement of review experts from outwith the topic area provided reassurance in regard to objectivity. Another strength was the plurality of evidence (e.g., qualitative studies and quantitative studies) which went beyond traditional experimental methods and considered outcomes as well as values. We were fortunate that a wealth of qualitative studies on the views and experiences of stakeholders was available as this enabled us to align our work closely to the needs of policy makers, which is necessary to ensure evidence gets into policy (Petticrew et al., 2004). However, we do acknowledge that in other areas there is a lack of published qualitative studies (Greenhalgh et al., 2016), and in such cases the need for a well engaged and representative stakeholder group is even greater.

Trade-offs

Given that this review was conducted in a short time period with limited resources, we needed to strike a balance between rigour and timeliness. To manage this tension a number of trade-offs were made. First, our search strategy was highly focused and involved only a small number of core databases. Whilst this does raise the possibility of missing relevant studies, in particular non-RCTs, which are less likely to be indexed in major databases, we would expect this number to be small. For instance, a review of Cochrane reviews identified that searching an additional 26 databases beyond MEDLINE, EMBASE and the Cochrane Controlled Trials Register only identified an additional 2.4% of trials (Royle and Milne, 2003). In addition, we attempted to guard against this by asking subject experts to check all included studies and ensure no highly relevant studies had been omitted. This also helped guard against omitting key primary studies which may not have been identified in the systematic review level evidence. Secondly, the screening of titles and abstracts and then full-texts was performed by one reviewer. Whilst it is acknowledged that single person study selection can increase the risk of making mistakes and is influenced by the individual’s bias (Higgins et al., 2013), the ready access to consultation with other review team members and the checking of the included studies with the review team and review sponsors helped to guard against the omission of any key studies or the inclusion of inappropriate studies. Moreover, we used mainly highly processed evidence that had already been through stringent quality control measures. Thirdly, data extraction was only performed by one reviewer and it is recognised that single data extraction is associated with more errors (Buscemi et al., 2006).

Quality control

Whilst this approach did necessitate some compromises, it is unlikely that the broad conclusions would differ greatly from those of a full systematic review had one been conducted, although a full systematic review may arguably enhance the precision of findings. This can in part be attributed to the fact that we were able to use high quality systematic reviews that had already been published as the main component of our review. However, if such reviews were not available, this approach would be of limited value and would potentially necessitate the conduct of new systematic reviews to answer the research questions.

In addition to working closely with subject experts in the form of review sponsors on all stages of the review, we implemented a number of other safeguards to ensure that the process and recommendations were robust and relevant. These included using a pre-constructed evidence-informed framework to structure the analysis and inform the development of recommendations, quality appraisal of the evidence, and developing a quick and understandable document for policy makers to read. Finally, all decisions and compromises made were explicitly reported so it was easy to identify where any possible limitations of the evidence might be. This is in contrast with many rapid reviews which do not state where compromises in rigour or key decisions were made (Ganann et al., 2010). Therefore, we are confident that whilst the evidence could be incomplete, it was unlikely to be either wrong or harmful.

Application of methods in other settings

This work could currently be considered a case study of evidence synthesis within a health policy context. Whilst single case studies have previously been described as not generalisable, Flyvbjerg (2006) argues that with careful selection, some case studies can be generalisable. We argue that this piece of work can be considered generalisable for the following reasons. First, it involved multiple evidence reviews which addressed a range of topics, including specific clinical questions as well as broader socio-cultural questions. Secondly, maternity and neonatal services provide care for families across a spectrum of need (i.e., women with no complications for whom birth is a normal physiological event and women with complex medical or social care needs), and this is an area with which the vast majority of the population come into contact at some point in their life.

We therefore believe this approach can be applied to other areas of health policy and, with some adaptation, to other areas of public policy. For instance, for reviews within health the core components of the framework for quality maternal and newborn care could be adapted. However, in other areas (e.g., education, criminal justice) a different framework would likely be necessary. Stage 4 of the methodology could be adapted to include either a process of identification of a pre-existing framework relevant to the topic area or the development of a new framework. This process would involve examining the existing literature and consultation with policy makers and other stakeholders to develop, test and agree upon a framework. For instance, ecological systems theory (Bronfenbrenner and Morris, 2007) has been adapted and applied to policy in a range of areas including violence prevention (Krug et al., 2002), tackling social inequities (Dahlgren and Whitehead, 2006) and early years education (Ryan, 2001).

A final caution is that this approach was developed in a health policy context, which accepts the need for evidence-based practice, an idea dating back to the advent of evidence-based medicine (Black, 2001). However, as illustrated, there are difficulties in the understanding of evidence-informed policy in this field and these are likely to be magnified in other policy areas where evidence-based practice is less readily adopted.

Similarities and differences with other approaches to rapid evidence synthesis

This case study has some similarities and differences with other approaches to rapid evidence synthesis. Like the approach detailed by Caird et al. (2015), we utilised high quality review level evidence to answer our questions. However, in cases where good quality review evidence is not available, our approach has additional flexibility by also identifying and including primary studies. Similarly, like Evidence Briefs (Moat et al., 2014) and realist reviews (Rycroft-Malone et al., 2012), our approach also uses expert or stakeholder groups to ensure the review is policy relevant. However, unlike the approach described by Moat et al. (2014), which only involves experts in the final stages of the review, our approach involved stakeholders at all stages of the review. In addition, we believe that our approach is unique in utilising a pre-existing evidence-based framework to facilitate the synthesis. Finally, unlike many approaches to rapid reviews which do not describe their methods in full (Ganann et al., 2010), our approach is transparent and clearly describes how evidence was identified and where trade-offs were made.

Conclusion

Collaborative, targeted and efficient evidence reviews for policy making provide a standardised approach to identifying, collating and summarising large volumes of evidence for policy makers that has the potential to be used for any policy topic area. Key features of this approach draw on aspects of other approaches to rapid evidence synthesis including the use of review level evidence (Caird et al., 2015) and stakeholder involvement (Moat et al., 2014; Rycroft-Malone et al., 2012). However, unlike many other approaches to rapid evidence synthesis (Ganann et al., 2010), our approach is highly transparent so readers can clearly see how studies were identified and where any bias could have been introduced.

Ultimately, however, the success of such an approach can be judged by the extent to which the reviews are incorporated into policy and then practice. In the case of our collaborative, targeted and efficient evidence reviews, we can report that the final Maternity and Neonatal Services Review report and recommendations, which have now been published by the Scottish Government (2017), were clearly informed by the conclusions of our evidence reviews. Perhaps most evidently, the final Maternity and Neonatal Services Review recommendations for continuity of carer for all women, and family-centred care in neonatal units, were key recommendations in the evidence reviews. As the implementation of the government Review is just beginning, it is still too soon to tell to what extent the recommendations will become embedded into practice.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

Black N (2001) Evidence based policy: proceed with care. BMJ 323:275–279

Borah R, Brown AW, Capers PL, Kaiser KA (2017) Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 7:e012545

Bronfenbrenner U, Morris PA (2007) The bioecological model of human development. Handb Child Psychol I:14

Buscemi N, Hartling L, Vandermeer B, Tjosvold L, Klassen TP (2006) Single data extraction generated more errors than double data extraction in systematic reviews. J Clin Epidemiol 59:697–703

Caird J, Sutcliffe K, Kwan I, Dickson K, Thomas J (2015) Mediating policy-relevant evidence at speed: are systematic reviews of systematic reviews a useful approach? Evid Policy 11(1):81–97

Dahlgren G, Whitehead M (2006) European strategies for tackling social inequities in health: Levelling up Part 2. World Health Organization, Copenhagen

Davidson P, Halcomb E, Hickman L, Phillips J, Graham B (2006) Beyond the rhetoric: what do we mean by a ’model of care’? J Adv Nurs 23(3):47–55

Dickinson H, Millar R, West M, Leggat SG, Bartram T, Stanton P (2011) High performance work systems: the gap between policy and practice in health care reform. J Health Organ Manag 25:281–297

Dixon-Woods M, Bonas S, Booth A, Jones DR, Miller T, Sutton AJ, Shaw RL, Smith JA, Young B (2006) How can systematic reviews incorporate qualitative research? A critical perspective. Qual Res 6:27–44

Flyvbjerg B (2006) Five misunderstandings about case-study research. Qual Inq 12(2):219–245

Ganann R, Ciliska D, Thomas H (2010) Expediting systematic reviews: methods and implications of rapid reviews. Implement Sci 5(1):56

Greenhalgh T, Annandale E, Ashcroft R, Barlow J, Black N, Bleakley A, Boaden R, Braithwaite J, Britten N, Carnevale F, Checkland K et al. (2016) An open letter to the BMJ editors on qualitative research. Br Med J 352:i563

Grant MJ, Booth A (2009) A typology of reviews: an analysis of 14 review types and associated methodologies. Health Inf Libr J 26(2):91–108

Haddaway NR, Bilotta GS (2016) Systematic reviews: separating fact from fiction. Environ Int 92:578–584

Hallsworth M, Rutter J (2011) Making policy better: improving Whitehall’s core business. Institute for Government, London

Harvey G (2013) The many meanings of evidence: implications for the translational science agenda in healthcare. Int J Health Policy Manag 1:187–188

Head BW (2010) Reconsidering evidence-based policy: key issues and challenges. Policy Soc 29(2):77–94

Higgins J, Lasserson T, Chandler J, Tovey D, Churchill R (2013) Methodological expectations of Cochrane intervention reviews (MECIR). Cochrane Collaboration, London

Higgins JPT, Green S (2011) Cochrane handbook for systematic reviews of interventions version 5.1.0. The Cochrane Collaboration

Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D (2012) Evidence summaries: the evolution of a rapid review approach. Syst Rev 1:10

Knight GM, Dharan NJ, Fox GJ, Stennis N, Zwerling A, Khurana R, Dowdy DW (2016) Bridging the gap between evidence and policy for infectious diseases: How models can aid public health decision-making. Int J Infect Dis 42:17–23

Krug EG, Mercy JA, Dahlberg LL, Zwi AB (2002) The world report on violence and health. Lancet 360(9339):1083–1088

La Caze A, Colyvan M (2016) A challenge for evidence-based policy. Axiomathes 27(1):1–13

McCrae J, Stephen J, Guermellou T, Mehta R (2012) Improving decision making in Whitehall: effective use of management information. Institute for Government, London, pp 7–8

Moat KA, Lavis JN, Clancy SJ, El-Jardali F, Pantoja T (2014) Evidence briefs and deliberative dialogues: perceptions and intentions to act on what was learnt. Bulletin of the World Health Organization 92(1):20–28

NICE (2014) Developing NICE guidelines: the manual. NICE, London

Oliver S, Bagnall AM, Thomas J, Shepherd J, Sowden A, White I, Dinnes J, Rees R, Colquitt J, Garrett Z, Oliver K (2010) Randomised controlled trials for policy interventions: a review of reviews and meta-regression. Health Technol Assess Monogr 14(16):iii–165

Parkhurst JO, Abeysinghe S (2016) What constitutes “good” evidence for public health and social policy-making? From hierarchies to appropriateness. Social Epistemol 30(5-6):665–679

Parker I (2013) Early developments: bridging the gap between evidence and policy in early-years education. Institute for Public Policy Research, London

Pawson R, Greenhalgh T, Harvey G, Walshe K (2005) Realist review-a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy 10(Suppl 1):21–34

Pearce W, Raman S (2014) The new randomised controlled trials (RCT) movement in public policy: challenges of epistemic governance. Policy Sci 47(4):387–402

Pearce W, Raman S, Turner A (2015) Randomised trials in context: practical problems and social aspects of evidence-based medicine and policy. Trials 16(1):394

Petticrew M (2003) Why certain systematic reviews reach uncertain conclusions. BMJ 326:756

Petticrew M, Whitehead M, Macintyre SJ, Graham H, Egan M (2004) Evidence for public health policy on inequalities: 1: the reality according to policy makers. J Epidemiol Community Health 58:811–816

Renfrew MJ, McFadden A, Bastos MH, Campbell J, Channon AA, Cheung NF, Silva DRAD, Downe S, Kennedy HP, Malata A, McCormick F, Wick L, Declercq E (2014) Midwifery and quality care: findings from a new evidence-informed framework for maternal and newborn care. Lancet 384:1129–1145

Royle P, Milne R (2003) Literature searching for randomized controlled trials used in Cochrane reviews: rapid versus exhaustive searches. Int J Technol Assess Health Care 19:591–603

Rutter J (2012) Evidence and evaluation in policy making. Institute for Government, London

Ryan DPJ (2001) Bronfenbrenner’s ecological systems theory. http://www.floridahealth.gov/AlternateSites/CMS-Kids/providers/early_steps/training/documents/bronfenbrenners_ecological.pdf. Accessed 17 Aug 2017

Rycroft-Malone J, Seers K, Titchen A, Harvey G, Kitson A, McCormack B (2004) What counts as evidence in evidence-based practice? J Adv Nurs 47:81–90

Rycroft-Malone J, McCormack B, Hutchinson AM, Decorby K, Bucknall TK, Kent B, Schultz A, Snelgrove-Clarke E, Stetler CB, Titler M, Wallin L (2012) Realist synthesis: illustrating the method for implementation research. Implement Sci 7(1):33

Saul JE, Willis CD, Bitz J, Best A (2013) A time-responsive tool for informing policy making: rapid realist review. Implement Sci 8(1):103

Scottish Government (2017) The Best Start: A Five-Year Forward Plan for Maternity and Neonatal Care in Scotland. Scottish Government, Edinburgh

Smith R (2013) The Cochrane Collaboration at 20. Br Med J 347:f7383

Stroup DF, Berlin JA, Morton SC et al. (2000) Meta-analysis of observational studies in epidemiology: a proposal for reporting. JAMA 283:2008–2012

Joanna Briggs Institute (2014) Joanna Briggs Institute reviewers’ manual: 2014 edition/Supplement. The Joanna Briggs Institute, Australia

Thomas J, Harden A (2008) Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Med Res Methodol 8:45

Thomas J, Harden A, Oakley A, Oliver S, Sutcliffe K, Rees R, Brunton G, Kavanagh J (2004) Integrating qualitative research with trials in systematic reviews. BMJ: Br Med J 328:1010

Tompkins EL, Adger WN, Boyd E, Nicholson-Cole S, Weatherhead K, Arnell N (2010) Observed adaptation to climate change: UK evidence of transition to a well-adapting society. Glob Environ Chang 20:627–635

Watt A, Cameron A, Sturm L, Lathlean T, Babidge W, Blamey S, Facey K, Hailey D, Norderhaug I, Maddern G (2008) Rapid versus full systematic reviews: validity in clinical practice? ANZ J Surg 78:1037–1040

Acknowledgements

We would like to thank all of the sub-groups, civil servants, and review sponsors for their work on this review. We would also like to thank Dr Lindsay Siebelt, Dr Alison McFadden, and Dr Veronica O’Carroll for their input into the review process. The work on the evidence reviews was supported by a grant from the Scottish Government.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gavine, A., MacGillivray, S., Ross-Davie, M. et al. Maximising the availability and use of high-quality evidence for policymaking: collaborative, targeted and efficient evidence reviews. Palgrave Commun 4, 5 (2018). https://doi.org/10.1057/s41599-017-0054-8

DOI: https://doi.org/10.1057/s41599-017-0054-8
