Abstract

Science has always operated in a competitive environment, but the globalisation of knowledge and the rising popularity and use of global rankings have elevated this competition to a new level. The quality, performance and productivity of higher education and university-based research have become a national differentiator in the global knowledge economy. Global rankings essentially measure levels of wealth and investment in higher education, and they reflect the realisation that national pre-eminence is no longer sufficient. These developments also correspond with increased public scrutiny and calls for greater transparency, underpinned by growing necessity to demonstrate value, impact and benefit. Despite on-going criticism of methodologies, and scepticism about their overall role, rankings are informing and influencing policy-making, academic behaviour, stakeholder opinions—and our collective understanding of science. This article examines the inter-relationship and tensions between the national and the global in the context of the influences between higher education and global university rankings. It starts with a discussion of the globalisation of knowledge and the rise of rankings. It then moves on to consider rankings in the context of wider discourse relating to quality and measuring scholarly activity, both within academia and by governments. The next section examines the relationship and tensions between research assessment and rankings, in policy and practice. It concludes by discussing the broader implications for higher education and university-based research.

Introduction: state and higher education, from national to global

A number of trends have driven change across higher education and university-based research over recent decades. There are two broad dimensions: on the one hand, the changing social contract between higher education and society; on the other, the increasing reliance of society and the economy on knowledge-production in a globally competitive world and, correspondingly, the geo-politics of knowledge. In the first instance, global competitiveness and the changing nature of the nation-state since the oil crises of the 1970s have helped alter the underlying reality of policy discourse around the social contract between science and society. At the same time, changes in the mode of production and technological progress have facilitated a shift in favour of national economies that are predicated on knowledge, oriented towards the global, and ever more interconnected. In so doing, globalisation has partially transformed higher education from an institution concerned primarily with matters of community and nation-building to an internationalised sector central to matters of competitiveness and reputation, for institutions, nations, and graduates. This tension between the “before” and “now” of higher education’s globalisation remains unresolved. The increasing reliance on knowledge for economic competitiveness has obliged the state to remain involved in higher education, even as it purports to withdraw from other spheres through privatisation and marketisation (Strange, 1996). While science has always operated in a competitive environment, the emergence and increasingly persuasive role of global rankings have made the tension between the national and the global ever more apparent.

Calls for science to have a clearer, more direct role with respect to the national project emerged [Footnote 1] first in the United States during the Sputnik era, with growing concerns about harnessing US scientific strength to economic growth (Geiger, 1993, pp 157–192). While Guston (2000) argues that science policy is concerned with matters of research integrity and productivity, it is the latter which is significant in this instance. 'Science, The Endless Frontier' (1945) by Vannevar Bush, Director of the US Office of Scientific Research and Development under Franklin D. Roosevelt, argued that social and economic progress was dependent upon 'continuous additions to knowledge of the laws of nature, and the application of that knowledge to practical purposes'. The subsequent 'golden' years were notable for free-flowing money and professorial self-governance, but the parameters began to change in later decades, ushering in new ground-rules for continued public endorsement and financial support for university-based research.

These developments were reflected in changes at the political level, not only as a reaction to the collapse of the post-WW2 Keynesian project and the growing fiscal crisis of the state (Castells, 2011, 185; O’Connor, 1973). Wider public concerns about the value, relevance and affordability of public services began to surface; as Trow (1974, 91) acknowledged, once matters come 'to the attention of larger numbers of people, both in government and in the general public…[they will] have other, often quite legitimate, ideas about where public funds should be spent, and, if given to higher education, how they should be spent'. In this context, higher education was 'no different from other publicly funded services (e.g., health care, criminal justice) where the State…[began to] put pressure on publicly funded providers to meet broad public policy goals (for example) to cut costs, improve quality or ensure social equity' (Ferlie et al., 2008, p 328). As the role of the state changed, public involvement shifted from a hard or top-down command and control model towards a softer 'evaluation' model, with emphasis on measuring, assessing, comparing and benchmarking performance and productivity (Neave, 2012; Dahler-Larsen, 2011). A key element of this change was evidenced in a growing role for the market as a more effective means by which to drive change, efficiency and public benefit for customers and consumers. The rise of neo-liberalism and corresponding adoption of principles of new public management are credited with changing the relationship between higher education and the state, and between the academy and the state. This led to new forms of accountability focused on measurable outcomes, increasingly tied to funding through performance-based funding or performance agreements. The discourse around 'public good' and 'public value' and how that is demonstrated or valorised strengthened over the intervening years.

In the wake of the global economic crisis, higher education was a victim of considerable reductions in public funding along with other 'austerity' measures in many countries (OECD, 2014). That higher education had an important role to play was not in dispute. Indeed, in line with human capital theory (Becker, 1993), investment in 'high quality tertiary education [was considered] more important than ever before' (Santiago et al., 2008, p 13) as competition between nations accelerated. Europe2020 (EC, 2010a), the European Union’s growth strategy for the coming decade, identified higher education and research development (HERD) as critical to economic recovery and global positioning. It identified specific priorities for tertiary attainment (minimum 40% of 30–34-year-olds) and investment in Research, Development and Innovation (3% of EU GDP). [Footnote 2] Because higher education and university-based research are mutually linked with the economic fortunes of the nation, matters of governance and accountability inevitably began to take centre stage.

But the fact remains that there have been various trade-offs and accommodations between accountability and autonomy over the years, in other words between the state and higher education. If higher education is the engine of the economy, as the academy has regularly argued (Baker, 2014; Lane and Johnstone, 2012; Taylor, 2016), then its productivity, quality and status are vital indicators of sustainability and of a nation’s competitiveness (Hazelkorn, 2015, p 6), and never more so than when many nation-states were faced with the spectre of economic/financial collapse. Delanty (2002, p 185) put it as follows: 'the question of the governability of science cannot be posed in isolation from the question of the governance of the university'.

The birth of global rankings is marked by the advent of the Shanghai Jiao Tong 'Academic Ranking of World Universities' in 2003. [Footnote 3] But their true origins lie in the growing tension between the role of knowledge in/for global competitiveness and, correspondingly, the national social contract between higher education and science, the twin dynamics mentioned above. Global rankings are a product of an increasingly globalised economy and an internationalised higher education landscape. By placing higher education and university-based research within an international and comparative framework, rankings affirm that in a globalised world, with heightened levels of capital and talent mobility, national pre-eminence is no longer sufficient. Despite considerable scrutiny and criticism over the years, rankings have persisted in informing and influencing policy-making, academic behaviour, and stakeholder opinion. This is due to their simplicity as well as to resistance to, and the absence of, alternatives (Coates, 2016). In fact, the manner by which rankings have become a key driver of global reform of higher education highlights their significance for building strategies for competitive advantage. In this respect, rankings have significantly challenged and helped change the conversation around HERD performance and productivity, quality and value, and impact and benefit.

Their choice of indicators emphasises inputs, and rewards wealth garnered through institutional age, endowments, tuition or government investment. In so doing, they 'facilitate competition, define competitiveness, [and] normalise and celebrate competition' (Cantwell, 2016), legitimising inequities and widening the gap between winners and losers (Hazelkorn, 2016). They have managed to successfully challenge assumptions and perceptions of quality and status. As discussed below, assuring quality has hitherto been a basic tenet of academic self-regulation, usually at the programmatic or institutional level and through professional organisations by way of peer review. Over recent years, monitoring quality has become the concern of national agencies and is now gradually being taken up at the international level (Eaton, 2016). This trend towards greater steering and governance instruments has been matched by growing dissatisfaction with the robustness of traditional collegial mechanisms, twinned with an increasing requirement for international comparative and benchmarking data.

While nation states remain the primary field of authority and contestation over and about higher education and research policy, 'they no longer have full command over their destinies' (Marginson and van der Wende 2007, p 13). Individual higher education institutions (HEIs) and governments may choose to ignore rankings and the noise they generate; indeed, reactions to rankings often differ depending upon whether emanating from a developed or emerging economy. The former regard 'global rankings as an affirmation of or an outright challenge to their (perceived) dominance within the global geography of higher education and knowledge production', and the latter view them as a judgement on their state of development (Hazelkorn, 2016; Cloete et al., 2016), as the two examples below illustrate.

[A]mid today’s acute competition on the international scene, universities are a major factor affecting a country’s key competitive ability. Thus, creating and running world-class universities [in China] should be one of the strategic foci of building up a country (Min Weifang, Party Secretary of Peking University quoted in Ngok and Guo, 2008, p 547).

The most important natural resource Texas has is Texans. Unfortunately, our state suffers from a “brain drain” as many of our best and brightest students leave to further their education. A contributing cause is a lack of “tier one” universities in Texas (CPPP, 2009, p 1).

Evidence strongly suggests that even where and when institutional or policy responses are not slavish, rankings have significant influence. As illustrated by the growing use of 'international education statistics, performance indicators and benchmarks', rankings play an agenda-setting role, over-determining the 'rules of the game' (Dale, 2005, p 131; see also Henry et al., 2001, pp 83–105). They have become a de facto (research) assessment exercise, and an indicator of global competitiveness within 'world-science'.

This paper will examine some of these tensions, looking at the implications of rankings for and on research and our understanding of science, for the organisation and management of research, and for national policy. The paper will discuss the inherent contradictions between pursuing indicators favoured by rankings and national policy objectives, and the unintended consequences. Briefly, part (I) will set the argument in context by reviewing the literature around the globalisation of knowledge; part (II) will consider the rise of rankings in the context of the wider discourse around quality, and measuring scientific endeavour; part (III) will look at the relationship between rankings and research assessment policy and practice; and part (IV) will discuss the broader implications for higher education and university-based research.

Globalisation of knowledge and the rise of rankings

Recent decades have borne witness to the intensification of competition at the global level driven by a shift from physical capital to knowledge as the source of wealth creation. The advent of the post-industrial information-society beginning in the 1970s, followed by the rise of the knowledge-economy paradigm beginning in the 1990s (OECD, 1996), transformed debates around higher education. These developments, in turn, parallel changes at the global level, marked by an increasingly integrated global-economy and shifts in the world-order often characterised as the transition from the British 19th to the American 20th to the proposed Asian 21st century. The completeness of these movements can be debated (Cox, 2012; Cantwell, 2016), but the OECD (2014, p 2) contends that the coming decades will continue to see a 'major shift of economic balance towards the non-OECD area, particularly to Asian and African economies'. In fact, if anything, the 2008 Global Financial Crisis and resulting Great Recession demonstrated the depth of interconnectivity and interdependency of the global economy, as individual nations struggled to insulate themselves from such phenomena. National policy instruments proved insufficient against global pressures, and attention turned to supra-national organisations, inter alia the European Union, International Monetary Fund and World Bank, to intervene (Drezner, 2014a, b). Globalisation has been one of the most significant influences on the international higher education landscape over recent decades, exerting considerable conceptual, competitive and strategic pressure. The term itself is used in multiple ways; nonetheless, it is useful to make some distinctions. On the one hand, there are quantitative increases in 'trade, capital movements, investments and people across borders'.
On the other hand, there are political changes 'in the way people and groups think and identify themselves, and changes in the way states, firms, and other actors perceive and pursue their interests' (Woods, 2000, pp 1–2). Central to the concept of globalisation 'is the sense that activities previously undertaken within national boundaries can be undertaken globally or regionally' (ibid., p 6). Whereas previously knowledge creation might have been confined (if not restricted) largely within national borders, globalisation encourages a view of borders that are necessarily permeable. [Footnote 4]

Involvement in science and international collaboration is the sine qua non of national competitiveness. Contrary to the claims of globalisation’s critics (Waters, 2001, pp 222–230), globalisation has provided the opportunity for developing economies to gain a foot-hold in the world economy (Moyo, 2009, pp 114–125), although it remains insufficient (Stiglitz, 2003, 2016). Rankings capture some of the changes that affect national economies and international knowledge flows, though they are themselves part of the global knowledge race (Altbach, 2012). While the dominant countries in the top-100 or top-200 are likely to remain developed economies for the foreseeable future, there is strong evidence of other countries, most significantly China, having an ever-greater presence in the top-500. The knowledge economy paradigm is universally accepted, across all policy circles, in the wake of its promotion by the OECD (1996). Likewise, despite the Great Recession (Pew Research Global Attitudes project, quoted in Drezner, 2014b, pp 148–150), debates about GATS and TPP, and recent social-political movements in the US and across Europe (Inglehart and Norris, 2016), the tenets of globalisation remain supported internationally.

There has been a transition away from 'tangible created assets' (i.e., physical capital and finance) in developed economies to 'intangible created assets' in the shape of knowledge and information in various forms (Dunning, 1999, p 8), which has put technological innovation (in the form of Research, Development and Innovation) at the heart of globalisation (Black, 2014, pp 364–365; Narula, 2003). This transformation connects directly with the increase in expenditure on all kinds of research and development in OECD economies between 1975 and 1995, which rose at three times the rate of output in manufacturing over the same period (Dunning, 1999, p 9). That said, higher education is not simply a passive recipient of the effects of globalisation. Universities are crucial to the process as 'strong primary institutions' [Footnote 5] (Baker, 2014, p 59), producers of the 'knowledge spillovers' (Audretsch, 2000, p 67) necessary for economic growth and competitiveness; they are also the primary component of trans-national education (Huang, 2007). OECD data suggest that, in 2014, HERD accounted for almost 23% of gross expenditure on research and development in EU28 countries, and 18% in OECD countries (OECD, 2016). Its role has been theorised extensively through notions of the triple, quadruple, and quintuple helices (Etzkowitz and Leydesdorff, 1999; Etzkowitz et al., 2000; Carayannis and Rakhmatullin, 2014, p 219, 236), and the co-production of knowledge (Cherney, 2013; Gibbons et al., 1994). In addition, research itself has become globalised, with increases in academic mobility and international scholarly collaboration, and with branch campuses increasingly focussing on research. Research productivity itself now strongly correlates with international collaboration, and is central to augmenting national research competitiveness (Kwiek, 2015).

Looking at the social and political aspects of globalisation, there is a changing relationship between 'states, firms, and other actors'. One of the initial fears relating to globalisation was the perceived threat of a homogenising effect in these changing relationships, a so-called 'McDonaldization' (Waters, 2001, p 222) of cultural convergence where the pressures of the international market would lead to a sameness in societies and cultures. There was fear of an erosion of national distinctiveness, but concerns about global convergence have proved exaggerated, and convergence is by no means a homogeneous or one-way process; 'in the case of multinational enterprises, firms are making more nuanced and complex calculations about how to organise and where to produce' (Woods, 2000, p 17; see also Nye, 2006, pp 78–81). Firms, multinational and transnational, have sought to use national/regional and cultural diversity as assets. Governments and universities have also applied the logic of competitive advantage to their higher education and research landscapes. For example, Japan, Russia, Vietnam, France, China and over 30 other countries have pursued excellence initiatives (Salmi, 2016; Yonezawa and Yung-chi Hou 2014; Shin, 2009). These have emphasised the policies of institutional selection, e.g., 'picking winners', in order to concentrate capacity and capability, intensify research activity and promote 'excellence'. Likewise, research prioritisation has been undertaken; in these instances, national governments, as the primary funders of higher education research, have set high-level goals aligning research and resource allocation with economic priorities (Gibson and Hazelkorn 2017).

The changing relationship between different societal actors is evident also at the regional level. The European Union is a good example, but ASEAN is also beginning to explore these geopolitical potentialities (Robertson et al., 2016). The EU identified higher education across its member states as requiring 'in depth restructuring and modernisation if Europe is not to lose out in the global competition in education, research and innovation' (EC, 2006, p 11). Thus, it set out a strategy of capacity building as a key plank of the modernisation agenda recasting 'research in Europe' as 'European research', i.e., as taking place within the European context, rather than in disparate, national contexts. More recently, it has pursued the Smart Specialisation Strategy, whereby European regions have been encouraged to build up their own strengths, and manage their priorities, in line with national and regional strategies. The intention is to maximise collaboration and leverage expertise across higher education and science, enterprise, local/regional government and civil society (EC, 2012; Carayannis and Rakhmatullin, 2014, p 213; EUA, 2014).

Yet, despite these developments, many of the fundamental structures at the national level remain unchanged. Nayyar (2002, p 368) writes that while economic globalisation has been a challenge to the 'existing system' of supranational and international institutions (e.g., United Nations, International Monetary Fund, World Bank), 'there is virtually no institutional framework for this task, which is left almost entirely to the market'. Similarly, the diversity of education systems and standards across Europe has made it difficult to make comparisons or talk about different countries in the same breath. This began to change with the introduction of policies and frameworks such as Bologna and then the European Qualifications Framework and European Standards and Guidelines, initiatives which are now influencing practice in different world regions. Accordingly, they go some way towards creating a 'metastructure' (Maassen and Stensaker, 2011, p 262) that makes comparison, and mobility, possible. The initial requirement for such a metastructure was simply to allow people to say 'this qualification X is equivalent to that qualification Y.' It allows for comparison across institutions, systems, and countries, and provides information about quality and standards. Over the years, these initiatives have coincided with the formalisation of the European Higher Education Area and the European Research Area.

Traditionally it has been the role of government to provide goods for the public benefit, such as education. However, the current phase of globalisation means this may be understood differently by different governments, and there may be a requirement for some actions to be undertaken at the global level. Information about higher education is one such good, and its provision to citizens in a globalised context requires 'the strengthening of existing institutions in some areas and the creation of new institutions in other areas' (Nayyar, 2002, p 374). Achieving this is easier said than done. There have been attempts to address gaps in the form of the OECD AHELO (Assessment of Higher Education Learning Outcomes) initiative, and the EU 'U-Multirank project', [Footnote 6] but the former was dropped after its feasibility study raised hackles and the latter’s success has been mixed at best. Rankings have emerged to fill this gap, initially enabling students to make more informed decisions. As with economic globalisation, university ranking companies have filled the space left by what Nayyar terms 'missing institutions' (Nayyar, 2002, p 375) of globalisation, providing information about the nature and quality of research and higher education, and correspondingly about national competitiveness.

Rankings and measuring scholarly scientific endeavour

Peer review is a prized function of the academy—a symbol of academic-professional self-regulation, which acknowledges academic achievement and contribution. It has been a cornerstone of the academy since the 17th century although 'prior to the Second World War the process was often quite uncodified' (Rowland, 2002, p 248). It is based upon the principle that only people with expertise in a particular scientific field can assess, evaluate and judge the quality of academic scholarship and the resultant publication. The latter is generally used in the context of peer-reviewed articles for academic journals, but usually extends to monographs, and other forms of academic/scientific writing.

Peer review is at the heart of the processes of not just medical journals but of all of science. It is the method by which grants are allocated, papers published, academics promoted, and Nobel prizes won….When something is peer reviewed it is in some sense blessed (Smith, 2006).

Rowland concurs, saying that while there are four main functions assigned to peer review, including 'dissemination of current knowledge, archiving of the canonical knowledge base, quality control of published information, and assignment of priority and credit for their work to authors,' (2002, p 247) quality control is the primary purpose. Over the years, a widely accepted process has grown up around peer-review whereby the author’s name is withheld, and the review is conducted by people unknown to or unconnected with the author (i.e., 'double blind' review) to avoid any possibility of conflict of interest. The process has been further strengthened by being 'informed' by bibliometric and other sources.

Dill and Beerkens (2010, p 2) place academic quality assurance within this lineage, identifying 'subject examinations, external examiners, as well as review processes for professional meetings, academic journals, and the award of research grants.' At the nation-state level, academic quality assurance is associated with and provides the bedrock for accreditation, which authenticates an institution’s right to operate and/or offer qualifications. It 'is best defined as equivalent to academic standards—the level of knowledge and skill achieved by graduates as a result of their academic program or degree' (Dill and Beerkens, 2010, pp 2–3). Accordingly, a whole panoply of 'systematic and rigorous approaches', processes and procedures (Harman, 2011, p 40), and national as well as cross-border organisations, has grown up around quality assurance. In Europe, the European Association for Quality Assurance in Higher Education (ENQA) has developed 'an agreed set of standards, procedures and guidelines on quality assurance' and explored 'ways of ensuring an adequate peer review system for quality assurance and/or accreditation agencies or bodies' (ENQA, 2005). To insulate these processes from political or other interference, these organisations tend to be (semi-)independent from government and/or regulatory authorities. In this respect, academic quality assurance and academic accreditation now hold a similar place in the canon of academic professionalism as that of peer review.

Peer review is not, however, without its critics. As Smith (2006) points out, 'People have a great many fantasies about peer review, and one of the most powerful is that it is a highly objective, reliable, and consistent process.' However, the situation can be the opposite, because peer-review is based on assessment and judgement. There is always room for 'professional' disagreement about the quality, value, significance, etc. of scholarly work. This may be affected by the choice of reviewers, their own experience, expertise or context. Indeed, the choice of reviewers may reflect other (hidden) biases with respect to membership of informal networks, gender, race, geography, non-English-speaking countries, etc. (Adler and Harzing, 2009; Davenport and Snyder, 1995; Altbach 2006). Becher and Trowler (2001, p 81) refer to problems associated with 'subtle' forms of elitism and bias, and the way in which academics attach a prestige hierarchy to various attributes or characteristics, such as the university qualification, journals in which papers have been published, references used, scientific/methodological approach, etc. The type of academic training and/or seniority can significantly influence a reviewer’s judgement. Bias can also arise from professional jealousy. Quality assurance can be subject to similar limitations because the panel of reviewers often share common 'assumptions and informal networks and procedures' (Becher and Trowler, 2001, p 87). Cost can be another problem especially with academic quality review or research evaluation processes; costs associated with teams of internationally mobile academics can influence who is chosen to participate or can restrict the process. 
Thus, peer review can be a 'gate-keeper', controlling innovation as well as new ideas and methodologies, discouraging 'intellectual risk taking' (Marginson, 2008, p 17), and/or 'freezing-out contributions that are seen as in some way threatening (usually because they purport to undermine an established ideology or school of thought)' (Becher and Trowler, 2001, pp 89–90).

Perhaps the fundamental drawback of any qualitative process, beyond the disciplinary and institutional issues outlined here, is the difficulty of making cross-border comparisons (which circles back to the aforementioned issue of 'missing institutions' at the global level). This is true for academic quality assurance as well as for research assessment. Not only can the process be constrained by the types of issues identified above, but the process itself is likely to be confined and defined by its context. In addition, the ensuing reports and write-ups of results are usually lengthy, and written in 'opaque academic language, making it difficult to understand or compare performance between institutions, especially internationally' (Hazelkorn, 2013a). Their purpose is usually to aid quality improvement or enhancement rather than compare performance or measure quality. Academic peer-review has a similar role wherein reviewers are often asked to offer suggestions for improvement to authors. Where the process is aligned with funding decisions, i.e., used to aid resource allocation, the final decision tends to be written in a more definitive manner.

Arguably and ironically, the attributes which have underpinned peer review’s value to the academic community are those which have contributed to something of a breakdown in trust between higher education and students, policy makers and civil society, and have undermined the social contract (Hazelkorn, 2012a, 2012b; Harman, 2011). This has resulted in accreditation becoming a huge political topic in the US (Camera, 2016; Eaton, 2011), even at the level of the presidential campaign, similar to the level of discourse in the UK and Australia, and many other countries (Middlehurst, 2016; Dill and Beerkens, 2010). Politically, there is a growing desire to move beyond assessing quality only within the academy, to linking quality to relevance and resources (OECD, 2010). Global rankings claim to help in this regard, and speak to this policy and political agenda. They are also seen as an important and independent interlocutor between higher education and society. These developments have moved assessment beyond the purview of the academy.

Rankings (global and national) work by comparing HEIs using a range of different indicators as an overall proxy for quality. They produce an abundance of quantitative information, aggregated to a final score, and placed in an ordinal format, which is simple and easy to read and understand. Most criticism of rankings focuses on the arbitrariness of the choice of indicators, which are each weighted according to 'some criterion or set of criteria which the compiler(s) of the list believe … measure or reflect … academic quality' (Webster, 2001, p 5). In this way, rankings claim to describe the quality of a HEI while in reality they say little about educational quality, teaching and learning, quality of the student experience, or about the contribution or impact of higher education on society or the region. The results can vary annually, usually due to small methodological changes, which undermines their comparability value. Indeed, often changes in a HEI’s position are relational in that it has more to do with changes in other HEIs’ position, rather than any actual change within the institution itself, a fact of rankings which usually goes unremarked in the annual media frenzy attached to their release. Moreover, using the same set of indicators to measure and compare different HEIs meeting diverse needs in different social, economic and geographic contexts, can lead to simplistic comparisons based on insignificant differences.
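The aggregation and the relational nature of ranking positions described above can be illustrated with a minimal sketch. The indicator names, weights and scores below are hypothetical and are not taken from any actual ranking; the point is only that weighted indicators are summed to a single score, sorted into an ordinal list, and that an institution can change position without any change in its own data.

```python
from typing import Dict, List, Tuple

# Hypothetical indicator weights chosen by an imaginary compiler (sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "citations": 0.5,
    "reputation": 0.3,
    "staff_student_ratio": 0.2,
}

def composite_score(indicators: Dict[str, float]) -> float:
    """Aggregate indicator scores (0-100) into one weighted score."""
    return sum(WEIGHTS[name] * indicators[name] for name in WEIGHTS)

def rank(institutions: Dict[str, Dict[str, float]]) -> List[Tuple[int, str, float]]:
    """Return (position, institution, score) tuples, best score first."""
    scored = sorted(
        ((composite_score(data), name) for name, data in institutions.items()),
        reverse=True,
    )
    return [(pos, name, round(score, 2)) for pos, (score, name) in enumerate(scored, start=1)]

# Hypothetical data: institution A is identical in both years.
YEAR_1 = {
    "A": {"citations": 80, "reputation": 70, "staff_student_ratio": 60},
    "B": {"citations": 70, "reputation": 80, "staff_student_ratio": 65},
    "C": {"citations": 60, "reputation": 60, "staff_student_ratio": 90},
}
YEAR_2 = {
    "A": {"citations": 80, "reputation": 70, "staff_student_ratio": 60},
    "B": {"citations": 74, "reputation": 80, "staff_student_ratio": 65},
    "C": {"citations": 60, "reputation": 60, "staff_student_ratio": 90},
}

for label, data in (("Year 1", YEAR_1), ("Year 2", YEAR_2)):
    print(label, rank(data))
```

In this sketch A drops from first to second place in the second year solely because B's citation score rose; nothing about A changed. A different choice of weights would produce a different ordering from the same data, which is the arbitrariness that critics highlight.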

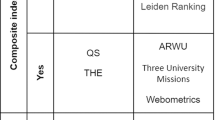

Because of difficulties identifying and sourcing meaningful, reliable and verifiable international comparative indicators and data, global rankings give preferential weight to research. They use the standard international bibliometric practice of counting peer-reviewed publications and citations to measure academic output and productivity as a proxy for faculty or institutional quality. This methodology tends to favour the physical, life and medical sciences, benefits countries where English is the native language, and emphasises international impact. There is heavy reliance on Web of Science (WoS), which collects data on ~12,000 publications, and Scopus, which has information on ~21,500, although these indices capture only a proportion of published international scholarly activity. Recent efforts to include books and book chapters may begin to rebalance the in-built bias and thus benefit the humanities and social sciences (THE Reporters, 2016). To get around this limited coverage, WoS has joined forces with 'Google Scholar' to connect researchers’ WoS and Google Scholar identities, as well as capturing grey literature through GreyNet (Footnote 7) and the published research of Latin American authors through SciELO (Footnote 8) and RedALyC (Footnote 9). Scopus is developing techniques to capture AHSS research, while 'THE' is examining options for including research impact, innovation and knowledge transfer. There are some exceptions, most notably CWTS Leiden Ranking, which uses its own bibliometric indicators to assess the scientific output of over 800 universities worldwide according to size of publication output, collaboration and impact, while 'SCImago' (Footnote 10) calculates publication output over a five-year period and reflects scientific, economic and social characteristics of institutions. 'U-Multirank', developed by the European Union and based on the principles of being user-driven, multi-dimensional and multi-level, with peer-group comparability (Van Vught and Ziegele, 2012), relies on Leiden data (CWTS, 2016).

The true picture of research-bias is demonstrated when research and research-dependent indicators are combined (e.g., academic reputation, PhD awards, research income, citation, academic papers, faculty and alumni medals and awards, and internationalisation). When this is done, the research component rises significantly, for example: 'Academic Ranking of World Universities' (100%), 'Times Higher Education World Rankings' (93.25%), and 'QS Top Universities' (70%) (Hazelkorn, 2015, pp 55–57). This over-emphasis on research is the rankings’ Achilles’ heel. However, the effect on/for scholarly scientific endeavour goes much further with broader implications for research assessment.
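The arithmetic behind combining research and research-dependent indicators can be sketched as follows. The indicator names, weights and categories here are hypothetical and do not reproduce the methodology of any of the rankings named above; the sketch only shows how the research share grows once indicators that depend on research, such as reputation, are counted alongside the direct research measures.

```python
# Hypothetical indicator weights in percent (sum to 100); illustrative only.
# Each entry is (indicator name, weight, category).
INDICATORS = [
    ("citations", 30, "research"),
    ("research_income", 10, "research"),
    ("academic_reputation", 25, "research_dependent"),
    ("staff_student_ratio", 25, "other"),
    ("employer_reputation", 10, "other"),
]

def share(indicators, categories):
    """Sum the weights (in percent) of indicators whose category is in `categories`."""
    return sum(weight for _, weight, category in indicators if category in categories)

direct = share(INDICATORS, {"research"})
combined = share(INDICATORS, {"research", "research_dependent"})

print(f"direct research weight: {direct}%")
print(f"research plus research-dependent weight: {combined}%")
```

With these assumed weights, the directly research-based indicators account for 40% of the total, but the figure rises to 65% once the reputation indicator is treated as research-dependent.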

Rankings and research assessment policy and practice

Connections between global university rankings and research assessment can be considered in terms of: (i) whether there is a direct relationship whereby rankings have been drivers of research evaluation; or (ii) whether rankings and research assessment have an indirect association, with the former being an influencer of the latter.

Evidence in the first instance is relatively slim. Systematic research evaluation pre-dated rankings in many instances. The UK Research Assessment Exercise originated in 1986, with other evaluations in Australia and Italy following and building on the UK’s approach (Geuna and Piolatto, 2016, p 264; Hicks, 2012, p 251). Research evaluation and assessment in countries such as Argentina, Finland, Japan, the Netherlands, New Zealand, Portugal, South Africa and Spain also predate rankings (Guthrie et al., 2013, pp 51–121; Hicks, 2012, p 252). On the other hand, some governments do use rankings as a simple evaluation tool, especially in countries which have had a weak quality assurance system, for example Malaysia, Nigeria, Indonesia and Tunisia (Hazelkorn, 2015, p 169).

National research evaluations and global rankings differ considerably with respect to data collection, usage, interpretation and format. They may use similar bibliometric sources, but—in contrast to global rankings—national rankings, like national research evaluations, may be able to generate and have access to significant nationally specific data which can involve peer review as an element of the evaluation in terms of review panels etc. State involvement usually requires the participation of HEIs and academics, in return for which some degree of institutional autonomy is granted. This grounds the legitimacy of the exercise, and is integral to qualitative national research evaluations such as the UK’s REF, Australia’s ERA, Canada’s Canada Research Chairs and the Netherlands’ Standard Evaluation Protocol (see Hazelkorn et al., 2013, pp 93–95 for an overview of these and other national research evaluations).

In contrast, global rankings rely on internationally available data which has already been collected, either by the state or by data companies such as Elsevier or Thomson Reuters. Where rankings, such as 'U-Multirank' or 'THE', request institutional data, this usually constitutes a smaller proportion of the overall data set. The academy has occasionally sought to boycott providing data in order to undermine rankings; notable examples are in Canada with Maclean’s (Alphonso, 2006), in the US with USNWR (Jaschik, 2007), and League of European Research Universities with regard to U-Multirank (Grove, 2013). Similar action is perhaps less likely in a national, governmental process, especially if connected with funding allocation.

Global rankings and national research evaluation also differ with respect to format. Whereas rankings reduce complex and myriad activities of HEIs to a single number, research evaluations tend to present a more nuanced, complex picture. On the other hand, it can be argued that once research evaluation results are communicated, media companies, which are the primary producers of rankings, can be quick to convert research evaluations into a ranking format. Examples include the UK’s 'REF' (Jump, 2014; Guardian, 2014), Australia’s Excellence in Research for Australia (ERA) (AEN, 2015), and Italy’s VQR (Abramo and D’Angelo, 2015). These are suggestive of a desire, stoked or encouraged by discussion in the media (Footnote 11), for the simplicity of rankings, rather than fidelity to the complexity of university research that government research assessments aim to deliver.

While rankings did not 'originate' research evaluation, there is evidence that global rankings have subsequently influenced such assessments, and their successors. The strongest influence of rankings is on national policy, evidenced by the multiplicity of excellence initiatives, arguably the latest manifestation of a broader research evaluation discourse. The term is associated with the German Excellence Initiative of 2006, although Chinese, Canadian, Japanese and South Korean initiatives predate the actual birth of global rankings. Such initiatives have been adopted by over thirty countries around the world. That they are influenced by rankings is suggested by the 'dramatic increase in excellence initiatives since the publication of the Shanghai ARWU and THE global rankings in 2003 and 2004, respectively, reflecting the growing interest of national governments in the development of world-class universities' (WCU) (Salmi, 2016, p 217). Many of them state openly their objective to improve the standing of universities within the rankings and/or use the indicators of rankings. For example, the Russian 5–100 plan, as its name suggests, intends to get five universities into the top-100 by 2020 (Grove, 2015), while Nigeria aims to have “at least two institutions among the top 200 universities in the world rankings by 2020 – the so-called 2/200/2020 vision” (Okebukola, 2010; see further examples in Hazelkorn, 2015, pp 173–178, 181–198). While these initiatives tend to focus on building up WCUs, they are predominantly about research capacity because in the global knowledge economy the assumption is that research (production of intellectual property and commercialisation into new goods and services) matters more than teaching.

The exact nature of the relationship between global rankings and different policies remains difficult to pin down. Explicit mentions of rankings in policy are rare, although off-the-record conversations or speeches are not. One such explicit mention is in the Stern review of the Research Excellence Framework (REF), which asked '[h]ow do such effects of the REF compare with effects of other drivers in the system (e.g., success for […] universities in global rankings)?' (BIS, 2016, p 4). It is also worth noting that excellence initiatives (such as the Chinese 211 and 985) often contain indicators that are nowhere to be found in any of the rankings, e.g., interdisciplinarity. This may be a result of balancing the needs of different constituencies within a policy context in order to maintain legitimacy, and resisting the appearance that a public system is the dog-being-wagged-by-the-tail of private ranking companies. Whatever the reason, rankings feature implicitly rather than prominently, helping to shape the policy discourse by playing a powerful hegemonic function (see Hazelkorn, 2015, pp 11–15). Lim and Oergberg (2016, p 2) refer to rankings as an 'accelerator' of higher education reform. As such, rankings are a prominent part of a 'policy assemblage' through which a 'mix of policies, steering technologies, discursive elements, human and social agents, among others, that constitute the spaces of reform in higher education in which rankings are employed' can be understood (ibid., p 4). A similar effect has been described by HE leaders, one of whom declared that rankings 'allows management to be more business-like'; not so much a management tool but 'a rod for management’s back' (quoted in Hazelkorn, 2015, p 107).

One sees this manifested in different countries, through the often-adventitious use of rankings by policymakers. In Denmark, successive Prime Ministers have referred to rankings to call for improved performance and international competitiveness. In 2009, Prime Minister Lars Løkke Rasmussen declared that Denmark should have one HEI in the European top ten of the 'THE-QS', a desire subsequently complicated by those two companies going their separate ways to create two different rankings in following years (Lim and Oergberg, 2016, pp 5–6). Separately, Danish immigration policy had proposed using rankings as a means of ascertaining the quality of higher education attainment of applicants in the migration system, with points given if their degree had been granted by a HEI in the top-100 of the 'THE-QS' or 'ARWU' (Hazelkorn, 2013b). Elsewhere in Europe, while Germany has had a national ranking since 1998 with the 'CHE Hochschulranking', the history of its universities, and assumptions about their quality, were severely tested by the advent of global rankings. By 2005 the first excellence initiative was launched 'in an effort to reclaim Germany’s historic leadership position in research' as a response to this rude awakening prompted by global rankings (Hazelkorn, 2015, pp 181–182). Japan similarly had a number of national rankings, but it was the advent of global rankings that highlighted areas of risk, e.g., weak levels of internationalisation and competition from China as an educational hub in east-Asia, prompting a period of national reform in higher education. The importance of increasing incoming international students and concentrating 'excellence' in global research centres has formed a key part of government policy; the correlation of both factors with global rankings has not been accidental (ibid., pp 188–191).

Implications for higher education research and development

Once research is seen to have value and impact beyond the academy, there are implications for the organisation and management of research, which research is funded, how it is measured and by whom. What is the balance between HEIs producing a cohort of skilled knowledge workers who can catalyse the adoption of research, conduct further research and be smart citizens able to engage actively in civil society vs. HEIs as producers of new knowledge and innovations to underpin economic growth and competitiveness? What is the balance between researcher curiosity vs. national priorities, and between selectivity (funding excellence wherever it exists) vs. concentration (targeted funding to strengthen capability and build critical-mass)? If the former, policy decisions might spotlight the importance of research in HEIs for undergraduate as well as post-graduate students, and support and encourage new and emerging fields rather than prioritising research students and existing strengths. Likewise, the emphasis might be on supporting university-based research to enhance the learning environment rather than building-up independent centres and/or research-enterprise organisations which can have a tendency to over-rely on narrowly defined technology-driven innovation (BIAC, 2008; Lundvall, 2002; Arai et al., 2007). In contrast, if the latter, research assessment might prioritise relevance, alignment with national and/or regional objectives. It might be even more instrumentalist in terms of emphasising particular fields of science, and specify particular outcomes and impacts, such as patents, licences, high performance start-ups, etc.

Assessment methodologies can similarly send important signals. There are, for example, differences between whether emphasis is placed on peer review vs. stakeholder esteem, and on whether impact and benefit is assessed in terms of knowledge, business and enterprise or society. Defining and inserting the “end user” into the process has become increasingly important, not just as a reviewer or assessor of the outcome but as an active contributor to the origination and design of the research idea, methodology and process. These ideas have been around for a while (see Gibbons et al., 1994) but have now been taken up by the EU via its responsible research and innovation strategy (EC, n.d.).

This discussion underlines the mutual inter-dependency between higher education and research eco-systems and society, whereby emphasis on and/or changes to one part of the system independently of the other can produce adverse and unintended results across the whole eco-system. In the rush to harness higher education and university-based research to economic recovery and growth, often unrealistic expectations are placed on higher education on the basis that it can and should produce more spin-off companies, direct jobs, patents with licensing income etc. (as per Baker’s [2014] notion of HEIs as 'secondary institutions' noted above). Few HEIs do this very well because the output rarely happens at the level of expected success. In fact, “research may now be emerging as the enemy of higher education rather than its complement” (Boulton, 2010, p 6). While it is not wrong to develop an infrastructure that supports this activity, it is unlikely to produce miracles in most cases. Instead, attention should be placed on research-related education and the overall capacity and capability of the higher education and research eco-system.

Rankings have had an impact on the way in which we understand the higher education and research ecosystem, by effectively proclaiming that some knowledge is more important than other knowledge. This comes about by using methodologies which benefit the sciences, and as a result, insufficient attention is being given to the arts, humanities and social sciences (Hazelkorn, 2015; Benneworth et al., 2017). New research fields and inter-disciplinary research are also under-represented. Heavy reliance is placed on that which can be easily measured rather than what may be novel or innovative. At the same time, emphasis is put on research which has global impact in terms of citation count rather than research which may feature in national goals or policy-related publications, or which may have regional or culturally relevant outcomes. By measuring 'impact' in terms of interactions between academics, rankings undermine the wider social and economic value and benefit of research. There is a breadth of outputs and outcomes, hidden beneath the journal article and citation iceberg, and effectively discounted; these derive from and include research which benefits society and underpins social, economic and technological innovation in its broadest sense. Instead, rankings entrench a traditional understanding of knowledge production and traditional forms of research activity. This in turn reinforces a simplistic 'science-push' view of innovation, and the view that research achieves accountability within the academy rather than within society (Hazelkorn, 2009).

The problem with rankings is not simply the prioritisation of research or the focus on particular scientific disciplines. It is not a question of whether 'ARWU' is more scientifically rigorous than either 'THE' or 'QS', the latter coming in for considerable criticism as bad social science (Marginson, 2014). Rather, the way in which rankings conceptualise, measure and assess scientific endeavour runs counter to everything we have learned about the dynamism of the research and innovation eco-system. Instead of knowledge being (co)produced with an array of other 'knowledge creators' and with value to society, rankings perpetuate a traditional, elite view of the academy standing aloof, pushing out knowledge in a uni-directional flow. Fixated on the basic/fundamental end of the research spectrum as the only plausible type and measure of knowledge, they fail to recognise and account for the fact that the process of discovery is truly dynamic, inclusive of curiosity-driven and user-led, and blue-sky and practice-based research (Hazelkorn, 2014b).

There are difficulties also with the way in which rankings conceptualise impact in terms of a singular focus on citations. Instead, there is a need to go beyond direct 'tangible' impact (e.g., output, outcome) to include a much broader range of impact parameters, including scientific human capital, skill sets, etc. This includes outcomes and consequences emerging from collaborative team working, and from learning and knowledge exchanges, that underpin open innovation processes which emerge from and co-exist with educational and pedagogical processes. This learning dynamic is the fundamental role for higher education, or that which puts the 'higher' into higher education. Consideration should also distinguish between short-term and long-term, between planned and unplanned, and between intended and unintended impact. Some of these problems derive from reliance on traditional bibliometric indicators, and the fact that bibliometrics offers a relatively simple codification of research activity. By measuring 'knowledge production and dissemination in limited fields and in traditionalist ways, they provide competitive advantage to elite universities and nations which benefit from accumulated public and/or private wealth and investment' (Hazelkorn, 2016), rankings take on all the attributes of the 'gate-keeper' they arguably sought to challenge. There is added significance because most rankings are commercial operations.

Conclusion

Ironically, at a time when society is increasingly reliant on higher education to play a major role directly through the quality of graduates and indirectly through scientific endeavour, the indicators of quality and excellence prominent in rankings are promoting the virtues of the self-serving, resource-intensive WCU. The question of what the academy can do for its country is being replaced by what the university can do for the world—or the global elite. The more the WCU engages globally, recruits talent internationally, and is unfettered by national boundaries and societal demands, the more it wins praise. The WCU has been encouraged to lose its 'sense of territorial identity and […] ties to local and regional public support for […] educational, research and civic missions' as it seeks global recognition (Christopherson et al., 2014, p 4; Marginson, 2013, p 59). However, the academy is not an innocent bystander in this process, as its core values are wrapped up in the academic-professional practices discussed above. Because competing in the global reputation race is costly, many governments are aggressively restructuring their systems, in the belief that elite research universities have a bigger impact on society and the economy, or have higher quality.

There are emergent alternatives to this reputation race (see Hazelkorn, 2015, pp 214–227). One is found in Douglas’s (2016) formulation of the 'flagship university', in explicit opposition to the self-serving WCU. Douglas describes the flagship institutions by referencing the history of the US public university, conceived as comprehensive institutions rather than simply polytechnics, and with a tripartite mission of teaching, research, and public service. A related concept is the 'civic university' (Goddard et al., 2016) which, like the flagship university, has a history long predating the advent of rankings and the WCU. It formed the foundation of an expansion of higher education in the north and midlands of Britain in the Victorian period, serving a purpose similar to the land grant universities of the US which Douglas’s idea also draws upon. Similarly, the civic university amplifies the public mission of higher education, setting up a bulwark against the innate propensity for HEIs to be concerned with their reputation, given that 'prestige is to higher education as profit is to corporations' (Toma quoted in Crow and Dabars, 2015, p 123). In both of these conceptualisations of the university, there are on-going tensions between national and globally predicated forms of excellence.

Another alternative to the WCU takes a more macro perspective, by suggesting a 'world-class system' (WCS) that seeks to maximise the benefit and collective impact for society overall (Lane and Johnstone, 2013). In the age of massification, the research university is no longer the sole provider of new ideas or innovation; indeed, as more graduates are produced, there are more alternative sites of knowledge production (Gibbons et al., 1994). Open innovation involves multiple partners inside and outside the organisation; boundaries are permeable and innovations can easily transfer inward and outward. The WCS recognises this, emphasising mission differentiation with respect to field specialisation. Different institutions serve as knowledge producers aligned to their expertise and regional/national capacity. Accordingly, the higher education and research eco-system is enriched by having a diverse set of HEIs, interacting with other educational providers, to provide a wide range of differentiated outputs, outcomes, impacts and benefits for society overall. Importantly, the concept of the WCS implicitly notes the rhetorical power of the WCU discourse, and accepts that rankings and discourses of reputation and prestige are not going away. However, it turns the discourse on its head: such a policy paradigm runs counter to rankings, which focus on hierarchical or vertical rather than horizontal differentiation. With distinctive missions, HEIs complement each other in order to maximise capacity beyond individual capability (see Hazelkorn, 2015, pp 216–227).

This article set out to discuss some interrelating threads of the globalisation of knowledge, rankings and research quality, assessment and policy, and their implications for HEIs. There are multiple overlapping and conflicting priorities and responsibilities for the state and HEIs, and this article has given an overview of these. Rankings have decisively changed the nature of the conversation around higher education, and how higher education is discussed by government, in policy, and through the media (see also Hazelkorn, 2017). The years since their appearance at the turn of the millennium have shown rankings’ staying power, as an instrument for assessing university activities but also as a signifier of higher education’s importance for national prestige and competitiveness. The conflict between the national and global that rankings have exposed has not been resolved, but there are responses developing that go some way to mitigating the excesses of this tension.

Data availability

All data generated or analysed during this study are included in this published article.

Notes

One can say re-emerged here, as after World War II there was some attempt to assert civilian, professional academic values in fundamental research (Geiger 1993, pp 332–333). Universities nevertheless remained key to the US federal research agenda, in a manner probably not paralleled in other countries where the “national infrastructure for research and development consists of public and private research laboratories that are largely disconnected from universities” (Vest, 2007, p 25).

This had also been the target for 2010 as set out in the Lisbon Strategy, but Europe’s R&D expenditure as a percentage of GDP didn’t increase between 2000 and 2008, even before the Global Financial Crisis (EC, 2010b, p 13).

A caveat here is, of course, that there have long been international scholarly connections, e.g., in terms of Victorian globalisation which saw the relations between the institutions of the 'settler colonies' of the British Empire and home universities creating a 'British academic world' beyond the British Isles (Pietsch, 2013). Nevertheless, the novel aspect in this new phase of academic globalisation is how structural it is, with research projects and funding programmes as well as researchers now transcending borders.

Baker distinguishes between strong primary institutions which effect change (i.e., they are active), and secondary institutions which are affected by change with external causes (reactive). One could thus distinguish between higher education as a primary institution in terms of research and international knowledge circulation and the effects this has on globalisation, but also as a secondary institution in terms of 'internationalisation' of students and staff.

Who take these rankings at face value (Saisana et al., 2011).

References

Abramo G, D’Angelo CA (2015) The VQR, Italy’s Second National Research Assessment: Methodological Failures and Ranking Distortions. Journal of the Association for Information Science and Technology 66(11):2202–2214

Adler NJ, Harzing AW (2009) When knowledge wins: Transcending the sense and nonsense of academic rankings. Acad Manag Learn Educ 8(1):72–95. https://doi.org/10.5465/AMLE.2009.37012181

Australian Excellence Network (AEN) (2015) ERA research excellence rankings. http://www.universityrankings.com.au/research-excellence-rankings.html

Alphonso C (2006) Universities boycott Maclean’s rankings. In: The globe and mail. http://www.theglobeandmail.com/news/national/universities-boycott-macleans-rankings/article1102197/. Accessed 14 Oct 2016

Altbach PG (2006) The dilemmas of ranking, International Higher Education, 42 https://ejournals.bc.edu/ojs/index.php/ihe/article/download/7878/7029. Accessed 2 Sept 2016

Altbach PG (2012) The globalization of college and university rankings. Change: The Magazine of Higher Learning 44(1):26–31. http://eric.ed.gov/?id=EJ953463. Accessed 2 Sept 2016

Arai K, Cech T, Chameau J-L, Horn P, Mattaj I, Potocnik J, Wiley John (2007) The future of research universities. Is the model of research-intensive universities still valid at the beginning of the twenty-first century? EMBO Rep 8(9):804–810

Audretsch DB (2000) Knowledge, globalization, and regions. In: Dunning JH (ed) Regions, globalization, and the knowledge-based economy. Oxford University Press, Oxford, p 63–81

Baker DP (2014) The schooled society: The educational transformation of global culture. Stanford University Press, Stanford, CA

Becher T, Trowler PR (2001) Academic tribes and territories, 2nd ed., SRHE/Open University Press, Buckingham

Becker GS (1993) Human capital: A theoretical and empirical analysis, with special reference to education. 3rd ed., University of Chicago Press, Chicago

Benneworth P, Gulbrandsen M, Hazelkorn E (2017) The impact and future of arts and humanities research. Palgrave Macmillan, Basingstoke

Business and Industry Advisory Committee (BIAC) to the OECD (2008) Comments on the OECD project on trade, innovation and growth. Organisation for Economic Cooperation and Development, Paris

BIS (2016) Lord Stern’s review of the research excellence framework call for evidence. Department for Business, Innovation and Skills, London. https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/500114/ind-16-1-ref-review-call-for-evidence.pdf. Accessed 2 Sept 2016

Black J (2014) The power of knowledge: how information and technology made the modern world. Yale University Press, New Haven, CT

Boulton G (2010) University Rankings: Diversity, Excellence and the European Initiative. Advice Paper, 3, League of European Research Universities (LERU) http://www.leru.org/files/publications/LERU_AP3_2010_Ranking.pdf. Accessed 16 June 2010

Bush V (1945) Science, the Endless Frontier. United States Government Printing Office, Washington DC. https://www.nsf.gov/od/lpa/nsf50/vbush1945.htm

Cantwell B (2016) The geopolitics of the education market. In: Hazelkorn E (ed) Global rankings and the geopolitics of higher education. Routledge, Abingdon, pp 309–323

Camera L (2016) Democrats Look to Increase Federal Role in College Accreditation, US News and World Report http://www.usnews.com/news/articles/2016-09-22/democrats-look-to-increase-federal-role-in-college-accreditation Accessed 30 Sept 2016

Carayannis EG, Rakhmatullin R (2014) The quadruple/quintuple innovation helixes and smart specialisation strategies for sustainable and inclusive growth in Europe and beyond. J Knowl Econ 5(2):212–239. https://doi.org/10.1007/s13132-014-0185-8

Castell M (2011) The crisis of global capitalism: toward a new economic culture? In: Calhoun C, Derluguian G (eds) Business as usual: The roots of the global financial meltdown. SSRC and NYU Press, New York, NY, pp 185–210

Center for Science and Technology Studies (CWTS) (2016) Computions and Bibliometric Indicators Information source: bibliographic records of research publications and patents. http://www.umultirank.org/cms/wp-content/uploads/2016/03/Bibliometric-Analysis-in-U-Multirank-2016.pdf. Accessed 2 Sept 2016

Cherney A (2013) Academic-industry collaborations and knowledge co-production in the social sciences. J Sociol 51(4):1003–1016. https://doi.org/10.1177/1440783313492237

Christopherson S, Gertler M, Gray M (2014) Universities in crisis. Camb J Reg Econ Soc 7(2):209–215

Cloete N, Langa P, Nakayiwa-Mayega F, Ssembatya V, Wangenge-Ouma G, Moja T (2016) Rankings in Africa: Important, interesting, irritating, or irrelevant? In: Hazelkorn E (ed) Global Rankings and the Geopolitics of Higher Education: Understanding the influence and impact of rankings on higher education, policy and society. Routledge, London, pp 128–143

Coates H (2016) Reporting alternatives: Future transparency mechanisms for higher education. In: Hazelkorn E (ed) Global rankings and the geopolitics of higher education. Routledge, Abingdon, pp 277–294

Cox M (2012) Power shifts, economic change and the decline of the west? Int Rel 26(4):369–388. https://doi.org/10.1177/0047117812461336

CPPP (2009) On the November ballot – proposition 4: Creating more tier one universities in Texas. Centre for Public Policy Priorities, Austin, TX, http://library.cppp.org/files/2/415_TierOne.pdf. Accessed 2 Sept 2016

Crow M, Dabars WM (2015) Designing the New American University. Johns Hopkins University Press, Baltimore, MD

Dahler-Larsen P (2007) Evaluation and public management. In: Ferlie E, Lynn Jr. LE, Pollitt C (eds) The Oxford Handbook of Public Management (pp 615–639). Oxford University Press, Oxford. https://doi.org/10.1093/oxfordhb/9780199226443.003.0027

Dahler-Larsen P (2011) The Evaluation Society. Stanford University Press, Stanford

Dahler-Larsen P (2015) The evaluation society: critique, contestability and skepticism. Spazio Filosofico 13:21–36

Dale R (2005) Globalisation, knowledge economy and comparative education. Comp Educ 41(2):117–149. https://doi.org/10.1080/03050060500150906

Davenport E, Snyder H (1995) Who cites women? Whom do women cite?: An exploration of gender and scholarly citation in sociology. J Doc 51(4):404–410. http://www.emeraldinsight.com/journals.htm?articleid=1650200&show=abstract. Accessed 2 Sept 2016

Delanty G (2002) Governance of universities. Can J Sociol 27(2):185–198

Dill DD, Beerkens M (eds) (2010) Public Policy for Academic Quality: Analyses of Innovative Policy Instruments. Springer, Dordrecht

Douglas JA (ed) (2016) The New Flagship University: Changing the paradigm from global ranking to national relevancy. Palgrave Macmillan, Basingstoke

Drezner D (2014a) The system worked: global economic governance during the great recession. World Politi 66(66):123–164. doi:10.1017/

Drezner D (2014b) The system worked: How the world stopped another great depression. Oxford University Press, Oxford

Dunning JH (1999) Globalization and the knowledge economy. In: Dunning JH (ed) Regions, globalization, and the knowledge-based economy. Oxford University Press, Oxford, p 7–41

Eaton JS (2011) U. S. accreditation: meeting the challenges of accountability and student achievement. High Educ 1:1–20

Eaton JS (2016) The quest for quality and the role, impact and influence of supra-national organisations. In: Hazelkorn E (ed) Global Rankings and the Geopolitics of Higher Education. Routledge, Abingdon, p 324–338

European Commission (EC) (2006) Delivering on the modernisation agenda for universities: Education, research and innovation (COM(2006) 208 final). European Commission, Brussels

European Commission (EC) (2010a) Europe 2020: A strategy for smart, sustainable and inclusive growth (COM(2010) 2020 final). European Commission, Brussels, http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=COM:2010:2020:FIN:EN:PDF. Accessed 30 Aug 2016

European Commission (EC) (2010b) Lisbon Strategy Evaluation Document (SEC(2010) 114 final). European Commission, Brussels

European Commission (EC) (2012) Guide to research and innovation strategies for smart specialisations (RIS3). European Union, Brussels

European Commission (EC) (n.d.) Responsible research and innovation. https://ec.europa.eu/programmes/horizon2020/en/h2020-section/responsible-research-innovation. Accessed 11 Oct 2016

European Association for Quality Assurance (ENQA) in Europe (2005) Standards and Guidelines for quality assurance in the European higher education area. http://www.iheqn.ie/_fileupload/Publications/ENQABergenReport_tabfig43799078.pdf. Accessed 26 Aug 2011

ESF (2012) Evaluation in research and research funding organisations: European practices. European Science Foundation, Strasbourg

Etzkowitz H, Leydesdorff L (1999) The future location of research and technology transfer. J Technol Transf 24(2):111–123. https://doi.org/10.1023/a:1007807302841

Etzkowitz H, Webster A, Gebhardt C, Terra BRC (2000) The future of the university and the university of the future: evolution of ivory tower to entrepreneurial paradigm. Res Policy 29(2):313–330. https://doi.org/10.1016/S0048-7333(99)00069-4

European Universities Association (EUA) (2014) The role of universities in Smart Specialisation Strategies. European Universities Association, Brussels, http://www.eua.be/Libraries/publication/EUA_Seville_Report_web.pdf?sfvrsn=0. Accessed 14 Oct 2016

Eurostat (2015) Tertiary education statistics. http://ec.europa.eu/eurostat/statistics-explained/index.php/Tertiary_education_statistics#Teaching_staff_and_student.E2.80.93academic_staff_ratios. Accessed 2 Sept 2016

Ferlie E, Musselin C, Andresani G (2008) The steering of higher education systems: a public management perspective. High Educ 56(3):325–348

Geiger RL (1993) Research and relevant knowledge: American research universities since world war II. Oxford University Press, Oxford

Geuna A, Piolatto M (2016) Research assessment in the UK and Italy: Costly and difficult, but probably worth it (at least for a while). Res Policy 45(1):260–271. https://doi.org/10.1016/j.respol.2015.09.004

Gibbons M, Limoges C, Nowotny H, Schwartzman S, Scott P, Trow M (1994) The new production of knowledge: The dynamics of science and research in contemporary societies. SAGE, London

Gibson AG, Hazelkorn E (2017) Arts and humanities research, redefining public benefit, and research prioritization in Ireland. Research Evaluation 26(3):199–210

Goddard J, Hazelkorn E, Kempton L, Vallance P (2016) The Civic University: The policy and leadership challenges. Edward Elgar, Cheltenham

Gornitzka Å, Maassen P, Olsen JP, Stensaker B (2007) “Europe of Knowledge”: Search for a new pact. In: Maassen P, Olsen JP (eds) University Dynamics and European Integration. Springer, Dordrecht, pp 181–214. http://link.springer.com/content/pdf/10.1007/978-1-4020-5971-1_9.pdf

Grove J (2013) Leru pulls out of EU’s U-Multirank scheme. Times Higher Education. https://www.timeshighereducation.com/news/leru-pulls-out-of-eus-u-multirank-scheme/2001361.article. Accessed 14 Oct 2016

Grove J (2015) Russia’s universities: rebuilding ‘collapsed stars’. Times Higher Education. https://www.timeshighereducation.com/features/russias-universities-rebuilding-collapsed-stars/2018006.article. Accessed 23 Oct 2016

Guardian (2014) University research excellence framework 2014 – the full rankings, the Guardian Higher Education Network Datablog. http://www.theguardian.com/news/datablog/ng-interactive/2014/dec/18/university-research-excellence-framework-2014-full-rankings. Accessed 2 Sept 2016

Guston DH (2000) Between Politics and Science: Assuring the Integrity and Productivity of Research. Cambridge University Press, Cambridge

Guthrie S, Wamae W, Diepeveen S, Wooding S, Grant J (2013) Measuring research: A guide to research evaluation frameworks and tools. RAND, Cambridge, http://www.rand.org/pubs/monographs/MG1217.html. Accessed 2 Sept 2016

Harman G (2011) Competitors of rankings: new directions in quality assurance and accountability. In: Shin JC, Toutkoushian RK, Teichler U (eds) University rankings: Theoretical basis, methodology and impacts on global higher education. Springer, Dordrecht, p 35–54

Hazelkorn E (2009) The impact of global rankings on higher education research and the production of knowledge, UNESCO Forum on Higher Education, Research and Knowledge Occasional Paper N°16. http://unesdoc.unesco.org/images/0018/001816/181653e.pdf. Accessed 26 May 2017

Hazelkorn E (2012a) European “transparency instruments”: Driving the modernisation of European higher education. In: Curaj A, Scott P, Vlăsceanu L, Wilson L (eds) European higher education at the crossroads: between the Bologna Process and national reforms, vol 1. Springer, Dordrecht

Hazelkorn E (2012b) Understanding rankings and the alternatives: implications for higher education. In: Bergan S, Egron-Polak E, Kohler J, Purser L, Vukasović M (eds) Handbook Internationalisation of European Higher Education. Raabe Verlag, Stuttgart, p A2.1–5

Hazelkorn E (2013a) Has higher education lost control over quality? In: Chronicle of higher education. Washington D.C. http://chronicle.com/blogs/worldwise/has-higher-education-lost-control-over-quality/32321. Accessed 17 Aug 2016

Hazelkorn E (2013b) Reflections on a decade of global rankings: what we’ve learned and outstanding issues. Beiträge zur Hochschulforschung 2:8–33

Hazelkorn E, Ryan M, Gibson A, Ward E (2013) Recognising the Value of the Arts and Humanities in a Time of Austerity. Dublin. http://arrow.dit.ie/cserrep/42

Hazelkorn E (2014a) Making an impact: New directions for arts and humanities research. Arts Humanit High Educ 14(1):25–44. https://doi.org/10.1177/1474022214533891

Hazelkorn E (2014b) Rankings and the reconstruction of knowledge in the age of austerity. In: Fadeeva Z, Galkute L, Madder C, Scott G (eds) Assessment for sustainable transformation: Redefining quality of higher education. Palgrave Macmillan, Basingstoke, p 25–48

Hazelkorn E (2015) Rankings and the reshaping of higher education: The battle for world-class excellence, 2nd ed. Palgrave Macmillan, Basingstoke

Hazelkorn E (2016) Introduction: the geopolitics of rankings. In: Hazelkorn E (ed) Global rankings and the geopolitics of higher education. Routledge, Abingdon, p 1–20

Hazelkorn E (2017) Rankings and higher education: reframing relationships with and between states, Burton R Clark Annual Lecture, CGHE Working Paper 19. http://www.researchcghe.org/perch/resources/publications/wp19.pdf; http://www.universityworldnews.com/article.php?story=20170508230619552

Henry M, Lingard B, Rizvi F, Taylor S (2001) The OECD, Globalisation and Education Policy. Pergamon, Oxford

Hicks D (2012) Performance-based university research funding systems. Res Policy 41(2):251–261. https://doi.org/10.1016/j.respol.2011.09.007

Huang F (2007) Internationalization of higher education in the developing and emerging countries: a focus on transnational higher education in Asia. J Stud Int Educ 11(3–4):421–432. https://doi.org/10.1177/1028315307303919

Inglehart RF, Norris P (2016) Trump, Brexit, and the rise of populism: Economic have-nots and cultural backlash, Faculty Research Working Paper Series. Harvard Kennedy School of Government, Cambridge, MA

Jaschik S (2007) Battle lines on ‘U.S. News’. Inside Higher Ed. https://www.insidehighered.com/news/2007/05/07/usnews. Accessed 14 Oct 2016

Jump P (2014) REF 2014 results: table of excellence. Times Higher Education, 18 December. https://www.timeshighereducation.com/news/ref-2014-results-table-of-excellence/2017590.article. Accessed 2 Sept 2016

Kwiek M (2015) The internationalization of research in Europe: A quantitative study of 11 national systems from a micro-level perspective. J Stud Int Educ 19(4):341–359. https://doi.org/10.1177/1028315315572898

Lane J, Johnstone DB (eds) (2013) Higher education systems 3.0: Harnessing systemness, delivering performance. SUNY Press, New York, NY

Lane J, Johnstone DB (eds) (2012) Universities and colleges as economic drivers: Measuring higher education's role in economic development. SUNY Press, New York, NY

Lim MA, Oerberg JW (2016) Active instruments: on the use of university rankings in developing national systems of higher education. Policy Reviews in Higher Education

Lundvall B-Å (2002) Growth, innovation and social cohesion: The Danish model. Edward Elgar Publishing, London

Maassen P, Stensaker B (2011) The knowledge triangle. European higher education policy logics and policy implications. High Educ 61(6):757–769.

Marginson S (2008) The Knowledge Economy and the Potentials of the Global Public Sphere. Paper to the Beijing Forum, 7–9 November. http://www.cshe.unimelb.edu.au/people/staff_pages/Marginson/Beijing%20Forum%202008%20Simon%20Marginson.pdf. Accessed 8 Oct 2010

Marginson S (2013) Nation-states, educational traditions and the WCU project. In: Shin JC, Kehm BM (eds) Institutionalization of world-class university in global competition. Springer, Dordrecht, p 59–77

Marginson S (2014) University rankings and social science. Eur J Educ 49(1):45–59. https://doi.org/10.1111/ejed.12061

Marginson S, van der Wende M (2007) Globalisation and higher education, OECD Education Working Papers No. 8. Organisation for Economic Cooperation and Development, Paris

Middlehurst R (2016) UK teaching quality under the microscope: What are the drivers? Int High Educ 84:27–29

Morgan B (2014) Research impact: Income for outcome. Nature 511(7510):S72–S75. https://doi.org/10.1038/511S72a

Moyo D (2009) Dead aid. Penguin, London

Narula R (2003) Globalization and technology: Interdependence, innovation systems and industrial policy. Polity Press, Oxford

Nayyar D (2002) The existing system and the missing institutions. In: Nayyar D (ed) Governing globalization: Issues and institutions. Oxford University Press, Oxford, p 356–384

Neave G (2012) The evaluative state, institutional autonomy and re-engineering higher education in Western Europe: The prince and his pleasure. Palgrave Macmillan, Basingstoke

Nelson LA (2011) Examining the AAU gatekeepers. In: Chronicle of Higher Education, 11 May. https://www.insidehighered.com/news/2011/05/11/remaining_60_institutions_mull_aau_criteria_after_syracuse_nebraska_leave. Accessed 2 Sept

Ngok K, Guo W (2008) The quest for world class universities in China: Critical reflections. Policy Futur Educ. https://doi.org/10.2304/pfie.2008.6.5.545

Nye DE (2006) Technology matters: Questions to live with. MIT Press, London

O’Connor J (1973) The fiscal crisis of the state. Transaction Publishers, Piscataway, NJ

OECD (1996) The Knowledge-Based Economy. Organisation for Economic Cooperation and Development, Paris

OECD (2010) Performance-based funding for public research in tertiary education institutions. Organisation for Economic Co-operation and Development, Paris. https://doi.org/10.1787/9789264094611-en

OECD (2013) Assessment of higher education learning outcomes (AHELO), Feasibility Study Report Volume 1 - executive summary. Organisation for Economic Cooperation and Development, Paris

OECD (2014) Shifting gear: Policy challenges for the next 50 years. OECD Economics Department Policy Notes, No. 24, vol 24. Organisation for Economic Cooperation and Development, Paris, p 17