Abstract

Semiarid rangelands are identified as at high risk of degradation due to anthropogenic pressure and climate change. Through tracking timelines of degradation we aimed to identify whether degradation results from a loss of resistance to environmental shocks, or loss of recovery, both of which are important prerequisites for restoration. Here we combined extensive field surveys with remote sensing data to explore whether long-term changes in grazing potential demonstrate loss of resistance (ability to maintain function despite pressure) or loss of recovery (ability to recover following shocks). To monitor degradation, we created a bare ground index: a measure of grazeable vegetation cover visible in satellite imagery, allowing for machine learning based image classification. We found that locations that ended up the most degraded tended to decline in condition more during years of widespread degradation but maintained their recovery potential. These results suggest that resilience in rangelands is lost through declines in resistance, rather than loss of recovery potential. We show that the long-term rate of degradation correlates negatively with rainfall and positively with human population and livestock density, and conclude that sensitive land and grazing management could enable restoration of degraded landscapes, given their retained ability to recover.

Introduction

Covering 47% of the terrestrial surface, rangelands are home to one third of the global population, many of whom are pastoralists who depend on rangelands to meet their daily need for shelter, water and food1,2,3. Rangelands are also home to diverse ecosystems, including iconic wilderness areas such as the Serengeti and Ngorongoro. Because rangelands develop in semi-arid areas and are primarily used for grazing, they are often perceived as highly vulnerable to changes in rainfall and anthropogenic pressures4,5. With evidence of growing loss and degradation within rangelands and other semi-arid regions, the UN established the Convention to Combat Desertification (UNCCD) in 19966. Although a primary concern that led to the UNCCD was a series of Sahelian droughts that have now ended, concern about loss of rangelands and increasing degradation among the remaining rangeland areas has continued7,8. If we are to effectively combat degradation within rangelands, it is important that we understand the drivers and mechanisms by which degradation occurs.

The UNCCD identifies Africa as particularly vulnerable, estimating that land degradation is affecting more than half the continent’s population9. Here, rangelands are synonymous with savanna, a biome defined by the presence of C4 grasses, generally occurring in regions with rainfall between 450 mm and 1500 mm per year and often maintained by fire10,11,12. The savanna biome encompasses several habitats, from open grasslands to deciduous woodlands. A key aspect of savanna ecosystems is their high temporal and spatial heterogeneity, a factor that necessitates mobility in human and wildlife populations to exploit patchy resources13,14. Societal and land use constraints limit the ability of populations to move when conditions become temporarily unsuitable, and sustained grazing alters the dynamics of savannas, reducing their ability to sustain grazing15,16.

Heavy, year-round grazing in savannas reduces grazing potential (i.e. quantity of vegetation palatable to grazers) through two pathways that result in either land invaded by toxic and unpalatable plants, including bush encroachment8,17, or in bare ground experiencing soil loss18. Rangeland degradation has been defined as a long-term decline in productivity resulting in rangelands unsuitable for grazing (IPBES19), rather than short-term declines driven by temporal variability of environmental conditions (e.g. rainfall, grazing pressure). Such degradation has been linked to rainfall patterns20, and might be exacerbated by climate-change driven changes in annual rainfall variability, already widely observed across African savannas21. At either end of the savanna rainfall gradient, continued precipitation change may interact with pressures like grazing in a way that leads to permanent loss of savannas. Further research into the relationship of these interacting factors with long-term trends of degradation is needed to better understand their importance for sustainable rangeland management.

Degradation and loss of savannas is already a primary driver of poverty and displacement of human populations in Africa. With a rapidly growing human population (averaging a growth rate of 2.2% per year in Africa22), the anthropogenic demands on savannas are growing, while the pressures from climate change are simultaneously mounting3,23. In a recent study, Hill and Guerschman (2020) identified East Africa as a focal point for increases in bare ground cover, and recommended investigating these trends at a finer spatial scale24. In order to meet growing demands from humans and their livestock in the face of potentially deteriorating environmental conditions we must identify how to increase sustainability of savanna use.

Resistance and recovery are two processes that underpin sustainable use of ecological resources25, particularly in environments that normally function within cycles of change. Together, these processes define the ‘resilience’ of a system26. Resistance describes the ability of an ecosystem to continue to maintain function (such as the provision of grazing) despite external pressures, while recovery describes the internal processes that pull a system back towards the pre-disturbed state26. In this context, ‘shocks’ are any events in the environment that lead to reductions in rangeland condition beyond the typical, interannual oscillation around the baseline state of rangeland health. Such events might include extreme droughts, or heavy rain resulting in floods. It is important to distinguish between resistance and recovery because management aimed at increasing recovery might be different to that designed to increase resistance. For example, in rangelands, resistance may be increased by promoting a high diversity of grass species or a particularly beneficial grass community composition27, while recovery may require, in addition, temporal variation in grazing pressure, for example through temporary grazing exclusion28.

It is unclear whether the recent trend in savanna degradation is driven by reduced recovery potential, a decline in resistance, or both29. Observing the long-term trends of rangeland condition, quantified by a degradation index, helps reveal the mechanisms behind eventual degradation of habitats: If degradation is driven by a loss of recovery, areas that become degraded will show the same short-term response (i.e. reduction in rangeland condition) to external shocks as comparable sites, but would be expected to recover more slowly, and potentially insufficiently, before the next shock occurs. Alternatively, if degradation is driven by a loss of resistance, areas that become degraded will show a greater initial response to shocks, and will therefore be less likely to have recovered to pre-shock conditions before another shock occurs, despite similar recovery rates to more resistant areas. Recovery and resistance are not mutually exclusive, and may interact with land management or rainfall conditions to generate different relative impacts in different areas. Quantifying the relative effects of resistance and recovery is important to identify management priorities for savannas29.

The rangelands of Northern Tanzania are typical of many African savannas. They are home to significant populations of pastoralists3,30 and hold globally important wildlife populations31, yet there is widespread concern about their loss and degradation32. Wildlife numbers are falling and poverty is high: degradation has been identified as a key contributor to this problem33 but is not ubiquitous. Across Northern Tanzanian rangelands there is considerable variation in the degree of degradation and in anthropogenic and environmental drivers of degradation. For example, in our study area, rainfall varies from 400 to 900 mm34, human population from 5 to 35 people per km235 and livestock densities up to 250 head of cattle per km236. These landscape conditions are also moderated by a variety of conservation-related land use restrictions. The combination of all of these interacting components makes Northern Tanzania an ideal location to study the processes that shape recovery and resistance in rangeland dynamics.

Here we combine field data on vegetation structure with high-resolution satellite data gathered over the last two decades (a period spanning two severe droughts) to identify the drivers of degradation within Northern Tanzanian rangelands. Our aims were to (1) test whether long-term changes in grazing potential demonstrate loss of resistance, loss of recovery, or both, and (2) identify how spatial variation in land use designation, human and livestock density, and rainfall patterns impact degradation pathways at a fine spatial scale. We hypothesize that drier areas experience higher rates of degradation, and we expect both livestock and human population density to be positively correlated with degradation. We predict that long-term rates of degradation correspond to the degree to which grazing is managed by official land use designation, with areas that have the most grazing restrictions (i.e. national parks) exhibiting the lowest increases in degradation. Finally, we hypothesize that loss of recovery and loss of resistance both contribute to long term degradation patterns.

Methods

To test whether loss of resistance or recovery is the primary mechanism driving degradation in Northern Tanzanian rangelands, we (a) chose key parameters that defined degradation within our study area, (b) quantified variation in degradation across the landscape, and (c) evaluated how degradation changed at specific locations over time. We evaluated bare ground cover and the number of invasive & toxic plants (ITP), measured as the abundance of three key plant species, as candidates for degradation parameters. We used field survey data gathered in 2016 and 2018, across sampling sites stratified throughout the study region, to train a machine learning algorithm. Using this algorithm, we estimated degradation parameters for the years 2000 to 2020, based on Landsat satellite images and rainfall data, taking into account seasonal variation of vegetation productivity. Subsets of survey data were used to ground truth and test model outputs. We then tested whether estimated degradation outcomes correlated with spatial maps of anthropogenic and environmental variation. When considering long-term trends in degradation, we accounted for the effects of temporal and spatial variation in annual rainfall.

Study area

The study area consisted of 30,300 km2 of the Tarangire-Manyara ecosystem and Maasai Steppe of northern Tanzania (Fig. 1). This is a semi-arid ecosystem, dominated by Acacia-Commiphora woodland. Annual rainfall is bimodal (rains in November to January and March to May) with large inter-annual variability37. The 20 years considered in this study covered multiple positive and negative phases of the Indian Ocean Dipole (IOD) and the El Niño-Southern Oscillation (ENSO), which represent large scale climate processes that impact rainfall in East Africa38. Notable droughts in the study region were recorded in 2003-4 and 2016-17, when rainfall was around 50% below average (Foley, unpublished data). The period between October 2019 and January 2020 was the wettest recorded in East Africa in over two decades39. The stratified sampling locations fell into areas of four different land use strategies. These included, in descending order according to the degree of grazing restrictions:

- NP: Two national parks (Tarangire, established 1970, and Lake Manyara, established 1960), where grazing is outlawed, but some illegal grazing persists40.

- WMA: Four wildlife management areas (WMAs) (Enduimet, Randilen, Burunge and Makame), established beginning in 200341. WMAs primarily give communities the rights to manage wildlife on their lands, as well as grazing activities, but all activities are managed by a WMA board.

- CCRO: Areas secured by the Certificate of Customary Right of Occupancy (CCRO) initiative42, a relatively new intervention implemented beginning in 2015, whereby communities retain land ownership and decide on land use practices.

- NONE: Land not covered under any official management/protection scheme.

Choice of degradation parameters

Since this study focused on rangeland habitats, we chose degradation parameters from a grazing potential perspective. We followed the Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services (IPBES) definition of rangeland degradation, as “persistent loss of vegetation productivity cover, especially of those plants which support herbivores.”19. Our proposed parameters consisted of an index of bare ground cover (absence of grazeable vegetation cover) and the number of individual ITP, which replace plants palatable to herbivores. The latter consisted of three key plant species, all native to the area, but behaving like invasive plants: Ipomea hildebrantii, an evergreen woody shrub that can significantly decrease grass biomass production43,44; Solanum campylacanthum, a thorny shrub which has been shown to be highly toxic to livestock45; and Dichrostachys cinerea, a fast growing tree species that accounts for the majority of bush encroachment in African savannas46.

Baseline ground surveys

We used ground survey data to train and validate remote sensing estimates of degradation. To select survey sites across the study area (Fig. 1), we stratified the ecosystem to ensure data collection from a representative selection of land cover, vegetation quality and rainfall levels. This survey stratification also allowed manageable sampling and route planning for accessing sites across the ecosystem. The strata were based on combinations of rainfall, mean annual NDVI (vegetation greenness) for the year 2015, and land cover type (grassland and woodland) (see section ‘Survey site stratification’ for details). We chose April and May as the sampling months since this coincided with the end of the wet season and the consequent peak in vegetation growth, enabling plant species identification47. We randomly selected 250 cells evenly across all strata to sample using a basic vegetation survey, with the centre of each 500 m cell selected as the focal sampling point for the vegetation survey. Neighbouring cells were excluded. Some 43 target cells were unsuitable (e.g. recently cleared crops) or inaccessible, resulting in 208 cells sampled between April and May 2016. To increase the geographic spread of sampling locations, an additional 48 vegetation surveys were carried out across the ecosystem during April 2018, three of which targeted areas containing D. cinerea. This allowed us to train the machine learning algorithm on a wider range of values, improving the performance when applied over the full study region. The total number of survey locations used in the analysis was 256.

Location of the study area and sites for the baseline vegetation survey in April-May 2016 (blue circles) and April 2018 (orange diamonds). Background shading represents terrain elevation, derived from Shuttle Radar Topography Mission data48. Areas not falling into the National Park (NP), Wildlife Management Area (WMA), or Certificate of Customary Right of Occupancy (CCRO) designation were included in the study under the ‘NONE’ category. The map was created using QGIS 3.1449.

At each survey site (Fig. 1), we collected data based on the Monitoring Rangeland Health guide47, which is designed for rapid vegetation and degradation assessments with minimal equipment. Measurements were taken at sampling points every five meters along four 25 m transects extending north, east, south and west, resulting in a 50 x 50 m cross, diagonally covering a plot of 35 x 35 m. At every sampling point, measurements were recorded at 5 notches along a 1 m measuring stick, leading to a total of 100 individual data points (25 for each of the four 25 m transects). The following measurements were quantified: (1) The number of invasive and/or toxic rangeland species (I. hildebrantii, S. campylacanthum and D. cinerea), as a total count of stems at the survey site across all sampling points. (2) The percentage of bare ground, as the percentage of notches falling onto bare ground, across sampling points at the survey site. Measures of plant density and bare ground were not mutually exclusive, e.g. a count of one plant of D. cinerea could coincide with a high percentage of bare ground at a given sampling point, in the absence of ground vegetation.
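
The bare ground measure described above is a simple proportion of notch readings. The following minimal sketch illustrates the arithmetic for one site; the function name and the example readings are hypothetical and are not taken from the survey data.

```python
def bare_ground_percent(notch_hits):
    """Percentage of notches that fell on bare ground at one survey site.

    notch_hits: iterable of booleans, one per notch (True = bare ground).
    """
    hits = list(notch_hits)
    if not hits:
        raise ValueError("no notch readings supplied")
    return 100.0 * sum(hits) / len(hits)


# Hypothetical site: 4 transects x 5 sampling points x 5 notches = 100 readings,
# of which 37 fell on bare ground.
readings = [True] * 37 + [False] * 63
site_percent = bare_ground_percent(readings)
```

With 100 readings per site, each notch contributes exactly one percentage point to the site score.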

Survey site stratification

We used remote sensing data (MODIS), accessed and downloaded using the Google Earth Engine (GEE) cloud computing platform50, to stratify vegetation survey locations. This stratification allowed us to collect samples over a range of different environmental conditions. The MODIS products included: MOD13A151, a 16-day Normalized Difference Vegetation Index (NDVI) composite (a measure of vegetation productivity) at a 500 m resolution, from which we calculated the mean NDVI value per grid cell from all 2015 data; MCD12Q152, a land cover product providing five annual classification layers for global land cover at a 500 m resolution, from which land cover per grid cell was calculated as the most common annual BIOME-Biogeochemical Cycles (BGC) classification between 2001 and 2013; and MOD09A153, an 8-day land surface reflectance product, downloaded for May 2015-April 2016. We further retrieved rainfall data at 0.05° resolution for the years 2000-2020 from CHIRPS version 254, a quasi-global dataset, ranging from 50°S to 50°N, that combines satellite imagery with rainfall station data to create a gridded rainfall time series. We calculated mean rainfall for the period 2000-2020, interpolated to the same resolution as the MODIS data. For each 8-day MODIS reflectance tile, we interpolated single values missing due to cloud cover, in all seven bands, based on the average of the preceding and succeeding tiles. Where there were cells with two or three successive missing values, these were replaced by linear interpolation using the ‘na.approx()’ function in the ‘zoo’ package55 in R 3.2.256. The sample stratification resulted in a design that spanned a wide rainfall gradient, from 360 mm to 1095 mm total annual rainfall.
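
The gap-filling rule for the reflectance time series can be sketched as follows. This is an illustrative Python re-expression, not the R code used in the study: the function name `fill_gaps` and the choice to leave leading, trailing and longer gaps unfilled are assumptions. Averaging the two neighbours of a single missing value is the same as linear interpolation across a gap of length one, so one routine covers both cases.

```python
def fill_gaps(values, max_gap=3):
    """Linearly interpolate interior runs of None no longer than max_gap."""
    filled = list(values)
    n = len(filled)
    i = 0
    while i < n:
        if filled[i] is not None:
            i += 1
            continue
        start = i                      # first missing index in this run
        while i < n and filled[i] is None:
            i += 1
        end = i                        # first valid index after the run (or n)
        run = end - start
        if start == 0 or end == n or run > max_gap:
            continue                   # edge gaps and long gaps stay missing
        left, right = filled[start - 1], filled[end]
        step = (right - left) / (run + 1)
        for k in range(run):
            filled[start + k] = left + step * (k + 1)
    return filled


single = fill_gaps([10.0, None, 20.0])          # one cloudy tile
double = fill_gaps([1.0, None, None, 4.0])      # two successive cloudy tiles
too_long = fill_gaps([1.0, None, None, None, None, 6.0])
```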

Estimating degradation parameters, using machine learning

We obtained remotely sensed satellite data for the machine learning regression from Landsat through Google Earth Engine, at 30 m resolution, which closely matched the 35 m vegetation survey plots. We combined Landsat 5, 7 and 8 products (courtesy of the U.S. Geological Survey) to maximise data coverage for our study period and region. For Landsat 7 products, the scan line correction device failed in May 2003, leading to a 22% loss in values for each scene57. A gap-filling function was applied in GEE to mitigate this. For each Landsat product, we calculated per-pixel cloud scores and only included pixels with less than 10% cloud cover.

Based on the Landsat images, we calculated yearly indices that had the potential to indicate patterns of rangeland degradation at 30 m resolution. All composites were created starting in November the previous year, and ending in October of the given year used for predictions, to capture the seasonality in the region. Vegetation indices are used as a quantitative measure of vegetation productivity; we therefore calculated the enhanced vegetation index (EVI), an index that is optimised for areas with high productivity and variations in soil brightness58. Next, we calculated a bare soil index (BSI) based on a formula introduced by Rikimaru et al., which combines the NDVI and normalised difference built-up index59, and has been used in similar studies60. We calculated the modified soil-adjusted vegetation index (MSAVI), a vegetation index with increased dynamic range over NDVI, and reduced soil background bias61. We used harmonic regression to calculate trend variables (magnitude, phase) for EVI, BSI, and MSAVI. This allowed us to capture the seasonal change of vegetation indices (i.e. variation in plant phenologies), a quantification that can improve the predictive power of classifiers62, and was lacking from the yearly averages used for the remaining indices. Finally, we included the total yearly rainfall at the pixel level, based on CHIRPS data, to account for the potentially strong effect rainfall might have on the chosen degradation parameters. Recognising that the amount of grass present at the end of a rainy season is influenced both by the severity of the preceding dry season and the total rainfall across the previous rainy season, we computed annual rainfall from May in the previous calendar year, to the end of April in the focal year. Supplementary Fig. S1 online provides a conceptual overview of the steps involved in creating the composite layers. Supplementary Table S1 online gives a summary of all predictor variables used to train the model algorithm. 
To minimise unnecessary computations, a mask layer was created that excluded any data for non-savanna habitat. Supplementary Table S2 online gives an overview of data products and parameters used to create the mask.
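
As an illustration of two of the indices and of the harmonic summary, the sketch below uses the standard EVI coefficients and the closed-form MSAVI (often written MSAVI2); whether the study used exactly these forms is an assumption. The harmonic step is simplified to a first-harmonic Fourier projection, which matches a least-squares harmonic fit only for evenly spaced samples covering one full cycle with no trend; real Landsat series are irregular and would need a full regression. All function names are hypothetical.

```python
import math


def evi(nir, red, blue):
    """Enhanced vegetation index with the standard coefficients."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def msavi2(nir, red):
    """Closed-form modified soil-adjusted vegetation index."""
    root = math.sqrt((2.0 * nir + 1.0) ** 2 - 8.0 * (nir - red))
    return (2.0 * nir + 1.0 - root) / 2.0


def harmonic_terms(values):
    """First-harmonic (magnitude, phase) for evenly spaced samples over one cycle."""
    n = len(values)
    mean = sum(values) / n
    cos_coef = 2.0 / n * sum(
        (v - mean) * math.cos(2.0 * math.pi * k / n) for k, v in enumerate(values)
    )
    sin_coef = 2.0 / n * sum(
        (v - mean) * math.sin(2.0 * math.pi * k / n) for k, v in enumerate(values)
    )
    return math.hypot(cos_coef, sin_coef), math.atan2(sin_coef, cos_coef)


# Synthetic monthly index values with a known seasonal amplitude (0.2) and phase (1.0 rad).
monthly = [0.3 + 0.2 * math.cos(2.0 * math.pi * k / 12 - 1.0) for k in range(12)]
magnitude, phase = harmonic_terms(monthly)
```

The magnitude summarises how strongly the index swings within a year, and the phase summarises when the seasonal peak occurs.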

Supervised machine learning regression algorithms were trained and evaluated in GEE. The surface survey data were joined with predictor variables at the respective sampling locations and years (2016 or 2018), and randomly split into a holdout testing partition (25%) and training partition (75%). The training partition was used for repeated random cross-validation, using ten repeats, and 75%-25% splits for training and validation subsets. Model performance was evaluated using the average r-squared value and RMSE with standard deviation for observed vs. predicted results, across all ten repeats. Model parameters were tuned in GEE, and the final, best performing model was evaluated against the testing holdout partition, previously unseen by the model. This workflow provided us with an unbiased approach to evaluate the model’s ability to generalize, and to avoid overfitting63. The predictor variables had very different ranges, and were standardised to improve model performance. The bare ground cover values were skewed towards zero, and an improved model performance was achieved by log-transforming the response: log(x+1). The final model for the classification was trained using all of the ground truth data available. Different classification algorithms were evaluated. Random forest (RF) and support vector regression (SVR) with radial kernel and nu parameter were chosen since they are known to perform well in remote sensing applications64. The relative accuracy of the two differs between studies64,65,66, warranting a direct comparison. While less often used in remote sensing studies, gradient boosting trees (GBT) have outperformed SVR and RF algorithms on some occasions67,68, and were therefore included in the comparison. The resulting predictions were back-transformed from the log scale to the original scale for bare ground scores and scaled using the 2016 mean and standard deviation.
Final prediction maps were visualised in GEE for every year, to check for abnormalities. Figure 2 gives a conceptual overview of all steps involved in predicting degradation scores, based on the annual composite maps.
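
The partitioning and scoring steps of this workflow can be sketched in plain Python. This is not the GEE implementation: the helper names and the seed are hypothetical, and r-squared is computed here as the coefficient of determination, which is an assumption about how the reported value was defined.

```python
import math
import random


def to_log_scale(values):
    """Apply the log(x + 1) transform described for the bare ground response."""
    return [math.log1p(v) for v in values]


def from_log_scale(values):
    """Invert log(x + 1) to return predictions to the original scale."""
    return [math.expm1(v) for v in values]


def rmse(observed, predicted):
    """Root mean squared error between observed and predicted values."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(observed, predicted):
    """Coefficient of determination for observed vs. predicted values."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def random_split(indices, held_out_fraction, rng):
    """Shuffle indices and split them into (kept, held_out) lists."""
    shuffled = list(indices)
    rng.shuffle(shuffled)
    n_held_out = round(len(shuffled) * held_out_fraction)
    return shuffled[n_held_out:], shuffled[:n_held_out]


rng = random.Random(42)  # fixed seed, for reproducibility of the sketch only
train_idx, test_idx = random_split(range(256), 0.25, rng)  # 256 survey sites
cv_folds = [random_split(train_idx, 0.25, rng) for _ in range(10)]  # ten repeats
```

With 256 survey locations, this gives 64 holdout sites and 192 training sites, and each of the ten cross-validation repeats trains on 144 sites and validates on 48.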

Data analysis

Estimates were made at a 30 m resolution to match the 35 m vegetation plots, but aggregated to 200 m resolution for further analyses using a median. This aggregation reduces stochastic noise potentially arising from extreme values across the 200 m pixels (i.e. very high degradation pixels neighbouring very low degradation pixels), at the cost of ignoring fine-scale patterns. We argue, however, that degradation from a grazing perspective is most meaningful if observed at a scale above 30 m resolution and across the larger scales at which grazing decisions are made. To test hypotheses about resistance and recovery, we normalized degradation scores between 0 and 1, and divided the savanna landscape into three even-sized classes identifying the most, least, and medium degraded areas, based on the median degradation scores of the final three years of the time series (2018-2020). If loss of resistance underpins degradation, we anticipated that sites that are most degraded by the end of the time series would show larger increases in bare ground during years of large declines in rangeland condition. If lack of recovery underpins degradation, we anticipated that the first year of recovery following a large decline would see smaller recoveries in the most degraded areas. As recovery could be measured in absolute or relative terms (i.e. number of units recovered, or proportion of decline recovered), we considered both quantifications. We used two-way ANOVAs in R to statistically compare classes. We estimated the marginal means for covariates based on the model using the ‘emmeans’ package70, and conducted Tukey’s post hoc tests for pairwise comparisons. It should be noted that, due to the large sample sizes in these comparisons, statistical significance in the traditional sense becomes almost inevitable71.
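
The class assignment and the resistance and recovery quantities can be written out explicitly. The sketch below is one plausible reading of the definitions in this section, with hypothetical function names and example values: the change during a shock year measures resistance, the change in the following year measures absolute recovery, and relative recovery is the proportion of the shock-year increase in bare ground that is reversed.

```python
from statistics import median


def normalise(scores):
    """Rescale scores linearly so the minimum maps to 0 and the maximum to 1."""
    low, high = min(scores), max(scores)
    return [(s - low) / (high - low) for s in scores]


def tercile_labels(final_scores):
    """Assign each cell to one of three even-sized classes by final score."""
    order = sorted(range(len(final_scores)), key=lambda i: final_scores[i])
    labels = [None] * len(order)
    for rank, cell in enumerate(order):
        labels[cell] = ("least", "medium", "most")[3 * rank // len(order)]
    return labels


def shock_change(before, shock):
    """Change in bare ground during a shock year (larger = less resistant)."""
    return shock - before


def absolute_change(shock, after):
    """Change in bare ground in the recovery year (negative = recovery)."""
    return after - shock


def relative_recovery(before, shock, after):
    """Proportion of the shock-year increase in bare ground that was reversed."""
    return (shock - after) / (shock - before)


# Hypothetical cell: the final score is the median of its last three annual scores.
final_score = median([0.42, 0.55, 0.47])
classes = tercile_labels([0.1, 0.9, 0.5, 0.3, 0.7, 0.2])
half_recovered = relative_recovery(before=0.25, shock=0.5, after=0.375)
net_improvement = relative_recovery(before=0.25, shock=0.5, after=0.125)
```

Under these definitions a relative recovery of 1 means the cell returned to its pre-shock score, 0 means no change, a negative value means bare ground kept increasing, and a value above 1 means the cell ended with less bare ground than before the shock.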

To test hypotheses regarding the mechanisms leading to degradation, we computed the pixel-wise linear rate of degradation parameters at a 200 m resolution across the entire time series (long-term trend), taking into account the annual variation of rainfall when calculating the slope. We fitted a spatially-explicit hierarchical Bayesian regression model using Integrated Nested Laplace Approximation (INLA) with the Stochastic Partial Differential Equation (SPDE) approach in the package ’R-INLA’72,73,74 in R. INLA offers a fast, flexible alternative to Markov-Chain Monte-Carlo methods for fitting complex regression models and allows us to estimate the effects of spatial covariates while accounting for the non-independence of spatial data75. The model correlated the long-term rate of degradation to human population density for 2017 (from the Landscan dataset76), livestock density for the year 2010 (measured in Tropical Livestock Units based on cattle, goats, and sheep, data from the Gridded Livestock of the World database, FAO77), the land use designation (NP, WMA, CCRO, and NONE) as a categorical variable, and total annual rainfall (from the CHIRPS data). Including rainfall as a covariate explicitly accounted for the spatial variation in rainfall, a variable that drives large amounts of variation in grassland productivity78,79, and would likely mask trends if unaccounted for. The statistical power of the regression analysis was related to the number of pixels considered. Supplementary Table S3 online gives an overview of the sample sizes used for the land use designation analysis.
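
One way to obtain a per-pixel trend that takes annual rainfall into account is to regress the score on both year and rainfall and keep the year coefficient. The text does not specify the exact adjustment used, so the sketch below is an assumption: it partials rainfall out of both year and score (the Frisch-Waugh approach), which yields the same year coefficient as an ordinary least-squares fit of score on year and rainfall with an intercept. Function names and example values are hypothetical.

```python
def _residuals(y, x):
    """Residuals of y after a simple linear regression on x (with intercept)."""
    n = len(y)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    return [yi - mean_y - slope * (xi - mean_x) for xi, yi in zip(x, y)]


def rainfall_adjusted_trend(years, scores, rainfall):
    """Slope of score on year after removing the linear effect of rainfall."""
    year_res = _residuals(years, rainfall)
    score_res = _residuals(scores, rainfall)
    numerator = sum(a * b for a, b in zip(year_res, score_res))
    return numerator / sum(a * a for a in year_res)


# Synthetic pixel: bare ground rises by 0.01 per year and falls with rainfall.
years = [2000, 2001, 2002, 2003, 2004, 2005]
rainfall = [600.0, 450.0, 700.0, 520.0, 480.0, 810.0]  # mm, made-up values
scores = [0.5 + 0.01 * (y - 2000) - 0.0005 * r for y, r in zip(years, rainfall)]
trend = rainfall_adjusted_trend(years, scores, rainfall)
```

For the synthetic pixel the function recovers the built-in rate of 0.01 per year, even though the raw scores fluctuate with rainfall from year to year.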

Initial models revealed that the estimated range of the spatial autocorrelation was very small, requiring a very fine mesh resolution to fit a smooth SPDE. We created a simple spatial mesh at the point locations using the ’inla.mesh.create’ function in ’INLA’, extending beyond the study region (using the default settings), to avoid boundary effects of the SPDE. We cross-validated the fit of the model by visually inspecting probability integral transform (PIT) values80.

Results

Model performance

The random forest (RF) model slightly outperformed support vector regression (SVR) for predicting the bare ground scores during cross-validation but performed considerably worse on the holdout partition (Table 1), and SVR was chosen for the final classifier model for the bare ground index. Gradient boosting trees performed best on the holdout partition for predicting the number of invasive & toxic plants (ITP), but considerably worse than SVR and RF during cross-validation. SVR performed better than RF during cross-validation, but performed slightly worse than RF on the holdout partition (Table 1), and RF was chosen for the final classifier model for the number of ITP. See Supplementary Fig. S2 online for validation plots of the final models. When visualizing the prediction maps for the number of ITP, we observed inter-annual variation far greater than plausible for relatively slow-growing woody plants (see Supplementary Fig. S3 online), suggesting that the model did not sufficiently differentiate ITP from the remaining vegetation. We therefore excluded this parameter from further analysis and focused on bare ground cover only.

Spatial patterns

Maps of bare ground index scores showed expected spatial patterns. We found lower bare ground cover in Tarangire and Lake Manyara national parks, as well as in the forests surrounding the peaks in the north of the study area (Fig. 3).

Map of normalized bare ground index scores in the study area, averaged for the years 2018-2020. Areas of high bare ground cover receive higher scores (max index = 1) and are coloured yellow, areas of low bare ground cover have lower scores (min index = -1) and are coloured purple. Gaps in the data (coloured white) are areas removed by the masking layer. The map was created using QGIS 3.1449.

Time series analysis

Annual rainfall in the study area was variable and corresponded to recorded rainfall extremes. During the drought years of 2003-2004, rainfall in the study area remained at a lower level, and rainfall dipped during the drought of 2016-2017 (Fig. 4). The extreme rainfall of 2020 had a strong impact in the study area, with the year exhibiting the highest recorded rainfall during the timeline (Fig. 4). We found clear year-to-year variation in bare ground scores (Fig. 4). Consistent land use designation effects were visible throughout the timeline (Fig. 4A). In most years, national parks showed lower bare ground scores than any other land use designation, with notable exceptions in years of high rainfall (e.g. 2001, 2020). Land that would eventually become CCROs or WMAs often had similarly high bare ground scores to land in the ‘NONE’ category (Fig. 4A). Areas that were classified as having most, least and medium bare ground scores at the end of the timeline maintained the same classification throughout the study period (Fig. 4B). Recovery years were frequently associated with high annual rainfall (e.g. 2001, 2018, 2020) (Fig. 4).

Normalized bare ground index scores over time - areas of high bare ground cover receive higher scores. (A) Split by land use designation (black: No official management/protection scheme (NONE), orange: Certificate of Customary Right of Occupancy (CCRO), blue: Wildlife Management Area (WMA), cyan: National Park (NP)) and (B) Split by bare ground percentile, based on the median degradation scores of the final three years of the time series (2018-2020). Whiskers represent 95% confidence intervals around the median. Outliers are not shown. The dashed gray line in the background indicates annual median rainfall (November to October), based on CHIRPS version 2 (54) data retrieved for the study area.

To assess resistance, we focused on the four years with the largest increase in bare ground scores (2002, 2009, 2011 and 2019). We found that cells in the high bare ground percentile increased in bare ground cover more, on average, than other cells in three of the four years assessed (Fig. 5). Similarly, lowest bare ground percentiles in 2018–2020 also showed the lowest average increase in bare ground in three of the four years (Fig. 5).

Changes in normalized bare ground index scores during the four years of greatest increase in bare ground cover, split by bare ground percentile, based on the median degradation scores of the final three years of the time series (2018–2020). Positive numbers along the y axis signify an increase in bare ground cover, while negative numbers signify a decrease. Whiskers represent 95% confidence intervals around the median. Outliers are not shown. All contrasts were statistically different in the ANOVA tests, unless otherwise indicated. n.s.: not significant.

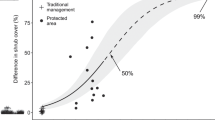
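As an illustration only, the resistance comparison described above could be sketched as follows. This is not the code used for the analysis: the cell names and scores are hypothetical, and the split into three equal-sized classes (lowest, medium, highest) is an assumption made for the example. Each cell is classified by its median score over the final three years, and the change in score during a shock year is then summarised per class.

```python
from statistics import median

FINAL_YEARS = (2018, 2019, 2020)

def final_median(scores_by_year):
    """Median bare ground score of one cell over the final three years."""
    return median(scores_by_year[y] for y in FINAL_YEARS)

def assign_classes(cells):
    """Rank cells by final median score and split them into three
    equal-sized classes (an assumed tercile split)."""
    ranked = sorted(cells, key=lambda c: final_median(cells[c]))
    n = len(ranked)
    classes = {}
    for i, cell in enumerate(ranked):
        if i < n / 3:
            classes[cell] = "lowest"
        elif i < 2 * n / 3:
            classes[cell] = "medium"
        else:
            classes[cell] = "highest"
    return classes

def median_change_by_class(cells, classes, shock_year):
    """Median change in score from the previous year to the shock year.
    Positive values mean an increase in bare ground."""
    changes = {}
    for cell, scores in cells.items():
        delta = scores[shock_year] - scores[shock_year - 1]
        changes.setdefault(classes[cell], []).append(delta)
    return {label: median(values) for label, values in changes.items()}

# Hypothetical scores for three cells (not data from the study).
cells = {
    "a": {2018: 0.20, 2019: 0.25, 2020: 0.22},
    "b": {2018: 0.40, 2019: 0.50, 2020: 0.45},
    "c": {2018: 0.60, 2019: 0.80, 2020: 0.70},
}
classes = assign_classes(cells)
changes = median_change_by_class(cells, classes, shock_year=2019)
```

In this toy example the cell that ends up in the highest class also shows the largest increase during the shock year, which is the pattern interpreted in the text as lower resistance.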

Looking at the recovery year following large declines, we found that cells ending in the highest bare ground percentile exhibited lower absolute and relative recovery than other cells in the driest recovery year (2003) (Fig. 6). In the remaining years, cells in the ultimately highest bare ground percentile showed higher absolute recovery than other cells, and the difference increased with higher annual rainfall (Fig. 6A). In terms of relative recovery, cells in the ultimately highest bare ground percentile showed the same trend of higher recovery in wetter years, and were the only cells with median net improvement in the wettest year (2020) (Fig. 6B).

Recovery in bare ground scores following the four years of largest degradation. Split by bare ground percentile, based on the median degradation scores of the final three years of the time series (2018–2020). (A) Absolute recovery, positive numbers along the y axis signify an increase in bare ground, while negative numbers signify a decrease in bare ground. Blue diamonds indicate annual rainfall (November to October) based on CHIRPS version 2 (54) data. (B) Relative recovery, the proportion of decline that returns in the recovery year. A value of 1 = total recovery, 0 = no recovery, <0 = continued decline, >1 = net improvement. Whiskers represent 95% confidence intervals around the median. Outliers are not shown. All contrasts were statistically different in the ANOVA tests.

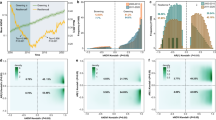
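The relative recovery measure in the caption above can be made concrete with a short sketch. The formula below is one reading of the caption (the decrease in bare ground in the recovery year divided by the increase in the preceding shock year), not a definition taken from the analysis code; the function name and example values are hypothetical.

```python
def relative_recovery(before, shock, after):
    """Proportion of the shock-year decline regained in the recovery year.

    before, shock, after: bare ground scores in the year before the shock,
    the shock year, and the recovery year (higher score = more bare ground).
    """
    decline = shock - before      # increase in bare ground during the shock
    if decline <= 0:
        raise ValueError("no increase in bare ground during the shock year")
    regained = shock - after      # decrease in bare ground during recovery
    return regained / decline

# Hypothetical scores illustrating the four cases named in the caption.
total_recovery = relative_recovery(0.25, 0.5, 0.25)      # equals 1
no_recovery = relative_recovery(0.25, 0.5, 0.5)          # equals 0
continued_decline = relative_recovery(0.25, 0.5, 0.625)  # below 0
net_improvement = relative_recovery(0.25, 0.5, 0.125)    # above 1
```

Under this reading, a cell must repeatedly reach values above 1 to offset larger shock-year declines.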

Formal statistical analysis of the cell-specific change of bare ground scores between 2000 and 2020 showed significant effects of all covariates (Fig. 7, Table 2). We found evidence that mean annual rainfall had the strongest effect on the long-term trend of bare ground scores compared to the other covariates in the model, with drier areas showing steeper increases in bare ground scores than wetter areas (Fig. 7B). We found increases of bare ground scores over the last 20 years in all areas, independent of land use designation (Fig. 7, Table 2). The bare ground cover change in land that had been designated as CCROs by the end of the time period was no different from land in the NONE category, while national parks and WMAs had a lower rate of increase in bare ground than NONE (Fig. 7D). Both human population density and livestock density had small correlations with the change of bare ground scores, such that areas with higher human density, as well as more livestock, experienced higher rates of increase in bare ground cover (Fig. 7A,C).
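To illustrate what a cell-specific long-term change means, the sketch below computes an ordinary least-squares slope of score against year for each cell and summarises the slopes by land use designation. This is a deliberately simplified stand-in, not the statistical model reported in Table 2: it ignores the covariates and any spatial structure, and all cell values are hypothetical.

```python
from statistics import median

def trend_slope(years, scores):
    """Ordinary least-squares slope of score against year (change per year)."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, scores))
    return sxy / sxx

def median_slope_by_group(cells):
    """cells: list of (designation, years, scores) tuples."""
    grouped = {}
    for label, years, scores in cells:
        grouped.setdefault(label, []).append(trend_slope(years, scores))
    return {label: median(slopes) for label, slopes in grouped.items()}

# Hypothetical cells (not data from the study).
years = [2000, 2005, 2010, 2015, 2020]
cells = [
    ("NONE", years, [0.30, 0.36, 0.41, 0.47, 0.52]),
    ("NONE", years, [0.25, 0.30, 0.36, 0.40, 0.46]),
    ("NP", years, [0.20, 0.22, 0.23, 0.26, 0.27]),
    ("NP", years, [0.15, 0.16, 0.18, 0.19, 0.21]),
]
slopes = median_slope_by_group(cells)
```

In this toy data both groups have positive slopes, with a smaller slope for the national park cells, mirroring the direction of the result described above.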

Discussion

We found evidence that, in our study area, degradation seems to result primarily from a loss of resistance to change, not a lack of recovery. Land identified in the highest bare ground percentile by the end of the time period experienced slightly larger declines in condition in most years of widespread annual decline, but maintained recovery potential throughout. Absolute recovery in ultimately more degraded sites (i.e. sites in the highest bare ground percentile during the last three years of the time period) was actually slightly greater in all but the driest recovery year. Our results show that, as one shock rapidly follows another, sites that are ultimately degraded do not have time to fully recover between shocks. Sites with ultimately highest bare ground scores would have had to repeatedly exhibit net improvement to balance out the increased decline, but this degree of recovery only occurred during years of high rainfall. As we explicitly included annual rainfall in our models, we do not consider that large-scale climatic processes on the decadal scale can explain our overall findings. It should be noted that our estimated degradation scores exhibited significant variation, leading to small median effects. Nonetheless, we observed consistent patterns across years, suggesting that these trends go beyond statistical noise.

Although there is much in the ecological literature that defines separate concepts for resistance and recovery25,26,81, our results provide evidence that, in this area, repeated environmental shocks are a driver of bare ground cover. However, defining recovery will require more than measuring improvements in our bare ground index. The conceptualization of degradation as delivered through a repeated process of shock followed by partial recovery is important because it gives hope for eventual restoration of these rangelands. Although it seems unlikely that ecological shocks are to decline in frequency given global perturbations of the climate system82,83, the fact that recovery potential remains suggests that reducing factors that decrease resistance to change could allow rapid recovery. Indeed, the ability to recover quickly from year to year has long been at the core of traditional management of these rangelands: heavy use over a few years could lead to severe local degradation, but nomadic people moved away for a few years allowing natural recovery84,85. Today, such movements are increasingly restricted by fragmentation of rangelands, mainly through increasing agriculture86,87, rendering it necessary for pastoralists to remain in what may otherwise have been only temporarily degraded sites88,89, resulting in declining grassland productivity and increased degradation20,90,91.

Under the current prediction of overall increased rainfall in East Africa83,92, the increased recovery potential with higher rainfall could give hope for the eventual restoration of these rangelands. However, rainfall predictions for the region are complex. Historically, long season rainfall in East Africa has declined93,94. Future climate change is predicted to lead to more intense rainfall on individual days during the long rain season83,95,96 and increasing rainfall during the short rain season96,97, but also an increase in dry days98 and frequency and duration of droughts34,99. Overall, studies predict an increased frequency of high rainfall events associated with storms over Africa, linked to climate change100,101. It is currently unclear how these changing patterns will affect rangeland recovery potential. Due to the temporal resolution of our study, considering total annual rainfall only, we are lacking this insight into the finer scale rainfall patterns driving bare ground cover change. The clear association of rangeland recovery with total rainfall during our study period suggests that temporal variation in rainfall does not yet override the spatial effect of total annual rainfall, but the relationship with rainfall patterns should be explored to evaluate the future risk to rangeland recovery posed by climate change in East Africa. Furthermore, the spatial pattern of rainfall in relation to recovery should be considered. Rainfall in savanna grasslands is characterized by a high spatial patchiness102. Our study related recovery to rainfall averaged over the whole study area, disregarding local trends, and thus the finer scale responses to rain. Our results are applicable at the wider scale, but more research is needed to confirm the relationship between rainfall and recovery at the regional scale of management areas.

The general association of rangeland recovery with rainfall highlights a potential caveat of studies including bare ground as a parameter: while bare ground is the most visible expression of resistance and recovery on a wider landscape scale (enabling coarse satellite analyses), it is also potentially misleading, because of its high sensitivity to rainfall. Even rangeland well on its path to degradation (resulting from loss of resistance and/or recovery potential) may show a flush of growth following high rainfall events, leading to short-term variability of bare ground estimates. It is therefore crucial to account for rainfall, as was done here: i) use rainfall to inform estimates of bare ground cover, ii) consider the long-term trends of bare ground, after factoring out temporal variation in rainfall, and iii) account for spatial variation in rainfall when analysing spatial patterns of bare ground cover. But even where rainfall is accounted for, the use of bare ground alone may present problems. In the context of recovery, for example, we registered a rangeland as ‘recovered’ if vegetation cover had sufficiently increased, even though this might be through fast-growing invasive or toxic species, rather than palatable grasses. For this bias to lead to false conclusions, however, such invasive and toxic plants would have to be more dominant than grazeable vegetation, during times of regrowth, across the hundreds of pixels considered for this study. Surveys suggest that the majority of these invasives are still low in numbers in the region103, although this might become a greater risk in the future.
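Point ii) above, factoring out temporal variation in rainfall before reading a long-term trend, can be illustrated with a small regression sketch. The example fits score as a linear function of year and annual rainfall by ordinary least squares; it is an assumption-laden illustration rather than the approach implemented in the study, and the rainfall and score values are synthetic.

```python
def naive_trend(years, scores):
    """Slope of score against year, ignoring rainfall."""
    n = len(years)
    mx, my = sum(years) / n, sum(scores) / n
    sxx = sum((x - mx) ** 2 for x in years)
    return sum((x - mx) * (y - my) for x, y in zip(years, scores)) / sxx

def adjusted_trend(years, rainfall, scores):
    """Fit score ~ year + rainfall by least squares (centred normal equations).

    Returns (slope per year, slope per mm of rainfall)."""
    n = len(years)
    my, mr, ms = sum(years) / n, sum(rainfall) / n, sum(scores) / n
    t = [y - my for y in years]
    r = [x - mr for x in rainfall]
    s = [v - ms for v in scores]
    stt = sum(a * a for a in t)
    srr = sum(a * a for a in r)
    s_tr = sum(a * b for a, b in zip(t, r))
    sts = sum(a * b for a, b in zip(t, s))
    srs = sum(a * b for a, b in zip(r, s))
    det = stt * srr - s_tr ** 2
    if det == 0:
        raise ValueError("year and rainfall are perfectly collinear")
    b_year = (sts * srr - srs * s_tr) / det
    b_rain = (srs * stt - sts * s_tr) / det
    return b_year, b_rain

# Synthetic series: bare ground rises by 0.01 per year, and each extra mm of
# rain lowers the score by 0.0002. Rainfall happens to increase over time.
years = list(range(2000, 2006))
rainfall = [500, 700, 550, 800, 600, 900]
scores = [0.4 + 0.01 * (y - 2000) - 0.0002 * (r - 600)
          for y, r in zip(years, rainfall)]

raw = naive_trend(years, scores)
b_year, b_rain = adjusted_trend(years, rainfall, scores)
```

In this synthetic series the unadjusted slope is slightly negative because increasingly wet years mask the underlying increase in bare ground, while the rainfall-adjusted slope recovers the built-in trend of 0.01 per year.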

We succeeded in generating classified maps of bare ground scores from Landsat images since 2000. Formal validation confirmed pixel-level correlations between observed and predicted test regions for both parameters of interest, bare ground and ITP, but further evaluation based on expected year-to-year variation only validated predictions of bare ground. Using satellite imagery to identify specific vegetation types, particularly at a taxonomic level, has long been a challenge in the field of remote sensing, requiring data at higher resolution than used in this study65,66. With the establishment of the Sentinel-2 program, providing imagery with high spatial (10 m) and temporal (5-day) resolution104, researchers have increasingly overcome this challenge, particularly through the use of time series analyses, utilizing images at peak vegetation intensity66,105. However, data are not available before 2015, making these data unsuitable for the analysis of longer term trends, as are expected in savanna habitats. The final generated landscape level patterns of bare ground cover in our study were consistent with known land use patterns. Our bare ground scores showed considerable variation year to year, which is consistent with known patterns of inter-annual variation in grass productivity in semi-arid savannas106,107. We found evidence for increases in bare ground scores across the study area, particularly in the driest areas, exactly as reported elsewhere108,109,110, and in line with the large scale increases in bare ground observed in East African grasslands over the last two decades24.

We found the expected positive relationship between human density and degradation22,108. Furthermore, we found that increases in bare ground cover were positively correlated with livestock density. While this result corroborates the negative relationship between high grazing intensity and grass biomass observed in African savannas20, the relatively low spatial resolution of the FAO product used to estimate livestock density77 lends only limited interpretability to this finding. Under the current predictions of continued growth in human population and demand for livestock products, however, this potential pressure on rangelands is unlikely to decrease. The signs of lower increases of bare ground cover in WMAs and national parks point to their effectiveness in mitigating large scale declines. Finally, we found no evidence for reduced bare ground increases in land that became CCROs. CCROs are a relatively new tool being promoted to enable the effective management of rangelands by local communities111,112,113, so it may seem surprising that these areas do not show improvements during the final years, especially as our analysis accounts for differences in rainfall and human population density that may differ between sites. In practice, however, CCROs are not in themselves a solution to the problem of degradation: although they establish areas dedicated for grazing, they do not yet provide sufficient management guidance around that grazing111. Consequently, once established they may generate grazing honeypots that increase degradation locally, rather than resolve the problems associated with poor land use. However, several CCROs are now engaged in sustainable grazing and management schemes, which might lead to a future reduction of degradation in these areas. Our results show that the ability of these sites to recover if effectively managed is undiminished, which speaks to the potential effectiveness of sustainable management schemes in CCROs. 
Establishing responsible community management may well be the first step that is needed if degradation is to be reduced. Continued monitoring of rangeland conditions in these areas is needed to establish the effectiveness of these new management strategies. It should be added that the list of CCROs included for the study area is not exhaustive: due to the time-consuming process involved in establishing these areas, not all boundaries were available at the time of analysis.

Future research should include measurements of finer-scale qualities of rangeland health, to overcome some of the caveats highlighted in this study. Such measurements could include species composition of the vegetation cover, plant traits related to palatability, individual resistance and recovery capacity of plants, or soil properties (e.g. composition, compaction). The latter could provide important insights into the mechanisms of grassland degradation and recovery at a finer spatial scale, given its influence on water retention114, soil erosion115,116, and soil microbial activity117. Additional management techniques could be considered, such as the frequency of fires, an important historic and contemporary management strategy in the region118. These variables may reveal a more complete picture of the pathways to degradation, and enable more effective rangeland management strategies. If the qualities underlying rangelands resistant to degradation are identified, they could be targeted in order to further promote rangeland resistance in the face of greater shocks and stress.

Data and code availability

The code used in Google Earth Engine can be accessed online using the following link: https://anonymous.4open.science/r/GEE_code_Wiethase_et_al-2246. R code for the data analysis can be accessed online using the following link: https://github.com/jwiethase/Wiethase-Scientific-Reports-2022.

References

Asner, G. P., Elmore, A. J., Olander, L. P., Martin, R. E. & Harris, A. T. Grazing systems, ecosystem responses, and global change. Annu. Rev. Environ. Resour. 29, 261–299 (2004).

Millennium Ecosystem Assessment Board. Ecosystems and Human Well-Being: Wetlands and Water: Synthesis (Island Press, Washington, DC, 2005).

Lind, J., Sabates-Wheeler, R., Caravani, M., Kuol, L. B. D. & Nightingale, D. M. Newly evolving pastoral and post-pastoral rangelands of Eastern Africa. Pastoralism 10, 24 (2020).

Hoffman, T. & Vogel, C. Climate change impacts on African rangelands. Rangelands 30, 12–17 (2008).

Joyce, L. A. et al. Climate change and North American rangelands: Assessment of mitigation and adaptation strategies. Rangeland Ecol. Manage. 66, 512–528 (2013).

Stringer, L. C., Reed, M. S., Dougill, A. J., Seely, M. K. & Rokitzki, M. Implementing the UNCCD: Participatory challenges. Nat. Resour. Forum 31, 198–211 (2007).

Vågen, T.-G., Winowiecki, L. A., Tondoh, J. E., Desta, L. T. & Gumbricht, T. Mapping of soil properties and land degradation risk in Africa using MODIS reflectance. Geoderma 263, 216–225 (2016).

Stevens, N., Lehmann, C. E. R., Murphy, B. P. & Durigan, G. Savanna woody encroachment is widespread across three continents. Glob. Chang. Biol. 23, 235–244 (2017).

Muñoz, P. et al. Land degradation, poverty and inequality (2019).

Bond, W. & Keeley, J. Fire as a global ‘herbivore’: the ecology and evolution of flammable ecosystems. Trends Ecol. Evol. 20, 387–394 (2005).

Lehmann, C. E. R., Archibald, S. A., Hoffmann, W. A. & Bond, W. J. Deciphering the distribution of the savanna biome. New Phytol. 191, 197–209 (2011).

Staver, A. C., Archibald, S. & Levin, S. A. The global extent and determinants of savanna and forest as alternative biome states. Science 334, 230–232 (2011).

Fuhlendorf, S. D., Fynn, R. W. S., McGranahan, D. A. & Twidwell, D. Heterogeneity as the basis for rangeland management in Rangeland Systems: Processes, Management and Challenges, Springer Series on Environmental Management (ed. Briske, D. D.), 169–196 (Springer International Publishing, 2017).

Liao, C., Agrawal, A., Clark, P. E., Levin, S. A. & Rubenstein, D. I. Landscape sustainability science in the drylands: mobility, rangelands and livelihoods. Landsc. Ecol. 35, 2433–2447 (2020).

Galvin, K. A. Transitions: pastoralists living with change. Annu. Rev. Anthropol. 38, 185–198 (2009).

López-i Gelats, F., Fraser, E. D. G., Morton, J. F. & Rivera-Ferre, M. G. What drives the vulnerability of pastoralists to global environmental change? A qualitative meta-analysis. Glob. Environ. Change 39, 258–274 (2016).

Obiri, J. F. Invasive plant species and their disaster-effects in dry tropical forests and rangelands of Kenya and Tanzania. Jàmbá: Journal of Disaster Risk Studies 3, 417–428 (2011).

Kioko, J., Kiringe, J. W. & Seno, S. O. Impacts of livestock grazing on a savanna grassland in Kenya. J. Arid Land 4, 29–35 (2012).

Kotiaho, J. S. et al. The IPBES assessment report on land degradation and restoration. Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem (2018).

Western, D., Mose, V. N., Worden, J. & Maitumo, D. Predicting extreme droughts in savannah Africa: A comparison of proxy and direct measures in detecting biomass fluctuations, trends and their causes. PLoS One 10, e0136516 (2015).

Dai, A. Drought under global warming: a review. WIREs Climate Change 2, 45–65 (2011).

Holechek, J. L., Cibils, A. F., Bengaly, K. & Kinyamario, J. I. Human population growth, African pastoralism, and rangelands: A perspective. Rangeland Ecol. Manage. 70, 273–280 (2017).

Midgley, G. F. & Bond, W. J. Future of African terrestrial biodiversity and ecosystems under anthropogenic climate change. Nat. Clim. Chang. 5, 823–829 (2015).

Hill, M. J. & Guerschman, J. P. The MODIS global vegetation fractional cover product 2001–2018: Characteristics of vegetation fractional cover in grasslands and savanna woodlands. Remote Sensing 12, 406 (2020).

Lake, P. S. Resistance, resilience and restoration. Ecol. Manage. Restor. 14, 20–24 (2013).

Hodgson, D., McDonald, J. L. & Hosken, D. J. What do you mean, ‘resilient’?. Trends Ecol. Evol. 30, 503–506 (2015).

Tilman, D. & Downing, J. A. Biodiversity and stability in grasslands. Nature 367, 363–365 (1994).

Fedrigo, J. K. et al. Temporary grazing exclusion promotes rapid recovery of species richness and productivity in a long-term overgrazed Campos grassland. Restor. Ecol. 26, 677–685 (2018).

Ruppert, J. C. et al. Quantifying drylands’ drought resistance and recovery: the importance of drought intensity, dominant life history and grazing regime. Glob. Chang. Biol. 21, 1258–1270 (2015).

Homewood, K. M. Policy, environment and development in African rangelands. Environ. Sci. Policy 7, 125–143 (2004).

Caro, T. & Davenport, T. R. B. Wildlife and wildlife management in Tanzania. Conserv. Biol. 30, 716–723 (2016).

Bollig, M. & Schulte, A. Environmental change and pastoral perceptions: degradation and indigenous knowledge in two African pastoral communities. Hum. Ecol. 27, 493–514 (1999).

Veldhuis, M. P. et al. Cross-boundary human impacts compromise the Serengeti-Mara ecosystem. Science 363, 1424–1428 (2019).

Nicholson, S. E. Climate and climatic variability of rainfall over Eastern Africa. Rev. Geophys. 55, 590–635 (2017).

2012 Population and Housing Census (National Bureau of Statistics, Ministry of Finance, 2013).

Kiffner, C., Nagar, S., Kollmar, C. & Kioko, J. Wildlife species richness and densities in wildlife corridors of Northern Tanzania. J. Nat. Conserv. 31, 29–37 (2016).

Foley, C. A. H. & Faust, L. J. Rapid population growth in an elephant Loxodonta africana population recovering from poaching in Tarangire National Park, Tanzania. Oryx 44, 205–212 (2010).

Kebacho, L. L. Large-scale circulations associated with recent interannual variability of the short rains over East Africa. Meteorol. Atmos. Phys. 134, 10 (2021).

Wainwright, C. M., Finney, D. L., Kilavi, M., Black, E. & Marsham, J. H. Extreme rainfall in East Africa, October 2019-January 2020 and context under future climate change. Weather 76, 26–31 (2021).

Abukari, H. & Mwalyosi, R. B. Comparing pressures on national parks in Ghana and Tanzania: The case of mole and Tarangire National Parks. Global Ecol. Conserv. 15, e00405 (2018).

Kaswamila, A. An analysis of the contribution of community wildlife management areas on livelihood in Tanzania. Sustain. Natl. Res. Manag. 139–54 (2012).

NTRI. Maps | NTRI - Northern Tanzania Rangelands Initiative. https://www.ntri.co.tz/maps/ (2016). Accessed: 2021-3-29.

Mworia, J., Kinyamario, J. & John, E. Impact of the invader Ipomoea hildebrandtii on grass biomass, nitrogen mineralisation and determinants of its seedling establishment in Kajiado, Kenya. Afr. J. Range Forage Sci. 25, 11–16 (2008).

Manyanza, N. M. & Ojija, F. Invasion, impact and control techniques for invasive Ipomoea hildebrandtii on Maasai steppe rangelands. NATO Adv. Sci. Inst. Ser. E Appl. Sci. 17, 12 (2021).

Thaiyah, A. G. et al. Acute, sub-chronic and chronic toxicity of Solanum incanum L in sheep in Kenya. Kenya Veterinarian 35, 1–8 (2011).

Roques, K. G., O’Connor, T. G. & Watkinson, A. R. Dynamics of shrub encroachment in an African savanna: relative influences of fire, herbivory, rainfall and density dependence. J. Appl. Ecol. 38, 268–280 (2001).

Riginos, C. & Herrick, J. E. Monitoring rangeland health: a guide for pastoralists and other land managers in Eastern Africa. Version II (2010).

Farr, T. G. et al. The shuttle radar topography mission. Rev. Geophys. 45, RG2004 (2007).

QGIS Development Team. QGIS Geographic Information System. QGIS Association (2022).

Gorelick, N. et al. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 202, 18–27 (2017).

Didan, K. MOD13Q1 MODIS/Terra Vegetation Indices 16-Day L3 Global 250m SIN Grid V006 [Data set] (NASA EOSDIS Land Processes DAAC, 2015).

Friedl, M. & Sulla-Menashe, D. MCD12Q1 MODIS/Terra+ Aqua Land Cover Type Yearly L3 Global 500m SIN Grid V006 (NASA EOSDIS Land Processes DAAC, 2019).

Vermote, E. MOD09A1 MODIS/Terra Surface Reflectance 8-day L3 Global 500m SIN Grid V006. NASA EOSDIS Land Processes DAAC 10 (2015).

Funk, C. et al. The climate hazards infrared precipitation with stations–a new environmental record for monitoring extremes. Scientific Data 2, 1–21 (2015).

Zeileis, A. & Grothendieck, G. zoo: S3 infrastructure for regular and irregular time series. arXiv:math/0505527 (2005).

R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. (2016).

Scaramuzza, P. & Barsi, J. Landsat 7 scan line corrector-off gap-filled product development in Proceeding of Pecora 16, 23–27 (2005).

Huete, A. et al. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 83, 195–213 (2002).

Rikimaru, A., Roy, P. S. & Miyatake, S. Tropical forest cover density mapping. Trop. Ecol. 39–47 (2002).

Diek, S., Fornallaz, F., Schaepman, M. E. & De Jong, R. Barest pixel composite for agricultural areas using landsat time series. Remote Sensing 9, 1245 (2017).

Qi, J., Chehbouni, A., Huete, A. R., Kerr, Y. H. & Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 48, 119–126 (1994).

Adams, B. et al. Mapping forest composition with Landsat time series: An evaluation of seasonal composites and harmonic regression. Remote Sensing 12, 610 (2020).

Nwanganga, F. & Chapple, M. Practical machine learning in R (John Wiley and Sons, Indianapolis, 2020).

Adam, E., Mutanga, O., Odindi, J. & Abdel-Rahman, E. M. Land-use/cover classification in a heterogeneous coastal landscape using RapidEye imagery: evaluating the performance of random forest and support vector machines classifiers. Int. J. Remote Sens. 35, 3440–3458 (2014).

Mansour, K., Mutanga, O., Adam, E. & Abdel-Rahman, E. M. Multispectral remote sensing for mapping grassland degradation using the key indicators of grass species and edaphic factors. Geocarto Int. 31, 477–491 (2016).

Hunter, F. D. L., Mitchard, E. T. A., Tyrrell, P. & Russell, S. Inter-Seasonal time series imagery enhances classification accuracy of grazing resource and land degradation maps in a savanna ecosystem. Remote Sensing 12, 198 (2020).

Yang, L. et al. Estimating surface downward shortwave radiation over china based on the gradient boosting decision tree method. Remote Sensing 10, 185 (2018).

Pham, T. D. et al. Estimating mangrove Above-Ground biomass using extreme gradient boosting decision trees algorithm with fused Sentinel-2 and ALOS-2 PALSAR-2 data in Can Gio biosphere reserve, Vietnam. Remote Sensing 12, 777 (2020).

Adobe Inc. Adobe illustrator.

Lenth, R. V. emmeans: Estimated marginal means, aka Least-Squares means. R package version 1.5.4 (2021).

Royall, R. M. The effect of sample size on the meaning of significance tests. Am. Stat. 40, 313–315 (1986).

Rue, H., Martino, S. & Chopin, N. Approximate bayesian inference for latent Gaussian models by using integrated nested Laplace approximations. J. R. Stat. Soc. Series B Stat. Methodol. 71, 319–392 (2009).

Lindgren, F. & Rue, H. Bayesian spatial modelling with R-INLA. J. Stat. Softw. 63, 1–25 (2015).

Bakka, H. et al. Spatial modelling with R-INLA: A review. arXiv:1802.06350 [stat] (2018).

Lobora, A. L. et al. Modelling habitat conversion in Miombo woodlands: Insights from Tanzania. J. Land Use Sci. 1747423X.2017.1331271 (2017).

Bright, E. A., Rose, A. N., Urban, M. L. & McKee, J. LandScan 2017 High-Resolution global population data set. Tech. Rep., Oak Ridge National Lab.(ORNL), Oak Ridge, TN (United States) (2018).

Gilbert, M. et al. Global distribution data for cattle, buffaloes, horses, sheep, goats, pigs, chickens and ducks in 2010. Sci Data 5, 180227 (2018).

Yang, Y., Fang, J., Ma, W. & Wang, W. Relationship between variability in aboveground net primary production and precipitation in global grasslands. Geophys. Res. Lett. 35 (2008).

Guo, Q. et al. Spatial variations in aboveground net primary productivity along a climate gradient in Eurasian temperate grassland: effects of mean annual precipitation and its seasonal distribution. Glob. Chang. Biol. 18, 3624–3631 (2012).

Wang, X., Yue, Y. & Faraway, J. J. Bayesian Regression Modeling with INLA (Chapman and Hall/CRC, 2018).

Côté, I. M. & Darling, E. S. Rethinking ecosystem resilience in the face of climate change. PLoS Biol. 8, e1000438 (2010).

O’Loughlin, J. et al. Climate variability and conflict risk in East Africa, 1990–2009. Proc. Natl. Acad. Sci. 109, 18344–18349 (2012).

Ongoma, V., Chen, H., Gao, C., Nyongesa, A. M. & Polong, F. Future changes in climate extremes over Equatorial East Africa based on CMIP5 multimodel ensemble. Nat. Hazards 90, 901–920 (2018).

Homewood, K. & Rodgers, W. A. Pastoralism, conservation and the overgrazing controversy. Conservation in Africa: People, policies and practice 111–128 (1987).

Scoones, I. Exploiting heterogeneity: habitat use by cattle in dryland Zimbabwe. J. Arid Environ. 29, 221–237 (1995).

Goldman, M. J. & Riosmena, F. Adaptive capacity in Tanzanian Maasailand: Changing strategies to cope with drought in fragmented landscapes. Glob. Environ. Change 23, 588–597 (2013).

Selemani, I. S. & Others. Communal rangelands management and challenges underpinning pastoral mobility in Tanzania: a review. Livestock Res. Rural Dev. 26, 1–12 (2014).

Middleton, N. Rangeland management and climate hazards in drylands: dust storms, desertification and the overgrazing debate. Nat. Hazards 92, 57–70 (2018).

Sallu, S. M., Twyman, C. & Stringer, L. C. Resilient or vulnerable livelihoods? Assessing livelihood dynamics and trajectories in rural Botswana. Ecology and Society 15 (2010).

Oba, G. & Lusigi, W. J. An overview of drought strategies and land use in African pastoral systems (Agricultural Administration Unit, Overseas Development Institute, 1987).

Russell, S., Tyrrell, P. & Western, D. Seasonal interactions of pastoralists and wildlife in relation to pasture in an African savanna ecosystem. J. Arid Environ. 154, 70–81 (2018).

Girvetz, E. et al. Future climate projections in Africa: Where are we headed? In The Climate-Smart Agriculture Papers: Investigating the Business of a Productive, Resilient and Low Emission Future 15–27 (Springer International Publishing, 2019).

Lyon, B. & DeWitt, D. G. A recent and abrupt decline in the East African long rains. Geophys. Res. Lett. 39 (2012).

Liebmann, B. et al. Climatology and interannual variability of boreal spring wet season precipitation in the Eastern Horn of Africa and implications for its recent decline. J. Clim. 30, 3867–3886 (2017).

Shongwe, M. E., van Oldenborgh, G. J., van den Hurk, B. & van Aalst, M. Projected changes in mean and extreme precipitation in Africa under global warming. part II: East Africa. J. Clim. 24, 3718–3733 (2011).

Dunning, C. M., Black, E. & Allan, R. P. Later wet seasons with more intense rainfall over Africa under future climate change. J. Clim. 31, 9719–9738 (2018).

Rowell, D. P., Booth, B. B. B., Nicholson, S. E. & Good, P. Reconciling past and future rainfall trends over East Africa. J. Clim. 28, 9768–9788 (2015).

Vizy, E. K. & Cook, K. H. Mid-Twenty-First-Century changes in extreme events over Northern and Tropical Africa. J. Clim. 25, 5748–5767 (2012).

Gebremeskel Haile, G. et al. Droughts in East Africa: Causes, impacts and resilience. Earth-Sci. Rev. 193, 146–161 (2019).

Kendon, E. J. et al. Enhanced future changes in wet and dry extremes over Africa at convection-permitting scale. Nat. Commun. 10, 1794 (2019).

Finney, D. L. et al. Effects of explicit convection on future projections of mesoscale circulations, rainfall, and rainfall extremes over Eastern Africa. J. Clim. 33, 2701–2718 (2020).

Prins, H. H. T. & Loth, P. E. Rainfall patterns as background to plant phenology in Northern Tanzania. J. Biogeogr. 15, 451–463 (1988).

Ngondya, I. B., Treydte, A. C., Ndakidemi, P. A. & Munishi, L. K. Invasive plants: ecological effects, status, management challenges in Tanzania and the way forward. J. Biodivers. Environ. Sci. (JBES) 10, 204–217 (2017).

Drusch, M. et al. Sentinel-2: ESA’s optical High-Resolution mission for GMES operational services. Remote Sens. Environ. 120, 25–36 (2012).

Rapinel, S. et al. Evaluation of Sentinel-2 time-series for mapping floodplain grassland plant communities. Remote Sens. Environ. 223, 115–129 (2019).

Li, W. et al. Accelerating savanna degradation threatens the Maasai Mara socio-ecological system. Glob. Environ. Change 60, 102030 (2020).

Wonkka, C. L., Twidwell, D., Franz, T. E., Taylor, C. A. & Rogers, W. E. Persistence of a severe drought increases desertification but not woody dieback in semiarid savanna. Rangeland Ecol. Manage. 69, 491–498 (2016).

Vierich, H. I. D. & Stoop, W. A. Changes in West African savanna agriculture in response to growing population and continuing low rainfall. Agric. Ecosyst. Environ. 31, 115–132 (1990).

Fynn, R. W. S. & O’Connor, T. G. Effect of stocking rate and rainfall on rangeland dynamics and cattle performance in a semi-arid savanna, South Africa. J. Appl. Ecol. 37, 491–507 (2000).

Wang, S., Chen, W., Xie, S. M., Azzari, G. & Lobell, D. B. Weakly supervised deep learning for segmentation of remote sensing imagery. Remote Sensing 12, 207 (2020).

Alananga, S., Makupa, E. R., Moyo, K. J., Matotola, U. C. & Mrema, E. F. Land administration practices in Tanzania: A replica of past mistakes. Journal of Property, Planning and Environmental Law (2019).

Huggins, C. Village land use planning and commercialization of land in Tanzania. LANDac Research Brief 1 (2016).

Stein, H., Maganga, F. P., Odgaard, R., Askew, K. & Cunningham, S. The formal divide: customary rights and the allocation of credit to agriculture in Tanzania. J. Dev. Stud. 52, 1306–1319 (2016).

Hall, D. G. M., Reeve, M. J., Thomasson, A. J. & Wright, V. F. Water retention, porosity and density of field soils. Technical Monograph No. 9 (1977).

Moore, D. C. & Singer, M. J. Crust formation effects on soil erosion processes. Soil Sci. Soc. Am. J. 54, 1117–1123 (1990).

Cotler, H. & Ortega-Larrocea, M. P. Effects of land use on soil erosion in a tropical dry forest ecosystem, Chamela watershed, Mexico. Catena 65, 107–117 (2006).

Bach, E. M., Baer, S. G., Meyer, C. K. & Six, J. Soil texture affects soil microbial and structural recovery during grassland restoration. Soil Biol. Biochem. 42, 2182–2191 (2010).

Butz, R. J. Traditional fire management: historical fire regimes and land use change in pastoral East Africa. Int. J. Wildland Fire 18, 442–450 (2009).

Acknowledgements

The research was part of the Northern Tanzanian Rangelands Initiative, funded by USAID through their Endangered Ecosystem Northern Tanzania Initiative (AID-621-A-15-00004) and implemented by The Nature Conservancy. This study received research approval from the Tanzanian Commission for Science and Technology (permit numbers 2016-80-NA-92-43, 2016-81-NA-92-43, 2016-97-NA-92-43, 2017-143-ER-92-43 and 2017-147-ER-92-43). Permission for research in communities was obtained from relevant local and district authorities. We are extremely grateful to the Tanzania National Parks Authority (TANAPA) and Tanzania Wildlife Management Authority (TAWA) for their support and for allowing access to rangelands throughout the study area. We would also like to acknowledge the field and support team: Dr. Alex Lobora and Jumanne Ramadhani (Tanzania Wildlife Research Institute), John Mkindi and Mustafa Hassanali.

Author information

Contributions

R.C., C.M.B., C.F., L.F., E.J.K. and B.B. conceived and designed the data collection; R.C., B.O., Z.M. and P.B.K. participated in collecting the data; J.H.W., R.C. and C.M.B. analysed the data and interpreted the results; J.H.W., R.C. and C.M.B. drafted the manuscript; J.H.W., R.C., C.M.B., E.J.K., B.B., K.R., S.E.H., C.F. and L.F. substantially revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wiethase, J.H., Critchlow, R., Foley, C. et al. Pathways of degradation in rangelands in Northern Tanzania show their loss of resistance, but potential for recovery. Sci Rep 13, 2417 (2023). https://doi.org/10.1038/s41598-023-29358-6

DOI: https://doi.org/10.1038/s41598-023-29358-6
