Abstract

We show a simple yet effective method that can be used to characterize the per pixel quantum efficiency and temporal resolution of a single photon event camera for quantum imaging applications. Utilizing photon pairs generated through spontaneous parametric down-conversion, the detection efficiency of each pixel, and the temporal resolution of the system, are extracted through coincidence measurements. We use this method to evaluate the TPX3CAM, with appended image intensifier, and measure an average efficiency of \(7.4\pm 2\)% and a temporal resolution of 7.3 ns. Furthermore, this technique reveals important error mechanisms that can occur in post-processing. We expect that this technique, and elements therein, will be useful to characterise other quantum imaging systems.

Introduction

Photonics is emerging as an important platform for future quantum technologies. Single photons can transmit quantum information at the speed of light, making them the natural choice for quantum communication1,2, and quantum-enhanced imaging or detection3. All such experiments begin with a source of non-classical light, proceed with the manipulation and transmission of the light, and conclude with its detection. To fully understand experimental results and avoid errors, it is vitally important that each link in this chain is appropriately characterized. In this paper, we focus on the characterization of a single-photon sensitive time-tagging camera for use in quantum imaging experiments. Because an anticipated use-case for the camera is in quantum imaging, it is logical that a non-classical light source is used to evaluate its performance. In this way, we directly evaluate the camera in the framework in which it will be used.

The camera under characterization is the TPX3CAM from Amsterdam Scientific Instruments, a \(256\times 256\) silicon pixel array in which the arrival time and position of each event on the detector are tagged with nanosecond (1.6 ns FWHM) and micrometer (55 \(\upmu\)m pixel pitch) resolution, respectively. This camera is not, by itself, single-photon sensitive; instead a fast image intensifier is appended to the camera to improve the sensitivity. The combination of a TPX3CAM and image intensifier results in a device which can tag the arrival time and position of every incident photon with nanosecond-scale accuracy, on over 65,000 pixels. This combination of temporal and spatial resolution is currently unprecedented in single-photon-sensitive cameras, leading to a recent surge in quantum optics experiments with this detector4,5,6,7,8,9,10,11. However, the use of an image intensifier leads to complications which must be carefully considered. When one photon strikes the intensifier it is amplified into a burst of O\((10^5)\) photons, which will illuminate a small cluster of pixels. Furthermore, due to thresholding effects, the registered arrival time will have a small variance depending on the number of photons hitting a pixel, causing each pixel in the cluster to register a slightly different arrival time. Careful processing is thus required to assign a single position and time for every such cluster. Therefore, unlike conventional single-photon cameras such as avalanche photodiode (APD) arrays, the process of detecting a single photon involves both hardware and software. Consequently, full characterization of the camera system must also involve both hardware and software post-processing, as is discussed in detail in this manuscript.

We characterize the camera using pairs of photons generated by spontaneous parametric down-conversion (SPDC). The idea of using two-photon emission to calibrate the efficiency of photodetectors was first conceived over 40 years ago12,13 and has developed into a dependable method for characterising single photon detectors14. The concept is as follows: the two output modes of an SPDC source are split onto two detectors, A and B, and the numbers of detection events \(N_a\) and \(N_b\) are measured. The number of coincident detection events \(N_{a,b}\), when both detectors fire simultaneously, is also recorded. Because SPDC photons are always generated in pairs, every detection should be coincident so, for perfect detectors, \(N_{a,b} = N_a = N_b\). Deviations from this indicate imperfect detection efficiency. This principle can be used to calculate the efficiency \(\eta\) of each detector:

\[\eta_a = \frac{N_{a,b}}{N_b}, \qquad \eta_b = \frac{N_{a,b}}{N_a} \tag{1}\]

In a practical setting, \(\eta\) will include collection losses as well as the detector efficiency, and dark counts, so these losses must be carefully calibrated to extract the true detector efficiency14. While this methodology was originally designed for single-pixel detectors, it can be extended to multi-pixel cameras15. A major advantage of this approach is that it is self-contained and does not require any previously calibrated sources or detectors. Furthermore, because it is known that the two photons are emitted simultaneously (within a sub-picosecond coherence time), this method can also be used to determine the temporal resolution of a detector16. Here we combine these two ideas to show that photon pairs can be used to measure the temporal resolution and the position-dependent efficiency of the camera system. We also show that this method can highlight two error mechanisms that can arise in post-processing: double detection, where one photon leads to two detection events, and dead zones, where two photons arriving at a similar time and position get counted as a single event.
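As a minimal sketch of the two-detector calibration principle described above, the following Python snippet computes both efficiencies from singles and coincidence counts. The function name and the example counts are illustrative assumptions, not measured data, and the sketch ignores dark counts and collection losses.

```python
def klyshko_efficiencies(n_a, n_b, n_ab):
    """Return (eta_a, eta_b) from singles counts n_a, n_b and coincidences n_ab."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("singles counts must be positive")
    # A coincidence needs both photons detected: n_ab = eta_a * eta_b * N_pairs,
    # while n_b = eta_b * N_pairs, so the unknown pair number cancels in the ratio.
    eta_a = n_ab / n_b
    eta_b = n_ab / n_a
    return eta_a, eta_b


# Illustrative numbers only: 1e6 pairs, true efficiencies 0.08 and 0.5.
n_pairs = 1_000_000
n_a, n_b, n_ab = 0.08 * n_pairs, 0.5 * n_pairs, 0.08 * 0.5 * n_pairs
eta_a, eta_b = klyshko_efficiencies(n_a, n_b, n_ab)
```

Note that neither efficiency requires knowledge of the pair generation rate, which is why no calibrated source is needed.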

Figure 1. A schematic diagram of the experiment. A 405 nm continuous wave laser pumps a nonlinear crystal, generating pairs of photons by SPDC. A longpass filter removes the residual pump and the SPDC light is collimated by a lens. The photons impinge upon an image intensifier such that an incident photon is converted into a bright flash of light which can be registered on the time-tagging camera. A bandpass filter in front of the intensifier protects it from spurious light. Inset: The charge on each pixel is amplified and compared to a threshold level to measure the time of arrival (ToA) and time over threshold (ToT) of each event.

Results

Apparatus

A conceptual diagram of the experimental setup is shown in Fig. 1. A 405 nm laser pumps a type-0 nonlinear crystal to produce degenerate pairs of photons centred at 810 nm. The pump laser is blocked by a longpass interference filter. The photon pairs are collimated by a lens and sent to the camera system, which consists of the TPX3CAM and appended intensifier; an additional bandpass filter (\(810\pm 5\) nm) is attached directly to the front of the intensifier to remove stray light. Because the photons are imaged in the far field, the position at which they are detected on the camera is proportional to the angle at which they are emitted. Due to conservation of momentum, degenerate photon pairs will ideally emerge from the crystal at equal but opposite angles, so their arrival positions on the camera are anti-correlated such that \(x_1\simeq -x_2\) and \(y_1 \simeq -y_2\), where x, y are the coordinates on the camera, and the center of the beam is at \(x=y=0\). As discussed, the TPX3CAM is not single-photon sensitive, but it can be made so by attaching an image intensifier. When a photon is incident upon the intensifier, it first strikes the photocathode, generating a photoelectron; the photoelectron is then amplified into a burst of electrons by a multichannel plate (MCP); the burst of electrons strikes a phosphor screen to generate a burst of photons, which are imaged onto the camera. The incident burst of photons is registered on a small cluster of camera pixels. Identifying clusters that are due to single photons, and attributing unique time and position coordinates to each cluster, requires careful post-processing of the data.

Every pixel in the TPX3CAM contains: an amplifier which boosts the electrical signal; a comparator which compares this signal to a user-defined threshold; and a counter which logs the time at which the signal rises above the threshold, and the time at which it falls below it. This process is shown pictorially in the inset of Fig. 1. The time at which the amplified signal rises above the threshold is known as the time of arrival (ToA), and the duration for which the signal remains above threshold is known as the time over threshold (ToT). The ToA is a good approximation of when a pulse of light has struck the pixel, but it is not perfect. Because the ToA is registered only by the time the signal crosses the threshold, and not by a full digitization of the waveform, a brighter pulse of light will lead to a larger signal which will appear to have an earlier ToA and a larger ToT. Conversely, a weaker pulse of light will appear to have a later ToA and a shorter ToT17. A cluster is brighter in the middle and weaker on the edges, resulting in a spread in ToA values that can be \(>100\) ns18.
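The thresholding effect described above can be illustrated with a toy model. The sketch below assumes a hypothetical pulse shape (a smooth rise to a peak followed by a decay) and arbitrary units for amplitude and threshold; none of these values come from the camera itself. It only shows that, for a fixed threshold, a larger pulse crosses earlier and stays above threshold longer.

```python
import math


def toa_tot(amplitude, threshold, rise_time_ns=50.0, dt_ns=0.1, t_max_ns=2000.0):
    """Return (ToA, ToT) in ns for a toy pulse, or None if no full crossing is seen."""
    toa = None
    steps = int(t_max_ns / dt_ns)
    for i in range(steps + 1):
        t = i * dt_ns
        # Toy pulse shape (assumption): peaks at `amplitude` when t == rise_time_ns.
        signal = amplitude * (t / rise_time_ns) * math.exp(1.0 - t / rise_time_ns)
        if toa is None and signal >= threshold:
            toa = t  # first sample at or above threshold
        elif toa is not None and signal < threshold:
            return toa, t - toa  # first sample back below threshold
    return None


bright = toa_tot(amplitude=10.0, threshold=1.0)  # e.g. a pixel at the cluster center
dim = toa_tot(amplitude=1.5, threshold=1.0)      # e.g. a pixel at the cluster edge
```

In this toy model the dim pulse is registered later than the bright one even though both start at the same instant, which is the time-walk that the post-processing has to handle.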

Post-processing

Converting the raw data stream into single events, and subsequently measuring the efficiency of the camera, requires a series of post-processing steps. These are described in detail in the below sections, and summarised by the flow-chart in Fig. 2.

The first step is cluster identification, in which clusters of events are grouped together. Next is centroiding, where each cluster is assigned a single spatial-temporal coordinate. The sum of all these single events makes up the singles image. In the pairing step, pairs of events with correlated arrival times and anti-correlated arrival positions are identified; an image containing the sum of all these pairs is known as the coincidence image. The efficiency map is a plot of the per-pixel efficiency of the camera, which can be calculated by dividing the coincidence image by a rotated copy of the singles image.

Cluster identification

Because of the gain in the intensifier, a single incident photon is converted to a bright burst of photons. This burst illuminates multiple pixels resulting in a cluster of events which have similar, but not identical, spatial and temporal coordinates. The first step in the post-processing, which we term cluster identification, is the process of identifying clusters of events that are due to a single photon incident on the intensifier. Typically the centre of the burst is brightest therefore the central pixel in the cluster will measure the highest intensity, and the surrounding pixels will be dimmer. Due to the nature of thresholding, the brightest central pixel will have the earliest ToA, and the surrounding pixels will have later ToAs. Therefore, even though the burst is due to a single photon, the pixels will appear to arrive at different times, which can vary by up to hundreds of nanoseconds. It is possible, to a degree, to correct for this temporal spread by so-called ToT-ToA correction19, but the correction is imperfect and significant temporal spread remains.

In order to identify clusters, we define a 3D box of size \(\delta x \times \delta y \times \delta \tau\), where x, y, and \(\tau\) are the positions and time of each event. A cluster is defined as a group of events that fall within a box of these dimensions. The exact dimensions of the box depend on the properties of the apparatus including the photon energy, intensifier gain, and camera threshold, and must be optimised for each system. In our case, the optimised values are \(\delta x = \delta y = 17\) pixels, and \(\delta \tau = 300\) ns. Although the \(\delta \tau\) might seem excessive, there are noticeable improvements compared to 200 ns or less. This algorithm prioritizes efficiency over computing speed, and might not be as useful as other algorithms20 for experiments with a large number of events.

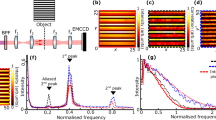

If the clustering algorithm is performed incorrectly, for example if \(\delta \tau\) is too small, then clusters can be split in half, resulting in a single photon being counted twice, which has major implications for quantum imaging. This error mechanism, which we term double-counting, is illustrated graphically in Fig. 3. The camera is illuminated by the SPDC light and pairs of clusters are identified. A 2-dimensional histogram of the x-position of one cluster \(x_1\) is plotted against the x-position of the other cluster \(x_2\). If the pair of clusters is due to genuine SPDC photons, then their positions will be anti-correlated, resulting in an anti-diagonal line on the 2-dimensional histogram. If the pair of clusters is due to double counting from improper clustering, then their positions will be correlated, resulting in a diagonal line. In Fig. 3a, the clustering algorithm has been appropriately applied with \(\delta \tau = 300\) ns. In this case the box is larger than the typical cluster size, so all events from a cluster are included and the histogram is dominated by true coincidences from SPDC. In Fig. 3b, we see the effects of poor clustering. Here \(\delta \tau\) is set to 17 ns, which is significantly smaller than the typical cluster size, so clusters are split, resulting in the possibility that two or more events can be generated by a single photon. In this case the histogram is dominated by false coincidences due to these split clusters. Uncorrelated pairs arising in the off-diagonal areas occur due to various noise mechanisms including electrical noise and background light. Therefore we can immediately see, from a single plot, the effects of signal (anti-diagonal), noise (off-diagonal) and errors (diagonal) in our system.
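The diagnostic described above can also be expressed numerically rather than graphically. The following sketch counts how many pairs fall near the anti-diagonal, near the diagonal, or elsewhere. The tolerance `tol` and the example pairs are hypothetical, and positions are assumed to be measured relative to the beam center.

```python
def classify_pairs(pairs, tol):
    """Count (x1, x2) pairs as signal, split-cluster errors or noise."""
    counts = {"anti_diagonal": 0, "diagonal": 0, "off_diagonal": 0}
    for x1, x2 in pairs:
        # Near the origin both tests pass; such pairs are counted as anti-diagonal.
        if abs(x1 + x2) <= tol:
            counts["anti_diagonal"] += 1   # x1 ~ -x2: genuine SPDC pair
        elif abs(x1 - x2) <= tol:
            counts["diagonal"] += 1        # x1 ~ x2: likely one photon counted twice
        else:
            counts["off_diagonal"] += 1    # uncorrelated: noise or background
    return counts


# Hypothetical beam-centered x-positions (in pixels) of four event pairs.
example_pairs = [(40, -38), (-60, 61), (25, 27), (10, 90)]
summary = classify_pairs(example_pairs, tol=5)
```

A large `diagonal` fraction would suggest that the clustering box is too small for the data at hand.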

Figure 3. Two-dimensional histograms of the horizontal position of pairs of events. Pairs of photons generated by SPDC exhibit strong anti-correlations resulting in an anti-diagonal line (\(x_1 \simeq -x_2\)). In (a), clustering has been performed appropriately and this anti-diagonal line dominates. In (b), the clustering algorithm parameters are chosen inappropriately and a strong diagonal line (\(x_1\simeq x_2\)) emerges because clustering can return two or more events for a single photon detection.

Centroiding

Once event clusters have been identified, we next need to assign a single position and arrival time to each cluster; this process is known as centroiding. Assigning the position is straightforward: we simply find the mean position of the cluster. In this case, we select the closest pixel to the mean, but it has been shown that centroiding can be used to improve spatial resolution by sub-dividing the pixels to gain a more accurate cluster center21.

Assigning the arrival time is more complex. Because of the thresholding issue discussed above, each pixel in a cluster has a different ToA. In this case, computing the mean ToA is not a good choice because it can be greatly affected by low-intensity pixels at the edge of the cluster. Three better options are assigning the ToA of the central pixel, the ToA of the pixel with the earliest ToA, or the ToA of the pixel which has the highest ToT. The results of these ToA assignment methods are compared through a two-photon coincidence measurement. Because the photons are produced in pairs, we know their arrival times should be identical to within their coherence time (sub-picosecond). Therefore, by measuring the arrival time difference across thousands of pairs, we can accurately measure the temporal resolution of the camera. This temporal resolution is affected by the ToA assignment method.
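A minimal sketch of centroiding, assuming each event is a tuple `(x, y, toa_ns, tot_ns)` (this data layout is an assumption for illustration): the position is the pixel closest to the mean position, and the timestamp is the ToA of the pixel with the largest ToT, one of the options listed above.

```python
def centroid(cluster):
    """Reduce a cluster of (x, y, toa_ns, tot_ns) events to a single (x, y, toa_ns)."""
    n = len(cluster)
    mean_x = sum(event[0] for event in cluster) / n
    mean_y = sum(event[1] for event in cluster) / n
    # Timestamp: ToA of the brightest pixel (largest ToT), which crosses threshold earliest.
    brightest = max(cluster, key=lambda event: event[3])
    # Position: nearest pixel to the mean (no sub-pixel interpolation in this sketch).
    return round(mean_x), round(mean_y), brightest[2]


# Hypothetical cluster: a bright central pixel and three dimmer, later neighbours.
example_cluster = [
    (100, 50, 1000.0, 400.0),
    (101, 50, 1012.5, 150.0),
    (100, 51, 1020.3, 120.0),
    (99, 50, 1031.2, 90.0),
]
single_event = centroid(example_cluster)
```

Swapping the `max(..., key=...)` line for the earliest ToA or the central pixel gives the other two options compared in the text.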

To measure the temporal resolution of the camera, we split the detection events into two bins: one for photons that strike the top half of the sensor (T) and another for those that strike the bottom half (B). Because the arrival positions of the two photons in a pair are anti-correlated, if one photon arrives in B, the other must arrive in T. Once the events have been binned, the arrival time difference between the photon pair is calculated as:

\[\Delta \text{ToA} = \text{ToA}_T - \text{ToA}_B \tag{2}\]

Figure 4 shows a histogram of \(\Delta\)ToA values, where the ToA has been assigned to each cluster using one of the aforementioned methods. In each case, a peak centered at \(\Delta\)ToA\(= 0\) is indicative of the correlated nature of SPDC pair production. The width of the peak in the histogram returns the temporal resolution of the technique. As expected, assigning the mean ToA gives poor temporal resolution, but using the center, maximum ToT, and minimum ToA values all give comparable results. Assigning the ToA of the event with maximum ToT gives the highest resolution, so this method is used for the remainder of the results. The FWHM of the peak is 10.3 ns, implying that the temporal resolution of the detector is \(\frac{10.3}{\sqrt{2}} = 7.3\) ns.
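The factor of \(\sqrt{2}\) arises because \(\Delta\)ToA is the difference of two timestamps; if each carries independent jitter of equal width, the widths add in quadrature. The sketch below estimates the FWHM of a sampled peak by linear interpolation and applies this factor. The synthetic Gaussian histogram is an assumption used only to exercise the function; it is constructed to have the 10.3 ns FWHM quoted in the text.

```python
import math


def fwhm(xs, ys):
    """FWHM of a single-peaked curve sampled at increasing xs (linear interpolation)."""
    half = max(ys) / 2.0
    above = [i for i, y in enumerate(ys) if y >= half]
    lo, hi = above[0], above[-1]

    def crossing(i, j):
        # x at which the straight line through samples i and j reaches `half`.
        return xs[i] + (half - ys[i]) * (xs[j] - xs[i]) / (ys[j] - ys[i])

    left = crossing(lo - 1, lo) if lo > 0 else xs[lo]
    right = crossing(hi, hi + 1) if hi < len(xs) - 1 else xs[hi]
    return right - left


# Synthetic Delta-ToA histogram (assumption): Gaussian with a 10.3 ns FWHM.
sigma = 10.3 / (2.0 * math.sqrt(2.0 * math.log(2.0)))
xs = [-50.0 + 0.5 * i for i in range(201)]
ys = [math.exp(-x * x / (2.0 * sigma * sigma)) for x in xs]

width = fwhm(xs, ys)                 # width of the pair-difference peak
resolution = width / math.sqrt(2.0)  # single-detection resolution
```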

Figure 4. Arrival time difference histogram of event pairs exhibiting a peak at \(\Delta \text {ToA}=0\) due to correlated SPDC pairs. Since the coherence time of the photons is \(\simeq 100\) fs, the width of this peak is a measure of the timing jitter of the system. Different methods have been used to assign the arrival time of a cluster, details of which are described in the text, resulting in different temporal performance. Note that the center pixel curve is not visible as it overlaps with the minimum ToA curve.

Efficiency measurement

As shown in Eq. (1), the efficiency of a pair of single photon detectors can be characterized by measuring coincident detection from an SPDC source. The same principles can be applied to characterize the efficiency of the camera. We begin by taking a 25 second acquisition of the SPDC output, resulting in \(\sim 11\) million detection events. This is used to generate a singles image S(x, y) (Fig. 5c), which is simply the total number of events detected on each pixel. Next we make another 25 second acquisition which is identical to the first in every way except that the SPDC beam is blocked; this generates a background singles image \(S_B(x,y)\) (Fig. 5d). The coincidence image C(x, y) (Fig. 5a) is calculated by isolating pairs of events that are correlated in arrival time (to within the timing jitter measured in Fig. 4), and anti-correlated in position (to within the uncertainty shown in Fig. 3). Further details of the coincidence selection technique can be found in the methods section. As well as true coincidences from SPDC pairs, the coincidence image also contains accidental coincidences. Sources of accidental coincidences include stray light, electrical noise, pairs in which the two photons derive from separate SPDC events, and combinations of the above processes. Considering both temporal and spatial coincidence windows, these false coincidences account for only \(\sim 10^{-5}\) of the total number of coincidences in our setup, but are included in the efficiency measurement for completeness. In a different configuration, false coincidences may play a more significant role. Details of how the background coincidence image \(C_B(x,y)\) (Fig. 5b) is calculated can be found in the methods section.

In direct analogy with Eq. (1), the camera efficiency can be calculated as the ratio of the background-subtracted coincidence image at a particular position to the background-subtracted singles image at the conjugate position. Because the position anti-correlations from the source are not perfect, the conjugate position is not a single pixel, but rather an area centered about the conjugate position whose diameter is determined by the width of the anti-diagonal line in Fig. 3. The position-dependent efficiency \(\eta (x,y)\) is therefore given by:

\[\eta (x,y) = \frac{C(x,y) - C_B(x,y)}{\overline{S}(-x,-y) - \overline{S_B}(-x,-y)} \tag{3}\]

where \(\overline{S}(x,y)\) and \(\overline{S_B}(x,y)\) are the singles and background singles images respectively, with a moving average applied to take into account the imperfect correlation. Details of this moving average can be found in the methods section. The resulting efficiency plot, shown in Fig. 5e, provides a map of the quantum efficiency of the entire detection system as a function of position. Note that a square area of \(17\times 17\) pixels in the center of the camera appears to have zero efficiency. This dead zone is not a physical effect, but rather an effect of the post-processing: if two or more photons arrive at the intensifier at a similar position and time, then the clustering algorithm will combine both into a single event. Therefore, the choice of \(\delta x\) and \(\delta y\) in the clustering algorithm is important. If it is too small then clusters will be split and one photon will be registered as two events. If it is too large then two photons arriving close to each other will be registered as a single event. In this work, \(\delta x = \delta y = 17\) pixels achieved an appropriate compromise.
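The efficiency map can be sketched as a pixel-wise ratio between the background-subtracted coincidence image and a 180-degree rotated copy of the background-subtracted, smoothed singles image. The sketch below uses plain nested lists and assumes that the beam center coincides with the image center; the tiny \(2\times 2\) images are made-up values for illustration only.

```python
def rotate_180(image):
    """Rotate a 2D list by 180 degrees, mapping (x, y) to (-x, -y) about the image center."""
    return [row[::-1] for row in image[::-1]]


def efficiency_map(c, c_b, s_bar, s_b_bar):
    """Per-pixel ratio (C - C_B)(x, y) / (S_bar - S_B_bar)(-x, -y).

    Pixels whose denominator is not positive are returned as None.
    """
    s_rot, sb_rot = rotate_180(s_bar), rotate_180(s_b_bar)
    eta = []
    for r, row in enumerate(c):
        eta_row = []
        for k, coinc in enumerate(row):
            denom = s_rot[r][k] - sb_rot[r][k]
            eta_row.append((coinc - c_b[r][k]) / denom if denom > 0 else None)
        eta.append(eta_row)
    return eta


# Made-up 2x2 images (counts), with no background for simplicity.
c = [[8, 4], [2, 6]]
zeros = [[0, 0], [0, 0]]
s_bar = [[100, 50], [40, 80]]
eta = efficiency_map(c, zeros, s_bar, zeros)
```

Each coincidence pixel is divided by the singles value at the diagonally opposite pixel, which is where its partner photon is expected to land.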

Figure 5. Visualization of the calculation of the per-pixel efficiency of the sensor (Eq. 3). (a) A coincidence image is formed by including only events that have temporal and spatial partners. (b) A background coincidences image calculated according to Eq. 7. (c) A raw image of the SPDC beam and background. (d) Background image acquired with the SPDC beam switched off. (e) The per-pixel efficiency is calculated by the ratio of the background-subtracted coincidences in a pixel (red dot in a) to the conjugate area in the singles background-subtracted image (red circle in c).

The corners of the camera are not covered by the intensifier, so cannot be measured but the rest of the sensor, apart from the dead zone, is effectively characterised. In this region, the mean and standard deviation pixel efficiencies are \(\left<\eta \right> = 7.4 \pm 2\) %. The efficiency is approximately uniform, though some less efficient patches are evident.

Discussion

In this paper, we have described a method for characterising a single photon event camera using the time and momentum correlations inherent in photon pairs generated through SPDC. These methods were used to characterize a TPX3CAM event camera, which is made sensitive to single photons using an appended intensifier. In such a system, significant post-processing is required to analyse the data, including cluster identification, centroiding and timing corrections. Accordingly, the performance of this system depends on both hardware and software, highlighting the importance of an effective characterization method.

We use this method to determine that the position-dependent quantum efficiency of the system is approximately uniform with a mean efficiency of \(\left<\eta \right> = 7.4 \pm 2\) %, and the temporal resolution is 7.3 ns FWHM. It is important to note that the reported values depend on the specific hardware and software settings used in our laboratory. As such, this paper should not be considered a universal characterisation of the TPX3CAM platform, but rather a recipe for evaluating such camera systems. The efficiency measurement includes losses from other optical elements: two filters and one lens. In practice, at least one lens would be required to form an imaging system, and filters are also required to ensure the sensor is not overwhelmed by stray light; as such, \(\left<\eta \right>\) should be considered as the efficiency of the entire imaging system. The mean efficiency of the detector alone is calculated to be \(\left<\eta _c\right> = 8.0\pm 2\)% by dividing by the known transmission of these three elements (see methods section).

We also identify an error mechanism that can occur in post-processing due to improper cluster identification, where a single photon is registered as two or more separate events. We also encounter the reverse problem, where multiple photons arriving at a similar time and position are counted as a single event. These are examples of how the proposed characterization method can evaluate both software and hardware performance.

While this methodology was developed in the framework of the TPX3CAM, we expect that elements therein will be applicable to other devices. For example, intensified CCD cameras will also experience clustering issues, and densely-packed single photon avalanche detector (SPAD) arrays22 may experience cross-talk between adjacent pixels. The analysis shown in Fig. 3 will be useful for diagnosing these issues. Single photon array detectors such as these are emerging as powerful tools for quantum enhanced imaging. The use of quantum-correlated light to characterize these cameras for quantum imaging is logical because it allows us to benchmark their performance directly in the setting in which they will be used.

Methods

Setup

A 405 nm continuous-wave laser pumps a 1 mm thick type-0 periodically poled potassium titanyl phosphate (ppKTP) crystal to produce around 2 million entangled photon pairs per second through SPDC, centred at 810 nm. The pump laser is then removed by a longpass interference filter (Thorlabs FELH0600). The photon pairs are collimated by a 10 cm focal length lens (Thorlabs LA1509-B) to illuminate the full sensor area of the camera system, which consists of the TPX3CAM and appended image intensifier (Photonis Cricket with Hi-QE Red photocathode). An additional \(810\pm 5\) nm bandpass filter (Thorlabs FELH0600) is attached directly to the front of the intensifier to remove stray light and isolate degenerate SPDC photons. The transmissions of the longpass filter, lens, and bandpass filter at 810 nm are 96%, 99.5% and 97% respectively, giving 92.7% overall transmission.
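The overall transmission, and the detector-only efficiency quoted in the discussion, follow from simple arithmetic on the values above. The short check below uses only numbers stated in the text; the variable names are illustrative.

```python
# Transmissions at 810 nm quoted in the text.
t_longpass = 0.96
t_lens = 0.995
t_bandpass = 0.97

total_transmission = t_longpass * t_lens * t_bandpass  # about 0.927

# Mean system efficiency from the main text, in percent.
eta_system_percent = 7.4

# Removing the optics losses gives the efficiency of the detector alone.
eta_detector_percent = eta_system_percent / total_transmission  # about 8.0
```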

While type-0 ppKTP was used for this demonstration, it is worth noting that other nonlinear crystals (for example, \(\beta\)-Barium Borate (BBO), potassium titanyl phosphate (KTP), lithium niobate (LiNbO\(_3\)) etc.) would be equally appropriate. The most important condition is that the crystal should produce photon pairs that are emitted in a non-collinear fashion to ensure that the two photons impinge upon different parts of the camera. In most SPDC sources this condition is automatically fulfilled due to phase matching conditions. In type-II crystals, the two photons will be generated with opposite polarization, which will introduce a time delay due to birefringence. However, this delay will be in the picosecond regime and will have little practical effect on the temporal characterisation of the camera.

Cluster identification

Due to the gain of the intensifier, each photon detected results in a cluster of events on the camera. This cluster must be reduced to a single spatio-temporal event. For this purpose, a custom cluster identification algorithm that accounts for the different spatial and temporal ranges of a cluster was created. The algorithm takes every event that is not already in a cluster and compares it to the following 200 events; if an event is within a 17 pixel \(\times 17\) pixel \(\times 300\) ns box then it belongs in the cluster and is not involved in further checks. Each cluster contains O(10) events which are distributed over \(>100\) ns due to large ToA delays from events at the edge of a cluster. Because the events from the camera are sorted temporally, it is possible that several clusters from different spatial positions will temporally overlap. By comparing each event to the following 200, we can be reasonably confident that every event in a cluster is identified. Comparing more than 200 events will produce better results, but the small improvement does not justify the increased processing time. The spatial and temporal dimensions of the box (17 pixels and 300 ns respectively) were optimised by observing the efficiency and noise after centroiding; increasing the dimensions improves efficiency as more events are captured, but more false events are also captured, so noise increases.
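The procedure above can be sketched in a few lines of Python. This is an illustrative reading of the description rather than the code used for the measurements: events are assumed to be time-sorted tuples `(x, y, toa_ns)`, and the spatial box is assumed to be centered on the first (seed) event of each cluster, which the text does not specify.

```python
def find_clusters(events, box_px=17, box_ns=300.0, lookahead=200):
    """Group time-sorted (x, y, toa_ns) events into clusters of event indices."""
    half = box_px // 2  # assumption: the spatial box is centered on the seed event
    assigned = [False] * len(events)
    clusters = []
    for i, (x0, y0, t0) in enumerate(events):
        if assigned[i]:
            continue  # already part of an earlier cluster
        assigned[i] = True
        members = [i]
        for j in range(i + 1, min(i + 1 + lookahead, len(events))):
            if assigned[j]:
                continue
            x, y, t = events[j]
            if t - t0 > box_ns:
                break  # time-sorted input: all later events are outside the box too
            if abs(x - x0) <= half and abs(y - y0) <= half:
                assigned[j] = True
                members.append(j)
        clusters.append(members)
    return clusters


# Hypothetical data: two clusters that overlap in time, plus one isolated event.
events = [
    (100, 100, 0.0), (30, 200, 5.0), (101, 100, 12.0),
    (31, 201, 40.0), (100, 99, 150.0), (200, 50, 1000.0),
]
good = find_clusters(events)                # 300 ns window
split = find_clusters(events, box_ns=17.0)  # window that is too short
```

With the 300 ns window the two interleaved clusters are recovered intact; with the 17 ns window the same data fragment into five clusters, which is the double-counting error discussed in the main text.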

Many existing cluster identification algorithms, such as DBSCAN or the algorithm developed by Meduna et al.20, can run faster with satisfactory efficiency. However, due to the non-linear relationship of \(\text {ToT}\) to \(\Delta \text {ToA}\) and the increased uncertainty in \(\Delta \text {ToA}\) at smaller \(\text {ToT}\), density-based algorithms struggle with the variable density within a cluster and occasionally count two clusters as one, or one cluster as two, resulting in errors19. For practical applications such errors might be insignificant when compared to the run-time gains. However, while density-based algorithms might be suitable for running an experiment, the algorithm provided in this paper is more suitable for characterising the camera accurately and as a benchmark for other algorithms. Furthermore, the freedom to tune the parameters and utilize the provided metrics gives insight into the shape and size of the clusters and the properties of the intensifier.

Efficiency measurement

The efficiency measurement requires four constituent images, as shown in Eq. (3). This section details the calculation of these four images.

The coincidence image C(x, y) is calculated in two steps: first, pairs of events that are temporally correlated are identified; second, these events are sifted to find pairs that are also spatially anti-correlated. Neither the temporal nor the spatial (anti)correlations are perfect, so we define coincidence windows. From the width of the main peak in Fig. 4, the temporal coincidence window is chosen to be \(\Delta \textrm{ToA} = 20\) ns. Ideally, the photons would emerge from the crystal with exactly opposite angles; however, due to uncertainties in the momentum of the pump photons and the slight wavelength difference between SPDC photons, an uncertainty in the momentum anti-correlation is observed as a width of \(\sigma _x = 20\) pixels for the anti-diagonal line seen in Fig. 3 for the x-coordinate, and similarly (\(\sigma _y\)) in the y-coordinate. Therefore, the three conditions required to define a pair of coincidences are:

\[|\text{ToA}_1 - \text{ToA}_2| \le \Delta \text{ToA} \tag{4}\]

\[|x_1 + x_2| \le \sigma _x \tag{5}\]

\[|y_1 + y_2| \le \sigma _y \tag{6}\]

Here the x and y pixel labels have been shifted such that the center of the SPDC beam is at \(x=y=0\). All events that do not have a spatial-temporal correlated partner are rejected, and the remaining events at each pixel are summed to form the 2-dimensional coincidence image C(x, y).
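A minimal sketch of this pair selection is given below. It reads the three conditions as windows on the arrival time difference and on the sums \(x_1+x_2\) and \(y_1+y_2\), which is an assumption about their exact form. Events are assumed to be time-sorted tuples `(x, y, toa_ns)` in beam-centered coordinates; the example events are made up.

```python
def find_coincidences(events, dt_ns=20.0, sigma_px=20):
    """Return index pairs (i, j) of events passing the temporal and spatial windows."""
    pairs = []
    for i, (x1, y1, t1) in enumerate(events):
        for j in range(i + 1, len(events)):
            x2, y2, t2 = events[j]
            if t2 - t1 > dt_ns:
                break  # time-sorted input: no later event can be in the time window
            # Anti-correlated positions: the coordinate sums should be close to zero.
            if abs(x1 + x2) <= sigma_px and abs(y1 + y2) <= sigma_px:
                pairs.append((i, j))
    return pairs


# Hypothetical centroided events: one genuine pair, one split cluster, two strays.
events = [
    (50, -30, 0.0), (-48, 33, 6.0),    # close in time, opposite positions
    (10, 10, 500.0), (12, 11, 504.0),  # close in time, same position
    (-70, 20, 900.0), (65, -15, 980.0),  # opposite positions, too far apart in time
]
coincidences = find_coincidences(events)
```

Only the first two events are accepted; the second pair fails the spatial test and the third fails the temporal test.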

We must also calculate the smoothed singles image \(\overline{S}(x,y)\) and background singles image \(\overline{S_B}(x,y)\). Because the momentum correlation between pairs of photons is not perfect, we cannot calculate the efficiency directly by comparing coincidences and singles from geometrically opposite pixels. Instead, the coincidences from a given pixel are divided by the mean of the pixels in an appropriate region in the singles image. As before, the radius of this region is 20 pixels. The smoothed singles image \(\overline{S}(x,y)\) is therefore simply calculated by assigning each pixel the mean of the relevant area in the singles image. The same process is applied to the background image to form the smoothed background singles image \(\overline{S_B}(x,y)\).
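The smoothing step can be sketched as a moving average over a disk. The implementation below uses plain nested lists; the handling of pixels near the image border (averaging only over in-bounds pixels) is an assumption, since the text does not specify it. The \(3\times 3\) example uses a radius of 1 purely for readability, whereas the text uses 20 pixels.

```python
def disk_mean(image, radius):
    """Replace each pixel by the mean of all in-bounds pixels within `radius` of it."""
    rows, cols = len(image), len(image[0])
    # Pre-compute the (row, column) offsets that lie inside the disk.
    offsets = [
        (dr, dc)
        for dr in range(-radius, radius + 1)
        for dc in range(-radius, radius + 1)
        if dr * dr + dc * dc <= radius * radius
    ]
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            vals = [
                image[r + dr][c + dc]
                for dr, dc in offsets
                if 0 <= r + dr < rows and 0 <= c + dc < cols
            ]
            out_row.append(sum(vals) / len(vals))
        out.append(out_row)
    return out


# Tiny made-up singles image with a single bright central pixel.
singles = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
smoothed = disk_mean(singles, radius=1)
```

This direct implementation is slow for a \(256\times 256\) image with a 20 pixel radius; a convolution routine from a numerical library would be the usual choice in practice.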

Finally, background coincidences due to background light, electrical noise, double-pair emission, and combinations of the above events need to be eliminated. These accidental coincidences can be calculated from the singles images by assuming they arise from uncorrelated processes. In this case, the number of accidental coincidences between a pixel and the conjugate area can be calculated to return the background coincidence image \(C_B(x,y)\):

\[C_B(x,y) = S(x,y)\, \overline{S}(-x,-y)\, \Delta \text{ToA} \tag{7}\]

where S(x, y) and \(\overline{S}(-x,-y)\) are expressed in counts per second, and \(\Delta\)ToA\(=20\) ns is the coincidence window chosen to measure the coincidence image. In this setup, the background coincidence rate is \(\sim 10^{-5}\) times lower than the true coincidence rate so makes no practical difference to the efficiency measurement, but is included for completeness.
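For two uncorrelated count streams, the standard estimate of the accidental coincidence rate is the product of the two singles rates and the coincidence window. The sketch below applies this estimate; the example rates are hypothetical and are not taken from the measurement.

```python
def accidental_rate(rate_1, rate_2, window_s):
    """Accidental coincidences per second for two uncorrelated count streams."""
    return rate_1 * rate_2 * window_s


# Hypothetical example: 10 counts/s on a pixel, 10 counts/s in the conjugate
# region, and the 20 ns coincidence window used in the text.
window_s = 20e-9
accidentals_per_s = accidental_rate(10.0, 10.0, window_s)  # about 2e-6 per second
```

Because the window is so short, accidental coincidences stay negligible unless the singles rates are very high.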

Comparison

In order to judge the validity of our measurements we describe two alternative techniques to measure the efficiency and temporal resolution.

Efficiency

To provide a comparative measure of the camera efficiency, we couple one mode of the SPDC source into a single mode fiber, and use a single pixel avalanche photodiode (APD) to measure the photon flux at the output of the fiber. In this case we measure the count rate to be \(R_{\textrm{ref}} = 83,500\pm 300\) counts/s. We then remove the fiber from the APD and use it to illuminate a section of the intensifier (using the same filters as in Fig. 1) with the same flux and measure a count rate of \(R_{\textrm{cam}}=8550\pm 60\) counts/s. The manufacturer-specified quantum efficiency of the APD is \(\eta _{\textrm{ref}} = 68\pm 6\)%; we can therefore estimate the mean pixel efficiency of the camera to be \(\left<\eta \right> = \eta _{\rm ref} \times R_{{\rm cam}}/R_{{\rm ref}} = 7.0\pm 0.7\)%. This value is in good agreement with the value that was more rigorously measured in the paper. Note that this type of measurement relies upon the manufacturer-quoted APD efficiency and is not a stand-alone measurement.
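The arithmetic behind this estimate can be checked directly from the quoted numbers. In the sketch below the uncertainty is obtained by a simple linear sum of the relative uncertainties; this is an assumption about how the quoted \(\pm 0.7\)% was obtained (adding the terms in quadrature would give roughly \(\pm 0.6\)%).

```python
def reference_efficiency(eta_ref, r_cam, r_ref):
    """Camera efficiency inferred from a reference detector of known efficiency."""
    return eta_ref * r_cam / r_ref


# Values quoted in the text.
eta_ref, d_eta_ref = 0.68, 0.06    # APD efficiency and its uncertainty
r_cam, d_r_cam = 8550.0, 60.0      # camera count rate (counts/s)
r_ref, d_r_ref = 83500.0, 300.0    # APD count rate (counts/s)

eta = reference_efficiency(eta_ref, r_cam, r_ref)

# Assumption: worst-case (linear) sum of relative uncertainties.
relative_error = d_eta_ref / eta_ref + d_r_cam / r_cam + d_r_ref / r_ref
eta_error = eta * relative_error

eta_percent = round(100 * eta, 1)
eta_error_percent = round(100 * eta_error, 1)
```

The uncertainty is dominated by the APD efficiency, which is why the result cannot be more precise than the manufacturer's specification.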

Temporal resolution

To provide a comparative measure of the temporal resolution, we use a pulsed laser whose pulse duration (150 fs) is significantly shorter than the expected temporal resolution. We split the laser into two beams and illuminate two spots on the camera. We then construct a timing difference histogram between the two regions. The result is a series of peaks, separated by the laser repetition period (12.5 ns). The average and standard deviation of the FWHM of these peaks is \(11.8\pm 0.8\) ns. Because each peak is the convolution of two independent single-region timing responses, its width is \(\sqrt{2}\) times the single-region width, implying that the temporal resolution is \(\frac{11.8\pm 0.8}{\sqrt{2}} = 8.3\pm 0.6\) ns. Due to the fitting of multiple peaks, we expect this answer to be more accurate than the measurement in Fig. 4. Nevertheless, both measurements are in reasonable agreement, suggesting that the photon pair technique is valid.
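The factor of \(\sqrt{2}\) can be illustrated with a minimal sketch. It assumes that the two spots have identical, independent Gaussian timing responses, which is our simplifying assumption; the function name and the simulated jitter value are hypothetical.

```python
import math
import numpy as np

def single_region_resolution(fwhm_diff, dfwhm_diff):
    """Convert the FWHM of a timing-difference peak into the FWHM of one region.

    Assumes two identical, independent Gaussian responses, so the
    difference distribution is wider by a factor of sqrt(2).
    """
    return fwhm_diff / math.sqrt(2), dfwhm_diff / math.sqrt(2)

# Values quoted in the text, in nanoseconds.
res, d_res = single_region_resolution(11.8, 0.8)
print(f"temporal resolution = {res:.1f} +/- {d_res:.1f} ns")

# Numerical check of the sqrt(2) factor with simulated timestamps.
rng = np.random.default_rng(0)
sigma = 3.0                                  # hypothetical jitter in ns
t1 = rng.normal(0.0, sigma, 200_000)         # arrival times in spot 1
t2 = rng.normal(0.0, sigma, 200_000)         # arrival times in spot 2
ratio = np.std(t1 - t2) / sigma              # close to sqrt(2)
```

If the two regions had different timing responses, the widths would instead add in quadrature and the simple division by \(\sqrt{2}\) would return only an effective average.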

Data Availability

The datasets used during the current study are available from the corresponding author on reasonable request.

References

Bennett, C. H. & Brassard, G. Quantum cryptography: Public key distribution and coin tossing. In International Conference on Computers, Systems and Signal Processing (1984).

Ekert, A. K. Quantum cryptography based on Bell’s theorem. Phys. Rev. Lett. 67, 661–663 (1991).

Genovese, M. Real applications of quantum imaging. J. Opt. 18, 073002 (2016).

Zhang, Y. et al. Multidimensional quantum-enhanced target detection via spectrotemporal-correlation measurements. Phys. Rev. A 101, 053808 (2020).

Zhang, Y., England, D., Nomerotski, A. & Sussman, B. High speed imaging of spectral-temporal correlations in Hong-Ou-Mandel interference. Opt. Express 29, 28217–28227 (2021).

Zhang, Y., Orth, A., England, D. & Sussman, B. Ray tracing with quantum correlated photons to image a three-dimensional scene. Phys. Rev. A 105, L011701 (2022).

Zhang, Y., England, D. & Sussman, B. Snapshot hyperspectral imaging with quantum correlated photons. arXiv preprint arXiv:2204.05984 (2022).

Gao, X., Zhang, Y., D’Errico, A., Heshami, K. & Karimi, E. High-speed imaging of spatiotemporal correlations in Hong-Ou-Mandel interference. Opt. Express 30, 19456–19464 (2022).

Svihra, P. et al. Multivariate discrimination in quantum target detection. Appl. Phys. Lett. 117, 044001 (2020).

Nomerotski, A., Keach, M., Stankus, P., Svihra, P. & Vintskevich, S. Counting of Hong-Ou-Mandel bunched optical photons using a fast pixel camera. Sensors 20, 3475 (2020).

Nomerotski, A. et al. Spatial and temporal characterization of polarization entanglement. Int. J. Quantum Inf. 18, 1941027 (2020).

Klyshko, D. Use of two-photon light for absolute calibration of photoelectric detectors. Soviet J. Quantum Electron. 10, 1112 (1980).

Malygin, A., Penin, A. & Sergienko, A. Absolute calibration of the sensitivity of photodetectors using a biphotonic field. JETP Lett. 33, 477–480 (1981).

Ware, M. & Migdall, A. Single-photon detector characterization using correlated photons: the march from feasibility to metrology. J. Mod. Opt. 51, 1549–1557 (2004).

Qi, L., Just, F., Leuchs, G. & Chekhova, M. V. Autonomous absolute calibration of an ICCD camera in single-photon detection regime. Opt. Express 24, 26444–26453 (2016).

Kwiat, P. G., Steinberg, A. M., Chiao, R. Y., Eberhard, P. H. & Petroff, M. D. Absolute efficiency and time-response measurement of single-photon detectors. Appl. Opt. 33, 1844–1853 (1994).

Nomerotski, A. Imaging and time stamping of photons with nanosecond resolution in Timepix based optical cameras. Nucl. Instrum. Methods Phys. Res. Sect. A 937, 26–30 (2019).

Ianzano, C. et al. Fast camera spatial characterization of photonic polarization entanglement. Sci. Rep. 10, 6181. https://doi.org/10.1038/s41598-020-62020-z (2020).

Frojdh, E. et al. Timepix3: First measurements and characterization of a hybrid-pixel detector working in event driven mode. J. Instrum. 10, C01039 (2015).

Meduna, L. et al. Real-time Timepix3 data clustering, visualization and classification with a new clusterer framework. arXiv preprint arXiv:1910.13356 (2019).

Kim, G., Park, K., Lim, K., Kim, J. & Cho, G. Improving spatial resolution by predicting the initial position of charge-sharing effect in photon-counting detectors. J. Instrum. 15, C01034–C01034. https://doi.org/10.1088/1748-0221/15/01/c01034 (2020).

Sajeed, S. & Jennewein, T. Observing quantum coherence from photons scattered in free-space. Light Sci. Appl. 10, 1–9 (2021).

Acknowledgements

The authors would like to thank Guillaume Thekkadath for performing the temporal comparison measurement. They are also grateful to Andrei Nomerotski, Hazel Hodgson, Denis Guay, and Doug Moffatt for stimulating discussions and technical support. This work was partly supported by Defence Research and Development Canada and the National Research Council’s Quantum Sensors Challenge Program.

Author information

Contributions

D.E. and Y.Z. conceived the experiment, Y.Z. and V.V. conducted the experiment, V.V. and Y.Z. analysed the results. V.V., Y.Z., D.E. and B.S. wrote and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vidyapin, V., Zhang, Y., England, D. et al. Characterisation of a single photon event camera for quantum imaging. Sci Rep 13, 1009 (2023). https://doi.org/10.1038/s41598-023-27842-7

DOI: https://doi.org/10.1038/s41598-023-27842-7
