Abstract

Automatic segmentation of the prostate and the prostatic zones on MRI remains one of the most compelling research areas. While different image enhancement techniques are emerging as powerful tools for improving the performance of segmentation algorithms, their application still lacks consensus due to contrasting evidence regarding performance improvement and cross-model stability, further hampered by the inability to explain models’ predictions. Particularly, for prostate segmentation, the effectiveness of image enhancement on different Convolutional Neural Networks (CNN) remains largely unexplored. The present work introduces a novel image enhancement method, named RACLAHE, to enhance the performance of CNN models for segmenting the prostate gland and the prostatic zones. The improvement in performance and consistency across five CNN models (U-Net, U-Net++, U-Net3+, ResU-net and USE-NET) is compared against four popular image enhancement methods. Additionally, a methodology is proposed to explain, both quantitatively and qualitatively, the relation between saliency maps and ground truth probability maps. Overall, RACLAHE was the most consistent image enhancement algorithm in terms of performance improvement across CNN models, with the mean increase in Dice Score ranging from 3 to 9% for the different prostatic regions, while achieving minimal inter-model variability. The integration of a feature-driven methodology to explain the predictions after applying image enhancement methods enables the development of a concrete, trustworthy automated pipeline for prostate segmentation on MR images.

Introduction

Accurate segmentation of the prostate and the prostatic zones on T2w MRI constitutes the first step for a plethora of medical image analysis applications where clinically useful information needs to be extracted from the region of interest (ROI). Some of the most common applications are cancer detection and aggressiveness characterization, early prediction of recurrence, detection of metastases and assessment of treatment effectiveness, among others1. As the medical imaging domain moves toward sub-scale levels, with information being extracted from single voxels or pixels, the demands on segmentation accuracy are increasing2. Particularly with the rise of radiomics analyses, any variability in the segmentation of the ROI will affect the numerical output of the features, thereby introducing bias into the evaluation of quantitative imaging biomarkers3,4. Furthermore, in the era of MRI-guided radiotherapy, precise organ and tumor delineation is of paramount importance as it may directly affect clinical outcomes5,6. Nevertheless, manual delineation of ROIs is not only a time-consuming and labor-intensive task but also depends heavily on the radiologist’s experience7.

To date, a plethora of deep learning (DL) pipelines based on fully convolutional neural networks (CNNs) have emerged to alleviate the burden of manual annotation in various radiological applications by automating and speeding up the segmentation process8. Most commonly, the backbone of such models is the U-Net architecture9. There are a few comprehensive reviews on emerging DL applications for medical image segmentation10,11,12,13. Despite the state-of-the-art performance of novel architectures, segmentation of the prostate, and particularly of the prostatic zones, remains one of the most compelling research areas14,15.

Given the already large number of parameters included in state-of-the-art prostate segmentation models, image preprocessing, either by using denoising filters or contrast enhancement techniques, is aimed at increasing models’ performance by emphasizing key image characteristics relevant to the specific learning task16. An indispensable part of image preprocessing is image enhancement, which aims to improve the visual quality of the image by modifying the intensity values of individual pixels so that anatomical structures can more easily be recognized by humans and machines. This is achieved by means of adequate gray-scale transformations17 aiming to disentangle the intensity distributions arising from adjacent regions with similar gray level intensities18. Therefore, by sharpening the boundaries between different tissues19, contrast enhancement has emerged as a powerful method for improving the accuracy of DL segmentation models20.

In the literature, several studies have demonstrated the effectiveness of image preprocessing in reducing the ambiguity of CNNs regarding their judgment and the feature extraction process21. There is a plethora of image enhancement techniques, many of which propose modifications of the Histogram Equalization (HE) algorithm or combinations of existing methodologies, such as the contrast limited adaptive histogram equalization (CLAHE)22. The CLAHE algorithm is one of the most popular and well-cited image enhancement techniques, as it appears to be particularly effective in medical imaging applications23,24,25. An improvement of CNN models’ performance on a variety of tasks has been reported after the application of image processing techniques, including object and texture classification26,27. This extends to the medical imaging domain as well, in which some authors have compared the effectiveness of image enhancement techniques for improving the quality of different imaging modalities (i.e. X-rays, CT, MRI) and for different clinical applications, such as lung, bone and vessel segmentation23,28,29, but also for disease detection and classification30. For instance, Rahman et al.29 evaluated the impact of various lung segmentation CNN algorithms and image enhancement techniques, including gamma correction, HE, CLAHE, image complement and Balance Contrast Enhancement Technique (BCET) on COVID-19 detection using X-ray images. The effect of image enhancement on liver segmentation from CT images, cervical cancer segmentation from T2w MR images, and vessel segmentation from 2D fundus images has also been investigated, suggesting that image enhancement prior to CNN model training leads to significant improvement in models’ performance31,32,33.

In this work, we propose an extension of the CLAHE method with the aim to improve the performance of state-of-the-art CNN models for segmenting the prostate gland and the prostatic zones. The performance of the proposed Region Adaptive CLAHE (RACLAHE) pipeline was compared against four prominent histogram-based image enhancement techniques, while the influence of the preprocessing methods on segmentation performance was assessed through the implementation of five well-established CNN models.

Overall, the main contributions of this study are the following:

- We propose an image enhancement method that consistently improves the performance of CNN segmentation models in T2w MR images of the prostate.

- We demonstrate, through feature map-driven visual explanations, that the proposed method is capable of enhancing the image features that are most relevant to the segmentation task.

- We introduce a quantitative and qualitative feature importance metric to provide insights regarding DL segmentation models’ performance, thereby enhancing their explainability.

- To the best of our knowledge, this is the first study to quantitatively and qualitatively evaluate the effectiveness of image enhancement methods employing CNN models for prostate segmentation on MR images.

Results

Datasets description

The impact of four well-known histogram-based image enhancement methods, along with the proposed region-adaptive technique, was investigated for improving the segmentation of the prostate’s whole gland (WG), transitional zone (TZ) and peripheral zone (PZ), using two publicly available datasets. One dataset was used for model training and another dataset was used to externally test the models’ performance. For model training, 204 patients from the Prostate-X dataset34,35 were used, along with the corresponding masks for the WG, TZ and PZ. The dataset consists of 3206 frames from Siemens' T2-weighted MR scans (TrioTim, Skyra models). For model testing, the Prostate 3-T36 dataset was employed, which included 30 patients and 421 frames with the associated annotations for all three regions acquired from Siemens' T2-weighted MRIs (Skyra model). In order to better examine the aforementioned prostatic areas, a descriptive analysis was conducted to quantify the inter- and intra-patient volume variations of the different prostatic regions (Supplementary Fig. S1).

Evaluation of preprocessing methods

The metrics used for the evaluation of the proposed method were the Dice Score index (DS), the Rand Error Index (REI), the Sensitivity, the Balanced Accuracy (BA), the Hausdorff Distance (HD), and the Average Surface Distance (ASD). Tables 1, 2 and 3 show the prostate’s WG, the PZ and the TZ segmentation performance, respectively, of the five DL models using different image enhancement methods. For comparison, the models’ performance was also computed using the original images, without applying any enhancement. In the tables, it is also indicated whether the proposed RACLAHE performed significantly better than other preprocessing methods. The corresponding boxplots of DS, Sensitivity and HD are provided in Supplementary Figs. S2–S4 for the WG, PZ and TZ, respectively.

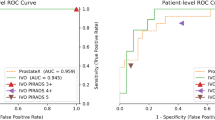

Although for WG segmentation the most robust networks (i.e. U-Net++, U-Net3+, USE-NET) tend to perform best without any image preprocessing, the proposed RACLAHE algorithm was able to improve the sensitivity and BA in most cases, as shown in Table 1. AGCWD was efficient in improving U-Net and U-Net++ but degraded U-Net3+. With AGCCPF, only the performance of U-Net was improved, achieving results similar to RACLAHE, while U-Net++, U-Net3+ and USE-NET were slightly degraded. The CLAHE algorithm marginally outperformed RACLAHE for the ResU-net model, but degraded other networks such as U-Net3+. The RLBHE had the lowest performance compared to other methods, with remarkably high variability. It is worth noting that the USE-NET model was the best performing network and remained invariant to image preprocessing. Even without any preprocessing, USE-NET achieved better scores for WG segmentation than all other models (i.e. AUC = 0.88 ± 0.12).

On the other hand, for the prostate’s PZ segmentation, the RACLAHE algorithm consistently improved the performance of the majority of DL models, as shown in Table 2. The only exception was the ResU-net, for which AGCWD and AGCCPF achieved superior performance. Similar to the WG segmentation task, for PZ segmentation the models’ performance was degraded when the RLBHE was used. The CLAHE algorithm also degraded the models’ performance, except for USE-NET. Overall, the best performance was achieved with the ResU-net model (DS = 0.75 ± 0.17 for AGCCPF). Regarding the segmentation of the prostate’s TZ, shown in Table 3, the proposed RACLAHE algorithm was the only preprocessing method that consistently and significantly improved the performance of all five networks. The best results for TZ segmentation were obtained for RACLAHE combined with the USE-NET model (DS = 0.81 ± 0.16). Overall, the average improvement across DL models in terms of DS was 3%, 8% and 9% for WG, PZ and TZ segmentation, respectively.

Inter-model performance and variability

The stability of each preprocessing method was computed in terms of method-specific average performance and variance in performance across all models. The inter-model performance, referring to the mean value for each method taking into consideration all the models, shows how each approach impacts DL segmentation models in general, and is described as follows:
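Consistent with this description, Eq. (1) can be written in the following form, assuming \(N\) segmentation models indexed by \(n\) (the symbols \(N\) and \(n\) are notation introduced here for illustration):

\[
Performance(m, filt) = \frac{1}{N}\sum_{n=1}^{N} p_{n}(m, filt) \tag{1}
\]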

where \(m\) is the metric, \(filt\) is the histogram processing method, and \(p\) is the performance (mean score) of each model. This metric further assists in identifying the best method, as it reveals the method with the highest average performance across models. In addition, the inter-model variability shows how far the mean values of each model lie from the \(Performance (m,filt)\) evaluation metric. It is expressed as the standard deviation across models (\(std\)) and is given by Eq. (2):
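A form of Eq. (2) consistent with this definition is sketched below. It assumes \(N\) models with per-model mean score \(p_{n}\) (both symbols are notation introduced here), and the normalization by \(N\) rather than \(N-1\) is also an assumption:

\[
std(m, filt) = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(p_{n}(m, filt) - Performance(m, filt)\right)^{2}} \tag{2}
\]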

Figure 1a presents the normalized performance for each preprocessing method while Fig. 1b presents the normalized variability across the segmentation models. For a given preprocessing method, the normalization of the results was performed with respect to the minimum and maximum performance for each metric. For instance, in Fig. 1a the best performing preprocessing method in terms of DS has a value of 1 while the worst has a value of 0. Specifically, for the distance-based metrics (HD, ASD) where lower values indicate a better performance, the inverse of these values was computed in order to have a consistency in scale. In Fig. 1b the variability of the mean performance across models, for each preprocessing method and metric, is shown. After normalization, the lowest variability across models is indicated with the value 0 while the highest variability is indicated with the value 1.
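The aggregation and normalization described above can be sketched in Python as follows. This is a minimal illustration rather than the authors' code: the function names, the example scores, the use of the population standard deviation, and the reading of "inverse" as the reciprocal are all assumptions.

```python
import numpy as np


def inter_model_stats(scores):
    """Mean and standard deviation across models for each enhancement method.

    scores: array-like of shape (n_methods, n_models); entry [f, n] is the
    mean score of model n for one metric after applying method f.
    """
    scores = np.asarray(scores, dtype=float)
    performance = scores.mean(axis=1)  # inter-model performance
    variability = scores.std(axis=1)   # inter-model variability (population std, assumed)
    return performance, variability


def min_max(values, lower_is_better=False):
    """Rescale values to [0, 1]; optionally take the reciprocal first (assumed)."""
    values = np.asarray(values, dtype=float)
    if lower_is_better:
        values = 1.0 / values  # e.g. HD, ASD: smaller distance -> larger score
    span = values.max() - values.min()
    if span == 0:
        return np.zeros_like(values)
    return (values - values.min()) / span


# Hypothetical Dice scores (rows: 3 methods, columns: 3 models), illustration only.
dice = [[0.70, 0.72, 0.74],
        [0.60, 0.75, 0.66],
        [0.78, 0.79, 0.80]]
performance, variability = inter_model_stats(dice)
norm_performance = min_max(performance)  # 1 = best method, 0 = worst method
norm_variability = min_max(variability)  # 0 = most stable, 1 = least stable
```

In this toy example the third method has both the highest mean score and the smallest spread across models, which is the pattern the normalized maps are meant to expose.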

(a) Inter-model performance for each metric and histogram-based preprocessing technique. The best preprocessing method for each metric is depicted in yellow and the worst in dark green. (b) Inter-model variability for each metric and histogram-based preprocessing technique. The best method for each metric is depicted in white and the worst in dark red.

Considering Fig. 1a, for WG and PZ segmentation, the RACLAHE outperformed all the other preprocessing methods, in terms of sensitivity, BA, DS and REI, while it had the second-best performance, after AGCWD, in terms of HD and ASD. With respect to variability in performance across DL models, shown in Fig. 1b, the RACLAHE has the lowest standard deviation for WG and PZ segmentation, apart from the HD and REI metric, respectively. Regarding the TZ segmentation, the proposed method was superior for all the metrics with the lowest inter-model variability in performance.

Explainability of model’s predictions

In order to explain the effect of each preprocessing method on the DL models, we sought to quantify how the features that each model considers important for the task diverge from the ground truth density maps of the binary masks. Specifically, the density map of the ground truth masks is given as:
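Based on the symbol definitions that follow, a consistent form of Eq. (3) is a pixel-wise sum over the binary masks (the summation index \(k\) running from 1 to \(Nsl\) is an assumption):

\[
{GT}_{Density\,map}(i, j) = \sum_{k=1}^{Nsl} G{T}_{ij}(k) \tag{3}
\]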

where \({GT }_{Density \,map}\) is the density map that is extracted after the pixel wise aggregation of all binary \(GT\) masks, and \(G{T}_{ij}(k)\) represents the pixel wise aggregation for the total number of binary ground truth images \(Nsl\) in a certain pixel position \(i, j\). The density map of important features is given as:
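By analogy with the ground truth case, and based on the definitions that follow, Eq. (4) can be written as a pixel-wise sum over the extracted feature maps (the summation index \(k\) running from 1 to \(Nsl\) is an assumption):

\[
{FM}_{Density\,map}(i, j) = \sum_{k=1}^{Nsl} F{M}_{ij}(k) \tag{4}
\]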

where \({FM }_{Density \,map}\) is the density map of the extracted features that a model utilizes for its decision, obtained after the pixel-wise aggregation of those feature maps, and \(F{M}_{ij}(k)\) represents the pixel-wise aggregation for the total number \(Nsl\) of feature maps extracted by a model in a certain pixel position \(i, j\). The latter can take values ranging from 0 to 256, attributed to the spatial dimensions of the density maps. A comprehensive scheme is presented in Fig. 2, while the mean squared error and the absolute subtraction are used as explainability metrics for quantitative and visual assessment. The absolute pixel-wise difference map between Eqs. (3) and (4) is given by Eq. (5):
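Following this description, Eq. (5) can be written as below, where \(DMap\) denotes the absolute difference map (a reconstruction from the surrounding text, not a verbatim copy of the original equation):

\[
DMap(i, j) = \left| {GT}_{Density\,map}(i, j) - {FM}_{Density\,map}(i, j) \right| \tag{5}
\]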

The gradient-weighted class activation mapping (Grad-CAM)37 technique was used to extract the feature maps (FM) from a certain layer of a DL network. In fact, the performance of a model is tightly linked to the feature maps extracted throughout the forward–backward propagation process. Figure 3 presents the significant features processed by the USE-NET model, under the influence of each preprocessing method, applied for the WG, TZ and PZ segmentation. The RACLAHE method seems to improve the accuracy of boundary estimation, assisting the model to focus on relevant pixels. Red areas indicate that the model is certain that those pixels belong to the object of interest, yellow areas imply that the model is less certain about these pixels, while blue areas denote that these pixels make no contribution to the model’s final decision. Figure 4 provides a visual representation of the pixel-wise absolute differences (DMap) between the ground truth density map (GTdensity map) and the density map of the features that are relevant to the model’s decision (FMdensity map). A near-zero value signifies that the significance of a pixel for the model is equal to its GT density. It is evident that the RACLAHE method yields more pixels with values close to the GT, compared to other methods. This suggests that the RACLAHE method can more efficiently assist the DL models in focusing on the meaningful features that are showcased by the GTdensity map, reducing their uncertainty.
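The density maps and the two explainability metrics can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, the feature maps are assumed to be already extracted (e.g. by Grad-CAM), resized to the mask resolution and expressed on the same scale as the binary masks, and the tiny arrays are made-up data.

```python
import numpy as np


def density_map(stack):
    """Pixel-wise sum over a stack of 2D maps with shape (Nsl, H, W)."""
    return np.asarray(stack, dtype=float).sum(axis=0)


def explainability_metrics(gt_masks, feature_maps):
    """Return the absolute difference map (DMap) and the mean squared error."""
    gt_density = density_map(gt_masks)       # ground truth density map
    fm_density = density_map(feature_maps)   # feature density map
    d_map = np.abs(gt_density - fm_density)  # absolute pixel-wise difference
    mse = float(np.mean((gt_density - fm_density) ** 2))
    return d_map, mse


# Two hypothetical 2 x 2 slices, for illustration only.
gt_masks = [[[1, 0], [0, 1]],
            [[1, 1], [0, 0]]]
feature_maps = [[[1, 0], [0, 0]],
                [[1, 0], [0, 0]]]
d_map, mse = explainability_metrics(gt_masks, feature_maps)
```

Pixels where `d_map` is zero are those for which the aggregated model attention matches the aggregated ground truth, which corresponds to the near-zero regions discussed for Fig. 4.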

The visual assessment after the absolute pixel wise subtraction of \({GT }_{Density \,map}\) and \({FM }_{Density \,map}\) (Eq. 5), for each preprocessing method applied on the USE-NET network.

Discussion

Despite the widespread usage of image enhancement techniques, they are often adopted on the basis of scant literature evidence and are blindly utilized in a plethora of clinical applications. In this regard, the present work addresses two highly relevant issues in the field of medical image preprocessing, namely, how well image enhancement methods generalize across different segmentation algorithms and how to reliably select the most appropriate preprocessing method for a given task. For the former, we estimated the variability in segmentation performance across models, for each one of the preprocessing methods under evaluation. For the latter, we introduce a feature-driven approach to explain models’ predictions, enabling both the qualitative assessment of model performance through visual explanations, as presented in Fig. 4, and the quantitative assessment via the estimation of the mean squared error and the absolute subtraction of the feature maps and the GT maps. To the best of our knowledge, this is the first study to evaluate the impact and generalizability of image enhancement methods on DL models for prostate and prostatic zone segmentation.

In contrast to other popular histogram-based image enhancement approaches, which displayed low stability and generalizability across different DL models, the success of RACLAHE lies in the consistent, model-invariant improvement achieved for segmenting the prostate and the prostatic zones. The novelty of the proposed method lies in the combination of an automated DL region proposal model and a local enhancement technique. RACLAHE mainly focuses on (a) the effective automated identification of the region of interest, to separate the task-relevant region features from the redundant features, (b) the enhancement of the relevant region, to emphasize the pixels that matter for the segmentation task, and (c) the harmonization of the enhanced region and the redundant region, to restore the image to its original dimensions so that it is presented in a more natural way for both clinical practitioners and CNN models. Specifically, RACLAHE was the only technique that did not deteriorate models' performance in any of the experiments conducted, as shown in Tables 1, 2 and 3. In most cases, a superior performance was achieved compared to no preprocessing or, at worst, the performance remained the same. Regarding the stability of image enhancement methods across different DL models, RACLAHE was found to be more stable, reducing the variation of results across models and improving the overall inter-model average scores, as shown in Fig. 1.

Another important contribution of this work is the integration of saliency maps as a performance indicator, providing a unique opportunity to interrogate the effect of different preprocessing methods on models’ behavior. We provide feature map-driven visual explanations to assist in the selection of the most appropriate preprocessing method by highlighting the image features that guide the segmentation task. Herein, the Grad-CAM37 technique was employed to visually present a class-discriminative localization map (heatmap or saliency map), which highlights the most important pixels of a particular class. These heatmaps were coupled with a probabilistic ground truth feature importance map to extract meaningful indications regarding a model’s performance, both qualitatively and quantitatively, as shown in Fig. 2. We extend the aforementioned methodology to compare different image enhancement methods and to quantify the contribution of each feature to DL models’ decisions. As depicted in Fig. 4 and Supplementary Table S1, RACLAHE was able to enhance specific areas within the images that are strongly associated with the ROI. It is worth noting that the most significant differences were observed for the prostate’s WG and the TZ. The proposed method provides better insights into the performance of biomedical imaging applications, as it represents a natural way of comparing the ground truth samples with the predicted samples, as indicated in Figs. 2 and 4.

This work has some limitations. The impact of image enhancement was not assessed for 3D segmentation tasks, which would allow its effectiveness to be evaluated in every spatial plane. Nonetheless, for this type of analysis, a sufficiently large amount of data is required. Additionally, regarding the RACLAHE method, no hyperparameter tuning was performed to optimally define the cutoff for the CLAHE component and, therefore, the default parameters that are automatically chosen by the algorithm were used. As proposed by Campos et al.38, hyperparameter optimization can be achieved by means of machine learning techniques.

In summary, the outcomes of this study indicate that image enhancement using RACLAHE can improve the segmentation efficacy of CNN networks in a model-agnostic manner, thereby contributing to establishing a concrete image preprocessing pipeline for effective automatic prostate segmentation tasks. Future research may concentrate on establishing the superiority of the proposed method considering different image enhancement techniques and segmentation algorithms, and on evaluating its generalizability in other population datasets and clinical scenarios. In addition, the application of RACLAHE prior to CNN model training has led to the generation of more accurate saliency maps. These probabilistic pixel-wise representations reflect a more natural way to visually explain the outcomes of a model. Ultimately, the explainability module will render models’ predictions more trustworthy among clinicians, to further support the decision-making process.

Methods

Histogram-based enhancement methods

The four image enhancement techniques used for comparison are described below.

Adaptive gamma correction with weighting distribution (AGCWD)

AGCWD is a histogram modification approach to enhance and correct images. The main attribute that differentiates this approach from the power-law transformation is the automatic selection of the gamma factor based on the weighting distribution. Specifically, the authors39 used the weighting distribution to find the cumulative distribution and thereby specify the gamma parameter. This hyperparameter is set to 1, the default value suggested by the authors in the original work, which brightens low pixel intensities in the image while leaving high intensities intact.

Adaptive gamma correction with color preserving framework (AGCCPF)

AGCCPF employs a two-step processing approach. First, it improves the contrast and brightness of a given image by modifying the probability distribution of pixels’ intensity and then applying gamma correction. In the second stage, it restores color using a color-preserving framework. According to its authors40, this method is an upgrade of AGCWD because it retains information better than AGCWD. The authors use a histogram modification function to control the level of contrast enhancement by utilizing the input histogram along with the uniform histogram, which is a histogram equalized image, to produce the resulting one. The difference between the input histogram, the uniform histogram and the resultant histogram for an image is presented in Fig. S5 in the Supplementary Materials.

Range limited Bi-histogram equalization (RLBHE)

RLBHE considers both contrast enhancement and intensity brightness preservation as valuable factors in the output image. First, the single threshold Otsu’s41 approach is employed to execute histogram thresholding to get better contrast enhancement and avoid over-enhancement. Second, the range of the equalized input image is limited to ensure that the mean output brightness is almost equal to the mean input brightness, preserving the initial information from the input image and third, each partition of the histogram is equalized independently.

Contrast limited adaptive histogram equalization (CLAHE)

The CLAHE algorithm22 is considered more stable for contrast enhancement operations due to its local application on the frame. This approach is separated into two steps. First, the initial image is divided into 8 × 8 windows that compose the image. Second, the histogram equalization algorithm is applied to equalize each window independently from the others. In this way, the histogram equalization method does not take into consideration the global features of the image and, therefore, it optimizes the intensity levels within a neighboring area around the center of each window. Compared to the aforementioned techniques, CLAHE has several advantages, the main one being the reduced contribution of outliers. In histogram equalization, outliers play an important role as the tuning of the histogram is affected by extreme values. With the partition into windows, though, extreme values are scaled within a neighborhood region and, therefore, are smoothed.

The proposed image enhancement method: RACLAHE

Conventionally, the CLAHE algorithm is applied globally on the entire frame of the image. The algorithm utilizes the histogram equalization method in a close neighborhood around a central pixel. Although the histogram equalization is applied frame-wise, the CLAHE algorithm is applied patch-wise enhancing further the contrast of the sub-regions within the frame. The proposed method RACLAHE utilizes the CLAHE algorithm along with the steps described below to transform selected features to be more interpretable for the model. The pipeline is visually presented in Fig. 5. The algorithm that describes the RACLAHE operation is.

The RACLAHE algorithm. From the initial 256 × 256 frame, an area of \(\{134\pm 15\} \times \{134\pm 15\}\) pixels that contains the region of interest is selected (a). This reduced dimensional space provides more targeted features to be enhanced and reduces the complexity of the problem, while introducing some bias to the model regarding the area from which features are identified (b).

Let \(Z\) be a space where the intensity feature values of each frame lie, \(F{M}_{Z}\in Z, 0\le F{M}_{Z}\le Frame\, width\times Frame\, height\). Each frame is passed through a U-Net-like DL structure9,42 that proposes a reduced-size area that includes the prostate gland. Specifically, the initial space \(Z\) is reduced into a subspace \(Q\subset Z\) and features \(F{M}_{Q}\in Q\) are selected by reducing the dimensionality of the \(Z\) space to the \(Q\) space. The relation that describes these two spaces is presented in Eq. (6) and the operation is shown in Fig. 5a.

The frame is then divided into two subframes, \(F{M}_{Q}\) and \(F{M}_{Z}-F{M}_{Q}\), which correspond to the proposed area that contains the whole gland and the remaining area, respectively; this process is presented in Fig. 5b. The CLAHE algorithm is then applied on the features \(F{M}_{Q}\) (proposed area). Specifically, the \(F{M}_{Q}\) pixel intensity features are divided into \(8\times 8\) patches, and the number of those patches in each \(F{M}_{Q}\) is approximately 196. Then, the probability of occurrence of each unique pixel intensity value \({P}^{patch}({i}_{F{M}_{Q}})\) is given as:
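Based on the symbol definitions that follow, a consistent form of Eq. (7) is the relative frequency of each intensity within the patch (the stated index range is an assumption):

\[
{P}^{patch}({i}_{F{M}_{Q}}) = \frac{Num({i}_{F{M}_{Q}})}{TotNum}, \quad 0 \le {i}_{F{M}_{Q}} < L{D}^{patch} \tag{7}
\]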

where \(Num({i}_{F{M}_{Q}})\) is the number of occurrences of pixel intensity \({i}_{F{M}_{Q}}\) within the patch, \(TotNum\) is the total number of pixels of the patch, \(L{D}^{patch}\) is the range of values, inside each patch. Consequently, the cumulative distribution for each patch is calculated:
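A form of Eq. (8) consistent with the standard definition of a cumulative distribution is sketched below; the symbol \(CD{F}^{patch}\) and the summation index \(j\) are notation introduced here for illustration:

\[
CD{F}^{patch}({i}_{F{M}_{Q}}) = \sum_{j=0}^{{i}_{F{M}_{Q}}} {P}^{patch}(j) \tag{8}
\]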

The histogram equalized patch is obtained by Eq. (9) making use of Eqs. (8) and (7):
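Assuming the standard histogram equalization mapping, Eq. (9) can be sketched as below. The symbols \(H{E}^{patch}\) and \(CD{F}^{patch}\) (the cumulative distribution of the patch) are notation introduced here, and the contrast clip limit of CLAHE is not represented in this form:

\[
H{E}^{patch}({i}_{F{M}_{Q}}) = round\left( \left( L{D}^{patch} - 1 \right) \cdot CD{F}^{patch}({i}_{F{M}_{Q}}) \right) \tag{9}
\]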

The enhanced area of Fig. 5b is constructed from the aggregation of the histogram equalized patches and it is obtained as:

where \(Patches\) denotes the total number of patches within \(F{M}_{Q}\), while \(F{{M}_{Q}}^{trans}\) indicates the enhanced area. Finally, the resulting RACLAHE image is given by Eq. (11) and is shown in Fig. 5b:
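The steps of this section can be illustrated with a short Python sketch. It is a simplified illustration and not the authors' implementation: the region proposal network is replaced by a hypothetical, manually supplied bounding box, patches are assumed to be 8 × 8 pixels, and each patch is equalized with plain histogram equalization, so the clip limit and tile interpolation of a full CLAHE implementation are omitted.

```python
import numpy as np


def equalize_patch(patch, levels=256):
    """Plain histogram equalization of one uint8 patch (no clip limit)."""
    hist = np.bincount(patch.ravel(), minlength=levels)
    prob = hist / patch.size                             # relative frequency per intensity
    cdf = np.cumsum(prob)                                # cumulative distribution
    lut = np.round((levels - 1) * cdf).astype(np.uint8)  # intensity look-up table
    return lut[patch]


def raclahe_sketch(frame, box, patch=8):
    """Enhance only the proposed region and keep the rest of the frame as is.

    frame: 2D uint8 image; box: (row0, row1, col0, col1), a hypothetical
    stand-in for the area proposed by the region proposal network.
    """
    r0, r1, c0, c1 = box
    out = frame.copy()
    roi = out[r0:r1, c0:c1]  # view into the output frame
    for r in range(0, roi.shape[0], patch):
        for c in range(0, roi.shape[1], patch):
            tile = roi[r:r + patch, c:c + patch]
            roi[r:r + patch, c:c + patch] = equalize_patch(tile)
    return out


# Synthetic 16 x 16 frame with a hypothetical 8 x 8 proposed region.
frame = np.arange(256, dtype=np.uint8).reshape(16, 16)
box = (4, 12, 4, 12)
out = raclahe_sketch(frame, box)
```

Writing the equalized tiles back into a copy of the full frame mirrors the final merging step: pixels outside the proposed region keep their original intensities, and the output has the same dimensions as the input.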

Model development

Five CNN algorithms were implemented to evaluate the impact of preprocessing methods on the segmentation of the prostate and the prostatic zones, namely the U-Net9, ResUNet43, U-Net3+44, U-Net++45 and USE-NET46; a brief description of them is given in the Supplementary Materials. For model training, the Prostate-X dataset was split into training and validation sets, where 85% of the image frames were used for training and the remaining 15% for validation. The splits were kept the same for all the experiments run in this study (i.e. for the different models and preprocessing methods). The initial learning rate was kept at 0.0001, the batch size and the number of epochs were 16 and 120, respectively, and the Adam optimizer was used for weight updating throughout the training process. The sigmoid focal cross-entropy47 was utilized as the loss function due to its effectiveness in handling unbalanced data. The early stopping technique was used to stop model training when the validation performance stopped improving. The segmentation performance of each model and preprocessing method was evaluated externally on an independent dataset (Prostate 3-T). The GPU used for the experiments was the NVIDIA Quadro P6000 with driver version 441.66, while the Python packages used were numpy = 1.21.2, keras-unet-collection = 0.1.11, scikit-image = 0.18.3, SciPy = 1.7.1, Tensorflow = 2.2.0 and Tensorflow-addons = 0.11.2. The original code and the docker image for RACLAHE and all the experiments are available from the authors upon request.

Performance assessment

Several metrics were implemented to thoroughly evaluate the performance of the proposed method and existing histogram modification methods. Specifically, DS, REI, Sensitivity, BA, HD and ASD, which are common segmentation metrics, were computed because the complementary information they provide offers sufficient insight into models’ performance. DS and REI are metrics related to the overlap between the predicted and the true annotation of the object of interest. On the other hand, Sensitivity and BA provide information about the ability of the model to identify an area of interest with high class imbalance between the background and foreground pixels. HD and ASD are one-dimensional distance measurements that connect the results with real-world units (SI unit system). Specifically, HD and ASD measure how far apart two data points are, one belonging to the ground truth boundary and the other to the prediction. Herein, the 95% HD was employed to avoid using extreme values, as they may not be indicative of real model performance. All the performance metrics were computed on the external testing dataset. The Wilcoxon rank-sum test (two-sided) was used to compare the proposed RACLAHE technique with all the other methods, and a p-value ≤ 0.05 was considered to indicate a significant difference in performance.
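The overlap-based and classification-based metrics can be illustrated with a short Python sketch. It uses the standard textbook definitions of the Dice Score, Sensitivity and Balanced Accuracy on binary masks; the function names and the toy masks are assumptions, and the distance-based metrics (HD, ASD) and REI are not covered here.

```python
import numpy as np


def confusion_counts(pred, gt):
    """Return (TP, FP, FN, TN) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    tn = int(np.sum(~pred & ~gt))
    return tp, fp, fn, tn


def dice_score(pred, gt):
    """Dice Score: 2*TP / (2*TP + FP + FN); defined as 1 when both masks are empty."""
    tp, fp, fn, _ = confusion_counts(pred, gt)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def sensitivity(pred, gt):
    """Fraction of foreground pixels that were correctly predicted."""
    tp, _, fn, _ = confusion_counts(pred, gt)
    return tp / (tp + fn) if (tp + fn) else 0.0


def balanced_accuracy(pred, gt):
    """Mean of sensitivity and specificity, robust to class imbalance."""
    tp, fp, fn, tn = confusion_counts(pred, gt)
    sens = tp / (tp + fn) if (tp + fn) else 0.0
    spec = tn / (tn + fp) if (tn + fp) else 0.0
    return 0.5 * (sens + spec)


# Toy 2 x 2 masks, for illustration only.
gt = [[1, 1], [0, 0]]
pred = [[1, 0], [0, 0]]
ds = dice_score(pred, gt)
sens = sensitivity(pred, gt)
ba = balanced_accuracy(pred, gt)
```

In this example the prediction recovers one of the two foreground pixels and no background pixel is mislabeled, so Sensitivity is lower than Balanced Accuracy, which also rewards the correctly classified background.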

Data availability

The datasets analyzed during the current study are publicly available in the TCIA repository and on GitHub. Specifically, the Prostate X2 dataset can be found at https://github.com/rcuocolo/PROSTATEx_masks, while the Prostate 3T dataset can be found at https://wiki.cancerimagingarchive.net/display/Public/Prostate-3T. A Docker container of the RACLAHE method and the code are available from the authors upon request.

References

Cuocolo, R. et al. Machine learning applications in prostate cancer magnetic resonance imaging. Eur. Radiol. Exp. 3, 1–8 (2019).

Haarburger, C. et al. Radiomics feature reproducibility under inter-rater variability in segmentations of CT images. Sci. Rep. 10, 1–10 (2020).

Kendrick, J. et al. Radiomics for identification and prediction in metastatic prostate cancer: A review of studies. Front. Oncol. 11, 771787 (2021).

Zhao, B. Understanding sources of variation to improve the reproducibility of radiomics. Front. Oncol. 11, 826 (2021).

Owrangi, A. M., Greer, P. B. & Glide-Hurst, C. K. MRI-only treatment planning: Benefits and challenges. Phys. Med. Biol. 63, 05TR01 (2018).

Otazo, R. et al. MRI-guided radiation therapy: An emerging paradigm in adaptive radiation oncology. Radiology 298, 248–260 (2021).

Cardenas, C. E., Yang, J., Anderson, B. M., Court, L. E. & Brock, K. B. Advances in auto-segmentation. Semin. Radiat. Oncol. 29, 185–197 (2019).

Savenije, M. H. F. et al. Clinical implementation of MRI-based organs-at-risk auto-segmentation with convolutional networks for prostate radiotherapy. Radiat. Oncol. 15, 1–12 (2020).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Vol. 9351, 234–241 (2015).

Hesamian, M. H., Jia, W., He, X. & Kennedy, P. Deep learning techniques for medical image segmentation: Achievements and challenges. J. Dig. Imaging 32, 582–596 (2019).

Tajbakhsh, N. et al. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med. Image Anal. 63, 101693 (2020).

Asgari Taghanaki, S., Abhishek, K., Cohen, J. P., Cohen-Adad, J. & Hamarneh, G. Deep semantic segmentation of natural and medical images: A review. Artif. Intell. Rev. 54, 137 (2021).

Haque, R. I. & Neubert, J. Deep learning approaches to biomedical image segmentation. Inform. Med. Unlocked 18, 100297 (2020).

Duncan, J. S. & Ayache, N. Medical image analysis: Progress over two decades and the challenges ahead. IEEE Trans. Pattern Anal. Mach. Intell. 22, 85–106 (2000).

Zaridis, D. et al. Fine-tuned feature selection to improve prostate segmentation via a fully connected meta-learner architecture. In 2022 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), 01–04. https://doi.org/10.1109/BHI56158.2022.9926929 (IEEE, 2022).

Chen, C. M. et al. Automatic contrast enhancement of brain MR images using hierarchical correlation histogram analysis. J. Med. Biol. Eng. 35, 724–734 (2015).

Xiong, L., Li, H. & Xu, L. An enhancement method for color retinal images based on image formation model. Comput. Methods Progr. Biomed. 143, 137–150 (2017).

Paranjape, R. B. Fundamental enhancement techniques. In Handbook of Medical Image Processing and Analysis (ed. Paranjape, R. B.) 3–18 (Elsevier, 2009).

Toennies, K. D. Guide to Medical Image Analysis (Springer, 2017). https://doi.org/10.1007/978-1-4471-7320-5.

Rundo, L. et al. A novel framework for MR image segmentation and quantification by using MedGA. Comput. Methods Progr. Biomed. 176, 159–172 (2019).

Tachibana, Y. et al. The utility of applying various image preprocessing strategies to reduce the ambiguity in deep learning-based clinical image diagnosis. Magn. Reson. Med. Sci. 19, 92–98 (2020).

Ketcham, D. J., Lowe, R. W. & Weber, J. W. Image Enhancement Techniques for Cockpit Displays (Hughes Aircraft Co Culver City Ca Display Systems Lab, 1974).

Ikhsan, I. A. M., Hussain, A., Zulkifley, M. A., Tahir, N. M. & Mustapha, A. An analysis of x-ray image enhancement methods for vertebral bone segmentation. In Proc.—2014 IEEE 10th International Colloquium on Signal Processing and Its Applications, CSPA 2014, 208–211. https://doi.org/10.1109/CSPA.2014.6805749 (2014).

Mat Radzi, S. F. et al. Impact of image contrast enhancement on stability of radiomics feature quantification on a 2D mammogram radiograph. IEEE Access 8, 127720–127731 (2020).

Kaur, H. & Rani, J. MRI brain image enhancement using histogram equalization techniques. In Proc. 2016 IEEE International Conference on Wireless Communications, Signal Processing and Networking, WiSPNET 2016, 770–773. https://doi.org/10.1109/WISPNET.2016.7566237 (2016).

Sharma, V. et al. Classification-driven dynamic image enhancement. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4033–4041. https://doi.org/10.1109/CVPR.2018.00424 (IEEE, 2018).

Pitaloka, D. A., Wulandari, A., Basaruddin, T. & Liliana, D. Y. Enhancing CNN with preprocessing stage in automatic emotion recognition. Procedia Comput. Sci. 116, 523–529 (2017).

Survarachakan, S. et al. Effects of enhancement on deep learning based hepatic vessel segmentation. Electronics 10, 1165 (2021).

Rahman, T. et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 132, 104319 (2021).

Hemanth, D. J., Deperlioglu, O. & Kose, U. An enhanced diabetic retinopathy detection and classification approach using deep convolutional neural network. Neural Comput. Appl. 32, 707–721 (2020).

Islam, M., Khan, K. N. & Khan, M. S. Evaluation of preprocessing techniques for U-Net based automated liver segmentation. In 2021 International Conference on Artificial Intelligence (ICAI), 187–192. https://doi.org/10.1109/ICAI52203.2021.9445204 (IEEE, 2021).

Bnouni, N. et al. Boosting CNN learning by ensemble image preprocessing methods for cervical cancer segmentation. In 2021 18th International Multi-Conference on Systems, Signals & Devices (SSD), 264–269. https://doi.org/10.1109/SSD52085.2021.9429422 (IEEE, 2021).

Sule, O. O., Viriri, S. & Abayomi, A. Effects of image enhancement techniques on CNNs based algorithms for segmentation of blood vessels: A review. In 2020 International Conference on Artificial Intelligence, Big Data, Computing and Data Communication Systems (icABCD), 1–6. https://doi.org/10.1109/icABCD49160.2020.9183896 (IEEE, 2020).

Cuocolo, R. et al. Deep learning whole-gland and zonal prostate segmentation on a public MRI dataset. J. Magn. Reson. Imaging 54, 452–459 (2021).

Cuocolo, R., Stanzione, A., Castaldo, A., De Lucia, D. R. & Imbriaco, M. Quality control and whole-gland, zonal and lesion annotations for the PROSTATEx challenge public dataset. Eur. J. Radiol. 138, 109647 (2021).

Litjens, G., Futterer, J. & Huisman, H. Data from prostate-3T. Cancer Imaging Arch. https://doi.org/10.7937/K9/TCIA.2015.QJTV5IL5 (2015).

Selvaraju, R. R. et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 128, 336–359 (2016).

Campos, G. F. C. et al. Machine learning hyperparameter selection for contrast limited adaptive histogram equalization. EURASIP J. Image Video Process 2019, 1–18 (2019).

Rahman, S., Rahman, M. M., Abdullah-Al-Wadud, M., Al-Quaderi, G. D. & Shoyaib, M. An adaptive gamma correction for image enhancement. EURASIP J. Image Video Process 2016, 1–13 (2016).

Gupta, B. & Tiwari, M. Minimum mean brightness error contrast enhancement of color images using adaptive gamma correction with color preserving framework. Optik (Stuttg.) 127, 1671–1676 (2016).

Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9, 62–66 (1979).

Zaridis, D. et al. A smart cropping pipeline to improve prostate’s peripheral zone segmentation on MRI using deep learning. EAI Endors. Trans. Bioeng. Bioinform. https://doi.org/10.4108/EAI.24-2-2022.173546 (2021).

Alom, M. Z., Yakopcic, C., Hasan, M., Taha, T. M. & Asari, V. K. Recurrent residual U-Net for medical image segmentation. J. Med. Imaging 6, 1 (2019).

Huang, H. et al. UNet 3+: A full-scale connected UNet for medical image segmentation. In ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings, Vol. 2020-May, 1055–1059 (2020).

Zhou, Z., Rahman Siddiquee, M. M., Tajbakhsh, N. & Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Vol. 11045, 3–11 (LNCS, 2018).

Rundo, L. et al. USE-Net: Incorporating squeeze-and-excitation blocks into U-Net for prostate zonal segmentation of multi-institutional MRI datasets. Neurocomputing 365, 31–43 (2019).

Lin, T. Y., Goyal, P., Girshick, R., He, K. & Dollar, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 42, 318–327 (2020).

Acknowledgements

This work is supported by the ProCancer-I project, funded by the European Union’s Horizon 2020 research and innovation program under grant agreement No 952159. It reflects only the author’s view. The Commission is not responsible for any use that may be made of the information it contains.

Author information

Contributions

D.I.Z. and E.M. performed the experiments, analyzed the results and wrote the manuscript. N.T., V.C.P., G.G., N.T., K.M., M.T. and D.I.F. supervised the work and were involved in setting up the experimental design. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zaridis, D.I., Mylona, E., Tachos, N. et al. Region-adaptive magnetic resonance image enhancement for improving CNN-based segmentation of the prostate and prostatic zones. Sci Rep 13, 714 (2023). https://doi.org/10.1038/s41598-023-27671-8

DOI: https://doi.org/10.1038/s41598-023-27671-8
