Abstract

In this paper, Energy Valley Optimizer (EVO) is proposed as a novel metaheuristic algorithm inspired by advanced physics principles regarding stability and different modes of particle decay. Twenty unconstrained mathematical test functions are utilized in different dimensions to evaluate the proposed algorithm's performance. For statistical purposes, 100 independent optimization runs are conducted to determine the statistical measurements, including the mean, standard deviation, and the required number of objective function evaluations, by considering a predefined stopping criterion. Some well-known statistical analyses are also used for comparative purposes, including the Kolmogorov–Smirnov, Wilcoxon, and Kruskal–Wallis analysis. Besides, the latest Competitions on Evolutionary Computation (CEC), regarding real-world optimization, are also considered for comparing the results of the EVO to the most successful state-of-the-art algorithms. The results demonstrate that the proposed algorithm can provide competitive and outstanding results in dealing with complex benchmarks and real-world problems.


Introduction

In today's competitive world, considerable effort is devoted to maximizing the benefit obtained from nature's limited resources. Recent advances in human knowledge and expertise reveal the need for greater accuracy, better performance, and higher construction speeds in the design of real-world systems. Achieving these goals requires new design and optimization methods that can be applied to real, complex problems. Optimization is a decision-making process that searches for the minimum and/or maximum values of a function. Optimization algorithms are divided into two categories: exact and approximate. Exact algorithms find the optimal solution precisely; however, for complex optimization problems they are not efficient enough, and their computational time increases exponentially with the dimension of the problem. Given these limitations and the need for both precision and speed, approximate algorithms such as metaheuristics are used to find solutions close to the optimum in a much shorter time. The Greek prefix “meta” indicates that these algorithms are “higher-level” heuristics, as distinct from problem-specific heuristics1. The metaphors used by scholars while developing new metaheuristics include natural evolution, insects, gravitation, electromagnetic force, ecosystems, water, plants, humans, birds, and animals. Among them, “insects” is the most often used metaphor for simulating social behaviour to develop practical optimization algorithms, and bees are the most frequently used insect metaphor, followed by ants2. Although metaheuristic algorithms give reasonable results, they do not guarantee optimum solutions.

Generally speaking, there are four major classes of metaheuristics based on the source of their inspiration. The first group is evolutionary algorithms (EAs), robust and flexible metaheuristic search algorithms based on Darwinian evolution that efficiently capture global solutions to complicated optimization problems. EAs replicate the biological course of evolution at the cellular level to create better candidate solutions using selection, crossover, mutation, and reproduction operators. The genetic algorithm (GA) introduced by John Holland and his colleagues in 1992 is regarded as a classic example of an EA3. Another prominent algorithm is differential evolution (DE), which was introduced in 19974. In 2014, another evolutionary-based metaheuristic algorithm called hyper-spherical search (HSS) was proposed5. The algorithm's core principle is searching the hypersphere's inner space, which is defined by the hypersphere's core and its particles. Swarm-based metaheuristic algorithms are the second category. Particle swarm optimization (PSO) is a well-known and often utilized swarm intelligence algorithm. The basic concept of PSO was inspired by a swarm of flying birds looking for food6. Furthermore, in 2012, another swarm-based algorithm named firefly algorithm (FA) was introduced7. As its name implies, this algorithm was inspired by the flashing behaviour of fireflies. Ant colony optimization (ACO) is another swarm-based algorithm that uses ants' foraging behaviour. ACO solves a problem by maintaining an updated pheromone trail and relocating these ants in the search space using simple mathematical calculations based on the area's transition probability and total pheromone8,9. Among the other well-known algorithms in this category are rat swarm optimizer (RSO)10; the wisdom of artificial crowds (WoAC)11; tuna swarm optimization (TSO)12; and artificial bee colony (ABC)13.

Physics-based metaheuristic algorithms form the third category. The Big-Bang Big-Crunch (BBBC) algorithm is inspired by the theories of the universe's evolution. Another prominent algorithm is Henry gas solubility optimization (HGSO), imitating the behavior governed by Henry's law14. Moreover, Atomic Orbital Search (AOS) is another algorithm in which the fundamental principles of quantum physics are used as a source of inspiration15. Several more well-known algorithms in this category include the following: Material Generation Algorithm (MGA)16,17, cyber-physical systems (CPS)18, Archimedes optimization algorithm (AOA)19, Lichtenberg Algorithm (LA)20, and Thermal Exchange Optimization algorithm (TEOA)21. Finally, the fourth group is human and animal lifestyles-based metaheuristic algorithms. Harris Hawks Optimizer (HHO) is one of the renowned animal behaviour-based algorithms; the cooperative behaviour and pursuit manner of Harris' hawks in nature, known as surprise pounce, is the fundamental inspiration for HHO22. In terms of efficiency, quality of results, and acceptable convergence in dealing with various applications in real-world problems, the HHO has received considerable attention from academics23. An artificial Jellyfish Search (JS) optimizer was proposed in 2021, inspired by the behaviour of jellyfish in the ocean24. Additionally, queuing search (QS) is another algorithm based on human queueing behaviors25. Table 1 contains more information on the algorithms mentioned above.
Additionally, there are other metaheuristic optimization algorithms inspired by different concepts which have been proposed in the last years, including the following: cooperation search algorithm (CSA)26, Aquila Optimizer (AO)27, Capuchin Search Algorithm (CapSA)28, Golden Tortoise Beetle Optimizer (GTBO)29, Battle Royale Optimization (BRO)30, passing vehicle search (PVS)31, Dynamic Virtual Bats Algorithm (DVBA)32, crow search algorithm (CSA)33, virus optimization algorithm (VOA)34, bald eagle search (BES) algorithm35, Gravitational Search Algorithm (GSA)36, Grey Wolf Optimizer (GWO)37, Teaching–Learning-Based Optimization (TLBO)38, Fire Hawk Optimizer (FHO)39,40, Social Spider Optimization (SSO)41, League Championship Algorithm (LCA)42, and Chaos Game Optimization43,44.

In recent decades, one of the main challenges of artificial intelligence experts has been the applicability of the proposed metaheuristic algorithm in different fields to optimize and improve the overall efficiency of some specific problems. Artificial electric field algorithm for engineering design optimization49, black widow algorithm for engineering optimization47, earthquake engineering optimization of structures50, engineering design optimization with queuing search algorithm25, cuckoo search algorithm for optimization of the travelling salesman problem51, optimum design of engineering problems with sine cosine grey wolf optimizer52, engineering design optimization with self-adaptive Rao algorithm53, design optimization of numerical and engineering optimization problems with improved Harris Hawks optimizer54, unconstrained and constrained optimization by hybrid pathfinder optimizer55, improved charged system search for optimization of fuzzy controllers56, optimum design of structural systems with metaheuristics57,58 are some of the most recent research works in the area of applied artificial intelligence.

Even though there are several metaheuristic algorithms, more are always needed. The No Free Lunch (NFL) theorem holds that no single method is best suited to all optimization problems. Thus, the introduction of novel metaheuristic optimization algorithms is continuously ongoing. The creation of new metaheuristics is advantageous to science since they might improve the precision or effectiveness of the optimization procedure for a host of problems59. This assertion drives our attempts to suggest a unique metaheuristic algorithm inspired by the cutting-edge physics concepts regarding stability and various forms of decay in particles. Meanwhile, exact algorithms guarantee the optimal solution, but they are difficult to apply to hard problems. They do not work quickly, the time to find solutions grows exponentially with problem complexity, and for the hardest and most complex problems the results of an exact algorithm are not satisfactory. Approximate algorithms should be used when the optimal solution of an exact algorithm is not achievable in practice. Approximate, or metaheuristic, algorithms seek a good solution that is close to optimal, which lowers the computation time compared with exact methods.

In this paper, Energy Valley Optimizer (EVO) is proposed as a novel metaheuristic algorithm inspired by advanced physics principles regarding stability and different modes of particle decay. Since the basic principles of the decay process through different particles in physics are used as the main idea of the EVO, the originality of this study may be seen from an inspirational standpoint, while the complexity level of the test functions used is also being examined for the first time in this study. Algorithms must be compared under the same conditions and on the same problems; otherwise, the superiority of an algorithm can be neither confirmed nor denied. Hence, 20 unconstrained mathematical test functions are utilized in different dimensions to evaluate the proposed algorithm's performance. For statistical purposes, 100 independent optimization runs are conducted to determine the statistical measurements, namely the mean, standard deviation, and the required number of objective function evaluations. A predefined stopping criterion is also considered based on a maximum number of 150,000 objective function evaluations and a tolerance of \(1\times {10}^{-12}\) for the global best values of the considered problems. Some well-known statistical analyses, including the Kolmogorov–Smirnov, Wilcoxon, Mann–Whitney, Kruskal–Wallis, and Post-Hoc analysis, are also utilized for comparative purposes. One of the most significant shortcomings of the newly developed metaheuristic algorithms is the simplicity of the evaluation test functions; therefore, two of the latest Competitions on Evolutionary Computation (CEC), the CEC 2020 on bound constraint optimization60 and CEC 2020 on real-world optimization61, and also the “Big O notation” are considered for comparing the results of the EVO to the most successful state-of-the-art algorithms.
The advantages of the EVO are that it is parameter-free, converges quickly, and requires few objective function evaluations. On the other hand, the EVO cannot provide exact solutions; like other metaheuristic algorithms, it is an approximate algorithm.

Since the proposed EVO is developed from general and advanced principles of physics, the most important aspect of this algorithm is the conformity between the physical concept and the mathematical model, and the EVO shows a high level of agreement between the two. The second factor is the complexity of the algorithm: three new position vectors are created in the main search loop, which recent developments in computer software and hardware make affordable. The third factor is the dynamic configuration of the main loop of EVO, in which the exploration and exploitation procedures are conducted by searching the variables’ and candidates’ spaces simultaneously to reach the global best solution.

The main contributions of this study are as follows:

- Advanced physics concepts concerning stability and various forms of decay in particles are examined and analyzed to develop a mathematical model of a metaheuristic algorithm.
- A unique physics-inspired algorithm as Energy Valley Optimizer (EVO) is developed using the mentioned model.
- The EVO's solution updating is dependent on the particles’ enrichment bound, position vector, and stability level.
- EVO's performance is extensively evaluated against a set of twenty benchmark functions and real-world engineering design problems.
- The proposed EVO is compared to a plethora of cutting-edge metaheuristic algorithms.

The rest of the paper is organized as follows. In Sect. 2, the inspiration and mathematical model of the proposed EVO are presented. The numerical investigations, including 20 of the best-known mathematical test functions, and the statistical analysis, including the Kolmogorov–Smirnov, Wilcoxon, and Kruskal–Wallis analyses, are presented in Sects. 3 and 4, respectively. The CEC 2020 complexity analysis and Big O notation are presented in Sect. 5, while the CEC 2020 real-world constrained optimization problems such as Speed Reducer, Hydro-Static Thrust Bearing, Ten-Bar Truss, and Rolling Element Bearing are discussed in Sect. 6. Finally, in Sect. 7, the core findings of this study are presented as concluding remarks.

Energy valley optimizer

Inspiration

A physical reaction refers to the collision of two particles, or of a particle with external subatomic particles, which produces new particles. In the universe, the great majority of particles are assumed to be unstable, except for the stable ones, which remain intact indefinitely. Unstable particles tend to emit energy through disintegration or decay, and the overall decay rate differs between types of particles. In the decay process, a particle with lower energy is generated while the extra energy is released through emission. The energy valley concerns the stability of particles based on their binding energy and interactions with other particles. The direct observation of multiple phenomena has led experts to extract some valuable patterns for defining the decay of particles. The most crucial challenge in this area is determining the particles’ stability bound by considering the number of neutrons (N) and protons (Z) and the N/Z ratio. N/Z ≈ 1 corresponds to stable, lightweight particles, while for heavier ones, a larger value of N/Z defines the stability band. Depending on its stability level, each particle tends to increase its stability by shifting its N/Z ratio and moving toward the stability band or energy valley. In this regard, the neutron enrichment levels of particles play an essential role. The neutron-rich particles positioned above the stability bound have too many neutrons to be stable and undergo a decay process. On the other hand, the neutron-poor particles, which have too few neutrons to be stable, tend to undergo electron capture or positron emission to move toward the energy valley or stability band62. These aspects are illustrated schematically in Fig. 1A.

Figure 1. (A) Stability band for particles; (B) process of emission; (C) different types of decay62.

In the decay process, a particle with a lower energy level is generated while excess energy is emitted. There are three types of emission that determine the decay process in particles with different levels of stability. The alpha (α) particles are dense, positively charged particles identical to helium nuclei. The beta (β) particles are negatively charged particles characterized as high-speed electrons. The gamma (γ) rays are high-energy photons. In Fig. 1B, the overall behaviour of these types of emission inside an electric field is illustrated: the α particles curve toward the negative plate to a small extent, whereas the β particles curve strongly toward the positive plate, and the electric field does not affect the γ rays.

Based on the emission process described above, there are three types of decay, known as alpha, beta, and gamma decay, each derived from the corresponding emission type. In alpha decay, an α particle is lost, so both the N and Z values in the N/Z ratio are reduced with each emission. In beta decay, a β particle is ejected, and the N/Z ratio is decreased by reducing N and increasing Z. In gamma decay, a high-energy γ photon is emitted from an excited particle, and the N and Z values do not change. These aspects are presented schematically in Fig. 1C.
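As a compact restatement using standard nuclear bookkeeping (the notation below is not taken from this paper, and β is read here as β⁻ emission), the three modes change the neutron and proton counts as follows:

\[
\begin{aligned}
\alpha:&\quad (N,\, Z) \rightarrow (N-2,\; Z-2)\\
\beta^{-}:&\quad (N,\, Z) \rightarrow (N-1,\; Z+1)\\
\gamma:&\quad (N,\, Z) \rightarrow (N,\; Z)
\end{aligned}
\]

For example, carbon-14 (N = 8, Z = 6, N/Z ≈ 1.33) undergoes β⁻ decay to nitrogen-14 (N = 7, Z = 7, N/Z = 1), which moves it toward the stability band.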

Most of the recently developed metaheuristic algorithms are some reasonable higher-level searching techniques formulated by miscellaneous inspirational concepts to provide one or numerous good solutions for a maximization or minimization optimization problem, particularly with imperfect or incomplete information. The searching processes are primarily conducted among some initial candidates determined randomly. The higher-level procedures of the metaheuristics try to improve the overall standing of these candidates in a step-by-step manner. Hence, the principles of the decay process through different particles can be a great starting point for a novel algorithm in which particles' tendency to achieve a stable point can be utilized as the inspirational concept for performance improvement of the solution candidates.

Mathematical model

In this section, the EVO is presented in detail as an optimization algorithm by means of the previously described principles of physics. In the first step, the initialization process is conducted, in which the solution candidates (\({\mathrm{X}}_{\mathrm{i}}\)) are assumed to be particles with different levels of stability in the search space, which is assumed to be a specific part of the universe.

where \(\mathrm{n}\) denotes the total number of particles (solution candidates) in the universe (search space); \(\mathrm{d}\) is the dimension of the considered problem; \({\mathrm{x}}_{\mathrm{i}}^{\mathrm{j}}\) is the jth decision variable for determining the initial position of the ith candidate; \({\mathrm{x}}_{\mathrm{i},\mathrm{min}}^{\mathrm{j}}\) and \({\mathrm{x}}_{\mathrm{i},\mathrm{max}}^{\mathrm{j}}\) represent the lower and upper bounds of the jth variable in the ith candidate; \(\mathrm{rand}\) is a uniformly distributed random number in the range of [0, 1].
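The initialization described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: it assumes each variable is drawn as \(x_{i}^{j} = x_{\min}^{j} + \mathrm{rand}\cdot(x_{\max}^{j} - x_{\min}^{j})\), that all candidates share the same bounds, and the function name `initialize_particles` is hypothetical.

```python
import random

def initialize_particles(n, lower, upper, rng=random):
    """Return n candidates; variable j is drawn uniformly in [lower[j], upper[j]].

    Assumption: every candidate shares the same per-variable bounds.
    """
    d = len(lower)
    return [
        [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(d)]
        for _ in range(n)
    ]

# Usage: 5 particles in a 3-dimensional search space bounded by [-10, 10].
rng = random.Random(0)
particles = initialize_particles(5, [-10.0] * 3, [10.0] * 3, rng)
```

Passing a seeded `random.Random` instance keeps runs repeatable, which matters when several algorithms are compared from the same random state.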

In the second step of the algorithm, the Enrichment Bound (EB) for the particles is determined, which is utilized for considering the differences between the neutron-rich and neutron-poor particles. For this purpose, the objective function evaluation for each of the particles is conducted and determined as the Neutron Enrichment Level (NEL) of the particles. The mathematical presentation of these aspects is as follows:

where \({\text{NEL}}^{{\text{i}}}\) is the neutron enrichment level of the ith particle, and \({\text{EB}}\) is the enrichment bound of the particles in the universe.
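A minimal sketch of this step follows. It assumes, since the equations are not reproduced here, that the NEL of a particle is simply its objective function value and that the enrichment bound is the mean NEL over all particles; the names `enrichment` and `sphere` are illustrative only.

```python
def sphere(x):
    """Simple objective used for illustration: sum of squares."""
    return sum(v * v for v in x)

def enrichment(particles, objective):
    """Return (NEL list, EB).

    Assumptions: NEL_i = f(X_i) and EB is the arithmetic mean of the NEL values.
    """
    nel = [objective(x) for x in particles]
    eb = sum(nel) / len(nel)
    return nel, eb

nel, eb = enrichment([[1.0, 2.0], [0.0, 0.0], [3.0, 0.0]], sphere)
```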

In the third step, the stability levels of the particles are determined as follows based on the objective function evaluations:

where \({\mathrm{SL}}^{\mathrm{i}}\) is the stability level of the ith particle, and \(\mathrm{BS}\) and \(\mathrm{WS}\) are the particles with the best and worst stability levels inside the universe, equivalent to the minimum and maximum objective function values found so far.
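One plausible reading of this step, offered as an assumption because the equation is not shown here, is a min-max normalization \(\mathrm{SL}_{i} = (\mathrm{NEL}_{i} - \mathrm{BS})/(\mathrm{WS} - \mathrm{BS})\). The sketch below computes it over the current population only; the guard for identical values is an addition for robustness.

```python
def stability_levels(nel):
    """Min-max normalize NEL values to [0, 1] (assumed form of SL).

    BS and WS are taken as the minimum and maximum of the given values.
    """
    bs, ws = min(nel), max(nel)
    if ws == bs:
        # All particles are equally good; avoid dividing by zero.
        return [0.0 for _ in nel]
    return [(v - bs) / (ws - bs) for v in nel]

sl = stability_levels([5.0, 0.0, 9.0])
```

Under this reading the best particle has a stability level of 0 and the worst has 1.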

In the main search loop of the EVO, if the neutron enrichment level of a particle is higher than the enrichment bound (\({\mathrm{NEL}}_{\mathrm{i}}>\mathrm{EB}\)), the particle is assumed to have a larger N/Z ratio, so a decay process using the alpha, beta, or gamma scheme is considered. In this regard, a random number is generated in the range of [0, 1], which mimics the Stability Bound (SB) in the universe. If the stability level of a particle is higher than the stability bound (\({\mathrm{SL}}_{\mathrm{i}}>\mathrm{SB}\)), alpha and gamma decay are considered to happen, since these two decays are probable for heavier particles with higher stability levels. Based on physics principles regarding alpha decay (Fig. 2), α rays are emitted to improve the product's stability level in the physical reaction. This aspect can be mathematically formulated as one of the position updating schemes of the EVO, in which a new solution candidate is generated. For this purpose, two random integers are generated: Alpha Index I in the range of [1, d], which denotes the number of emitted rays, and Alpha Index II in the range of [1, Alpha Index I], which defines which α rays are emitted. The emitted rays are decision variables in the solution candidate, which are removed and substituted by the corresponding rays of the particle or candidate with the best stability level (\({\mathrm{X}}_{\mathrm{BS}}\)). These aspects are mathematically formulated as follows:

where \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}1}\) is the newly generated particle in the universe, \({\mathrm{X}}_{\mathrm{i}}\) is the current position vector of the ith particle (solution candidates) in the universe (search space), \({\mathrm{X}}_{\mathrm{BS}}\) is the position vector of the particle with the best stability level, \({\mathrm{x}}_{\mathrm{i}}^{\mathrm{j}}\) is the jth decision variable or emitted ray.
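The alpha-decay update can be sketched as a partial copy from the best particle. This is an interpretation, not the authors' code: it assumes Alpha Index I is the number of variables to replace and treats Alpha Index II as that many distinct variable positions chosen at random; `alpha_decay` is a hypothetical name.

```python
import random

def alpha_decay(x_i, x_bs, rng=random):
    """Replace a random subset of x_i's variables with those of the best particle."""
    d = len(x_i)
    count = rng.randint(1, d)                # Alpha Index I: how many rays are emitted
    positions = rng.sample(range(d), count)  # Alpha Index II (assumed): which variables
    new = list(x_i)
    for j in positions:
        new[j] = x_bs[j]
    return new

# Usage with a seeded generator for repeatability.
new1 = alpha_decay([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0], random.Random(1))
```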

Figure 2. Different modes of decay62.

Besides, in gamma decay, γ rays are emitted to improve the excited particles' stability level (Fig. 2), so this aspect can be mathematically formulated as another position-updating process of the EVO in which a new solution candidate is generated. For this purpose, two random integers are generated as Gamma Index I in the range of [1, d], which denotes the number of emitted photons, and Gamma Index II in the range of [1, Gamma Index I], which defines which photons to be considered in the particles. The photons in the particles are decision variables in the solution candidate, which are removed and substituted by a neighboring particle or candidate (\({\mathrm{X}}_{\mathrm{Ng}}\)), which mimics the interaction of the excited particles with other particles or even magnetic fields. In this regard, the total distance between the considered particle and the other ones is calculated as follows, and the nearest particle is utilized for this purpose:

where \({\text{D}}_{{\text{i}}}^{{\text{k}}}\) is the total distance between the ith particle and the kth neighbouring particle, and (\({\text{x}}_{1} ,{\text{y}}_{1}\)) and (\({\text{x}}_{2} ,{\text{y}}_{2}\)) denote the coordinates of the particles in the search space.

Using these actions, the position updating process for generating the second solution candidate in this phase is conducted as follows:

where \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}2}\) is the newly generated particle in the universe, \({\mathrm{X}}_{\mathrm{i}}\) is the current position vector of the ith particle (solution candidate) in the universe (search space), \({\mathrm{X}}_{\mathrm{Ng}}\) is the position vector of the neighbouring particle around the ith particle, and \({\mathrm{x}}_{\mathrm{i}}^{\mathrm{j}}\) is the jth decision variable or emitted photon.
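The gamma-decay update can be sketched in the same way, with the nearest particle as the donor. The sketch assumes the two-coordinate distance described above generalizes to the Euclidean distance over all d variables, and that the Gamma indices select distinct variable positions; both function names are hypothetical.

```python
import math
import random

def nearest_neighbour(i, particles):
    """Index of the particle closest to particle i (Euclidean distance, assumed)."""
    others = [k for k in range(len(particles)) if k != i]
    return min(others, key=lambda k: math.dist(particles[i], particles[k]))

def gamma_decay(i, particles, rng=random):
    """Replace a random subset of particle i's variables with its nearest neighbour's."""
    x_i = particles[i]
    x_ng = particles[nearest_neighbour(i, particles)]
    d = len(x_i)
    count = rng.randint(1, d)                # Gamma Index I: number of photons
    positions = rng.sample(range(d), count)  # Gamma Index II (assumed): which variables
    new = list(x_i)
    for j in positions:
        new[j] = x_ng[j]
    return new

swarm = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
new2 = gamma_decay(0, swarm, random.Random(2))
```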

If the stability level of a particle is lower than the stability bound (\({\mathrm{SL}}_{\mathrm{i}}\le \mathrm{SB}\)), beta decay is considered to happen because this type of decay happens in more unstable particles with lower stability levels. Based on the physics principles regarding beta decay (Fig. 2), β rays are expelled from the particles to improve the stability level of the particle, so a big jump in the search space is supposed to be conducted due to the higher levels of instability in these particles. In this regard, a position updating process is conducted for the particles in which a controlled movement toward the particle or candidate with the best stability level (\({\mathrm{X}}_{\mathrm{BS}}\)) and the centre of particles (\({\mathrm{X}}_{\mathrm{CP}}\)) is performed. These aspects of the algorithm mimic the particles' tendency to reach the stability band in which most of the known particles are positioned near this band, and most of them have higher levels of stability (Fig. 1a and b). These aspects are mathematically formulated as follows:

where \({\text{X}}_{{\text{i}}}^{{{\text{New}}1}}\) and \({\text{X}}_{{\text{i}}}\) are the upcoming and current position vectors of the ith particles (solution candidates) in the universe (search space),\({\text{ X}}_{{{\text{BS}}}}\) is the position vector of the particle with the best stability level, \({\text{X}}_{{{\text{CP}}}}\) is the position vector for the centre of particles, \({\text{SL}}^{{\text{i}}}\) is the stability level of the ith particle, \({\text{r}}_{1}\) and \({\text{r}}_{2}\) are two random numbers in the range of [0, 1] which determine the amount of particles’ movement.
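A sketch of this first beta-decay update is given below. Because the equations are not reproduced here, it assumes the centre of particles is the component-wise mean of all positions and that the update has the form \(X_{i}^{New1} = X_{i} + (r_{1} X_{BS} - r_{2} X_{CP})/SL_{i}\). The small `eps` guard against a zero stability level is an addition for this sketch.

```python
import random

def centre_of_particles(particles):
    """Component-wise mean of all position vectors (assumed definition of X_CP)."""
    n, d = len(particles), len(particles[0])
    return [sum(p[j] for p in particles) / n for j in range(d)]

def beta_decay_towards_centre(x_i, x_bs, x_cp, sl_i, rng=random, eps=1e-12):
    """Assumed update: X_i + (r1 * X_BS - r2 * X_CP) / SL_i."""
    r1, r2 = rng.random(), rng.random()
    scale = max(sl_i, eps)  # guard added here: SL_i can be 0 for the best particle
    return [x + (r1 * b - r2 * c) / scale for x, b, c in zip(x_i, x_bs, x_cp)]

x_cp = centre_of_particles([[0.0, 0.0], [2.0, 4.0]])
```

Dividing by the stability level makes the step larger for particles whose level is small, which is consistent with the "big jump" described for this decay mode.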

In order to improve the exploitation and exploration levels of the algorithm, another position updating process is conducted for the particles employing beta decay in which a controlled movement toward the particle or candidate with the best stability level (\({\text{X}}_{{{\text{BS}}}}\)) and a neighbouring particle or candidate (\({\text{X}}_{{{\text{Ng}}}}\)) is performed while the stability level of the particle does not affect the movement process. These aspects are mathematically formulated as follows:

where \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}2}\) and \({\mathrm{X}}_{\mathrm{i}}\) are the upcoming and current position vectors of the ith particle (solution candidates) in the universe (search space),\({\mathrm{ X}}_{\mathrm{BS}}\) is the position vector of the particle with the best stability level, \({\mathrm{X}}_{\mathrm{Ng}}\) is the position vector of the neighbouring particle around the ith particle, and \({\mathrm{r}}_{3}\) and \({\mathrm{r}}_{4}\) are two random numbers in the range of [0, 1] which determine the amount of particles’ movement.
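The second beta-decay update can be sketched under the assumption that it takes the form \(X_{i}^{New2} = X_{i} + (r_{3} X_{BS} - r_{4} X_{Ng})\), with no scaling by the stability level. The function name is hypothetical.

```python
import random

def beta_decay_towards_neighbour(x_i, x_bs, x_ng, rng=random):
    """Assumed update: X_i + (r3 * X_BS - r4 * X_Ng), independent of SL_i."""
    r3, r4 = rng.random(), rng.random()
    return [x + (r3 * b - r4 * g) for x, b, g in zip(x_i, x_bs, x_ng)]

moved = beta_decay_towards_neighbour([1.0, 1.0], [2.0, 2.0], [0.0, 0.0], random.Random(3))
```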

If the neutron enrichment level of a particle is lower than the enrichment bound (\({\mathrm{NEL}}_{\mathrm{i}}\le \mathrm{EB}\)), the particle is assumed to have a smaller N/Z ratio, so the particle tends to undergo electron capture or positron emission to move toward the stability band. In this regard, a random movement in the search space is determined for considering these sorts of movements as follows:

where \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}}\) and \({\mathrm{X}}_{\mathrm{i}}\) are the upcoming and current position vectors of the ith particles (solution candidates) in the universe (search space), and \(\mathrm{r}\) is a random number in the range of [0, 1] which determines the amount of particles’ movement.
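For neutron-poor particles, a minimal sketch assuming the update \(X_{i}^{New} = X_{i} + r\), where a single random scalar r is added to every decision variable, looks as follows. Whether r is shared across variables or drawn per variable is not stated here, so the shared scalar is an assumption.

```python
import random

def random_movement(x_i, rng=random):
    """Assumed update: shift every variable by the same random r in [0, 1)."""
    r = rng.random()
    return [x + r for x in x_i]

shifted = random_movement([1.0, 2.0, 3.0], random.Random(0))
```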

At the end of the main loop of the EVO, there are only two newly generated position vectors for each of the particles as \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}1}\) and \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}2}\) if the enrichment level of the particle is higher than the enrichment bound, while for the particle with a lower enrichment level, only \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}}\) is generated as a new position vector. At each state, the newly generated vectors are merged with the current population, and the best particles participate in the following search loop of the algorithm. A boundary violation flag is determined for the decision variables which go beyond the predefined upper and lower bounds, while a maximum number of objective function evaluations or a maximum number of iterations can be utilized as a termination criterion. The pseudo-code of the EVO is presented in Fig. 3, while the flowchart of this algorithm is provided in Fig. 4.
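The branching logic of the main loop and the end-of-iteration bookkeeping can be sketched as follows. This is an illustrative outline, not the pseudo-code of Fig. 3: clipping to the bounds is one common way to handle boundary violations and is assumed here, and all three function names are hypothetical.

```python
import random

def decay_mode(nel_i, eb, sl_i, sb):
    """Choose the update scheme for one particle from the two thresholds."""
    if nel_i > eb:
        # Neutron-rich: alpha and gamma decay if more stable than SB, else beta decay.
        return "alpha+gamma" if sl_i > sb else "beta"
    # Neutron-poor: electron capture or positron emission, modelled as a random move.
    return "random"

def clip_to_bounds(x, lower, upper):
    """Assumed boundary handling: clamp each variable to its bounds."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

def select_best(population, candidates, objective, n):
    """Merge current and new particles and keep the n best (minimization)."""
    return sorted(population + candidates, key=objective)[:n]

# Usage: SB is drawn afresh as a uniform random number in [0, 1].
sb = random.Random(0).random()
mode = decay_mode(nel_i=2.0, eb=1.0, sl_i=1.0, sb=sb)
```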

Overall, one of the primary areas of physics is the study of particle interactions, which focuses on the unique properties of particles and their constituents as well as their interactions with other particles. While the overall rate of decay varies across different kinds of particles, unstable particles tend to emit energy through disintegration or decay. Determining the stability bound of particles by taking into account the number of neutrons (N) and protons (Z) and the N/Z ratio is the toughest challenge in this field. The neutron-rich particles located above the stability bound have too many neutrons to be stable and therefore undergo decay. Consequently, the principles of the decay process in various particles can be a useful starting point for a new algorithm in which the solution candidates' performance is improved by taking inspiration from particles' propensity to reach a stable point.

Regarding the novel aspects of this algorithm, the main loop includes three position updating processes. Two of these procedures operate on decision variables, through which exploration is conducted, while one position updating process operates on the solution candidates, through which exploitation is achieved. The challenging part of this algorithm is that the exploration part may lead the algorithm to local optimum solutions, while the other part tries to tune the previous solutions to reach the global best candidate.

Mathematical test functions

In this section, 20 well-known mathematical test functions are utilized to evaluate the EVO's performance as a novel metaheuristic algorithm. These functions are Ackley 1, Alpine 1, Chung Reynolds, Exponential, Inverted cosine wave, Pinter, Rastrigin, Salomon, Schwefel 1.2, Schwefel 2.21, Griewank, Powell Singular 2, Schumer Steiglitz, Schwefel 2.4, Schwefel 2.25, Sphere, Step 3, Trigonometric 2, W / Wavy, and Xin-She Yang 6; the first 10 functions are considered with 50 dimensions and the other 10 with 100 dimensions. The global best values of the fourth, fifth, and eighteenth functions are -1, -49, and 1, respectively, while for the rest the global best is 0. For statistical purposes, 100 independent optimization runs are conducted to determine the statistical measurements, namely the mean, standard deviation, and the required number of objective function evaluations. A predefined stopping criterion is also considered based on a maximum number of 150,000 objective function evaluations and a tolerance of \(1\times {10}^{-12}\) for the global best values of the considered problems.
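To make the setup concrete, two of the listed functions and the stopping rule can be sketched as follows. The Sphere and Rastrigin definitions are the standard textbook forms, which are assumed to match the variants used here; reading the tolerance as an absolute gap to the known global best is also an assumption, and `should_stop` is a hypothetical helper.

```python
import math

def sphere(x):
    """Sphere function; global best 0 at the origin."""
    return sum(v * v for v in x)

def rastrigin(x):
    """Rastrigin function (standard form); global best 0 at the origin."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)

def should_stop(best_value, global_best, evaluations,
                max_evaluations=150_000, tolerance=1e-12):
    """Stop on the evaluation budget or once the best value is within tolerance."""
    return (evaluations >= max_evaluations
            or abs(best_value - global_best) <= tolerance)
```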

The parameter settings of the optimization algorithms are shown in Table 2, and all tests to evaluate the EVO's performance were conducted with a population size of 50 using a PC with the detailed parameters shown in Table 3. In Table 4, the best, mean, and Standard Deviation (SD) of the results for EVO and the alternative algorithms, including the Ant Colony Optimization (ACO), Harmony Search (HS), Firefly Algorithm (FA), Multiverse Optimizer (MVO), Interior Search Algorithm (ISA), and Cuckoo Search Algorithm (CSA), in dealing with these benchmark mathematical functions are presented. The results in these tables indicate that the proposed EVO outperforms the other algorithms in most cases. Considering the required Objective Function Evaluations (OFE), ACO, FA, HS, and CSA required a mean of 150,000 OFE, while the MVO with 149,900, ISA with 141,263.90, and EVO with 43,060.28 perform better. For an unbiased and fair comparison, the random state of every considered algorithm is fixed to the same value in each optimization run.

The convergence curves of the 100 optimization runs conducted by EVO on the mathematical test functions are illustrated in Fig. 5. The best and worst runs, alongside the mean of all runs, are highlighted. The convergence history shows that the proposed algorithm optimizes quickly in most of the considered problems: it does not need the full budget of 150,000 objective function evaluations and reaches the tolerance of \(1\times {10}^{-12}\) well before that limit.

Statistical analysis

In this section, the results of EVO and the alternative metaheuristic algorithms on the mathematical test functions are used to conduct a comprehensive statistical analysis. The following well-known statistical tests are applied: the Kolmogorov Smirnov (KS) test for normality control, the Wilcoxon (W) signed ranks test for comparing, in a two-by-two procedure, the summation and mean of the metaheuristics' ranks, and the Kruskal Wallis (KW) test for evaluating the overall rankings of the different metaheuristic algorithms by comparing the mean of the metaheuristics' ranks. Table 5 provides the results of the KS test. The p-values of this test are less than 0.05, so the hypothesis that the data are normally distributed is rejected, and non-parametric statistical tests are therefore appropriate for further investigation.

Fig. 6 presents the results of the W test, in which the mean ranks of the different metaheuristic algorithms are compared in a two-by-two manner with respect to the best results; in each pair, the algorithm with the smaller mean rank is superior. Based on these results, EVO provides better results with smaller mean ranks in most cases. Fig. 7 presents the results of the KW statistical test, including the mean of ranks over all of the data sets, in which EVO outranks the other algorithms in all of the considered data sets.
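The "mean of ranks" quantity used in rank-based tests such as Kruskal Wallis can be illustrated with a short sketch: all results are pooled, ranked in ascending order with ties sharing the average rank, and the ranks are then averaged per algorithm. The function names and the toy numbers below are hypothetical, and the sketch computes only the rank summary, not the test statistic or p-value.

```python
def average_ranks(values):
    """Rank values in ascending order (1 = smallest); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mean_ranks(results):
    """results maps an algorithm name to its list of results; lower values rank better."""
    names = list(results)
    pooled = [v for name in names for v in results[name]]
    ranks = average_ranks(pooled)
    out = {}
    pos = 0
    for name in names:
        count = len(results[name])
        out[name] = sum(ranks[pos:pos + count]) / count
        pos += count
    return out


if __name__ == "__main__":
    # Toy numbers for illustration only; they are not results from the paper.
    print(mean_ranks({"A": [1.0, 2.0, 3.0], "B": [4.0, 5.0, 6.0]}))
```

For a minimization problem, the algorithm with the smallest mean rank is the one whose results sit lowest in the pooled ordering.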

CEC 2020 complexity analysis & Big O notation

In most recently developed metaheuristic algorithms, the computational effort required for complex optimization problems has been a significant concern, owing to the growing interest of artificial intelligence experts in computationally efficient optimization algorithms. In this regard, the computational time procedure of the CEC 2020 benchmark suite on bound-constrained optimization60 is used, in which T0 is the run time of a predefined mathematical process, T1 is the computational time of the G1 function for 200,000 objective function evaluations, T2 is the computational time of the proposed algorithm (EVO) for 200,000 objective function evaluations of the G1 function, and \({\widehat{\mathrm{T}}}_{2}\) is the mean of five individual T2 values. Table 6 provides the computational times of the proposed EVO algorithm and the other approaches, which puts the capability of EVO to compete with the other algorithms in perspective.
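The timing procedure can be sketched as below. Several parts are assumptions made for illustration: `reference_process` is a hypothetical arithmetic workload standing in for the predefined process behind T0, the Sphere function stands in for G1 (whose definition is not given in this section), a random search stands in for EVO, and the final ratio follows the convention commonly reported in CEC-style complexity tables rather than anything stated in the text.

```python
import math
import random
import time


def timed(fn):
    """Return the wall-clock seconds taken by one call of fn()."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


def reference_process(n=100_000):
    # Hypothetical arithmetic workload standing in for the process behind T0.
    x = 0.55
    for _ in range(n):
        x = math.sqrt(x * x + 1.0) / 2.0
    return x


def sphere(x):
    # Stand-in for the G1 function (an assumption).
    return sum(v * v for v in x)


def evaluate_only(objective, dim, evals):
    """Evaluate the objective evals times at a fixed point (basis of T1)."""
    x = [0.5] * dim
    for _ in range(evals):
        objective(x)


def random_search(objective, dim, evals, seed):
    # Placeholder optimizer; the algorithm under study would be timed here (T2).
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(evals):
        best = min(best, objective([rng.uniform(-100.0, 100.0) for _ in range(dim)]))
    return best


def complexity_times(objective, optimizer, dim=10, evals=200_000, repeats=5):
    t0 = timed(reference_process)
    t1 = timed(lambda: evaluate_only(objective, dim, evals))
    t2_runs = [timed(lambda s=seed: optimizer(objective, dim, evals, s))
               for seed in range(repeats)]
    t2_hat = sum(t2_runs) / repeats  # mean of the individual T2 values
    # The ratio below is an assumed reporting convention, not taken from the text.
    return {"T0": t0, "T1": t1, "T2_hat": t2_hat, "ratio": (t2_hat - t1) / t0}


if __name__ == "__main__":
    print(complexity_times(sphere, random_search, evals=20_000))
```

Dividing by T0 is meant to make timings from different machines roughly comparable, since the same reference workload is measured on each.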

One of the well-known procedures for evaluating the computational complexity of algorithms is the “Big O notation”, which is frequently used in computer science and is adopted in this paper for further investigation of the EVO algorithm. With NP and D denoting the total number of initial solution candidates and the dimension of the optimization problem, respectively, the computational complexities of generating the position vectors and calculating the objective function are O(NP × D) and O(NP) × O(F(x)), where F(x) represents the objective function of the optimization problem. In the main loop of the EVO, each line is executed MxItr times, where MxItr is the total number of iterations. The position updating process for each solution candidate in this loop is conducted in one of two phases. If \({\mathrm{NEL}}_{\mathrm{i}}>\mathrm{EB}\), two new position vectors are created in one of the \({\mathrm{SL}}_{\mathrm{i}}>\mathrm{SB}\) and \({\mathrm{SL}}_{\mathrm{i}}\le \mathrm{SB}\) subphases, so the computational complexity of this phase is O(MxItr × NP × D × 2). If \({\mathrm{NEL}}_{\mathrm{i}}\le \mathrm{EB}\), only one new position vector is generated, so the complexity in this case is O(MxItr × NP × D). For objective function evaluation in these two phases, the complexities are O(MxItr × NP × D × 3) × O(F(x)) and O(MxItr × NP × D) × O(F(x)), respectively.
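As a worked simplification of the position-generation terms, and assuming the worst case in which every candidate falls into the two-vector phase in every iteration, the initialization and update costs can be combined and the constant factor dropped. This is the standard Big O simplification applied to the expressions above, not a result stated by the authors:

\[
O(\mathrm{NP}\times \mathrm{D}) + O(\mathrm{MxItr}\times \mathrm{NP}\times \mathrm{D}\times 2) = O(\mathrm{MxItr}\times \mathrm{NP}\times \mathrm{D})
\]

Under this reading, the cost of generating positions grows linearly in each of the number of iterations, the number of candidates, and the problem dimension.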

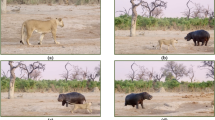

CEC 2020 real-world constrained optimization

The capability of metaheuristic algorithms is usually assessed on real-world optimization problems in which design constraints must be handled alongside the bound constraints to obtain feasible solutions. For this purpose, the real-world constrained optimization problems of CEC 202061 are used in this paper. Table 7 presents a summary of these engineering design problems, while the complete mathematical formulations can be found in the literature. Schematic views of these problems are presented in Figs. 8, 9, 10, 11. For statistical purposes, a total of 30 independent optimization runs have been conducted using 20,000 function evaluations. For constraint handling, the well-known penalty approach with a static coefficient is used in the current study.
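A static penalty approach can be sketched as follows. The exact penalty form and coefficient used in the study are not given in this section, so the quadratic violation term, the coefficient value of 1e6, and the toy problem below are all assumptions chosen for illustration.

```python
def penalized(objective, inequality_constraints, penalty=1e6):
    """Wrap an objective with a static penalty for constraints written as g(x) <= 0.

    The quadratic violation term and the default coefficient are assumptions.
    """
    def wrapped(x):
        # Only violated constraints (g(x) > 0) contribute to the penalty.
        violation = sum(max(0.0, g(x)) ** 2 for g in inequality_constraints)
        return objective(x) + penalty * violation
    return wrapped


def toy_objective(x):
    # Hypothetical toy problem: minimize x0 + x1.
    return x[0] + x[1]


def toy_constraint(x):
    # Hypothetical constraint x0 * x1 >= 1, rewritten as 1 - x0 * x1 <= 0.
    return 1.0 - x[0] * x[1]


if __name__ == "__main__":
    f = penalized(toy_objective, [toy_constraint])
    print(f([1.0, 1.0]))  # feasible point: the penalty term is zero
    print(f([0.5, 0.5]))  # infeasible point: the penalty dominates the objective
```

Because the coefficient stays fixed throughout the run, an unconstrained optimizer can be applied to the wrapped function without modification.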

Schematic view of the speed reducer problem66.

Schematic view of the hydro-static thrust bearing66.

Schematic view of the ten-bar truss problem66.

Schematic view of the rolling element bearing problem66.

Tables 8 and 9 present the best and statistical results of EVO and other algorithms for the speed reducer problem, including the optimum design variables and the design constraints. Based on the best optimization runs conducted by the different methods, EVO provides 2994.42, which is the best among the compared approaches. In addition, EVO provides mean and worst values of 2994.44 and 2994.46, respectively, which are better than the results of the other methods.

The best and statistical results of the different algorithms, including the proposed EVO, for the hydro-static thrust bearing design problem are provided in Tables 10 and 11, respectively, including the optimum design variables and the design constraints. For this problem, EVO provides 1619.55, which is the best among the compared approaches, while the best result found so far by the other approaches is 1621.24, obtained by CGO. Meanwhile, EVO provides mean and worst values of 1730.09 and 1899.34, respectively, demonstrating some superiority over the other approaches.

Tables 12 and 13 provide the best and statistical results of the different algorithms, including the proposed EVO, for the ten-bar truss design problem. The proposed EVO provides 524.92, which is much better than the previously reported results of 529 and 530. In addition, EVO provides better mean and worst values, which demonstrates the superiority of this novel algorithm over the other approaches.

Tables 14 and 15 present the best and statistical results of EVO and other algorithms for the rolling element bearing design problem (a maximization problem), including the optimum design variables and the design constraints. Based on the best optimization runs conducted by the different methods, EVO provides 81,859.74, while ALO achieves the best result in this case with 85,546.63. However, EVO is capable of competing with ALO and the other approaches by providing mean and worst values of 81,110.32 and 80,212.09, respectively.

Conclusions

Energy Valley Optimizer (EVO) is proposed as a novel metaheuristic algorithm inspired by the advanced principles of physics regarding stability and different modes of decay in particles. For evaluation purposes, 20 unconstrained mathematical test functions with 100 independent optimization runs and a maximum of 150,000 objective function evaluations are used, alongside the latest Competitions on Evolutionary Computation (CEC), including the CEC 2020 on real-world optimization. The key results and main findings of this research paper are summarised as follows:

- On the unconstrained mathematical test functions, EVO outranks the alternative metaheuristic algorithms and converges to the global best solutions in most cases.

- EVO converges to the global best solution with the lowest number of objective function evaluations, demonstrating the efficiency of this novel algorithm from a computational point of view.

- Based on the W statistical test results, EVO provides better results with smaller mean ranks than the other algorithms in a two-by-two manner.

- The results of the KW statistical test, including the mean of ranks, demonstrate that EVO outranks the other algorithms in all of the considered data sets.

- Considering the constrained design examples of the CEC 2020 on real-world problems, EVO can reach better solutions than other algorithms from the literature.

- Based on the best optimization runs conducted by the different methods for the speed reducer problem, EVO provides 2994.42, which is the best among the compared approaches.

- EVO provides 1619.55 for the hydro-static thrust bearing design problem, which is the best among the compared approaches, while the best result found so far by the other approaches is 1621.24, obtained by CGO.

- The proposed EVO provides 524.92 for the ten-bar truss design problem, which is much better than the previously reported results of 529 and 530.

- For the rolling element bearing design problem, EVO provides 81,859.74 as its best solution, alongside mean and worst values of 81,110.32 and 80,212.09, respectively.

Based on the results and the conducted analysis, the superiority of the EVO algorithm over the other mentioned metaheuristic algorithms is attributed to three factors: it is parameter-free, it converges quickly, and it requires the lowest number of objective function evaluations. In future studies, the proposed EVO should be tested on complex optimization problems in different fields, including real-size engineering design problems.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- GA: Genetic algorithm
- CEC: Competitions on evolutionary computation
- DE: Differential evolution
- N: Number of neutrons
- PSO: Particle swarm optimisation
- Z: Number of protons
- FA: Firefly algorithm
- α: Dense and positively charged particles
- ACO: Ant colony optimization
- β: Negatively charged particles
- HS: Harmony search
- γ: Photons with higher levels of energy
- BBBC: Big-bang big-crunch
- \(\mathrm{n}\): Total number of particles
- MVO: Multiverse algorithm
- \(\mathrm{d}\): Dimension of the considered problem
- CGO: Chaos game optimization
- \({\mathrm{x}}_{\mathrm{i}}^{\mathrm{j}}\): The jth decision variable for determining the initial position of the ith candidate
- PO: Projectiles optimisation
- \({\mathrm{x}}_{\mathrm{i},\mathrm{min}}^{\mathrm{j}}\): Lower bound of the jth variable in the ith candidate
- ALO: Ant lion optimizer
- \({\mathrm{x}}_{\mathrm{i},\mathrm{max}}^{\mathrm{j}}\): Upper bound of the jth variable in the ith candidate
- AISA: Adolescent identity search algorithm
- EB: Enrichment bound
- MGA: Material generation algorithm
- NEL: Neutron enrichment level
- AOS: Atomic orbital search
- \({\mathrm{NEL}}^{\mathrm{i}}\): Neutron enrichment level of the ith particle
- AHO: Archerfish hunting optimizer
- \({\mathrm{SL}}^{\mathrm{i}}\): Stability level of the ith particle
- ICA: Imperialistic competitive algorithm
- \(\mathrm{BS}\): Particles with best stability levels
- WOA: Whale optimization algorithm
- \(\mathrm{WS}\): Particles with worst stability levels
- HHO: Harris hawks optimization
- \({\mathrm{X}}_{\mathrm{i}}^{\mathrm{New}1}\): Newly generated particle in the universe
- AOA: Arithmetic optimization algorithm
- \({\mathrm{X}}_{\mathrm{i}}\): Current position vector of the ith particle (solution candidate) in the universe
- EVO: Energy valley optimizer
- \({\mathrm{x}}_{\mathrm{i}}^{\mathrm{j}}\): The jth decision variable
- CSA: Cuckoo search algorithm
- \({\mathrm{D}}_{\mathrm{i}}^{\mathrm{k}}\): Total distance between the ith particle and the kth neighbouring particle
- IMODE: Improved multi-operator differential evolution
- EBOwithCMAR: Effective butterfly optimizer with covariance matrix adapted retreat phase
- HSES: Hybrid sampling evolution strategy
- LSHADE-cnEpSin: LSHADE with an ensemble sinusoidal parameter adaptation
- LSHADE-SPACMA: LSHADE with semi-parameter adaptation and covariance matrix adaptation
- \({\mathrm{X}}_{\mathrm{CP}}\): Position vector for the centre of particles
- j2020: Developed differential evolution
- GSK: Gaining sharing knowledge based algorithm
- ES: Evolution strategy
- SBS: Socio-behavioural simulation
- COM: Classic optimization method
- CGS: Combined genetic search
- TLBO: Teaching–learning-based optimization
- EGWO: Enhanced grey wolf optimizer
- JA: Jaya algorithm
- IPTR: Interior point trust region
- SLP: Sequential linear programming
- ECSS: Enhanced charged system search
- ABC: Artificial bee colony
- GWO: Grey wolf optimizer
- \({\mathrm{r}}_{1}\): Random number in the range of [0, 1]
- \({\mathrm{X}}_{\mathrm{Ng}}\): Position vector of the neighbouring particle around the ith particle
- \({\mathrm{r}}_{2}\): Random number in the range of [0, 1]
- \({\mathrm{r}}_{3}\): Random number in the range of [0, 1]
- \({\mathrm{r}}_{4}\): Random number in the range of [0, 1]
- OFE: Objective function evaluations
- SD: Standard deviation
- KS: Kolmogorov Smirnov
- W: Wilcoxon
- KW: Kruskal Wallis
- D: Dimension
- g: Number of inequality constraints
- h: Number of equality constraints

References

Boussaïd, I., Lepagnot, J. & Siarry, P. A survey on optimization metaheuristics. Inf. Sci. 237, 82–117. https://doi.org/10.1016/j.ins.2013.02.041 (2013).

Hussain, K., Salleh, M. N. M., Cheng, S. & Shi, Y. Metaheuristic research: a comprehensive survey. Artif. Intell. Rev. 52(4), 2191–2233. https://doi.org/10.1007/s10462-017-9605-z (2019).

Holland, J. H. Genetic algorithms and adaptation. In Adaptive Control of Ill-Defined Systems (eds Selfridge, O. G. et al.) 317–333 (Springer US, 1984). https://doi.org/10.1007/978-1-4684-8941-5_21.

Storn, R. & Price, K. Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11, 341–359. https://doi.org/10.1023/A:1008202821328 (1997).

Karami, H., Sanjari, M. J. & Gharehpetian, G. B. Hyper-Spherical Search (HSS) algorithm: a novel meta-heuristic algorithm to optimize nonlinear functions. Neural Comput. Appl. 25, 1455–1465. https://doi.org/10.1007/s00521-014-1636-7 (2014).

Eberhart, R. & Kennedy, J. in MHS'95. Proceedings of the Sixth International Symposium on Micro Machine and Human Science. 39–43.

Yang, X.-S. Nature-inspired mateheuristic algorithms: success and new challenges. J. Comput. Eng. Inform. Technol. https://doi.org/10.4172/2324-9307.1000e101 (2012).

Dorigo, M., Maniezzo, V. & Colorni, A. Ant system: optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 26(1), 29–41. https://doi.org/10.1109/3477.484436 (1996).

Ahmed, Z. E., Saeed, R. A., Mukherjee, A. & Ghorpade, S. N. Energy optimization in low-power wide area networks by using heuristic techniques. In LPWAN Technologies for IoT and M2M Applications 199–223 (Elsevier, 2020). https://doi.org/10.1016/B978-0-12-818880-4.00011-9.

Dhiman, G., Garg, M., Nagar, A., Kumar, V. & Dehghani, M. A novel algorithm for global optimization: rat swarm optimizer. J. Ambient. Intell. Humaniz. Comput. 12, 8457–8482. https://doi.org/10.1007/s12652-020-02580-0 (2021).

Yampolskiy, R. V., Ashby, L. & Hassan, L. Wisdom of artificial crowds—A metaheuristic algorithm for optimization. J. Intell. Learn. Syst. Appl. 4(2), 10. https://doi.org/10.4236/jilsa.2012.42009 (2012).

Xie, L. et al. Tuna swarm optimization: a novel swarm-based metaheuristic algorithm for global optimization. Comput. Intell. Neurosci. 2021, 9210050. https://doi.org/10.1155/2021/9210050 (2021).

Karaboga, D. & Basturk, B. in Foundations of Fuzzy Logic and Soft Computing. (eds Patricia Melin et al.) 789–798 (Springer Berlin Heidelberg).

Hashim, F. A., Houssein, E. H., Mabrouk, M. S., Al-Atabany, W. & Mirjalili, S. Henry gas solubility optimization: a novel physics-based algorithm. Futur. Gener. Comput. Syst. 101, 646–667. https://doi.org/10.1016/j.future.2019.07.015 (2019).

Azizi, M. Atomic orbital search: a novel metaheuristic algorithm. Appl. Math. Model. 93, 657–683. https://doi.org/10.1016/j.apm.2020.12.021 (2021).

Talatahari, S., Azizi, M. & Gandomi, A. H. Material generation algorithm: a novel metaheuristic algorithm for optimization of engineering problems. Processes 9, 859 (2021).

Azizi, M., Shishehgarkhaneh, M. B. & Basiri, M. Optimum design of truss structures by Material Generation Algorithm with discrete variables. Decis. Anal. J. 3, 100043. https://doi.org/10.1016/j.dajour.2022.100043 (2022).

Hosseini, E., Ghafoor, K. Z., Emrouznejad, A., Sadiq, A. S. & Rawat, D. B. Novel metaheuristic based on multiverse theory for optimization problems in emerging systems. Appl. Intell. 51, 3275–3292. https://doi.org/10.1007/s10489-020-01920-z (2021).

Hashim, F. A., Hussain, K., Houssein, E. H., Mabrouk, M. S. & Al-Atabany, W. Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl. Intell. 51, 1531–1551. https://doi.org/10.1007/s10489-020-01893-z (2021).

Pereira, J. L. J. et al. Lichtenberg algorithm: a novel hybrid physics-based meta-heuristic for global optimization. Expert Syst. Appl. 170, 114522. https://doi.org/10.1016/j.eswa.2020.114522 (2021).

Kaveh, A. & Dadras, A. A novel meta-heuristic optimization algorithm: thermal exchange optimization. Adv. Eng. Softw. 110, 69–84. https://doi.org/10.1016/j.advengsoft.2017.03.014 (2017).

Heidari, A. A. et al. Harris hawks optimization: algorithm and applications. Futur. Gener. Comput. Syst. 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028 (2019).

Alabool, H. M., Alarabiat, D., Abualigah, L. & Heidari, A. A. Harris hawks optimization: a comprehensive review of recent variants and applications. Neural Comput. Appl. 33, 8939–8980. https://doi.org/10.1007/s00521-021-05720-5 (2021).

Chou, J.-S. & Truong, D.-N. A novel metaheuristic optimizer inspired by behavior of jellyfish in ocean. Appl. Math. Comput. 389, 125535. https://doi.org/10.1016/j.amc.2020.125535 (2021).

Zhang, J., Xiao, M., Gao, L. & Pan, Q. Queuing search algorithm: a novel metaheuristic algorithm for solving engineering optimization problems. Appl. Math. Model. 63, 464–490. https://doi.org/10.1016/j.apm.2018.06.036 (2018).

Feng, Z.-K., Niu, W.-J. & Liu, S. Cooperation search algorithm: a novel metaheuristic evolutionary intelligence algorithm for numerical optimization and engineering optimization problems. Appl. Soft Comput. 98, 106734. https://doi.org/10.1016/j.asoc.2020.106734 (2021).

Abualigah, L. et al. Aquila optimizer: a novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 157, 107250. https://doi.org/10.1016/j.cie.2021.107250 (2021).

Braik, M., Sheta, A. & Al-Hiary, H. A novel meta-heuristic search algorithm for solving optimization problems: capuchin search algorithm. Neural Comput. Appl. 33, 2515–2547. https://doi.org/10.1007/s00521-020-05145-6 (2021).

Tarkhaneh, O., Alipour, N., Chapnevis, A. & Shen, H. Golden tortoise beetle optimizer: a novel nature-inspired meta-heuristic algorithm for engineering problems. (2021).

Rahkar Farshi, T. Battle royale optimization algorithm. Neural Comput. Appl. 33, 1139–1157. https://doi.org/10.1007/s00521-020-05004-4 (2021).

Savsani, P. & Savsani, V. Passing vehicle search (PVS): a novel metaheuristic algorithm. Appl. Math. Model. 40, 3951–3978. https://doi.org/10.1016/j.apm.2015.10.040 (2016).

Topal, A. O. & Altun, O. A novel meta-heuristic algorithm: dynamic virtual bats algorithm. Inf. Sci. 354, 222–235. https://doi.org/10.1016/j.ins.2016.03.025 (2016).

Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: crow search algorithm. Comput. Struct. 169, 1–12. https://doi.org/10.1016/j.compstruc.2016.03.001 (2016).

Liang, Y.-C. & Juarez, J. R. C. A novel metaheuristic for continuous optimization problems: virus optimization algorithm. Eng. Optim. 48(1), 73–93. https://doi.org/10.1080/0305215X.2014.994868 (2016).

Alsattar, H. A., Zaidan, A. A. & Zaidan, B. B. Novel meta-heuristic bald eagle search optimisation algorithm. Artif. Intell. Rev. 53, 2237–2264. https://doi.org/10.1007/s10462-019-09732-5 (2020).

Rashedi, E., Nezamabadi-pour, H. & Saryazdi, S. G. S. A. A gravitational search algorithm. Inform. Sci. 179, 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004 (2009).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Rao, R. V., Savsani, V. J. & Vakharia, D. P. Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput. Aided Des. 43, 303–315. https://doi.org/10.1016/j.cad.2010.12.015 (2011).

Azizi, M., Talatahari, S. & Gandomi, A. H. Fire Hawk optimizer: a novel metaheuristic algorithm. Artif. Intell. Rev. https://doi.org/10.1007/s10462-022-10173-w (2022).

Shishehgarkhaneh, M. B., Azizi, M., Basiri, M. & Moehler, R. C. BIM-based resource tradeoff in project scheduling using fire hawk optimizer (FHO). Buildings 12, 1472 (2022).

Luque-Chang, A., Cuevas, E., Fausto, F., Zaldívar, D. & Pérez, M. Social spider optimization algorithm: modifications, applications, and perspectives. Math. Probl. Eng. 2018, 6843923. https://doi.org/10.1155/2018/6843923 (2018).

Husseinzadeh Kashan, A. League Championship algorithm (LCA): an algorithm for global optimization inspired by sport championships. Appl. Soft Comput. 16, 171–200. https://doi.org/10.1016/j.asoc.2013.12.005 (2014).

Talatahari, S. & Azizi, M. Chaos game optimization: a novel metaheuristic algorithm. Artif. Intell. Rev. 54, 917–1004. https://doi.org/10.1007/s10462-020-09867-w (2021).

Azizi, M., Aickelin, U., Khorshidi, H. A. & Shishehgarkhaneh, M. B. Shape and size optimization of truss structures by Chaos game optimization considering frequency constraints. J. Adv. Res. https://doi.org/10.1016/j.jare.2022.01.002 (2022).

Yang, X. S. & Hossein Gandomi, A. Bat algorithm: a novel approach for global engineering optimization. Eng. Comput. 29, 464–483 (2012).

Ghasemi-Marzbali, A. A novel nature-inspired meta-heuristic algorithm for optimization: bear smell search algorithm. Soft. Comput. 24, 13003–13035. https://doi.org/10.1007/s00500-020-04721-1 (2020).

Hayyolalam, V. & Pourhaji Kazem, A. A. Black widow optimization algorithm: a novel meta-heuristic approach for solving engineering optimization problems. Eng. Appl. Artif. Intell. 87, 103249. https://doi.org/10.1016/j.engappai.2019.103249 (2020).

Kumar, N., Singh, N. & Vidyarthi, D. P. Artificial lizard search optimization (ALSO): a novel nature-inspired meta-heuristic algorithm. Soft. Comput. 25, 6179–6201. https://doi.org/10.1007/s00500-021-05606-7 (2021).

Anita, A. Y. & Kumar, N. Artificial electric field algorithm for engineering optimization problems. Expert Syst. Appl 149, 113308. https://doi.org/10.1016/j.eswa.2020.113308 (2020).

Vatin, N., Ivanov, A. Y., Rutman, Y. L., Chernogorskiy, S. & Shvetsov, K. Earthquake engineering optimization of structures by economic criterion. Mag. Civil Eng. 76, 67–83. https://doi.org/10.18720/MCE.76.7 (2017).

Ouaarab, A., Ahiod, B. & Yang, X.-S. Discrete cuckoo search algorithm for the travelling salesman problem. Neural Comput. Appl. 24, 1659–1669. https://doi.org/10.1007/s00521-013-1402-2 (2014).

Gupta, S., Deep, K., Moayedi, H., Foong, L. K. & Assad, A. Sine cosine grey wolf optimizer to solve engineering design problems. Eng. Comput. 37, 3123–3149. https://doi.org/10.1007/s00366-020-00996-y (2021).

Rao, R. V. & Pawar, R. B. Self-adaptive multi-population rao algorithms for engineering design optimization. Appl. Artif. Intell. 34, 187–250. https://doi.org/10.1080/08839514.2020.1712789 (2020).

Kamboj, V., Nandi, A., Bhadoria, A. & Sehgal, S. An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Appl. Soft Comput. 89, 106018. https://doi.org/10.1016/j.asoc.2019.106018 (2019).

Qi, X., Yuan, Z. & Song, Y. A hybrid pathfinder optimizer for unconstrained and constrained optimization problems. Comput. Intell. Neurosci. 2020, 5787642. https://doi.org/10.1155/2020/5787642 (2020).

Azizi, M., Ghasemi Seyyed Arash, M., Ejlali Reza, G. & Talatahari, S. Optimization of fuzzy controller for nonlinear buildings with improved charged system search. Struct. Eng. Mech. 76, 781–797 (2020).

Alekseytsev, A. Metaheuristic optimization of building structures with different level of safety. J. Phys. Conf. Ser. 1425, 012014. https://doi.org/10.1088/1742-6596/1425/1/012014 (2019).

Khondoker, M. T. H. Automated reinforcement trim waste optimization in RC frame structures using building information modeling and mixed-integer linear programming. Autom. Construct. 124, 103599. https://doi.org/10.1016/j.autcon.2021.103599 (2021).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1, 67–82 (1997).

Yue, C. T. et al. Problem Definitions and Evaluation Criteria for the CEC 2020 Special Session and Competition on Single Objective Bound Constrained Numerical Optimization. Technical Report (Nanyang Technological University, Singapore, 2020).

Kumar, A. et al. A test-suite of non-convex constrained optimization problems from the real-world and some baseline results. Swarm Evolut. Comput. 56, 100693. https://doi.org/10.1016/j.swevo.2020.100693 (2020).

Silberberg, M. Principles of General Chemistry 3rd edn. (McGraw-Hill Education, 2012).

Sallam, K. M., Elsayed, S. M., Chakrabortty, R. K. & Ryan, M. J. in 2020 IEEE Congress on Evolutionary Computation (CEC). 1–8.

Brest, J., Maučec, M. S. & Bošković, B. in 2020 IEEE Congress on Evolutionary Computation (CEC). 1–8.

Mohamed, A. W., Hadi, A. A., Mohamed, A. K. & Awad, N. H. in 2020 IEEE Congress on Evolutionary Computation (CEC). 1–8.

Talatahari, S. & Azizi, M. Optimization of constrained mathematical and engineering design problems using chaos game optimization. Comput. Ind. Eng. 145, 106560 (2020).

Mezura-Montes, E., Coello, C. & Landa-Becerra, R. Engineering optimization using simple evolutionary algorithm. (2003).

Akhtar, S., Tai, K. & Ray, T. A socio-behavioural simulation model for engineering design optimization. Eng. Optim. 34, 341–354. https://doi.org/10.1080/03052150212723 (2002).

Gandomi, A. H., Yang, X.-S. & Alavi, A. H. Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng. Comput. 29, 17–35 (2013).

Zhang, M., Luo, W. & Wang, X. Differential evolution with dynamic stochastic selection for constrained optimization. Inf. Sci. 178, 3043–3074. https://doi.org/10.1016/j.ins.2008.02.014 (2008).

Siddall, J. N. Optimal Engineering Design: Principles and Applications (CRC Press, 1982).

Deb, K. & Goyal, M. Optimizing engineering designs using a combined genetic search. In Proc. International Conference on Genetic Algorithms. 521–528 (1997).

Hernandez-Aguirre, A., Botello, S., Coello, C. & Lizárraga, G. Use of multiobjective optimization concepts to handle constraints in single-objective optimization. In Genetic and Evolutionary Computation — GECCO 2003: Genetic and Evolutionary Computation Conference Chicago, IL, USA, July 12–16, 2003 Proceedings, Part I (eds Cantú-Paz, E. et al.) 573–584 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2003). https://doi.org/10.1007/3-540-45105-6_69.

Şahin, I., Dörterler, M. & Gokce, H. Optimization of hydrostatic thrust bearing using enhanced grey wolf optimizer. Mechanika 25, 480–486 (2019).

Rao, R. V. & Waghmare, G. G. A new optimization algorithm for solving complex constrained design optimization problems. Eng. Optim. 49, 60–83. https://doi.org/10.1080/0305215X.2016.1164855 (2017).

Yu, Z. et al. Optimal design of truss structures with frequency constraints using interior point trust region method. Proc. Rom. Acad. - Math. Phys. Tech. Sci. Inf. Sci. 15(2), 165–173 (2014).

Lamberti, L. & Pappalettere, C. Move limits definition in structural optimization with sequential linear programming. Part I: optimization algorithm. Comput. Struct. 81, 197–213. https://doi.org/10.1016/S0045-7949(02)00442-X (2003).

Baghlani, A. & Makiabadi, M. H. Teaching-learning-based optimization algorithm for shape and size optimization of truss structures with dynamic frequency constraints. Iran. J. Sci. Technol. Trans. A Sci. 37, 409–421 (2013).

Kaveh, A. & Zolghadr, A. Shape and size optimization of truss structures with frequency constraints using enhanced charged system search algorithm. Asian J. Civil Eng. (Build. Hous.) 12, (2011).

Yildiz, A. R., Abderazek, H. & Mirjalili, S. A comparative study of recent non-traditional methods for mechanical design optimization. Arch. Comput. Methods Eng. 27, 1031–1048 (2020).

Author information

Contributions

M.A. contributed to the study design, developed the methods, implemented the experiments, wrote and revised the article. U.A., H.A.K. and M.B.S. provided critical review on the outcomes, revised the article. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Azizi, M., Aickelin, U., A. Khorshidi, H. et al. Energy valley optimizer: a novel metaheuristic algorithm for global and engineering optimization. Sci Rep 13, 226 (2023). https://doi.org/10.1038/s41598-022-27344-y

DOI: https://doi.org/10.1038/s41598-022-27344-y
