Abstract

Accurately model recognition of mobile device is of great significance for identifying copycat device and protecting intellectual property rights. Although existing methods have realized high-accuracy recognition about device’s category and brand, the accuracy of model recognition still needs to be improved. For that, we propose Recognizer, a high-accuracy model recognition method of mobile device based on weighted feature similarity. We extract 20 features from the network traffic and physical attributes of device, and design feature similarity metric rules, and calculate inter-device similarity further. In addition, we propose feature importance evaluation strategies to assess the role of feature in recognition and determine the weight of each feature. Finally, based on all or part of 20 features, the similarity between the target device and known devices is calculated to recognize the brand and model of target device. Based on 587 models of mobile devices of 17 widely used brands such as Apple and Samsung, we carry out device recognition experiments. The results show that Recognizer can identify the device’s brand and model than existing methods more effectively. In average, the model recognition accuracy of Recognizer is 99.08% (+ 9.25%↑) when using 20 features and 92.08% (+ 29.26%↑) when using 13 features.

Similar content being viewed by others

Introduction

Mobile devices bring great convenience to users’ life. For instance, users can use their mobile device to perform online payment, watch videos, and even use the mobile device to control the home air conditioner remotely1. The convenience characteristic is also an important factor for the rapid growth of mobile devices. Currently, there are many legal mobile device manufacturers, such as Apple, Samsung, Xiaomi, etc., and some illegal manufacturers who produce copycat devices for profit. These illegal copycat devices harm consumers’ interests, bring network security risks2, disrupt fair competition in the market, and pose a great challenge to the intellectual property protection.

Device recognition is an important technology to obtain device’s category, brand, model, service, version or other information3. This technology is significant for cyber asset inventory and security risk assessment4,5,6. The methods recognizing the special model of mobile device accurately can be used in identifying copycat devices, determining the illegal facts of illegal manufacturers7 and protecting the intellectual property rights of legal device manufacturers.

The existing device recognition methods are mainly based on the differences between different devices in network traffic, device information in Internet resources, or the physical attributes. Based on those differences, existing methods recognized the type of device by constructing fingerprint database or trained classification model. According to the source of device information, the existing recognition methods can be divided into traffic-based device recognition methods, network-search-based device recognition methods, and physical-attributes-based device recognition methods. We will describe those methods in detail in “Related work” section.

Among the three sources, traffic is the easiest to obtain. However, because the same kind of devices in the same manufacturers use same network protocol for data transmission commonly, the accuracy of model recognition of IoT device using traffic is limited. Physical attributes of different device are different often, which makes it possible to effectively recognize the model of device based on physical attributes, but acquiring physical attributes of devices on a large scale is difficult.

In this paper, we construct a feature set of 20 features, which are extracted from traffic (traffic features include GPU model, resolution, operating system and others) and physical attributes (such as device size and screen size as features). Recognizer identifies the model of target mobile device based on the features in the feature set. When some features of target device may not be available in the realistic work, Recognizer can still effectively recognize the model of mobile devices based on part features in the feature set.

Although we use some physical attributes in Recognizer, there are some applied scenarios in safeguarding the rights of consumers and the intellectual property of legitimate device manufacturers. For examples, for consumers, after purchasing a mobile phone, they are able to obtain the physical attributes and traffic features of the mobile phone, and identify whether the mobile phone is copycat device using Recognizer; regulators can use Recognizer to identify the mobile phones being sold at enforcement sites, thereby determining and collecting evidence of the violations, and protecting the intellectual property of legal device manufacturers.

The main contributions in this paper are as follows:

-

(1)

We build a recognition feature set Not only Recognizer, but also existing methods can use those features in feature set to recognize the model of mobile device.

-

(2)

We design new device feature similarity calculation rules According to the form of expression, the features are divided into two types: numerical features and string features. The similarity calculation rules are designed for the two types of features, which realizes the rapid measurement of the similarity between devices.

-

(3)

We propose device feature importance evaluation strategies We propose RFBR and RFMR strategies to assess the role of feature in recognition, as to select recognition features and determine the feature’s weights. Compared with existing strategy in which all feature weights are same in device recognition, it is more reasonable to determine weight according to the feature importance.

-

(4)

We propose Recognizer to recognize the model of mobile device Recognizer is able to use features in the feature set to recognize the model of target mobile device. When using all 20 features in feature set, the average recognition accuracy of Recognizer is 99.08%, an improvement of 9.25% over existing methods. And when using any 13 features in the feature set, the average recognition accuracy of Recognizer is 92.08%, an improvement of 29.26% over existing methods. We believe that Recognizer has good application prospects.

The remainder of this paper is organized as follows: In “Related work” section, we will introduce the existing IoT device recognition methods. In “Our method: Recognizer” section, the principle and steps of Recognizer will be introduced in detail. The validity of RFBR and RFMR will be analyzed in “Analysis of Recognizer” section. In “Results and analysis of experiments” section, we will performance experiments to verify the effectiveness of Recognizer. In Recognizer, we use some physical features, but there may be a limit to obtain all physical features at the same time in actual. Therefore, we will discuss the relationship between recognition accuracy and the number of features in “Results and analysis of experiments” section. Finally, we conclude our work in “Conclusion” section.

Related work

Currently, there are three main research directions for device recognition: traffic-based device recognition methods, network-search-based device recognition methods, and physical-information-based device recognition methods.

In traffic-based device recognition methods, the authors mine and analyze the attribute information (such as ports, protocols, banners, etc.) in active measurement traffic, and the behavior features (such as packet length, sending interval, and statistical feature of data flow) in passive monitor traffic. After that, the authors build a device fingerprint database or train device-recognition classifiers to realize the discrimination of the device type. In Nmap8, the device’s network ports are extracted from the active measurement traffic, and the device fingerprint is calculated by those port results. Based on these device fingerprints, Nmap tool identifies the service type and operating system of device. After that, Durumeric et al.9 develop Zmap, which greatly accelerate the device information collection before recognition. So, Zmap improve the recognition efficiency of network devices. In paper10,11,12,13,14, the authors extract the IP, port, flag bits, and others in the header of the TCP packet as features, and use machine learning algorithms to train the recognition classifiers, thereby realizing the discrimination of the device type. Those methods in papers15,16,17,18 extract features from the protocol data of each layer from passive monitor traffic to form device fingerprints, and identify the type of target device by matching fingerprint. With slight differences in the previously mentioned methods, those methods in paper19,20,21 extract features (such as packet time, length, port, DNS protocol, etc.) from the network layer data and application layer data in traffic, and use a variety of machine learning algorithms to build a phased recognition classifier to identify device. Cheng et al.22 recognize the device according to the difference between the file headers of devices in active measurement traffic. To improve the security in training model, He et al.23 build a recognition model based on federal learning. To improve the usability of the recognition model, Jiao et al.24 propose a multi-level identification framework to decrease the updating frequency of recognition model when training data is updated. In addition, the methods in25,26 do not need to extract features from traffic and eliminates the influence of features extraction behavior during the recognition process. The traffic-based device recognition method can easily obtain the measurement data, and can identify device types and brands in batches in normal network environment. In27, the authors summarize the traffic-based device recognition methods. In real life, the systems, built based on this type of methods, such as Shodan28, ZoomEye29, Censys30, and Quake31 have been widely used. However, because same categories of devices with one brand often use same protocol to transmit data, the difference between these devices in traffic is not obvious. This kind of methods is difficult to effectively recognize fine-grained model of device.

In network-search-based device recognition methods, the authors use the Internet crawlers to acquire device information from Internet resources such as URL (Uniform Resource Locator) strings and Web pages, so as to construct a device database for device recognition. Li et al.32 implement a device recognition algorithm based on the GUI (Graphical User Interface) information in the web pages of camera devices, and found about 1.6 million camera devices. Zou et al.33 establish an IoT device recognition framework. In this framework, Zou et al. built a device database based on the devices’ attributes in the IoT device protocol slogan, and then realized the hierarchical recognition of device. Agarwal et al.34 develop a tool named WID to recognize the device from the source code of webpages and subpages of devices. ARE35 could search for special slogans in device webpage, and obtained the device description information from the device annotation to identify device. Those methods can recognize the model of device without building a device fingerprint database or training machine learning classifier. However, because the reliability of Web resources is difficult to evaluate and the webpages’ structure of search results is diverse, extracting reliable device information from webpages is complex. This kind of methods are not easy to implement, and the recognition accuracy of those methods is limited in practical work.

In physical-attributes-based device recognition methods, the authors recognize the type of device based on the difference in the physical characteristics. Guo et al.36 analyze the structure characteristics of device physical addresses and recognize the type of device based on the device’s MAC (Media Access Control) addresses. The methods in paper37,38,39,40,41,42,43 use the time offset characteristics of “the clock of each device is unique, and the deviation still exists after being calibrated by the NTP (Network Time Protocol)” to recognize IoT devices. Radhakrishnan44 found that the device hardware clock deviation would lead to differences in network behavior. So, Radhakrishnan designs a fingerprint generation algorithm using neural network, named GTID, to identify device types. The device recognition methods based on physical attributes can identify the model of device. Especially, the recognition methods based on the clock offset characteristic, greatly improve the recognition accuracy. However, due to the interference in network, precise time offset of device is difficult to obtain, causing low recognition accuracy of these methods in the actual network. At the same time, measuring the device’s clock offset is not easy, which also limits the widely application of these methods.

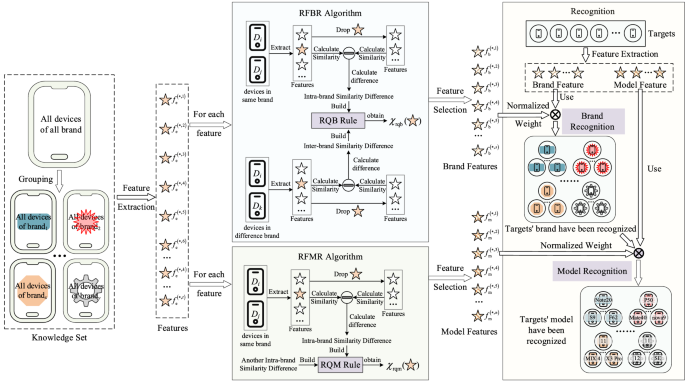

In this paper, we extract traffic attributes such as GPU model, resolution and operating system, as well as the physical attributes such as device size and screen size, and propose Recognizer, a high accuracy model recognition method of mobile device based on weighted feature similarity. Recognizer extracts the common attributes of all mobile devices as features, and formulates feature similarity calculation rules according to feature expression, so as to measure the similarity between different devices. At the same time, we design the features importance evaluation strategies to assess the role of each feature in brand recognition (we call this strategy “RFBR”) and model recognition (we call this strategy “RFMR”). In RFBR and RFMR, the weight of features will be determined. When the target device recognition is performed, brand recognition and model recognition are performed in sequence, so as to obtain the model of target device.

Our method: Recognizer

In this section, we will introduce the principles and steps of the Recognizer in detail.

Symbol description

f: feature. There are three kinds of feature: extracted feature fe, brand feature fb and model feature fm. Among them, fe is the feature extracted from the public attributes of the device, fb is the brand feature selected from the extracted features using the RFBR algorithm, and fm is the model feature selected from the extracted features using the RFMR algorithm. The general representations of the ith extracted feature, brand feature, and model feature are \(f_{{\text{e}}}^{{\left( { * ,i} \right)}}\), \(f_{{\text{b}}}^{{\left( { * ,i} \right)}}\), and \(f_{{\text{m}}}^{{\left( { * ,i} \right)}}\). For the device Di, the ith extracted feature, ith brand feature and ith model feature are denoted as \(f_{{\text{e}}}^{{\left( {D_{i} ,i} \right)}}\), \(f_{{\text{b}}}^{{\left( {D_{i} ,i} \right)}}\) and \(f_{{\text{m}}}^{{\left( {D_{i} ,i} \right)}}\) respectively.

F: feature vector. There are three kinds of feature vector: extraction feature vector Fe, brand feature vector Fb and model feature vector Fm. Among them, Fe is a vector composed of extracted features fe, \({\mathbf{F}}_{{\text{e}}} = \left[ {f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} , \ldots } \right]\). Fb is a vector composed of brand features fb, \({\mathbf{F}}_{{\text{b}}} = \left[ {f_{{\text{b}}}^{{\left( { * ,1} \right)}} ,f_{{\text{b}}}^{{\left( { * ,2} \right)}} , \ldots } \right]\). Fm is a vector composed of model features fm, \({\mathbf{F}}_{{\text{m}}} = \left[ {f_{{\text{m}}}^{{\left( { * ,1} \right)}} ,f_{{\text{m}}}^{{\left( { * ,2} \right)}} , \ldots } \right]\). For the device Di, the ith extracted feature vector, ith brand feature vector and ith model feature vector are denoted as \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}}\) (\({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{e}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,2} \right)}} , \ldots } \right]\)), \({\mathbf{F}}_{{\text{b}}}^{{\left( {D_{i} } \right)}}\) (\({\mathbf{F}}_{{\text{b}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{b}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{b}}}^{{\left( {D_{i} ,2} \right)}} , \ldots } \right]\)) and \({\mathbf{F}}_{{\text{m}}}^{{\left( {D_{i} } \right)}}\) (\({\mathbf{F}}_{{\text{m}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{m}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{m}}}^{{\left( {D_{i} ,2} \right)}} , \ldots } \right]\)) respectively.

\(S\left( {f^{{\left( {D_{i} ,k} \right)}} ,f^{{\left( {D_{j} ,k} \right)}} } \right)\): similarity function between two device features, \(0 \le S\left( {f^{{\left( {D_{i} ,k} \right)}} ,f^{{\left( {D_{j} ,k} \right)}} } \right) \le 1\). The similarity functions of the kth extracted feature, brand feature and model feature of device Di and Dj are denoted as \(S\left( {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right)\), \(S\left( {f_{{\text{b}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{b}}}^{{\left( {D_{j} ,k} \right)}} } \right)\) and \(S\left( {f_{{\text{m}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{m}}}^{{\left( {D_{j} ,k} \right)}} } \right)\).

\({\mathbf{S}}\left( {{\mathbf{F}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}^{{\left( {D_{i} } \right)}} } \right)\): similarity function vector. \({\mathbf{S}}\left( {{\mathbf{F}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}^{{\left( {D_{i} } \right)}} } \right) = \left[ {S\left( {f^{{\left( {D_{i} ,1} \right)}} ,f^{{\left( {D_{j} ,1} \right)}} } \right),S\left( {f^{{\left( {D_{i} ,2} \right)}} ,f^{{\left( {D_{j} ,2} \right)}} } \right), \ldots } \right]\). The similarity function vectors of the extracted feature vector, brand feature vector and model feature vector of device Di and Dj are denoted as \({\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} } \right)\), \({\mathbf{S}}\left( {{\mathbf{F}}_{{\text{b}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{b}}}^{{\left( {D_{j} } \right)}} } \right)\) and \({\mathbf{S}}\left( {{\mathbf{F}}_{{\text{m}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{m}}}^{{\left( {D_{j} } \right)}} } \right)\).

\({\mathbf{F}}\backslash f^{{\left( { * ,i} \right)}}\): result of removing \(f^{{\left( { * ,i} \right)}}\) from the feature vector F. For the extracted feature vector \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}}\) of device Di, if \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{e}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,2} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,3} \right)}} } \right]\), then \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,2} \right)}} = \left[ {f_{{\text{e}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,3} \right)}} } \right]\).

Ba: collection of all devices whose brand is a, \(B_{{\text{a}}} = \left\{ {D_{1} ,D_{2} , \ldots } \right\}\). \(\left| {B_{{\text{a}}} } \right|\) is the number of elements in Ba.

B: Collection of all device brands, \({\mathbf{B}} = \left\{ {B_{{\text{a}}} ,B_{{\text{b}}} , \ldots } \right\}\). M is the size of B, \(M = \left| {\mathbf{B}} \right|\).

\({\mathbf{B}} - \left\{ {B_{i} } \right\}\): result of removing Bi from B. if \({\mathbf{B}} = \left\{ {B_{{\text{a}}} ,B_{{\text{b}}} ,B_{{\text{c}}} } \right\}\), then \({\mathbf{B}} - \left\{ {B_{{\text{b}}} } \right\} = \left\{ {B_{{\text{a}}} ,B_{{\text{c}}} } \right\}\).

\(\overrightarrow {(t)}\): this is a t-dimensional row vector, and each value in the vector is 1/t. For example, \(\overrightarrow {(2)} = [0.5,0.5]\).

\(\min \left| {(a - b),\varepsilon } \right|\): minimum of \(\left| {a - b} \right|\), \(\left| {a - b + \varepsilon } \right|\), \(\left| {a - b - \varepsilon } \right|\).

Principles and steps of Recognizer

Recognizer first extracts the common attributes of all mobile devices as features, and formulates similarity calculation rules according to the expression of extracted features. Then, we propose RFBR and RBMR strategies to assess the role of each feature in brand recognition and model recognition for feature selection and weight determination. Finally, Recognizer uses the target’s features to identify the brand and model. The framework of Recognizer is shown in Fig. 1.

There are 7 steps in Recognizer, as follows:

-

Step 1 Group devices. All devices in the knowledge set are grouped by device brand. In each group, all devices’ brands are same.

-

Step 2 Extract feature. In each group, we extract the common attributes of all devices as brand attributes. If all devices in all groups own one attribute, this attribute will be as a feature.

-

Step 3 Calculate similarity between two features. According to the form of extracted feature, we divide the extracted features into numerical features and string features. For each feature form, we build the feature similarity calculation strategy.

-

Step 4 Select brand feature. Based on the effect of each feature on the similarity between same-brand devices and the similarity between devices with different brands, we propose RFBR strategy to quantify the importance of each feature in brand recognition, and the importance value is expressed as \({\chi }_{\mathrm{rqb}}\). Those features, whose \({\chi }_{\mathrm{rqb}}\) is greater than 0, will be selected as brand features. And the value of \({\chi }_{\mathrm{rqb}}\) is as the weight of brand feature.

-

Step 5 Select model feature. Because one model only corresponds to one mobile device, there is no similarity between devices with same model. So, it is unreasonable to use RFBR strategy for model feature selection. According to the effect of feature on same-brand devices and the difference of effect on all brands, we propose RFMR strategy to quantify the importance of each feature in model recognition, and the importance value is expressed as \({\chi }_{\mathrm{rqm}}\). Those features, whose \({\chi }_{\mathrm{rqm}}\) is greater than 0, are selected as model feature. And \({\chi }_{\mathrm{rqm}}\) is the weight of feature.

-

Step 6 Normalize weights. All weights of brand features obtained in Step 4 and all weights of model features obtained in Step 5 are normalized respectively.

-

Step 7 Recognize target’s model. We obtain brand features and model features from target mobile device. After recognizing the brand of target according to brand features and brand features’ weights, the model features and model features’ weights are used to identify the model of target.

Key steps of Recognizer

Among all steps of Recognizer, Step 3, 4, 5, 7 are key steps. These key steps are described in detail as follows.

-

(1)

Calculate similarity between two features.

We divide the extracted features into numerical features and string features. For a feature, if the feature value is a numeric value obtained by measurement tool and there is an inevitable measurable error due to the precision limitation of the measurement tool, the feature is a numeric feature (e.g., length); otherwise, the feature is a string feature (e.g., operating system).

We measure the similarity between two numerical features based on the difference value between two values, while the similarity between two string features is determined based on the inclusion relationship between two strings. Certainly, although a number can be considered as a string, it is not reasonable to calculate the similarity between two numerical features based on the inclusion relationship. For example, for two numeric features f1 (value is 1000) and f2 (value is 999), if f1 and f2 are regarded as string features, the similarity value between f1 and f2 is 0. Obviously, it is unreasonable. Therefore, for two types of features, we design two strategies to calculate the similarity between features, respectively, as follows.

-

(a)

Numerical feature similarity strategy

For numerical features, the smaller the difference in two feature values, the more similar the two features are. But, due to the error in measurement, there is a deviation between the measurement value and the actual value. So, considering the measurement error in numerical feature similarity strategy is more reasonable, which could reduce the effect of measurement error when calculating similarity between two numerical features. According to this, we define (1) and (2) as numerical feature similarity calculation rules.

If the kth extracted features of base device Di and target device Dj are one-dimensional numerical features, the similarity between \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is calculated by (1).

In (1), \(\varepsilon\) is the measurement error threshold, and \(\left| {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \right|\) is the absolute value of numerical feature.

If \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) are multi-dimensional numerical features, the dimensional similarity is calculated for the values in each dimension, and the feature similarity is the product of all dimensional similarities. If \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} = (v_{k,1}^{{\left( {D_{i} } \right)}} , \ldots ,v_{k,s}^{{\left( {D_{i} } \right)}} )\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} = (v_{k,1}^{{\left( {D_{j} } \right)}} , \ldots v_{k,s}^{{\left( {D_{j} } \right)}} )\), the similarity between the target feature \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and base feature \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is calculated using (2).

When calculating the feature similarity according to (1) and (2), if \(\left| {f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right| > 2\left| {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \right|\) or \(\left| {v_{k,t}^{{\left( {D_{j} } \right)}} } \right| > 2\left| {v_{k,t}^{{\left( {D_{i} } \right)}} } \right|\), it indicates that the difference between two numerical feature values (or two values in a certain dimension) is too large. In this case, we think that the two numerical features are not similar, the feature similarity value is set as 0.

-

(b)

String feature similarity strategy

Since each string represents a specific meaning, each string is considered as a whole to calculate the similarity. In Recognizer, according to the number of strings in string feature, the string features are divided into single-string feature and multi-strings feature. We define (3) and (4) as string feature similarity rules.

If the kth extracted features \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) of devices Di and Dj are single-string features, we calculate the feature similarity between \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) according to (3).

When \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} \in f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) or \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} \in f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\), we think that the feature value is incomplete. In this case, the feature similarity value is set to 0.8 (this is an experience value).

If \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) are multi-strings features, we construct vector space with \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} \cup f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\), and vectorize \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\). At this time, the cosine similarity between two vectors is the similarity between two multi-strings features. For example, if \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} = \left\{ {str1,str2} \right\}\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} = \left\{ {str2,str3} \right\}\), the vector space is \(\left\{ {str1,str2,str3} \right\}\). At this time, the vectorization result of \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is \([1,1,0]\), and the vectorization result of \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) is [0, 1, 1]. We denote the vectorization results of \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) as \(V_{j,k}\) and \(V_{i,k}\) respectively, then the feature similarity between \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is calculated by (4).

-

(2)

Select brand feature

In Recognizer, we use RFBR strategy for brand feature selection. So, we describe RFBR in detail here.

Assuming that \(f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}}\) are all extraction features, then extract the feature vector \({\mathbf{F}}_{{\text{e}}} = [f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}} ]\). For each extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), \(1 \le m \le n\), \({\mathbf{F}}_{{\text{e}}}^{\prime } = {\mathbf{F}}_{{\text{e}}} \backslash f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), we calculate the mean of intra-brand similarity increments according to (5).

In (5), \(D_{i} ,D_{j} \in B_{k}\), \(B_{k} \in {\varvec{B}}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), and \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{j} ,m} \right)}}\). Meanwhile, we calculate the mean of inter-brand similarity increments according to (6).

In (6), \(D_{i} \in B_{k}\), \(B_{k} \in {\mathbf{B}}\), \(D_{l} \in {\varvec{B}} - \left\{ {B_{k} } \right\}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), and \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{l} ,m} \right)}}\). Due to \(0 \le S(f^{{\left( {D_{i} ,k} \right)}} ,f^{{\left( {D_{j} ,k} \right)}} ) \le 1\), according to (5) and (6), we can obtain (7).

According to \(\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\) and \(\delta \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\), we design a rule (we name it RQB rule, as 8) to quantifying the feature-differentiation in brand recognition to assess the role of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) in brand recognition.

In (8), \(\alpha\) is an adjustable parameter, \(\alpha \in [0,1]\). If \(\chi_{{{\text{rqb}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} ) > 0\), \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) will be selected as the brand feature, and the weight of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) is \(\chi_{{{\text{rqb}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\).

-

(3)

Select model feature

In Recognizer, we use RFMR for model feature selection. So, we describe the process of RFMR in detail here.

Assuming that \(f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}}\) are all extraction features, then extract the feature vector \({\mathbf{F}}_{{\text{e}}} = [f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots f_{{\text{e}}}^{{\left( { * ,n} \right)}} ]\). For each extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), \(1 \le m \le n\), \({\mathbf{F}}_{{\text{e}}}^{\prime } = {\mathbf{F}}_{{\text{e}}} \backslash f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), we calculate \(\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\) according to (5), and calculate the incremental standard deviation of intra-brand similarity according to formula (9).

In (9), \(D_{i} ,D_{j} \in B_{k}\), \(B_{k} \in {\mathbf{B}}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{j} ,m} \right)}}\).

According to \(\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\) and \(\gamma \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\), we design a rule (we name it RQM rule, as 10) to quantifying the feature-differentiation in model recognition to assess the role of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) in model recognition.

In (10), \(\beta\) is an adjustable parameter, \(\beta \in [0.5,1]\). If \(\chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} ) > 0\), \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) will be selected as the model feature, and the weight of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) is \(\chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\).

-

(4)

Recognize device type

In device type recognition, there are two parts: brand recognition and model recognition. We first perform brand recognition on the target device, and then perform model recognition.

In brand recognition, brand features and normalized weights are used in (11) to calculate the similarity between target device and known devices.

In (11), T is the target device, Ki is one known device in the knowledge set, and \({\mathbf{W}}\left( {{\mathbf{F}}_{{\text{b}}} } \right)\) is the standardized weight vector of brand feature. In knowledge set, the brand of known device with the greatest similarity with target device is taken as the brand of target device. So as to realize brand recognition of the target device.

In model recognition, model features and normalized weights are used in (12) to calculate the similarity between target device and known devices. At this time, the brand of known devices is same with target device.

In (12), T is the target device, Ki is one known device in the knowledge set (the brand of Ki is same with target device), and \({\mathbf{W}}\left( {{\mathbf{F}}_{{\text{m}}} } \right)\) is the standardized weight vector of model feature. The model of known device with the greatest similarity with target device is taken as the model of target device. So as to realize model recognition of the target device.

Analysis of Recognizer

In Recognizer, the brand features, model features and weights directly affect the accuracy of the recognition. We select brand features, model features and obtain their weights based on RFBR and RFMR strategies. Thus, in this section, we will analyze the rationality of RFBR and RFMR strategies.

Rationality analysis of RFBR strategy

RFBR strategy is used to quantify the importance of extracted features in brand recognition, and to select brand features and determine weights.

According to the research of Fu et al.45, the judgment criterion, that the selected feature is effective, is that the selected features can increase the difference between classes (we denote this criterion as effective feature selection criterion, abbreviated as EFS criterion). Therefore, in Recognizer, if one extracted feature could be selected as a brand feature, this extracted feature should be able to increase the difference between devices with different brands. In brand feature selection, there are two cases meeting EFS criterion: (1) For one extracted feature, if the feature can increase the similarity between devices with same brand and reduce the similarity between those devices with different brands, this extracted feature will be selected as brand feature. This is the optimal case. (2) For one extracted feature, if the feature can simultaneously increase the similarity between devices with same brand and similarity between those devices with different brands, and the ratio, between inter-brand-similarity increments and intra-brand-similarity increments, is less than threshold, this extracted feature will also be selected as brand feature. This is an acceptable case.

Assuming that \(f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}}\) are all extraction features, then extract the feature vector \({\mathbf{F}}_{{\text{e}}} = [f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}} ]\). \(\forall D_{i} ,D_{j} \in B_{k} ,B_{k} \in {\mathbf{B}}\), \(\forall D_{l} \in {\mathbf{B}} - \left\{ {B_{k} } \right\}\), \({\varvec{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\varvec{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), \({\varvec{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} = {\varvec{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{j} ,m} \right)}}\), \({\varvec{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)\prime }} = {\varvec{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{l} ,m} \right)}}\). For each extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) (\(1 \le m \le n\)), the similarity increment \(s(f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\) between any two devices with same brand and the similarity increment \(d(f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\) between any two devices with different brands are shown in (13).

If the extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion, then there is

or

In (14) and (15), \(\lambda > 0\). Combining Eqs. (5), (6), (14) and (15), we obtain (16).

Equation (16) shows that if \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion, then (16) is correct. Similarly, if (16) is correct, \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion.

In Recognizer, we use RQB rule to obtain the value of \({\chi }_{\mathrm{rqb}}\) of extracted feature. When the value of \({\chi }_{\mathrm{rqb}}\) is greater than 0, the extracted feature will be selected as brand feature. The larger the \({\chi }_{\mathrm{rqb}}\) value, the greater the feature weight. We can obtain (17) according to the RQB rule (8).

That means \(\chi_{{{\text{rqb}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} ) > 0\) is equivalent to that \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion.

The above analysis shows that RQB rule comply with EFS criteria, RFBR strategy can be used to evaluate the role of each feature in brand recognition, and it is reasonable to use RFBR strategy to select brand features. The value of features can be a significant reference for the selection of brand feature.

Rationality analysis of RFMR strategy

Because one model only corresponds to one mobile device, there is no similarity between devices with same model. So, it is unreasonable to use RFBR strategy for model feature selection. For that, we use RFMR strategy to quantify the role of each feature in model recognition, and to select model features and determine weights. According to the EFS criterion, if one feature can be selected as model feature, the feature should be helpful to distinguish different models of device with same brand. This means that the similarity between two different model devices with same brand could be decreased after using this feature.

Assuming that \(f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}}\) are all extraction features, then extract the feature vector \({\mathbf{F}}_{{\text{e}}} = [f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}} ]\). \(\forall B_{k} \in {\mathbf{B}}\), \(\forall D_{i} ,D_{j} \in B_{k}\), \({\varvec{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\varvec{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), \({\varvec{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} = {\varvec{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{j} ,m} \right)}}\). For each extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) (\(1 \le m \le n\)), the similarity increments \(s(f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\) between any two devices with same brand is shown in (18).

If \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion, then

Equation (19) shows that if \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion, then (19) is correct. Similarly, if (19) is correct, \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion.

In Recognizer, we use RQM rule to obtain the value of \({\chi }_{\mathrm{rqm}}\) of extracted feature. When the value of \({\chi }_{\mathrm{rqm}}\) is greater than 0, the extracted feature will be selected as model feature. The larger the \({\chi }_{\mathrm{rqm}}\) value, the greater the feature weight. We can obtain (20) according to the RQM rule (10).

That means \(\chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} ) > 0\) is equivalent to that \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) satisfies the EFS criterion.

At the same time, the incremental standard deviation of intra-brand similarity is another factor to assess the role of extracted feature in model recognition. According to (10), when the \(\varphi\) values of two brand features are same, the bigger the \(\gamma\) is, the smaller the \({\chi }_{\mathrm{rqm}}\) is. Since the standard deviation can be used to measure the degree of dispersion of data, the smaller the standard deviation, the more stable the data. Thus, when \(\varphi (f_{{\text{e}}}^{{\left( { * ,i} \right)}} ) = \varphi (f_{{\text{e}}}^{{\left( { * ,j} \right)}} )\), if \(\gamma (f_{{\text{e}}}^{{\left( { * ,i} \right)}} ) < \gamma (f_{{\text{e}}}^{{\left( { * ,j} \right)}} )\), \(\chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,i} \right)}} ) > \chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,j} \right)}} )\). It shows that compared with \(f_{{\text{e}}}^{{\left( { * ,j} \right)}}\), \(f_{{\text{e}}}^{{\left( { * ,i} \right)}}\) can stably reduce the similarity between any two devices with same brand but different models. Thus, compared with \(f_{{\text{e}}}^{{\left( { * ,j} \right)}}\), \(f_{{\text{e}}}^{{\left( { * ,i} \right)}}\) plays a better role in model recognition.

The above analysis shows that RQM rule comply with EFS criteria, RFMR strategy can be used to evaluate the role of each feature in model recognition, and it is reasonable to use RFMR strategy to select model features. The value of features can be a significant reference for the selection of model feature.

Results and analysis of experiments

In this section, we first introduce our experimental dataset. On this dataset, we carry out three experiments: (1) experiment on selecting brand features and determining weights, (2) experiment on selecting model features and determining weights, (3) experiment on device recognition using Recognizer and other methods.

Experimental dataset

In this section, the experimental dataset includes 587 models of mobile phone devices from 17 brands. The brands and models of mobile devices are shown in Table 1.

We extract 21 common attributes from devices in Table 1 as extracted features: device dimensions, device weight, display size, screen-to-body ratio, resolution, display density, chipset, GPU, internal memory, memory card, operating system, battery capacity, battery charging, selfie camera, main camera, network technology, SIM, WLAN, NFC, Bluetooth, GPS. According to the form of extracted features, these features are numerical features: device dimensions, device weight, display size, screen-to-body ratio, resolution, display density, battery capacity, NFC, Bluetooth (where the device dimensions and resolution are multi-dimensional numerical features). And those extracted features are string features: chipset, GPU, internal memory, memory card, operating system, battery charging, selfie camera, main camera, network technology, SIM, WLAN, GPS (among which, battery charging, selfie camera, main camera, WLAN and GPS are multi-strings features). In our experiments, we set ε = 1 in calculating similarity between two numerical features.

Our dataset contains two parts: knowledge set and target set. The knowledge set and target set all include 587 different models of mobile devices, and the difference is: for any feature of mobile phone device, if there are multiple possible feature values, the target set only includes one possible value of the device. This means that the size of knowledge set is 587, but the size of target set is not less than 587. For example, the internal memory of iPhone 12 Pro has three possible values: “6 GB + 128 GB”, “6 GB + 256 GB” and “6 GB + 512 GB”.

Thus, in the knowledge set, the internal memory value of iPhone 12 Pro is “6 GB + 128 GB; 6 GB + 256 GB; 6 GB + 512 GB”. But in the target set, there will be three items about “iPhone 12 Pro” devices at least, and the internal memory of those “iPhone 12 Pro” is one of “6 GB + 128 GB”, “6 GB + 256 GB” and “6 GB + 512 GB”. The device item number of different brands in knowledge set and target set are shown in Table 2.

Selecting brand features and determining weights

For each extracted feature, we calculate the average intra-brand similarity increment between two devices with same brand, and the inter-brand similarity increment between two devices with different brands. The effect of each extracted feature on the similarity of the devices with same brand and different brands is shown in Fig. 2.

The effect of each extracted feature on the similarity of the devices with same brand and different brands. The size of circle presents absolute value of similarity increment. The bigger the circle is, the larger the value is. The solid circle indicates a positive value, and the hollow circle indicates a negative value.

As can be seen from Fig. 2, some extracted features, such as device dimensions, device weight, screen-to-body ratio, etc., can not only increase intra-brand similarity, but also increase inter-brand similarity. The reason may be that when manufacturers design mobile phones, they usually draw on the attributes of other brands, resulting in the similarity in some extracted features of phones with different brands. Therefore, in the acceptable case of EFS criterion, the extracted features, such as device dimensions, device weight and screen-to-body ratio, may be able to be selected as brand features.

We set \(\alpha =0.8\). According to (5), (6) and (8), we calculate the average intra-brand similarity increment (\(\varphi\)), the average inter-brand similarity increment (\(\delta\)), value of \({\chi }_{\mathrm{rqb}}\), and normalized weight (\({\omega }_{\mathrm{b}}\)). The results are shown in Table 3.

Table 3 shows that those extracted features are selected as brand features: device dimensions, device weight, display size, screen-to-body ratio, resolution, display density, memory card, battery capacity, SIM, WLAN, NFC, Bluetooth, and GPS. In device recognition experiment in this paper, those brand features will be used to recognize the brand of target device.

Selecting model features and determining weights

For each extracted feature, we calculate the standard deviation of intra-brand similarity increment, and the result is shown in Fig. 3.

For two extracted features, when average intra-brand similarity increments (\(\varphi\)) are same, the extracted feature with smaller standard deviation could stably decrease intra-brand similarity for all brand.

We set \(\beta =0.8\). For each extracted feature, according to (9) and (10), we calculate the standard deviation of intra-brand similarity increment (\(\gamma\)), value of \({\chi }_{\mathrm{rqm}}\), and normalized weight (\({\omega }_{\mathrm{m}}\)). The calculation results are shown in Table 4. The \(\varphi\) value of each extracted feature has been calculated in subsection Selecting brand features and determining weights, thus, we directly use the \(\varphi\) value in subsection Selecting brand features and determining weights when calculating \({\chi }_{\mathrm{rqm}}\) here.

Table 4 shows that those extracted features are selected as model features: chipset, GPU, internal memory, operating system, battery charging, selfie camera, main camera. In device recognition experiment in this paper, those model features will be used to recognize the model of target device.

According to subsections Selecting brand features and determining weights and Selecting model features and determining weights, we build a feature set including 20 features. Those features are: device dimensions, device weight, display size, screen-to-body ratio, resolution, display density, chipset, GPU, internal memory, memory card, operating system, battery capacity, battery charging, selfie camera, main camera, SIM, WLAN, NFC, Bluetooth, GPS.

Device recognition using Recognizer and other methods

In this subsection, Recognizer, ProfilIoT13, MSA20 and ByteIoT21 are used to recognize the brand and model of devices in the target set, respectively.

Firstly, all 20 features in feature set are used in model recognition of mobile device. The recognition accuracy values of four methods are shown in Table 5.

In Table 5, “Brand Acc” is the brand recognition accuracy, and the value in parentheses below the accuracy value is the number of devices whose brand recognition results are correct in target set. “Model Acc” is the model recognition accuracy, and the value in parentheses below the accuracy value is the number of devices whose model recognition results are correct in target set. It is worth noting the model recognition result of device must be wrong, when brand recognition result is wrong.

Table 5 shows that: (1) Recognizer, ProfilIoT, MSA and ByteIoT can recognize the brand and model of target device using our features. (2) the model recognition accuracy of Apple’s mobile phone is significantly lower than other brands using Recognizer. So are the other three methods. We analyze model recognition results and find that Recognizer mistakenly recognized the phone model as other phone models in same series, such as recognizing “iPhone 11 Pro Max” as “iPhone 11 Pro”, “iPhone 12 mini” as “iPhone 12”, “iPhone XS Max” as “iPhone XS”. We check feature values and found that values of all model features between misrecognized device model and true device model are same. That may be the internal reason of model misrecognition. (3) For all mobile phones in 17 brands, the brand recognition accuracy and model recognition accuracy are 99.97% and 99.08%, higher than existing methods, respectively.

Compared with the physical attributes, traffic features of device can be obtained easier. In our feature set, the resolution, operation system, and GPU of device can be obtained in the normal traffic. When only using the three traffic features, for different brands, the model recognition accuracy of Recognizer is shown in Table 6.

In Table 6, “Model Acc” is the model recognition accuracy. The results show that, when only using three traffic features, the model recognition accuracy of Recognizer is low. But we believe that more traffic features can improve the model recognition accuracy of Recognizer. Moreover, in some actual scenario, we can obtain some physical attributes of device (namely, all features in feature set may not be acquired simultaneously). Next, we use a part of features in the feature set to identify the brand and model of device.

We gradually reduce the number of used features from 19 to 2 (1 reduction each time). For each specific number of features (x0), we randomly select × 0 features from 20 features of all devices in target set, and the other (20-x0) features of all target devices are set null. In this way, we build 1 sample set (the size of sample set is equal to target set). For each x0, we build 1000 sample sets. Finally, Recognizer, ProfilIoT, MSA, and ByteIoT are used to recognize the brand and model of device in sample set. The relationship between recognition accuracy of the four methods and number of features is shown in Fig. 4.

Figure 4 shows that: (1) brand recognition accuracy and model recognition accuracy of Recognizer, ProfilIoT, MSA, and ByteIoT both decrease as the number of features decreases, and the recognition accuracy of Recognizer is greater than ProfilIoT, MSA, or ByteIoT. (2) When the number of features is less than 9, as the number of features decreases, both the brand recognition accuracy and model recognition accuracy of Recognizer decrease rapidly. (3) When using 13 features, the average model recognition accuracy of Recognizer is 92.08%, an improvement of 29.26% over existing methods.

The above experimental results show that the model recognition accuracy of Recognizer is 99.08% (+ 9.25%↑) when using all 20 features in feature set. And when using any 13 features in feature set, the accuracy of Recognizer is 92.08% (+ 29.26%↑). The model recognition accuracy of Recognizer is higher than existing methods. This characteristic, that using a part of features in feature set also has a high recognition accuracy, is conducive to the widespread use of Recognizer.

Conclusion

In this paper, we propose Recognizer, a method to recognize the model of mobile device based on weighted feature similarity. We build a feature set including 20 features firstly. Then, we design RFBR and RFMR strategies to select features from feature set, and determine the weight of each feature. Finally, for target mobile device, based on all or part features in feature set, Recognizer identifies the model of target mobile device. The experimental results show that not only Recognizer, but also existing methods can use the features in feature set to recognize the model of mobile device. And the model recognition accuracy of Recognizer is greater than other four methods. when using all features in feature set, the accuracy of Recognizer is 99.08% (+ 9.25%↑). And when using any 13 features in feature set, the accuracy of Recognizer is 92.08% (+ 29.26%↑). In the process of recognition, some physical attributes are used in Recognizer, and a few of these physical attributes may be obtained by in-touch. Therefore, compared with existing traffic-based methods, the range of applications of Recognizer is limited. In future work, how to use fewer and easier-to-obtain features to recognize the model of device will be an important research direction.

Data availability

The information of mobile device is obtained on https://www.gsmarena.com/search.php3.

References

Ji, X. et al. Authenticating smart home devices via home limited channels. ACM Trans. Internet Things 1(4), 24. https://doi.org/10.1145/3399432 (2020).

Veeranna, N. & Schafer, B. C. Hardware Trojan detection in behavioral intellectual properties (IP’s) using property checking techniques. IEEE Trans. Emerg. Top. Comput. 5(4), 576–585. https://doi.org/10.1109/TETC.2016.2585046 (2017).

Danev, B., Zanetti, D. & Capkun, S. On physical-layer identification of wireless devices. ACM Comput. Surv. 45(1), 29. https://doi.org/10.1145/2379776.2379782 (2012).

Su, S. et al. A reputation management scheme for efficient malicious vehicle identification over 5G networks. IEEE Wirel. Commun. 27(3), 46–52. https://doi.org/10.1109/MWC.001.1900456 (2020).

Sun, Y. et al. Honeypot identification in softwarized industrial cyber-physical systems. IEEE Trans. Ind. Inf. 17(8), 5542–5551. https://doi.org/10.1109/TII.2020.3044576 (2021).

Chai, Y., Du, L., Qiu, J., Yin, L. & Tian, Z. Dynamic prototype network based on sample adaptation for few-shot malware detection. IEEE Trans. Knowl. Data Eng. https://doi.org/10.1109/TKDE.2022.3142820 (2022).

Taylor, V. F., Spolaor, R., Conti, M. & Martinovic, I. Robust smartphone app identification via encrypted network traffic analysis. IEEE Trans. Inf. Forensics Secur. 13(1), 63–78. https://doi.org/10.1109/TIFS.2017.2737970 (2018).

Fyodor, B. The art of port scanning. Phrack Mag. 7(51), 11–17 (1997).

Durumeric, Z., Wustrow, E., Halderman, J. A.: ZMap: Fast internet-wide scanning and its security applications. In Proc. of USENIX Security '13, 605–620 (2013).

Donelson, S. F., Hernandez, C. F., Kevin, J. & David, O. What TCP/IP protocol headers can tell us about the web. ACM Sigmetrics Perform. Eval. Rev. 29(1), 245–256. https://doi.org/10.1145/378420.378789 (2001).

Li, R., Xu, R., Ma, Y. & Luo, X. LandmarkMiner: Street-level network landmarks mining method for IP geolocation. ACM Trans. Internet Things 2(3), 22. https://doi.org/10.1145/3457409 (2021).

Khakpour, A. R., Hulst, J. W., Ge, Z., Liu, A. X., Pei, D., Wang, J. Firewall fingerprinting. In Proc. of IEEE INFOCOM '12, 1728–1736 (2012). https://doi.org/10.1109/INFCOM.2012.6195544.

Meidan, Y., Bohadana, Shabtai, A., Guarnizo, J. D., Ochoa, M., Tippenhauer, N. O., Elovici, Y. ProfilIoT: A machine learning approach for IoT device identification based on network traffic analysis. In Proc. of SAC '17, 506–509 (2017). https://doi.org/10.1145/3019612.3019878.

Yang, K., Li, Q. & Sun, L. Towards automatic fingerprinting of IoT devices in the cyberspace. Comput. Netw. 148, 318–327. https://doi.org/10.1016/j.comnet.2018.11.013 (2019).

Caballero, J., Yin, H., Liang, Z., Song, D.: Polyglot: Automatic extraction of protocol message format using dynamic binary analysis. In Proc. of ACM CCS '07, 317–329 (2007). https://doi.org/10.1145/1315245.1315286.

Wondracek, G., Comparetti, P. M., Krügel, C., Kirda, E. Automatic network protocol analysis. In Proc. of NDSS '08, 1–14 (2008).

Miettinen, M., Marchal, S., Hafeez, I., Frassetto, T., Asokan, N., Sadeghi, A. R., Tarkoma, S. IoT sentinel demo: Automated device-type identification for security enforcement in IoT. In Proc. of IEEE ICDCS '17, 2177–2184 (2017). https://doi.org/10.1109/ICDCS.2017.284.

Cui, A., Stolfo, S. J. A quantitative analysis of the insecurity of embedded network devices: Results of a wide-area scan. In Proc. of ACSAC '10, 97–106 (2010). https://doi.org/10.1145/1920261.1920276.

Sivanathan, A., Sherratt, D., Gharakheili, H. H., Radford, A., Wijenayake, C., Vishwanath, A., Sivaraman, V. Characterizing and classifying IoT traffic in smart cities and campuses. In Proc. of IEEE INFOCOM WKSHPS '17, 559–564 (2017). https://doi.org/10.1145/10.1109/INFCOMW.2017.8116438.

Sivanathan, A. et al. Classifying IoT devices in smart environments using network traffic characteristics. IEEE Trans. Mob. Comput. 18(8), 1745–1759. https://doi.org/10.1109/TMC.2018.2866249 (2019).

Duan, C., Gao, H., Song, G., Yang, J. & Wang, Z. ByteIoT: A practical IoT device identification system based on packet length distribution. IEEE Trans. Netw. Serv. Manag. 19(2), 1717–1728. https://doi.org/10.1109/TNSM.2021.3130312 (2022).

Cheng, W. et al. RAFM: A real-time auto detecting and fingerprinting method for IoT devices. J. Phys. Conf. Ser. 1518(1), 7. https://doi.org/10.1088/1742-6596/1518/1/012043 (2020).

He, Z. et al. Edge device identification based on federated learning and network traffic feature engineering. IEEE Trans. Cognit. Commun. Netw. https://doi.org/10.1109/TCCN.2021.3101239 (2021).

Jiao, R., Liu, Z., Liu, L., Ge, C., Hancke, G. Multi-level IoT device identification. In Proc. of IEEE ICPADS '21, 538–547 (2021). https://doi.org/10.1109/ICPADS53394.2021.00073.

Thom, J., Thom, N., Sengupta, S., Hand, E. Smart recon: Network traffic fingerprinting for IoT device identification. In Proc. of IEEE CCWC '22, 72–79 (2022). https://doi.org/10.1109/CCWC54503.2022.9720739.

Charyyev, B. & Gunes, M. H. Locality-sensitive IoT network traffic fingerprinting for device identification. IEEE Internet Things J. 8(3), 1272–1281. https://doi.org/10.1109/JIOT.2020.3035087 (2021).

Jiang, Y., Li, Y. Sun, Y. Networked device identification: A survey. In Proc. of IEEE DSC '11, 543–548 (2021). https://doi.org/10.1109/DSC53577.2021.00086.

Shodan. Available at https://shadon.io/ (2022).

ZoomEye. Available at https://zoomeye.org/ (2022).

Censys. Available at https://censys.org/ (2022).

Quake. Available at https://quake.360.cn/ (2022).

Li, Q., Feng, X., Wang, H., Sun, L. Automatically discovering surveillance devices in the cyberspace. In Proc. of ACM MMSys '17, 331–342 (2017). https://doi.org/10.1145/3083187.3084020.

Zou, Y. et al. IoT device recognition framework based on web search. J. Cyber Secur. 3(4), 25–40. https://doi.org/10.19363/J.cnki.cn10-1380/tn.2018.07.03 (2018).

Agarwal, S., Oser, P. & Lueders, S. Detecting IoT devices and how they put large heterogeneous networks at security risk. Sensor 19(19), 11. https://doi.org/10.3390/s19194107 (2019).

Feng, X., Li, Q., Wang, H., Sun, L. Acquisitional rule-based engine for discovering internet-of-thing devices. In Proc. of USENIX Security '18, 327–341 (2018).

Guo, X., Li, X., Li, R., Wang, X., Luo, X. Network device identification based on MAC boundary inference. In Proc. of ICAIS '21, 697–712 (2021). https://doi.org/10.1007/978-3-030-78621-2_58.

Kohno, T., Broido, A. & Claffy, K. Remote physical device fingerprinting. IEEE Trans. Dependable Secure Comput. 2(2), 93–108. https://doi.org/10.1109/TDSC.2005.26 (2005).

Murdoch, S. J.: Hot or not: Revealing hidden services by their clock skew. In Proc. of ACM CCS '06, 27–36 (2006). https://doi.org/10.1145/1180405.1180410.

Zander, S., Murdoch, S. J. An improved clock-skew measurement technique for revealing hidden services. In Proc. of USENIX Security '08, 211–226 (2008).

Huang, D., Yang, K., Ni, C., Teng, W., Hsiang, T., Lee, Y. J.: Clock skew based client device identification in cloud environments. In Proc. of IEEE AINA '12, 526–533 (2012). https://doi.org/10.1109/AINA.2012.51.

Vanaubel, Y., Pansiot, J. J., Mérindol, P., Donnet, B.: Network fingerprinting: TTL-based router signatures. In Proc. of IMC '13, 369–376 (2013). https://doi.org/10.1145/2504730.2504761.

Feng, X., Li, Q., Han, Q., Zhu, H., Liu, Y., Cui, J., Sun, L. Active profiling of physical devices at internet scale. In Proc. of ICCCN '16, 1–9 (2016). https://doi.org/10.1109/ICCCN.2016.7568486.

Formby, D., Srinivasan, P., Leonard, A., Rogers, J., Beyah, R.: Who’s in control of your control system? Device fingerprinting for cyber-physical systems. In Proc. of NDSS '16, 1–15 (2016). https://doi.org/10.14722/ndss.2016.23142.

Radhakrishnan, S. V., Uluagac, A. S. & Beyah, R. GTID: A technique for physical device and device type fingerprinting. IEEE Trans. Dependable Secure Comput. 12(5), 519–532. https://doi.org/10.1109/TDSC.2014.2369033 (2014).

Fu, K. S., Min, P. J. & Li, T. J. Feature selection in pattern recognition. IEEE Trans. Syst. Sci. Cybern. 6(1), 33–39. https://doi.org/10.1109/TSSC.1970.300326 (1970).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. U1804263, 62172435), the Science and Technology Innovation Leading Talent Program of Central Plains (No. 214200510019), and General Program of the Natural Science Foundation of Henan (No. 222300420591).

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and model design. The first draft of the manuscript was written by L.R.. All authors commented on previous versions of the manuscript, and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

We declare that authors have no known competing interests or personal relationships that might be perceived to determine the discussion report in this paper.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, R., Wang, X. & Luo, X. High-accuracy model recognition method of mobile device based on weighted feature similarity. Sci Rep 12, 21865 (2022). https://doi.org/10.1038/s41598-022-26518-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-26518-y

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.