Abstract

Recently, electrochemical reduction of CO2 into value-added fuels has been noticed as a promising process to decrease CO2 emissions. The development of such technology is strongly depended upon tuning the surface properties of the applied electrocatalysts. Considering the high cost and time-consuming experimental investigations, computational methods, particularly machine learning algorithms, can be the appropriate approach for efficiently screening the metal alloys as the electrocatalysts. In doing so, to represent the surface properties of the electrocatalysts numerically, d-band theory-based electronic features and intrinsic properties obtained from density functional theory (DFT) calculations were used as descriptors. Accordingly, a dataset containg 258 data points was extracted from the DFT method to use in machine learning method. The primary purpose of this study is to establish a new model through machine learning methods; namely, adaptive neuro-fuzzy inference system (ANFIS) combined with particle swarm optimization (PSO) and genetic algorithm (GA) for the prediction of *CO (the key intermediate) adsorption energy as the efficiency metric. The developed ANFIS–PSO and ANFIS–GA showed excellent performance with RMSE of 0.0411 and 0.0383, respectively, the minimum errors reported so far in this field. Additionally, the sensitivity analysis showed that the center and the filling of the d-band are the most determining parameters for the electrocatalyst surface reactivity. The present study conveniently indicates the potential and value of machine learning in directing the experimental efforts in alloy system electrocatalysts for CO2 reduction.

Similar content being viewed by others

Introduction

Transportation, power plants, and energy-intensive industries are some of the industrial activities that commonly emit greenhouse gases including CO2, CH4, and NOx. Around 75% of greenhouse gas emissions are carbon dioxide emissions1, which contribute to the 1.5 °C rise in global temperature that is considered to be a reasonably large amount. As a result, during the past 20 years, economic sustainability has gained international attention, drawing experts in the field of the environment, decision-makers, and international organizations from many nations. The United Nations Framework Convention on Climate Change was founded in 1992 as a result of this phenomena. The Kyoto Protocol and the 2015 Paris Agreement were later created in 1997 and 2015, respectively, to fight global warming by regulating greenhouse gas emissions2.

Nowadays, one of the significant challenges in the energy sector that should be effectively addressed is the development of sustainable energy resources. This is because the current energy conversion technologies cannot meet the energy requirement3. The electrochemical reduction of carbon dioxide is a necessary process that uses electricity from renewables (solar, wind, etc.) to produce fuels or value-added chemicals from water and CO24. The *CO is the primary intermediate in the reduction of CO2. Indeed, as explained in Hori et al. study5, it is the only molecule having single carbon, which shows a product spectrum similar to CO2 on a copper electrode. In this process, the determining component in providing an efficient conversion relies on the electrocatalyst6,7. Several experiments and computations have been performed to find and prepare new materials as the electrocatalyst for the CO2 electroreduction8,9,10,11,12,13.

Therefore, understanding the surface chemical reactivity and the bond-breaking and -forming on the electrocatalyst’s surface is required to describe such a surface phenomenon. A significant volume of concepts has been established for the adsorption of the simple molecules onto the surface of transition-metal electrocatalysts14,15,16,17,18, among which the Sabatier principle is a main comprehensive concept19. It explains that the crucial reaction intermediate should have enough adsorption strength to increase the activity of the electrocatalyst; too strong or weak binding causes difficult product desorption and inadequate reactant activation, respectively. Thus, the reactivity as the function of binding energies can be described as a volcano-shaped plot20. Nørskov and co-workers, through a series of pioneering studies, claimed that the chemisorption of the adsorbate is severely dependent on the surface electronic structure (d-band theory21).

According to the d-band theory, the strength of the bond is given by the construction of the bonding and antibonding states, between the transition metal d states and the adsorbate valence states, and antibonding states energy comparative to the Fermi level (filling). The antibonding states are upper the d states, making the energy of the d states center a well initial representor of the bond strength. The greater d-band center in energy results in higher adsorbate–metal antibonding states, less occupation of antibonding states, and stronger adsorption bonds22. Although due to the recent improvements in electronic structure approaches (primarily density functional theory (DFT)), the d-band theory could provide understandings of the surface activity for the transition metals along with a minor group of alloys3,23,24,25,26,27,28,29, it could not explain the measured activity in some cases30,31,32. This could be probably since the spread in energy states was not considered. The definition of parameters such as the upper edge of the d-band (Eu) and the d-band width (Wd) by using Hilbert transform of the projected density of states (DOS) has improved the correlation between the activity and the d-band center31.

Inspired by the d-band chemisorption theory, the thought is to develop a computational relation between the electronic and intrinsic properties (descriptors) of clean surfaces and *CO adsorption energy. This helps to perform the material screening employing these descriptors, suppressing the costly and time-consuming experiments. While many linear relations between the d-band theory characteristics and the binding energy of the adsorbate have been published31,32,33, machine learning (ML) techniques were used in the present study in order to incorporate the possible nonlinear relation between the parameters, raise the accuracy of the prediction, and be more comprehensive.

ML techniques have been widely used to explore and investigate diverse phenomena in chemical engineering34,35,36,37,38,39,40,41. In the present study, Ma et al.42 predicted the *CO binding energy on various alloy systems using artificial neural networks (ANN) algorithm with root mean square error (RMSE) of 0.13 eV. In another study, Li et al.43 obtained almost the same accuracy (RMSE ∼ 0.12 eV) when using the same ML algorithm but geometric descriptors as inputs. Recently, Noh et al.44 proposed an ML model with RMSE of 0.05 eV. Unlike the other studies, they used two non-ab Initio input properties to forecast the binding energy of *CO in different systems. Since several aspects of an accurate model are still under question, it is necessary to establish a new model that enhances the prediction’s accuracy and decreases the model's uncertainty.

A fuzzy method is one of the soft computing methods which has a significant capacity in modeling complicated and nonlinear systems. Adaptive neuro-fuzzy inference system (ANFIS), a hybrid of ANN and the fuzzy inference system (FIS), can anticipate the nonlinear systems significantly45,46,47,48. The suggested model is based on the combination of genetic algorithm (GA) and particle swarm optimization (PSO) to ANFIS. To our knowledge, this investigation is the first attempt to apply ANFIS–GA and ANFIS–PSO in CO2 electroreduction process. In this research, the *CO adsorption energy in the CO2 electroreduction process was anticipated by the ANFIS–PSO and ANFIS–GA models. The PSO and GA were interconnected to ANFIS to balance the model’s complication and their generality capacity. Some intrinsic features and DFT-calculated electronic features of clean alloy surfaces, explicitly, parameters of the d-band distribution were used as inputs of the model.

Methodology

ANFIS background

The ANFIS structure used in this study includes five layers and thirteen inputs, where the Takagi–Sugeno system was employed as FIS. The fuzzy rules are presented as follows46,49:

where k1, k2, and k0 represent the function parameters of output (f) and A and B indicate the membership functions for inputs (x1 and x2). The basic arrangement of ANFIS is a feedforward system that comprises five layers having several functions50 (Fig. 1). The learning structure of ANFIS is well discussed by several studies51,52,53.

The ANFIS with five layers is explained54:

In Layer 1, fuzzification, the whole of the nodes are supposed to be adaptive inputs.

where n and both of \(\mu {A}_{i}\left({x}_{1}\right)\) and \(\mu {B}_{i-2}\left({x}_{2}\right)\) are the number of fuzzy sets per input variables and functions of Gaussian membership, respectively.

Layer 2, which is the product layer, the output of this layer is the product of the input signal based on:

Layer 3, named the normalized layer, a circle node is used to represent each node. The normalization function is defined as:

Layer 4, defuzzification, every node adapted with the following function:

where \(({k}_{1}^{i}x+{k}_{2}^{i}y+{k}_{0}^{i})\) and \({\overline{w} }_{i}\) are the variable sets of \({\overline{w} }_{i}\)’s node and the output of Layer 3, respectively.

In Layer 5, which is the output layer, summation of the arrival signals creates the output model:

Generally, an adaptive FIS comprises two diverse sections, the premise section and the consequent Section47, optimized with various methods. A hybrid learning optimization method is developed, including the least squares method and the gradient descent method. In the present study, the fuzzy c-means (FCM) process is interconnected to ANFIS algorithm to forecast *CO adsorption energy.

FCM clustering

FCM is a method for data clustering where each point of data categorizes in clusters with different membership grades. FCM distributes a group of n vector \({x}_{i}\), \(i=\mathrm{1,2},\dots ,n\) into fuzzy categories and find a center for the cluster in each category such that the cost function is minimized according to the dissimilarity value.

By membership matrix U, the cost function can be calculated as:

here \({v}_{K}\)=\({v}_{ka}\), k = 1, .., k, a = 1, …, p stands for the center of mass of kth cluster. In addition, m refers to the cluster' degree.

Algorithm should solve U and \({v}_{1},\dots ,{v}_{K}\) by minimizing Eqs. (8 and 9).

Minimizing task is carried out by the following cost function.

Nikafshan Rad et al.46 presented the FCM algorithm in detail.

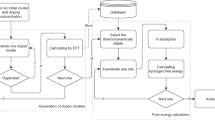

PSO algorithm

PSO is a population-based optimization approach that randomly selects a population of particles or solutions, then seeks optima through updating generation in each iteration39. Each particle is updated based on two particles: “pbest” and “gbest.” The “pbest” is the own best answer reached so far by particles, and the “gbest” is the total best solution obtained among the entire particles. Figure 2 shows the structure of PSO. In this scheme, random apportion velocities and positions are used at the first stage to start the initial population. The next step is to approximate each particle by regression analysis. When the stopping condition is satisfied by the best fitness rate of the particle, the process must be ended, and the factors should be reported. Suppose the fitness rate is not satisfactory for the ending criterion. In that case, the particles’ velocity and positions must be updated under two situations: In the first situation, if the particle fitness is larger than the gbest fitness, the related factors of gbest fitness should be updated. Second, if particle fitness is greater than the fitness of pbest, the pbest fitness factors should be updated. The other particles should be approximated through the second stage again.

Genetic algorithm

GA is a general accidental exploration tool for optimization based on the ideas of natural selection and genetics. It uses probabilistic transition rules instead of definite ones, which results in capacity to investigate big solution spaces. GA includes three stages: (a) population initialization, (b) operators of GA (selection, crossover, and mutation), and (c) evaluation49,55.

-

(1)

Population initialization: In GA, each solution is named chromosome or string and is described via a series of different values. The strings (solutions) presenting each limitation or optimization issue necessities must be included in the initial population.

-

(2)

Operators of GA:

-

(1)

Selection: It chooses solutions or individuals with high fitness values with a higher chance of surviving. A combination of Elitist and Tournament approaches is employed in this research through which the best solutions are chosen according to their fitness values and passed straight to the following generation.

-

(2)

Crossover: In this one, two chosen individuals exchange part of their genes for making novel individuals for the following generation. Here, the scattered random method is used to create a new chromosome.

-

(3)

Mutation: It makes a random change to the info within the strings or chromosomes. Occasionally, gene mutation occurs with a low possibility of transforming into new genes. The mutation operation increases the exploration ability of the search scheme to forbid to trap into local optima.

-

(1)

-

(3)

Evaluation: The function of fitness is applied to fit each individual solution.

Modeling procedure

Data collection

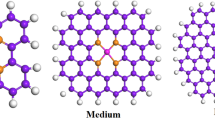

In order to establish a relationship between the *CO binding energy and properties of electrocatalyst surfaces, a set of {100}-terminated bimetallic surfaces in the form of B@A, A-B@A, and A3B@A have been considered as illustrated in Fig. 3. In the B@A structure, group VIII and IB metals (Cu, Au, Ni, Ag, Pt, and Pd) were chosen for A and B, but in the other structures, B contains the post-transition and the d-block metals. The required properties as input parameters were obtained through geometry optimization in DFT calculations. The input parameters are classified into two groups: primary features, electronic properties of the d-states distribution, and secondary properties, which are physical constants of the host metal. The secondary properties were used for a well description of the chemical bonding on a sequences of metal surfaces. The width (square root of the 2nd central moment, Wd), center (1st moment relative to the Fermi level, εd), filling (zeroth moment up to the Fermi level, f), the local Pauling electronegativity (χ), skewness (3rd standardized moment, γ1), and kurtosis (4th standardized moment, γ2) are primary features while work function (W), ionization potential (IE), square of adsorbate–metal interatomic d coupling matrix element (V2ad), spatial extent of metal d-orbitals (rd), atomic radius (r0), Pauling electronegativity (χ0), and electron affinity (EA) are the secondary parameters. The 258 data points are taken from Ma et al. report42 and listed in Table S1. To develop the most precise model, randomly, 20% of the entire data was divided as the testing data to assay the model reliability and the remainder of them was used as the training data to study the *CO binding energy in CO2 electroreduction systems.

The {100}-terminated alloy surfaces in the form of (a) B@A, (b) AB@A, and (c) A3B@A. The first rows indicate the top view, and the second rows show the side view of the structures42.

Model evaluations

The statistical parameters (Eqs. 11–15) such as mean relative error (MRE), R2, mean-square error (MSE), the standard deviation (STD), root-mean-square error (RMSE) were used to assess the model accuracy.

where n is the number of datapoints and m refers to mean value.

Model development procedure

As mentioned above, there are 13 input variables to predict target parameter. In this study, 5 clusters with Gaussian membership functions were used for construction of ANFIS model. Gaussian function comprises of 2 paremeter that should be optimally determined during model development. Since there are 5 clusters and 13 inputs, there are 140 membership function parameters for optimization purpose. The optimum values of membership function parameters were determined by two evolutionary algorithms named PSO and GA. Setting of evolutionary algorithms was summarized in Table 1.

Accuracy of the collected data

Some suspected data or outliers in the data bank show inconsistent behavior with the rest of the data. They are probably generated because of the degree of the accuracy of the calculation and assumptions (or instrumental and human errors in the case of experimental data banks). It is vital to distinguish them because they can cause the wrong prediction for the developed model34,36. To search the suspicious data and elevate the quality of data bank, the Leverage method is applied through which two parameters of critical leverage limit (H*) and Hat matrix (H) are calculated as follows:

where i, j, and U are the number of the model parameters, the number of training data, and a matrix dimensional of i * j, respectively. William’s plot, the standardized residuals versus Hat values, is depicted in Fig. 4. In this plot, the reliable zone is defined as the region bounded between the standardized residuals of − 3 to 3 and the critical leverage limit. According to Fig. 4, it can be clearly seen that most of the *CO binding energy values are placed in the valid area other than only 10 points among 258 data points, demonstrating the dataset is outstanding for training and testing models of ANFIS–PSO and ANFIS–GA.

Results and discussion

Analysis of sensitivity

Analysis of sensitivity is usually performed to study how the input parameters affect the output quantity35. In this analysis, relevancy factor (r) indicates the most influential input parameter on *CO binding energy, which is calculated as:

where \({X}_{k.i}\), \({\overline{X} }_{k}\), \({Y}_{i}\), \(\overline{Y }\), and n indicate the ‘k’ th input parameter, the average of the input parameters, ‘i’ th output, the outputs average, and the number of all data points, respectively. Generally, r value changes between − 1 to + 1. The more absolute value of r shows the greater effect of the corresponding input on the output for each input. The negative and positive values denote that the high input the less and more in the output, respectively 57. According to Fig. 5, the *CO binding energy (consequently the reactivity of the metal surface) has direct relationship with the filling of the d-band, skewness of the d-band, Kurtosis of a d-band, atomic radius, spatial extent of d-orbitals, electron affinity, and Pauling electronegativity and has an inverse relationship with the center of the d-band, width of a d-band, work function, ionization potential, square of adsorbate–metal interatomic d coupling matrix element, and local Pauling electronegativity.

As expected from the d-band theory, the sensitivity analysis declared the filling and the center of the d-band are the most effective parameters by 0.86 and − 0.85 relevancy factor, respectively. Also, the higher moments of the d-states distribution (such as skewness with r value of 0.78), which represent the d-band shape play a relatively important role in determining the reactivity of the metal surface. On the other hand, both of the electron affinity and the Pauling electronegativity have minimum effect on the *CO binding energy with r value of 0.01.

It is worth mention that parameters such as electron affinity and the Pauling electronegativity are intrinsic properties of active metal atoms which alter slightly across the periodic table but the most influencing parameters such as d-band center can be adjusted by ligand and strain engineering42.

Modeling results

To evaluate how precisely the suggested ANFIS–GA and ANFIS–PSO models are, the statistical factors are applied to determine the accordance between the data points and the anticipated values of the *CO binding energy, reported in Table 2. The ANFIS–GA and ANFIS–PSO models predicted the train data significantly excellent with R2 of 0.994 and 0.995, respectively. The relative error quantities demonstrate the high precision of the proposed models in data training; in particular, the RMSE values of 0.0383 and 0.0411 for ANFIS–GA and ANFIS–PSO, respectively, are lower than the previous reports (0.1342, 0.1243, and 0.0544). This indicates the strength of the proposed models, which used the d-band theory electronic properties and the intrinsic features as inputs, the same data trained in42 with RMSE of 0.13. In addition to the forecast precision of the training data, the capability of the developed models to predict the unseen *CO binding energy data points is critically important. Therefore, both of the established models were investigated for the testing data. Again, both of the ANFIS–GA and ANFIS–PSO models show close and great accuracy for prediction of the unseen *CO binding energy data, where MRE, R2, MSE, STD, RMSE and are 3.521%/6.520%, 0.993/0.993, 0.00180/0.00177, 0.0322/0.0295, 0.0425/0.0421and for ANFIS–PSO and ANFIS–GA algorithms, respectively.

For further confirmation the accuracy of the models, the data points and the anticipated values of the *CO binding energy are depicted by data indices in Fig. 6. As can be observed, the excellent agreement between the actual and estimated *CO binding energy amounts proves the excellent function of the established models. For both the ANFIS–GA and ANFIS–PSO models, the predicted values lines follow the actual data points precisely, which means these models have incredible capability to assess the reactivity of the metal alloy surfaces in terms of *CO adsorption energy.

The forecasted *CO binding energy values versus actual data values are depicted in Fig. 7. For both of the proposed ANFIS–GA and ANFIS–PSO models, the predicted data are precisely placed on their actual values so that their linear fitting shows correlation coefficients of more than 0.99. The 45° line is a criterion for the accuracy of the suggested models, where as shown in Fig. 7, the drawn fitting lines cross it precisely.

Moreover, the percentage of relative deviations between actual and estimated *CO binding energy data is shown in Fig. 8 for testing and training data sets of the developed ANFIS–GA and ANFIS–PSO models. The percentage mean relative deviations of the training and testing data obtained by the PSO–ANFIS model are 5.621% and 3.521%, respectively. Also, these values were 5.534% and 6.520% for training and testing datasets of ANFIS–GA model, respectively.

Conclusions

The present study conveniently indicates the potential and value of machine learning in directing the experimental efforts in alloy system electrocatalysts for CO2 reduction. Accordingly, two machine learning models of ANFIS–PSO and ANFIS–GA have been presented to anticipate the *CO binding energy on alloys electrocatalysts using electronic and intrinsic features. The required properties as input parameters were obtained through geometry optimization in DFT calculations.

The ANFIS–PSO and ANFIS–GA presented an excellent match between the *CO binding data and the predicted values with RMSE of 0.0411 and 0.0383, respectively, which is more precise than the other reported studies. In addition, it was found that the relative deviation of ANFIS-PSO model was 3.521%, while this value was 6.520% for the ANFIS–GA model, which indicates better ability of PSO to optimize ANFIS model. The percentage mean relative deviations of the training and testing data obtained by the PSO–ANFIS model are 5.621% and 3.521%, respectively. Also, these values were 5.534% and 6.520% for training and testing datasets of ANFIS–GA model, respectively. Moreover, the outlier detection technique was used to find outliers and it was shown by William's diagram. The sensitivity analysis confirmed the filling and the center of the d-band as the most influencing parameters for the electrocatalyst surface reactivity by 0.86 and − 0.85 relevancy factor, respectively. This study’s results and discussions can make it easier for scientists and chemical engineers to explore and examine new materials as electrocatalysts for the CO2 electroreduction process.

Data availability

All data generated or analysed during this study are included in this published article [and its supplementary information files].

References

Abbasi, F. & Riaz, K. CO2 emissions and financial development in an emerging economy: An augmented VAR approach. Energy Policy 90, 102–114 (2016).

Kayani, G. M., Ashfaq, S. & Siddique, A. Assessment of financial development on environmental effect: Implications for sustainable development. J. Clean. Prod. 261, 120984 (2020).

Chen, A., Zhang, X., Chen, L., Yao, S. & Zhou, Z. A machine learning model on simple features for CO2 reduction electrocatalysts. J. Phys. Chem. C 124, 22471–22478 (2020).

Laursen, A. B. et al. CO2 electro-reduction on Cu3P: Role of Cu(I) oxidation state and surface facet structure in C1-formate production and H2 selectivity. Electrochim. Acta 391, 138889 (2021).

Hori, Y. Electrochemical CO2 reduction on metal electrodes. Mod. Asp. Electrochem. https://doi.org/10.1007/978-0-387-49489-0_3 (2008).

Maxwell, I. E. Driving forces for innovation in applied catalysis. Stud. Surf. Sci. Catal. 101A, 1–9 (1996).

Lewis, N. S. & Nocera, D. G. Powering the planet: Chemical challenges in solar energy utilization. Proc. Natl. Acad. Sci. U.S.A. 103, 15729–15735 (2006).

Baturina, O. A. et al. CO2 electroreduction to hydrocarbons on carbon-supported Cu nanoparticles. ACS Catal. 4, 3682–3695 (2014).

Liu, X., Wang, Z., Tian, Y. & Zhao, J. Graphdiyne-supported single iron atom: A promising electrocatalyst for carbon dioxide electroreduction into methane and ethanol. J. Phys. Chem. C 124, 3722–3730 (2020).

Cao, L. et al. Mechanistic insights for low-overpotential electroreduction of CO2 to CO on copper nanowires. ACS Catal. 7, 8578–8587 (2017).

Zhang, Q., Xu, W., Xu, J., Liu, Y. & Zhang, J. High performing and cost-effective metal/metal oxide/metal alloy catalysts/electrodes for low temperature CO2 electroreduction. Catal. Today 318, 15–22 (2018).

Zhong, M. et al. Accelerated discovery of CO2 electrocatalysts using active machine learning. Nature 581, 178–183 (2020).

Guo, Y. et al. Machine-learning-guided discovery and optimization of additives in preparing Cu catalysts for CO2 reduction. J. Am. Chem. Soc. 143, 5755–5762 (2021).

Nilsson, A., Pettersson, L. G. M. & Nørskov, J. K. Chemical bonding at surfaces and interfaces. Chem. Bond. Surf. Interfaces https://doi.org/10.1016/B978-0-444-52837-7.X5001-1 (2008).

Nilsson, A. & Pettersson, L. G. M. Chemical bonding on surfaces probed by X-ray emission spectroscopy and density functional theory. Surf. Sci. Rep. 55, 49–167 (2004).

Nilsson, A. et al. The electronic structure effect in heterogeneous catalysis. Catal. Lett. 100, 111–114 (2005).

Van Santen, R. A. & Neurock, M. Molecular heterogeneous catalysis: A Conceptual and computational approach. Mol. Heterog. Catal. Concept. Comput. Approach https://doi.org/10.1002/9783527610846 (2007).

Ertl, G. Reactions at solid surfaces. React. Solid Surf. https://doi.org/10.1002/9780470535295 (2010).

Sabatier, P. Hydrogénations et déshydrogénations par catalyse. Ber. Dtsch. Chem. Gesellschaft 44, 1984–2001 (1911).

Medford, A. J. et al. From the Sabatier principle to a predictive theory of transition-metal heterogeneous catalysis. J. Catal. 328, 36–42 (2015).

Hammer, B. & Nørskov, J. K. Theoretical surface science and catalysis—calculations and concepts. Adv. Catal. 45, 71–129 (2000).

Nørskov, J. K., Abild-Pedersen, F., Studt, F. & Bligaard, T. Density functional theory in surface chemistry and catalysis. Proc. Natl. Acad. Sci. U.S.A. 108, 937–943 (2011).

He, Y., Cubuk, E. D., Allendorf, M. D. & Reed, E. J. Metallic metal-organic frameworks predicted by the combination of machine learning methods and ab initio calculations. J. Phys. Chem. Lett. 9, 4562–4569 (2018).

Simón-Vidal, L. et al. Perturbation-theory and machine learning (PTML) model for high-throughput screening of parham reactions: Experimental and theoretical studies. J. Chem. Inf. Model. 58, 1384–1396 (2018).

Nørskov, J. K., Bligaard, T., Rossmeisl, J. & Christensen, C. H. Towards the computational design of solid catalysts. Nat. Chem. 1, 37–46 (2009).

Greeley, J. et al. Alloys of platinum and early transition metals as oxygen reduction electrocatalysts. Nat. Chem. 1, 552–556 (2009).

Back, S. & Jung, Y. Importance of ligand effects breaking the scaling relation for core-shell oxygen reduction catalysts. ChemCatChem 9, 3173–3179 (2017).

Back, S., Kim, H. & Jung, Y. Selective heterogeneous CO2 electroreduction to methanol. ACS Catal. 5, 965–971 (2015).

Stamenkovic, V. et al. Changing the activity of electrocatalysts for oxygen reduction by tuning the surface electronic structure. Angew. Chem. - Int. Ed. 45, 2897–2901 (2006).

Gajdoš, M., Eichler, A. & Hafner, J. CO adsorption on close-packed transition and noble metal surfaces: Trends from ab initio calculations. J. Phys. Condens. Matter 16, 1141–1164 (2004).

Xin, H., Vojvodic, A., Voss, J., Nørskov, J. K. & Abild-Pedersen, F. Effects of d-band shape on the surface reactivity of transition-metal alloys. Phys. Rev. B Condens. Matter Mater. Phys. 89, 115114 (2014).

Vojvodic, A., Nørskov, J. K. & Abild-Pedersen, F. Electronic structure effects in transition metal surface chemistry. Top. Catal. 57, 25–32 (2014).

Xin, H., Holewinski, A. & Linic, S. Predictive structurereactivity models for rapid screening of pt-based multimetallic electrocatalysts for the oxygen reduction reaction. ACS Catal. 2, 12–16 (2012).

Gheytanzadeh, M., Baghban, A., Habibzadeh, S., Mohaddespour, A. & Abida, O. Insights into the estimation of capacitance for carbon-based supercapacitors. RSC Adv. 11, 5479–5486 (2021).

Gheytanzadeh, M. et al. Towards estimation of CO2 adsorption on highly porous MOF-based adsorbents using gaussian process regression approach. Sci. Rep. 11, 1–13 (2021).

Ahmadi, M. H. et al. An insight into the prediction of TiO2/water nanofluid viscosity through intelligence schemes. J. Therm. Anal. Calorim. 139, 2381–2394 (2020).

Baghban, A., Bahadori, M., Lemraski, A. S. & Bahadori, A. Prediction of solubility of ammonia in liquid electrolytes using least square support vector machines. Ain Shams Eng. J. 9, 1303–1312 (2018).

Baghban, A. & Khoshkharam, A. Application of LSSVM strategy to estimate asphaltene precipitation during different production processes. Pet. Sci. Technol. 34, 1855–1860 (2016).

Bahadori, A. et al. Computational intelligent strategies to predict energy conservation benefits in excess air controlled gas-fired systems. Appl. Therm. Eng. 102, 432–446 (2016).

Baghban, A., Abbasi, P. & Rostami, P. Modeling of viscosity for mixtures of Athabasca bitumen and heavy n-alkane with LSSVM algorithm. Pet. Sci. Technol. 34, 1698–1704 (2016).

Baghban, A. Application of the ANFIS strategy to estimate vaporization enthalpies of petroleum fractions and pure hydrocarbons. Pet. Sci. Technol. 34, 1359–1366 (2016).

Ma, X., Li, Z., Achenie, L. E. K. & Xin, H. Machine-learning-augmented chemisorption model for CO2 electroreduction catalyst screening. J. Phys. Chem. Lett. 6, 3528–3533 (2015).

Li, Z., Ma, X. & Xin, H. Feature engineering of machine-learning chemisorption models for catalyst design. Catal. Today 280, 232–238 (2017).

Noh, J., Back, S., Kim, J. & Jung, Y. Active learning with non-: Ab initio input features toward efficient CO2 reduction catalysts. Chem. Sci. 9, 5152–5159 (2018).

Trivedi, R., Singh, T. N. & Gupta, N. Prediction of blast-induced flyrock in opencast mines using ANN and ANFIS. Geotech. Geol. Eng. 33, 875–891 (2015).

Nikafshan Rad, H., Jalali, Z. & Jalalifar, H. Prediction of rock mass rating system based on continuous functions using Chaos-ANFIS model. Int. J. Rock Mech. Min. Sci. 73, 1–9 (2015).

Hasanipanah, M., Amnieh, H. B., Arab, H. & Zamzam, M. S. Feasibility of PSO–ANFIS model to estimate rock fragmentation produced by mine blasting. Neural Comput. Appl. 30, 1015–1024 (2018).

Alameer, Z., Elaziz, M. A., Ewees, A. A., Ye, H. & Jianhua, Z. Forecasting copper prices using hybrid adaptive neuro-fuzzy inference system and genetic algorithms. Nat. Resour. Res. 28, 1385–1401 (2019).

Yang, H., Hasanipanah, M., Tahir, M. M. & Bui, D. T. Intelligent prediction of blasting-induced ground vibration using ANFIS optimized by GA and PSO. Nat. Resour. Res. 29, 739–750 (2020).

Moayedi, H., Raftari, M., Sharifi, A., Jusoh, W. A. W. & Rashid, A. S. A. Optimization of ANFIS with GA and PSO estimating α ratio in driven piles. Eng. Comput. 36, 227–238 (2020).

Jang, J. S. R. ANFIS: Adaptive-network-based fuzzy inference system. IEEE Trans. Syst. Man Cybern. 23, 665–685 (1993).

Armaghani, D. J., Momeni, E., Abad, S. V. A. N. K. & Khandelwal, M. Feasibility of ANFIS model for prediction of ground vibrations resulting from quarry blasting. Environ. Earth Sci. 74, 2845–2860 (2015).

Thomas, S., Pillai, G. N., Pal, K. & Jagtap, P. Prediction of ground motion parameters using randomized ANFIS (RANFIS). Appl. Soft Comput. J. 40, 624–634 (2016).

Shahnazar, A. et al. A new developed approach for the prediction of ground vibration using a hybrid PSO-optimized ANFIS-based model. Environ. Earth Sci. 76, 1–17 (2017).

Chen, X. & Wang, N. A DNA based genetic algorithm for parameter estimation in the hydrogenation reaction. Chem. Eng. J. 150, 527–535 (2009).

Rezakazemi, M., Dashti, A., Asghari, M. & Shirazian, S. H2-selective mixed matrix membranes modeling using ANFIS, PSO-ANFIS, GA-ANFIS. Int. J. Hydrog. Energy 42, 15211–15225 (2017).

Baghban, A., Mohammadi, A. H. & Taleghani, M. S. Rigorous modeling of CO2 equilibrium absorption in ionic liquids. Int. J. Greenh. Gas Control 58, 19–41 (2017).

Author information

Authors and Affiliations

Contributions

All authors collaborated in writing, data gathering, simulation, and conception.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gheytanzadeh, M., Baghban, A., Habibzadeh, S. et al. Intelligent route to design efficient CO2 reduction electrocatalysts using ANFIS optimized by GA and PSO. Sci Rep 12, 20859 (2022). https://doi.org/10.1038/s41598-022-25512-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-25512-8

This article is cited by

-

Navigating Tranquillity with H∞ Controller to Mitigate Ship Propeller Shaft Vibration

Journal of Vibration Engineering & Technologies (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.