Abstract

Machine learning can be used to explore the complex multifactorial patterns underlying postsurgical graft detachment after endothelial corneal transplantation surgery and to evaluate the marginal effect of various practice pattern modulations. We included all posterior lamellar keratoplasty procedures recorded in the Dutch Cornea Transplant Registry from 2015 through 2018 and collected the center-specific practice patterns using a questionnaire. All available data regarding the donor, recipient, surgery, and practice pattern were coded into 91 factors that might be associated with the occurrence of a graft detachment. We used three machine learning methods, namely a regularized logistic regression (lasso), classification tree analysis (CTA), and random forest classification (RFC), to select the most predictive subset of variables for graft detachment. A total of 3647 transplants were included in our analysis and the overall prevalence of graft detachment was 9.9%. In an independent test set the area under the curve for the lasso, CTA, and RFC was 0.70, 0.65, and 0.72, respectively. Identified risk factors included a Descemet membrane endothelial keratoplasty procedure, prior graft failure, and the use of sulfur hexafluoride gas. Factors associated with a reduced risk included performing combined procedures, using pre-cut donor tissue, and a pre-operative laser iridotomy. These results can help surgeons review their practice patterns and generate hypotheses for empirical research regarding the origins of graft detachments.

Introduction

Posterior lamellar keratoplasty is the current standard treatment to restore visual function in patients with irreversible corneal endothelial cell dysfunction1. Two principal treatment modalities are currently used, namely Descemet’s stripping endothelial keratoplasty (DSEK) and Descemet’s membrane endothelial keratoplasty (DMEK)2. In recent years, DMEK has increased in popularity due to its potential for faster visual recovery and improved visual outcome compared to DSEK3,4. In both DSEK and DMEK, postoperative detachment of the graft is a relatively common complication5,6,7. Detachment can require a secondary surgical intervention, potentially resulting in a less viable graft. The reported prevalence of graft detachment ranges from 2 to 27% for DSEK and 6% to 82% for DMEK5,6,7.

The underlying cause of graft detachment is considered to be multifactorial8,9,10,11,12, and a wide range of risk factors have been proposed and/or investigated relating to the type of procedure (DSEK versus DMEK)5,6,7, graft storage and preparation (e.g., pre-cut versus surgeon-cut)13,14,15,16, and recipient and donor’s characteristics (e.g., prior corneal transplantation, cause of death)8,11,12,16,17,18. Furthermore, various “best practice patterns” have been proposed, resulting in a wide range of surgical tools and techniques that have been adopted when performing posterior lamellar keratoplasty9,19. These include the insertion method8,20,21, the size of the descemetorhexis22,23, combined surgical procedures12,24,25,26,27, the use of anterior chamber (AC) tamponade (e.g., the agent, volume, pressure, and duration)9,12,19,24,25,26,28,29,30,31,32,33, and the duration of time spent in the supine position (imposed or recommended) following surgery9,28.

In the Netherlands, all centers that perform corneal transplants report their procedures to the Netherlands Organ Transplant Registry (NOTR). These records include extensive follow-up data, including complications, thus providing a unique source of real-world data regarding graft survival, patient characteristics, and donor characteristics. We expanded this dataset using a 35-item questionnaire regarding the preoperative, perioperative, and postoperative procedures performed at each center, as rapidly changing practice patterns in the field of surgery made performing an independent assessment of these practice patterns in a clinical study unfeasible.

Machine learning models can be used to detect complex patterns in large datasets, and these patterns can help researchers identify factors that can predict the risk of postsurgical complications34,35. Here, we present the results of our machine learning analysis to identify factors that predict an increase or decrease in the risk of graft detachment following posterior lamellar keratoplasty.

Results

A total of 3647 posterior lamellar keratoplasties were performed in the Netherlands and recorded in the NOTR registry between January 1, 2015 and December 31, 2018, including 2651 DSEK procedures (73%) and 996 DMEK procedures (27%). The surgeries were performed at sixteen centers throughout the Netherlands. Twelve of these centers submitted their practice patterns (Supplementary Tables S1 and S2), while the other four centers did not respond to the survey. These four centers performed 227 DSEK procedures and 1 DMEK procedure; for these four centers, only the NOTR data were included in the analysis. None of the continuous explanatory variables had missing observations. Sixteen categorical explanatory variables had missing values (mean percentage of missing values: 2.7% SD ± 4.3%; range: 5–20%); these values were recorded as “unknown” and were included for analysis as a new category in the respective variable.

Donor, recipient and procedure characteristics

The mean (± SD) donor age was 70 ± 9 years, and 61.4% of donors were male. The most common cause of death was diseases of the circulatory system (53.3%), followed by diseases of the respiratory system (16%), other/unknown (20.3%), cancer (8.8%), and trauma (1.5%). The mean interval between death and the transplant procedure was 19 ± 5 days. A complete summary of the donor characteristics is provided in Supplementary Table S3.

The most common indication for surgery was FECD (76.7% of cases). Interestingly, FECD was the indication for performing DMEK in 91.1% of cases, compared to 71.2% in DSEK. The majority of recipients were pseudophakic prior to surgery, with 62.3% having a posterior chamber intraocular lens. In total, 88.8% of recipients had not previously undergone a corneal transplant in the same eye. In 39.5% of cases, the graft was equal in size to the descemetorhexis; the graft was undersized in 26.1% of cases and oversized in 34.4% of cases. In 71.7% of cases, posterior lamellar keratoplasty was not combined with another surgical procedure. The most frequently performed combined surgical procedures were peripheral iridectomy and cataract surgery (12.2% and 8.6% of cases, respectively). A surgical complication was reported in the NOTR in 4.1% of all cases (3.3% of DSEK procedures and 6% of DMEK procedures) and included endothelial damage (0.8% of cases), difficulty unfolding the graft (0.7%), graft rupture or preparation problems (0.4%), iris prolapse (0.4%), and hemorrhage of the AC (0.4%). The recipient and surgery characteristics are summarized in Supplementary Tables S4 and S5, respectively.

Postoperative complications

The incidence and prevalence of postoperative graft detachment are summarized in Table 1. Overall, the rate of graft detachment was 9.9% (361 out of the 3647 procedures performed over the 4-year study period). During the period from 2015 through 2018, the number of DMEK procedures performed each year increased considerably from 4 to 473, while the number of DSEK procedures performed each year decreased from 743 to 560, reflecting the growing preference for this newer surgical procedure. The prevalence of graft detachment was relatively stable among the patients who underwent DSEK, ranging from 6.2 to 8.4%; in contrast, the prevalence of graft detachment was generally higher among the patients who underwent DMEK, ranging from 11.8 to 14%.

Results of the machine learning models

After correction for the DMEK learning curve, a total of 3464 cases were included for machine learning analysis. The discriminatory power of the three machine learning models at predicting graft detachment (based on the AUC) ranged from 0.65 to 0.72 (Table 2). The sensitivity and specificity were similar both within and between models, indicating a similar ability to predict both detachments and non-detachments. The modest discriminatory power also indicates that a considerable amount of variation was not captured by the predictive factors included in the dataset.

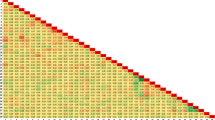

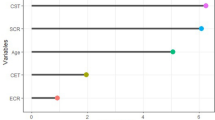

An overview of each model output is presented in Fig. 1 for the RFC model and in Supplementary Figs. S1 and S2 for the lasso and CTA models, respectively. The three models identified different sets of predictive factors, although several factors overlapped between the three models. The results of the most predictive factors identified by the three models are shown in Table 3. To simplify the analysis, factors that were categorized as either “unknown” or “unspecified” were omitted from Table 3.

Summary of the SHAP values based on the random forest classifier model. The y-axis shows the most relevant features for predicting graft detachment, from highest to lowest. The x-axis displays the SHAP values, reflecting the effect of each variable on the model’s outcome by indicating the predicted change in the probability of a graft detachment. A negative SHAP value represents a “protective effect” (i.e., decreased risk of detachment), while a positive SHAP value represents a “risk effect” (i.e., increased risk of detachment). Each symbol in the plot represents an individual patient in the test dataset. The color gradient ranging from blue to red represents the range of values of the variables. For binary variables, only two colors are used (red for “yes” and blue for "no"); for continuous variables, the values are depicted using the entire spectrum from red to blue.

We found that undergoing DMEK was associated with an increased risk of graft detachment in all three models. In contrast, none of the three models showed that performing a combined procedure was associated with an increased risk of postsurgical complications; however, both the lasso and CTA models found that undergoing a combined procedure reduced the risk of graft detachment. In addition, the outcome was unclear for several factors, either because the model did not identify the factor as important or because the pattern was diffuse (see Fig. 1). Risk factors that were common to at least two of the three models included a previous graft failure, the type of insertion device, and the use of sulfur hexafluoride (SF6) gas during surgery. Protective factors that were common to at least two models included “donor preparation: other”, use of an AC maintainer during descemetorhexis, and combining surgical procedures (all combinations of procedures). It should be noted that, by design, the type of insertion device is specific to the procedure (either DSEK or DMEK) being performed. In addition, it is important to note that “donor preparation method: other” is often entered as the method for preparing the graft for DMEK; based on a case-by-case assessment, we believe this is simply another way of saying “pre-cut tissue”. Similarly, “manual scraping of the endothelium” is likely another way of saying “pre-cut tissue” for DMEK.

Discussion and conclusions

Here, we report the use of three different machine learning models to explore factors that can predict the probability of graft detachment following posterior lamellar keratoplasty. The predictive power was similar between all three models and is considered to be acceptable (AUC ranging from 0.65 to 0.72). Our identification of predictive factors can help surgeons make evidence-based changes to their practice patterns, provide insight into the marginal contribution of current practices to surgical safety, and help generate hypotheses for empirical clinical research regarding the origins of graft detachments. In particular, this study can serve as a data-driven basis for protocol standardization in future prospective studies regarding posterior lamellar keratoplasties.

A major strength of this study is our access to an extensive, nationwide dataset and the inclusion of practice patterns in our analysis of a national cornea transplantation registry. The real-world data of the transplantation registry are practically free of inclusion bias, owing to the obligatory and incentivized data entry in the registry. The assessment of both linear and non-linear relationships between a wide range of factors is unique and revealed the complex interactions between factors8,11,12,27,36. Moreover, the presented approach enables the evaluation of the numerous modulations of practice patterns in use and the relative impact of proposed key factors. This is of particular value in the rapidly evolving field of surgery, in which assessing these practice patterns independently in a clinical study would not be feasible.

Despite these strengths, several limitations warrant discussion. None of our three models explains all of the variance in our dataset, and not all factors that can affect graft detachment are registered in our dataset (e.g., patient’s behavior and compliance, unrecorded intraoperative events). Furthermore, the registry lacks comprehensive contextual information, and the completeness and correctness of the data in the registry could not be validated. This lack of contextual information is exemplified by our finding of unknown and/or missing observations in the model output, the interpretation of which is ambiguous and relies on assumptions. We therefore chose not to report these outputs. With respect to the practice patterns, we cannot exclude response/recall bias regarding the replies to the questionnaire. Although the surgical protocols for DSEK and DMEK overlap to a large degree, they also differ in several respects. By aggregating the two procedures into a single database, procedure-specific predictive factors might not be identified. Certain predictive factors, such as aphakia, were relatively rare and therefore lack the necessary power to appear in the model output, even though experts agree on the added risk of this particular condition. Therefore, the effect of certain variables cannot be estimated reliably. Finally, the retrospective nature of the study should be noted. Prospective validation of the results is essential to evaluate the usefulness of the models and possible applications in clinical practice.

The effect of donor-related factors, recipient-related factors, surgery-related factors, and practice patterns on the prevalence of graft detachment is an ongoing topic of discussion. Many surgeons in the Netherlands are transitioning from DSEK to DMEK37, and our results indicate that DMEK is associated with an increased risk of graft detachment, consistent with previous studies4,5,6. This increased risk may be due in part to increased difficulty when handling the DMEK graft and/or the fact that the DMEK graft edge is more prone to curling up, thus lifting the graft from the recipient’s stromal bed38,39. In addition, partial detachments are more common after DMEK, possibly increasing the rate of rebubbling of the graft compared to DSEK40. Alternatively, the increased risk associated with DMEK may partially be related to the surgeon’s learning curve. Indeed, from 2016 to 2018 the prevalence of graft detachment decreased more steeply for DMEK than for DSEK4. In our model, we attempted to correct for this learning curve by excluding the first 20 DMEK surgeries performed at each clinic, although the results in Table 1 suggest a shallower learning curve; thus, our model may have overestimated the effect of the DMEK procedure and DMEK-specific factors.

Our results show a diffuse pattern for donor age, recipient age, donor cause of death, and the interval between donor death and surgery; regarding these factors, our analyses are inconclusive. Regarding preparation techniques, our results are consistent with previous studies that found no difference between pre-cut and surgeon-cut tissues13,15. Graft marking was associated with an increased risk of detachment in the lasso model only. However, graft marking is infrequently practiced in the Netherlands, which limits a full assessment of the effect of this practice. Furthermore, and consistent with previous findings, our models indicate that patients who had one or more previously failed grafts had a higher risk of detachment36.

We also found that several types of graft insertion devices were associated with an increased risk of graft detachment; however, we consider the choice of insertion device a proxy for idiosyncratic surgeon factors too subtle to be captured in our registry or questionnaire. We opted not to enter the individual surgeon or center as a model factor, as this study was not designed as an exercise in benchmarking. Consequently, the expected between-surgeon differences might now be attributed to proxy parameters. The insertion tools themselves are known to increase the risk of endothelial damage, although no significant differences have been found between the various commercially available insertion devices8,20,21. Furthermore, we found that a graft diameter > 8.4 mm may be associated with a reduced risk of graft detachment. Several groups previously hypothesized that a larger graft may overlap with the retained Descemet membrane in the recipient, thus inhibiting graft attachment22,23. However, no effect of graft size compared to the descemetorhexis size was found. Both DMEK and DSEK are increasingly combined with other procedures such as cataract surgery. In none of our models was a combination of procedures associated with an increased risk of graft detachment. Combining these results with previous studies, we conclude that combining surgical procedures does not increase the risk of graft detachment12,25,26. Finally, two of the three models in our study found that pre-operative laser peripheral iridotomy was more protective than surgical peripheral iridectomy, although none of the models found that surgical peripheral iridectomy substantially increased the risk of detachment. This difference between laser iridotomy and surgical iridectomy may be due to the increased risk of intraoperative fibrin formation during surgical iridectomy41.

Interestingly, we found that using air as the tamponade agent was not associated with an increased risk of graft detachment, while using SF6 gas appeared to increase the risk of graft detachment. This finding is in contrast with previous studies suggesting that the use of SF6 gas may reduce the risk of graft detachment32,42,43. This discrepancy may be explained in part by the recent transition of surgeons to using SF6 gas together with the concomitant transition to performing DMEK (with a subsequent increased risk of detachment during their learning curve). Nevertheless, we believe that the previously reported putative benefits associated with using SF6 gas might have been overestimated relative to all other factors, as exemplified by continued reports of relatively high rates of graft detachment5,6,12,40,44,45. After posterior lamellar keratoplasty, patients are instructed to remain in the supine position in order to maximize the beneficial effects of AC tamponade, and the length of time in this position can affect the risk of graft detachment. The results of our study indicate that strictly imposing a supine duration of at least 2 h reduced the risk of graft detachment. Similar results were also found if the patients were instructed to remain in the supine position for 48 h following surgery, consistent with the routine practice of most surgeons9,28.

Lastly, several previously suggested risk and protective factors were not identified by our models. For example, we found no effect of increasing intraocular pressure above physiological limits for a certain time, consistent with previous studies suggesting that overpressuring of the eye after graft insertion has only a limited protective effect30,46,47. Similarly, complications during or following surgery were not associated with an increased risk of graft detachment; however, this apparent lack of effect may have been due to the relatively low incidence of these events.

In conclusion, we applied a supervised machine learning approach to a nationwide dataset and identified the most relevant factors for predicting graft detachment following posterior lamellar keratoplasty. Our analysis revealed that performing a DMEK procedure, the use of SF6 gas, and previous graft failure increased the risk of detachment, whereas performing a DSEK procedure, preoperative laser iridotomy, larger graft size, remaining strictly supine for at least 2 h, and a recommendation for staying in the supine position for 48 h reduced the risk of detachment. In contrast, performing combined procedures and the use of pre-cut tissue had no effect on the risk of graft detachment, nor did overpressuring of the eye after graft insertion. These results can help surgeons improve their practice patterns and can help researchers formulate new, testable hypotheses. Future studies should focus on improving the performance of machine learning approaches by including more detailed, contextual information. Importantly, these models’ “in silico” predictions should be tested in clinical practice.

Methods

Data collection

The data used in this study were acquired from the NOTR, which is hosted by the Netherlands Transplant Foundation (NTS). We included all DSEK and DMEK procedures registered in the NOTR between January 1, 2015, and December 31, 2018, including 12 months of follow-up data. The Netherlands Institute for Innovative Ocular Surgery did not participate in the nationwide registry at the time of the data collection (2015–2018) and their data are therefore not included in this analysis. Two cornea banks (Amnitrans EyeBank, Rotterdam and the ETB-bislife, Beverwijk) supplied all of the corneal grafts assessed in this study. The NOTR steering committee provided Institutional Review Board (IRB) approval for the extraction and analysis of data in this study. All patients provided informed consent to be included in the registry for research purposes. No identifying information of donors or patients was available to the researchers and all data were anonymized prior to delivery to the researchers. No donor tissue was procured from prisoners. In accordance with IRB approval, the data were not stratified at the individual surgeon, center, donor or patient level. The study was conducted in accordance with the principles of the Declaration of Helsinki and Dutch legislation. The NOTR data were restructured and made accessible for machine learning analysis.

Registry data processing

All available data in the registry regarding the donor, graft, recipient, and practice patterns were collected. The donor’s information included sex, age at the time of death, cause of death, endothelial cell count, interval between death and explantation of the eye, interval between explantation of the eye and preservation of the eye, and interval between death and the transplant procedure. The donor’s cause of death was classified into the following five categories: neoplasms/cancer, diseases of the respiratory system, trauma, diseases of the cardiovascular system, and other causes of death. The recipient’s information included sex, age at the time of surgery, indication for corneal transplantation surgery, and preoperative lens status. The indication for transplant surgery was classified into the following five categories: Fuchs corneal endothelial dystrophy (FECD), pseudophakic bullous keratopathy (PBK), graft failure, other corneal dystrophies, and other indications. Information regarding the surgical procedure included the surgeon’s position (staff surgeon or surgical fellow), date of surgery, instruments used for donor preparation, instruments used for graft insertion, diameter of the donor graft, diameter of the descemetorhexis, whether it was a combined surgical procedure, and surgical complications. Combined surgical procedures were recoded into the following five groups: peripheral surgical iridectomy, cataract surgery, posterior intraocular lens insertion without cataract extraction, anterior vitrectomy, and other combined surgical procedures or unspecified. Postoperative events recorded in the NOTR were classified as surgery-related (e.g., rebubbling, graft failure, immunological reaction, iatrogenic glaucoma, and/or cystoid macular edema) and not surgery-related (e.g., intravitreal injections, posterior segment surgery after primary transplant, and/or extra-ocular events). The recoding of the variables resulted in a set of 91 predictor variables.
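
As an illustration of how a categorical registry field might be recoded into binary predictors, the following minimal sketch expands one column into 0/1 indicators and adds an explicit "unknown" level for missing entries. The exact coding scheme used in the study is not described at this level of detail, so the indicator (one-hot) layout, the function name `one_hot_with_unknown`, and the column name `donor_cause_of_death` are assumptions made for this example.

```python
def one_hot_with_unknown(column, values, categories):
    """Expand a categorical column into 0/1 indicator variables.

    Any value that is missing (None) or not listed in `categories`
    is assigned to an explicit "unknown" level.
    """
    levels = list(categories) + ["unknown"]
    encoded = []
    for value in values:
        level = value if value in categories else "unknown"
        encoded.append({f"{column}={lvl}": int(lvl == level) for lvl in levels})
    return encoded


# Hypothetical toy column with one missing entry.
causes = ["cardiovascular", None, "trauma"]
rows = one_hot_with_unknown(
    "donor_cause_of_death", causes, ["cardiovascular", "respiratory", "trauma"]
)
```

Each record ends up with exactly one indicator set to 1, so a missing value is carried into the models as its own category rather than being dropped.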

Practice pattern questionnaire

We used a questionnaire to determine the practice patterns used by the transplantation centers that contributed their data to the registry, including the center-specific practice patterns (e.g., method of iridectomy, instruments used during surgery, AC tamponade, and supine time) and any protocol changes that may have occurred within the data collection period. All practice pattern questionnaires were collected by the NTS and anonymized before delivery to the researchers.

The center-specific practice patterns were connected to the respective patients in the registry, and protocol changes that occurred in the period between January 2015 and December 2018 were taken into account. To reduce the potential effects of a surgical learning curve, the first 20 DMEK surgeries performed at each center were removed from the dataset4.
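
The learning-curve exclusion can be sketched as follows. This is a minimal illustration, not the code used in the study: the record fields `center`, `procedure`, and `date` are hypothetical names, and the sketch assumes that "first" means earliest by surgery date within each center.

```python
from collections import defaultdict
from datetime import date


def drop_learning_curve(records, n_first=20):
    """Remove the first `n_first` DMEK procedures (by date) at each center."""
    seen = defaultdict(int)  # number of DMEK procedures seen so far, per center
    kept = []
    for rec in sorted(records, key=lambda r: r["date"]):
        if rec["procedure"] == "DMEK":
            seen[rec["center"]] += 1
            if seen[rec["center"]] <= n_first:
                continue  # still within the learning-curve window
        kept.append(rec)
    return kept


# Toy data; n_first=2 is used here only to keep the example small.
records = [
    {"center": "A", "procedure": "DMEK", "date": date(2016, 1, 5)},
    {"center": "A", "procedure": "DSEK", "date": date(2016, 1, 6)},
    {"center": "A", "procedure": "DMEK", "date": date(2016, 2, 1)},
    {"center": "A", "procedure": "DMEK", "date": date(2016, 3, 1)},
    {"center": "B", "procedure": "DMEK", "date": date(2016, 1, 20)},
]
kept = drop_learning_curve(records, n_first=2)
```

DSEK procedures are never removed, and a center with fewer DMEK procedures than the cutoff contributes no DMEK cases at all.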

Machine learning approach

The primary outcome measure of this study was postoperative graft detachment, which was defined as the occurrence of an intervention to re-adhere the graft (i.e., the incidence of rebubbling) reported in the NOTR. The dataset was divided into a training set and a test set, comprising 70% and 30% of the dataset, respectively. The training set was used to develop a suitable model based on the predictive variables identified, and the test set was used to validate the model. The following three machine learning models were built: an L1-regularized logistic regression using the least absolute shrinkage and selection operator (lasso), a classification tree analysis (CTA), and random forest classification (RFC).
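
A 70/30 split of this kind can be sketched as a seeded random partition of record indices. The text does not state whether the split was stratified by outcome or which random seed was used, so the plain random shuffle, the seed, and the function name below are assumptions.

```python
import random


def train_test_split_indices(n_records, test_fraction=0.30, seed=0):
    """Randomly partition record indices into a training and a test set."""
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_test = round(n_records * test_fraction)
    return indices[n_test:], indices[:n_test]


# 3464 is the number of cases included in the machine learning analysis.
train_idx, test_idx = train_test_split_indices(3464)
```

The test indices are set aside before any model fitting or resampling, so that evaluation is performed on records the models have not seen.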

These three models were chosen for the following reasons. Given the 91 predictors, a simple logistic regression analysis with graft detachment as the outcome is computationally difficult or even impossible. The lasso model is a special form of logistic regression in which the estimated regression coefficients are shrunken towards zero relative to the unpenalized maximum likelihood estimates. As a result, some coefficients will be exactly zero, which leads to the selection of a subset of the most predictive variables for graft detachment. However, the lasso can only detect linear relations between the predictors and the outcome. To detect non-linear relationships and higher-order relationships among the explanatory variables, we used CTA and RFC. The CTA partitioned the training dataset based on outcome (i.e., graft detachment/no graft detachment) using a series of successive splits (i.e., nodes)48. For each split, the explanatory variable that best partitioned the records was chosen based on accuracy, until the set could not be split further. To reduce over-fitting, pruning was performed using fivefold cross-validation, thus removing nodes that did not improve the accuracy of the tree. In the final tree, each leaf node was assigned the class with the highest frequency among its records, and each record reaching the node was predicted as being in that class. Although classification trees are very useful for detecting higher-order and non-linear relations, they are not very robust, meaning that small changes in the data can result in large changes in the final estimated tree. RFC leads to a more robust classification model by building a large number of classification trees and, at each split, considering only a random sample of the entire set of explanatory variables as split candidates. The resulting trees were combined by taking a majority vote, and the overall prediction was the most frequently occurring class among all predictions. By forcing each split to consider only a subset of variables, the RFC analysis overcomes the potential problem of one or more strong predictors dominating the solution, thus rendering the average of the trees less variable and therefore more reliable. In this study, 1000 trees were used to build the RFC model.
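
To illustrate why an L1 penalty sets some coefficients to exactly zero, the sketch below applies the soft-thresholding operator, the per-coefficient update that appears in coordinate-descent solvers for L1-penalized regression. The solver used in this study is not specified, and a full logistic lasso fit wraps this step in an iterative procedure, so the sketch shows only the thresholding step. The coefficient values and the penalty `lam` are hypothetical.

```python
def soft_threshold(beta, lam):
    """Shrink `beta` towards zero by `lam`; return exactly 0.0 if |beta| <= lam."""
    if beta > lam:
        return beta - lam
    if beta < -lam:
        return beta + lam
    return 0.0


# Hypothetical unpenalized coefficients for four predictors.
unpenalized = [1.5, -0.8, 0.3, -0.1]
shrunken = [soft_threshold(b, lam=0.5) for b in unpenalized]

# Predictors whose coefficient survives the penalty form the selected subset.
selected = [i for i, b in enumerate(shrunken) if b != 0.0]
```

Coefficients smaller in magnitude than the penalty are removed entirely, while the remaining ones are shrunk by a constant amount. This is how the lasso performs estimation and variable selection at the same time.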

The relatively low rate of graft detachment in the dataset resulted in a large imbalance between the two outcome categories (i.e., detachment versus no detachment) and can therefore affect the statistical model estimation and evaluation. To solve this imbalance, we performed random oversampling of examples (ROSE) for the lasso and the CTA49. The RFC model was trained in combination with weights to balance the outcome classes. No resampling techniques were used for the test set.
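
One common way to weight outcome classes is the "balanced" heuristic, in which each class receives a weight of n_samples / (n_classes × class count). This is the rule scikit-learn applies for `class_weight="balanced"`. Whether this particular scheme was used for the RFC model is not stated, so the sketch below is an assumption about the weighting, shown on toy labels.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency:
    n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Toy labels with 10% detachments (1) and 90% non-detachments (0).
labels = [1] * 10 + [0] * 90
weights = balanced_class_weights(labels)
```

With these weights, the total weight carried by each class is equal, so misclassifying a rare detachment costs proportionally more than misclassifying a non-detachment.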

Statistical analysis

We summarized all quantitative and qualitative variables, including the donor characteristics, recipient characteristics, procedure characteristics, postoperative events, and practice patterns. The prevalence of graft detachment was determined separately for DSEK and DMEK procedures. All statistical analyses were performed using the R statistical software package version 4.0.5 (Comprehensive R Archive Network, Vienna, Austria), except for the RFC, which was performed in Python version 3.8 using the scikit-learn package version 0.24.1 (Python Software Foundation. Python Language Reference, version 2.7).

The machine learning models were evaluated using the test set. The predicted outcome was compared with the observed outcome reported in the NOTR (i.e., the ground truth) by calculating the sensitivity, specificity, and the area under the curve (AUC). As different machine learning methods were used, the models were expected to diverge to some extent, and a qualitative analysis of the model outcomes was therefore performed. The overlap between models was used to identify factors associated with an increased or decreased risk.
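
The three evaluation metrics can be computed as in the following minimal sketch. The toy labels, predicted scores, and the 0.5 classification threshold are hypothetical. The AUC is computed here through its rank interpretation, i.e., the probability that a randomly chosen detachment case receives a higher score than a randomly chosen non-detachment case.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels coded 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy test set: 1 = detachment, 0 = no detachment.
y_true = [1, 1, 0, 0, 0, 0]
scores = [0.9, 0.4, 0.6, 0.3, 0.2, 0.1]
y_pred = [int(s >= 0.5) for s in scores]  # hypothetical threshold

sens, spec = sensitivity_specificity(y_true, y_pred)
area = auc(y_true, scores)
```

Sensitivity and specificity depend on the chosen threshold, whereas the AUC summarizes ranking performance across all thresholds. This is why the AUC is the more convenient figure for comparing the three models.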

Data availability

The data that support the findings of this study are available from the Netherlands Organ Transplant Registry but restrictions apply to the availability of these data, which were used under license for the current study, and are not publicly available.

References

Price, F. W., Feng, M. T. & Price, M. O. Evolution of endothelial keratoplasty: Where are we headed?. Cornea 34, S41–S47 (2015).

Nanavaty, M. A., Wang, X. & Shortt, A. J. Endothelial keratoplasty versus penetrating keratoplasty for Fuchs endothelial dystrophy. Cochrane Database Syst. Rev. https://doi.org/10.1002/14651858.CD008420.pub3 (2014).

Parker, J., Parker, J. S. & Melles, G. R. Descemet membrane endothelial keratoplasty—a review. US Ophthal. Rev. 06, 29 (2013).

Dunker, S. L. et al. Descemet membrane endothelial keratoplasty versus ultrathin descemet stripping automated endothelial keratoplasty: A multicenter randomized controlled clinical trial. Ophthalmology 127, 1152–1159 (2020).

Stuart, A. J., Romano, V., Virgili, G. & Shortt, A. J. Descemet’s membrane endothelial keratoplasty (DMEK) versus Descemet’s stripping automated endothelial keratoplasty (DSAEK) for corneal endothelial failure. Cochrane Database Syst. Rev. 2018, (2018).

Li, S. et al. Efficacy and safety of Descemet’s membrane endothelial keratoplasty versus Descemet’s stripping endothelial keratoplasty: A systematic review and meta-analysis. PLoS ONE 12, 1–21 (2017).

Singh, A., Zarei-Ghanavati, M., Avadhanam, V. & Liu, C. Systematic review and meta-analysis of clinical outcomes of descemet membrane endothelial keratoplasty versus descemet stripping endothelial keratoplasty/descemet stripping automated endothelial keratoplasty. Cornea 36, 1437–1443 (2017).

Terry, M. A. et al. Donor, recipient, and operative factors associated with graft success in the cornea preservation time study. Ophthalmology 125, 1700–1709 (2018).

Terry, M. A., Shamie, N., Chen, E. S., Hoar, K. L. & Friend, D. J. Endothelial keratoplasty. A simplified technique to minimize graft dislocation, iatrogenic graft failure, and pupillary block. Ophthalmology 115, 1179–1186 (2008).

Baydoun, L. et al. Endothelial survival after Descemet membrane endothelial keratoplasty: Effect of surgical indication and graft adherence status. JAMA Ophthalmol. 133, 1277–1285 (2015).

Dirisamer, M. et al. Prevention and management of graft detachment in Descemet membrane endothelial keratoplasty. Arch. Ophthalmol. 130, 280–291 (2012).

Dunker, S. et al. Rebubbling and graft failure in Descemet membrane endothelial keratoplasty: A prospective Dutch registry study. Br. J. Ophthalmol. 1–7 (2021) https://doi.org/10.1136/bjophthalmol-2020-317041.

Price, M. O., Baig, K. M., Brubaker, J. W. & Price, F. W. Randomized, prospective comparison of precut vs surgeon-dissected grafts for Descemet stripping automated endothelial keratoplasty. Am. J. Ophthalmol. 146, (2008).

Rickmann, A. et al. Precut DMEK using dextran-containing storage medium is equivalent to conventional DMEK: A prospective pilot study. Cornea 38, 24–29 (2019).

Koechel, D., Hofmann, N., Unterlauft, J. D., Wiedemann, P. & Girbardt, C. Descemet membrane endothelial keratoplasty (DMEK): clinical results of precut versus surgeon-cut grafts. Graefe’s Arch. Clin. Exp. Ophthalmol. 259, 113–119 (2021).

Chen, E. S., Terry, M. A., Shamie, N., Hoar, K. L. & Friend, D. J. Precut tissue in Descemet’s stripping automated endothelial keratoplasty. Donor characteristics and early postoperative complications. Ophthalmology 115, 497–502 (2008).

Oellerich, S. et al. Parameters associated with endothelial cell density variability after Descemet membrane endothelial keratoplasty. Am. J. Ophthalmol. 211, (2020).

Rodríguez-Calvo De Mora, M. R. et al. Association between graft storage time and donor age with endothelial cell density and graft adherence after Descemet membrane endothelial keratoplasty. JAMA Ophthalmol. 134, 91–94 (2016).

Price, F. W. & Price, M. O. Descemet’s stripping with endothelial keratoplasty in 200 eyes. Early challenges and techniques to enhance donor adherence. J. Cataract Refract. Surg. 32, 411–418 (2006).

Droutsas, K. et al. Comparison of endothelial cell loss and complications following DMEK with the use of three different graft injectors. Eye 32, 19–25 (2018).

Sati, A., Moulick, P. S., Sharma, V. & Shankar, S. Sheets glide-assisted versus Busin glide-assisted insertion techniques for Descemet stripping endothelial keratoplasty (DSEK): A comparative analysis. Med. J. Armed Forces India 75, 370–374 (2019).

Tourtas, T. et al. Graft adhesion in Descemet membrane endothelial keratoplasty dependent on size of removal of host’s Descemet membrane. JAMA Ophthalmol. 132, 155–161 (2014).

Müller, T. M. et al. Histopathologic features of Descemet membrane endothelial keratoplasty graft remnants, folds, and detachments. Ophthalmology 123, 2489–2497 (2016).

Sykakis, E., Lam, F. C., Georgoudis, P., Hamada, S. & Lake, D. Patients with Fuchs endothelial dystrophy and cataract undergoing Descemet stripping automated endothelial keratoplasty and phacoemulsification with intraocular lens implant: Staged versus combined procedure outcomes. J. Ophthalmol. 2015, (2015).

Terry, M. A. et al. Endothelial keratoplasty for Fuchs’ dystrophy with cataract. Complications and clinical results with the new triple procedure. Ophthalmology 116, 631–639 (2009).

Chaurasia, S., Price, F. W., Gunderson, L. & Price, M. O. Descemet’s membrane endothelial keratoplasty: Clinical results of single versus triple procedures (combined with cataract surgery). Ophthalmology 121, 454–458 (2014).

Leon, P. et al. Factors associated with early graft detachment in primary Descemet membrane endothelial keratoplasty. Am. J. Ophthalmol. 187, 117–124 (2018).

Dapena, I. et al. Standardized “no-touch” technique for Descemet membrane endothelial keratoplasty. Arch. Ophthalmol. 129, 88–94 (2011).

Santander-García, D. et al. Influence of intraoperative air tamponade time on graft adherence in Descemet membrane endothelial keratoplasty. Cornea 38, 166–172 (2019).

Schmeckenbächer, N., Frings, A., Kruse, F. E. & Tourtas, T. L. Role of initial intraocular pressure in graft adhesion after Descemet membrane endothelial keratoplasty. Cornea 36, 7–10 (2017).

Pilger, D., Wilkemeyer, I., Schroeter, J., Maier, A. K. B. & Torun, N. Rebubbling in Descemet membrane endothelial keratoplasty: Influence of pressure and duration of the intracameral air tamponade. Am. J. Ophthalmol. 178, 122–128 (2017).

Siebelmann, S. et al. Graft detachment pattern after Descemet membrane endothelial keratoplasty comparing air versus 20% SF6 tamponade. Cornea 37, 834–839 (2018).

Srirampur, A. & Mansoori, T. Comment on: ‘Influence of lens status on outcomes of Descemet membrane endothelial keratoplasty’. Cornea 38, e35–e36 (2019).

Norgeot, B., Glicksberg, B. S. & Butte, A. J. A call for deep-learning healthcare. Nat. Med. 25, 14–18 (2019).

Raghupathi, W. & Raghupathi, V. Big data analytics in healthcare: Promise and potential. Health Inf. Sci. Syst. 2, 1–10 (2014).

Nahum, Y., Leon, P., Mimouni, M. & Busin, M. Factors associated with graft detachment after primary Descemet stripping automated endothelial keratoplasty. Cornea 36, 265–268 (2017).

Dunker, S. L. et al. Real-world outcomes of DMEK: A prospective Dutch registry study. Am. J. Ophthalmol. 222, 218–225 (2021).

Maier, A. K. B. et al. Influence of the difficulty of graft unfolding and attachment on the outcome in Descemet membrane endothelial keratoplasty. Graefe’s Arch. Clin. Exp. Ophthalmol. 253, 895–900 (2015).

Woo, J. H., Ang, M., Htoon, H. M. & Tan, D. Descemet membrane endothelial keratoplasty versus Descemet stripping automated endothelial keratoplasty and penetrating keratoplasty. Am. J. Ophthalmol. 207, 288–303 (2019).

Parekh, M. et al. Graft detachment and rebubbling rate in Descemet membrane endothelial keratoplasty. Surv. Ophthalmol. 63, 245–250 (2018).

Benage, M. et al. Intraoperative fibrin formation during Descemet membrane endothelial keratoplasty. Am. J. Ophthalmol. Case Rep. 18, 100686 (2020).

Terry, M. A. et al. Standardized DMEK technique: Reducing complications using prestripped tissue, novel glass injector, and sulfur hexafluoride (SF6) gas. Cornea 34, 845–852 (2015).

Marques, R. E. et al. Sulfur hexafluoride 20% versus air 100% for anterior chamber tamponade in DMEK: A meta-analysis. Cornea 37, 691–697 (2018).

Siebelmann, S. et al. The Cologne rebubbling study: A reappraisal of 624 rebubblings after Descemet membrane endothelial keratoplasty. Br. J. Ophthalmol. https://doi.org/10.1136/bjophthalmol-2020-316478 (2020).

Deng, S. X. et al. Descemet membrane endothelial keratoplasty: Safety and outcomes: A report by the American Academy of Ophthalmology. Ophthalmology 125, 295–310 (2018).

Muijzer, M. B., Soeters, N., Godefrooij, D. A., van Luijk, C. M. & Wisse, R. P. L. Intraoperative optical coherence tomography-assisted Descemet membrane endothelial keratoplasty. Cornea 00, 1 (2020).

Titiyal, J. S., Kaur, M., Falera, R., Jose, C. P. & Sharma, N. Evaluation of time to donor lenticule apposition using intraoperative optical coherence tomography in Descemet stripping automated endothelial keratoplasty. Cornea 35, 477–481 (2016).

James, G., Witten, D., Hastie, T. & Tibshirani, R. An Introduction to Statistical Learning: with Applications in R (Springer, 2013).

Menardi, G. & Torelli, N. Training and assessing classification rules with imbalanced data. Data Min. Knowl. Discov. 28, 92–122 (2014).

Acknowledgements

The authors would like to thank the members of the Netherlands Corneal Transplantation Network (NCTN) for sharing their practice patterns and for their data registration, and Mrs Cynthia Konijn of the Dutch Transplant Foundation (NTS), Leiden, the Netherlands, for processing our data application and anonymizing the questionnaires.

Funding

This research was financially supported by unrestricted grants from the Dr. F.P. Fischer Foundation, Stichting Vrienden van het UMC Utrecht, and Carl Zeiss Meditec AG, and by an applied data science grant from Utrecht University.

Author information

Contributions

M.B.M.: conceptualization, methodology, data collection, data curation, formal analysis, writing—original draft; C.M.W.H.: data curation, formal analysis, writing—review and editing; L.E.F.: methodology, data curation, formal analysis, writing—review and editing; G.V.: methodology, data curation, formal analysis, writing—review and editing; R.P.L.W.: conceptualization, methodology, writing—review and editing, supervision; Corneal Transplantation Network: data collection.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Muijzer, M.B., Hoven, C.M.W., Frank, L.E. et al. A machine learning approach to explore predictors of graft detachment following posterior lamellar keratoplasty: a nationwide registry study. Sci Rep 12, 17705 (2022). https://doi.org/10.1038/s41598-022-22223-y

DOI: https://doi.org/10.1038/s41598-022-22223-y