Abstract

Blocking facial mimicry can disrupt recognition of emotion stimuli. Many previous studies have focused on facial expressions, and it remains unclear whether this generalises to other types of emotional expressions. Furthermore, by emphasizing categorical recognition judgments, previous studies neglected the role of mimicry in other processing stages, including dimensional (valence and arousal) evaluations. In the study presented herein, we addressed both issues by asking participants to listen to brief non-verbal vocalizations of four emotion categories (anger, disgust, fear, happiness) and neutral sounds under two conditions. In one condition, facial mimicry was blocked by creating constant tension on the lower face muscles; in the other, facial muscles remained relaxed. After each stimulus presentation, participants evaluated the sound’s category, valence, and arousal. Although the blocking manipulation did not influence emotion recognition, it led to higher valence ratings in a non-category-specific manner, including neutral sounds. Our findings suggest that somatosensory and motor feedback play a role in the evaluation of affect vocalizations, perhaps introducing a directional bias. This distinction between stimulus recognition, stimulus categorization, and stimulus evaluation is important for understanding which cognitive and emotional processing stages involve somatosensory and motor processes.

Introduction

Dozens of behavioral studies have consistently corroborated Adam Smith’s1 insight that human beings tend to mimic, or imitate, observed behavior. This phenomenon includes body postures2, hand gestures3, head movements4, voice parameters5, pupils6, and facial expressions7,8. Moreover, because the execution of a congruent behavior facilitates recognition of the perceived one9,10,11,12,13, the current understanding of motor imitation suggests that it extends beyond being a simple by-product of stimulus–response associations14.

Here, we focus on facial mimicry; namely, the tendency to spontaneously imitate facial expressions of other people7,15,16,17. Although in most cases, facial mimicry is too weak to be detected by the naked eye, numerous electromyographic studies have demonstrated that even passively observing facial expressions of emotions can activate the observers’ relevant muscles in an automated, rapid, and emotion-specific manner18,19.

Importantly, a large body of research employing causal paradigms has revealed that blocking spontaneous facial activity disrupts recognition of emotional categories displayed on perceived faces, which suggests, at least, a facilitative role of imitation20,21,22,23,24. Recently, the effect of blocking facial expressions has been shown to generalise to the recognition of body expressions25. The results of these causal interventions are predominantly interpreted in line with the sensorimotor simulation account26,27 that emerged within embodied psychology28,29. However, since the existing evidence comes predominantly from tasks that display emotions visually and focus on categorical judgements, our knowledge of emotions expressed through non-visual channels remains scarce. Thus, the main purpose of the present study was to investigate the causal involvement of facial mimicry in recognizing emotional categories of human affect vocalizations, their valence, and arousal.

Embodiment and simulation

Over the last four decades, numerous researchers have extensively argued that the body (including its brain representation) both constrains and facilitates cognition. As a result, higher cognition has begun to be framed as at least partially grounded in sensorimotor activity30,31,32. This view is popular in many fields of psychological science, including social cognition28,29. Although the scope of the embodiment33,34,35,36,37,38, and its methodological commitments39,40,41,42 are under extensive discussion, numerous researchers in the field agree that at least some aspects of conceptual understanding rely on a mechanism of simulation26,27,43. These simulations involve reenactments of sensorimotor and somatosensory networks of the brain that are primarily active during physical interactions with exemplars of a given category. Notably, proponents of embodied cognition argue that spontaneous imitation, or automatic mimicry, can reflect sensorimotor simulation, and thus, is involved in the conceptual processing of emotion28,44,45.

Various studies have demonstrated that spontaneous mimicry plays a causal role in face processing44. Specifically, these studies have focused on how manipulating the activity of facial muscles or somatosensory areas influences the recognition of several aspects of emotional expressions. For example, the study using the “pen procedure” by Oberman, Winkielman, and Ramachandran20 revealed that manipulating the muscular activity of the lower part of the face (holding a pen with the teeth) selectively impaired recognition of happy and disgusted emotional faces, that is, of emotions that typically strongly activate the muscles affected by the procedure. Along similar lines, Ponari and colleagues23 found hindered recognition of facial expressions of happiness and disgust during increased tonic activity of the lower face muscles (holding chopsticks with the teeth) and impaired recognition of angry facial expressions while the brow area was activated (drawing together two little stickers placed close to the inner edge of the eyebrows). Judgments of fear expressions were influenced independently of which area of the perceiver’s face was manipulated.

The selectivity of the mimicry blocking effect is also an argument against the explanation that the observed reduction in accuracy is merely due to increased cognitive load in blocking conditions. Corroborating results have also been obtained in other behavioral studies22,24,44,46. The role of sensorimotor processes in face perception has also been examined in studies on the effects of brain damage or temporary deactivation of brain regions under laboratory conditions. Both lesions within the sensorimotor areas47 and transitory inactivation of the somatosensory face-related regions by repetitive TMS disrupted face recognition48 and judgments of whether a presented smile reflected genuine amusement49.

Importantly, Borgomaneri and colleagues25 investigated whether the effect of disrupting facial mimicry goes beyond hindering the recognition of facial expressions. They found that blocking facial mimicry (“pen procedure”) in perceivers disturbs their recognition of happiness not only when it is portrayed on faces but also when it is expressed with whole-body postures. This suggests that facial mimicry is not engaged solely in processing others’ facial expressions but is a part of the conceptual processing of emotion. This is consistent with the study by Connolly and colleagues50, who showed that, instead of multiple domain-specific factors, there is a supramodal emotion recognition ability that is linked to the recognition of both facial and bodily expressions. They demonstrated that this ability also generalises across modalities and is linked to the recognition of emotional vocalizations. The latter has also been suggested by Hawk and colleagues51, who explored the effect of cross-channel mimicry. They tested the impact of facial responses on the processing of emotional human vocalizations. The study presented participants with sounds that gradually transitioned from laughing to crying and vice versa. The participants’ task was to detect the moment of change of expression while holding the pen in one of two ways: in their teeth or in their hands. The results contradicted earlier studies using visual stimuli, which reported poorer or slower performance with mimicry blocking manipulations21. Specifically, the study by Hawk and colleagues revealed that the inhibition of spontaneous facial responses sped up the detection of change, as compared to when facial movements were not blocked. The authors interpreted this finding by suggesting that in a default, uninhibited condition, cross-channel mimicry of heard expressions strengthens the focus of attention on, and engagement with, the mimicked expression, which typically leads to slower responses when an opposite emotion appears.

Overall, the above results of causal interventions offer a persuasive argument against the view that sensorimotor activations during perception of emotional stimuli are just a by-product with no causal role. On the one hand, our knowledge regarding the generality of sensorimotor simulation as a mechanism supporting emotion processing remains limited because the discussed studies predominantly employed visual stimuli. On the other hand, the above-summarized studies primarily investigated the recognition of distinct emotion categories. While there is a growing consensus that valence and arousal are more fundamental dimensions of emotions than such categories52,53,54, they are still understudied in the embodied simulation account. The first of these dimensions, valence (negative–positive), is interpreted as a qualitative aspect of emotion, while the second, arousal, is interpreted as its intensity (low–high). In a nutshell, the approach defended by Russell and Barrett54 assumes that an emotion has a core component that is sufficiently defined by its valence and arousal dimensions, as well as additional elements, influenced by particular circumstances, which include appraisals, behavioural reactions, the categorization of the experienced emotion, etc. Hence, the dimensional aspect of emotion is more fundamental and requires fewer cognitive operations than emotional categorization and conceptual processing. Additionally, this theoretical approach is uniquely useful for research involving physiological measurement, as the elements of the core component, namely the arousal and valence dimensions, have been shown to have very reliable physiological correlates55,56.

The study that addressed the issue of the impact of facial expression on valence and arousal ratings was conducted by Hyniewska and Sato57 in the context of the facial feedback hypothesis. They found that when participants activated the zygomaticus major following the instruction (“raise the cheeks”), they rated emotional facial expressions, both static and dynamic, as more positive compared to activating the corrugator supercilii (“lower the brows”). Consistently, many previous studies on facial feedback have shown that producing relevant facial movements may also impact various aspects of cognitive processing, including affective evaluation of emotional material58,59,60,61, memory of valence-consistent stimuli62, perceived valence of observed facial expressions57, and their intensity63. Moreover, the very act of producing facial expressions has been shown to evoke a corresponding emotional experience, including physiological reactions similar to actual emotions64. As summarized in a recent review, the effects of such facial feedback manipulations are overall robust, but “small and variable”60.

Nevertheless, to the best of our knowledge, the dimensions of valence and arousal were ignored in the studies employing causal interventions such as “the pen procedure”20, aiming to investigate the mechanism of sensorimotor simulation. This is not only important methodologically, but also theoretically because categorical tasks, such as assigning a stimulus to a category, rely on different cognitive skills than evaluative judgments on valence and arousal dimensions that can pick up broader changes in affective processes.

Objectives and hypotheses of the present study

To sum up the theoretical and empirical background leading to the present study, numerous proponents of embodied cognition argue that facial mimicry (automatic imitation) is linked to sensorimotor simulation that supports recognition of perceived emotional stimuli28,29,44. Previous studies, whose aim was to investigate the causal role of facial responses in recognition of emotions, focused predominantly on visual modality, and a majority of them employed images of facial expressions as stimuli20,21,22,23,24,46,47,48,49. Only a handful of studies included different kinds of stimuli like bodily expressions25 or emotional vocalizations51. Moreover, this research focused primarily on distinct emotional categories (e.g., “happy”), neglecting dimensions of valence and arousal (but see57).

The aim of the present study was to investigate whether blocking facial mimicry influences recognition of emotional sounds, and the perception of their intensity, valence, and arousal. In our study, we addressed the problem of the generality of the effect of mimicry in two ways. First, we investigated whether mimicry is involved only in processing facial displays or whether it might be constitutive for processing emotional expressions associated with emotion concepts. In other words, we intended to investigate the causal involvement of mimicry as a reaction to the affective meaning of a stimulus, which is in line with the sensorimotor simulation account. Secondly, we aimed to investigate whether mimicry might be involved in the recognition process only or also in the evaluative judgments.

Participants listened to brief non-verbal vocalizations of four emotion categories (anger, disgust, fear, happiness) and neutral sounds. Stimuli were selected from the Montreal Affective Voices database (henceforth MAV65), designed to be analogous to the so-called “Ekman faces”66,67. In the mimicry blocking condition of our study, participants performed the task while holding the chopsticks horizontally between their teeth with their mouth closed around them. It is important to note that this frequently used manipulation leads to an elevated, constant, non-specific muscle activity in the lower part of the face. This is unlike some other manipulations, such as Botox, that prevent any activity in the region of the face68. In the control condition, participants held the chopsticks horizontally between their lips, in front of their teeth, keeping the lower face relaxed (modeled after Davis, Winkielman, and Coulson’s study69). After each stimulus presentation, participants evaluated the sound on seven visual analog scales: five regarding the extent to which the presented vocalization expressed each category (anger, disgust, fear, happiness, and neutral), plus one each for its valence and arousal.

Biting chopsticks creates a constant muscular tension, and as such interferes with the dynamic response to the stimuli of the zygomaticus major, the facial muscle activated while smiling. Accordingly, we expected to find poorer emotion recognition, particularly of happy vocalizations, in the blocking condition (in comparison to the control condition), analogous to that observed in the previous studies with facial images as stimuli20,21,22,23,24. Additionally, we examined whether this manipulation might change the evaluation of intensity, valence, and arousal of presented emotional vocalizations57.

Method

Participants

The study had 60 participants; one of them was excluded from the statistical analyses for not following the experimental procedure. The mean age of the remaining 59 participants was 25.2 years (SD = 4.83; range 18–40), with 36 females and 23 males. All participants had normal or corrected-to-normal hearing, provided informed consent, and received 50 PLN (around 10 EUR) as a financial reward. All methods and procedures used in this study conformed to ethical guidelines for testing human participants. The study was approved by the Ethics Committee for Experimental Research at the Institute of Psychology, Jagiellonian University (decision: KE/27_2021).

Materials

We used 50 brief sounds: human nonverbal vocal expressions of four basic emotions (anger, disgust, fear, happiness) and neutral sounds (vowel /ɑ/ sung in one note). Ten stimuli per category were used. The sounds were selected from the freely available MAV database65 and lasted approximately 1 s. As indicated by the validation provided by the database authors, the sounds were characterized by high decoding accuracy (ranging from 80 to 90%) apart from the fear expressing sounds (57% accuracy), which were often confused with the surprise expressions. Additionally, angry vocalizations had the lowest valence ratings (16 on the 1–100 scale), followed by disgust (24), fear (24), neutral (47), and happy (85). As for arousal, angry and fear expressing sounds were rated as the most arousing (both 72), followed by happy (57), disgust (36), and neutral (32) expressions.

Procedure

Using facial electromyography, we checked with three participants whether the mimicry blocking manipulation had the intended effect on the activity of the zygomaticus major. We expected that the blocking condition, which involved holding the chopsticks horizontally between the teeth with the mouth closed around them, would constantly increase muscle activity, thus preventing spontaneous mimicry, compared to the control condition, in which the muscles remained relaxed, allowing spontaneous mimicry.

Upon arrival at the laboratory, the experimenter presented participants with the general purpose of the study and explained the procedures. Then, participants gave their informed consent. Next, they went through the training, during which they learned the details of the task and practiced the elements of the experimental procedure.

The experimental procedure consisted of two blocks. In the experimental block (mimicry blocking condition), participants were asked to hold the chopsticks horizontally between their teeth with their mouths closed around them. In the control block, participants were asked to gently hold the chopsticks horizontally between their lips in front of their teeth, keeping the lower face relaxed. This method was modeled on the study by Davis, Winkielman, and Coulson69. There were 25 sounds in each block, and five stimuli of each emotion category were randomly assigned to each block. The order of the blocks was assigned pseudo-randomly so that half of the participants began with the experimental block and the other half with the control block.
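The block structure described above can be illustrated with a minimal sketch. It is not the experiment code: the stimulus file names, the alternation rule used for counterbalancing, and the shuffling of trial order within a block are all assumptions made for illustration, and the function name `assign_blocks` is hypothetical.

```python
import random

CATEGORIES = ["anger", "disgust", "fear", "happiness", "neutral"]


def assign_blocks(stimuli_by_category, participant_index, rng):
    """Split each category's sounds evenly between the two blocks
    and choose the block order for one participant."""
    blocks = {"blocking": [], "control": []}
    for category in CATEGORIES:
        sounds = list(stimuli_by_category[category])
        rng.shuffle(sounds)  # random assignment of sounds to blocks
        half = len(sounds) // 2
        blocks["blocking"] += sounds[:half]
        blocks["control"] += sounds[half:]
    for trials in blocks.values():
        # Assumption: trial order within a block is randomized.
        rng.shuffle(trials)
    # Assumption: counterbalance block order by alternating participants.
    if participant_index % 2 == 0:
        order = ["blocking", "control"]
    else:
        order = ["control", "blocking"]
    return order, blocks


# Hypothetical file names: ten sounds per category, 50 in total.
stimuli = {c: [f"{c}_{i:02d}.wav" for i in range(1, 11)] for c in CATEGORIES}
order, blocks = assign_blocks(stimuli, participant_index=0, rng=random.Random(1))
```

With ten sounds per category, each block receives five sounds from every category (25 in total), and no sound appears in both blocks.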

The participants’ task was to evaluate each sound on seven scales. The first five concerned the emotion category portrayed by a given sound. Participants were asked “To what extent did the sound express the following emotion?” for each of the five emotion categories. These scales were presented in random order. The sixth scale concerned valence (“To what extent were the emotions expressed by the sound negative or positive?”) and the seventh arousal (“To what extent were the emotions expressed by the sound arousing?”). Those two scales were always presented in the same order.

The answers were given on an unmarked visual analog scale ranging from 1 to 100. Verbal labels were at the extremities with “not at all” on the left and “extremely” on the right for emotion category expressed and arousal scale items, and “very negative” on the left and “very positive” on the right for a valence scale. Each scale was displayed on a separate screen. The training and the experimental procedure were run using the PsychoPy software70. The sounds were played through the headphones (Philips SHP2500) and the sound volume was self-adjusted by the participants before the main task, during the training procedure to be audible yet comfortable. Overall, the task lasted up to 30 min.

Results

Emotion intensity

To analyze the influence of the mimicry blocking condition on the perceived extent to which presented sounds expressed portrayed emotions (emotion intensity), we conducted a repeated measures analysis of variance (RM-ANOVA) with within-subject factors of emotion (5) and blocking condition (2) using IBM SPSS Statistics 27. In all cases where the sphericity assumption was violated, the results are reported with Huynh–Feldt correction. We used Bonferroni correction for pairwise comparisons. The analysis yielded a significant effect of emotion (F(4,232) = 11.78, p < 0.001, partial η2 = 0.169), but no significant difference between blocking and control conditions (F(1,58) = 0.45, p = 0.504), and no significant interaction of those factors (F(4,232) = 1.04, p = 0.389).
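For readers who wish to check the reported effect sizes, partial η2 can be recovered from an F statistic and its degrees of freedom using the standard identity partial η2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the emotion effect reported above; the function name is ours, not part of any statistics package.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Effect of emotion on intensity ratings: F(4,232) = 11.78
eta_emotion = partial_eta_squared(11.78, 4, 232)
print(round(eta_emotion, 3))  # 0.169, matching the reported value
```

The same relation applies to the other F tests reported in this section.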

Regarding the impact of emotion category, post hoc t-tests revealed that the relevant intensity rating was the lowest for angry sounds among all the categories (M = 62.12, SE = 2.04). Specifically, the score for angry vocalizations was lower than for disgust (M = 68.56, SE = 1.98, p = 0.031), fear (M = 76.5, SE = 1.97, p < 0.001), happiness (M = 76.32, SE = 2.55, p < 0.001), and neutral sounds (M = 77.54, SE = 2.36, p < 0.001). Happy vocalizations were rated as equally expressing the portrayed emotion as fear and disgust expressions, as well as neutral sounds. Intensity ratings for all portrayed emotions on each rating scale are presented in Table 1. Mean values and standard errors for emotion intensity are presented in Table 3. (See also Table S1 in the Supplementary Information for the intensity results divided into two mimicry blocking conditions).

Valence and arousal

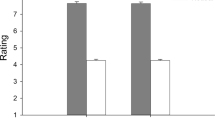

An analogous RM-ANOVA revealed a significant effect of mimicry blocking condition on valence (F(1,58) = 4.29, p = 0.043, partial η2 = 0.069), with lower overall ratings in the control condition (M = 37.13, SE = 0.89) than in the blocking condition (M = 38.91, SE = 0.82). The valence ratings also differed significantly depending on emotion (F(4,232) = 260.12, p < 0.001, partial η2 = 0.82). The interaction of the two factors was not significant (p = 0.961).

The expressions of happiness (M = 71.26, SE = 2.24) were rated as the most positive among all five categories (all differences p < 0.001). Further, neutral sounds (M = 38.02, SE = 1.33) significantly differed from all emotional expressions (all differences p < 0.001). Disgust expressions (M = 26.95, SE = 1.03) were rated as more negative than happy and neutral sounds, but less negative than fear expressions (M = 22.07, SE = 1.15, p < 0.001), and equally negative to the angry vocalizations (M = 23.86, SE = 1.21, p = 0.081).

There were consistent differences between the blocking conditions in terms of valence. While the mimicry was disrupted, ratings were higher in all emotion categories. However, the biggest difference was observed for neutral stimuli. Mean values and standard errors for all emotion categories divided into two blocking conditions are presented in Table 2.

Overall, arousal ratings did not differ between blocking conditions (p = 0.481). There was, however, a significant effect of emotion (F(4,232) = 173.84, p < 0.001, partial η2 = 0.75), while the interaction of blocking condition and emotion was not significant (p = 0.754).

Independent of the blocking condition, neutral sounds were rated as the least arousing (M = 23.29, SE = 1.87; all differences p < 0.001). Fear expressions (M = 69.56, SE = 1.76) were rated the highest, being rated as more arousing than the expressions of happiness (M = 61.54, SE = 1.83, p = 0.001) and disgust (M = 41.76, SE = 1.86, p < 0.001), and with marginal significance larger than the expressions of anger (M = 66.33, SE = 1.93, p = 0.054). The expressions of disgust were rated as less arousing than the expressions of anger, fear, and happiness (all differences p < 0.001). Mean values and standard errors for arousal ratings are presented in Table 3. (See also Table S2 in the Supplementary Information for the arousal ratings divided into two mimicry blocking conditions).

Emotion recognition

Emotion recognition scores were based on intensity ratings. For each participant and each stimulus, if the highest emotion intensity was attributed to the scale relevant for the portrayed emotion, it was considered a hit (correct recognition). Based on averaged hits, we then conducted a repeated measures ANOVA with blocking condition (2) and emotion (5) as within-subject factors.
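The scoring rule described above can be sketched as follows. This is an illustration rather than the analysis script: the data layout (one dictionary of five ratings per trial) is hypothetical, and the handling of ties is an assumption, since the text does not state how equal top ratings were treated.

```python
SCALES = ["anger", "disgust", "fear", "happiness", "neutral"]


def is_hit(ratings, portrayed):
    """True if the scale matching the portrayed emotion received
    the single highest intensity rating on this trial."""
    top = max(ratings[s] for s in SCALES)
    winners = [s for s in SCALES if ratings[s] == top]
    # Assumption: a tie for the top rating counts as a miss.
    return winners == [portrayed]


def recognition_rate(trials):
    """Proportion of hits over a list of (ratings, portrayed_emotion) trials."""
    hits = [is_hit(ratings, portrayed) for ratings, portrayed in trials]
    return sum(hits) / len(hits)


# Two made-up trials with angry sounds: one hit, one confusion with fear.
example_trials = [
    ({"anger": 70, "disgust": 20, "fear": 40, "happiness": 1, "neutral": 5}, "anger"),
    ({"anger": 55, "disgust": 30, "fear": 65, "happiness": 2, "neutral": 4}, "anger"),
]
example_rate = recognition_rate(example_trials)  # 0.5
```

Averaging such hit proportions per participant, emotion, and blocking condition gives the cell values that enter the repeated measures ANOVA.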

Recognition rates varied depending on emotion category of the sound (F(4,232) = 24.035, p < 0.001, partial η2 = 0.293) but did not significantly differ between blocking conditions (F(1,58) = 0.208, p = 0.65). Additionally, the interaction of emotion and blocking condition was not significant (F(4,232) = 0.509, p = 0.729). Regarding the emotion category, angry vocalizations had lower recognition rates than any other category of vocalizations (all p < 0.001). Additionally, recognition rate of disgust was lower than that of happy (p = 0.002) and neutral sounds (p = 0.041). Fear vocalizations were recognized as accurately as vocalizations of disgust (p = 1.0), happiness (p = 0.102), and neutral sounds (p = 0.231). Mean values and standard errors for recognition scores are presented in Table 3. (See also Table S3 in the Supplementary Information for the recognition scores divided into two mimicry blocking conditions).

Discussion

The main goal of the current study was to test whether disrupting spontaneous facial responses would influence the perception of emotional human vocalizations. The generalisation of the mimicry effect to auditory modality would suggest that sensorimotor processes engaged in face recognition are more broadly involved in recognizing emotional expressions. Moreover, we were interested in whether mimicry would be causally involved only in the recognition process or also in the more fundamental dimensional judgements. To this end, we focused on emotion recognition and the perception of stimuli’s intensity, valence, and arousal. We hypothesized that blocking spontaneous facial responses would lead to lower recognition scores for happy vocalization. Additionally, we expected that the mimicry blocking manipulation would also influence the evaluation of the intensity, valence, and arousal of emotional sounds.

Contrary to our predictions, disrupting the activity of the lower face muscles did not influence the recognition of vocalizations of happiness or of any other emotion category. Further, blocking mimicry did not significantly impact the perceived intensity and arousal of human affect vocalizations. Nevertheless, we observed a difference in judged valence between the conditions. When facial movements were disrupted, sounds were rated more positively than in the control condition; however, it is worth noting that the influence was minor and negative sounds still remained below the midpoint of the scale.

Facial activity and recognition of emotional sounds

Numerous studies have shown that disrupting facial activity impacts the recognition of facial emotional expressions20,21,22,23,24,25. This effect is elucidated within the embodied simulation framework28,29,44. Since what is being simulated is not the expression on its own but an affective meaning of the expression44,71, we predicted that disrupting facial activity would also hinder the recognition of vocal portrayals of emotions.

Consistently, individual studies have suggested that interfering with spontaneous mimicry affects the recognition of expressions in other modalities. Recently, Borgomaneri et al.25 found that blocking mimicry disrupts the recognition of both facial and whole-body expressions of emotions. Regarding the auditory channel, Hawk and colleagues’ study51 revealed that blocking spontaneous activity of lower-face muscles using the “pen procedure” (based on Strack, different from ours) influenced the time needed to spot the moment of transition between crying and laughing. However, their effects were non-intuitive and differed from those found in research on transitions between facial expressions, where blocking leads to slower, less accurate performance21,68. In Hawk et al.’s study51, “blocked” participants performed the auditory task faster than participants able to move their faces freely.

Our study, however, did not corroborate these earlier findings. Interfering with the spontaneous activity of lower-face muscles did not have any selective or general impact on the accuracy of recognition of emotions portrayed with human affective vocalization. There might be several reasons for not observing the predicted effect. Somatosensory simulation, a postulated mechanism for conceptual processing26,27, consists of the selected brain and peripheral activations present during our past encounters with examples of a given category, including emotion concepts71. Peripheral activity, such as facial movements, does not have to be present at or involved in every instance of emotion recognition. Simulation is thought to be a flexible process, influenced by various factors27,72. It has been found to be of particular importance during the perception of expressions that are ambiguous or perceptually demanding46,68. In the case of our study, the vocalizations might have been too clear, hence requiring less mental processing. Further, certain social factors have been shown to be linked to facial responses to emotional expressions, including one’s motivation to bond and understand15,73. However, although some studies have indicated several factors affecting mimicry, it is still a challenge for the embodied cognition account to provide grounds for precise predictions as to when facial mimicry should occur and play a causal role in recognizing emotional expressions. This challenge is not unique to emotion categories or concepts but applies more generally to understanding the ways in which more abstract concepts (including “anger” or “happiness”) relate to specific motor activity36,37,38,43 and translate into evaluative judgments74.

Facial activity and valence perception

Interestingly, our study found that facial manipulation led to higher valence ratings in a non-category-specific manner, including neutral sounds. This is methodologically important and theoretically interesting and sheds light on the existing literature. Methodologically, it shows that our mimicry blocking manipulation (which involved slightly and constantly biting on the chopsticks) was effective. Theoretically, to the best of our knowledge, no study using this specific “pen procedure” to block spontaneous muscle activity has yet investigated valence, only recognition judgements. The only related study that focused specifically on valence and arousal judgments was conducted by Hyniewska and Sato57. They presented participants with pictures of happy and angry facial expressions, and the participants’ task was to judge the expressions on valence and arousal scales while voluntarily contracting muscles following the task instruction (lower brows or raise cheeks). The authors found that raising the cheeks resulted in higher valence scores of both happy and angry expressions in comparison to the brow-lowering condition. Arousal was not influenced by the manipulation. Analogous to our experiment, the valence effect was not specific to one emotion category; namely, both happy and angry expressions received higher valence scores under the cheek-raising condition. The authors concluded that their results demonstrate the facial feedback effect58,60,61. The lack of neutral faces in Hyniewska and Sato’s study57 does not allow us to see whether the effect of facial manipulation would hold for neutral faces, as we observed for neutral stimuli in our experiment. Our study suggests that facial activity might not always be necessary for determining emotion category; however, it may play some role in evaluating expressions’ valence63.

Evaluation of emotional vocalizations

All measured indices in this experiment varied depending on the emotion category represented by vocalizations. Negative emotions were characterized by the lowest recognizability. Specifically, angry vocalizations were attributed to the relevant category with the smallest degree of accuracy among all tested emotion categories. Vocalizations of disgust and fear had the next smallest degree of accuracy. Although angry vocalizations were rated the highest on a scale corresponding to the expressed emotion, they also received high scores on the scales for fear and disgust. Similarly, fearful vocalizations were often confused with anger and disgust expressions. Compared to Belin et al.65, recognition scores of fearful vocalizations observed in our study were high. However, our study did not include surprise vocalizations, often confused with fearful sounds in the MAV database validation. Happy sounds were best identified (~ 80%). The highest recognition scores for the positive vocalizations in our study are not surprising since happy sounds were the only category characterized by positive valence. In the study by Belin et al.65, happy expressions were recognized accurately in 60% of cases; however, there they were often confused with another positive category: pleased vocalizations. In contrast, in Paquette et al.’s study75, the correct identification of happy vocalizations was nearly 100%. It is worth noting, however, that the evaluation from our study and the validations made by Belin et al.65 and Paquette et al.75 differed in several respects. First, they included a different number of emotion categories: from four in Paquette et al.’s validation, through five in our study, to nine in the MAV validation. Secondly, unlike Belin et al. and us, Paquette et al. used a forced-choice task with four options. Those two factors affected the level of difficulty. Thirdly, in the MAV validation procedure, participants assessed the actor’s emotion intensity, valence, and arousal. We focused on the evaluation of the stimuli. Therefore, observed discrepancies are likely due to methodological differences, and direct comparisons should be made with caution. The same applies to valence and arousal ratings.

Emotion recognition was based on intensity scores, and thus, the intensity ratings follow a similar, yet not identical, pattern. In line with the recognition scores, angry vocalizations were rated the lowest; that is, participants judged them to express their target emotion, anger, to the smallest extent. There were no differences between fear, disgust, and happiness in this regard.

The expressions of happiness were considered the most positive, followed by neutral sounds, and disgusted, angry, and fearful vocalizations, respectively. Although this pattern is consistent with the one found by Belin et al.65, the results of our study are less extreme, e.g., 71 vs. 85 for happiness, 24 vs. 16 for anger. Arousal ratings clearly depended on the portrayed emotion. They were the highest for fearful and angry vocalizations, followed by happy expressions. Disgusted vocalizations were rated as the least arousing among all four emotion categories, while neutral vocalizations were considered the least arousing overall. This pattern of results is consistent with that previously described by Belin et al.65.

Limitations and future directions

Several limitations constrain the conclusions that can be drawn from the results presented here. While we used continuous intensity rating scales for emotion categories to focus participants on dimensional aspects of emotion recognition, it is possible that a forced-choice (and thus categorical) task requiring time-pressured responses would reveal the effect of blocking facial mimicry in terms of accuracy and/or reaction times. Subsequent studies should investigate this possibility. Another limitation is related to the characteristics of the stimuli we used. It is known that simulation is stronger when stimuli are more ambiguous and more perceptually demanding46,68. The MAV vocalizations used in our study are perceptually clear and highly recognizable (especially happy vocalizations). It would be worthwhile to make the task more difficult by, for example, adding auditory noise to stimuli to make them more perceptually challenging or mixing vocalizations of different emotion categories in varying degrees to make them more ambiguous. The predicted effect of blocking facial mimicry could possibly be observed under these manipulations since they would force deeper conceptual processing of emotion categories. Furthermore, two recent studies revealed that emotional vocalizations taken from the MAV database are perceived as posed76 and evoke weaker facial reactions (measured with EMG) in comparison to authentic emotional vocalizations77. Less pronounced facial responses to the chosen set might have obscured the actual effect of facial mimicry blocking. The use of more ecologically valid stimuli might reveal the effect. Finally, our study included only auditory stimuli; thus, direct comparisons with the recognition of facial stimuli are limited. This is important because our facial manipulation could lead to disruption of emotion recognition for faces (as seen in many previous studies), but only introduce a bias in the perception of non-facial stimuli, such as sounds or words.
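The two stimulus manipulations suggested above (adding auditory noise and mixing vocalizations in varying degrees) can be sketched in a few lines. The following is only an illustrative sketch and not a procedure used in this study: the function names, the use of white Gaussian noise, the signal-to-noise parameterization, and the simple sample-wise linear mix are all assumptions, and synthetic tones stand in for actual vocalization recordings. A sample-wise mix is a crude proxy for perceptual morphing between emotion categories.

```python
import math
import random


def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))


def add_noise_at_snr(signal, snr_db, rng):
    """Return the signal plus white Gaussian noise at the requested SNR (in dB).

    Assumption: white noise is used for simplicity; other noise types
    could be substituted.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    # Scale the noise so that 20 * log10(rms(signal) / rms(noise)) == snr_db.
    target_noise_rms = rms(signal) / (10 ** (snr_db / 20.0))
    scale = target_noise_rms / rms(noise)
    return [s + scale * n for s, n in zip(signal, noise)]


def blend(signal_a, signal_b, weight_a):
    """Sample-wise linear mix of two equal-length signals.

    weight_a is the proportion of signal_a in the mix, between 0 and 1.
    """
    if len(signal_a) != len(signal_b):
        raise ValueError("signals must have equal length")
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must lie in [0, 1]")
    return [weight_a * a + (1.0 - weight_a) * b
            for a, b in zip(signal_a, signal_b)]


if __name__ == "__main__":
    # Hypothetical stand-ins for two vocalizations: one-second pure tones.
    rate = 8000
    tone_a = [math.sin(2 * math.pi * 220 * t / rate) for t in range(rate)]
    tone_b = [math.sin(2 * math.pi * 330 * t / rate) for t in range(rate)]

    rng = random.Random(0)  # fixed seed so the noise is reproducible
    noisy = add_noise_at_snr(tone_a, snr_db=6.0, rng=rng)
    ambiguous = blend(tone_a, tone_b, weight_a=0.7)
    print(len(noisy), len(ambiguous))
```

Lowering `snr_db` would make a stimulus more perceptually demanding, while moving `weight_a` toward 0.5 would make a mixed stimulus more ambiguous; suitable values would have to be established empirically.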

Future studies may also investigate the differences between procedures used to “block mimicry”, as they are often treated as interchangeable but should lead to different effects. In some studies, participants were explicitly asked to voluntarily adopt certain facial configurations (e.g., resembling a smile). In other studies, participants were put in a specific configuration by some method (e.g., a pen in their mouth). Yet other studies have attempted to fully disable the activity of the relevant muscles or their motor circuit (e.g., Botox, Transcranial Magnetic Stimulation; TMS). Specifically, our blocking procedure is based on the idea that a minor activation, such as creating a constant noise in the zygomaticus muscle, prevents it from responding selectively and dynamically to a positive stimulus69,78. Simultaneously, however, the constant activation of an emotion-relevant muscle creates a non-specific bias, as we, and others, have observed in valence ratings across all stimulus categories. In contrast, other “blocking” manipulations (like Botox) lead to a complete absence of responding from the relevant muscle68; such manipulations should not produce any bias, that is, any non-specific enhancement or reduction in judgments of valence.

Concluding remarks

The current study contributes to the continuing debate about the mechanisms underlying recognition and evaluation of important emotion stimuli, such as faces and emotional vocalizations. Our results suggest that somatosensory and motor feedback plays a role in the evaluation process, perhaps introducing a directional bias, but its role in the earlier stages of the emotion recognition and categorization process may be minor or limited by important boundary conditions. This mirrors recent debates about the scope of claims in the embodied cognition literature, with many researchers arguing that somatosensory and motor processes play a role, but perhaps not a profound, constitutive one33. This distinction between stimulus recognition, stimulus categorization, and the subsequent stimulus evaluation is important for understanding at what processing stages, if any, somatosensory and motor processes are involved in cognitive and emotional processes.

Data availability

The data collected and analyzed during the current study are available from the corresponding author upon request.

References

Smith, A. The propriety of action. in The theory of moral sentiments, Part I (eds. Raphael, D. D. & Macfie, A. L.) 9–66 (Oxford University Press, 1976).

Chartrand, T. L. & Bargh, J. A. The chameleon effect: The perception-behavior link and social interaction. J. Pers. Soc. Psychol. 76, 893–910 (1999).

Holler, J. & Wilkin, K. Co-speech gesture mimicry in the process of collaborative referring during face-to-face dialogue. J. Nonverbal Behav. 35, 133–153 (2011).

Bailenson, J. N. & Yee, N. Digital chameleons: Automatic assimilation of nonverbal gestures in immersive virtual environments. Psychol. Sci. 16, 814–819 (2005).

Mantell, J. T. & Pfordresher, P. Q. Vocal imitation of song and speech. Cognition 127, 177–202 (2013).

Prochazkova, E. & Kret, M. E. Connecting minds and sharing emotions through mimicry: A neurocognitive model of emotional contagion. Neurosci. Biobehav. Rev. 80, 99–114 (2017).

Dimberg, U. Facial reactions to facial expressions. Psychophysiology 19, 643–647 (1982).

Bush, L. K., Barr, C. L., McHugo, G. J. & Lanzetta, J. T. The effects of facial control and facial mimicry on subjective reactions to comedy routines. Motiv. Emot. 13, 31–52 (1989).

Tucker, M. & Ellis, R. On the relations between seen objects and components of potential actions. J. Exp. Psychol. Hum. Percept. Perform. 24, 830–846 (1998).

Iani, C., Ferraro, L., Maiorana, N. V., Gallese, V. & Rubichi, S. Do already grasped objects activate motor affordances?. Psychol. Res. 83, 1363–1374 (2019).

Scerrati, E. et al. Exploring the role of action consequences in the handle-response compatibility effect. Front. Hum. Neurosci. 14, 1 (2020).

Scerrati, E., Rubichi, S., Nicoletti, R. & Iani, C. Emotions in motion: Affective valence can influence compatibility effects with graspable objects. Psychol. Res. https://doi.org/10.1007/s00426-022-01688-6 (2022).

Vainio, L. & Ellis, R. Action inhibition and affordances associated with a non-target object: An integrative review. Neurosci. Biobehav. Rev. 112, 487–502 (2020).

Lipps, T. Das Wissen von fremden Ichen. in Psychologische untersuchungen. Band 1 694–722 (Engelmann, 1907).

Hess, U. & Fischer, A. H. Emotional mimicry: Why and when we mimic emotions. Soc. Pers. Psychol. Compass 8, 45–57 (2014).

Rymarczyk, K., Zurawski, Ł., Jankowiak-Siuda, K. & Szatkowska, I. Emotional empathy and facial mimicry for static and dynamic facial expressions of fear and disgust. Front. Psychol. 7, 1–11 (2016).

Olszanowski, M., Wróbel, M. & Hess, U. Mimicking and sharing emotions: A re-examination of the link between facial mimicry and emotional contagion. Cogn. Emot. 34, 367–376 (2020).

Cacioppo, J. T., Petty, R. E., Losch, M. E. & Kim, H. S. Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. J. Pers. Soc. Psychol. 50, 260–268 (1986).

Dimberg, U., Thunberg, M. & Elmehed, K. Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89 (2000).

Oberman, L. M., Winkielman, P. & Ramachandran, V. S. Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Soc. Neurosci. 2, 167–178 (2007).

Niedenthal, P. M., Brauer, M., Halberstadt, J. B. & Innes-Ker, Å. H. When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cogn. Emot. 15, 853–864 (2001).

Künecke, J., Hildebrandt, A., Recio, G., Sommer, W. & Wilhelm, O. Facial EMG responses to emotional expressions are related to emotion perception ability. PLoS ONE 9, 1–10 (2014).

Ponari, M., Conson, M., D’Amico, N. P., Grossi, D. & Trojano, L. Mapping correspondence between facial mimicry and emotion recognition in healthy subjects. Emotion 12, 1398–1403 (2012).

Ipser, A. & Cook, R. Inducing a concurrent motor load reduces categorization precision for facial expressions. J. Exp. Psychol. Hum. Percept. Perform. 42, 706–718 (2016).

Borgomaneri, S., Bolloni, C., Sessa, P. & Avenanti, A. Blocking facial mimicry affects recognition of facial and body expressions. PLoS ONE 15, e0229364 (2020).

Barsalou, L. W. Perceptual symbol systems. Behav. Brain Sci. 22, 577–660 (1999).

Barsalou, L. W. Challenges and opportunities for grounding cognition. J. Cogn. 3, 1–24 (2020).

Winkielman, P., Niedenthal, P. M., Wielgosz, J., Eelen, J. & Kavanagh, L. C. Embodiment of cognition and emotion. APA handbook of personality and social psychology, Volume 1: Attitudes and social cognition. 1, 151–175 (2014).

Winkielman, P., Coulson, S. & Niedenthal, P. M. Dynamic grounding of emotion concepts. Philos. Trans. R. Soc. B: Biol. Sci. 373, 20170127–20170129 (2018).

Clark, A. Being there: Putting brain, body, and world together again. (The MIT Press, 1997).

Glenberg, A. M. Embodiment as a unifying perspective for psychology. WIREs Cognit. Sci. 1, 586–596 (2010).

Gallese, V. & Lakoff, G. The brain’s concepts: The role of the sensory-motor system in conceptual knowledge. Cogn. Neuropsychol. 22, 455–479 (2005).

Meteyard, L., Cuadrado, S. R., Bahrami, B. & Vigliocco, G. Coming of age: A review of embodiment and the neuroscience of semantics. Cortex 48, 788–804 (2012).

Borghi, A. M. et al. The challenge of abstract concepts. Psychol. Bull. 143, 263–292 (2017).

Hohol, M. Foundations of geometric cognition. (Routledge, 2020).

Borghi, A. M. A future of words: Language and the challenge of abstract concepts. J. Cogn. 3, 1–18 (2020).

Scerrati, E., Lugli, L., Nicoletti, R. & Borghi, A. M. Is the acoustic modality relevant for abstract concepts? A study with the Extrinsic Simon task. in Perspectives on abstract concepts: Cognition, language and communication (eds. Bolognesi, M. & Steen, G.) (John Benjamins Publishing, 2019).

Borghi, A. M. & Zarcone, E. Grounding abstractness: Abstract concepts and the activation of the mouth. Front. Psychol. 7, 1 (2016).

Ostarek, M. & Bottini, R. Towards strong inference in research on embodiment—possibilities and limitations of causal paradigms. J. Cogn. 4, 1–21 (2021).

Miłkowski, M. & Nowakowski, P. Representational unification in cognitive science: Is embodied cognition a unifying perspective?. Synthese 199, S67–S88 (2019).

Wołoszyn, K. & Hohol, M. Commentary: The poverty of embodied cognition. Front. Psychol. 8, 1 (2017).

Goldinger, S. D., Papesh, M. H., Barnhart, A. S., Hansen, W. A. & Hout, M. C. The poverty of embodied cognition. Psychon. Bull. Rev. 23, 959–978 (2016).

Barsalou, L. W. & Wiemer-Hastings, K. Situating abstract concepts. in Grounding Cognition: The Role of Perception and Action in Memory, Language, and Thinking (eds. Pecher, D. & Zwaan, R. A.) 129–163 (Cambridge University Press, 2005).

Wood, A., Rychlowska, M., Korb, S. & Niedenthal, P. M. Fashioning the face: Sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 20, 227–240 (2016).

Niedenthal, P. M., Mermillod, M., Maringer, M. & Hess, U. The Simulation of Smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behav. Brain Sci. 33, 417–433 (2010).

Neal, D. T. & Chartrand, T. L. Embodied emotion perception: Amplifying and dampening facial feedback modulates emotion perception accuracy. Soc. Psychol. Personal. Sci. 2, 673–678 (2011).

Adolphs, R., Damasio, H., Tranel, D., Cooper, G. & Damasio, A. R. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690 (2000).

Pitcher, D., Garrido, L., Walsh, V. & Duchaine, B. C. Transcranial Magnetic Stimulation disrupts the perception and embodiment of facial expressions. J. Neurosci. 28, 8929–8933 (2008).

Paracampo, R., Tidoni, E., Borgomaneri, S., di Pellegrino, G. & Avenanti, A. Sensorimotor network crucial for inferring amusement from smiles. Cereb. Cortex 27, 5116–5129 (2017).

Connolly, H. L., Lefevre, C. E., Young, A. W. & Lewis, G. J. Emotion recognition ability: Evidence for a supramodal factor and its links to social cognition. Cognition 197, 104166 (2020).

Hawk, S. T., Fischer, A. H. & van Kleef, G. A. Face the noise: Embodied responses to nonverbal vocalizations of discrete emotions. J. Pers. Soc. Psychol. 102, 796–814 (2012).

Russell, J. A. Core affect and the psychological construction of emotion. Psychol. Rev. 110, 145–172 (2003).

Barrett, L. F., Wilson-Mendenhall, C. D. & Barsalou, L. W. The Conceptual Act Theory: A roadmap. in The psychological construction of emotion (eds. Feldman Barrett, L. & Russell, J. A.) (Guilford Press, 2014).

Russell, J. A. & Barrett, L. F. Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. J. Pers. Soc. Psychol. 76, 805–819 (1999).

Bradley, M. M. & Lang, P. J. Affective reactions to acoustic stimuli. Psychophysiology 37, 204–215 (2000).

Bradley, M. M., Miccoli, L., Escrig, M. A. & Lang, P. J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45, 602–607 (2008).

Hyniewska, S. & Sato, W. Facial feedback affects valence judgments of dynamic and static emotional expressions. Front. Psychol. 6, 291 (2015).

Strack, F., Martin, L. L. & Stepper, S. Inhibiting and facilitating conditions of the human smile: A nonobtrusive test of the facial feedback hypothesis. J. Pers. Soc. Psychol. 54, 768–777 (1988).

Wagenmakers, E. J. et al. Registered replication report: Strack, Martin, & Stepper (1988). Perspect. Psychol. Sci. 11, 917–928 (2016).

Coles, N. A., Larsen, J. T. & Lench, H. C. A meta-analysis of the facial feedback literature: Effects of facial feedback on emotional experience are small and variable. Psychol. Bull. 145, 610–651 (2019).

Coles, N. A. et al. A multi-lab test of the facial feedback hypothesis by the many smiles collaboration. Nat. Hum. Behav. https://doi.org/10.31234/osf.io/cvpuw (2022).

Laird, J. D., Wagener, J. J., Halal, M. & Szegda, M. Remembering what you feel: Effects of emotion on memory. J. Pers. Soc. Psychol. 42, 646–657 (1982).

Lobmaier, J. & Fischer, M. H. Facial feedback affects perceived intensity but not quality of emotional expressions. Brain Sci. 5, 357–368 (2015).

Levenson, R. W., Ekman, P. & Friesen, W. V. Voluntary facial action generates emotion-specific autonomic nervous system activity. Psychophysiology 27, 363–384 (1990).

Belin, P., Fillion-Bilodeau, S. & Gosselin, F. The Montreal Affective Voices: A validated set of nonverbal affect bursts for research on auditory affective processing. Behav. Res. Methods 40, 531–539 (2008).

Ekman, P. & Friesen, W. V. The facial action coding system: A technique for the measurement of facial movement. (Consulting Psychologists Press, 1978).

Ekman, P., Friesen, W. V. & Hager, J. C. Facial action coding system: Research nexus. (Network Research Information, 2002).

Bulnes, L. C., Mariën, P., Vandekerckhove, M. & Cleeremans, A. The effects of Botulinum toxin on the detection of gradual changes in facial emotion. Sci. Rep. 9, 1–13 (2019).

Davis, J. D., Winkielman, P. & Coulson, S. Sensorimotor simulation and emotion processing: Impairing facial action increases semantic retrieval demands. Cogn. Affect. Behav. Neurosci. 17, 652–664 (2017).

Peirce, J. et al. PsychoPy2: Experiments in behavior made easy. Behav. Res. Methods 51, 195–203 (2019).

Niedenthal, P. M., Barsalou, L. W., Winkielman, P., Krauth-Gruber, S. & Ric, F. F. Embodiment in attitudes, social perception, and emotion. Pers. Soc. Psychol. Rev. 9, 184–211 (2005).

Davis, J. D., Coulson, S., Arnold, A. J. & Winkielman, P. Dynamic grounding of concepts: Implications for emotion and social cognition. in Handbook of Embodied Psychology (eds. Robinson, M. D. & Thomas, L. E.) 23–42 (Springer, 2021).

Lakin, J. L. & Chartrand, T. L. Using nonconscious behavioral mimicry to create affiliation and rapport. Psychol. Sci. 14, 334–339 (2003).

Foroni, F. & Semin, G. R. When does mimicry affect evaluative judgment?. Emotion 11, 687–690 (2011).

Paquette, S., Peretz, I. & Belin, P. The “Musical Emotional Bursts”: A validated set of musical affect bursts to investigate auditory affective processing. Front. Psychol. 4, 1–7 (2013).

Anikin, A. & Lima, C. F. Perceptual and acoustic differences between authentic and acted nonverbal emotional vocalizations. Q. J. Experim. Psychol. 71, 622–641 (2018).

Lima, C. F. et al. Authentic and posed emotional vocalizations trigger distinct facial responses. Cortex 141, 280–292 (2021).

Davis, J. D., Winkielman, P. & Coulson, S. Facial action and emotional language: ERP evidence that blocking facial feedback selectively impairs sentence comprehension. J. Cogn. Neurosci. 27, 2269–2280 (2015).

Acknowledgements

This study was supported by the grant “Preludium,” 2017/25/N/HS6/01052 (PI: Kinga Wołoszyn, Supervisor: Piotr Winkielman) funded by the National Science Centre, Poland. The open access publication has been supported by a grant from the Copernicus Center for Interdisciplinary Studies under the Strategic Programme Excellence Initiative at Jagiellonian University.

Author information

Contributions

All authors conceptualized and designed the study; K.W. and P.W. developed the methodology; K.W. and M.H. collected the data; K.W. analysed the data and prepared the tables; all authors substantially contributed to the interpretation of data; K.W., M.H., and P.W. wrote the manuscript. All authors reviewed and accepted the manuscript. K.W. acquired the funding for the study.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wołoszyn, K., Hohol, M., Kuniecki, M. et al. Restricting movements of lower face leaves recognition of emotional vocalizations intact but introduces a valence positivity bias. Sci Rep 12, 16101 (2022). https://doi.org/10.1038/s41598-022-18888-0
