Abstract

According to the theory of embodied cognition, semantic processing is closely coupled with body movements. For example, constraining hand movements inhibits memory for objects that can be manipulated with the hands. However, it has not been confirmed whether body constraint reduces brain activity related to semantics. We measured the effect of hand constraint on semantic processing in the parietal lobe using functional near-infrared spectroscopy. A pair of words representing the names of hand-manipulable (e.g., cup or pencil) or nonmanipulable (e.g., windmill or fountain) objects were presented, and participants were asked to identify which object was larger. The reaction time (RT) in the judgment task and the activation of the left intraparietal sulcus (LIPS) and left inferior parietal lobule (LIPL), including the supramarginal gyrus and angular gyrus, were analyzed. We found that constraint of hand movement suppressed brain activity in the LIPS toward hand-manipulable objects and affected RT in the size judgment task. These results indicate that body constraint reduces the activity of brain regions involved in semantics. Hand constraint might inhibit motor simulation, which, in turn, would inhibit body-related semantic processing.

Introduction

Our knowledge of an object, such as a pencil, depends on what we implicitly know about body movements: in this case, how to grip the pencil and how to move our hands and fingers in a coordinated manner to write. At least part of our knowledge is embedded in the interactions between the body and objects and between the body and the environment. Based on this framework of embodied cognition, many studies have explored the relationship between word meanings and body motions1,2,3,4,5,6,7,8. It is becoming clear, through behavioral and neuroscientific studies, that word semantics are closely related to motor simulations. For example, several researchers measured reaction time (RT) after participants learned to map specific gestures to objects or words denoting the objects9,10. Faster responses were found when the mapped gestures were congruent with the gestures typically associated with the objects. This suggests that the actions compatible with the objects are automatically activated not only by the visual information of the objects but also by the semantic information of the words. Moreover, action verbs (e.g., “grasp”) followed by an object name elicit the formation of a “motor prototype” of the object11,12. These findings seem to imply that semantic processing related to object manipulation is embodied. However, others have posited that these results can be explained by the view that conceptual and motor systems do not share processing resources13. Chatterjee14 pointed out that abstract semantic processing requires disembodiment. An alternative perspective is that these results are not due to embodiment but rather to encoding of the object's size15.

To ascertain whether conceptual and motor systems share at least some processing resources, studies have employed physical constraints that prevent body movements. For example, hand immobilization was found to decrease the proactivity of gaze behavior when observing others grasping an object. Specifically, gaze shifts became slower when participants’ hands were tied behind their back16. Dutriaux and Gyselinck17 confirmed that conceptual knowledge of words is closely related to somatosensory and motor systems. The authors asked participants to learn lists of words for manipulable or nonmanipulable objects; then, the authors compared data from a condition in which participants had their hands behind their back while memorizing words with data from a condition in which their hands were unrestrained on the desk. In a later free recall task, performance on words denoting objects that can be manipulated with one’s hands was reduced by restraining the hands. They speculated that information about the impossibility of hand movement might have suppressed activity in brain areas involved in motor simulation, as a possible reason why hand restraint suppressed memory for objects that could be manipulated with one’s hand. However, under their experimental conditions, hand position and hand visibility were not controlled, so Onishi & Makioka18 examined the effect of posture and visibility in more detail. The authors found that only suppression of hand movements degraded the memory of words denoting manipulable objects, not the visibility or position of the hand (whether the hand was placed on a desk or folded behind the back).

It has been shown that the activity of corticospinal tracts while participants were imagining a specific movement with their hand was influenced by their hand posture19. Corticospinal excitability was enhanced when the actual hand posture was congruent with the imagined movement. The relationship between hand immobilization and brain activity has been investigated using a variety of methodologies: motor evoked potential20,21,22, resting motor threshold23, local blood flow and event-related desynchronization24. However, it remains unclear whether constraining the hands during semantic tasks affects the activity of brain regions involved in semantic processing. In addition, although it has been found that hand restraint reduces memory for words denoting hand-manipulable objects17,18, it is unclear at which processing stage this effect occurs. By examining the effects of hand constraints on the speed of semantic processing and brain activity, we expect to obtain a clearer understanding of semantic processing for objects that can be manipulated by hand.

Previous neurological studies have repeatedly indicated that the premotor cortex and parietal lobe are involved in object manipulation and object knowledge25,26,27. Patients with damage to the parietal lobe or premotor cortex show impairment in their knowledge of manipulable objects and the movements needed to manipulate those objects28. It is known that damage to the left parietal lobe can result in ideomotor apraxia, which is the inability to perform an action according to verbal direction, and poor use of certain tools29,30. Chao and Martin27 found that the ventral area of the premotor cortex (BA 6) and left inferior parietal lobule (LIPL) are more strongly activated when individuals are observing or naming manipulable objects, such as pencils, erasers, and umbrellas, than when observing or naming nonmanipulable objects, such as animals, faces, and houses. The authors considered that the left parietal lobe processes information about visual features that characterize objects as tools, consistent with the finding that the anterior part of the intraparietal sulcus (AIP) of a monkey is activated when the monkey merely looks at graspable objects26,31.

It has been shown that the LIPL is related to the meanings of words. The LIPL, including the supramarginal gyrus (SMG, BA 40), is more strongly activated when word pairs presented in consecutive order are closely associated than when they are weakly associated32,33. Furthermore, it is known that patients with Alzheimer’s disease show reduced activation in the LIPL, including the angular gyrus (AG, BA 39), when judging categories of images and words compared with healthy seniors. The AG contributes to semantic processing, such as word reading, comprehension, and retrieval memory34. These findings support the view that the LIPL contributes to semantic processing35.

On the other hand, it has been revealed that the left intraparietal sulcus (LIPS), which is adjacent to the LIPL, is activated while observing an object-related action or while grasping or manipulating an object36; this activation is found to be related to motor intentions before executing a movement37. The LIPS is also known to be involved in spatial attention38 and spatial working memory39, suggesting that the area contributes to visual information processing required for object manipulation and spatial processing. At the same time, there is some evidence that the LIPS is activated even without visual information40: the area was activated while subjects classified words into categories. The findings from these studies suggest that the LIPS may also contribute to the comprehension of meaning.

Regarding the functional role of the LIPL on tool knowledge, researchers have proposed a framework where the SMG is involved in "action tool knowledge" and the AG is involved in "semantic tool knowledge"41. Action tool knowledge represents information about how to manipulate tools, and the SMG is involved in motor imagery42 through processing hand shapes and movements43,44,45. Semantic tool knowledge is information about the associative relationship of tools (what they are used with) and the functional relationship of tools (which category they are classified into functionally). It has been found that the AG, which contributes to semantic knowledge35, is also involved in semantic tool knowledge46. On the other hand, it has been known that there exists a multimodal object-related network in the occipitotemporal cortex that responds to both visual and haptic stimuli47.

Taken together, data from these studies suggest that the LIPS and LIPL are candidates for the areas responsible for semantic processing of hand-manipulable objects. The meaning of hand-manipulable objects might be represented in the LIPS and LIPL in association with the shape and movement of hands during manipulation. As noted above, hand constraint decreases the memory performance for hand-manipulable objects17,18. However, the inhibition of semantic processing itself and the change in brain activity caused by hand constraints have not yet been verified.

The aim of this study is to reveal whether hand constraint affects the activity of brain regions involved in semantic processing and whether it affects the speed of semantic processing. Specifically, we tested two hypotheses: (1) the activity of the LIPS and LIPL is reduced upon hand constraint while subjects execute semantic processing of manipulable objects, and (2) hand constraint delays semantic processing of manipulable objects. If these hypotheses are correct, they would suggest that the LIPS and LIPL conduct semantic processing on hand-manipulable objects and that such processing is inhibited by hand constraints; furthermore, they would suggest that the semantic processing in the LIPS and LIPL is related to the motor simulation of hand movements. We therefore defined the regions of interest (ROIs) for this study as the anterior (AIP) and posterior (CIP) areas of the LIPS and the anterior (SMG) and posterior (AG) areas of the LIPL. Viewing hand-manipulable objects activates the precentral cortex27,48,49; such activity might be altered by hand constraint. Therefore, it would be beneficial to simultaneously record the LIPL and premotor cortex. However, in this study, the number of available channels was limited; thus, the measurement was limited to the LIPL.

Because this research aimed to investigate the effect of hand restrictions, it is essential to allow the participants to engage in the task under very few physical constraints. Therefore, we measured brain activity by employing functional near-infrared spectroscopy (fNIRS) to ensure that participants could sit down and undergo the experiment with unconstrained physical movement. Participants were asked to judge the size of the object represented by the visually presented word. The stimulus words represented objects that could be manipulated by hand or objects that could not be manipulated by hand. We used a transparent acrylic board to restrain the hand movements of the participants so that they could place their hands on the desk and still see them, as was done in Onishi & Makioka18. This allows us to manipulate the constraint of hand movement independently of the position and visibility of the hand. As a behavioral measure, we also examined the effect of hand constraint on RT. The experimental procedure is shown in Fig. 1.

Experimental procedure. (A) Procedure of the experiment. Two words representing objects were presented on the screen, and the participants verbally indicated the larger of the two objects. In this example, the two words are cup and broom. A red frame appeared around the words after the voice response. After 3,000 ms elapsed from initial presentation of the words, a blank screen was presented, followed 500 ms later by the next pair of words. (B) Experimental conditions. Left: no constraint condition, right: hand-constraint condition. In the hand constraint condition, hand movements were restrained by a transparent acrylic board. To reduce participant fatigue, separate sessions for each condition were conducted on different days.

Statistical analysis

Naming latency

We conducted a repeated-measures analysis of variance (rmANOVA) with a 2 × 2 within-subjects factorial design; the mean RT on the size judgment task was the dependent variable and hand constraint (hand constraint or no constraint) and stimuli manipulability (manipulable or nonmanipulable) were independent variables. All independent variables were manipulated within participants. The significance level was set at 5% for all analyses. In the post hoc analysis, the false discovery rate (FDR)50 was calculated to control for the probability of type I errors given multiple comparisons; the FDR-corrected significance level was set at 5%.
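The two statistical ideas in this paragraph can be illustrated with a minimal sketch. In a 2 × 2 within-subjects design, the interaction F(1, n − 1) equals the square of a one-sample t statistic computed on each participant's difference of differences. The FDR control is assumed here to be the Benjamini–Hochberg step-up procedure. The function names and the RT values are hypothetical and are not taken from the study.

```python
import math
from statistics import mean, stdev


def interaction_t(cells):
    """One-sample t on the per-participant difference of differences.

    Each row is (constrained_manip, constrained_nonmanip,
    free_manip, free_nonmanip). In a 2 x 2 within-subjects design,
    t ** 2 equals the interaction F with (1, n - 1) degrees of freedom.
    """
    diffs = [(cm - fm) - (cn - fn) for cm, cn, fm, fn in cells]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def benjamini_hochberg(p_values, q=0.05):
    """Return a reject/retain flag per p value (step-up FDR control)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose p value satisfies p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        rejected[idx] = rank <= k_max
    return rejected


# Hypothetical mean RTs (ms) for four participants, illustration only.
rts = [(520, 500, 500, 505), (610, 600, 590, 598),
       (480, 470, 470, 468), (555, 540, 530, 541)]
t_value, df = interaction_t(rts)
print(f"interaction: t({df}) = {t_value:.2f}, F(1, {df}) = {t_value ** 2:.2f}")
print(benjamini_hochberg([0.03, 0.03, 0.03, 0.04]))
```

Because the procedure is step-up, a p value that misses its own rank threshold can still be rejected when a larger p value meets a later threshold, which is what the last line demonstrates.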

Hemodynamic activity of the ROIs

(a) The channel closest to the AIP (x = − 40, y = − 40, z = 40)51 was selected among the designed probes (see supplementary information), and the z scores of the hemodynamic response of that channel were considered the dependent variable. (b) The channel closest to the CIP (x = − 15.7, y = − 67.8, z = 56.8)51 was selected among the designed probes, and the z scores of the hemodynamic response of that channel were considered the dependent variable. We conducted an rmANOVA with hand constraint and the manipulability of the stimuli as independent variables. (c) Two channels in the SMG (BA 40) and (d) two channels in the AG (BA 39) were selected among the designed probes, and the z scores of the hemodynamic responses of those channels were considered the dependent variables. We conducted an rmANOVA with channels, hand constraint, and manipulability as independent variables. The details of the fNIRS analysis are provided in the Supplementary Information.
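Selecting the channel closest to a target coordinate can be sketched as a nearest-neighbor search. The sketch below assumes that "closest" means the smallest Euclidean distance in MNI space, which the text does not state explicitly. The two target coordinates are those given above; the channel names and positions are hypothetical.

```python
import math

# Target MNI coordinates taken from the text.
AIP_TARGET = (-40.0, -40.0, 40.0)
CIP_TARGET = (-15.7, -67.8, 56.8)


def closest_channel(channels, target):
    """Return the name of the channel with the smallest Euclidean
    distance to the target coordinate (assumed distance metric)."""
    return min(channels, key=lambda name: math.dist(channels[name], target))


# Hypothetical channel positions for one participant (MNI, mm).
channels = {
    "ch01": (-42.0, -38.0, 44.0),
    "ch02": (-18.0, -66.0, 58.0),
    "ch03": (-55.0, -45.0, 35.0),
}
print(closest_channel(channels, AIP_TARGET))
print(closest_channel(channels, CIP_TARGET))
```

Because channel positions differ between participants, such a search would be run once per participant and per ROI.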

Results

Naming latency

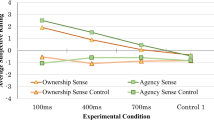

The mean RT for each oral response in the size judgment task is shown in Fig. 2. Participants failed to respond within the presentation time of the stimulus in 0.18% of all trials.

Mean RT (reaction time) in the size judgment task. The orange (hand constraint) and blue (no constraint) dots indicate the mean RT of each participant. Means are presented by horizontal lines. Vertical lines indicate the 95% confidence intervals calculated after subtracting the random effects of participants according to the Cousineau–Morey method52,53.
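The Cousineau–Morey intervals named in this caption can be sketched as follows. Each participant's scores are first centered on the grand mean, which removes between-participant offsets, and the resulting variance is then multiplied by C / (C − 1), where C is the number of within-subject conditions (four in a 2 × 2 design). The function name and the data are hypothetical, and the critical t value is passed in by the caller rather than computed.

```python
import math
from statistics import mean, variance


def cousineau_morey_halfwidths(data, t_crit):
    """Half-widths of within-subject confidence intervals.

    data: one row per participant, one value per within-subject condition.
    t_crit: critical t value for n - 1 degrees of freedom
            (roughly 2.05 for a 95% interval with n = 28).
    """
    n = len(data)
    c = len(data[0])
    grand = mean([value for row in data for value in row])
    # Cousineau normalization: remove each participant's own mean.
    normed = [[value - mean(row) + grand for value in row] for row in data]
    morey = c / (c - 1)  # Morey's correction for the induced bias
    halfwidths = []
    for j in range(c):
        column = [row[j] for row in normed]
        halfwidths.append(t_crit * math.sqrt(morey * variance(column) / n))
    return halfwidths


# Hypothetical mean RTs (ms): three participants, four conditions.
rts = [[500.0, 520.0, 505.0, 510.0],
       [600.0, 615.0, 609.0, 604.0],
       [450.0, 474.0, 455.0, 452.0]]
print(cousineau_morey_halfwidths(rts, t_crit=4.303))  # t_crit for df = 2
```

If every participant shows exactly the same pattern across conditions, the normalized scores are identical and the intervals collapse to zero width, regardless of how much the participants differ in overall speed.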

The results of rmANOVA and post hoc t test are shown in Table 1. The main effects of hand constraint and manipulability were not significant. The interaction between hand constraint and manipulability was significant. The post hoc analyses did not detect significant differences between conditions.

The significant interaction suggests that the interference effect of the hand constraint was stronger toward manipulable objects. Although the stimulus set used in the experiment was controlled for frequency and imaginability across conditions, the difficulty of the size judgments was not controlled. The mean RT was shorter in the manipulable condition without hand constraint, but this difference was not significant. However, significance of the interaction indicates that hand constraint had different effects on processing of manipulable and nonmanipulable objects, independent of the difficulty of judgments in each condition. These results are consistent with previous studies17,18, suggesting that hand constraint suppresses semantic processing of hand-manipulable objects.

fNIRS results

We used oxygenated hemoglobin as the dependent variable because it is the most sensitive parameter of the hemodynamic response54,55. We calculated the z scores of the smoothed hemodynamic response in the target task by using the mean value and standard deviation during the last 5 s of baseline activity.
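The baseline normalization described here can be sketched in a few lines. The task signal is standardized with the mean and standard deviation of the final 5 s of the baseline (control) phase. The function name, the sampling rate, and the sample values are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not specify it.

```python
from statistics import mean, stdev


def baseline_z_scores(baseline, task, sampling_hz, window_s=5.0):
    """Standardize task samples against the last `window_s` seconds
    of the baseline signal."""
    n_samples = int(round(window_s * sampling_hz))
    if n_samples < 2 or n_samples > len(baseline):
        raise ValueError("baseline window must hold 2..len(baseline) samples")
    reference = baseline[-n_samples:]
    mu = mean(reference)
    sd = stdev(reference)  # assumption: sample SD
    return [(value - mu) / sd for value in task]


# Hypothetical smoothed HbO samples at 1 Hz, illustration only.
baseline = [0.0] * 10 + [1.0, 2.0, 3.0, 4.0, 5.0]
task = [3.0, 5.5, 6.0]
z = baseline_z_scores(baseline, task, sampling_hz=1.0)
print(mean(z))  # one mean z score per channel and condition
```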

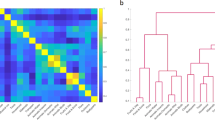

Figure 3 shows the mean z scores of hemodynamic responses, and Fig. 4 indicates the grand averaged waveforms of hemodynamic responses in the LIPS and LIPL. The dots on the brain displayed in Fig. 3 show the positions of channels that measure the activity of the AIP, CIP, SMG, and AG for each participant. The rmANOVA results showed a similar tendency for all regions (Table 1). The interaction between hand constraint and manipulability was significant in the CIP, SMG, and AG. In the post hoc analysis, the effect of hand constraint on manipulable objects was significant in AIP and CIP, while the effect of hand constraint on nonmanipulable objects was not significant. The significant interaction in the CIP, SMG, and AG, together with the effect of hand constraint on manipulable objects in AIP and CIP, suggested that hand constraint suppressed the hemodynamic response to the manipulable objects in the LIPS and LIPL, which is consistent with the mean RT trend. This result is congruent with Chao & Martin's27 findings, showing that the AIP was activated by viewing and naming pictures of tools. It is also compatible with performance in memory tasks17,18.

Hemodynamic activity while the participants were performing the size judgment task. Hemodynamic activity of the channels located in the (a) AIP (anterior part of IPS), (b) CIP (caudal part of the IPS), (c) SMG (supramarginal gyrus, BA 40) and (d) AG (angular gyrus, BA 39). Each participant’s mean z score of the hemodynamic responses in the size judgment task is shown. The orange (hand constraint) and blue (no constraint) dots indicate the mean z score of each participant's hemodynamic response during the task (n = 28). Means are presented by horizontal lines. Vertical lines indicate the 95% confidence intervals calculated after subtracting the random effects of participants according to the Cousineau–Morey method52,53. The color of each group of dots on the brain displays the positions of the channels of each participant in the Montreal Neurological Institute (MNI) coordinates. In SMG and AG, the data from two channels are displayed. FDR corrections were applied to the post hoc t tests to control for multiple comparisons. *p < 0.05.

The averaged waveforms of hemodynamic responses in the LIPS and LIPL. The waveforms represent the mean z scores of the target task in each second. The standard error of the mean is illustrated as a shaded area around the waveform in corresponding colors. The task started at 0 s (vertical break line).

Discussion

The present study investigated how LIPS and LIPL activity during semantic processing of manipulable objects is affected by motor restrictions. The interactions between hand constraint and stimulus manipulability were significant in the hemodynamic responses of the CIP, SMG, and AG. In addition, the activity in response to manipulable objects was significantly decreased with hand constraint in AIP and CIP. Our first hypothesis that hand constraint reduces the activity of the LIPS and LIPL while executing semantic processing of manipulable objects was supported, at least for LIPS. As shown in Figs. 3 and 4, similar tendencies were observed between the AIP, CIP, SMG, and AG regions. The significant interaction in the analyses of the RT indicates that hand constraint had a more inhibitory effect on the manipulable object than on the nonmanipulable object. This result supported the second hypothesis that hand constraint delays semantic processing related to manipulable objects, and is congruent with the memory performance findings observed for manipulable objects when hand constraints were used17,18.

The current evidence that hand constraints suppressed semantic processing of manipulable objects in the LIPS and LIPL is consistent with previous studies suggesting that the LIPL provides essential motor information for recognizing manipulable objects27 and that the left parietal lobe has a key role in the use of tools and instruments28,56,57. This is also in line with several studies demonstrating that the LIPL is involved in word semantic processing32,33,58 and that the LIPS is activated in tasks that classify words into categories40. As mentioned in the introduction, the SMG is involved in processing motor imagery, such as hand shape and movement41.

Why did the hand restraints inhibit the activity of the LIPS and LIPL? As already mentioned, Dutriaux & Gyselinck17 speculated that information about the impossibility of hand movement might have suppressed activity in brain areas involved in motor simulation, resulting in decreased memory for manipulable objects. The authors assumed that the motor cortex is involved in motor simulation. On the other hand, we measured brain activity in the LIPS and LIPL and observed reduced activity due to hand constraints, suggesting that the LIPS and LIPL are engaged in motor simulation. It is known that sensory feedback that would occur during spontaneous action movement is predicted as the efference copy, and the predicted sensory consequences of the movement influence processing across the somatosensory cortex59. Several reports have suggested that the AIP compares the efference copy of a motor command with input sensory information about the action being performed60,61. Damage to the AG causes an inability to imitate gestures, which highlights the crucial role of the AG in encoding body parts62. The LIPL is further associated with the processing of somatosensory information. Specifically, the LIPL receives somatosensory information and processes spatial information, such as locations and distances obtained from tactile sensations, as well as structural information, such as body part location perceptions63. Since the somatosensory information that the hand is restrained is inconsistent with the results of motor simulations evoked by words denoting objects that can be manipulated with the hand, the activity of the LIPS and LIPL may be suppressed, and semantic processing based on the motor simulations may also be delayed.

The relationship between motor imagery and motor simulation has not been fully elucidated64. Understanding the meaning of words that denote hand-manipulable objects does not necessarily require the generation of motor imagery. However, in our experiment, participants were instructed to compare the sizes of two objects. This task may have evoked motor imagery of manipulating these objects by hand, possibly resulting in SMG activation.

In this study, the LIPS and LIPL, which are related to semantic processing, were defined as ROIs. Chao & Martin27 showed that the ventral premotor area (BA 6) is activated when a person is looking at manipulable objects, and it is quite possible that motor cortex activity is also decreased by hand constraint, as anticipated by Dutriaux and Gyselinck17. Previous studies have demonstrated that the premotor cortex is activated not only during actual movement but also when reading sentences containing motor actions65,66,67,68,69. Furthermore, some studies have suggested that the comparator system, which compares the efference copy with sensory feedback, is located in the premotor area rather than in the parietal lobe70,71,72,73. In future studies, the effect of hand restraint on motor cortex activity should also be examined.

We observed the effects of hand constraint on the speed of semantic processing and brain activity. Conversely, the influence of the stimuli’s semantic properties on the speed of hand movement responses has been investigated in previous studies. The motor-evoked potential in the hand observed when a transcranial magnetic stimulation (TMS) pulse was administered to the primary motor cortex was affected by the visual presentation of an adjective indicating that hand grasping is dangerous74, such as "sharp." When participants judged whether a visually presented noun referred to a natural or artificial object by making either a precision or a power reach-to-grasp movement, adjectives attached to the nouns that denoted decreased object graspability, such as "sharp," slowed RT75. In addition, RTs to pictures of plants, animals, and artifacts, or words that represented them, were slower when the pictures or words implied that grasping them was dangerous76,77,78. These studies imply that hand movements are inhibited when a stimulus denotes something that is dangerous to grasp with the hand. The effects of hand restraints on the speed of semantic processing and brain activity identified in this study are similar to these findings. Further insight into the relationship between semantic processing and motor simulation should be obtained by comparing the effects of hand constraint and stimulus danger on brain activity.

The present study shows, for the first time, that hand movement constraints suppress brain activity toward hand-manipulable objects and affect the RT of semantic processing. Because we adopted a design to analyze the activation of the whole block during the task, we could not analyze the time-related changes in the hemodynamic response in this study. Analysis of the temporal and causal relationships between the LIPS/LIPL and the ventral premotor cortex would reveal network structures of those areas. Future studies should examine the effects of body constraint on the flow of information across the semantic processing network in the brain.

Method

Participants

We determined the sample size via G*Power79. Assuming a moderate effect size (f = 0.25), with α = 0.05 and 1 − β = 0.8, the sample size required for a 2 × 2 within-subjects factorial design was 24, so we recruited approximately 30 participants. Thirty-six undergraduate and graduate students (24 females and 12 males, average age 19.94 years) at Osaka Prefecture University participated in the experiment. All participants agreed to wear the NIRS equipment, to maintain the designated posture during the test, and to have their oral responses recorded; they also provided written informed consent before the experiment. The experiment was approved by the Ethics Committee of the Graduate School of Humanities and Sustainable System Science, Osaka Prefecture University, and was performed in accordance with the latest version of the Declaration of Helsinki.

All participants were native Japanese speakers. With the exception of one non-respondent and two left-handed participants, all other participants were identified as right-handed. The data from the two participants who did not attend the second session and those from the six participants who displayed a hemodynamic response with excessive noise (see Supplementary Information) were excluded from the analysis, resulting in a total of 28 participants (mean age = 19.68 years, 18 females, 10 males, 25 right-handed).

Materials

We used 64 names of hand-manipulable objects (e.g., cups, pencils) and 64 names of nonmanipulable objects (e.g., tires, stairs; see Supplementary Information Tables S1, S2). We defined manipulable objects as those that can be grasped and operated by hand. We selected the stimuli based on Amano and Kondo80 and Sakuma et al.81 to equalize the usage frequency, imaginability, and number of letters of each word between conditions. The t tests revealed no significant differences between the conditions (frequency of use: t(126) = − 1.35, p = 0.18; number of letters: t(126) = − 0.38, p = 0.70; imaginability: t(126) = 1.31, p = 0.19). Imaginability was scored as the difficulty of recalling the words as mental images82.
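The reported 126 degrees of freedom are what an independent two-sample Student's t test with pooled variance gives for two sets of 64 words (64 + 64 − 2 = 126). The sketch below assumes that this is the test that was used, which the text does not state. The function name and the word ratings are hypothetical.

```python
import math
from statistics import mean, variance


def pooled_t(a, b):
    """Student's two-sample t statistic with pooled variance.

    Returns (t, df), where df = len(a) + len(b) - 2.
    """
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df


# Hypothetical per-word ratings for two sets of 64 words, illustration only.
manipulable = [float(i % 7) for i in range(64)]
nonmanipulable = [float(i % 7) + 0.5 for i in range(64)]
t_value, df = pooled_t(manipulable, nonmanipulable)
print(f"t({df}) = {t_value:.2f}")
```

The p value would then be read from a t distribution with the returned degrees of freedom, which is left out here to keep the sketch within the standard library.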

Apparatus

All experiments were performed using MATLAB (version 2018a, The Mathworks Inc., Natick, MA, USA) and Psychtoolbox83,84,85 software version 3.0.17 running on an HP Pavilion Wave 600 with an Ubuntu 18.04.3 LTS operating system. Participants were presented with stimuli at a viewing distance of approximately 60 cm from the 24-in. monitor (ASUS VG248QE; resolution: 1920 × 1080). The stimulus words were an average of three characters in length, with each character approximately 1 cm × 1 cm in size.

fNIRS data acquisition

We employed a 20-channel fNIRS system (LABNIRS, Shimadzu Corp., Kyoto, Japan), which is able to detect concentration changes in oxygenated hemoglobin (HbO), deoxygenated hemoglobin, and their sum by using three types of near-infrared light (wavelengths: 780, 805, and 830 nm). See Supplementary Information for details.

Procedures

All participants wore the NIRS measurement holder on their heads. The holder was attached by the experimenter, who was careful not to make the participant feel any sense of compression. The participants were asked to keep their hands on the desk in the no-constraint condition and to hold their hands under a transparent board in the hand-constraint condition (Fig. 1). To reduce participant fatigue, separate sessions for each condition were conducted on different days. Each session took approximately one hour, including fitting of the holder and measurement of the channel position. In the hand-constrained condition, the height of the board was adjusted by the experimenter to the extent that the participants could pull their hands out if they pulled hard enough. The average interval between the 1st and 2nd sessions was 3.4 days. The order of the hand-constraint and no-constraint conditions was counterbalanced between participants.

After participants agreed to take part in the experiment, they engaged in eight practice trials. The experimental trials consisted of eight blocks. Each block consisted of a control phase in which participants performed morphological processing and a target phase in which participants performed semantic processing.

In the control phase, participants answered orally whether the nonword stimuli presented on a display were written in "hiragana" or "katakana". Japanese has two phonetic writing styles, "hiragana" and "katakana." All words in Japanese can be written in both hiragana and katakana; however, katakana is mainly used for foreign words. The task in the control phase can be regarded as comparable to the discrimination between uppercase and lowercase letters in English. Each stimulus was presented for 3000 ms at 500 ms intervals, regardless of whether the participant responded. A red rectangular frame was presented around the word only when the microphone obtained a verbal response. The control phase was conducted to obtain a baseline for the fNIRS analysis.

In the target phase, two words were shown on a display, and participants judged verbally which of the objects denoted by the words was physically bigger (Fig. 1). We instructed the participants that this task had no correct answer because object sizes are variable; then, we asked participants to judge the size based on their own images.

Data availability

The datasets collected in this study are available from the corresponding author on reasonable request.

References

Lakoff, G. & Johnson, M. Philosophy in the Flesh: The Embodied Mind and Its Challenge to Western Thought (Basic Books, 1999).

Gallese, V. & Lakoff, G. The brain’s concepts: The role of the sensory-motor system in conceptual knowledge. Cogn. Neuropsychol. 22, 455–479 (2005).

Barsalou, L. W. Grounded cognition. Annu. Rev. Psychol. 59, 617–645 (2008).

Gallese, V. Mirror neurons and the social nature of language: The neural exploitation hypothesis. Soc. Neurosci. 3, 317–333 (2008).

Binder, J. R. & Desai, R. H. The neurobiology of semantic memory. Trends Cogn. Sci. 15, 527–536 (2011).

Anderson, M. L. & Penner-Wilger, M. Neural reuse in the evolution and development of the brain: Evidence for developmental homology?. Dev. Psychobiol. 55, 42–51 (2013).

Zwaan, R. A. Embodiment and language comprehension: Reframing the discussion. Trends Cogn. Sci. 18, 229–234 (2014).

Buccino, G., Colagè, I., Gobbi, N. & Bonaccorso, G. Grounding meaning in experience: A broad perspective on embodied language. Neurosci. Biobehav. Rev. 69, 69–78 (2016).

Tucker, M. & Ellis, R. Action priming by briefly presented objects. Acta Physiol. (Oxf) 116, 185–203 (2004).

Bub, D. N., Masson, M. E. J. & Cree, G. S. Evocation of functional and volumetric gestural knowledge by objects and words. Cognition 106, 27–58 (2008).

Borghi, A. M. & Riggio, L. Sentence comprehension and simulation of object temporary, canonical and stable affordances. Brain Res. 1253, 117–128 (2009).

Borghi, A. M. & Riggio, L. Stable and variable affordances are both automatic and flexible. Front. Hum. Neurosci. 9, 351 (2015).

Mahon, B. Z. & Caramazza, A. A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. J. Physiol. Paris 102, 59–70 (2008).

Chatterjee, A. Disembodying cognition. Langu. Cognit. 2, 79–116 (2010).

Halim Harrak, M., Heurley, L. P., Morgado, N., Mennella, R. & Dru, V. The visual size of graspable objects is needed to induce the potentiation of grasping behaviors even with verbal stimuli. Psychol. Res. https://doi.org/10.1007/s00426-021-01635-x (2022).

Ambrosini, E., Sinigaglia, C. & Costantini, M. Tie my hands, Tie my eyes. J. Exp. Psychol. Hum. Percept. Perform. 38, 263–266 (2012).

Dutriaux, L. & Gyselinck, V. Learning is better with the hands free: The role of posture in the memory of manipulable objects. PLoS ONE 11, e0159108 (2016).

Onishi, S. & Makioka, S. How a restraint of hands affects memory of hand manipulable objects: An investigation of hand position and its visibility. Cognit. Stud. 27, 250–261 (2020).

Vargas, C. D. et al. The influence of hand posture on corticospinal excitability during motor imagery: A transcranial magnetic stimulation study. Cereb. Cortex 14, 1200–1206 (2004).

Avanzino, L., Bassolino, M., Pozzo, T. & Bove, M. Use-dependent hemispheric balance. J. Neurosci. 31, 3423–3428 (2011).

Facchini, S., Muellbacher, W., Battaglia, F., Boroojerdi, B. & Hallett, M. Focal enhancement of motor cortex excitability during motor imagery: A transcranial magnetic stimulation study. Acta Neurol. Scand. 105, 146–151 (2002).

Kaneko, F., Hayami, T., Aoyama, T. & Kizuka, T. Motor imagery and electrical stimulation reproduce corticospinal excitability at levels similar to voluntary muscle contraction. J. NeuroEng. Rehabil. 11, 94 (2014).

Burin, D. et al. Movements and body ownership: Evidence from the rubber hand illusion after mechanical limb immobilization. Neuropsychologia 107, 41–47 (2017).

Burianova, H. et al. Adaptive motor imagery: A multimodal study of immobilization-induced brain plasticity. Cereb. Cortex 26, 1072–1080 (2016).

Taira, M., Mine, S., Georgopoulos, A. P., Murata, A. & Sakata, H. Parietal cortex neurons of the monkey related to the visual guidance of hand movement. Exp. Brain Res. 83, 29 (1990).

Sakata, H., Taira, M., Murata, A. & Mine, S. Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cereb. Cortex 5, 429–438 (1995).

Chao, L. L. & Martin, A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage 12, 478–484 (2000).

Gainotti, G., Silveri, M. C., Daniel, A. & Giustolisi, L. Neuroanatomical correlates of category-specific semantic disorders: A critical survey. Memory 3, 247–263 (1995).

de Renzi, E. & Lucchelli, F. Ideational apraxia. Brain 111, 1173–1185 (1988).

Ochipa, C., Rothi, L. J. G. & Heilman, K. M. Ideational apraxia: A deficit in tool selection and use. Ann. Neurol. 25, 190–193 (1989).

Murata, A. et al. Object Representation in the Ventral Premotor Cortex (Area F5) of the Monkey. J. Neurophysiol. 78, 2226–2230 (1997).

Chou, T.-L. et al. Developmental and skill effects on the neural correlates of semantic processing to visually presented words. Hum. Brain Mapp. 27, 915–924 (2006).

Raposo, A., Moss, H. E., Stamatakis, E. A. & Tyler, L. K. Repetition suppression and semantic enhancement: An investigation of the neural correlates of priming. Neuropsychologia 44, 2284–2295 (2006).

Seghier, M. L. The angular gyrus. Neuroscientist 19, 43–61 (2013).

Binder, J. R., Desai, R. H., Graves, W. W. & Conant, L. L. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb. Cortex 19, 2767–2796 (2009).

Grèzes, J., Armony, J. L., Rowe, J. & Passingham, R. E. Activations related to “mirror” and “canonical” neurones in the human brain: An fMRI study. Neuroimage 18, 928–937 (2003).

Toni, I., Thoenissen, D. & Zilles, K. Movement preparation and motor intention. Neuroimage 14, S110–S117 (2001).

Vandenberghe, R. et al. Attentional responses to unattended stimuli in human parietal cortex. Brain 128, 2843–2857 (2005).

Todd, J. J. & Marois, R. Capacity limit of visual short-term memory in human posterior parietal cortex. Nature 428, 751–754 (2004).

Devereux, B. J., Clarke, A., Marouchos, A. & Tyler, L. K. Representational similarity analysis reveals commonalities and differences in the semantic processing of words and objects. J. Neurosci. 33, 18906–18916 (2013).

Lesourd, M. et al. Semantic and action tool knowledge in the brain: Identifying common and distinct networks. Neuropsychologia 159, 107918 (2021).

Pelgrims, B., Andres, M. & Olivier, E. Double dissociation between motor and visual imagery in the posterior parietal cortex. Cereb. Cortex 19, 2298–2307 (2009).

Buxbaum, L. J., Kyle, K., Grossman, M. & Coslett, B. Left inferior parietal representations for skilled hand-object interactions: evidence from stroke and corticobasal degeneration. Cortex 43, 411–423 (2007).

van Elk, M., van Schie, H. & Bekkering, H. Action semantics: A unifying conceptual framework for the selective use of multimodal and modality-specific object knowledge. Phys. Life Rev. 11, 220–250 (2014).

Haaland, K. Y., Harrington, D. L. & Knight, R. T. Neural representations of skilled movement. Brain 123, 2306–2313 (2000).

Kleineberg, N. N. et al. Action and semantic tool knowledge—Effective connectivity in the underlying neural networks. Hum. Brain Mapp. 39, 3473–3486 (2018).

Amedi, A., Malach, R., Hendler, T., Peled, S. & Zohary, E. Visuo-haptic object-related activation in the ventral visual pathway. http://neurosci.nature.com (2001).

Gough, P. M. et al. Nouns referring to tools and natural objects differentially modulate the motor system. Neuropsychologia 50, 19–25 (2012).

Visani, E. et al. The semantics of natural objects and tools in the brain: A combined behavioral and MEG study. Brain Sci. 12, 97 (2022).

Benjamini, Y. & Hochberg, Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B (Methodol.) 57, 289–300 (1995).

Shikata, E. et al. Localization of human intraparietal areas AIP, CIP, and LIP using surface orientation and saccadic eye movement tasks. Hum. Brain Mapp. 29, 411–421 (2008).

Cousineau, D. Confidence intervals in within-subject designs: Asimpler solution to Loftus and Masson’s method. Tutor. Quant. Methods Psychol. 1, 42–45 (2005).

Morey, R. D. Confidence intervals from normalized data: A correction to Cousineau (2005). Tutor. Quant. Methods Psychol. 4, 61 (2008).

Malonek, D. et al. Vascular imprints of neuronal activity: Relationships between the dynamics of cortical blood flow, oxygenation, and volume changes following sensory stimulation. Proc. Natl. Acad. Sci. 94, 14826–14831 (1997).

Strangman, G., Culver, J. P., Thompson, J. H. & Boas, D. A. A quantitative comparison of simultaneous BOLD fMRI and NIRS recordings during functional brain activation. Neuroimage 17, 719–731 (2002).

Binkofski, F. et al. Human anterior intraparietal area subserves prehension: A combined lesion and functional MRI activation study. Neurology 50, 1253–1259 (1998).

Perenin, M. T. & Vighetto, A. Optic ataxia: A specific disruption in visuomotor mechanisms: I. Different aspects of the deficit in reaching for objects. Brain 111, 643–674 (1988).

Barbeau, E. B. et al. The role of the left inferior parietal lobule in second language learning: An intensive language training fMRI study. Neuropsychologia 98, 169–176 (2017).

Christensen, M. S. et al. Premotor cortex modulates somatosensory cortex during voluntary movements without proprioceptive feedback. Nat. Neurosci. 10, 417–419 (2007).

Rice, N. J., Tunik, E. & Grafton, S. T. The anterior intraparietal sulcus mediates grasp execution, independent of requirement to update: new insights from transcranial magnetic stimulation. J. Neurosci. 26, 8176–8182 (2006).

Tunik, E., Rice, N. J., Hamilton, A. & Grafton, S. T. Beyond grasping: Representation of action in human anterior intraparietal sulcus. Neuroimage 36, T77–T86 (2007).

Goldenberg, G. Apraxia and the parietal lobes. Neuropsychologia 47, 1449–1459 (2009).

de Haan, E. H. F. & Dijkerman, H. C. Somatosensation in the brain: A theoretical re-evaluation and a new model. Trends Cogn. Sci. 24, 529–541 (2020).

O’Shea, H. & Moran, A. Does motor simulation theory explain the cognitive mechanisms underlying motor imagery? A critical review. Front. Hum. Neurosci. 11, 72 (2017).

Glenberg, A. M. & Kaschak, M. P. Grounding language in action. Psychon. Bull. Rev. 9, 558–565 (2002).

Hauk, O., Johnsrude, I. & Pulvermüller, F. Somatotopic representation of action words in human motor and premotor cortex. Neuron 41, 301–307 (2004).

Tettamanti, M. et al. Listening to action-related sentences activates fronto-parietal motor circuits. J. Cogn. Neurosci. 17, 273–281 (2005).

Zwaan, R. A. & Taylor, L. J. Seeing, acting, understanding: Motor resonance in language comprehension. J. Exp. Psychol. Gen. 135, 1–11 (2006).

Lee, C., Mirman, D. & Buxbaum, L. J. Abnormal dynamics of activation of object use information in apraxia: Evidence from eyetracking. Neuropsychologia 59, 13–26 (2014).

Garbarini, F. et al. To move or not to move? Functional role of ventral premotor cortex in motor monitoring during limb immobilization. Cereb. Cortex 29, 273–282 (2019).

Berti, A. et al. Shared Cortical Anatomy for Motor Awareness and Motor Control. https://www.science.org.

Fornia, L. et al. Direct electrical stimulation of the premotor cortex shuts down awareness of voluntary actions. Nat. Commun. 11, 1 (2020).

Bolognini, N., Zigiotto, L., Carneiro, M. I. S. & Vallar, G. “How did I make It?”: Uncertainty about own motor performance after inhibition of the premotor cortex. J. Cogn. Neurosci. 28, 1052–1061 (2016).

Gough, P. M., Campione, G. C. & Buccino, G. Fine tuned modulation of the motor system by adjectives expressing positive and negative properties. Brain Lang. 125, 54–59 (2013).

Garofalo, G., Marino, B. F. M., Bellelli, S. & Riggio, L. Adjectives modulate sensorimotor activation driven by nouns. Cognit. Sci. 45, e12953 (2021).

Anelli, F., Borghi, A. M. & Nicoletti, R. Grasping the pain: Motor resonance with dangerous affordances. Conscious. Cogn. 21, 1627–1639 (2012).

Anelli, F., Nicoletti, R., Bolzani, R. & Borghi, A. M. Keep away from danger: Dangerous objects in dynamic and static situations. Front. Hum. Neurosci. https://doi.org/10.3389/fnhum.2013.00344 (2013).

Anelli, F., Ranzini, M., Nicoletti, R. & Borghi, A. M. Perceiving object dangerousness: An escape from pain?. Exp. Brain Res. 228, 457–466 (2013).

Faul, F., Erdfelder, E., Lang, A. G. & Buchner, A. G* Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191 (2007).

Amano, S. & Kondo, K. NTT Psycholinguistic Databases “Lexical Properties of Japanese” (Vol.7, frequency) (San-seido, 2000).

Sakuma, N. et al. NTT Psycholinguistic Databases “Lexical Properties of Japanese” (Vol.8 ,words, imaginability). (San-seido, 2008).

Sakuma, N. et al. Imageability ratings for 50, 000 Japanese words : Two rating experiments of visual and auditory presentation. IEICE Tech. Rep. (Thought Lang.) 100, 9–16 (2000).

Brainard, D. H. The psychophysics toolbox. Spat. Vis. 10, 433–436 (1997).

Pelli, D. G. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis. 10, 437–442 (1997).

Kleiner, M., Brainard, D. & Pelli, D. What’s new in Psychtoolbox-3? Perception 36 ECVP Abstract Supplement (2007).

Funding

Funding was provided by the Japan Society for the Promotion of Science (Grant No. 21K12613).

Author information

Contributions

All authors designed the experiment. S.O. conducted the experiment and analysis. All authors contributed to the discussion of the results. S.O. wrote the main manuscript text and prepared the figures and tables. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Onishi, S., Tobita, K. & Makioka, S. Hand constraint reduces brain activity and affects the speed of verbal responses on semantic tasks. Sci Rep 12, 13545 (2022). https://doi.org/10.1038/s41598-022-17702-1

DOI: https://doi.org/10.1038/s41598-022-17702-1
