Abstract

Handling constraints imposed on the physical dimensions of microwave circuits has become an important design consideration in recent years. It is primarily fostered by the needs of emerging application areas such as 5G mobile communications, the internet of things, or wearable/implantable devices. The size of conventional passive components is determined by the guided wavelength, and its reduction requires topological modifications, e.g., transmission line folding, or utilization of compact cells capitalizing on the slow-wave phenomenon. The resulting miniaturized structures are geometrically complex and typically exhibit strong cross-coupling effects, which cannot be adequately accounted for by analytical or equivalent network models. Consequently, electromagnetic (EM)-driven parameter tuning is necessary, which is computationally expensive. When the primary objective is size reduction, the optimization task becomes far more challenging due to the presence of constraints related to electrical performance figures (bandwidth, power split ratio, etc.), which are all costly to evaluate. A popular solution approach is to utilize penalty functions, in which violations of constraints degrade the primary objective, thereby enforcing their satisfaction. Yet, the appropriate setup of penalty coefficients is a non-trivial problem in itself, and is often associated with extra computational expenses. In this work, we propose an explicit approach to constraint handling, which is combined with the trust-region gradient-search procedure. In our technique, the adjustment of the search radius is decided based on how reliably the linear approximation models of the constraints render the feasible region boundary. Comprehensive numerical experiments conducted using three miniaturized coupler structures demonstrate the superiority of the presented method over the penalty function paradigm. Apart from its efficacy, its appealing features include algorithmic simplicity and no need for tailoring the procedure to a particular circuit to be optimized.

Introduction

One of the important considerations in the design of modern high-frequency circuits and systems is miniaturization. Small size has become a prerequisite for a growing number of application areas that include mobile communications1, wearable2 and implantable devices3, internet of things4, medical imaging5, or energy harvesting6. Physical dimensions of conventional microwave passive components are related to the guided wavelength, which makes them unsuitable for space-limited applications, except for structures implemented on high-permittivity substrates. In the context of circuit architecture, size reduction can be achieved by various means, including transmission line (TL) folding7,8, replacement of conventional TLs by compact microstrip resonant cells (CMRCs)9 capitalizing on the slow-wave phenomenon10, as well as the employment of various geometrical modifications (e.g., defected ground structures11, slots12, stubs13, shorting pins14, substrate integrated waveguides15, etc.). The aforementioned techniques generally lead to complex and often densely arranged layouts. The presence of electromagnetic (EM) cross-coupling effects within these structures makes the traditional characterization methods (analytical or equivalent network models) inadequate. Instead, reliable evaluation of miniaturized circuits has to rely on full-wave EM simulation tools.

Appropriate selection of the circuit architecture is only the first step of rendering a high-performance design. In order to achieve the smallest possible size while satisfying requirements imposed on the electrical parameters (allocation of operating frequencies, bandwidth, power split ratio, phase response), geometry parameters of the circuit have to be carefully tuned. Given multi-dimensional parameter spaces along with the necessity of handling several objectives and constraints, the parameter adjustment process needs to resort to rigorous numerical optimization algorithms16,17. At the same time, EM-driven optimization is computationally expensive: even local (e.g., gradient-based18 or derivative-free19) procedures may require many dozens of EM analyses, whereas tasks such as global search20, multi-objective design21, or uncertainty quantification22 are far costlier. Not surprisingly, the literature is replete with acceleration methods23,24,25,26,27. These include utilization of adjoint sensitivities28 or sparse Jacobian updates29 to expedite gradient-based procedures, the employment of dedicated solvers30, and the increasingly popular surrogate-based procedures31. The latter may employ data-driven (or approximation-based)32,33 and physics-based metamodels34, but also machine learning frameworks35, which are often combined with sequential sampling methodologies36 for iterative construction and refinement of the models. Some of the popular approximation-based modelling methods in the context of EM-driven optimization include kriging37, Gaussian process regression38, neural networks in many variations (e.g.,39,40,41), support vector machines42, and polynomial chaos expansion43. Physics-based metamodels are most often constructed using space mapping44 and response correction methods (e.g., adaptive response scaling45, manifold mapping46, etc.).

When it comes to EM-driven size reduction, the potentially high cost of the process is only one of the challenges. The major issue is to control the constraints. As circuit miniaturization is generally detrimental to electrical performance figures, any practical design has to be a trade-off between achieving a possibly compact size and fulfilment of specifications imposed on the circuit characteristics. The latter are often expressed in terms of acceptance levels for return loss, bandwidth, power split, etc., over the frequency bands of interest. In mathematical terms, these conditions are essentially constraints. Their evaluation is computationally heavy due to the involvement of EM analysis. Consequently, straightforward constraint handling is inconvenient. A widely used alternative is to incorporate penalty functions47, in which the main objective (size reduction) is supplemented with a linear combination of appropriately quantified constraint violations48. The advantage of this approach is that the problem is reformulated into a formally unconstrained one. Yet, the efficacy of the optimization depends on the setup of the proportionality factors of the aforementioned linear combination (referred to as penalty coefficients). Tailoring their values to a specific structure is non-trivial and typically requires execution of test runs, contributing to the overall computational cost of the process.

This paper discusses a novel methodology for simulation-driven miniaturization of microwave passive components. Our approach employs explicit handling of design constraints, which are approximated, in any given iteration of the optimization process, by their linear approximation models. The quality of this approximation, in particular of the predictions concerning solution feasibility, is verified upon generating a new solution, and used to govern the decision-making process that controls the search radius within the trust-region procedure, which is the main optimization algorithm. The decision-making factors include the feasibility status of the current design, as well as the amount of constraint violation improvement (or the lack thereof). Furthermore, the tolerance levels for constraint violations are gradually tightened in the course of the optimization process, governed by its convergence indicators. The proposed constraint handling method is simple to implement and does not require any setup of control parameters (as opposed to penalty coefficients within the penalty function approach). It is validated using three structures of miniaturized rat-race and branch-line couplers with the constraints imposed on the circuit bandwidth and power split ratio. The obtained results are benchmarked against the penalty function techniques. We demonstrate that the presented procedure allows for precise control over constraints, as well as for achieving competitive miniaturization rates. Perhaps its most appealing feature is that it does not have to be tuned to any specific circuit at hand.

Miniaturization of microwave passives with direct constraint control

Here, we introduce the procedure for miniaturization of microwave components proposed in this work. “Simulation-based size reduction: problem formulation” provides the formulation of the miniaturization task. In “Explicit constraint handling: the concept”, we discuss the concept of direct control of CPU-heavy constraints within a trust-region gradient-based algorithm. The technical details of controlling the tolerance levels for constraint violation, as well as the decision-making process that adjusts the search radius, are elaborated on in “Explicit constraint handling: constraint-related gain ratios”. Finally, “Explicit constraint handling: optimization algorithm” summarizes the entire procedure.

Simulation-based size reduction: problem formulation

We denote by x = [x1 ⋯ xn]T a vector of adjustable parameters of the circuit under design. For passive components, these are normally geometry parameters (circuit dimensions). Let A(x) be the circuit size, e.g., its footprint area. The objective is to reduce the size as much as possible, i.e., to solve

$$\boldsymbol{x}^{*} = \arg\min_{\boldsymbol{x}} U(\boldsymbol{x})$$(1)

where x* is the optimum parameter vector to be identified, whereas U(x) is the objective function. In the case of miniaturization, we have U(x) = A(x). The problem (Eq. 1) is subject to constraints, which can be of inequality type

$$\gamma_{k}(\boldsymbol{x}) \le 0,\quad k = 1, \ldots, n_{\gamma}$$(2)

or equality type

$$\eta_{k}(\boldsymbol{x}) = 0,\quad k = 1, \ldots, n_{\eta}$$(3)

In this work, we will only consider inequality constraints, which are the most common. Also, an equality constraint ηk(x) = 0 can be represented in an inequality form as |ηk(x)| ≤ 0.

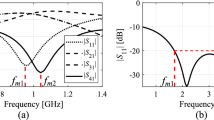

Let us consider an example of a microwave coupler, which is to be miniaturized while satisfying the following conditions.

- The power split ratio |S31(x,f) − S21(x,f)| is zero at f = f0 (the operating frequency);

- The matching and isolation characteristics are supposed to satisfy |S11(x,f)| ≤ − 20 dB, and |S41(x,f)| ≤ − 20 dB for f ∈ F, where F is a frequency range of interest (intended circuit bandwidth).

These conditions can be formulated as constraints γ1(x) ≤ 0 and γ2(x) ≤ 0, with γ1(x) = ||S31(x,f0) − S21(x,f0)||, and γ2(x) = max{f ∈ F: max{|S11(x,f)|, |S41(x,f)|}} + 20.
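The two constraint functions can be sketched in a few lines. The snippet below is only an illustration under stated assumptions: the S-parameter magnitudes are taken to be already available in dB on a frequency grid covering F, and the function names, the optional tolerance argument, and the sample values are hypothetical rather than taken from the paper.

```python
def power_split_constraint(s21_db_f0, s31_db_f0, tol_db=0.0):
    """gamma_1: power split error at f0 (in dB) minus an optional tolerance."""
    return abs(s31_db_f0 - s21_db_f0) - tol_db


def matching_isolation_constraint(s11_db, s41_db, level_db=-20.0):
    """gamma_2: worst in-band |S11| or |S41| (in dB) relative to the acceptance level."""
    worst = max(max(a, b) for a, b in zip(s11_db, s41_db))
    return worst - level_db


# Hypothetical in-band samples (dB); a real run would take them from EM simulation.
s11 = [-24.1, -27.5, -22.3]
s41 = [-23.0, -30.2, -19.4]

gamma1 = power_split_constraint(s21_db_f0=-3.05, s31_db_f0=-3.02)
gamma2 = matching_isolation_constraint(s11, s41)

# A design is feasible when both values are non-positive.
print("gamma1 feasible:", gamma1 <= 0, "| gamma2 feasible:", gamma2 <= 0)
```

Both functions return a signed value, so a positive result directly measures the amount of violation in dB.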

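Thus, for the exemplary coupler, we may formulate the miniaturization task as

$$\boldsymbol{x}^{*} = \arg\min_{\boldsymbol{x}} A(\boldsymbol{x})$$(4)

subject to constraints

$$\gamma_{1}(\boldsymbol{x}) \le 0$$(5)

$$\gamma_{2}(\boldsymbol{x}) \le 0$$(6)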

For the sake of illustration, let us consider another example of an optimization task, which is oriented towards improving selected electrical performance figures rather than size reduction. Assume that the goal is to minimize the maximum reflection within the frequency range of interest of an impedance matching transformer. In this case, the merit function is defined as

$$U(\boldsymbol{x}) = \max\{f \in F: |S_{11}(\boldsymbol{x},f)|\}$$(7)

In Eq. (7), |S11(x,f)| stands for the circuit reflection, whereas F refers to the frequency range of interest.

Explicit constraint handling: the concept

Evaluation of constraints imposed on electrical characteristics of microwave components is computationally expensive: their values are obtained by post-processing EM simulation data. This is troublesome from the point of view of numerical optimization procedures, as local methods typically require constraint gradients. Unless adjoint sensitivities are available49, estimation of these requires finite differentiation, and the constraints may not be differentiable due to their very formulation as minimax functions (cf. “Simulation-based size reduction: problem formulation”). Also, EM simulation results may contain a certain level of numerical noise, being a result of adaptive meshing techniques, or specific termination criteria used by the EM solvers. As mentioned before, a common mitigation method is a penalty function approach47, where the cost function is defined through aggregation of the main objective (size reduction) and contributions from constraint violations, appropriately scaled using weighting factors (penalty coefficients). Although conceptually attractive, optimum setup of the coefficient values is generally an intricate task, often associated with preparatory optimization runs.

This paper offers an alternative, explicit approach to constraint handling. It employs linear approximation models of the system response; therefore, a natural choice for the underlying optimization algorithm is the trust-region (TR) framework50. The standard TR procedure yields a series of approximate solutions x(i), i = 0, 1, …, that converge to x*. The new vector x(i+1) is obtained by solving

$$\boldsymbol{x}^{(i+1)} = \arg\min_{\boldsymbol{x}:\; \|\boldsymbol{x} - \boldsymbol{x}^{(i)}\| \le \delta^{(i)}} U_{L}^{(i)}(\boldsymbol{x})$$(8)

where UL(i) is a first-order Taylor model of the scalar merit function U. The solution to Eq. (8) is restricted to the vicinity of x(i) determined by the size parameter δ(i). Additionally, we have inequality constraints of the form of Eq. (2); equality constraints are not considered for the sake of simplicity, cf. “Simulation-based size reduction: problem formulation”. The TR radius δ(i) is adaptively adjusted using the standard TR rules (e.g.50).

The problem (Eq. 8) is solved using Matlab’s fmincon routine with the Sequential Quadratic Programming (SQP) algorithm, one of the state-of-the-art procedures for constrained continuous optimization. The SQP procedure directly handles geometry constraints (here, the TR condition ||x − x(i)|| ≤ δ(i)), whereas the constraints related to electrical performance figures are controlled using the explicit approach that is the subject of this paper. The details are explained in the remaining part of this section.

If size reduction is of interest, the evaluation of the merit function incurs negligible costs: the structure size can be obtained directly from the system geometry parameters, i.e., the vector x. On the other hand, maintaining solution feasibility becomes problematic due to expensive constraints. In this work, constraint control is achieved by the incorporation of linearized models γL.k of the constraints γk, k = 1, …, nγ, and adaptive adjustment of the trust-region size parameter δ(i). The decision-making process governing the latter involves quantification of the reliability of γL.k in predicting the feasibility status in the course of the optimization process.

In the following, we will denote as r(x) the vector of EM-simulated outputs (e.g., S-parameters) of the circuit of interest. A first-order Taylor model rL(i)(x) of the response r(x), established at the design x(i), is defined as

$$\boldsymbol{r}_{L}^{(i)}(\boldsymbol{x}) = \boldsymbol{r}(\boldsymbol{x}^{(i)}) + \boldsymbol{J}(\boldsymbol{x}^{(i)}) \cdot (\boldsymbol{x} - \boldsymbol{x}^{(i)})$$(9)

where the Jacobian matrix of the component response at the design x(i) is denoted as J(x(i)). In most cases, it is estimated by means of finite differentiation. For the purpose of subsequent considerations, we will explicitly indicate that the constraints are functions of the circuit characteristics, i.e., we have γk = γk(r(x)). Then, the linear model γL.k is defined as

$$\gamma_{L.k}(\boldsymbol{x}) = \gamma_{k}(\boldsymbol{r}_{L}^{(i)}(\boldsymbol{x}))$$(10)
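The construction of the linearized constraint can be illustrated with a short sketch. It assumes forward finite differences (one extra simulation per parameter) and uses a cheap analytic function, `toy_simulate`, as a hypothetical stand-in for the EM solver; all names and the step size are illustrative choices, not the paper's implementation.

```python
def finite_difference_jacobian(simulate, x, step=1e-3):
    """Forward-difference estimate of J(x); costs len(x) extra simulations."""
    r0 = simulate(x)
    columns = []
    for j in range(len(x)):
        xp = list(x)
        xp[j] += step
        rj = simulate(xp)
        columns.append([(a - b) / step for a, b in zip(rj, r0)])
    # columns[j][m] holds d r_m / d x_j; rearrange into rows indexed by output m.
    jac = [[columns[j][m] for j in range(len(x))] for m in range(len(r0))]
    return r0, jac


def linear_model(r0, jac, x0):
    """Return the first-order model r_L(x) = r(x0) + J(x0) (x - x0)."""
    def r_lin(x):
        d = [xi - x0i for xi, x0i in zip(x, x0)]
        return [r + sum(jm * dj for jm, dj in zip(row, d)) for r, row in zip(r0, jac)]
    return r_lin


def linearized_constraint(gamma, r_lin):
    """gamma_L(x) = gamma(r_L(x)): the constraint evaluated on the linear model."""
    return lambda x: gamma(r_lin(x))


def toy_simulate(x):
    # Hypothetical stand-in for an EM simulation returning two outputs.
    return [x[0] ** 2 + x[1], 3.0 * x[0]]


x0 = [1.0, 2.0]
r0, jac = finite_difference_jacobian(toy_simulate, x0)
r_lin = linear_model(r0, jac, x0)
gamma_lin = linearized_constraint(lambda r: max(r) - 3.5, r_lin)
print(gamma_lin(x0), gamma_lin([1.1, 2.0]))
```

Note that the constraint itself is not linearized; only the response is, so a minimax-type constraint keeps its form when evaluated on the linear response model.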

When solving the trust-region sub-problem (Eq. 8), the exact constraints γk(r(x)) will be replaced by their linearized versions (Eq. 10). The accuracy of representing γk by γL.k depends on a particular location in the parameter space and on the trust-region size parameter δ(i), which is because ||γk(r(x)) − γL.k(x)|| is proportional to ||x − x(i)||² (for sufficiently small design relocations). Consequently, a proper updating procedure for δ(i) is essential. In particular, maintaining small values of the TR radius improves the alignment between γL.k(x) and γk(r(x)), whereas increasing it allows for larger steps in the design space while solving (Eq. 8). The adjustment of δ(i) should take into account the solution feasibility predictions according to γL.k(x), but also the actual feasibility status (as verified by EM simulation). At the generic level, the adaptation scheme is arranged the same way as for conventional TR algorithms50, i.e.,

$$\delta^{(i+1)} = \begin{cases} m_{inc}\,\delta^{(i)} & \text{if } \theta > \theta_{inc} \\ \delta^{(i)}/m_{dec} & \text{if } \theta < \theta_{dec} \\ \delta^{(i)} & \text{otherwise} \end{cases}$$(11)

In Eq. (11), the coefficients minc and mdec are used for incrementing and decrementing the TR size, respectively, whereas θinc and θdec are the corresponding thresholds. In our approach, we employ their typical values, i.e., minc = 2 and mdec = 3, as well as θinc = 0.75 and θdec = 0.25 (cf.50). According to classical theory (e.g.50), the specific values of these coefficients are not critical for the algorithm operation.
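The radius adaptation of Eq. (11) amounts to a three-way branch. The sketch below uses the coefficient values quoted above and assumes the conventional form of the rule (multiply by minc on a good prediction, divide by mdec on a poor one, keep the radius otherwise); the function name is hypothetical.

```python
def update_radius(delta, theta, m_inc=2.0, m_dec=3.0, theta_inc=0.75, theta_dec=0.25):
    """Return the next trust-region radius given the gain ratio theta."""
    if theta > theta_inc:
        return delta * m_inc   # reliable prediction: allow larger steps
    if theta < theta_dec:
        return delta / m_dec   # poor prediction: shrink the search region
    return delta               # otherwise keep the current radius


for theta in (0.9, 0.5, 0.1):
    print(theta, update_radius(1.0, theta))
```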

Unlike in the standard TR procedure, however, the decision-making factor θ in Eq. (11) is a gain ratio pertinent to the constraints. It compares the actual change of the constraint violations to that predicted by the linear model between consecutive iterations (specifically, the (i + 1)th versus the ith one). The modification coefficients as well as the thresholds in Eq. (11) mimic the conventional rules of the TR frameworks (cf.51).

In the case of multiple constraints, the coefficient θ is generalized to account for the worst-case situation over the entire set γk, k = 1, …, nγ. We have

$$\theta = \min_{k \in \{1, \ldots, n_{\gamma}\}} \theta_{k}$$(12)

Definition of the factors θk for each γk(x), k = 1, …, nγ, is pivotal to the successful operation of the proposed optimization procedure. It will be discussed at length in the next section.

Explicit constraint handling: constraint-related gain ratios

This section elaborates on the definition and evaluation of the constraint-based gain ratios θk, utilized to control the trust region size as discussed in “Explicit constraint handling: the concept”. In the following, we will denote by Γk(i) the threshold for accepting the violation of γk(x) at the iteration i. The threshold is iteration dependent for the reasons explained at the end of the section. At this point, we will outline the rules for computing the ratios θk utilized in the decision-making process that governs the search radius adjustments:

- Rule 1: If γk(r(x(i))) > Γk(i), i.e., the constraint violation before executing the (i + 1)th iteration exceeds the acceptance threshold, then

$$\theta_{k} = \frac{\gamma_{k}(\boldsymbol{r}(\boldsymbol{x}^{(i+1)})) - \gamma_{k}(\boldsymbol{r}(\boldsymbol{x}^{(i)}))}{\gamma_{L.k}(\boldsymbol{x}^{(i+1)}) - \gamma_{L.k}(\boldsymbol{x}^{(i)})}$$(13)

- Rule 2: If γk(r(x(i))) ≤ Γk(i), i.e., the constraint violation is at the acceptable level, then

$$\theta_{k} = \frac{1}{2}\left[ 1 + \text{sgn}\left( \gamma_{k}(\boldsymbol{r}(\boldsymbol{x}^{(i)})) - \gamma_{k}(\boldsymbol{r}(\boldsymbol{x}^{(i+1)})) \right) \right]$$(14)

- Rule 3: If θk < 0 (as computed using Eqs. (13) or (14)) but γk(r(x(i+1))) ≤ Γk(i), i.e., EM-evaluated constraint violation is acceptable, then the value of θk is overwritten to θk = 0.5.

The above rules serve two purposes. On the one hand, one needs to impose a penalty on insufficient prediction accuracy of γL.k if the constraint violation is large (Rule 1), or if the feasibility condition has not been improved in the case of minor constraint infringement (Rule 2). On the other hand, the conditions (Eqs. 13 and 14) are employed to promote sufficient prediction of γk by γL.k (cf. Eq. (13)), or relocation of the design towards the feasible region (cf. Eq. (14)). The role of Rule 3 is to overwrite the previous ones if the EM-evaluated constraint violation γk(r(x(i+1))) at the candidate design x(i+1) is within the acceptance threshold. Rule 3 has been introduced to prevent erratic operation for the designs residing in the vicinity of the feasible region boundary, in particular, near-zero constraint infringements (either positive or negative) in any given iteration. A graphical illustration of acceptable and insufficient evaluation of the constraint γk by the linearized model γL.k is provided in Fig. 1.

Figure 1. Prediction of design constraints by means of linear approximation model γL.k of (Eq. 10). The top picture illustrates relocation of the design from x(i) to x(i+1) obtained by solving (Eq. 8). In this example, x(i) is assumed feasible, whereas x(i+1) is allocated at the boundary of the feasible region according to the approximation model γL.k. The bottom-left picture illustrates a case of satisfactory constraint prediction by γL.k, i.e., the design x(i+1) is feasible according to the EM simulation data. The bottom-right picture shows a case of poor prediction: the design x(i+1) is infeasible according to the true constraint value evaluated through EM analysis. The latter will result in a reduction of the search region size δ(i) in the next iteration (cf. Eqs. (13, 14)).
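Rules 1 to 3 can be condensed into a single function, sketched below. The argument names are hypothetical, the guard against a zero predicted change in Rule 1 is an added assumption (the rules do not address that case), and taking the minimum over all constraints is one reading of the worst-case aggregation mentioned in the previous section.

```python
def gain_ratio(g_old, g_new, gl_old, gl_new, threshold):
    """Gain ratio theta_k for one constraint.

    g_old, g_new   : EM-evaluated constraint at x(i) and x(i+1)
    gl_old, gl_new : linear-model predictions at x(i) and x(i+1)
    threshold      : acceptance threshold Gamma_k at iteration i
    """
    if g_old > threshold:
        # Rule 1 (Eq. 13): actual versus predicted change of the constraint.
        predicted = gl_new - gl_old
        # Assumption: treat a zero predicted change as an unreliable model.
        theta = (g_new - g_old) / predicted if predicted != 0 else 0.0
    else:
        # Rule 2 (Eq. 14): reward any reduction of the constraint value.
        diff = g_old - g_new
        sign = (diff > 0) - (diff < 0)
        theta = 0.5 * (1 + sign)
    # Rule 3: an acceptable EM-evaluated violation overrides a negative ratio.
    if theta < 0 and g_new <= threshold:
        theta = 0.5
    return theta


def worst_case_gain(ratios):
    """Aggregate over constraints by taking the smallest ratio (assumed worst case)."""
    return min(ratios)


theta_1 = gain_ratio(g_old=2.0, g_new=1.0, gl_old=2.0, gl_new=0.8, threshold=0.5)
theta_2 = gain_ratio(g_old=0.2, g_new=0.1, gl_old=0.2, gl_new=0.0, threshold=0.5)
print(theta_1, theta_2, worst_case_gain([theta_1, theta_2]))
```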

In the remaining part of this section we discuss the acceptance thresholds Γk(i). At the early stages of the optimization process (far from convergence), it is advantageous to relax the acceptance thresholds for constraint violation in order to facilitate identification of small-size designs. However, when close to convergence, the thresholds should be tightened to ensure more precise control over constraints. In practice, this is realized by adjusting the threshold values based on the convergence status of the optimization process. Let Γk.max be a user-defined maximum violation level. Further, let ε be a small positive number determining the algorithm termination. In this work, the termination condition is formulated as ||x(i+1) − x(i)||< ε or δ(i) < ε. For a given iteration i, let us define the convergence indicator

Note that ψ(i) is large when the optimization process is launched, and it is reduced to unity upon convergence. We also have the threshold

As Γk(i+1) is proportional to ψ(i), it is initially equal to Γk.max, and gradually diminished to αΓk.max upon convergence. Here, α assumes small values greater than zero, e.g., 0.1 or 0.01. This value is not of key importance for the operation of the procedure.
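The behaviour described above can be mimicked with a simple schedule. The defining expressions for ψ(i) and Γk(i+1) are not reproduced in this text, so the two formulas below are assumptions chosen only to match the verbal description: ψ is large at launch and equals one at termination, and the threshold is proportional to ψ, capped at Γk.max, and reaches αΓk.max upon convergence. Function and argument names are hypothetical.

```python
def convergence_indicator(step_norm, delta, eps):
    """Assumed form: distance from the termination condition, floored at 1.

    Termination occurs when step_norm < eps or delta < eps, i.e. when
    min(step_norm, delta) drops below eps.
    """
    return max(1.0, min(step_norm, delta) / eps)


def acceptance_threshold(gamma_max, alpha, psi):
    """Assumed form: proportional to psi, capped at the user-defined maximum."""
    return min(gamma_max, alpha * gamma_max * psi)


eps, alpha, gamma_max = 1e-3, 0.1, 1.0
for step_norm, delta in [(0.5, 1.0), (5e-3, 0.02), (5e-4, 0.01)]:
    psi = convergence_indicator(step_norm, delta, eps)
    print(psi, acceptance_threshold(gamma_max, alpha, psi))
```

With these assumed forms the threshold stays at Γk.max while the steps are large and is tightened only once the step size or the radius approaches ε/α.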

Explicit constraint handling: optimization algorithm

Figure 2 shows the operating flowchart of the proposed size reduction procedure with explicit handling of design constraints. Apart from the termination threshold, the algorithm only contains the following control parameters: the threshold Γk.max (maximum tolerance for constraint violation), and the scaling coefficient α. These parameters are not critical for the algorithm performance. As a matter of fact, we will keep these values fixed for all verification case studies considered in “Demonstration examples”. The acceptance of the candidate design x(i+1) produced by solving (Eq. 8) is based on the standard TR rules, i.e., it is accepted if the decision-making factor θ is positive, and rejected otherwise. In the latter case, the iteration is repeated with a reduced search region. Note that θ > 0 if either the violation of the constraint has been reduced to a sufficient extent, or the design was relocated to the feasible region.

Demonstration examples

This section summarizes the results of numerical experiments conducted to validate and benchmark the proposed size reduction approach. Verification is based on three compact microstrip couplers, including a branch-line and two rat-race circuits. Our procedure is compared to optimization involving the penalty function approach in several variations featuring different setups of penalty coefficients. The performance figures of interest include the obtained circuit size, as well as the accuracy of controlling the constraints related to the circuit bandwidth and the power split ratio.

Verification circuits

The considered circuits are shown in Fig. 3. Their computational models are implemented in CST Microwave Studio and evaluated using the time-domain solver. In all cases, the main objective is reduction of the circuit footprint area. There are two constraints imposed on the circuit S-parameters: γ1(x) = ||S31(x,f0) − S21(x,f0)|| − 0.1 dB, and γ2(x) = max{f ∈ F: max{|S11(x,f)|, |S41(x,f)|}} + 20 dB, where f0 is the center frequency, and F is the intended circuit bandwidth. Two scenarios are considered, with a different choice of bandwidth for each circuit.

The first constraint enforces equal power split ratio within 0.1 dB tolerance, whereas the second ensures that the circuit impedance matching and port isolation are at or below − 20 dB within the operating band. Table 1 provides essential data about all three structures.

Experimental setup and results

The proposed optimization procedure has been applied to the circuits of Fig. 3. In each case, the initial design (the last row of Table 1) was obtained by optimizing the respective circuits to improve the matching and isolation within the operating bandwidth F, subject to equal power split constraint. This means, in particular, that the starting points are feasible from the point of view of both constraints γ1 and γ2 (cf. “Verification circuits”). The termination threshold is set to ε = 10⁻³, the acceptance thresholds are chosen to be Γ1.max = 1 dB and Γ2.max = 0.3 dB, and α = 0.1.

The results are compared to the algorithm employing the penalty function approach. Therein, the objective function is defined as

where the penalty functions c1 and c2 measure relative constraint violations, i.e., we have

The benchmark algorithm is run for all combinations of the penalty coefficients β1 ∈ {10, 100, 1000, 10,000}, β2 ∈ {10, 100, 1000, 10,000}. This is to illustrate the fact that optimum performance of the algorithm requires identification of the appropriate setup of the penalty terms, and sub-optimal setup leads to inferior constraint control or miniaturization rates.
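The benchmark grid can be enumerated as follows. The penalized objective is written here as the footprint area plus a linear combination of the quantified constraint violations, following the description of the penalty approach in the Introduction; the exact definitions of c1 and c2 are not reproduced in this text, so they are passed in as already-computed numbers, and the function name and sample values are hypothetical.

```python
from itertools import product

BETAS = [10, 100, 1000, 10_000]

# All combinations of (beta1, beta2) used for the benchmark runs.
setups = list(product(BETAS, BETAS))


def penalized_objective(area, c1, c2, beta1, beta2):
    """Assumed form: area supplemented with weighted constraint violations.

    c1, c2 are the quantified (non-negative) violations, computed elsewhere.
    """
    return area + beta1 * c1 + beta2 * c2


print(len(setups), "penalty setups")
# Hypothetical design: 100 mm^2 footprint, small violation of the first constraint.
for beta1, beta2 in setups[:4]:
    print(beta1, beta2, penalized_objective(100.0, 0.5, 0.0, beta1, beta2))
```

The sketch makes the sensitivity visible: the same violation shifts the objective by anything from a few percent to several orders of magnitude depending on the chosen coefficients.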

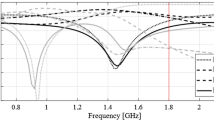

The numerical results have been gathered in Tables 2, 3 and 4. These include the achieved footprint area of the respective circuits, as well as constraint violations at the final design. Figures 4, 5 and 6 show the circuit characteristics at the initial and the optimized designs for all circuits, along with the history of the circuit footprint area and violation of constraints during the optimization run.

Figure 4. Initial (gray) and optimized (black) S-parameters of Circuit I. The vertical and horizontal lines mark the target operating bandwidth and the acceptance level for the matching |S11| and isolation |S41| responses. Also shown is the evolution of the circuit size and constraint violations (in case of feasibility, violations shown as zero): (a) design scenario I (bandwidth 1.45–1.55 GHz), (b) design scenario II (bandwidth 1.47–1.53 GHz).

Figure 5. Initial (gray) and optimized (black) S-parameters of Circuit II. The vertical and horizontal lines mark the target operating bandwidth and the acceptance level for the matching |S11| and isolation |S41| responses. Also shown is the evolution of the circuit size and constraint violations (in case of feasibility, violations shown as zero): (a) design scenario I (bandwidth 0.9–1.1 GHz), (b) design scenario II (bandwidth 0.95–1.05 GHz).

Figure 6. Initial (gray) and optimized (black) S-parameters of Circuit III. The vertical and horizontal lines mark the target operating bandwidth and the acceptance level for the matching |S11| and isolation |S41| responses. Also shown is the evolution of the circuit size and constraint violations (in case of feasibility, violations shown as zero): (a) design scenario I (bandwidth 1.15–1.25 GHz), (b) design scenario II (bandwidth 1.18–1.22 GHz).

Discussion

The results presented in “Experimental setup and results” allow us to formulate a number of remarks concerning performance of the proposed optimization procedure with explicit handling of design constraints. Furthermore, our methodology can be conclusively compared with the benchmark methods employing the penalty function approach. The observations are as follows.

- The proposed algorithm performs consistently for all considered verification circuits. In particular, it enables satisfactory control of both design constraints. No violation of the power split ratio constraint is observed, whereas the maximum violation of the matching/isolation constraint is only 0.5 dB for Circuit II (design scenario II), which is small relative to the − 20 dB acceptance level.

- The performance of the benchmark methods is highly dependent on the penalty coefficient setup. Among the sixteen combinations of parameters, only a few lead to satisfactory results in terms of both a good miniaturization ratio and sufficiently precise constraint handling. For Circuit I there are about three such ‘good’ setups, for Circuit II only one (per design scenario), and for Circuit III about three.

- For the particular setups ensuring good performance of the benchmark procedure, the obtained circuit sizes are comparable to those obtained by the proposed algorithm (which, on the other hand, does not require any setup or tailoring to the task at hand).

- In the case of Circuit II, for most combinations of penalty coefficients featuring β1 ≥ 10², the optimization process becomes stuck at its early stages, leaving a large feasibility margin for the second constraint. This is because a large value of the first penalty coefficient, along with a small margin for the power split ratio constraint (only 0.1 dB), makes the problem numerically challenging. More specifically, the objective function becomes highly nonlinear near the feasible region boundary, which hinders exploration of that region and leads to premature convergence of the process. A similar effect can be observed for Circuit III, although it is less pronounced.

- The proposed algorithm turns out to be less prone to the aforementioned issues due to the adaptive adjustment of the acceptance thresholds governed by the convergence status of the algorithm (cf. Eqs. (15, 16)).

- The average costs of rendering the optimal designs by the proposed approach are 110, 80 and 55 full-wave simulations for Circuits I, II and III, respectively, whereas the corresponding costs of the benchmark procedure are 115, 57 and 45 EM simulations. Therefore, the proposed procedure is around 20% more expensive in terms of the number of EM analyses necessary for the algorithm to converge. Yet, given that in our approach there is virtually no need for tailoring the algorithm to render a satisfactory design meeting the design specifications, this additional cost seems to be justifiable. This is because tuning the optimization procedure to ensure its satisfactory operation normally entails additional computational expenses (e.g., for adjusting penalty coefficients in the case of implicit methods). Furthermore, the primary purpose of the presented technique was to improve the precision of controlling design constraints and the miniaturization rate, both of which have been conclusively demonstrated.
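The quoted overhead can be checked with a few lines of arithmetic. The text does not state how the 20% figure was obtained; the sketch below shows that averaging the per-circuit cost ratios gives a value close to it, while pooling the simulation counts gives a smaller number. The variable names are illustrative.

```python
# Average numbers of EM simulations quoted above.
proposed = {"Circuit I": 110, "Circuit II": 80, "Circuit III": 55}
benchmark = {"Circuit I": 115, "Circuit II": 57, "Circuit III": 45}

ratios = {name: proposed[name] / benchmark[name] for name in proposed}
mean_ratio = sum(ratios.values()) / len(ratios)
total_ratio = sum(proposed.values()) / sum(benchmark.values())

print(f"mean per-circuit overhead: {100 * (mean_ratio - 1):.1f}%")   # 19.4%
print(f"overhead on pooled totals: {100 * (total_ratio - 1):.1f}%")  # 12.9%
```

Under the first reading the extra cost is dominated by Circuit II, whereas for Circuit I the proposed procedure is slightly cheaper than the benchmark.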

Given the large combined number of circuits, design scenarios, and penalty coefficient setups involved in this verification study, the observations summarized above can be regarded as conclusive. Overall, the performance of the presented procedure can be considered competitive with the benchmark (implicit) methods, both with respect to the accuracy of constraint handling and achievable miniaturization rates. The important advantages of the proposed algorithm include easy implementation and no need for adjusting any control parameters. Such adjustment normally incurs extra computational expenses and may require a certain level of experience pertinent to optimization methods.

Conclusion

The purpose of this work was to propose a novel procedure for simulation-based miniaturization of microwave passives. Our approach involves direct control of constraints imposed on electrical performance figures of the circuit under design. Linear approximation models of the constraint functions are employed to make predictions concerning solution feasibility. Appropriately quantified quality of these predictions is utilized in the decision-making process that controls the search region size within the trust region framework. Furthermore, the constraint violation tolerance thresholds are governed by the convergence indicators of the optimization process in order to foster more aggressive size reduction at its early stages. Comprehensive numerical verification involving three microstrip couplers and six design scenarios demonstrates superior performance of the proposed technique as compared to the benchmark methods employing a penalty function approach for implicit constraint handling. Its major advantages include competitive size reduction ratios, accuracy in controlling constraint violation levels, consistency of results obtained for a variety of problems, straightforward implementation, as well as no need for tailoring the procedure to handle a particular microwave structure. The last feature is particularly important in practical applications: tuning the optimization procedure to ensure satisfactory operation (e.g., setting up penalty coefficients for implicit methods) normally entails additional computational expenses and may require optimization-related know-how that many microwave engineers lack.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request. Contact person: anna.dabrowska@pg.edu.pl.

Acknowledgements

The authors would like to thank Dassault Systemes, France, for making CST Microwave Studio available. This work is partially supported by the Icelandic Centre for Research (RANNIS) Grant 206606 and by the National Science Centre of Poland Grant 2018/31/B/ST7/02369.

Author information

Contributions

Conceptualization, S.K. and A.P.; methodology, S.K. and A.P.; software, S.K. and A.P.; validation, S.K. and A.P.; formal analysis, S.K.; investigation, S.K. and A.P.; resources, S.K.; data curation, S.K. and A.P.; writing (original draft preparation), S.K. and A.P.; writing (review and editing), S.K.; visualization, S.K. and A.P.; supervision, S.K.; project administration, S.K.; funding acquisition, S.K. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koziel, S., Pietrenko-Dabrowska, A. Direct constraint control for EM-based miniaturization of microwave passives. Sci Rep 12, 13320 (2022). https://doi.org/10.1038/s41598-022-17661-7

DOI: https://doi.org/10.1038/s41598-022-17661-7
