Abstract

Using existing data can be a reliable and cost-effective way to predict species distributions, and particularly useful for recovering or expanding species. We developed a current gray wolf (Canis lupus) distribution model for the western Great Lakes region, USA, and evaluated the spatial transferability of single-state models to the region. This study is the first assessment of transferability in a wide-ranging carnivore, as well as one of few developed for large spatial extents. We collected 3500 wolf locations from winter surveys in Minnesota (2017–2019), Wisconsin (2019–2020), and Michigan (2017–2020). We included 10 variables: proportion of natural cover, pastures, and crops; distance to natural cover, agriculture, developed land, and water; major and minor road density; and snowfall (1-km res.). We created a regional ensemble distribution by weight-averaging eight models based on their performance. We also developed single-state models, and estimated spatial transferability using two approaches: state cross-validation and extrapolation. We assessed performance by quantifying correlations, receiver operating characteristic curves (ROC), sensitivities, and two niche similarity indices. The regional area estimated to be most suitable for wolves during winter (threshold = maximum sensitivity/specificity) was 106,465 km2 (MN = 48,083 km2, WI = 27,757 km2, MI = 30,625 km2) and correctly predicted 88% of wolf locations analyzed. Increasing natural cover and distance to crops were consistently important for determining regional and single-state wolf distribution. Extrapolation (vs. cross-validation) produced results with the greatest performance metrics, and were most similar to the regional model, yet good internal performance was unrelated to greater extrapolation performance. Factors influencing species distributions are scale-dependent and can vary across areas due to behavioral plasticity. When extending inferences beyond the current occurrence of individuals, assessing variation in ecology such as habitat selection, as well as methodological factors including model performance, will be critical to avoid poor scientific interpretations and develop effective conservation applications. In particular, accurate distribution models for recovering or recovered carnivores can be used to develop plans for habitat management, quantify potential of unoccupied habitat, assess connectivity modeling, and mitigate conflict, facilitating long-term species persistence.

Similar content being viewed by others

Introduction

Understanding which factors limit species distributions is a foundational question in ecology and conservation, and species distribution models (SDMs) have become an important tool to map and predict species occurrences1,2,3,4,5. Use of SDMs has proliferated in the past several decades due to increasing accessibility and quantity of species occurrence data, the development of robust modeling algorithms (e.g.6), and the improvement of software and technological resources7,8,9. In addition, SDMs have been used successfully for on-the-ground conservation and management initiatives10. For example, information regarding species occurrence and distribution is critical for natural resource decision making, including International Union for Conservation of Nature plans11, invasive species management12, state wildlife action plans13,14, and conservation of endangered species15,16. In this context, researchers often use SDMs to understand species responses to different land uses and land covers, and spatial predictions can highlight priority locations to facilitate conservation initiatives.

Because natural resource management agencies and conservation organizations often have limited resources, using existing data to predict species distributions is an important line of research17. When species location data are unavailable or limited, researchers can estimate species distributions by transferring results across spatial and temporal extents and resolutions18. Studies on spatial transferability assess how well a model can be generalized to other areas19,20, such as evaluating niche opportunities for non-native species21 or species reintroduction assessments22. However, when models are overfitted to local conditions (i.e. when a model fits the calibration data too closely), performance on validation data and the spatial transferability of the model can be reduced23. Despite the importance of spatial transferability in distribution models, and the existence of studies designed to evaluate them (e.g.19,24,25,26), most are focused on species with marked ecological or climatic limits, or virtual species (i.e. simulations27), rather than wide-ranging or ecologically flexible species.

Evaluating transferability of SDMs is particularly important when assessing recovering species and species with expanding distributions, as it can provide forecasts of future distributions and provide insights into potential transferability of a model rather than proceeding under untested assumptions28. Specifically, SDMs for recovering or recovered carnivores can be used to develop plans for habitat management, quantify potential of unoccupied habitat, assess connectivity modeling, and mitigate conflict (e.g.29,30,31). Globally, large carnivores have experienced vast range contractions driven mostly by human persecution, loss and degradation of habitat, and prey depletion32,33,34. However, these declines can be reversed (e.g.32), and populations of several large carnivores are recovering, particularly in the northern hemisphere34,35. In North America, gray wolves (Canis lupus) have recolonized portions of their historic range after severe population declines in the mid-twentieth century36,37. Our objective was to develop a current wolf distribution model for the western Great Lakes region, expecting likelihood of occurrence to be positively influenced by increasing natural cover, and negatively influenced by increasing human disturbance38,39. In addition, we assessed SDM transferability by comparing model performance in making predictions within or outside the geographical domains of the model1. This study is the first assessment of SDM transferability in a behaviorally plastic and wide-ranging large carnivore, while also adding to a limited number of studies that assessed SDM transferability in any type of ecosystem at a regional (i.e. thousands of square kilometers) scale (e.g.19,40,41).

Methods

Species background

Wolves were nearly extirpated from the contiguous United States by the 1930s, mainly due to persecution to protect livestock, habitat loss, and prey declines37. By 1974, wolves were listed on the United States Endangered Species Act (16 U.S.C. 1531–1544, 87 Stat. 884). In January 2021, wolves in Michigan, Wisconsin, and Minnesota were removed from the United States list of Threatened and Endangered Species, however a federal judge restored protections on 10 February 2022, continuing a decades-long tug and pull between state and federal control of wolf management. Given the estimated population size (about 3800 individuals), the gray wolf population was considered recovered in the western Great Lakes region by the US Fish and Wildlife Service37, encompassing roughly two-thirds of the current total population in the contiguous United States42.

Study area

Our study area comprised Minnesota, Wisconsin, and Michigan, USA. The northern portion of the study area is forest-dominated, with agriculture and human development predominant in the south. The area includes 44% forested lands, 31% cultivated crops, 6% pastures, and 7% developed lands; with lesser amounts of wetlands, shrubland, and herbaceous land covers43. Elevations ranges from 174 to 701 m above sea level. The area contains abundant lakes and streams, with most (86%) areas within 10 km of water. Human population density is low in northern portions of the study area and increases to > 100 people/km2 in southern portions.

Wolf surveys

We collected wolf location data from winter surveys in Minnesota (2017–2018), Wisconsin (2019–2020), and Michigan (2017–2020). In Minnesota, the Minnesota Department of Natural Resources (MN DNR) mailed instructions to participants (i.e. trained natural resources staff at county, state, federal, and tribal agencies) and asked them to record locations and group size estimates of all wolves and wolf sign (e.g., track, scat) observed during normal work duties from November until snowmelt the following spring (about mid-May). Participants could record locations on forms or maps, but most data were entered by participants in a web-based GIS survey application. This database was combined with wolf observations and signs recorded during other wildlife surveys (e.g., carnivore scent station survey, furbearer winter track survey) coordinated by MN DNR.

In Wisconsin, the Wisconsin Department of Natural Resources (WI DNR) conducts wolf snow-track surveys every winter in areas of known or suspected wolf pack activity, with potential wolf range divided into 164 survey blocks to ensure comprehensive coverage. During winter 2019–2020, 158 blocks (96%) were sampled. Survey blocks were delineated to ensure an entire block could be surveyed in a day. Survey blocks average 500 km2 and are bordered by public roads, waterways, or state boundaries. Wisconsin Department of Natural Resources staff, Tribal biologists, and trained volunteers conduct the surveys 1–3 days after snowfalls and attempt to traverse most snow-covered roads in survey blocks. Trackers attempt to survey blocks at least 3 times (average 2.8 surveys per block) to identify the number of individuals in every pack.

In Michigan, the Michigan Department of Natural Resources (MI DNR), with assistance from the United States Department of Agriculture Wildlife Services (USDA WS), conducts wolf track surveys every other year during winter (December–March/April, e.g. 2018 survey was from December 2017 to April 2018), consisting of intensive and extensive searches of roads and trails by truck and snowmobile for wolf tracks and sign, to count the number of individuals in each pack. The MI DNR also attempts to capture and attach GPS collars to wolves in areas to be surveyed during the next survey period, to locate packs and spatially differentiate adjacent packs. The Upper Peninsula of Michigan, where wolves currently occur, is divided into 21 wolf survey units from which a random sample, stratified by historic wolf density, is drawn for each survey (targeting at least 50% of the Upper Peninsula to be surveyed). Michigan DNR and USDA WS staff are assigned to conduct track surveys in specific units, and surveys in adjacent units are coordinated to avoid duplicate counting of wolves.

To standardize the three datasets and decrease spatial autocorrelation, we filtered the data so no more than one location (randomly selected) occurred per 1-km2 pixel (i.e. the resolution of our covariates, see below). To further test if spatial autocorrelation influenced results, we additionally filtered data to no more than one location every 5 km. We compared modeling results for both datasets by assessing model performance (ROC and sensitivity, see below), and calculating the spatial correlation between resulting maps (using Band Collection Statistics in ESRI ArcMap 10.7).

Distribution modeling

We considered 10 variables for our wolf distribution models: proportion of natural cover, proportion of pastures, proportion of cultivated crops, distance to natural cover, distance to agriculture, distance to developed land, distance to water, major road density, minor road density, and annual snowfall. All variables were resampled to 1-km resolution. For land cover, we used data from the National Land Cover Database 201643. We regrouped the original land cover types into four categories: natural, cultivated crops, pastures, and developed cover. Natural cover included all non-developed covers (i.e. forest, grassland, shrubland, etc.), and developed cover included all four NLCD developed categories (i.e. open, low, high, and very high). We calculated proportion of land covers (natural, pasture, and crops) by aggregating the original 30-m resolution layer and calculating the proportion of each cover at the 1-km scale. We developed four distance to cover layers: distance to natural cover, distance to crops, distance to pastures, and distance to developed cover, at 1-km resolution. Using data from the National Hydrography Dataset (2016), we developed a distance to water layer (i.e. rivers and lakes). We created two road density layers using TIGER/Line Shapefiles (US Census Bureau): minor road density (county and local roads) and major road density (primary and secondary roads, e.g. highways and main arteries). Snowfall data (i.e. snow depth) was obtained from the National Weather Service National Snowfall Analysis (http://www.nohrsc.noaa.gov/snowfall) which estimates snowfall in the recent past by gathering several operational data sets into a unified analysis; we obtained the total annual winter snowfall from winters 2017–2018 to 2019–2020 and calculated the average across years. To compare the range of environmental values in each state, we created a variable range violin graph each state and the regional study area.

We created a correlation matrix using all GIS layers. We found strong correlation between distance to pastures and distance to crops (r = 0.82) as well as between proportion of natural cover and proportion of crops (r = − 0.78). To determine the best combination of variables, we ran all possible combinations for the regional model (never including two correlated variables in the same model) and chose the one with the greatest performance metrics (ROC and sensitivity, see below). Because models require background data (e.g. pseudo-absence points), we generated a randomly drawn sample of 10,000 background points from the study area, gave equal weight to presence and pseudo-absence points during modeling44. We used the entire study area to draw background points assuming all of it was available to wolves, as indicated by historical distribution36,37 and more recent data confirming sporadic occurrence outside areas snow track surveys were conducted (MN DNR, WI DNR, MI DNR, unpublished data).

We used an ensemble model approach to achieve more robust predictions45, combining 8 individual algorithms: random forest (RF), generalized boosted regression (GBM), Maximum entropy (MaxEnt), generalized linear model (GLM), generalized additive model (GAM), classification tree analysis (CTA), surface range envelop (SRE, also known as BIOCLIM), and flexible discriminant analysis (FDA) (algorithm details in Appendix S1). We assessed internal performance of individual models using threefold random cross-validation, with 80% of locations used as SDM training data and 20% as SDM testing data for each iteration. We evaluated models using the area under the curve of a receiver operating characteristic (ROC) plot, true skill statistics (TSS)46, and sensitivity scores represented as the ratio of presence sites correctly predicted over the number of positive sites in the sample9.

We created the ensemble model by weight-averaging all individual models proportionally to their performance evaluation metrics scores9, which resulted in a map representing continuous likelihood of presence. We quantified the influence of each variable in each individual model by permutation importance9, the greater the value of this metric, the more importance the predictor variable has on the model. To evaluate uncertainty, we created a committee averaging map, in which each individual model estimates if the species is present or absent in a pixel by transforming the continuous likelihood of presence to a yes (1) or no (0) binary response using an optimized threshold (maximum sensitivity and specificity). When the value of the committee averaging map is 1 or 0, it means that all models predicted presence (1) or absence (0), respectively. When the prediction approaches 0.5, about half the models identified the species as present. We used the biomod2 package9 in R v. 3.6.2 (R Core Team 2020) to develop individual models, ensembles, and committee consensus maps.

Spatial transferability

Models are often sensitive to the spatial extent of the study area47 and background points strategy48, and dividing data into multiple geographic regions provides inference into how well models perform in unsampled regions28. We estimated spatial transferability using two complementary approaches: making predictions within (state cross-validation) or outside (extrapolation via restriction of background points) the geographical domains of the models. To perform a balanced comparison, for the spatial transferability analyses all three states had an equal number of points included in each model. Because Michigan had the lowest number of locations after filtering to 1-km resolution (478 locations), we randomly subsampled locations from the other two states to match that value.

For the cross-validation assessment, we used a spatially structured approach by creating three distribution models, each iteration using points from one state (i.e., MI, MN, and WI) as modeling locations, points from throughout the study area as background pseudo-absence locations, and wolf locations from the other two states as validation. For the extrapolation assessment, we similarly created three distribution models using points from only one state as modeling locations and the locations for the other two states as validation, however we restricted the background locations to occur only in the same state as the modeling locations (e.g., MI locations with MI only pseudoabsences). By doing this, we effectively created state-specific models, and then extrapolated the results to the other two unsampled states.

We quantified how well each single-state model (MI-crossvalidation, MN-crossvalidation, WI-crossvalidation, MI-extrapolation, MN-extrapolation, WI-extrapolation) predicted suitability for the study area by calculating Spearman correlation coefficients between all single-state and regional models, as well as ROC and sensitivity for the validation datasets. Additionally, we compared probability distributions with Schoener’s D and Hellinger’s I metrics49,50, which calculate niche similarity by comparing the estimates of suitability of each grid cell of the study area, and vary from 0 (no overlap) to 1 (complete overlap).

Results

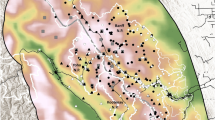

We collected 3513 wolf locations: 594 in MI, 1597 in MN, and 1322 in WI. The 1-km filtering resulted in 2703 locations (MI 478, MN 1372, WI 853), and the 5-km filtering in 1315 locations (MI 241, MN 691, WI 383). Both filtered datasets resulted in ensemble models with high performance (ROC = 0.91 and sensitivity = 88% for 1 km, and ROC = 0.89 and sensitivity = 84% for 5 km). Due to almost identical performance and high spatial correlation between the two ensembles (98.2%), we considered the 1-km filtering process satisfactory and proceeded with analyses using this dataset (Fig. 1). Additionally, after testing all combinations of correlated variables (see “Methods”), the combination of proportion of natural cover and distance to crops had the best performance metrics (Appendix Table S1), therefore we excluded distance to pastures and proportion of crops from subsequent analyses.

Wolf distribution in the western Great Lakes region, USA. (a) Wolf presence locations during 2017–2020 resampled to no more than one point per km2 with major roads shown as grey lines. (b) Regional landscape suitability (see “Methods” and Fig. 2). (c) Binary map indicating areas most suitable for wolves (suitability threshold 47.5%, see “Methods”). Maps developed with ArcMap 10.8.1 (desktop.arcgis.com). Wolf photograph: J. Belant, Global Wildlife Conservation Center.

The regional model (Fig. 1) had a ROC of 0.91. Variable permutation importance identified proportion of natural cover and distance to crops as having the greatest influence on regional wolf presence (Table 1). Likelihood of wolf presence increased with greater proportions of natural cover (~ 20% wolf likelihood at 0–20% natural cover vs. ~ 80% likelihood at 80–100% natural cover; Supplementary material Fig. S1) and greater distances from crops (20% wolf likelihood at 0 km from crops vs. 70–80% likelihood at ~ 1 km from crops). Less important variables included distance to developed cover (decreasing wolf likelihood with increasing distance), minor road density (greatest wolf likelihood at lower densities, with 50% of locations occurring at < 0.72 km/km2, and 90% at < 1.26 km/km2), and snowfall (wolf likelihood increasing to ~ 2.5 m snow depth then decreasing; Supplementary material Fig. S1). Using committee averaging, the greatest uncertainty in suitability predictions among individual models occurred in the periphery of the distribution (Supplementary material Fig. S2), with high consistency in the core distribution. Using the binary map (optimized threshold = 47.5% suitability), the estimated winter area most suitable for wolves was 106,465 km2 (MN = 48,083 km2, WI = 27,757 km2, MI = 30,625 km2, [MI upper peninsula = 18,812 km2, MI lower Peninsula = 11,813 km2]; Fig. 1), with wolf presence sensitivity of 88%, and pseudoabsence specificity of 75%.

Compared to the regional model, each single-state cross-validation model over- or underestimated suitability in different parts of the study area (Fig. 2), with overestimation often surrounding the presence locations. The WI-crossvalidation model had the greatest correlation with the regional model and the highest sensitivity for the validation points (0.74 and 78% respectively), followed by MN, then MI (Fig. 3). Internal ROC values were overall high (0.93–0.96), however the validation ROC values were markedly lower (Fig. 3), being greatest for MI and WI, followed by MN.

Top: Calibration (wolf presences) vs background (pseudo-absences) locations for the regional model, and cross-validation vs. extrapolation assessments. Bottom: Performance of each single-state model compared to the regional model (Fig. 1) in estimating landscape suitability for wolves, western Great Lakes region, USA, 2017–2020. Maps developed with ArcMap 10.8.1 (desktop.arcgis.com). Wolf photograph: J. Belant, Global Wildlife Conservation Center.

Performance metrics for the single-state distribution models (see “Methods”, Fig. 2) for wolves, western Great Lakes region, USA, 2017–2020. ROC_internal = area under the curve of an operator characteristic curve (ROC) for wolf calibration locations, ROC_validation = ROC for wolf validation locations, Validation sensitivity = proportion of correctly predicted wolf presence locations within the validation data, Correlation = Spearman’s r in relation to the regional model, Schoener’s D and Hellinger’s I = niche similarity metrics with the regional model (0 = no overlap, 1 = complete overlap, see “Methods”), MI Michigan, MN Minnesota, and WI Wisconsin.

Comparisons between the regional model and each single-state extrapolation model also indicated over- or underestimation of suitability in different parts of the study area, though not as strongly as the cross-validation models (Figs. 2, 3). The correlation values with the regional model and validation sensitivity percentages were high; best performing was MN (0.92 and 88% respectively), followed by WI and MI (Fig. 3). Internal ROC values were again overall high (0.90–0.93), with validation ROC values lower (Fig. 3), but similar for the three states (0.78–0.80).

The comparison of cross-validation and extrapolation results indicated that overall, the extrapolation models performed more similarly to the regional model, with greater correlation with the regional model, higher sensitivity for validation points, greater validation ROC values, and greater Schoener’s D and Hellinger’s I metrics. However, for internal prediction (within the spatial extent of presence locations used), the single-state cross-validation assessment resulted in marginally greater mean ROC values (0.95 vs. 0.92). The variable permutation importance of single-state models revealed the most influential variables were similar, with distance to crops and proportion of natural cover (the two most influential for the regional model) always among the top three variables (Fig. 4). However, snowfall was also sometimes within the top 3 variables, with widely different ranking across states. The variable range violin graph revealed that the available environmental gradient varied among states, with only two variables (i.e. proportion of natural and proportion of pastures) having similar ranges in all three states (Supplementary material Fig. S3). Wolves overall did not appear to select for or against any specific distance to developed land, using them as available throughout the region (Supplementary material Fig. S3).

Average variable permutation importance (8 models, see “Methods”) for wolf distribution models, western Great Lakes region, USA, 2017–2020. Shown are the regional model and each single-state cross-validation and extrapolation model (see Fig. 2). MI Michigan, MN Minnesota, WI Wisconsin, ‘cross’ cross-validation, and ‘ext’ extrapolation.

Discussion

We developed a current wolf winter distribution model for the western Great Lakes region and evaluated model transferability comparing single-state model predictions to the regional model. Single-state models with restricted background points performed slightly better than the regional model at describing wolf distribution within the geographic extent of their presence locations, likely because local models are able to detect smaller-scale variations more effectively than global models that incorporate greater heterogeneity in environment–species relationships19,41. In contrast to a previous study40, our spatial transferability assessment indicated that extrapolation produced results most similar to the regional model, as well as having better performance in relation to the validation data. Proportion of natural cover and distance to crops were the most important covariates determining regional wolf distribution, and single-state models also consistently indicated crops and natural cover among the three most influential variables.

Among states, we expected good internal performance (ROC) to be correlated with extrapolation performance51, but we found no such relationship between internal ROC and validation ROC. Similarly, a study in the Iberian Peninsula found that better internal performance metrics did not correspond with extrapolation success19. We observed variable extrapolation performance among states, likely a result of environmental differences or biotic factors such as area-specific species interactions20. From a modeling perspective, the differences in the variable extent within each state is expected to influence their extrapolation performance. For example, MN is the only state that envelops the complete extent of values for distance to crops (see Supplementary material, Fig. S3), a key variable for the regional wolf distribution model and possibly resulting in the better performance we observed for the MN-extrapolation model, and the poor performance when extrapolating to MN from the other two states. Barbosa and coauthors19 concluded that the analyzed range of values of the predictors is possibly more important than other factors, such as having a dataset free of false absences.

The regional model results suggested that distance to crops and proportion of natural cover were the most influential covariates explaining wolf distribution across the Great Lakes region. Previous studies in this area also found that wolves avoid agricultural land52, occur in forest cover52,53,54 and select for natural areas while avoiding human-modified covers including pastures, hayfields, and farms55. Wolves in Europe behaved similarly, selecting wild areas far from human disturbance39. Snowfall had minor importance at the regional level, but was more influential at the state level, particularly in Michigan, likely because it is the state with the greatest snowfall range variation, and wolves occur in some of the northernmost areas within the state. At finer spatial scales wolves seem to select areas with less snow, potentially in response to prey distributions56 or human activities57, which suggests that snowfall has a scale-dependent effect on winter wolf distribution.

All models predicted the northern half of the Lower Peninsula of Michigan to have suitable areas for wolves, though wolves are absent. The Lower Peninsula is the last major area of the western Great Lakes region with potential habitat where a breeding population of wolves are not established58. Gray wolves have only rarely been sighted in the Lower Peninsula in the past 15 years, even though wolves could cross the Straits of Mackinac (separating the Lower from the Upper Peninsula) during winters with adequate ice formation59. Habitat-based density estimates have calculated the potential for 40–10560 or 52–63 wolves59 in the Lower Peninsula. However, greater proportions of livestock-based agriculture in the Lower Peninsula, as well as greater road and human densities, may result in increased challenges such as human-caused wolf mortalities and human-wolf conflicts.

Persistence of large carnivores in human-modified landscapes is facilitated by their behavioral plasticity, which allows them to adapt to human activity through variable spatiotemporal patterns of habitat selection that facilitate human avoidance while supplying resources for persistence (e.g. prey, resting sites, etc.). In particular, road density has been identified as a major determinant of wolf presence, with wolf probability very low in areas with road densities exceeding 0.7 km/km254,59,60,62. However, half of the wolf locations in our study area occurred above this threshold (90% occurred below 1.26 km/km2), which could in part be influenced by our survey methods. Wolf responses to low-traffic roads are context-dependent, conditional on ease of travel, human settlements, time of day, prey densities, mortality risks, and seasons (e.g.63,64,65,66,67). Behavioral responses of wolves to anthropogenic disturbance can also vary due to internal factors, such as behavioral states and social affiliations61. Nonetheless, suitable land covers (i.e. forests and shrublands) are consistently selected for, suggesting some habitat selection patterns will persist regardless of context (e.g. selection of natural cover). Although behavioral plasticity might facilitate wildlife occurrence, it might not be enough to ensure long-term population viability and persistence in areas with decreasing habitat quality and availability.

When species absence data are unavailable, SDMs (such as in this study) use pseudo-absences, and the environmental span of the background from which pseudo-absences are drawn has important ramifications for predictions and performance of SDMs68,69,70. Defining the spatial extent of pseudo-absences can be subjective (except for populations limited by geographical barriers), and different strategies have been proposed to improve the selection of an appropriate dataset (e.g. random, environmental exclusion, minimum–maximum distance), with some distribution modeling techniques including regression being more affected than others (i.e. machine learning, classification trees; see44). Specifically, limiting the maximum distance of background points may improve sensitivity performance44,70. In agreement, we found that for each single-state model, though restricting background points to the same state as the calibration data had no clear effect in internal performance, it always increased validation sensitivity values, indicating improved discrimination ability. Despite being beyond the scope of this study, we highlight how the background choice can influence SDM results, and encourage further exploration of this topic (see68,70).

Conclusions

While using existing data to predict species patterns for areas with limited information is a valuable and relevant research topic17, transferring model results into unsampled regions is more complex than simply filling gaps within a landscape26. We present a first assessment of the current distribution and spatial transferability for a flexible and wide-ranging large carnivore, finding that extrapolation had better predictive power into unsampled states, and that among states, good internal performance did not ensure extrapolation success. Consideration of these limitations can help develop better spatially-explicit models of conservation priority areas, which are becoming increasingly important71,72. Assessing spatial transferability performance is key when assessing expanding and recovering species and can identify concerns with extending inferences beyond the current occurrence of individuals. Matching distribution models to the needs of particular objectives73, and assessing variation in predictive power, internal performance, as well as ecological and behavioral inferences, will continue to be critical to avoid poor scientific interpretations and develop appropriate conservation and management applications.

Data availability

The data that support the findings of this study are available from the Michigan, Minnesota, and Wisconsin Departments of Natural Resources. Legal restrictions apply to the availability of these data, which were used under agreement for the current study, and so are not publicly available. Data should be requested from each natural state agency (see author list for reference).

References

Elith, J. & Leathwick, J. R. Species distribution models: Ecological explanation and prediction across space and time. Annu. Rev. Ecol. Evol. Syst. 40, 677–697 (2009).

Guisan, A. & Thuiller, W. Predicting species distribution: Offering more than simple habitat models. Ecol. Lett. 8, 993–1009 (2005).

Guisan, A. & Zimmermann, N. E. Predictive habitat distribution models in ecology. Ecol. Model. 135, 147–186 (2000).

Wilkinson, D. P., Golding, N., Guillera-Arroita, G., Tingley, R. & McCarthy, M. A. A comparison of joint species distribution models for presence–absence data. Methods Ecol. Evol. 10, 198–211 (2018).

Zimmermann, N. E., Edwards, T. C., Graham, C. H., Pearman, P. B. & Svenning, J.-C. New trends in species distribution modelling. Ecography (Cop.) 33, 985–989 (2010).

Elith, J. et al. Novel methods improve prediction of species’ distributions from occurrence data. Ecography (Cop.) 29, 129–151 (2006).

Kass, J. M. et al. Wallace: A flexible platform for reproducible modeling of species niches and distributions built for community expansion. Methods Ecol. Evol. 9, 1151–1156 (2018).

Morisette, J. T. et al. VisTrails SAHM: Visualization and workflow management for species habitat modeling. Ecography (Cop.) 36, 129–135 (2013).

Thuiller, W., Lafourcade, B., Engler, R. & Araújo, M. B. BIOMOD—A platform for ensemble forecasting of species distributions. Ecography (Cop.) 32, 369–373 (2009).

Guisan, A. et al. Predicting species distributions for conservation decisions. Ecol. Lett. 16, 1424–1435 (2013).

Syfert, M. M. et al. Using species distribution models to inform IUCN Red List assessments. Biol. Conserv. 177, 174–184 (2014).

Robinson, A. P., Walshe, T., Burgman, M. A. & Nunn, M. Invasive Species: Risk Assessment and Management (Cambridge University Press, 2017).

Fontaine, J. J. Improving our legacy: Incorporation of adaptive management into state wildlife action plans. J. Environ. Manag. 92, 1403–1408 (2011).

Gantchoff, M., Conlee, L. & Belant, J. Conservation implications of sex-specific landscape suitability for a large generalist carnivore. Divers. Distrib. 25, 1488–1496 (2019).

Camaclang, A. E., Maron, M., Martin, T. G. & Possingham, H. P. Current practices in the identification of critical habitat for threatened species. Conserv. Biol. 29, 482–492 (2014).

Schwartz, M. W. The Performance of the Endangered Species Act. Annu. Rev. Ecol. Evol. Syst. 39, 279–299 (2008).

Acevedo, P. et al. Generalizing and transferring spatial models: A case study to predict Eurasian badger abundance in Atlantic Spain. Ecol. Model. 275, 1–8 (2014).

Werkowska, W., Márquez, A. L., Real, R. & Acevedo, P. A practical overview of transferability in species distribution modeling. Environ. Rev. 25, 127–133 (2017).

Barbosa, A. M., Real, R. & MarioVargas, J. Transferability of environmental favourability models in geographic space: The case of the Iberian desman (Galemys pyrenaicus) in Portugal and Spain. Ecol. Model. 220, 747–754 (2009).

Randin, C. F. et al. Are niche-based species distribution models transferable in space?. J. Biogeogr. 33, 1689–1703 (2006).

Jiménez-Valverde, A. et al. Use of niche models in invasive species risk assessments. Biol. Invasions 13, 2785–2797 (2011).

Torres, R. T. et al. Favourableness and connectivity of a Western Iberian landscape for the reintroduction of the iconic Iberian ibex Capra pyrenaica. Oryx 51, 709–717 (2016).

Luoto, M., Kuussaari, M. & Toivonen, T. Modelling butterfly distribution based on remote sensing data. J. Biogeogr. 29, 1027–1037 (2002).

Cerasoli, F. et al. Determinants of habitat suitability models transferability across geographically disjunct populations: Insights from Vipera ursinii urs inii. Ecol. Evol. 11, 3991–4011 (2021).

Dobrowski, S. Z. et al. Modeling plant ranges over 75 years of climate change in California, USA: Temporal transferability and species traits. Ecol. Monogr. 81, 241–257 (2011).

Townsend Peterson, A., Papeş, M. & Eaton, M. Transferability and model evaluation in ecological niche modeling: A comparison of GARP and Maxent. Ecography (Cop.) 30, 550–560 (2007).

Qiao, H. et al. An evaluation of transferability of ecological niche models. Ecography (Cop.) 42, 521–534 (2018).

Wenger, S. J. & Olden, J. D. Assessing transferability of ecological models: An underappreciated aspect of statistical validation. Methods Ecol. Evol. 3, 260–267 (2012).

Gantchoff, M., Conlee, L. & Belant, J. L. Planning for carnivore recolonization by mapping sex-specific landscape connectivity. Glob. Ecol. Conserv. 21, e00869 (2020).

Gantchoff, M. G. et al. Potential distribution and connectivity for recolonizing cougars in the Great Lakes region, USA. Biol. Conserv. 257, 109144 (2021).

Boudreau, M. et al. Spatial prioritization of public outreach in the face of carnivore recolonization. J. Appl. Ecol. 59, 757–767 (2002).

Chapron, G. et al. Recovery of large carnivores in Europe’s modern human-dominated landscapes. Science 80(346), 1517–1519 (2014).

Laliberte, A. S. & Ripple, W. J. Range contractions of North American carnivores and ungulates. Bioscience 54, 123 (2004).

Ripple, W. J. et al. Status and ecological effects of the world’s largest carnivores. Science 343, 1241484 (2014).

Gompper, M. E., Belant, J. L. & Kays, R. Carnivore coexistence: America’s recovery. Science 347, 382–383 (2015).

Mech, L. D. Where can wolves live and how can we live with them?. Biol. Conserv. 210, 310–317 (2017).

United States Fish and Wildlife Service (USFWS). Endangered and threatened wildlife and plants; Removing the gray wolf (Canis lupus) from the list of endangered and threatened wildlife. Fed. Reg. 85(213), 69778–69895 (2020).

Gehring, T. M. & Potter, B. A. Wolf habitat analysis in Michigan: An example of the need for proactive land management for carnivore species. Wild. Soc. Bull. 33, 1237–1244 (2005).

Falcucci, A., Maiorano, L., Tempio, G., Boitani, L. & Ciucci, P. Modeling the potential distribution for a range-expanding species: Wolf recolonization of the Alpine range. Biol. Conserv. 158, 63–72 (2013).

Torres, L. G. et al. Poor transferability of species distribution models for a pelagic predator, the grey petrel, indicates contrasting habitat preferences across ocean basins. PLoS One 10, e0120014 (2015).

Olson, L. E. et al. Improved prediction of Canada lynx distribution through regional model transferability and data efficiency. Ecol. Evol. 11, 1667–1690 (2021).

Carroll, C., Rohlf, D. J., vonHoldt, B. M., Treves, A. & Hendricks, S. A. Wolf delisting challenges demonstrate need for an improved framework for conserving intraspecific variation under the endangered species act. Bioscience 71, 73–84 (2020).

Yang, L. et al. A new generation of the United States National Land Cover Database: Requirements, research priorities, design, and implementation strategies. ISPRS J. Photogramm. Remote Sens. 146, 108–123 (2018).

Barbet-Massin, M., Jiguet, F., Albert, C. H. & Thuiller, W. Selecting pseudo-absences for species distribution models: How, where and how many?. Methods Ecol. Evol. 3, 327–338 (2012).

Marmion, M., Parviainen, M., Luoto, M., Heikkinen, R. K. & Thuiller, W. Evaluation of consensus methods in predictive species distribution modelling. Divers. Distrib. 15, 59–69 (2009).

Allouche, O., Tsoar, A. & Kadmon, R. Assessing the accuracy of species distribution models: Prevalence, kappa and the true skill statistic (TSS). J. Appl. Ecol. 43, 1223–1232 (2006).

Paton, R. S. & Matthiopoulos, J. Defining the scale of habitat availability for models of habitat selection. Ecology https://doi.org/10.1890/14-2241.1 (2015).

Derville, S., Torres, L. G., Iovan, C. & Garrigue, C. Finding the right fit: Comparative cetacean distribution models using multiple data sources and statistical approaches. Divers. Distrib. 24, 1657–1673 (2018).

Warren, D. L., Glor, R. E. & Turelli, M. Environmental niche equivalency versus conservatism: Quantitative approaches to niche evolution. Evolution 62, 2868–2883 (2008).

Warren, D. L., Glor, R. E. & Turelli, M. ENMTools: A toolbox for comparative studies of environmental niche models. Ecography https://doi.org/10.1111/j.1600-0587.2009.06142.x (2010).

Arntzen, J. W. From descriptive to predictive distribution models: A working example with Iberian amphibians and reptiles. Front. Zool. 3, 1–11 (2006).

Conway, K. Wolf recovery—GIS facilitates habitat mapping in the Great Lake States. GIS WORLD 9, 54–57 (1996).

Boitani, L. Wolf conservation and recovery. In Wolves: Behavior, Ecology, and Conservation (eds Mech, L. D. & Boitani, L.) (University of Chicago Press, 2007).

Mladenoff, D. J., Sickley, T. A., Haight, R. G. & Wydeven, A. P. A regional landscape analysis and prediction of favorable gray wolf habitat in the northern Great Lakes region. Conserv. Biol. 9, 279–294 (1995).

Treves, A., Martin, K. A., Wiedenhoeft, J. E. & Wydeven, A. P. Dispersal of gray wolves in the Great Lakes region. In Recovery of Gray Wolves in the Great Lakes Region of the United States 191–204 (Springer, 2009).

Nelson, M. E. Winter range arrival and departure of white-tailed deer in northeastern Minnesota. Can. J. Zool. 73, 1069–1076 (1995).

Droghini, A. & Boutin, S. Snow conditions influence grey wolf (Canis lupus) travel paths: The effect of human-created linear features. Can. J. Zool. 96, 39–47 (2018).

Beyer, D. E., Peterson, R. O., Vucetich, J. A. & Hammill, J. H. Wolf population changes in Michigan. In Recovery of Gray Wolves in the Great Lakes Region of the United States 65–85 (Springer, 2009).

Claeys, G. B. Wolves in the Lower Peninsula of Michigan: Habitat modeling, evaluation of connectivity, and capacity estimation (Doctoral dissertation, Duke University) (2010)..

Gehring, T. M. & Potter, B. A. Wolf habitat analysis in Michigan: An example of the need for proactive land management for carnivore species. Wildl. Soc. Bull. 33, 1237–1244 (2005).

Mancinelli, S., Falco, M., Boitani, L. & Ciucci, P. Social, behavioural and temporal components of wolf (Canis lupus) responses to anthropogenic landscape features in the central Apennines, Italy. J. Zool. 309, 114–124 (2019).

Potvin, M. J. et al. Monitoring and habitat analysis for wolves in upper Michigan. J. Wildl. Manag. 69, 1660–1669 (2005).

Whittington, J. et al. Caribou encounters with wolves increase near roads and trails: A time-to-event approach. J. Appl. Ecol. 48, 1535–1542 (2011).

Zimmermann, B., Nelson, L., Wabakken, P., Sand, H. & Liberg, O. Behavioral responses of wolves to roads: Scale-dependent ambivalence. Behav. Ecol. 25, 1353–1364 (2014).

Kojola, I. et al. Wolf visitations close to human residences in Finland: The role of age, residence density, and time of day. Biol. Conserv. 198, 9–14 (2016).

Gaynor, K. M., Hojnowski, C. E., Carter, N. H. & Brashares, J. S. The influence of human disturbance on wildlife nocturnality. Science 360, 1232–1235 (2018).

Kautz, T. M. et al. Large carnivore response to human road use suggests a landscape of coexistence. Glob. Ecol. Conserv. 30, e01772 (2021).

Thuiller, W., Brotons, L., Araújo, M. B. & Lavorel, S. Effects of restricting environmental range of data to project current and future species distributions. Ecography (Cop.) 27, 165–172 (2004).

Václavík, T. & Meentemeyer, R. K. Equilibrium or not? Modelling potential distribution of invasive species in different stages of invasion. Divers. Distrib. 18, 73–83 (2011).

VanDerWal, J., Shoo, L. P., Graham, C. & Williams, S. E. Selecting pseudo-absence data for presence-only distribution modeling: How far should you stray from what you know?. Ecol. Model. 220, 589–594 (2009).

Brum, F. T. et al. Global priorities for conservation across multiple dimensions of mammalian diversity. Proc. Natl. Acad. Sci. U.S.A. 114, 7641–7646 (2017).

Rosauer, D. F., Pollock, L. J., Linke, S. & Jetz, W. Phylogenetically informed spatial planning is required to conserve the mammalian tree of life. Proc. Biol. Sci. 284, 20170627 (2017).

Guillera-Arroita, G. et al. Is my species distribution model fit for purpose? Matching data and models to applications. Glob. Ecol. Biogeogr. 24, 276–292 (2015).

Acknowledgements

Manuscript preparation was supported by the State University of New York College of Environmental Science and Forestry and Camp Fire Conservation Fund. We are grateful to individuals from the respective state agencies for data collection and federal and tribal agencies, as well as individuals from the public, that reported observations. We thank two anonymous reviewers for their comments and suggestions.

Author information

Authors and Affiliations

Contributions

M.G.G.: Conceptualization, Methodology, Formal analysis, Writing—Original draft, Writing—Review and editing. J.L.B.: Conceptualization, Methodology, Writing—Review and editing, Supervision. D.E.B., J.D.E., D.M.M., D.C.N., J.L.P.T., and B.J.R.: Resources, Data curation, Writing—Review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gantchoff, M.G., Beyer, D.E., Erb, J.D. et al. Distribution model transferability for a wide-ranging species, the Gray Wolf. Sci Rep 12, 13556 (2022). https://doi.org/10.1038/s41598-022-16121-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-16121-6

This article is cited by

-

Applying XGBoost and SHAP to Open Source Data to Identify Key Drivers and Predict Likelihood of Wolf Pair Presence

Environmental Management (2024)

-

Ecological niche model transferability of the white star apple (Chrysophyllum albidum G. Don) in the context of climate and global changes

Scientific Reports (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.