Abstract

Deep learning architectures have transformed data analytics in the geosciences, complementing traditional approaches to geological problems. Although deep learning applications in geosciences show encouraging signs, their potential remains untapped because of limited data availability and the in-depth expertise required to produce high-quality labeled datasets. We addressed these issues by developing a novel style-based deep generative adversarial network (GAN) model, PetroGAN, to create the first realistic synthetic petrographic datasets across different rock types. PetroGAN adopts the StyleGAN2 architecture with adaptive discriminator augmentation (ADA) to robustly replicate the statistical and aesthetic characteristics of petrographic data and to improve its internal variance. In this study, the training dataset consists of more than 10,000 thin section images under both plane- and cross-polarized light. Using our proposed approach, the model reached a state-of-the-art Fréchet Inception Distance (FID) score of 12.49 for petrographic images. We further observed that FID values vary with lithology type and image resolution. The generated images were validated through a survey of participants with various backgrounds and levels of expertise in geosciences. The survey established that even subject matter experts found the generated images indistinguishable from real images. This study highlights that GANs are a powerful method for generating realistic synthetic data in geosciences. Moreover, they are a promising tool for image self-labeling, reducing the effort needed to produce large, high-quality labeled geoscience datasets. Furthermore, our study shows that PetroGAN can be applied to other geoscience datasets, opening new research horizons for deep learning in various fields of the geosciences, particularly where datasets are limited.


Introduction

Advances in artificial intelligence and machine learning over the last decades have accelerated the digital transformation of geosciences and helped to generate meaningful insights from geological data like never before, using a vast array of algorithms1,2,3,4. Recently, with the advent of generative models such as generative adversarial networks (GANs)5, variational autoencoders (VAEs)6, transformer GANs7, and diffusion models8, deep learning applications have achieved state-of-the-art results in many domains, including geosciences. In addition, some reports reveal that the outcomes of these generative models can match geologist-level analysis in various aspects of visual recognition (Table 1). Studies have demonstrated that GANs are a powerful tool for generating realistic and diverse images in an unsupervised manner, and they have already been adopted in several fields, including super-resolution, image-to-image translation, text-to-image translation, style mixing, and realistic image generation (Table 1). In general, the objective of a GAN is to capture the data distribution via a minimax two-player game, producing synthetic samples that mimic the statistical and aesthetic characteristics of the original dataset5, even to the point of deceiving human observers asked to discriminate real images from generated ones9,10,11. In recent years, geoscientists have adopted deep learning-based analytics, such as image processing, in their workflows. However, the lack of high-quality, labeled, varied, and sufficiently large datasets12 has resulted in models that are overtrained and overfit to certain geological contexts13, or in too little data to yield satisfactory results with deep learning algorithms such as convolutional neural networks (CNNs)14. As a result, transfer learning has been suggested as an alternative approach4,15,16 to avoid or minimize the risk of overtraining on a single geological context.
Furthermore, the high accuracy reported for transfer learning introduces another source of uncertainty: models trained to recognize animals or other everyday objects are applied to classify geological images, such as seismic and petrographic images, whose distributions and high- and low-level features are entirely different. Therefore, there is still a gap in image processing and analysis that generative models could help address, bringing deep learning applications closer to geological tasks.

Historically, clustering techniques and edge detection algorithms were the main algorithms for digital image processing17. Recent studies have explored and utilized deep CNNs to address visual recognition and image processing in geosciences4,17. The implementation of GANs in geosciences, particularly for petrographic image datasets, has been limited and remains relatively under-explored. One of the significant limitations to applying GANs to petrographic images is that most GAN architectures require a massive amount of data, as illustrated by the first implementation of GANs on the MNIST and CIFAR datasets (60,000 images each)5. Additionally, training on datasets of only a few thousand images has led to overfitting of the generator in GANs18. This work addresses the implementation of GANs for petrographic images by (i) experimenting with different dataset sizes, (ii) using various image resolutions, and (iii) applying truncation values, to create a framework for generating realistic synthetic petrographic datasets. Our work uses the adaptive discriminator augmentation (ADA) implementation of StyleGAN219,20,21 to generate realistic synthetic petrographic images because of the high quality of the images this model generates, its backward compatibility, its stability, and its demonstrated viability on non-facial datasets. In addition, we employ the state-of-the-art Fréchet Inception Distance (FID)22 score as a metric to evaluate the generated images. This paper also addresses the limited size of petrographic thin section datasets: after preprocessing, the images collected and sliced were of sufficient volume to produce meaningful results with this generative model. The objectives of this work are to:

1. Explore the best image resolution and dataset size to generate realistic thin sections.
2. Develop a novel deep learning framework to generate synthetic petrographic datasets.
3. Discuss the properties of a petrographic GAN model using latent space, transfer learning, interpolation, truncation, and feature extraction21,23,24,25.
4. Evaluate the synthetic datasets through a simple survey of subject matter experts.

We further aim to highlight the application of GANs and other generative models as a way forward for self-labeling and image generation tasks, and to show how they could support the successful execution of deep learning algorithms and provide a novel workflow for image analysis in geosciences.

Related work

Recently, GANs have been widely adopted in geosciences, motivated by the possibility of generating and manipulating a latent space associated with the geological data of interest, i.e., the high-dimensional space in which a representation of the data is encoded2,26,27. This latent space has been used to increase the resolution and quality of image datasets. Previous works have demonstrated the far-reaching impact and application of GANs in geosciences, from reservoir simulation to history matching (see Table 1)2,27,28,29,30.

Furthermore, GANs have been proposed as a tool to create synthetic carbonate components4 and to obtain super-resolution micro-computed tomography (micro-CT) images29,32 for digital rock physics workflows. GANs have also been used successfully to assist in the reconstruction and classification of carbonate thin sections31, positioning them as a possible tool to enhance carbonate lithology interpretation workflows in combination with core images and Fullbore Formation MicroImager (FMI) images. Recent applications have also repurposed GANs designed for 2D image generation for 1D time-series generation, an approach that could have extensive applications in the geosciences33.

Methods

In this study, the datasets consisted of cross-polarized (XPL) and plane-polarized (PPL) RGB thin section images. Information from XPL and PPL images is crucial to determine the minerals present and the lithological variations in thin sections. The datasets were prepared using (i) the dataset tool provided in the StyleGAN repository20,21 and (ii) image slicing as a data augmentation technique. The StyleGAN architecture was selected for its state-of-the-art (SoTA) scores and the ability to experiment with its learned latent space (Table 2)20,21. This architecture and its derivatives are the current SoTA for unconditional image generation on the CIFAR-10 dataset (Table 3)34.

PetroGAN architecture

In this work, the proposed GAN model, PetroGAN, adopts a style-based GAN architecture (Fig. 1). The model consists of (i) a latent vector z, i.e., the representation of an image in the latent space Z; (ii) a mapping network of eight fully connected layers that maps z to the intermediate latent space W, the space of all style vectors w; and (iii) Adaptive Instance Normalization (AdaIN) layers35, through which w controls the features in the generator. In the original StyleGAN, high-resolution images are generated by progressive growing, which reduces the complexity of the task by increasing the image resolution step by step19. However, progressive growing has been linked to artifacts in the generated images, one of the main reasons behind the re-engineering of the model in StyleGAN220,21.

Original StyleGAN architecture: (a) the latent vector z is introduced; (b) eight fully connected layers are used to obtain (c) the latent code w containing the features; and (d) a series of AdaIN, normalization, and convolution operations using progressive growing generates high-resolution images19. Major modifications were made from (e) StyleGAN to (f) StyleGAN2: the model is simplified by removing the initial processing of the constant (1), removing the mean when normalizing the features (2), and moving the noise module (+) outside the style block (3). (Modified from the StyleGAN and StyleGAN2 papers19,20.)
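To make step (ii) concrete, the following is a minimal NumPy sketch of a StyleGAN-style mapping network, not PetroGAN's trained network. It assumes 512-dimensional z and w, pixel normalization of z, and leaky ReLU activations with slope 0.2 as in the StyleGAN papers; the weights are random placeholders, and details such as the equalized learning rate are omitted.

```python
import numpy as np

def pixel_norm(z, eps=1e-8):
    """Normalize the latent vector to unit average squared magnitude."""
    return z / np.sqrt(np.mean(z ** 2) + eps)

def leaky_relu(x, slope=0.2):
    return np.where(x >= 0, x, slope * x)

def make_mapping_network(dim=512, n_layers=8, seed=0):
    """Random (untrained) weights for an illustrative 8-layer MLP."""
    rng = np.random.default_rng(seed)
    # He-style scaling keeps activations in a reasonable range
    return [(rng.standard_normal((dim, dim)) * np.sqrt(2.0 / dim), np.zeros(dim))
            for _ in range(n_layers)]

def mapping(z, layers):
    """Map a latent vector z in Z to a style vector w in W."""
    x = pixel_norm(z)
    for weight, bias in layers:
        x = leaky_relu(x @ weight + bias)
    return x

layers = make_mapping_network()
z = np.random.default_rng(1).standard_normal(512)
w = mapping(z, layers)
```

In a trained model, w (rather than z) is what the affine transforms turn into per-layer styles, which is why latent-space editing is usually done in W.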

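$$\mathrm{AdaIN}(x_i, y) = y_{s,i}\,\frac{x_i - \mu(x_i)}{\sigma(x_i)} + y_{b,i} \qquad (1)$$

Eq. (1) is a special normalization operation in which each input feature map x_i is normalized per instance and then scaled and biased using the style information, where µ(x_i) and σ(x_i) are the mean and standard deviation of x_i, and y_{s,i} and y_{b,i} are the pair of style values (scale and bias) for feature map i21.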
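Eq. (1) can be sketched in a few lines of NumPy for a single image. This is an illustrative implementation rather than the StyleGAN code; the array layout (channels, height, width) and the small constant `eps` guarding against division by zero are our assumptions.

```python
import numpy as np

def adain(x, y_s, y_b, eps=1e-8):
    """Adaptive instance normalization, Eq. (1).

    x:   feature maps of one instance, shape (channels, height, width)
    y_s: per-channel style scale y_{s,i}, shape (channels,)
    y_b: per-channel style bias y_{b,i}, shape (channels,)
    """
    mu = x.mean(axis=(1, 2), keepdims=True)      # mu(x_i) per channel
    sigma = x.std(axis=(1, 2), keepdims=True)    # sigma(x_i) per channel
    x_norm = (x - mu) / (sigma + eps)
    return y_s[:, None, None] * x_norm + y_b[:, None, None]

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8)) * 3.0 + 1.0
y_s = np.array([0.5, 1.0, 2.0, 3.0])
y_b = np.array([-1.0, 0.0, 1.0, 2.0])
out = adain(x, y_s, y_b)
```

After AdaIN, each channel carries the mean y_{b,i} and standard deviation y_{s,i} supplied by the style, which is how w controls the generator's features.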

For this reason, we use the second iteration of the StyleGAN line of models (Fig. 1f)20,21, which further developed the original StyleGAN19. This architecture is under constant development and is backward compatible with the preceding StyleGAN architectures, sharing the same dataset preparation tools, accepted image resolutions, and workflows. Although the latest iteration of this model is StyleGAN343, we did not choose it because it did not reach an acceptable FID score and diverged with the same dataset size. The unintended artifacts of StyleGAN, caused primarily by the progressive growing technique, were addressed in StyleGAN220,21 by simplifying and eliminating steps in the architecture (Fig. 1f). Instead of progressive growing, StyleGAN2 generates high-resolution images using skip connections20, which let the output of some layers bypass others and feed into subsequent layers, as in Residual Network (ResNet) architectures44. Style mixing is another application of this architecture: styles are extracted after the fully connected layers by an affine transform (A in Fig. 1e), and the style blocks control styles ranging from coarse to fine. For a facial dataset, these range from pose (coarse) to eye color (fine). Each style block consists of modulation, convolution, and normalization layers; the block starts with a modulation operation, Eq. (2), which scales each input feature map according to the extracted style21.

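$$w'_{ijk} = s_i \cdot w_{ijk} \qquad (2)$$

where w and w′ are the original and modulated weights, respectively, s_i is the scale corresponding to the i-th input feature map, and j and k enumerate the output feature maps and the spatial footprint of the convolution, respectively. This is followed by a 3 × 3 convolution, and the style block is finalized by normalizing (demodulating) the weights using Eq. (3), in which a small constant ε is added to avoid numerical instability during training:

$$w''_{ijk} = \frac{w'_{ijk}}{\sqrt{\sum_{i,k} \left(w'_{ijk}\right)^2 + \epsilon}} \qquad (3)$$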
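The modulation and demodulation of Eqs. (2) and (3) act on the convolution weights rather than on the activations. A minimal NumPy sketch, assuming weights laid out as (output channels j, input channels i, kernel height, kernel width), so that the sum over i and k in Eq. (3) runs over the last three axes:

```python
import numpy as np

def modulate_demodulate(weight, style, eps=1e-8):
    """StyleGAN2-style weight (de)modulation, Eqs. (2) and (3).

    weight: convolution weights w, shape (out_ch, in_ch, kh, kw)
    style:  per-input-channel scales s_i, shape (in_ch,)
    """
    # Eq. (2): scale the weights that read from input feature map i
    w_mod = weight * style[None, :, None, None]
    # Eq. (3): renormalize each output feature map j over inputs i and taps k
    norm = np.sqrt(np.sum(w_mod ** 2, axis=(1, 2, 3), keepdims=True) + eps)
    return w_mod / norm

rng = np.random.default_rng(0)
weight = rng.standard_normal((8, 4, 3, 3))
style = rng.uniform(0.5, 2.0, size=4)
w_demod = modulate_demodulate(weight, style)
```

After demodulation, the weights feeding every output feature map have unit L2 norm, which keeps the expected output scale independent of the style; this replaces the explicit instance normalization of the original StyleGAN.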

Dataset sources

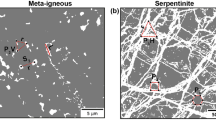

Petrographic images were collected from publicly available sources, as listed in Table 4. The dataset consists of high-resolution images of 1701 × 1686 pixels collected from the Virtual Petrographic Microscope (VPM) project in PPL and XPL, with different rotation angles for each image45 (Fig. 2e). This dataset is complemented by 800 × 533 pixel petrographic images taken from the Strekeisen project46 (Fig. 2a–d). Images from all datasets were divided into four main rock types: (1) plutonic, (2) volcanic, (3) metamorphic, and (4) sedimentary. Different magnifications were also included to obtain several representations of various minerals: 10× and 20× from the Strekeisen project and the Atlas of sedimentary rocks book47, 4× from the JD. Derochette project48, and full thin section photomicrographs from the VPM.

Four lithology classes extracted from the Strekeisen dataset: (a) plutonic, (b) metamorphic, (c) volcanic, and (d) sedimentary rocks in thin section; (e) example of image slicing applied to the VPM dataset. Image slicing was applied to Macquarie University images, splitting each original image into five representative subsections.

Image slicing and final dataset

Data processing was performed through standard image manipulation using the tools provided with the StyleGAN2 application, NumPy49, OpenCV, Pillow, and PyTorch50. As required by the StyleGAN2 architecture, images had to be square, with dimensions that are powers of two (e.g., 32 × 32, 256 × 256, or 512 × 512 px). The original images were cropped or sliced to 512 × 512 px, the highest possible resolution that preserves the essential features of the petrographic dataset, to obtain a sizable training dataset that satisfies the StyleGAN2 dataset requirements18,19,20. The final dataset consisted of 10,070 petrographic images belonging to four classes; this combined set was used to train the GAN to generate 512 × 512 px images. One of the main objectives of the dataset preparation was to obtain more than 10,000 images with a balance between lithology classes, as shown in Table 5.
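The slicing step can be sketched as computing non-overlapping square crop boxes. The helper below is hypothetical (`tile_boxes` is our name, not part of the StyleGAN2 tooling); it assumes non-overlapping tiles and discards the remainder at the right and bottom edges, whereas the actual slicing may have used other layouts, such as the five representative subsections shown in Fig. 2e.

```python
def tile_boxes(width, height, tile=512):
    """Return (left, upper, right, lower) boxes of non-overlapping square tiles."""
    # StyleGAN2 only accepts square images whose side is a power of two
    if tile <= 0 or tile & (tile - 1):
        raise ValueError("tile size must be a power of two")
    boxes = []
    for top in range(0, height - tile + 1, tile):
        for left in range(0, width - tile + 1, tile):
            boxes.append((left, top, left + tile, top + tile))
    return boxes

vpm_boxes = tile_boxes(1701, 1686)      # a VPM-sized image
streck_boxes = tile_boxes(800, 533)     # a Strekeisen-sized image
```

Each box follows Pillow's `(left, upper, right, lower)` convention, so a tile can be cut with `Image.crop(box)`. A 1701 × 1686 px image yields a 3 × 3 grid of nine 512 × 512 tiles, and an 800 × 533 px image yields a single tile.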

Training procedures

Stage I

As a minimum viable product (MVP), training was first conducted using only igneous images: 15,294 images of 32 × 32 pixels taken exclusively from the igneous rocks available from the VPM and the Strekeisen project (SP). The objective of this test was to ensure that the model could converge, as GAN models usually need extensive training and high-end computing capabilities entailing one or several graphics processing units (GPUs). The MVP trained for four days and 13 hours on a Quadro M4000 with 8 GB of video RAM, 30 GB of RAM, and an eight-core CPU; the model converged and achieved an FID score of 7.49.

Stage II

The images were set to a standard size of 512 × 512 px, the maximum size possible with the available dataset. The final dataset consisted of 10,070 representative images of thin sections in both XPL and PPL from four classes: (i) plutonic, (ii) volcanic, (iii) metamorphic, and (iv) sedimentary rocks. The model was first evaluated using the FID score when it reached 80 Kimgs (thousands of real images shown to the discriminator) to assess the training speed, and every 140 Kimgs thereafter. Additional models were trained using 256 × 256 and 128 × 128 px versions of the dataset to evaluate how the FID score varies with resolution for the same dataset size. Training was terminated when the FID values stopped improving and began to oscillate, i.e., at convergence, and the model with the lowest FID score was selected. Training was conducted on a Quadro RTX 5000 with 16 GB of video RAM, 30 GB of RAM, and an eight-core CPU, taking (1) 264 GPU hours for the 512 × 512 px model, (2) three days and five hours for the 256 × 256 px model, and (3) 72 GPU hours for the 128 × 128 px model.
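The evaluation schedule and model selection can be expressed compactly. The sketch assumes the FID is evaluated at 80 Kimgs and then every 140 Kimgs, which is consistent with the values reported in the Results (80 + 19 × 140 = 2740 and 80 + 46 × 140 = 6520 Kimgs). The patience-based stopping rule is our simplified stand-in for "no improvement and oscillation", and the FID history in the example is made up for illustration only.

```python
def snapshot_kimg(n, first=80, interval=140):
    """Kimgs processed at the n-th FID evaluation (n = 0 is the first one)."""
    return first + n * interval

def select_best_snapshot(fid_history, patience=5):
    """Return (index, fid) of the best snapshot, stopping once FID stalls.

    Training is considered converged when `patience` consecutive evaluations
    fail to beat the best FID seen so far.
    """
    best_idx, best_fid, stale = 0, float("inf"), 0
    for idx, fid in enumerate(fid_history):
        if fid < best_fid:
            best_idx, best_fid, stale = idx, fid, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best_idx, best_fid

# Illustrative (invented) FID history, one value per evaluation
toy_history = [50.0, 30.0, 20.0, 18.0, 17.5, 18.0, 17.0, 17.2, 17.1, 17.3, 17.4]
best = select_best_snapshot(toy_history, patience=3)
```

Keeping the lowest-FID snapshot rather than the last one matters because, once FID oscillates, later snapshots are not guaranteed to be better.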

Stage III

To evaluate the model’s capability to adapt to specific lithologies, we used the 512 × 512 model as the starting point for domain-specific thin section models. To this end, we used three lithology classes from the original dataset, applying data augmentation and slicing to the original images. The main goal was to generate as many domain-specific images as possible without the class-balancing constraint used in the all-lithology model. Training was resumed from the 512 × 512 all-lithology model and ran for 1120 Kimgs, taking 44 h on the same GPU.

Metrics

Several metrics help evaluate GAN performance, such as the FID score, the Inception score, and evaluation by domain experts. The most widely used, state-of-the-art metric is the Fréchet Inception Distance score9,19,21,22, which captures the similarity of generated images to real ones and is considered better than other metrics such as the Inception score24. This metric compares the statistical distribution of the generated images with that of the real images: features of both image sets are extracted from the final pooling layer of the InceptionV3 model, the activations of each set are summarized as a multivariate Gaussian distribution with its mean and covariance, and the distance between the real and generated distributions is computed as the Fréchet distance22. Figure 3 illustrates how the FID score reacts to progressive contamination of petrographic images. The lower the FID score, the closer the two image distributions, i.e., the closer a generated image dataset is to the real images.
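Formally, for real and generated feature sets with means μ_r, μ_g and covariances Σ_r, Σ_g, FID = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}). The NumPy sketch below computes this distance from precomputed feature arrays. It assumes the features have already been extracted (in practice, 2048-dimensional InceptionV3 pooling activations) and evaluates the trace of the matrix square root from the eigenvalues of Σ_r Σ_g rather than with `scipy.linalg.sqrtm`, as reference implementations typically do. It is a sketch for understanding, not a replacement for the official FID code, whose scores also depend on the exact Inception weights and image resizing.

```python
import numpy as np

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    sigma1, sigma2 = np.atleast_2d(sigma1), np.atleast_2d(sigma2)
    diff = mu1 - mu2
    # Tr((S1 S2)^(1/2)) is the sum of square roots of the eigenvalues of S1 S2,
    # which are real and non-negative for covariance matrices (up to round-off)
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)

def fid_from_features(feats_real, feats_gen):
    """FID from two (n_samples, n_features) activation arrays."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    sigma_r = np.cov(feats_real, rowvar=False)
    sigma_g = np.cov(feats_gen, rowvar=False)
    return frechet_distance(mu_r, sigma_r, mu_g, sigma_g)
```

For example, two one-dimensional Gaussians N(0, 1) and N(3, 4) give (3 − 0)² + 1 + 4 − 2·√(1·4) = 10, and identical feature sets give a distance of zero.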

Effect of different disturbances on the FID score for a nepheline foidite from the Strekeisen dataset: (a) original image; (b) median filter with increasing kernel size applied to the original image; (c) salt-and-pepper noise added to the image; (d) increase in FID score with median filter kernel size; and (e) effect of increasing the noise-to-signal ratio on the FID score.
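The salt-and-pepper contamination in panels (c) and (e) can be reproduced with a short NumPy function. This is an illustrative sketch: the figure does not specify how the noise was added, so treating the noise level as the fraction of corrupted pixels, split evenly between white and black, is our assumption.

```python
import numpy as np

def salt_and_pepper(image, amount, seed=0):
    """Set a fraction `amount` of pixels to white (255) or black (0).

    `image` is an 8-bit array of shape (H, W) or (H, W, C); all channels of a
    corrupted pixel are overwritten together.
    """
    rng = np.random.default_rng(seed)
    noisy = image.copy()
    mask = rng.random(image.shape[:2]) < amount   # pixels to corrupt
    salt = rng.random(image.shape[:2]) < 0.5      # white vs. black
    noisy[mask & salt] = 255
    noisy[mask & ~salt] = 0
    return noisy

img = np.full((100, 100, 3), 128, dtype=np.uint8)
noisy = salt_and_pepper(img, amount=0.1)
```

Sweeping `amount` upward and computing the FID between clean and contaminated image sets traces a curve like the one in panel (e).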

Visual evaluation

As an additional step in evaluating the performance of the final model, a survey was conducted to assess whether the generated thin sections were indistinguishable from real ones. The survey was aimed at subject matter experts from academia and industry worldwide, with both geoscience and non-geoscience backgrounds. Ten real petrographic images were selected randomly from the training dataset and compared with ten artificial images generated from randomly selected seed numbers, and the position of the real image in each pair was also randomized. For example, in Fig. 4 the real image, on the right, corresponds to an aillikite from the Strekeisen dataset, and the generated image, on the left, corresponds to seed 0008 of the model. In total, more than two hundred responses were received during a three-day survey.
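The randomization of the survey can be sketched with Python's standard library. The image identifiers, the function name and the pairing of one real with one generated image per question are illustrative assumptions, not the authors' survey code.

```python
import random

def build_survey_pairs(real_ids, generated_seeds, rng_seed=None):
    """Pair real and generated images and randomize which side is real."""
    rng = random.Random(rng_seed)
    real = list(real_ids)
    fake = list(generated_seeds)
    rng.shuffle(real)
    rng.shuffle(fake)
    pairs = []
    for r, f in zip(real, fake):
        real_on_left = rng.random() < 0.5   # coin flip for the real image's side
        left, right = (r, f) if real_on_left else (f, r)
        pairs.append({"left": left, "right": right,
                      "answer": "left" if real_on_left else "right"})
    return pairs

real = [f"real_{i}" for i in range(10)]          # hypothetical identifiers
fake = [f"seed{i:04d}" for i in range(10)]
pairs = build_survey_pairs(real, fake, rng_seed=42)
```

Storing the answer key alongside each pair makes scoring the responses straightforward.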

Results

Model performance

The FID score obtained for the reduced-size 32 × 32 px model was low compared with the other resolutions; the final FID score for this dataset was 7.5 (Fig. 5a). A time-lapse of the generated images for the 32 × 32 and 512 × 512 px models is shown in Fig. 5. The images reveal the evolution from noise to low-resolution artificial thin sections of a 3 × 3 grid of images in the 32 × 32 px model and of a single thin section in the 512 × 512 px model. The FID score was evaluated every 240 Kimgs processed for the 32 × 32 px dataset and every 140 Kimgs for the 512 × 512 px dataset. As stated in the Methods section, after proving with the MVP that a generative model using StyleGAN2 was feasible, the network was trained with 512 × 512 px images, and the FID score obtained was 12.49 (Fig. 5b). Based on our literature review, this is encouraging because it is a state-of-the-art FID score for a GAN model trained on photomicrographs encompassing all three major rock types. During training, the FID score stabilized at around 2740 Kimgs, and no significant improvement was observed after 6520 Kimgs; hence, we selected the snapshot with the lowest FID score as the final model.

The final model was then used as the starting point for training on specific petrographic groups of thin sections with various dataset sizes. Using it as a form of transfer learning and style mixing in the context of GANs, training was stopped at 1120 Kimgs for each lithology, compared with the 6520 Kimgs reached by the original model. Different lithologies and image sizes were trained; a summary of the training iterations is provided in Table 5.

Synthetic petrographic images

Grids of images were generated each time the FID score was calculated (every 140 Kimgs for the 512 × 512 px model) to evaluate the progressive improvement in the quality of the generated images (Fig. 5). The GAN starts from random noise and progressively improves until it reaches convergence, i.e., the point at which further training no longer improves the model. The grid visualization also helps to spot mode collapse, whereby the generator becomes proficient at producing one thin section and only generates variants of that image. Fig. 6 shows nine generated images at different FID scores during the training of the 512 × 512 model; the seeds were kept the same, showing a progressive improvement of mineral-like structures in the synthetic images.
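The grid visualization amounts to tiling a fixed batch of images, generated from the same seeds at every snapshot, into one array. The NumPy helper below is hypothetical (StyleGAN2-ADA writes its own snapshot grids during training); it assumes equally sized (H, W, C) images.

```python
import numpy as np

def make_grid(images, rows, cols):
    """Tile equally sized (H, W, C) images into one (rows*H, cols*W, C) array."""
    images = np.asarray(images)
    n, h, w, c = images.shape
    if n != rows * cols:
        raise ValueError("need exactly rows * cols images")
    grid = images.reshape(rows, cols, h, w, c)
    grid = grid.transpose(0, 2, 1, 3, 4)   # (rows, H, cols, W, C)
    return grid.reshape(rows * h, cols * w, c)

# Nine constant-valued 4 x 4 RGB "images" labelled 0..8
imgs = np.arange(9)[:, None, None, None] * np.ones((9, 4, 4, 3))
grid = make_grid(imgs, rows=3, cols=3)
```

Because the seeds are fixed, comparing grids across snapshots shows how each latent vector's image evolves, and near-identical tiles within one grid are a warning sign of mode collapse.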

Survey results

Results of the survey evaluating the quality of the generated images are presented in Fig. 7. The survey was answered by 205 individuals worldwide from different industry and academic backgrounds. Most responses came from undergraduate and postgraduate geoscience students (backgrounds are shown in Fig. 7). Although the overall results were similar across backgrounds, participants with non-geoscience backgrounds ("other" in Fig. 7) generally performed worse than those with a geoscience background. Across all background categories, undergraduate students had the highest performance, postgraduates the lowest, and researchers the highest percentage of doubtful answers. Overall, the survey results show that, on average, the generated images were more often judged to be real than the real images, across all backgrounds.

Discussion

The proposed use of a GAN trained on geological data, here petrographic images, enables the visualization of thin sections as a moving system. This could be a way to picture the changing state of different lithologies. One application explored so far is to provide the model with a real thin section not seen during training and to search for its associated latent vector, which could lead to similar images within the model. For example, an oolitic limestone taken from the University of Oxford Rocks Under the Microscope project52 is passed to the model (Fig. 8a), which then searches for the most similar image within its latent space (Fig. 8b), yielding the latent vector of the artificial image found. The proposed use of this feature is to search for similar thin sections and to experiment visually with proximal vectors and lithologies. Searching for similar thin sections in latent space could help us visualize how a machine learning model organizes a set of petrographic images: which sections it tends to group, which features it uses to group lithologies in latent space, which features are most dominant, and how to control the most important ones from a geological point of view, e.g., grain size or foliation, to generate specific textures. The truncation trick modifies the latent distribution by truncating the normal distribution used to generate images, i.e., truncating the values that fall above a certain threshold23. This can improve the quality of the generated images at the cost of diversity, and it was used in the survey, with a truncation value of 0.7, to increase the probability of an artificial thin section appearing real. Increasing the truncation value produces increasingly unrealistic minerals, as shown for images generated with varying truncation values in Fig. 9.
Conversely, reducing the truncation value produces more realistic minerals, albeit with a tendency to make the overall color of the thin section gray.
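The two common forms of the truncation trick can be sketched in NumPy. `truncate_z` follows the threshold description above, resampling latent entries whose magnitude exceeds the threshold, as in BigGAN. StyleGAN-family models, including the StyleGAN2-ADA implementation, instead expose a truncation psi that interpolates each style vector w toward the average style vector; we assume the survey's value of 0.7 refers to this psi. Both functions are illustrative sketches, and `w_avg` stands in for the running average that the trained mapping network keeps.

```python
import numpy as np

def truncate_z(shape, threshold=0.7, seed=0):
    """BigGAN-style truncation: resample N(0, 1) entries with |z| > threshold."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(shape)
    out = np.abs(z) > threshold
    while out.any():
        z[out] = rng.standard_normal(out.sum())
        out = np.abs(z) > threshold
    return z

def truncate_w(w, w_avg, psi=0.7):
    """StyleGAN-style truncation: pull w toward the mean style vector."""
    # psi = 1 leaves w unchanged; psi = 0 collapses every sample to w_avg
    return w_avg + psi * (w - w_avg)

rng = np.random.default_rng(0)
w = rng.standard_normal(512)
w_avg = rng.standard_normal(512)    # placeholder for the learned average
w_trunc = truncate_w(w, w_avg, psi=0.7)
z_trunc = truncate_z((4, 512), threshold=0.7)
```

The psi form explains the graying effect described above: as psi approaches 0, every sample moves toward the single "average" thin section.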

Results of interpolating an image into the model: (a) oolitic limestone52 and (b) the interpolated oolitic limestone in the model’s latent space.
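Finding the latent vector that reproduces a real thin section is usually posed as an optimization problem: find w minimizing the distance between G(w) and the target image. The sketch below shows only the principle, using a hypothetical linear toy generator and plain gradient descent on a squared pixel loss. Real projectors, such as the `projector.py` script distributed with StyleGAN2-ADA, optimize through the full generator with a perceptual loss.

```python
import numpy as np

class ToyLinearGenerator:
    """Hypothetical stand-in for G: maps an 8-D latent w to a 64-D 'image'."""

    def __init__(self, seed=0, w_dim=8, img_dim=64):
        rng = np.random.default_rng(seed)
        self.A = rng.standard_normal((img_dim, w_dim)) / np.sqrt(img_dim)
        self.b = rng.standard_normal(img_dim)

    def __call__(self, w):
        return self.A @ w + self.b

    def grad_loss(self, w, target):
        """Gradient of ||G(w) - target||^2 with respect to w."""
        return 2.0 * self.A.T @ (self(w) - target)

def project(G, target, w_start, steps=500):
    """Gradient descent for the latent w whose output best matches `target`."""
    lr = 0.5 / np.linalg.norm(G.A, 2) ** 2   # stable step for this quadratic loss
    w = w_start.copy()
    for _ in range(steps):
        w -= lr * G.grad_loss(w, target)
    return w

G = ToyLinearGenerator(seed=0)
w_true = np.random.default_rng(1).standard_normal(8)
target = G(w_true)                          # "unseen" image produced by w_true
w_hat = project(G, target, w_start=np.zeros(8))
```

Starting from the average latent (here zero) and descending the reconstruction loss recovers the latent vector; with a nonlinear generator the loss is non-convex, so the result is only the best match found, as in Fig. 8b.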

One application of synthetic data generation is the ability to extract human-readable feature vectors in latent space. We used the closed-form factorization24 of latent vectors for the all-lithology 512 × 512 model. In the future, this method could be used to visualize different features being modified on the same mineral assemblage (Fig. 10). Moreover, the trained model could be used to extract vectors that modify a given thin section by adding or removing certain constituents. Future applications of this factorization could include petrographic and petrological modeling, especially if such vectors can be associated with characteristics of geological environments. Particularly interesting applications are modifying the grain size and the kinds of minerals present; the model could also be used to visualize facies and lithological changes and to assist geological workflows that rely heavily on petrographic information.
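Closed-form factorization (SeFa) finds semantic directions without further training: the unit directions n in W that the style layers amplify most, i.e., that maximize ‖A n‖ for the concatenated affine weights A, are the top eigenvectors of AᵀA, equivalently the top right singular vectors of A. A minimal NumPy sketch, assuming the affine (style) weight matrices have already been extracted from a trained generator and optionally normalizing each row, a choice some implementations make; `edit` moves a style vector along a direction, which is how a feature such as grain size might be varied.

```python
import numpy as np

def closed_form_directions(style_weights, k=5):
    """SeFa-style factorization of the layers that map w to styles.

    style_weights: list of (style_dim_l, w_dim) affine weight matrices A_l.
    Returns the top-k unit directions in W space, shape (k, w_dim).
    """
    A = np.concatenate(style_weights, axis=0)         # (sum of style dims, w_dim)
    A = A / np.linalg.norm(A, axis=1, keepdims=True)   # optional row normalization
    # Right singular vectors of A are the eigenvectors of A^T A, sorted by
    # decreasing singular value
    _, _, vt = np.linalg.svd(A, full_matrices=False)
    return vt[:k]

def edit(w, direction, alpha):
    """Move a style vector along a discovered direction."""
    return w + alpha * direction

# Toy weights dominated by one direction v, plus small noise
rng = np.random.default_rng(0)
v = rng.standard_normal(16)
v_hat = v / np.linalg.norm(v)
layers = [np.outer(rng.uniform(1, 2, size=32), v) + 0.01 * rng.standard_normal((32, 16))
          for _ in range(3)]
dirs = closed_form_directions(layers, k=4)
```

In the toy example, the first recovered direction aligns with v, the direction the weights amplify most; in a trained model, each direction has to be inspected visually to decide which petrographic feature, if any, it controls.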

We also applied this method to an image classification problem using 200 landscape images and 200 artificial thin sections. We trained a deep convolutional neural network and tested it using landscape images and real thin sections. The model reached 95% accuracy on the training data and 80% on the test set. In the survey, synthetic images were more likely to be classified as real than actual thin sections (Fig. 7). This phenomenon could be explained by generated images tending to look like an average thin section, because the generator is trained to assimilate an entire distribution of images. When a human acts as the classifier in a binary real-or-fake task, this "archetypical" thin section is erroneously judged to be the real one in comparison with a single real thin section. Images that are 'more real than real' have already been observed in GANs trained on faces10,11, and Gestalt theory has previously been used in deep learning preprocessing to obtain efficient image descriptors for CNN training53. We propose that this "Gestalt" GAN phenomenon, referring to the laws governing our ability to make meaningful perceptions of the world54, could extend to non-facial geological datasets and should be considered and studied further. In the sense of Gestalt theory, this phenomenon could involve continuity, memory, similarity, closure, and superior figure in our understanding and perception of synthetic and real petrographic data. We attempted to address one of these Gestalt principles with a symmetry test on the real and generated images used in the survey, in which the generated images were found to have higher symmetry.
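The text does not specify how symmetry was measured. One simple, hypothetical choice is a left-right mirror score, sketched below in NumPy: one minus the mean absolute difference between an image and its mirror image, scaled by the 8-bit range. Scores from such a function could then be compared between the real and generated survey images.

```python
import numpy as np

def mirror_symmetry(image):
    """Score in [0, 1]; 1 means the image equals its left-right mirror.

    `image` is a grayscale (H, W) or RGB (H, W, C) array with values in [0, 255].
    """
    img = np.asarray(image, dtype=float)
    diff = np.abs(img - img[:, ::-1])   # compare with the horizontally flipped copy
    return 1.0 - diff.mean() / 255.0

uniform = np.full((3, 5), 100)          # perfectly symmetric
half = np.zeros((4, 4))
half[:, 2:] = 255                       # left black, right white
```

Other definitions (vertical or rotational symmetry, or feature-space comparisons) are equally plausible, so any comparison should report which one was used.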

The significance of this model is that it enables the generation of artificial thin sections. With further study, it could become a viable method for dataset augmentation, with potential as a self-labeling tool whose output feeds semi-supervised and unsupervised learning algorithms; the explainability of this kind of model is also an area of research that could elucidate how a GAN organizes data in its latent space. We also note, and encourage, that the final model can be used as the starting point for training on more domain-specific petrographic datasets, which could be done through style mixing of the GAN model to build more specific generative models, e.g., for generating carbonate constituents4 or a generator of metamorphic thin sections only. The use of style mixing on a petrographic dataset is shown in Fig. 11, where the model has learned parameters such as grain size and XPL/PPL, which change as the style of the thin section is mixed between domains (Sources A and B in Fig. 11). Style mixing of features of interest in a geological dataset could be used to increase diversity or even to fill under-represented classes32. This architecture also makes it possible to generate images driven by a signal, one application being the audio-reactive GAN implementation "MAUA"55. Further exploration and evaluation of the generated thin sections in latent space could help evaluate how a given lithological feature evolves. In the future, this could assist in the interactive explanation, visualization, or modeling of petrographic environments, e.g., the impact of varying levels of metamorphism on a thin section or the effects of changing energy levels in a sedimentary environment. Across the image sizes tested, we observe that smaller images yield lower, i.e., better, FID scores. The comparison in Table 5 gives an idea of the dataset size needed to achieve a target FID score.
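Style mixing works by feeding different style vectors to different synthesis layers. A minimal NumPy sketch, assuming the 16 per-layer style inputs of a 512 × 512 StyleGAN2 generator and a single crossover layer: layers before the crossover take the coarse styles of source A, and the remaining layers take the finer styles of source B. The StyleGAN2-ADA repository provides a `style_mixing.py` script for this purpose; the function below is only an illustration.

```python
import numpy as np

def style_mix(w_a, w_b, crossover, num_layers=16):
    """Per-layer style vectors: coarse layers from A, finer layers from B."""
    ws = np.repeat(w_a[None, :], num_layers, axis=0)   # (num_layers, w_dim) copy
    ws[crossover:] = w_b
    return ws

rng = np.random.default_rng(0)
w_a, w_b = rng.standard_normal(512), rng.standard_normal(512)
ws = style_mix(w_a, w_b, crossover=4)
```

Moving the crossover earlier hands more of the image, including coarse attributes, to source B; this is how attributes such as grain size or XPL/PPL appearance can be transferred between sources.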
For validation against other geological datasets, we tested the same architecture on two other geoscience-related datasets: a species-level foraminifera dataset and a published genus-level pollen dataset. Both datasets were originally collected for CNN classification tasks, and we selected the nine foraminifera species and five pollen genera with the most images, i.e., on the order of 10³ images. These datasets were resized to 18,166 images of 256 × 256 px for the foraminifera and 7925 images of 64 × 64 px for the pollen dataset, reaching FID scores of 15.8 and 18.68, respectively (Fig. 12).

Future recommendations

We encourage implementing the recently released StyleGAN3 model and upcoming GAN architectures to further improve the current model, and using the trained model on more domain-specific datasets. Exploration of the latent space and feature modification of thin sections is needed to demonstrate that this type of architecture can help visualize changing variables in geological environments through changes in latent space. Image-to-image translation is suggested to generate petrographic images from other types of images, and the implementation of super-resolution29 is much needed to upsample the resolution of available petrographic datasets. Exploring features extracted from the model is a way forward to control specific geological characteristics of the generated data, e.g., features controlling grain size, the predominance of matrix over grains, or the abundance of a particular mineral species. We also recommend exploring ways to associate latent vectors with geochemical data to visualize the effects of changing modal composition on a thin section; this could be useful, for example, to generate thin sections based on modal composition in metamorphic petrology modeling. A more targeted survey is advised, i.e., one based on a model trained on a specific lithology, enabling more domain-specific tests, e.g., asking sedimentologists or petrologists to assign a tentative metamorphic or sedimentary facies to an artificial thin section56,57. We also tested the GAN model's capacity as a tool to generate datasets for other machine learning algorithms.
For this, we trained a binary image classifier, a convolutional neural network, on 100 synthetic thin sections versus 100 landscape images. The model achieved over 90% accuracy on training and testing, and when tested against 40 real thin sections, the accuracy dropped but remained above 80%. Further validation is needed before this kind of model can be used as a data augmentation tool in future geoscience workflows.

Conclusions

1. An artificial dataset of petrographic thin sections can be generated with generative adversarial networks using the StyleGAN2 architecture. In this context, training a viable StyleGAN2 model requires at least 5000 images to produce sufficiently good images, and more than 10,000 images are recommended for an optimal model (i.e., an FID score below 15).

2. Based on the survey results, we conclude that artificially generated thin sections can be indistinguishable from real ones and can even be perceived as more authentic than real ones; the model can therefore generate thin sections of sufficient quality to deceive subject-matter experts.

3. Exploring the latent space of the model provides a means of visualizing and interpolating real thin sections projected into the model. Further exploration of styles in the context of petrography is needed to support GAN models as a technique for petrographic modeling.

4. Closed-form factorization of the latent space of a petrographic image generator yielded at least two human-readable vectors that could be used in the future for modeling purposes in the geosciences.

5. Both the dataset size requirement (10³–10⁴ images) and GPU computing costs hinder the application of GAN-based frameworks, especially in certain geological subfields where data are limited and/or high-dimensional.
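The FID thresholds quoted in the conclusions come from the Fréchet distance between two Gaussians fitted to Inception-v3 activations of real and generated images22,51: FID = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_rΣ_g)^(1/2)). The NumPy sketch below illustrates that formula, assuming the activations have already been extracted (the Inception feature extractor is not shown, and the function names are hypothetical). It is an illustration, not the metric implementation used in the study. Common implementations compute the matrix square root with `scipy.linalg.sqrtm`; this sketch instead uses the identity Tr((Σ_rΣ_g)^(1/2)) = Tr((Σ_r^(1/2)Σ_gΣ_r^(1/2))^(1/2)), so only symmetric eigendecompositions are needed.

```python
import numpy as np


def _sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative eigenvalues caused by round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 (sigma1 sigma2)^(1/2))."""
    diff = mu1 - mu2
    root1 = _sqrtm_psd(sigma1)
    # Tr((S1 S2)^(1/2)) = Tr((S1^(1/2) S2 S1^(1/2))^(1/2)); the inner matrix is symmetric PSD.
    inner = root1 @ sigma2 @ root1
    tr_covmean = np.sum(np.sqrt(np.clip(np.linalg.eigvalsh(inner), 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_real: np.ndarray, feats_fake: np.ndarray) -> float:
    """FID from (n_samples, n_features) activation arrays of real and generated images."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    sigma_r = np.cov(feats_real, rowvar=False)
    sigma_f = np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu_r, sigma_r, mu_f, sigma_f)
```

Lower values mean the generated-image statistics are closer to the real set. As a sanity check, identical inputs give a distance of zero, and equal covariances reduce the score to the squared distance between the means.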

Data availability

The dataset and code used and/or analysed during the current study are available from the corresponding author on reasonable request. This manuscript is under review, and the trained models will be made available soon. For the StyleGAN2 + ADA implementation, please refer to https://github.com/NVlabs/stylegan2-ada-pytorch.

References

Caté, A., Perozzi, L., Gloaguen, E. & Blouin, M. Machine learning as a tool for geologists. Lead. Edge 36, 215–219. https://doi.org/10.1190/tle36030215.1 (2017).

Mosser, L., Dubrule, O. & Blunt, M. J. Reconstruction of three-dimensional porous media using generative adversarial neural networks. Phys. Rev. E https://doi.org/10.1103/PhysRevE.96.043309 (2017).

Dramsch, J. S. 70 years of machine learning in geoscience in review. Adv. Geophys. 61, 1–55. https://doi.org/10.1016/bs.agph.2020.08.002 (2020).

Koeshidayatullah, A., Morsilli, M., Lehrmann, D. J., Al-Ramadan, K. & Payne, J. L. Fully automated carbonate petrography using deep convolutional neural networks. Mar. Petroleum Geol. https://doi.org/10.1016/j.marpetgeo.2020.104687 (2020).

Goodfellow, I. J. et al. Generative adversarial networks. Proc. 27th Int. Conf. on Neural Inf. Process. Syst. 2, 2672–2680 (2014).

Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. https://doi.org/10.48550/ARXIV.1312.6114 (2013).

Jiang, Y., Chang, S. & Wang, Z. TransGAN: Two pure transformers can make one strong GAN, and that can scale up. https://doi.org/10.48550/ARXIV.2102.07074 (2021).

Dhariwal, P. & Nichol, A. Diffusion models beat GANs on image synthesis. https://doi.org/10.48550/ARXIV.2105.05233 (2021).

Curtó, J. D., Zarza, I. C., de la Torre, F., King, I. & Lyu, M. R. High-resolution deep convolutional generative adversarial networks. https://doi.org/10.48550/ARXIV.1711.06491 (2017).

Lago, F. et al. More real than real: A study on human visual perception of synthetic faces [applications corner]. IEEE Signal Process. Mag. 39, 109–116. https://doi.org/10.1109/MSP.2021.3120982 (2022).

Nightingale, S. J. & Farid, H. AI-synthesized faces are indistinguishable from real faces and more trustworthy. Proc. Natl. Acad. Sci. United States Am. https://doi.org/10.1073/pnas.2120481119 (2022).

Izadi, H., Sadri, J., Hormozzade, F. & Fattahpour, V. Altered mineral segmentation in thin sections using an incremental-dynamic clustering algorithm. Eng. Appl. Artif. Intell. https://doi.org/10.1016/j.engappai.2019.103466 (2020).

de Lima, R. P., Duarte, D., Nicholson, C., Slatt, R. & Marfurt, K. J. Petrographic microfacies classification with deep convolutional neural networks. Comput. Geosci. https://doi.org/10.1016/j.cageo.2020.104481 (2020).

Maitre, J., Bouchard, K. & Bédard, L. P. Mineral grains recognition using computer vision and machine learning. Comput. Geosci. 130, 84–93. https://doi.org/10.1016/j.cageo.2019.05.009 (2019).

de Lima, R. P. P. & Duarte, D. Pretraining convolutional neural networks for mudstone petrographic thin-section image classification. Geosciences (Switzerland) https://doi.org/10.3390/GEOSCIENCES11080336 (2021).

Wu, B., Meng, D., Wang, L., Liu, N. & Wang, Y. Seismic impedance inversion using fully convolutional residual network and transfer learning. IEEE Geosci. Remote. Sens. Lett. 17, 2140–2144. https://doi.org/10.1109/LGRS.2019.2963106 (2020).

Koh, E., Eiman, A., Geoffrey, M. & Nick, B. Utilising convolutional neural networks to perform fast automated modal mineralogy analysis for thin-section optical microscopy. Miner. Eng. 173, 107230. https://doi.org/10.1016/j.mineng.2021 (2021).

Feng, Q., Guo, C., Benitez-Quiroz, F. & Martinez, A. When do GANs replicate? On the choice of dataset size. https://doi.org/10.48550/ARXIV.2202.11765 (2022).

Karras, T., Laine, S. & Aila, T. A style-based generator architecture for generative adversarial networks. https://doi.org/10.48550/ARXIV.1812.04948 (2018).

Karras, T. et al. Analyzing and improving the image quality of StyleGAN. https://doi.org/10.48550/ARXIV.1912.04958 (2019).

Karras, T. et al. Training generative adversarial networks with limited data. https://doi.org/10.48550/ARXIV.2006.06676 (2020).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. https://doi.org/10.48550/ARXIV.1706.08500 (2017).

Brock, A., Donahue, J. & Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. https://doi.org/10.48550/ARXIV.1809.11096 (2018).

Shen, Y. & Zhou, B. Closed-form factorization of latent semantics in GANs. https://doi.org/10.48550/ARXIV.2007.06600 (2020).

Radford, A., Metz, L. & Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. https://doi.org/10.48550/ARXIV.1511.06434 (2016).

White, T. Sampling generative networks. https://doi.org/10.48550/ARXIV.1609.04468 (2016).

Song, S., Mukerji, T. & Hou, J. Geological facies modeling based on progressive growing of generative adversarial networks (GANs). Comput. Geosci. 25, 1251–1273. https://doi.org/10.1007/s10596-021-10059-w (2021).

Coiffier, G., Renard, P. & Lefebvre, S. 3D geological image synthesis from 2D examples using generative adversarial networks. Front. Water https://doi.org/10.3389/frwa.2020.560598 (2020).

Niu, Y., Wang, Y. D., Mostaghimi, P., Swietojanski, P. & Armstrong, R. T. An innovative application of generative adversarial networks for physically accurate rock images with an unprecedented field of view. Geophys. Res. Lett. https://doi.org/10.1029/2020GL089029 (2020).

Jo, H., Pan, W., Santos, J. E., Jung, H. & Pyrcz, M. J. Machine learning assisted history matching for a deepwater lobe system. J. Petrol. Sci. Eng. https://doi.org/10.1016/j.petrol.2021.109086 (2021).

Nanjo, T. & Tanaka, S. Carbonate lithology identification with generative adversarial networks. Int. Petrol. Technol. Conf. (2020).

Bizhani, M., Ardakani, O. H. & Little, E. Reconstructing high fidelity digital rock images using deep convolutional neural networks. Sci. Rep. 12, 4264. https://doi.org/10.1038/s41598-022-08170-8 (2022).

Klyuchnikov, N., Ismailova, L., Kovalev, D., Safonov, S. & Koroteev, D. Generative adversarial networks for synthetic wellbore data: Expert perception vs. mathematical metrics. J. Petrol. Sci. Eng. https://doi.org/10.1016/j.petrol.2022.110106 (2022).

Krizhevsky, A. Learning multiple layers of features from tiny images. Tech. Rep., Canadian Institute For Advanced Research (2009).

Huang, X. & Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. https://doi.org/10.48550/ARXIV.1703.06868 (2017).

Sauer, A., Schwarz, K. & Geiger, A. StyleGAN-XL: Scaling StyleGAN to large diverse datasets. https://doi.org/10.48550/arXiv.2202.00273 (2022).

Vahdat, A., Kreis, K. & Kautz, J. Score-based generative modeling in latent space. https://doi.org/10.48550/arXiv.2106.05931 (2021).

Jing, B., Corso, G., Berlinghieri, R. & Jaakkola, T. Subspace diffusion generative models. https://doi.org/10.48550/arXiv.2205.01490 (2022).

Dockhorn, T., Vahdat, A. & Kreis, K. Score-based generative modeling with critically-damped Langevin diffusion. https://doi.org/10.48550/arXiv.2112.07068 (2021).

Kang, M., Shim, W., Cho, M. & Park, J. Rebooting ACGAN: Auxiliary classifier GANs with stable training. https://doi.org/10.48550/arXiv.2111.01118 (2021).

Kim, D., Shin, S., Song, K., Kang, W. & Moon, I.-C. Soft truncation: A universal training technique of score-based diffusion model for high precision score estimation. https://doi.org/10.48550/arXiv.2106.05527 (2021).

Lam, M. W. Y., Wang, J., Su, D. & Yu, D. BDDM: Bilateral denoising diffusion models for fast and high-quality speech synthesis. https://doi.org/10.48550/arXiv.2203.13508 (2022).

Karras, T. et al. Alias-free generative adversarial networks. https://doi.org/10.48550/ARXIV.2106.12423 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. Proc. IEEE Comput. Soc. Conf. on Comput. Vis. Pattern Recognit. 2016-December, 770–778. https://doi.org/10.1109/CVPR.2016.90 (2016).

Tetley, M. G. & Daczko, N. R. Virtual petrographic microscope: a multi-platform education and research software tool to analyse rock thin-sections. Aust. J. Earth Sci. 61, 631–637. https://doi.org/10.1080/08120099.2014.886624 (2014).

da Mommio, A. Strekeisen thin section online database (2007). Accessed: 2022–03–09.

Adams, A. E., MacKenzie, W. S. & Guilford, C. Atlas of Sedimentary Rocks Under the Microscope 1st edn (Longman/Prentice Hall, 1984).

Derochette, J. M. Minerals microscopy and spectroscopy (2008). Accessed: 2022–03–09.

Harris, C. R. et al. Array programming with NumPy. Nature 585, 357–362. https://doi.org/10.1038/s41586-020-2649-2 (2020).

Paszke, A. et al. PyTorch: An imperative style, high-performance deep learning library. https://doi.org/10.48550/ARXIV.1912.01703 (2019).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. https://doi.org/10.48550/ARXIV.1512.00567 (2015).

Waters, D. Earth sciences image store: Rocks under the microscope (2005). Accessed: 2022–03–09.

Hörhan, M. & Eidenberger, H. Gestalt descriptions for deep image understanding. Pattern Anal. Appl. 24, 89–107. https://doi.org/10.1007/s10044-020-00904-6 (2021).

Shi, Q., Desheng, W., Ying, C. & Jun, F. Lung nodules detection in CT images using gestalt-based algorithm. Chin. J. Electron. 25, 711–718. https://doi.org/10.1049/cje.2016.07.009 (2016).

Brouwer, H. Audio-reactive latent interpolations with stylegan. 4th Work. on Mach. Learn. for Creat. Des. NeurIPS (2020).

Koeshidayatullah, A. Optimizing image-based deep learning for energy geoscience via an effortless end-to-end approach. J. Pet. Sci. Eng. 215, 110681. https://doi.org/10.1016/j.petrol.2022.110681 (2022).

Koeshidayatullah, A. et al. Quantitative evaluation of the roles of ocean chemistry and climate on ooid size across the Phanerozoic: Global versus local controls. Sedimentology https://doi.org/10.1111/sed.12998.

Acknowledgements

We thank Dr. Alessandro Da Mommio from the Università degli Studi di Milano and Dr. Nathan Daczko from Macquarie University for the image collections they made publicly available on their respective sites. Thanks to Dr. Sreenivas Bhattiprolu for reviewing an early version of this paper and giving helpful insights. Thanks to the people at Paperspace, especially Jordan Burke for help with training troubleshooting and James Skelton for the excellent explanation of the cloud service features. Thanks to all the students, professors, and professionals who took part in the survey; the answers for the real thin sections used in the test are attached as an annex. Thanks to the members of the Society of Economic Geologists student chapter from the National University of Colombia in Bogotá and to King Fahd University of Petroleum and Minerals for their outreach efforts and support of this work (KFUPM Startup Grant: SF21011).

Author information

Contributions

I.F.: model training, creation of tests, and manuscript writing. A.K.: original idea and manuscript writing. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ferreira, I., Ochoa, L. & Koeshidayatullah, A. On the generation of realistic synthetic petrographic datasets using a style-based GAN. Sci Rep 12, 12845 (2022). https://doi.org/10.1038/s41598-022-16034-4

DOI: https://doi.org/10.1038/s41598-022-16034-4
