Abstract

Coronary artery disease (CAD) is a prevalent disease with high morbidity and mortality rates. Invasive coronary angiography is the reference standard for diagnosing CAD but is costly and associated with risks. Noninvasive imaging like cardiac magnetic resonance (CMR) facilitates CAD assessment and can serve as a gatekeeper to downstream invasive testing. Machine learning methods are increasingly applied for automated interpretation of imaging and other clinical results for medical diagnosis. In this study, we proposed a novel CAD detection method based on CMR images by utilizing the feature extraction ability of deep neural networks and combining the features with the aid of a random forest for the very first time. It is necessary to convert image data to numeric features so that they can be used in the nodes of the decision trees. To this end, the predictions of multiple stand-alone convolutional neural networks (CNNs) were considered as input features for the decision trees. The capability of CNNs in representing image data renders our method a generic classification approach applicable to any image dataset. We named our method RF-CNN-F, which stands for Random Forest with CNN Features. We conducted experiments on a large CMR dataset that we have collected and made publicly accessible. Our method achieved excellent accuracy (99.18%) using Adam optimizer compared to a stand-alone CNN trained using fivefold cross validation (93.92%) tested on the same dataset.

Similar content being viewed by others

Introduction

Coronary artery disease (CAD) is a prevalent condition that affects a growing number of people worldwide. In the United States, about 18.2 million Americans ≤ 20 years of age have coronary heart disease. One American will suffer a heart attack every 40 s, and more than 350,000 will die from coronary heart disease every year1. Early diagnosis of CAD facilities intensification of guideline-directed medical therapy and, if indicated, coronary intervention, to avert major adverse cardiac events and improve clinical outcomes.

Clinicians cannot rely on patient’s symptoms alone to diagnose CAD as they are neither sensitive nor specific. Heart imaging can help the physicians to detect CAD earlier and treat patients more effectively. The reference standard for diagnosis of CAD is invasive coronary angiography. CAD is diagnosed with more than 50% stenosis of the left main, left anterior descending, circumflex or right coronary artery. However, invasive coronary angiography is expensive and carries potential risks. Cardiac imaging is cheaper, safer, and can help physicians confidently diagnose CAD noninvasively. Thereby imaging alone can serve as a gatekeeper to downstream invasive coronary angiography and definitive revascularization therapy. Stress electrocardiography, echocardiography, nuclear myocardial perfusion scans, and coronary computed tomographic angiography (CCTA) are the mainstays of noninvasive imaging tests for CAD. However, CCTA suffers from more than 30% false positive rate2. To reduce the number of misdiagnosed cases, HeartFlow Inc. developed a noninvasive image and physics-based technology to reduce the number of unnecessary invasive coronary angiography. However, the HeartFlow Inc. approach needs a few days for analysis, and it still has more than 20% error.

Not being limited by acoustic window access (as in echocardiography) or ionizing radiation concerns (as in nuclear imaging and CCTA), cardiac magnetic resonance (CMR) has emerged as a viable alternative for noninvasive assessment of CAD3. It provides precise measurements of heart chamber structure and function, myocardial perfusion and infarct extent, as well as parametric quantitation of myocardial tissue characteristics. These readouts facilitate comprehensive CAD diagnosis, disease surveillance, and monitoring of therapeutic response4,5.

The manual interpretation of diagnostic imaging tests requires time and expertise. Moreover, data-mining and machine learning methods are exploited to automate medical diagnoses to reduce analysis time and potentially improve accuracy. CMR images may be 2D or 3D. The sensitivity and specificity of 3D CMR of the coronary arteries is inadequate due to excessive artefacts. This explains why 3D CMR coronary angiography for CAD diagnosis has not become a clinical routine. On the contrary, CMR 2D cine images are commonly used to diagnose CAD based on indirect evidence of myocardial ischemia or infarct, such as segmental regional wall motion abnormalities and myocardial thinning in typical coronary arterial territories. Regarding artificial intelligence-enabled diagnosis, deep models can conglomerate pixel-level features in an image to a degree that is not possible with the human eye. The excellent results of our model for detection of CAD attests to this postulation. Based on the description above, in this paper, we proposed the use of deep learning combined with a random forest classifier to analyse CMR 2D images for CAD diagnosis. The main contributions of this paper are as follows:

-

We have collected and made publicly available a dataset comprising multiparametric CMR images that can be used to train, test, and validate automated CAD detection methods.

-

We have developed a novel ensemble approach for diagnosing CAD on CMR images. The method deployed convolutional neural networks (CNNs) to convert high-dimension CMR images to low-dimension numeric features, which were then used to build the nodes of random forest decision trees. Without CNNs, each pixel of the CMR image would have been considered one feature, which would render the random forest intractable due to an inordinately high number of features.

-

CNNs are designed to extract the essential features of any image dataset automatically. Therefore, using CNNs predictions as features enhances the generalizability of the proposed method.

-

By using an ensemble of decision trees, our method was able to achieve high classification accuracy.

In the remainder of this paper, related works are reviewed (“Related work” Section), background is discussed (“Background ” Section), the dataset is introduced (“Dataset description” Section), the proposed method is described (“Proposed method” Section), and experimental results are presented (“Experimental results” Section). Additionally, discussion and conclusion are presented in “Discussion and Conclusion” Sections, respectively.

Related work

To the best of our knowledge, our work is the first that uses CMR data as input to ensemble of deep neural networks in order to diagnose CAD. Therefore, in this section, existing CAD diagnosis methods that use other input data such as electrocardiography (ECG), phonocardiography (PCG), etc. are reviewed. This way we can compare our method based on CMR with existing ones based on other data types. We also review some of the ensemble-based methods since our method uses an ensemble of decision trees for CAD diagnosis.

ECG

Butun et al.6 reported a technique for automated diagnosis of CAD from ECG signals based on capsule networks (CapsNet). They applied the 1D version of CapsNet for automated detection of CAD ("1D-CADCapsNet" model) on two- and five-second ECG segments, and attained optimal diagnostic accuracy of 98.62% for five-second ECG signals. Acharya et al.7 proposed a CNN comprising four convolutional, three fully-connected, and four max-pooling layers that achieved 94.95% and 95.11% accuracy rates for discriminating between abnormal (from patients with CAD and myocardial infarct) and normal ECGs on two- and five-second ECG segments, respectively. Khan et al.8 presented a signal processing framework to diagnose CAD on raw ECG signals of 9–12 min durations. The ECG signals were pre-processed and segmented using empirical mode decomposition by selecting intrinsic mode function 2–5. The features for optimal classification of data included marginal factor, impulse factor, shape-factor, kurtosis, etc. The pre-processed signals were fed to the support vector machine classifier, and achieved an accuracy of 95.5%.

PCG

The PCG records heart sounds and murmurs that may become altered in CAD, but these changes can be subtle and imperceptible to the human ear. To overcome the low signal-to-noise ratio of PCG signals due to environmental noise, Pathak et al.9 developed a PCG-based CAD detection method. They recorded PCG signals from four auscultation sites on the left anterior chest using a multichannel data acquisition system. Evaluated in the presence of white noise, vehicle and babble, the system was effective in collecting essential information from both diastolic and systolic phases of the cardiac cycle. Li et al.10 introduced a new feature fusion approach that fed Mel-frequency cepstral coefficients to a CNN to output valuable features that were in turn fused and provided to a multilayer perceptron for classification. In subsequent work, Li et al.11 demonstrated the efficacy of a new dual-input neural network that analyzed simultaneously assembled PCG and ECG signals using combined deep learning and feature extraction to extract useful underlying information in the signals.

Blood assays

Dyslipidemia is a pathogenetic factor that contributes to the development of CAD. Guo et al.12 investigated whether the atherogenic index of plasma (AIP) is an independent predictor of CAD risk in postmenopausal women. Compared with controls, triglyceride (TG) was higher, high-density lipoprotein cholesterol (HDL-C) levels lower, and non-traditional lipid profile values (AIP, total cholesterol/ HDL-C ratio) more elevated in CAD subjects. Circular RNAs (circRNAs) have emerged as a potential biomarker for CAD. Liang et al.13 elucidated the role of circZNF609 in atherosclerosis. They demonstrated on logistic regression an independent inverse relationship between circZNF609 expression (quantitated in peripheral blood leukocytes using real-time polymerase chain reaction) and CAD risk among 209 controls and 330 CAD patients.

Clinical features and CCTA

Al’Aref et al.14 used a machine learning method to predict obstructive CCTA using clinical factors and the coronary artery calcium score. They employed the boosted ensemble algorithm XGBoost with tenfold cross-validation. Age, gender, and coronary artery calcium score were found to be the most prominent features. Baskaran et al.15 validated a machine learning model to predict obstructive CAD and revascularization on the Coronary Computed Tomographic Angiography for Selective Cardiac Catheterization (CONSERVE) study dataset16, which outperformed the CAD consortium clinical score. Imaging variables were most correlated with revascularization, and the performance did not differ whether the imaging parameters used were derived from invasive coronary angiography or CCTA.

Ensemble methods

Since our method is based on ensemble of decision trees, we review some of the existing literature on CAD diagnosis which use ensemble of classifiers. In17_bookmark44, three base classifiers namely K-Nearest-Neighbor, random forest and SVM are combined to form an ensemble method for CAD diagnosis. The final decision is made based on ensemble voting techniques. The experiments were carried out on Z-Alizadeh Sani dataset18. Another method based on rotation forest algorithm has used neural networks as the base classifiers19_bookmark46. The method has been evaluated on Cleveland dataset. Kausar et al.20 combined the advantages of supervised and unsupervised methods by utilizing K-means and SVM. They performed dimensionality reduction via principle component analysis and tested their method on Cleveland dataset.

Abdar et al.21 used nu-SVC as the base algorithm to present NE-nu-SVC method for CAD diagnosis. To gain better results, the authors balanced the studied datasets (Cleveland and Z-Alizadeh Sani) and performed feature selection. Finally, Hedeshi et al.22 took a PSO-based approach to extract set of rules for CAD diagnosis. The extracted rules were reported to have good Interpretability.

Background

Considering that the proposed method is based on random forest and decision tree, these methods are briefly reviewed in this section.

Decision tree

A decision tree can be considered a series of Yes/No questions asked to make predictions about data. Decision trees are interpretable models since they carry out classification much as humans do. A series of questions are answered to arrive at a conclusion. The decision tree nodes are created that minimizes Gini impurity measures. For each node, the Gini impurity measure is the probability that a randomly drawn sample from the node is misclassified. As nodes of the tree are created, Gini impurity is reduced. Any node with zero Gini impurity is considered a leaf node and is not expanded anymore. For a classification problem with C classes, the Gini impurity of a node n is computed as.

where p(i) is the probability of picking a sample with class i in node n.

A typical decision tree is presented in Fig. 1. At each node, a specific condition is checked with the input sample. Depending on the situation being True or False, one of the two offsprings of the node is chosen. The routine continues until a leaf node is reached or some termination condition is met18.

Random forest

Random forest is a model that contains multiple decision trees. To build each of the trees, a random subset of the training data is used. The samples that form the subset are drawn with replacement from the training data. Therefore, some samples may be used multiple times in a single tree. Moreover, to split tree nodes, a random subset of features is considered. During the testing phase, each decision tree of the forest makes a prediction about the given test sample. The final prediction result is then decided by majority voting (Fig. 2)23. Using multiple trees, the random forest achieves good prediction accuracy by avoiding the overfitting that a single decision tree may be prone to. Each of the decision trees is built using a random subset of data features. For classification problems, the subset cardinality is usually set as \(\sqrt{\#features}\). Assuming the dataset has 36 features, six features will typically be considered to split each tree node. This is by no means immutable. For instance, it is common to deploy all features for node splitting in regression problems.

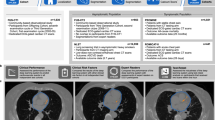

Dataset description

The dataset contains 63,648 multiparametric CMR images. The number of images with CAD disease is 26,104 and the number of images representing healthy patients is 37,544 (see examples of both in Fig. 3). The dataset is publicly accessible24. In the patient group, CAD was confirmed on invasive coronary angiography, the diagnostic gold standard. To collect the CMR images, four types of sequences namely LGE, Perfusion, T2 weighted, and SSFP have been used. In each of these sequences, long and short axes planes of the heart have been considered (Fig. 4). Long axis consists of two, three, and four chamber views. For each of these views, one slice from 2 different angles has been captured. Short axis consists of 10 slices from base to apex of the heart. Again, each of the 10 slices has been captured from 2 different angles. Based on the description above, for each patient 13 slices have been collected in four different sequence types. Therefore, 13 × 4 CMR images have been collected per patient. Dividing the total number of CMR images (63,648) in the dataset by 13 × 4 reveals that 1224 patients (722 healthy and 502 CAD) have participated in the collection of this dataset.

Example cardiac magnetic resonance images from coronary artery disease patients (a–c) and healthy subjects (d–f). c is a black-blood spinecho image; the rest are single-phase images of steady-state free precession CINE images. The lesions indicating CAD have been marked with yellow color in parts (a–c).

To be able to use the collected dataset in research studies regarding diagnostic and therapeutic purposes, institutional approval was granted. Approval was granted on the grounds of existing datasets. Before collecting data, patients were informed about the data collection process and their consent were obtained. Execution of all methods was compliant with relevant guidelines and regulations. To use data, ethical approval was obtained from Tehran Omid hospital.

CMR imaging protocols

From September 2018 to September 2019, the study prospectively recruited subjects who attended the CMR department of Omid Hospital, Tehran, Iran. The institutional ethics committee of Tehran Omid hospital had approved the study. All participants gave written informed consent. CMR examination was performed on a 1.5-T system (Magnetom Aera Siemens, Erlangen, Germany) using dedicated body coils. In each subject, segmented true fast imaging with steady-state precession (TrueFISP) cine images as well as high-resolution phase-sensitive inversion-recovery (PSIR) early (EGE, immediately after bolus infusion of gadoterate meglumine contrast at 0.1 mmol/kg body weight) and late gadolinium enhancement (LGE, 15 min after contrast administration) images were acquired in the standard long- (LAX) and short-axis (SAX) views. Parametric maps of left ventricular myocardial T2, native T1, and post-contrast T1 (10–30 min after contrast administration) were acquired in the basal, mid and apical SAX views. Table 1 summarizes the details of CMR sequences and typical parameters.

Proposed method

The motivation behind the proposed method and its detailed description are explained in this section.

Motivation

-

Each pixel of an image forms a meaningful pattern connecting neighbouring pixels. The conversion of pixels to feature vectors would delink all interpixel relationships leading to violation of pixel locality and severe degradation of classification performance.

-

To feed image data to a decision tree, we would be forced to treat each pixel as one feature. This would increase the number of features inordinately, thereby incurring the curse of dimensionality.

As such, it became apparent that we had to use a different approach than the pixel-based method to convert the whole image into numeric features that can be fed efficiently to the decision trees. To this end, we trained CNNs on image data and used the CNN outputs as input features for the decision trees of the random forest. CNNs can extract essential features from whole input images26 for accurate prediction, thereby avoiding the curse of dimensionality. Moreover, with CNNs, the locality of the pixels is preserved27.

It is known that training the same CNN multiple times will lead to a different parameter set. The reason is two-fold. First, each run uses a different random seed leading to different initialization of CNN parameters. Second, CNNs are trained on mini-batch of samples drawn randomly from the training set. The stochastic nature of mini-batch sampling affects the overall training process. Therefore, one training run might result in a better parameter set than the other. To reduce the unwanted effects of stochastic training, we train multiple CNNs employing K-fold cross validation and use their prediction during random forest creation leading to better overall performance. Given that we used stratified K-fold cross validation, the percentage of healthy and CAD samples within each fold was preserved. Recall that in our dataset, the total numbers of healthy and CAD patients were 722 and 502, respectively. Therefore, in each fold, the numbers of healthy and CAD patients were approximately 144 and 100, respectively.

Proposed method description

The proposed method used a combination of random forest and CNNs. Using CNNs, 2D CMR images were converted into vectors of real values automatically. These vectors were fed to the random forest of decision trees instead of direct pixel-by-pixel feeding of CMR images as the latter would otherwise have led to violation of pixel locality (i.e., loss of inter-pixel spatial relationships) and incurred the curse of dimensionality. The CNNs could be thought of as the “feature generation” step that preceded the “classification” step comprising the random forest of decision trees. The structure of each of the CNNs is depicted in Fig. 5. At the pre-processing phase, input data were resized to \(100\times 100\) and normalized between 0 and 1. Next, the dataset D was divided into five parts for fivefold cross validation. The training was repeated five times for k = 1,…, 5. In each iteration k, the training set \({f}_{k}\subset D\) consisted of data from four out of the five folds. The remaining fold was used for testing. To train the CNNs \(\{{C}_{i}, i=1, \dots , N\}\), each training set \({f}_{k}\) was divided to n subsets \(\{{f}_{k1}, \dots , {f}_{kn}|{f}_{kl}\cap {f}_{km}=\varnothing , l,m\in \{1,\dots ,n\}\wedge l\ne m\}\). The number of CNNs (n) is the hyperparameter of the proposed method which was set to 10 in our experiments.

To ensure that the CNNs would have different sets of parameters, each CNN \({C}_{i}, i=1,\dots ,n\) was trained on subset \({f}_{k}-{f}_{ki}\) and validated on subset \({f}_{ki}\). The trained CNNs were used to create the random forest decision trees. Each node of a decision tree represented one randomly selected CNN-generated numerical feature of a randomly selected CMR image sample of the training set. Computationally, one of \(|{f}_{k}|\) rows and one of n columns were randomly selected from the \([|{f}_{k}|\times n]\) matrix of outputs obtained by feeding \(\left|{f}_{k}\right|\) CMR images to n CNNs for each fold k of K-fold cross validation.

Finally, the test set \(D-{f}_{k}\) was used to evaluate the trained model and the final results were presented. To this end, each test sample was fed to the CNNs and their outputs were used as input to the random forest. Based on the CNNs outputs, the decision trees of the random forest determined whether the test sample had CAD disease or not. The final prediction was presented using majority voting between the decision trees predictions. High-level steps of the proposed method are presented in Fig. 6. In contrast to conventional studies where validation is only performed after obtaining the final output of the classifier, training and validation were conducted for each CNN during the “feature generation step” as well as for the random forest of decision trees based on the aggregated results of the former during the “classification” stage (see Fig. 6).

The pseudo-code of the proposed method is presented in Algorithm 1. In lines 1–2, the training data are pre-processed. Next, the K-fold cross validation loop is begun. Training data for k-th iteration (\({f}_{k}\)) is divided to n subsets. The subsets are used for training of n CNNs in lines 6–7. The trained CNNs are used to compute matrix P in lines (8–11). Based on matrix P, decision trees of RF are built one by one in lines (13–19). For better clarification, the operations performed in lines (8–19) of Algorithm 1 are depicted in Fig. 7.

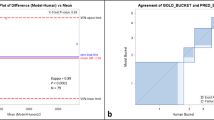

Experimental results

All the experiments were implemented in Python using the Keras library. The models were trained using GeForce GTX 950 GPU and 16 GB of RAM. Further, we compared our method with a CNN (as a single classifier) to investigate the effect of using an ensemble of classifiers versus just one. As mentioned in “Random forest” Section, n = 10 CNNs were used to convert the image dataset into n numeric features that were required to build the random forest decision trees nodes. The hyperparameters of the CNNs are summarized in Table 2. We used 15 decision trees in the random forest. The experiments were conducted in two phases. First, CNNs were trained for 10 epochs following the procedures explained in “Random forest” Section _bookmark9 and Fig. 6. Next, the trained CNNs were used to generate the numeric features that were required for the creation of the decision trees. We compared our method with a single CNN that was trained using fivefold cross validation. To make the comparison fair, the training time assigned to the single CNN was equal to the total training time of our approach. In other words, the CNNs used in our approach were trained only for 10 epochs but the comparator single CNN model was trained for as many epochs as possible until reaching the total training time of our method (464.75 s). The effect of using different optimizers has also been inspected. To this end, the experiments have been performed for three optimizers namely Adagrad28, RMSProp29, and Adam30. The performance of our method and the stand-alone CNN are compared in Table 3, which shows the superiority of our method. As can be seen, regardless of the optimizer type, our method has outperformed the stand-alone CNN which shows that our method is not sensitive to the choice of the optimizer. Moreover, among the evaluated optimizers, Adam has yielded the best performance. In Table 3, we did not include loss function for our method since it is a hybrid approach.

We have compared the performance of our method using CMR data with existing ones using other data types in Table 4. To ease the comparison between our method and existing ones, the last row of Table 3 has been repeated as the last row of Table 4. Clearly, our method has outperformed the rival ones in Table 4 which suggests that CMR can be used as a reliable data source for CAD diagnosis.

Discussion

Random forests have proved to be robust and accurate in challenging classification problems. As they are designed to work with numeric features, feeding image data pixel by pixel directly to random forests causes them to incur the curse of dimensionality. Even for a small image 28 × 28, the random forest will have to deal with 282=784 features, which renders the learning process prohibitively demanding. To this end, we relied on the automatic feature extraction property of CNNs to convert images to useful numeric values. However, as CNNs get more complex, the training time increases. As the random forest comprises an ensemble of decision trees, we can train multiple lightweight CNNs (with moderate accuracy) in a shorter time and still achieve good performance by harnessing an ensemble of decision trees that use CNNs predictions as input features. Despite being slow to train, the CNNs could perform quite fast during the testing phase. Once trained, the model can deliver real-time performance.

The advantages of the proposed method are as follows:

-

Using random forest for image data classification reinforces the reliability of the prediction since an ensemble of decision trees is used.

-

Since CNNs perform automatic feature extraction, we do not need to design hand-crafted features. This enhances the generalizability of our method.

-

The proposed method is applicable to any image-based classification problem as CNNs can learn any image dataset. Therefore, we can train a reasonable number of CNNs on the dataset and use their predictions as features in random forest decision trees.

The disadvantages of our method are discussed below:

-

Complex problems may demand more features to achieve acceptable accuracy. Considering that we rely on CNNs to map images to useful numeric features, the training time of our method will increase commensurately with the increased number of features.

-

The performance of the random forest is affected by the number of features used in the decision trees. In our method, each feature corresponds to the prediction of a trained CNN. Currently, the number of CNNs (features) is treated as a hyperparameter that is set by trial and error. This introduces a degree of arbitrariness to our method. However, it is possible to use random forests to choose features that play more significant roles in classification performance. Conceivably, we can start by training several CNNs and then use the random forest to screen for the CNNs in which the predictions are more critical in classification performance. The CNNs that are less important can then be discarded. Such an approach constitutes an interesting direction for future work.

-

The dataset samples used in the experiments have been obtained in a single institution. It is desirable to validate the model on other datasets.

Conclusion

In this paper, a novel classification method was presented for diagnosing CAD patients based on ensemble of CNNs and random forest. The method harnesses the classification power of multiple decision trees of the random forest. We trained multiple CNNs on the image dataset, and the trained CNNs predictions were used as features during the decision tree building process. Our method was able to achieve high accuracy using lightweight CNNs to derive features. We reported the classification results on our CMR image dataset, which has also been released for public use. By exploiting the representation capability of CNNs, the proposed method has enabled the use of random forest for the classification of any image-based dataset.

Data availability

The datasets analysed during the current study are available in the CAD Cardiac MRI Dataset repository, https://www.kaggle.com/danialsharifrazi/cad-cardiac-mri-dataset.

References

Benjamin, E., Emelia, J., Michael, J. & Stephanie, E. Heart disease and stroke statistics—2017 update: a report from the American Heart Association. Circu. 135(10), e146–e603 (2017).

Pontone, G. et al. Coronary artery disease: diagnostic accuracy of CT coronary angiography—a comparison of high and standard spatial resolution scanning. Radiology 271(3), 688–694 (2014).

Catalano, O. et al. Cardiac magnetic resonance in stable coronary artery disease: added prognostic value to conventional risk profiling. BioMed Res. Int. https://doi.org/10.1155/2018/2806148 (2018).

Kolentinis, M., Le, M., Nagel, E. & Puntmann, V. O. Contemporary cardiac MRI in chronic coronary artery disease. Eur. Cardiol. Rev. https://doi.org/10.15420/ecr.2019.17 (2020).

Śpiewak, M. Imaging in coronary artery disease cardiac magnetic resonance. Cor et Vasa 57(6), e453–e461 (2015).

Butun, E., Yildirim, O., Talo, M., Tan, R.-S. & Acharya, U. R. 1D-CADCapsNet: One dimensional deep capsule networks for coronary artery disease detection using ECG signals. Phys. Med. 70, 39–48 (2020).

Acharya, U. R. et al. Automated characterization and classification of coronary artery disease and myocardial infarction by decomposition of ECG signals: A comparative study. Inf. Sci. 377, 17–29 (2017).

Khan, M. U., Aziz, S., Naqvi, S. Z. H., Rehman, A. Classification of coronary artery diseases using electrocardiogram signals. In 2020 International Conference on Emerging Trends in Smart Technologies (ICETST); 2020: IEEE. pp. 1–5.

Pathak, A., Samanta, P., Mandana, K. & Saha, G. An improved method to detect coronary artery disease using phonocardiogram signals in noisy environment. Appl. Acoust. 164, 107242 (2020).

Li, H. et al. Dual-input neural network integrating feature extraction and deep learning for coronary artery disease detection using electrocardiogram and phonocardiogram. IEEE Access 7, 146457–146469 (2019).

Li, H. et al. A fusion framework based on multi-domain features and deep learning features of phonocardiogram for coronary artery disease detection. Comput. Biol. Med. 120, 103733 (2020).

Guo, Q. et al. The sensibility of the new blood lipid indicator—atherogenic index of plasma (AIP) in menopausal women with coronary artery disease. Lipids Health Dis. 19(1), 1–8 (2020).

Liang, B. et al. CircRNA ZNF609 in peripheral blood leukocytes acts as a protective factor and a potential biomarker for coronary artery disease. Ann. Transl. Med. 8(12), 741 (2020).

Al’Aref, S. J. et al. Machine learning of clinical variables and coronary artery calcium scoring for the prediction of obstructive coronary artery disease on coronary computed tomography angiography: Analysis from the CONFIRM registry. Eur. Heart J. 41(3), 359–67 (2020).

Baskaran, L. et al. Machine learning insight into the role of imaging and clinical variables for the prediction of obstructive coronary artery disease and revascularization: An exploratory analysis of the conserve study. PLoS One 15(6), e0233791 (2020).

Chang, H. J. et al. Selective referral using CCTA versus direct referral for individuals referred to invasive coronary angiography for suspected CAD: A randomized, controlled, open-label trial. JACC Cardiovasc. Imaging 12(7 Part 2), 1303–12 (2019).

Velusamy, D. & Ramasamy, K. Ensemble of heterogeneous classifiers for diagnosis and prediction of coronary artery disease with reduced feature subset. Comput. Methods Programs Biomed. 198, 105770 (2021).

Alizadehsani, R. et al. A data mining approach for diagnosis of coronary artery disease. Comput. Methods Programs Biomed. 111(1), 52–61 (2013).

Karabulut, E. M. & İbrikçi, T. Effective diagnosis of coronary artery disease using the rotation forest ensemble method. J. Med. Syst. 36(5), 3011–3018 (2012).

Kausar, N. et al. Ensemble clustering algorithm with supervised classification of clinical data for early diagnosis of coronary artery disease. J. Med. Imaging Health Inform. 6(1), 78–87 (2016).

Abdar, M., Acharya, U. R., Sarrafzadegan, N. & Makarenkov, V. NE-nu-SVC: A new nested ensemble clinical decision support system for effective diagnosis of coronary artery disease. IEEE Access 7, 167605–167620 (2019).

Hedeshi, N. G., Abadeh, M. S. An expert system working upon an ensemble PSO-based approach for diagnosis of coronary artery disease. In 2011 18th Iranian Conference of Biomedical Engineering (ICBME); 2011: IEEE. pp. 249–54.

Sekhar, C. R. & Madhu, E. Mode choice analysis using random forrest decision trees. Transp. Res. Proced. 17, 644–652 (2016).

https://www.kaggle.com/danialsharifrazi/cad-cardiac-mri-dataset. 2021.

Schowengerdt, R. A. Techniques for Image Processing and Classifications in Remote Sensing (Academic Press, 2012).

Khodatars, M., Shoeibi, A., Ghassemi, N. et al. Deep learning for neuroimaging-based diagnosis and rehabilitation of autism spectrum disorder: A review. arXiv preprint arXiv:2007.01285 (2020).

Shoeibi, A. et al. Epileptic seizures detection using deep learning techniques: A review. Int. J. Environ. Res. Public Health 18(11), 5780 (2021).

Ruder, S. An overview of gradient descent optimization algorithms. arXiv preprint arXiv:1609.04747 (2016).

Zou, F., Shen, L., Jie, Z., Zhang, W., Liu, W. A sufficient condition for convergences of adam and rmsprop. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2019. pp. 11127-35.

Kingma, D. P., Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Ghosh, S. K., Ponnalagu, R., Tripathy, R. & Acharya, U. R. Automated detection of heart valve diseases using chirplet transform and multiclass composite classifier with PCG signals. Comput. Biol. Med. 118, 103632 (2020).

Fischer, A. M. et al. Accuracy of an artificial intelligence deep learning algorithm implementing a recurrent neural network with long short-term memory for the automated detection of calcified plaques from coronary computed tomography angiography. J. Thorac. Imaging 35, S49–S57 (2020).

Zhang, X. et al. Automated detection of cardiovascular disease by electrocardiogram signal analysis: A deep learning system. Cardiovasc. Diagn. Ther. 10(2), 227 (2020).

Sharma, M. & Acharya, U. R. A new method to identify coronary artery disease with ECG signals and time-Frequency concentrated antisymmetric biorthogonal wavelet filter bank. Pattern Recogn. Lett. 125, 235–240 (2019).

Yang, L. et al. Meta-analysis: diagnostic accuracy of coronary CT angiography with prospective ECG gating based on step-and-shoot, Flash and volume modes for detection of coronary artery disease. Eur. Radiol. 24(10), 2345–2352 (2014).

Sridhar, C., Acharya, U. R., Fujita, H., Bairy, G. M. Automated diagnosis of coronary artery disease using nonlinear features extracted from ECG signals. In 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC); 2016: IEEE. pp. 000545–9.

Alizadehsani, R. et al. Diagnosis of coronary artery disease using data mining techniques based on symptoms and ecg features. Eur. J. Sci. Res. 82(4), 542–553 (2012).

Chaikovsky, I., Kohler, J., Hecker, T. et al. Detection of coronary artery disease in patients with normal or unspecifically changed ECG on the basis of magnetocardiography. In Proceedings of the 12-th International Conference on Biomagnetism; 2000: Citeseer. pp. 565–8.

Samanta, P., Pathak, A., Mandana, K. & Saha, G. Classification of coronary artery diseased and normal subjects using multi-channel phonocardiogram signal. Biocybern. Biomed. Eng. 39(2), 426–443 (2019).

Zhang, H. et al. Detection of coronary artery disease using multi-modal feature fusion and hybrid feature selection. Physiol. Meas. 41(11), 115007 (2020).

Choudhury, A. D., Banerjee, R., Pal, A., Mandana, K. A fusion approach for non-invasive detection of coronary artery disease. In Proceedings of the 11th EAI International Conference on Pervasive Computing Technologies for Healthcare; 2017. pp. 217–20.

Banerjee, R., Vempada, R., Mandana, K., Choudhury, A. D., Pal, A. Identifying coronary artery disease from photoplethysmogram. In Proceedings of the 2016 Acm International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct; 2016. pp. 1084–8.

Banerjee, R., Bhattacharya, S., Bandyopadhyay, S., Pal, A., Mandana, K. Non-invasive detection of coronary artery disease based on clinical information and cardiovascular signals: A two-stage classification approach. In 2018 IEEE 31st International Symposium on Computer-Based Medical Systems (cbms); 2018: IEEE. pp. 205–10.

Pathak, A., Samanta, P., Mandana, K. & Saha, G. Detection of coronary artery atherosclerotic disease using novel features from synchrosqueezing transform of phonocardiogram. Biomed. Signal Process. Control 62, 102055 (2020).

Arabasadi, Z., Alizadehsani, R., Roshanzamir, M., Moosaei, H. & Yarifard, A. A. Computer aided decision making for heart disease detection using hybrid neural network-genetic algorithm. Comput. Methods Programs Biomed. 141, 19–26 (2017).

Alizadehsani, R. et al. Diagnosis of coronary artery disease using data mining based on lab data and echo features. J. Med. Bioeng. 1(1), 26–29 (2012).

Acknowledgements

There is not acknowledgement to declare.

Author information

Authors and Affiliations

Contributions

Contributed to prepare the first draft: R.A., S.H., A.S., F.K., N.H.I., M.K., D.S. and J.H.J. Contributed to editing the final draft: S.N., Z.A.S., A.K., U.R.A., and R.T. Contributed to all analysis of the data and produced the results accordingly: D.S., N.H.I., and R.A. Searched for papers and then extracted data: S.H., A.S., F.K., and J.H.J. Provided overall guidance and managed the project: M.T., S.N., Z.A.S., A.K., U.R.A., S.M.S.I, and R.T.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khozeimeh, F., Sharifrazi, D., Izadi, N.H. et al. RF-CNN-F: random forest with convolutional neural network features for coronary artery disease diagnosis based on cardiac magnetic resonance. Sci Rep 12, 11178 (2022). https://doi.org/10.1038/s41598-022-15374-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-15374-5

This article is cited by

-

Explaining decisions of a light-weight deep neural network for real-time coronary artery disease classification in magnetic resonance imaging

Journal of Real-Time Image Processing (2024)

-

Independent effects of the triglyceride-glucose index on all-cause mortality in critically ill patients with coronary heart disease: analysis of the MIMIC-III database

Cardiovascular Diabetology (2023)

-

Risk prediction of heart failure in patients with ischemic heart disease using network analytics and stacking ensemble learning

BMC Medical Informatics and Decision Making (2023)

-

Body composition predicts hypertension using machine learning methods: a cohort study

Scientific Reports (2023)

-

Automated stenosis classification on invasive coronary angiography using modified dual cross pattern with iterative feature selection

Multimedia Tools and Applications (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.