Abstract

An accurate three-dimensional (3D) segmentation of the maxillary sinus is crucial for multiple diagnostic and treatment applications, yet it is challenging and time-consuming when performed manually on a cone-beam computed tomography (CBCT) dataset. Recently, convolutional neural networks (CNNs) have shown excellent performance in 3D image analysis. Hence, this study developed and validated a novel automated CNN-based methodology for segmentation of the maxillary sinus on CBCT images. A dataset of 264 sinuses was acquired from 2 CBCT devices and randomly divided into 3 subsets: training, validation, and testing. A 3D U-Net CNN model was developed and compared with semi-automatic segmentation in terms of time, accuracy, and consistency. Automatic segmentation significantly reduced the average time (0.4 min versus 60.8 min for semi-automatic segmentation; p < 2.2e−16). The model accurately identified the segmented region, with a Dice similarity coefficient (DSC) of 98.4%. Inter-observer reliability for minor refinement of the automatic segmentation was excellent, with a DSC of 99.6%. The proposed CNN model provided time-efficient, precise, and consistent automatic segmentation, which could allow accurate generation of 3D models for diagnosis and virtual treatment planning.

Introduction

The maxillary sinus (antrum of Highmore) is the largest of the four paranasal sinuses, the air-filled spaces within the skull that surround the nasal cavity1. In adults, the maxillary sinus is pyramidal in shape and lies in the body of the maxilla. It is bounded superiorly by the orbital floor and extends laterally into the zygomatic process of the maxilla and the zygomatic bone. Medially, it borders the lateral wall of the nasal cavity, with which it communicates through the sinus ostium. The floor of the sinus is formed by the alveolar and palatine processes of the maxilla and lies in close proximity to the roots of the maxillary posterior teeth2,3,4,5.

Owing to its critical location, assessment of the sinus is of paramount importance for maxillofacial surgeons, dentists, ENT surgeons, and dentomaxillofacial radiologists1. An accurate 3D segmentation of the sinus is crucial for multiple diagnostic and treatment applications that require evaluation of sinus changes, assessment of remodeling at follow-up, volumetric analysis6,7, or the creation of 3D virtual models. The most relevant surgical procedures requiring sinus assessment include implant placement, sinus augmentation8,9, and orthognathic surgery.

Although the maxillary sinus is a well-delineated cavity, its 3D segmentation is not a simple task. Its close proximity to the nasal passages and tooth roots, its anatomical variations, and the frequently associated mucosal thickening make segmentation challenging. Such 3D segmentations can be performed on either multi-slice computed tomography (MSCT)10 or cone-beam computed tomography (CBCT) images. In oral health care, the maxillary sinus is mostly visualized with CBCT for diagnosis and treatment planning11,12,13, as it provides multiplanar reconstruction of the sinus, a relatively low radiation dose, and isotropic volume resolution14. However, segmentation of CBCT images remains challenging because of image noise, low soft-tissue contrast, beam-hardening artifacts, and the lack of absolute Hounsfield unit (HU)15 calibration16,17.

Manual segmentation of the maxillary sinus on CBCT images is time-consuming and depends on the practitioner's experience, with high inter- and intra-observer variability18. Other techniques, such as semi-automatic segmentation, improve efficiency, yet they still require manual adjustments that can also introduce errors10,19. Recently, artificial intelligence (AI) technologies have begun to play a growing role in dentomaxillofacial radiology20,21. In particular, deep learning algorithms have gained much attention in the medical field for their ability to handle large and complex data, extract useful information, and automatically learn feature hierarchies such as edges, shapes, and corners22.

The convolutional neural network (CNN) is a deep learning approach that has shown excellent performance in image analysis. It uses multiple layers of neural computational connections for image processing tasks such as classification and segmentation22. Applying CNNs to CBCT image segmentation could overcome the challenges of the other techniques by providing an efficient and consistent segmentation tool while preserving anatomical accuracy. Therefore, the aim of this study was to develop and validate a novel automated CNN-based methodology for segmentation of the maxillary sinus on CBCT images.

Materials and methods

This study was conducted in accordance with the Declaration of Helsinki on medical research. Institutional ethical approval was obtained from the Ethical Review Board of the University Hospitals Leuven (reference number: S57587). Informed consent was not required because patient-specific information was anonymized. The study plan and report followed the recommendations of Schwendicke et al.23 for reporting on artificial intelligence in dental research.

Dataset

A sample of 132 CBCT scans (264 sinuses; 75 females and 57 males; mean age 40 years) acquired between 2013 and 2021 with different scanning parameters was collected (Table 1). Inclusion criteria were permanent dentition and a maxillary sinus with or without mucosal thickening (shallow > 2 mm, moderate > 4 mm) and/or a semi-spherical membrane on one of the walls24. Scans with dental restorations, orthodontic brackets, and implants were also included. Exclusion criteria were a history of trauma or sinus surgery and the presence of pathologies affecting the sinus contour.

The Digital Imaging and Communications in Medicine (DICOM) files of the CBCT images were exported anonymously. The dataset was then randomly divided into three subsets: (1) a training set (n = 83 scans) for training the CNN model on the ground truth; (2) a validation set (n = 19 scans) for evaluating and selecting the best model; and (3) a testing set (n = 30 scans) for testing model performance against the ground truth.
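The random division into 83/19/30 scans can be sketched as follows. This is a minimal illustration only: the study does not report the randomization procedure or seed, so the function `split_scans`, the scan identifiers, and `seed=0` are hypothetical. The split is done per scan (as the subset sizes are given in scans), which keeps both sinuses of a patient in the same subset.

```python
import random


def split_scans(scan_ids, n_train=83, n_val=19, n_test=30, seed=0):
    """Randomly partition scan IDs into disjoint training, validation and test subsets.

    Splitting per scan keeps both sinuses of one patient in the same subset.
    The seed is an arbitrary (hypothetical) choice for reproducibility.
    """
    if n_train + n_val + n_test != len(scan_ids):
        raise ValueError("subset sizes must add up to the number of scans")
    ids = list(scan_ids)
    random.Random(seed).shuffle(ids)  # local RNG, leaves global random state untouched
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


scans = [f"scan_{i:03d}" for i in range(132)]  # hypothetical scan identifiers
train, val, test = split_scans(scans)
print(len(train), len(val), len(test))  # 83 19 30
```

Splitting at the scan level rather than the sinus level avoids the left and right sinus of the same patient ending up in both training and testing data.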

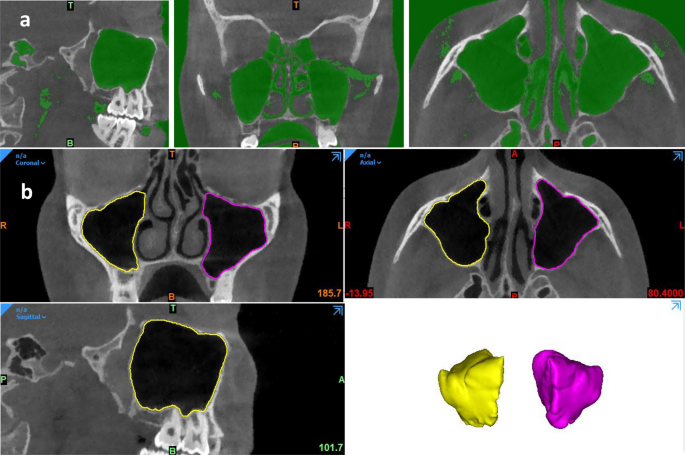

Ground truth labelling

The ground truth datasets for training and testing the CNN model were labelled by semi-automatic segmentation of the sinus using Mimics Innovation Suite (version 23.0, Materialise N.V., Leuven, Belgium). First, a custom threshold range of −1024 to −200 HU was set to create a mask of the air (Fig. 1a). Next, the region of interest (ROI) was isolated from the surrounding structures. The bony contours were manually delineated using the ellipse and livewire functions, and all contours were checked in the coronal, axial, and sagittal planes (Fig. 1b). To keep the ROI consistent across images, the segmentation was limited to the beginning of the sinus ostium on the sinus side, before its continuation into the infundibulum (Fig. 1b). Finally, the edited mask of each sinus was exported separately as a standard tessellation language (STL) file. The segmentation was performed by a dentomaxillofacial radiologist (NM) with seven years of experience and subsequently reassessed by two other radiologists (KFV and RJ) with 15 and 25 years of experience, respectively.
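The initial thresholding step described above amounts to keeping voxels whose grey value falls inside the chosen window. The sketch below, assuming the scan is already loaded as a NumPy array of grey values, reproduces only that first step; the ROI isolation and manual delineation done interactively in Mimics are not modelled. Because CBCT lacks absolute HU calibration, the −1024 to −200 range acts as a device-dependent grey-value window rather than a true HU range. The function name `air_mask` and the toy volume are hypothetical.

```python
import numpy as np


def air_mask(volume, lower=-1024, upper=-200):
    """Boolean mask of voxels whose grey value lies within [lower, upper] (inclusive)."""
    return (volume >= lower) & (volume <= upper)


# Hypothetical 3x3x3 volume: one air-like voxel inside, denser tissue elsewhere.
vol = np.full((3, 3, 3), 300, dtype=np.int16)
vol[1, 1, 1] = -900
vol[0, 0, 0] = -150  # above the upper bound, so it is excluded from the mask
mask = air_mask(vol)
print(int(mask.sum()))  # 1
```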

CNN model architecture and training

Two 3D U-Net architectures were used25. Each consisted of four encoder and three decoder blocks; each block contained two convolutions with a kernel size of 3 × 3 × 3, each followed by a rectified linear unit (ReLU) activation and group normalization with 8 feature maps26. Max pooling with a 2 × 2 × 2 kernel and a stride of 2 was applied after each encoder block, halving the resolution in all dimensions. Both networks were trained as binary classifiers (0 or 1) with a weighted binary cross-entropy loss:

\[ \ell_n = -w_n\left[y_n \log p_n + (1 - y_n)\log(1 - p_n)\right] \]

for each voxel n with ground truth value \({y}_{n}\) = 0 or 1, predicted probability \({p}_{n}\), and voxel weight \({w}_{n}\).
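A voxel-wise weighted binary cross-entropy of this form can be sketched in NumPy as below. The study does not state how the weights were chosen, so the per-class weights `w_pos` and `w_neg` are a hypothetical scheme (a larger weight on sinus voxels is a common way to counter foreground/background imbalance). In PyTorch, `torch.nn.BCELoss` accepts a `weight` tensor for the same purpose.

```python
import numpy as np


def weighted_bce(p, y, w_pos=1.0, w_neg=1.0, eps=1e-7):
    """Mean weighted binary cross-entropy over all voxels.

    p: predicted foreground probabilities; y: ground-truth labels (0 or 1).
    w_pos / w_neg: hypothetical class weights, mapped to a per-voxel weight w_n via y.
    """
    p = np.clip(p, eps, 1.0 - eps)      # avoid log(0)
    w = np.where(y == 1, w_pos, w_neg)  # per-voxel weight w_n
    loss = -w * (y * np.log(p) + (1 - y) * np.log(1 - p))
    return float(loss.mean())


y = np.array([1, 0, 0, 0])
p = np.array([0.9, 0.2, 0.1, 0.1])
print(round(weighted_bce(p, y, w_pos=3.0), 4))  # 0.1875
```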

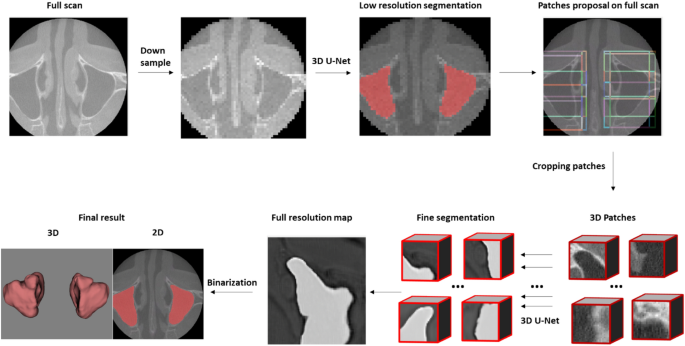

Pre-processing of the training dataset involved two steps. First, all scans were resampled to a common voxel size. Then, to overcome graphics processing unit (GPU) memory limitations, each full-size scan was downsampled to a fixed size.
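The two pre-processing steps above can be sketched with plain NumPy indexing. This is a simplified illustration under stated assumptions: nearest-neighbour sampling is used for brevity (the interpolation method is not reported; for image data a linear scheme such as `scipy.ndimage.zoom(..., order=1)` is more typical), and the voxel sizes and the fixed target shape of 32³ are hypothetical.

```python
import numpy as np


def resize_nearest(volume, out_shape):
    """Nearest-neighbour resize of a 3D array to out_shape (voxel-centre aligned)."""
    idx = [
        np.minimum(((np.arange(n_out) + 0.5) * n_in / n_out).astype(int), n_in - 1)
        for n_in, n_out in zip(volume.shape, out_shape)
    ]
    return volume[np.ix_(*idx)]


def resample_spacing(volume, spacing, new_spacing):
    """Step 1: resample so each voxel measures new_spacing (mm) instead of spacing (mm)."""
    out_shape = tuple(
        max(1, int(round(n * s / ns)))
        for n, s, ns in zip(volume.shape, spacing, new_spacing)
    )
    return resize_nearest(volume, out_shape)


vol = np.zeros((100, 80, 60), dtype=np.int16)               # hypothetical scan, 0.2 mm voxels
iso = resample_spacing(vol, (0.2, 0.2, 0.2), (0.4, 0.4, 0.4))
small = resize_nearest(iso, (32, 32, 32))                   # step 2: fixed size for the GPU
print(iso.shape, small.shape)  # (50, 40, 30) (32, 32, 32)
```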

The first 3D U-Net produced a rough, low-resolution segmentation that was used to propose 3D patches, and only the patches belonging to the sinus were cropped. These patches were then passed to the second 3D U-Net, which segmented each one individually; the results were combined into a full-resolution segmentation map. Finally, the map was binarized, only the largest connected component was kept, and a marching cubes algorithm was applied to the binary image. The resulting mesh was smoothed to generate a 3D model (Fig. 2).
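The binarization and largest-connected-component steps described above can be sketched as follows, assuming the combined full-resolution output is a NumPy array of foreground probabilities. The 0.5 threshold and 6-connectivity (face neighbours) are assumptions, and the function name `largest_component` is hypothetical. `scipy.ndimage.label`, whose default 3D structure uses the same face connectivity, would normally replace the hand-written flood fill. The subsequent meshing step could use `skimage.measure.marching_cubes` and is not reproduced here.

```python
from collections import deque

import numpy as np


def largest_component(prob, threshold=0.5):
    """Binarize a probability map and keep only the largest 6-connected foreground component."""
    binary = prob >= threshold
    labels = np.zeros(binary.shape, dtype=np.int32)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    best_label, best_size, current = 0, 0, 0
    for start in zip(*np.nonzero(binary)):
        if labels[start]:
            continue  # voxel already assigned to a component
        current += 1
        labels[start] = current
        queue, size = deque([start]), 0
        while queue:  # breadth-first flood fill of one component
            z, y, x = queue.popleft()
            size += 1
            for dz, dy, dx in offsets:
                n = (z + dz, y + dy, x + dx)
                inside = all(0 <= n[i] < binary.shape[i] for i in range(3))
                if inside and binary[n] and not labels[n]:
                    labels[n] = current
                    queue.append(n)
        if size > best_size:
            best_label, best_size = current, size
    return labels == best_label if best_size else np.zeros_like(binary)


prob = np.zeros((5, 5, 5))
prob[0, 0, 0:2] = 0.9    # small spurious blob (2 voxels)
prob[3, 1:3, 1:3] = 0.8  # larger blob (4 voxels), kept as the sinus
mask = largest_component(prob)
print(int(mask.sum()))  # 4
```

Keeping only the largest component discards small, isolated false-positive islands, which would otherwise produce stray fragments in the marching-cubes mesh.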

The model parameters were optimized with Adam27, a stochastic gradient-based optimization algorithm, with an initial learning rate of 1.25e−4. During training, random spatial augmentations (rotation, scaling, and elastic deformation) were applied. The validation set was used for early stopping, that is, to identify the saturation point beyond which validation performance no longer improved and further training would only overfit the training data. The CNN model was deployed to an online cloud-based platform called virtual patient creator (creator.relu.eu, Relu BV, Version October 2021), where users could upload a DICOM dataset and obtain an automatic segmentation of the desired structure.
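Early stopping on the validation set, as described above, is commonly implemented with a "patience" counter. The sketch below is a generic version; the study does not report its stopping criterion, so `patience`, `min_delta`, and the loss values are hypothetical. In a PyTorch training loop, the optimizer with the reported learning rate would be created as `torch.optim.Adam(model.parameters(), lr=1.25e-4)`.

```python
class EarlyStopping:
    """Signal a stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            # New best model: this is the checkpoint that would be kept.
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=3)
val_losses = [0.50, 0.40, 0.35, 0.36, 0.37, 0.38, 0.30]  # hypothetical values
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        break
print(epoch, stopper.best_epoch)  # 5 2
```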

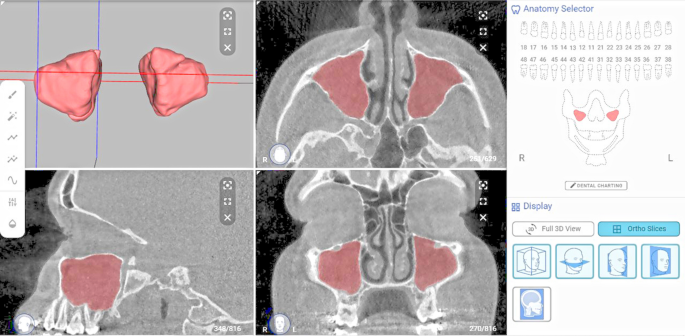

Testing of AI pipeline

The CNN model was tested by uploading the DICOM files of the test set to the virtual patient creator platform. The resulting automatic segmentation (Fig. 3) could then be downloaded in DICOM or STL format. For clinical evaluation of the automatic segmentation, the authors developed the following classification: A, perfect segmentation (no refinement needed); B, very good segmentation (refinements without clinical relevance: slight over- or under-segmentation in regions other than the maxillary sinus floor); C, good segmentation (refinements with some clinical relevance: slight over- or under-segmentation in the maxillary sinus floor region); D, deficient segmentation (considerable over- or under-segmentation, regardless of the sinus region, requiring the segmentation to be repeated); and E, negative (the CNN model did not predict anything). Two observers (NM and KFV) evaluated all cases, followed by expert consensus (RJ). Where refinements were required, the STL file was imported into Mimics and edited using the 3D tools tab; the result was denoted the refined segmentation.

Evaluation metrics

The evaluation metrics28,29 are outlined in Table 2. The main observer compared the ground truth, automatic, and refined segmentations over the whole testing set. A pilot of 10 scans was tested first, which showed a Dice similarity coefficient (DSC) of 0.985 ± 0.004, an intersection over union (IoU) of 0.969 ± 0.007, and a 95th-percentile Hausdorff distance (HD95) of 0.204 ± 0.018 mm. Based on these findings, the testing set was increased to 30 scans in accordance with the central limit theorem (CLT)30.
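The surface-distance metrics mentioned above can be sketched for two surfaces given as point sets in millimetres (for example, mesh vertices). Table 2 is not reproduced in this text, so the definitions below are common ones and an assumption: HD95 as the larger of the two directed 95th-percentile distances, and RMS as the root mean square of the pooled symmetric nearest-point distances. The brute-force pairwise distance is only suitable for small point sets; real meshes would call for a spatial index such as `scipy.spatial.cKDTree`.

```python
import numpy as np


def directed_distances(a, b):
    """For each point in a (N x 3, mm), Euclidean distance to its nearest point in b (M x 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)


def hd95(a, b):
    """95th-percentile Hausdorff distance: maximum of the two directed 95th percentiles."""
    return float(max(np.percentile(directed_distances(a, b), 95),
                     np.percentile(directed_distances(b, a), 95)))


def rms_distance(a, b):
    """Root mean square of the pooled symmetric nearest-point distances."""
    d = np.concatenate([directed_distances(a, b), directed_distances(b, a)])
    return float(np.sqrt(np.mean(d ** 2)))


# Hypothetical surfaces: a 3x3 grid of points and the same grid shifted 0.2 mm along z.
a = np.array([[x, y, 0.0] for x in range(3) for y in range(3)])
b = a + np.array([0.0, 0.0, 0.2])
print(round(hd95(a, b), 3), round(rms_distance(a, b), 3))  # 0.2 0.2
```

Using the 95th percentile instead of the maximum makes the Hausdorff distance robust to a few outlying surface points.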

Time efficiency

The time required for semi-automatic segmentation was measured from opening the DICOM files in Mimics until exporting the STL file. For automatic segmentation, the algorithm automatically recorded the time required to obtain a full-resolution segmentation. The refinement time was measured in the same way as for semi-automatic segmentation and added to the initial automatic segmentation time. The average time for each method was calculated over the testing set.

Accuracy

A voxel-wise comparison among the ground truth, automatic, and refined segmentations of the testing set was performed using a confusion matrix with four variables: true positive (TP), true negative (TN), false positive (FP), and false negative (FN) voxels. Based on these variables, the accuracy of the CNN model was assessed using the metrics listed in Table 2.
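The confusion-matrix comparison described above can be sketched for two binary masks of equal shape. The overlap metrics below use their standard definitions; Table 2 is not reproduced here, so the exact metric set is an assumption. Division by zero is not handled, so both masks are assumed to contain foreground. Note that in a full CBCT volume TN is dominated by background voxels, which makes plain accuracy less informative than DSC or IoU.

```python
import numpy as np


def overlap_metrics(pred, truth):
    """Voxel-wise confusion matrix and overlap metrics for two boolean masks of equal shape."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    tp = int(np.sum(pred & truth))
    tn = int(np.sum(~pred & ~truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    return {
        "TP": tp, "TN": tn, "FP": fp, "FN": fn,
        "DSC": 2 * tp / (2 * tp + fp + fn),
        "IoU": tp / (tp + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }


# Hypothetical flattened masks of 10 voxels.
truth = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])
pred = np.array([1, 1, 1, 0, 1, 0, 0, 0, 0, 0])
m = overlap_metrics(pred, truth)
print(m["DSC"], m["IoU"])  # 0.75 0.6
```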

Consistency

Once trained, the CNN model is deterministic; hence, its consistency was not formally evaluated. For illustration, one scan was uploaded twice to the platform and the resulting STL files were compared. Intra- and inter-observer consistency were calculated for the semi-automatic and refined segmentations. The intra-observer reliability of the main observer was assessed by re-segmenting 10 scans from the testing set with different protocols. For inter-observer reliability, two observers (NM and KFV) each performed the required refinements, and their STL files were compared with each other.

Statistical analysis

Data were analyzed with RStudio: Integrated Development Environment for R, version 1.3.1093 (RStudio, PBC, Boston, MA). The mean and standard deviation were calculated for all evaluation metrics. A paired-sample t-test at a significance level of 0.05 was used to compare the time required for semi-automatic and automatic segmentation of the testing set.
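The paired-sample t-test mentioned above works on the per-scan differences between the two methods. A minimal sketch of the test statistic follows; in R the corresponding call is presumably `t.test(x, y, paired = TRUE)`, and the p-value comes from a t distribution with n − 1 degrees of freedom (for example via `scipy.stats.ttest_rel` in Python). R prints p-values smaller than its machine epsilon as "< 2.2e-16", which explains the form of the reported p-value. The timing values below are hypothetical.

```python
import math


def paired_t(x, y):
    """Paired-sample t statistic and degrees of freedom for matched measurements x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    d = [a - b for a, b in zip(x, y)]  # per-scan differences
    n = len(d)
    mean = sum(d) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in d) / (n - 1))  # sample SD of the differences
    return mean / (sd / math.sqrt(n)), n - 1


# Hypothetical per-scan times in seconds: semi-automatic vs automatic.
t, df = paired_t([3600, 3650, 3700], [24, 25, 26])
print(df)  # 2
```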

Results

Time efficiency

The average time required was 60.8 min (3649.8 s) for semi-automatic segmentation and 24.4 s for automatic segmentation, a significant reduction (p < 2.2e−16). Regarding refinement, around 30% of the testing set needed refinements (20% class B, 10% class C, and no class D or E), with an average refinement time of 7.1 min (422.84 s). The automatic and refined segmentations were approximately 149 and 9 times faster, respectively, than the semi-automatic segmentation.
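The speed-up factors above are ratios of mean times. The short calculation below reproduces them from the reported means. The automatic workflow gives about 149.6. For the refined workflow, the ratio is about 8.6 if 422.84 s is taken as the refined-segmentation time, and about 8.2 if the 24.4 s automatic step is added to it. Which of these underlies the reported figure is not stated, so both are shown.

```python
semi_auto_s = 3649.8  # mean semi-automatic segmentation time (60.8 min)
auto_s = 24.4         # mean automatic segmentation time
refine_s = 422.84     # mean refinement time as reported (7.1 min)


def speedup(reference_s, method_s):
    """How many times faster method_s is than reference_s."""
    return reference_s / method_s


print(round(speedup(semi_auto_s, auto_s), 1))             # 149.6
print(round(speedup(semi_auto_s, refine_s), 1))           # 8.6
print(round(speedup(semi_auto_s, auto_s + refine_s), 1))  # 8.2
```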

Accuracy

Table 3 provides an overview of the accuracy metrics for automatic segmentation. Overall, the automatic segmentation showed a DSC of 98.4% and a root mean square (RMS) distance of 0.21 mm compared with the ground truth, indicating that the 3D volumes and surfaces closely matched (Fig. 4).

The comparison between automatic and refined segmentations showed a DSC of 99.6% and an RMS of 0.21 mm, indicating near-perfect overlap. This minimal difference shows that only minor refinements were needed.

Consistency

Table 4 shows the intra- and inter-observer reliability metrics, with DSCs of 98.4% and 99.6%, respectively. For test–retest reliability, the CNN model produced identical segmentations by design (DSC of 100%).

Discussion

CBCT imaging has been widely employed in oral and maxillofacial radiology for the visualization of orofacial structures, pre-surgical planning, and follow-up assessment11,12,13. It allows 3D evaluation, which is crucial for accurate diagnosis and management of certain pathologies affecting the maxillofacial complex. Volumetric (3D) assessment of the maxillary sinus not only enhances the diagnostic process but also permits the creation of reconstructed virtual models for presurgical planning, including implant placement, sinus floor elevation, removal of (impacted) posterior teeth and/or root remnants, and reconstructive and orthognathic surgical procedures. Accurate segmentation of the sinus cavity is therefore an essential step.

Manual segmentation is not feasible in daily clinical practice, since it is time-consuming and requires considerable operator experience. Semi-automatic techniques still require operator intervention for manual threshold selection. Additionally, manual adjustment of the segmented structures takes a considerable amount of time and may introduce operator-dependent errors31. To overcome these limitations and provide a reproducible and consistent technique, the present study aimed to develop and validate a novel automated CNN-based methodology for maxillary sinus segmentation on CBCT images.

The model in the current study was trained on data acquired with 2 CBCT devices (NewTom VGi evo and 3D Accuitomo 170) using different scanning parameters. Furthermore, images with and without metal artifacts were included to increase its robustness. The agreement between the CNN model and the ground truth was compared between the two CBCT devices, and no significant differences were observed: both devices showed high DSC values of 98.37% (NewTom VGi evo) and 98.43% (3D Accuitomo 170). Hence, the whole dataset was treated as one sample.

Compared with the semi-automatic technique, the CNN model performed remarkably well in terms of time, accuracy, and consistency. Automatic segmentation (24.4 s) was approximately 149 times faster than the semi-automatic approach (60.8 min). Across all evaluation metrics, the CNN model showed high similarity to the ground truth (see Table 3).

According to the proposed classification for clinical evaluation of the automatic segmentation, almost 70% of the testing set was classified as perfect segmentation (class A), requiring no refinement. In cases classified as B or C, refinements were mainly associated with mucosal thickening. No deficient or negative predictions occurred. Moreover, the small difference between automatic and refined segmentations (see Table 3) suggests that only minimal refinements were needed. The inter-observer reliability for the refined segmentation showed a DSC of 99.6%, implying consistency among observers. The model's performance was also 100% consistent across repeated segmentation of the same case, a major advantage over human performance, which varies each time a segmentation is performed. Additionally, the model is fully automatic and requires no human intervention, which also avoids the issues of threshold selection and grey-scale variability.

To date, few researchers32,33,34 have investigated maxillary sinus segmentation on CBCT datasets, with different study designs. Bui et al.32 investigated automatic segmentation of the paranasal sinuses and nasal cavity on 10 CBCT images. They applied a multi-step, coarse-to-fine active contour model and reported a DSC of 95.7% compared with manual segmentation by experts as the ground truth. Neelapu et al.33 developed a knowledge-based algorithm for automatically segmenting the maxillary sinus on 15 CBCT scans. The authors compared five segmentation techniques following automatic contour initialization and reported DSC values between 80% and 90% for all methods. Ham et al.34 proposed an automatic maxillary sinus segmentation technique using a single 3D U-Net and reported a DSC of 92.8%. Although comparison with these studies is difficult because of differences in CBCT devices, scanning protocols, and study designs, the CNN model proposed in the current study showed better results on the metrics evaluated. Furthermore, the time needed for each segmentation method was clearly reported and the sample size was justified, both of which have rarely been reported in previous studies. Recent studies35,36 have reported automatic segmentation of sinus mucosal thickening and pathological lesions, but this was not the focus of our study.

The limitations of this study are similar to the existing challenges of artificial intelligence in dentistry21,37. First, the data lacked heterogeneity, which limits model generalizability; this could be addressed by incorporating data from different CBCT devices with variable scanning parameters. Second, the online platform only allowed visualization and export of the automatic segmentation, so third-party software was required to perform refinements. Some editing tools have recently been added to the platform, and additional features are planned to address this issue. Finally, the CNN model was able to extract the normal, clear sinus and separate the bony borders in cases with sinus thickening; however, it cannot delineate the soft tissue. Future work will focus on pathological conditions of the maxillary sinus.

Conclusions

A novel 3D U-Net CNN model was developed and validated for automatic segmentation and 3D virtual model creation of the maxillary sinus from CBCT images. Owing to its promising performance in terms of time, accuracy, and consistency, it could serve as a solid basis for future studies incorporating pathological conditions. An additional benefit of the model is its deployment on an interactive, web-based platform, which could facilitate its application in clinical practice.

References

Whyte, A. & Boeddinghaus, R. The maxillary sinus: Physiology, development and imaging anatomy. Dentomaxillofac. Radiol. 48, 20190205. https://doi.org/10.1259/dmfr.20190205 (2019).

Standring, S. Gray’s Anatomy: The Anatomical Basis of Clinical Practice 41st edn. (Elsevier, 2015).

Duncavage, J. A. & Becker, S. S. The Maxillary Sinus: Medical and Surgical Management (Thieme Medical Publishers, 2011).

Chanavaz, M. Maxillary sinus: Anatomy, physiology, surgery, and bone grafting related to implantology–eleven years of surgical experience (1979–1990). J. Oral Implantol. 16, 199–209 (1990).

Iwanaga, J. et al. Clinical anatomy of the maxillary sinus: Application to sinus floor augmentation. Anat. Cell Biol. 52, 17–24. https://doi.org/10.5115/acb.2019.52.1.17 (2019).

Andersen, T. N. et al. Accuracy and precision of manual segmentation of the maxillary sinus in MR images: a method study. Br. J. Radiol. 91, 20170663. https://doi.org/10.1259/bjr.20170663 (2018).

Giacomini, G. et al. Computed tomography-based volumetric tool for standardized measurement of the maxillary sinus. PLoS ONE 13, e0190770. https://doi.org/10.1371/journal.pone.0190770 (2018).

Berberi, A. et al. Evaluation of three-dimensional volumetric changes after sinus floor augmentation with mineralized cortical bone allograft. J. Maxillofac. Oral Surg. 14, 624–629. https://doi.org/10.1007/s12663-014-0736-3 (2015).

Starch-Jensen, T. & Jensen, J. D. Maxillary sinus floor augmentation: A review of selected treatment modalities. J. Oral Maxillofac. Res. 8, e3. https://doi.org/10.5037/jomr.2017.8303 (2017).

Xu, J. et al. Automatic CT image segmentation of maxillary sinus based on VGG network and improved V-Net. Int. J. Comput. Assist. Radiol. Surg. 15, 1457–1465. https://doi.org/10.1007/s11548-020-02228-6 (2020).

Venkatesh, E. & Elluru, S. V. Cone beam computed tomography: Basics and applications in dentistry. J. Istanb. Univ. Fac. Dent. 51, S102–S121. https://doi.org/10.17096/jiufd.00289 (2017).

Carter, J. B., Stone, J. D., Clark, R. S. & Mercer, J. E. Applications of cone-beam computed tomography in oral and maxillofacial surgery: An overview of published indications and clinical usage in United States Academic Centers and Oral and Maxillofacial Surgery Practices. J. Oral Maxillofac. Surg. 74, 668–679. https://doi.org/10.1016/j.joms.2015.10.018 (2016).

Scarfe, W. C., Farman, A. G., Levin, M. D. & Gane, D. Essentials of maxillofacial cone beam computed tomography. Alpha Omegan 103, 62–67. https://doi.org/10.1016/j.aodf.2010.04.001 (2010).

Bozdemir, E., Gormez, O., Yıldırım, D. & Aydogmus Erik, A. Paranasal sinus pathoses on cone beam computed tomography. J. Istanb. Univ. Fac. Dent. 50, 27–34. https://doi.org/10.17096/jiufd.47796 (2016).

Pauwels, R., Jacobs, R., Singer, S. R. & Mupparapu, M. CBCT-based bone quality assessment: Are Hounsfield units applicable? Dentomaxillofac. Radiol. 44, 20140238. https://doi.org/10.1259/dmfr.20140238 (2015).

Chang, Y.-B. et al. 3D segmentation of maxilla in cone-beam computed tomography imaging using base invariant wavelet active shape model on customized two-manifold topology. J. Xray Sci. Technol. 21, 251–282. https://doi.org/10.3233/XST-130369 (2013).

Wang, L. et al. Automated segmentation of dental CBCT image with prior-guided sequential random forests. Med. Phys. 43, 336–336. https://doi.org/10.1118/1.4938267 (2016).

Tingelhoff, K. et al. Analysis of manual segmentation in paranasal CT images. Eur. Arch. Otorhinolaryngol. 265, 1061–1070. https://doi.org/10.1007/s00405-008-0594-z (2008).

Tingelhoff, K. et al. Comparison between manual and semi-automatic segmentation of nasal cavity and paranasal sinuses from CT images. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2007, 5505–5508. https://doi.org/10.1109/iembs.2007.4353592 (2007).

Hung, K., Montalvao, C., Tanaka, R., Kawai, T. & Bornstein, M. M. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofac. Radiol. 49, 20190107. https://doi.org/10.1259/dmfr.20190107 (2020).

Hung, K., Yeung, A. W. K., Tanaka, R. & Bornstein, M. M. Current applications, opportunities, and limitations of AI for 3D imaging in dental research and practice. Int. J. Environ. Res. Public Health 17, 4424. https://doi.org/10.3390/ijerph17124424 (2020).

Lee, J. G. et al. Deep learning in medical imaging: General overview. Korean J. Radiol. 18, 570–584. https://doi.org/10.3348/kjr.2017.18.4.570 (2017).

Schwendicke, F. et al. Artificial intelligence in dental research: Checklist for authors, reviewers, readers. J. Dent. 107, 103610. https://doi.org/10.1016/j.jdent.2021.103610 (2021).

Bornstein, M. M. et al. An analysis of frequency, morphology, and locations of maxillary sinus septa using cone beam computed tomography. Int. J. Oral Maxillofac. Implants 31, 280–287. https://doi.org/10.11607/jomi.4188 (2016).

Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T. & Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016 424–432 (Springer, 2016).

Wu, Y. & He, K. Group normalization. Int. J. Comput. Vision 128, 742–755. https://doi.org/10.1007/s11263-019-01198-w (2020).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. Preprint at arXiv:1412.6980 (2015).

Zhang, D. et al. An efficient approach to directly compute the exact Hausdorff distance for 3D point sets. Integr. Comput. Aid. Eng. 24, 261–277. https://doi.org/10.3233/ICA-170544 (2017).

Taha, A. A. & Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med. Imaging 15, 29. https://doi.org/10.1186/s12880-015-0068-x (2015).

Kwak, S. G. & Kim, J. H. Central limit theorem: The cornerstone of modern statistics. Korean J. Anesthesiol. 70, 144–156. https://doi.org/10.4097/kjae.2017.70.2.144 (2017).

Cellina, M. et al. Segmentation procedures for the assessment of paranasal sinuses volumes. Neuroradiol. J. 34, 13–20. https://doi.org/10.1177/1971400920946635 (2021).

Bui, N. L., Ong, S. H. & Foong, K. W. C. Automatic segmentation of the nasal cavity and paranasal sinuses from cone-beam CT images. Int. J. Comput. Assist. Radiol. Surg. 10, 1269–1277 (2014).

Neelapu, B. C. et al. A pilot study for segmentation of pharyngeal and sino-nasal airway subregions by automatic contour initialization. Int. J. Comput. Assist. Radiol. Surg. 12, 1877–1893. https://doi.org/10.1007/s11548-017-1650-1 (2017).

Ham, S. et al. Medical Imaging with Deep Learning (Springer, 2018).

Hung, K. F. et al. Automatic detection and segmentation of morphological changes of the maxillary sinus mucosa on cone-beam computed tomography images using a three-dimensional convolutional neural network. Clin. Oral Invest. https://doi.org/10.1007/s00784-021-04365-x (2022).

Jung, S. K., Lim, H. K., Lee, S., Cho, Y. & Song, I. S. Deep active learning for automatic segmentation of maxillary sinus lesions using a convolutional neural network. Diagnostics https://doi.org/10.3390/diagnostics11040688 (2021).

Schwendicke, F., Samek, W. & Krois, J. Artificial intelligence in dentistry: chances and challenges. J. Dent. Res. 99, 769–774. https://doi.org/10.1177/0022034520915714 (2020).

Funding

Open access funding provided by Karolinska Institute.

Author information

Contributions

N.M., H.W. and R.J. conceptualized and designed the study. N.M. collected, curated, and segmented the dataset and performed the statistical analysis. A.V.G. and A.S. curated and analyzed the data, trained the CNN model, and provided the results. N.M. and K.F.V. performed the clinical evaluation and participated in writing the manuscript. All authors reviewed the manuscript. H.W. and R.J. provided resources and supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Morgan, N., Van Gerven, A., Smolders, A. et al. Convolutional neural network for automatic maxillary sinus segmentation on cone-beam computed tomographic images. Sci Rep 12, 7523 (2022). https://doi.org/10.1038/s41598-022-11483-3

DOI: https://doi.org/10.1038/s41598-022-11483-3
