Abstract

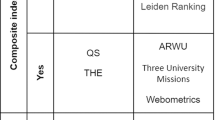

University rankings are increasingly adopted for academic comparison and success quantification, and even to establish performance-based criteria for funding allocation. However, rankings are not neutral tools, and their use frequently overlooks disparities in the starting conditions of institutions. In this research, we detect and measure structural biases that unevenly affect the ranking outcomes of universities from diverse territorial and educational contexts. Moreover, we develop a fairer rating system based on a fully data-driven debiasing strategy that returns an equity-oriented redefinition of the achieved scores. The key idea consists in partitioning universities into similarity groups, determined from multifaceted data using complex network analysis, and referring the performance of each institution to an expectation based on its peers. Significant evidence of territorial biases emerges for official rankings of both the OECD and the Italian university systems; debiasing therefore provides relevant insights for the design of fairer strategies for performance-based funding allocation.


Introduction

Far from being mere educational institutions, universities play a prominent role in the education of citizens, scientific and technological progress, and the elaboration of new models of economy and society, which makes them drivers of development1. Universities contribute to society both on an individual scale, as they often represent one of the most effective social elevators, and on a collective scale, as they build knowledge to face global challenges, with particular and growing attention to those related to the sustainable development goals2. The rise in relevance of rankings of academic institutions is a rather recent phenomenon, which emerged in the late 1980s3, mainly driven by potential students' demand for information on academic quality, in turn triggered by the worldwide expansion of access to higher education4. However, rankings have become an increasingly internalized tool for comparison and success quantification far beyond the matter of students' choice, influencing researchers, employers and, most relevantly, academic evaluators and companies.

University rankings are compiled taking into account the variety of missions that higher education is called to fulfill, which are not limited to teaching and research, but also involve the so-called “third mission”5 or “knowledge transfer”6,7, namely the impact of academic activity on the community in which the institution is embedded. Efficient higher education institutions can prompt a spillover process in which regions accumulate knowledge and human resources, which can in turn foster their economic progress8,9,10,11. Therefore, the evaluation of academic activity should be configured as a multi-purpose assessment12, which takes into account not only the scientific impact, but also the benefits brought to the territory, measured by an empirical quantification of third mission outcomes13.

Rankings, whatever they are meant to measure, are not neutral tools, and their use has a series of relevant drawbacks. Problematic aspects mainly stem from the positive feedback between the prestige of an institution, certified by its ranked score, and the possibility to receive public funding on an awarding basis or to attract investments by private companies12,14,15. The strong causal relation between ranking outcomes and funding triggers undesired phenomena. The first problem is reactivity to rankings, namely the development of adaptation strategies to gain competitive advantages with respect to the evaluation criteria, leading to academic conformism12,16,17,18,19,20. Another critical aspect is represented by territorial biases, which reward universities located in advantageous socioeconomic contexts8,21,22,23,24,25; such contexts can be, for example, more receptive than others to third mission activities. The third issue is the onset of a “Matthew effect”, which, through the feedback between ranking and funding, consolidates existing gaps in third mission26, internationalization27,28, research29,30, scholarships31, and even the diffusion of scientific ideas32.

Lately, rankings have prompted deep changes in the higher education system, affecting resource distribution, decision making and status definition3,16,33,34. However, they fail to capture individual specificities and tend to marginalize parts of the academic community whose distinctive traits do not fit the general rating framework31,35. Most importantly, the indicators used to compile university rankings can be affected by biases related to the specific institutional mission and to territorial features, such as the presence of human resources, stakeholders, and a vital industrial background. Although their methodology is criticized and their overall role is questioned36, rankings nowadays represent a consolidated evaluation framework, mainly due to their simplicity and practicality, combined with the lack of suitable alternatives37. On the one hand, students reasonably require “absolute” ratings of universities, to aim for the best affordable education and to maximize the chances of good employment in a wealthy environment after graduation. On the other hand, a different kind of ranking, in which the effects of structural factors are mitigated, can be relevant for academic evaluators and policy makers. Such redefined rankings could help identify both virtuous cases of outstanding institutions emerging in a difficult context and cases in which performance is below expectations, and which therefore require intervention. It is therefore urgent to define transparent, data-driven, shared and reproducible procedures to evaluate academic performance, taking into account the effect of structural features, such as the territorial embedding of universities and their educational mission.

The objectives of our research are: (1) analyzing university rankings in order to detect biases determined by either the territorial context or the educational offer; (2) quantifying the effect of these biases on the performance of each university; (3) defining a new score in which biases are mitigated. We remark that the expressions “unbiased”, “debiased/debiasing” and “fairer” used in the following refer exclusively to the kinds of bias considered in our work, namely those related to territorial features and educational offer. To achieve these goals, we follow a vision that stems from the concept of economic complexity38,39,40,41, according to which the performance of a country in a given economic task results from several underlying context variables, including intangible assets. To extend this idea to our problem, we model the academic environment as a complex system, in which interconnections among its elements give rise to similarities and properties in an unsupervised and data-driven way. Complex network theory provides the mathematical machinery to formalize such a description42,43,44,45, and represents a multidisciplinary tool increasingly used to investigate real-world systems consisting of nontrivially interconnected constituents. Application fields include economics41,46,47,48,49,50, human mobility51,52, neuroscience53,54,55,56, and genetics57,58, to mention just a few. Moreover, recent studies are endowing the complex network toolbox with new instruments, such as multilayer networks59 and network potentials60,61. Complex network methods have already been applied to ranking analysis, to formalize the detection of competitiveness patterns among sport teams62 and universities63, and to encompass multifaceted context-based information in the evaluation of the performance achieved by countries64.

In this work, we construct network models to analyze two case studies: the set of universities from member countries of the Organization for Economic Co-operation and Development (OECD) appearing in the 2021 Times Higher Education (THE) rankings65, and the Italian tertiary education system, surveyed through the rankings compiled by CENSIS (Centro Studi Investimenti Sociali) for the academic year 2019/202066. The motivation for studying the Italian university environment as a national case is rooted in the geographical polarization of the country between wealthy regions in the north and struggling ones in the south. In particular, more than one fourth of the Italian population lives in one of the four “convergence regions”, whose GDP per capita is lower than 75% of the European Union average. Since, nevertheless, university evaluation criteria are uniform throughout the nation, it is reasonable to expect some sort of bias in rankings.

In both the OECD and the Italian case, we construct two complex networks, based on territorial similarity and educational offer similarity, respectively. We first determine whether institutions located in similar territories, or providing analogous educational offers, tend to achieve comparable scores in a given ranking. Since our findings reveal a bias in many specific rankings, we construct a suitable unbiased “reference system”, determined in an unsupervised way, to evaluate a university’s performance. The rationale of this approach is to detect those institutions that share common features and can therefore be fairly compared. Finally, we introduce a quantifier of the bias affecting each single university, and define for each index a new, fairer ranking in which the bias is mitigated. We present the details of our findings in the “Results” section and comment on their implications in the “Discussion”. The “Materials and methods” section contains technical details on data collection, network construction, and the implementation of the complex-network methods.

Results

Figure 1. Scheme representing the workflow of the analysis. Indicators of territorial features (used to construct a network of subnational areas) and information on the educational offer of academic institutions constitute the basis to build a territorial network and an educational offer network of universities, respectively. Network analysis enables the detection and quantification of biases in university rankings, providing the information needed to define a new score for a fair evaluation.

The goals of this work are detecting structural biases in university rankings, measuring their impact on scores and defining a debiasing strategy to develop a fairer rating system. A scheme of the workflow implemented in the present research is displayed in Fig. 1. We model the university environment as a pair of complex networks, in which connections are determined by the similarity of territorial features and of educational offers, respectively. Evidence of biases emerges from this framework for a variety of official rankings. To quantify the effects of these biases on the outcomes achieved by single universities, we partition the academic ecosystem into homogeneous groups through community detection algorithms, and perform comparisons among peers within each group. This approach allows us to define fairer scores, in which the detected bias is decoupled from performance evaluation. In this section, we describe the main findings of our analysis, separating the presentation into two case studies: the former concerning the best-ranked universities of OECD countries, and the latter focusing on the whole ecosystem of Italian universities.

OECD university networks

We consider 1088 universities, ranked on the THE website65 and distributed in 343 OECD subregions at a subnational scale (see “Materials and methods” for details). The THE ranking measures the performance of universities on a global scale by providing an overall rating that combines different scores related to the fundamental aspects of the university mission: teaching, research, citations, industry income and international outlook. To analyze the performance achieved by the institutions in the light of their starting conditions, we set up two different networks: (i) a territorial network, determined by the features of the region in which each institution is based, thus encoding information that is external to the academic system; (ii) an educational offer network, which incorporates internal features of the academic environment.

Territorial OECD university network

To highlight similarities and differences between territories, the most natural choice is to build a subnational area network, whose nodes coincide with the aforementioned 343 OECD subregions. Affinity among territories is determined from 97 indicators on geography, economy and society, and modeled by means of weighted edges. A pair of subregions is connected by an edge if the Pearson correlation between their respective sets of territorial indicators is statistically significant; the procedure, described in detail in “Materials and methods”, returns 29,415 edges, weighted by the correlation itself. Starting from the OECD subnational area network, we construct the territorial university network proper, consisting of 1088 nodes representing universities and 351,186 edges between them, which reflect the similarity between the corresponding subregions. Edges and weights of the territorial network are assigned according to the following criterion: universities located in two regions connected by a weighted edge in the subnational area network are connected by an edge of the same weight; different universities located in the same region are connected by an edge of unit weight. In this way, we embed the university network in the territorial context.
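The two-step construction just described can be sketched in Python as follows. This is a minimal, hypothetical illustration rather than the authors' code: the function names, the significance level `alpha=0.05`, the choice to keep only positive correlations, and the Fisher z-transform p-value (a normal approximation standing in for the test specified in “Materials and methods”) are all our assumptions. Indicators are assumed to be standardized across subregions before correlating.

```python
import math
from itertools import combinations

def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fisher_p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0 (Fisher z-transform).
    Assumption: a stand-in for the exact test used in the paper."""
    r = max(min(r, 0.999999), -0.999999)  # keep atanh finite
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

def subregion_network(indicators, alpha=0.05):
    """indicators: {region: [standardized indicator values, same order for all]}.
    Returns {(a, b): r} for significantly and positively correlated pairs."""
    edges = {}
    for a, b in combinations(sorted(indicators), 2):
        x, y = indicators[a], indicators[b]
        r = pearson(x, y)
        if r > 0 and fisher_p_value(r, len(x)) < alpha:  # positive-only: assumption
            edges[(a, b)] = r
    return edges

def university_network(region_of, region_edges):
    """Lift region edges to universities: same region -> weight 1,
    linked regions -> the region edge weight, otherwise no edge."""
    edges = {}
    for u, v in combinations(sorted(region_of), 2):
        ru, rv = region_of[u], region_of[v]
        if ru == rv:
            edges[(u, v)] = 1.0
        else:
            key = (ru, rv) if ru < rv else (rv, ru)
            if key in region_edges:
                edges[(u, v)] = region_edges[key]
    return edges
```

The lifting step is what makes the university network inherit the territorial similarity: two universities are only as similar as the regions hosting them, and co-located universities are treated as maximally similar.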

Educational offer OECD university network

The second international university network is constructed from the educational offer of the same 1088 universities that appear in the THE rankings. Its 539,305 edges between pairs of institutions are weighted by a measure of the overlap between their educational offers. Since the presence of a given sector in the educational offer of an institution is a binary variable, we use the Dice index to quantify such an overlap. The implementation of this procedure is described in detail in “Materials and methods”.
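For two binary offer profiles A and B, the Dice index is 2|A ∩ B| / (|A| + |B|), ranging from 0 (no shared sectors) to 1 (identical offers). The sketch below assumes each university's offer is stored as a set of subject areas; the area labels and the rule of creating no edge for zero overlap are hypothetical choices for illustration.

```python
from itertools import combinations

def dice_index(a, b):
    """Dice similarity 2|A & B| / (|A| + |B|) between two sets of subject areas."""
    if not a and not b:
        return 0.0
    return 2 * len(a & b) / (len(a) + len(b))

def offer_network(offers):
    """offers: {university: set of subject areas}. Returns {(u, v): Dice weight}."""
    edges = {}
    for u, v in combinations(sorted(offers), 2):
        w = dice_index(offers[u], offers[v])
        if w > 0:  # assumption: pairs with no shared area get no edge
            edges[(u, v)] = w
    return edges

offers = {
    "U1": {"engineering", "computer science"},
    "U2": {"engineering", "economics", "law"},
    "U3": {"health"},
}
print(offer_network(offers))  # {('U1', 'U2'): 0.4}
```

Compared with a plain count of shared sectors, the Dice normalization keeps small, specialized institutions from being penalized simply because they offer fewer sectors.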

Bias detection in THE rankings through assortativity analysis

We begin the analysis of OECD university networks by investigating whether the similarity among THE scores is related to the similarity, in terms of either territory or educational offer, among the institutions that achieve them. The tendency in a network to connect nodes with similar attribute values is quantified by assortativity (see “Materials and methods” section for definition and characterization). As reported in the first row of Table 1, the territorial network is assortative with respect to the THE overall score. This finding represents strong evidence of an evaluation bias related to the geographical context: universities based in similar territories tend to achieve comparable scores. Furthermore, the territorial network is assortative in a statistically significant way with respect to all the specific THE ranking dimensions, with the largest values registered for THE citations and THE international outlook. In the case of the educational offer network, instead, even the few significant values are negligible. This finding suggests that educational specificities do not play a relevant role in determining the outcome of an academic institution in the ranking. A qualitative manifestation of these different tendencies can be observed in the scatter plots shown in Supplementary Fig. S1.
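As a minimal sketch, assortativity with respect to a scalar node attribute can be computed as the weighted Pearson correlation between the attribute values at the two ends of each edge, with each undirected edge counted in both directions. We assume this standard weighted generalization of Newman's coefficient and a node-label permutation test for significance; the exact definition and test used in the paper are given in “Materials and methods” and may differ.

```python
import random

def weighted_assortativity(edges, x):
    """Weighted Pearson correlation of attribute x across the ends of undirected
    edges {(u, v): w}; each edge is counted in both directions with weight w."""
    W = sum(edges.values())
    e_xy = sum(w * x[u] * x[v] for (u, v), w in edges.items()) / W
    e_x = sum(w * (x[u] + x[v]) for (u, v), w in edges.items()) / (2 * W)
    e_x2 = sum(w * (x[u] ** 2 + x[v] ** 2) for (u, v), w in edges.items()) / (2 * W)
    return (e_xy - e_x ** 2) / (e_x2 - e_x ** 2)

def permutation_p_value(edges, x, n_perm=999, seed=0):
    """Two-sided p-value: share of random reassignments of attribute values to
    nodes whose |assortativity| is at least the observed one (assumed test)."""
    rng = random.Random(seed)
    observed = abs(weighted_assortativity(edges, x))
    nodes = list(x)
    values = [x[n] for n in nodes]
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(values)
        if abs(weighted_assortativity(edges, dict(zip(nodes, values)))) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)
```

A value near +1 means that strongly linked universities (e.g. those in similar territories) have similar scores, which is the signature of bias discussed above; a value near 0 means the network structure carries no information about the score.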

Communities in OECD university networks

Community detection makes it possible to group universities that share homogeneous features in a network built from mutual similarity relations44,45, providing the basis for a comparison among peers. It is therefore the crucial step to go from bias detection to bias quantification. The application of hierarchical community detection (see “Materials and methods” for implementation details) to the territorial network provides the following optimal partition:

- OT1a: 80 universities in Argentina, Brazil, Colombia, Costa Rica, Mexico, Peru;
- OT1b: 78 universities in Chile, Mexico, Turkey, United States (south-east);
- OT2a: 430 universities in Australia, Western Europe, Israel, Japan, New Zealand;
- OT2b: 241 universities in Canada, United States, Australia, New Zealand, Japan, Estonia, Spain (Balearic Islands);
- OT3a: 122 universities in Eastern Europe, Korea;
- OT3b: 137 universities in Southern and Central Europe, Korea.

The geographical distribution of the above communities is reported in Supplementary Fig. S2, generated with the MapChart online tool67. As for the educational offer network, hierarchical community detection returns:

- OE1a: 128 universities with predominant engineering and computer science areas, and underrepresented humanities, health and social science areas;
- OE1b: 173 universities with predominant science, engineering and economics areas;
- OE2a: 221 universities with a very generalized range of educational offer, not including veterinary science;
- OE2b: 206 universities with a very generalized range of educational offer, differing from OE2a in the overrepresentation of the “veterinary science” and “agriculture and forestry” areas, present in 100% and 92% of institutions, respectively;
- OE3a: 179 universities with a generalized range of educational offer, but underrepresented engineering areas;
- OE3b: 48 universities with an educational offer strongly focused on the health area;
- OE3c: 133 universities with predominant economics, humanities and social science areas.

It is worth noticing that, in about two thirds of cases, the underrepresentation of engineering in the universities belonging to community OE3a is associated with the presence of a technical university (belonging to communities OE1a-OE1b) in the same territory. The community nomenclature for both the territorial and educational offer networks is explained in “Materials and methods”. The full list of universities with their community membership is reported in Supplementary Data S1, while numerical details of hierarchical community detection and information on the intermediate-level partitions are provided in Supplementary Sect. 1.2.
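The text does not state which quality function the hierarchical community detection optimizes. If, as is common, it is modularity, a candidate partition of a weighted network is scored as Q = Σ_c [L_c / m − (d_c / 2m)²], where L_c is the total weight of edges inside community c, d_c the total strength of its nodes and m the total edge weight. The following sketch computes Q for a given partition; the function name and data layout are our own, and the use of modularity is an assumption.

```python
from collections import defaultdict

def weighted_modularity(edges, community):
    """Newman's weighted modularity of a partition.
    edges: {(u, v): w} undirected; community: {node: label}."""
    two_m = 2 * sum(edges.values())
    strength = defaultdict(float)   # weighted degree of each node
    internal = defaultdict(float)   # total weight of intra-community edges
    for (u, v), w in edges.items():
        strength[u] += w
        strength[v] += w
        if community[u] == community[v]:
            internal[community[u]] += w
    total = defaultdict(float)      # total strength of each community
    for node, s in strength.items():
        total[community[node]] += s
    return sum(2 * internal[c] / two_m - (total[c] / two_m) ** 2 for c in total)

# Two triangles joined by a single bridge edge (2, 3)
edges = {(0, 1): 1, (1, 2): 1, (0, 2): 1, (3, 4): 1, (4, 5): 1, (3, 5): 1, (2, 3): 1}
split = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
print(weighted_modularity(edges, split))  # 5/14, about 0.357
```

A hierarchical scheme would then re-apply the same optimization inside each community found at the first level, which is one plausible reading of labels such as OT1a and OT1b.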

Quantifying biases and redefining scores in THE rankings

Community membership represents the main tool to quantify biases and fairly evaluate the performance of academic institutions in rankings. Once we have clustered universities in homogeneous OT and OE communities, we introduce new indicators that highlight the relationship between the success of an institution in rankings and either the territory where it is based or its educational offer.

For a given ranked index I, we associate with each university u two debiasing parameters \(\delta _T(u)\) and \(\delta _E(u)\), referred to the territorial network and to the educational offer network, respectively. These parameters evaluate the performance of an institution by comparison with the rest of the community it belongs to. For each university u belonging to the territorial community \(C_T\) and to the educational offer community \(C_E\), we define the debiasing parameters with respect to a ranked index I as

\[
\delta _S(u) = I(u) - \frac{\sum _{v \in C_S,\, v \ne u} w_{uv}^S\, I(v)}{\sum _{v \in C_S,\, v \ne u} w_{uv}^S}, \qquad S = T, E, \tag{1}
\]

namely as the difference between the index value I(u) of the considered institution and the average of the indexes I(v) over the rest of the community, weighted by the (u, v) edge weight \(w_{uv}^S,\, (S=T,E)\) of the considered network. Notice that community peers that are weakly connected to u give a negligible contribution to the average appearing in Eq. (1). A positive (negative) value of the debiasing parameter indicates a better (worse) performance than the average expectation for similar universities, in terms of either territory or educational offer. Debiasing parameters do not represent per se a measure of bias. Their joint distribution, instead, can highlight systematic advantages for members of specific communities in a given ranked index. The scatter plot of \((\delta _T,\delta _E)\) values related to the THE overall score in Fig. 2 shows an evident grouping by territorial communities. No analogous arrangement appears if points in the scatter plot are partitioned according to their educational offer community membership.
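The debiasing parameter is the score of u minus the edge-weighted mean score of the other members of its community, so it can be computed in a few lines. In this hypothetical sketch, weights are stored in a dict keyed by sorted node pairs, missing pairs count as zero weight, and a university with no connected peers gets `nan`; these conventions are our assumptions, not the paper's.

```python
import math

def debiasing_parameter(u, index, community, weights):
    """delta_S(u) = I(u) minus the weighted mean of I(v) over the other members
    of u's community in network S.
    index: {university: score}; community: {university: label};
    weights: {(a, b): w} with a < b; absent pairs count as weight 0."""
    num = den = 0.0
    for v, label in community.items():
        if v == u or label != community[u]:
            continue
        w = weights.get((u, v) if u < v else (v, u), 0.0)
        num += w * index[v]
        den += w
    if den == 0:
        return math.nan  # no connected peers: undefined (assumed convention)
    return index[u] - num / den

index = {"A": 80, "B": 70, "C": 40, "D": 10}
community = {"A": "c1", "B": "c1", "C": "c1", "D": "c2"}
weights = {("A", "B"): 1.0, ("A", "C"): 0.5, ("A", "D"): 1.0}
print(debiasing_parameter("A", index, community, weights))  # 80 - (70*1 + 40*0.5)/1.5 = 20.0
```

Note that D is ignored when evaluating A even though they are linked, because it belongs to a different community: the comparison is restricted to peers.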

Figure 2. Territorial bias in THE overall ranking. In this scatter plot, each dot corresponds to an OECD university, and its coordinates along the horizontal and vertical axes represent the debiasing parameters. The values \(\delta _T\) and \(\delta _E\) assess the results achieved by the institution in the THE overall ranking by comparison with the rest of the OT and OE communities it belongs to, respectively. Each dot is colored according to its OT community membership. The arrangement of different colors in the scatter plot indicates the presence of a territorial bias. The arrows indicate the directions of the principal components PC1 (positive slope) and PC2 (negative slope), with lengths proportional to the corresponding standard deviations.

The distribution of \((\delta _T,\delta _E)\) values in Fig. 2, as well as those obtained from the sectorial THE dimensions, reported in Supplementary Fig. S3, indicates that the grouping by territorial communities mostly occurs along a direction orthogonal to that of maximal variance. To quantify this tendency, isolate the territorial bias affecting university rankings, and define a fairer rating, we determine the principal components of the distributions in the \((\delta _T,\delta _E)\) planes for all THE scores. As we show below, the first principal component (PC1) represents a redefined ranking in which geographical influence is mitigated, while the second one (PC2) provides a measure of the territorial dragging effect on the performance in the original ranking. To support this interpretation, we discuss in detail the case of THE overall. Here, the component PC2, accounting for 8.6% of the total variance, is markedly anticorrelated (Pearson correlation \(-0.523\), with \(p<10^{-9}\) from the exact distribution test) with the GDP per capita PPP (Gross Domestic Product per capita at Purchasing Power Parity) of the OECD subregions where the main seats of the universities are located. This result indicates that the arrangement of territorial communities in Fig. 2 follows a wealth hierarchy: universities with higher values of PC2 experience the most detrimental effect on their performance due to a disadvantaged territorial context. On the other hand, PC1, accounting for 91.4% of the total variance, shows a weaker positive correlation (0.367, with \(p<10^{-9}\) from the exact distribution test) with the GDP per capita PPP. This outcome should be compared with the Pearson correlation between the GDP and the original ranking (0.481, with \(p<10^{-9}\)), which is intermediate between the absolute values of the correlations with PC1 and PC2.
This property is shared by all the sectorial THE dimensions, as shown in Supplementary Table S1. Most importantly, the assortativity of the territorial university network with respect to PC1 (0.054) is significantly reduced compared to that of the original ranking, showing that PC1 represents a fairer rating system, in which the effect of the territorial bias is substantially mitigated. Indeed, the parametrization of points in Fig. 2 in terms of the principal components incorporates most of the bias in PC2: the assortativity of the territorial university network with respect to this direction (0.227) is about twice the value obtained for the original ranking. This behavior is likewise common to all THE dimensions (Supplementary Table S1). The top ten performers in PC1 and PC2 for THE overall are displayed in Tables 2 and 3, respectively, while the full lists, including the principal component values with respect to each ranking dimension, are contained in Supplementary Data S2.
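Because the data live in a plane, the principal components of the \((\delta _T,\delta _E)\) cloud follow from the closed-form eigen-decomposition of a 2×2 covariance matrix. The sketch below is our own minimal illustration: the sign convention (PC1 pointing into the (+, +) quadrant, PC2 growing with \(\delta _T\) and falling with \(\delta _E\), i.e. the negative-slope direction) is an assumption chosen to match the arrows described in the figure caption, not a rule stated in the text.

```python
import math

def pca_2d(delta_t, delta_e):
    """Principal components of the points (delta_t[i], delta_e[i]).
    Returns (pc1_scores, pc2_scores, explained_share_pc1, v1, v2)."""
    n = len(delta_t)
    mt, me = sum(delta_t) / n, sum(delta_e) / n
    xt = [a - mt for a in delta_t]
    xe = [b - me for b in delta_e]
    # entries of the sample covariance matrix [[a, c], [c, d]]
    a = sum(v * v for v in xt) / (n - 1)
    d = sum(v * v for v in xe) / (n - 1)
    c = sum(p * q for p, q in zip(xt, xe)) / (n - 1)
    # closed-form eigenvalues of a symmetric 2x2 matrix
    half_trace = (a + d) / 2
    root = math.sqrt(((a - d) / 2) ** 2 + c ** 2)
    lam1, lam2 = half_trace + root, half_trace - root
    # (lam1 - d, c) is an eigenvector of lam1 whenever c != 0
    v1 = (lam1 - d, c) if c != 0 else ((1.0, 0.0) if a >= d else (0.0, 1.0))
    norm = math.hypot(v1[0], v1[1])
    v1 = (v1[0] / norm, v1[1] / norm)
    if v1[0] + v1[1] < 0:          # assumed convention: PC1 points to (+, +)
        v1 = (-v1[0], -v1[1])
    v2 = (v1[1], -v1[0])           # orthogonal, negative-slope direction
    pc1 = [p * v1[0] + q * v1[1] for p, q in zip(xt, xe)]
    pc2 = [p * v2[0] + q * v2[1] for p, q in zip(xt, xe)]
    return pc1, pc2, lam1 / (lam1 + lam2), v1, v2
```

The explained share returned here is the analogue of the 91.4% (PC1) and 8.6% (PC2) split quoted above; the PC2 scores could then be correlated with a wealth indicator, or fed back into the assortativity computation, to check the interpretation.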

The quartile of universities with the largest improvements in PC1 with respect to the original ranking is composed of 97 universities from community OT3a, 70 from OT1a, 68 from OT1b, 26 from OT3b, 9 from OT2a, and none from OT2b. This result shows how shifting from the original ranking to PC1 highlights the performance of universities that emerge in comparatively difficult development contexts. One of the merits of the PC1 redefined ranking is therefore the recognition of universities that, despite being located in relatively disadvantaged territories, achieve good results compared to their peers in both their territorial and educational offer communities.
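One way to obtain such a breakdown is sketched below, assuming that "improvement" means the gain in rank position when moving from the original score to PC1 (the text does not specify whether ranks or scores are compared, and the tie-breaking rule is also our assumption). The university names and community labels are hypothetical.

```python
from collections import Counter

def ranks(scores):
    """Rank positions (1 = best) for {university: score}; higher score is better.
    Ties are broken alphabetically (assumption)."""
    order = sorted(scores, key=lambda u: (-scores[u], u))
    return {u: i + 1 for i, u in enumerate(order)}

def top_quartile_improvers(original, pc1, community):
    """Count community labels among the quarter of universities whose rank
    improves most when the original score is replaced by PC1."""
    r0, r1 = ranks(original), ranks(pc1)
    gain = {u: r0[u] - r1[u] for u in original}   # positive = moved up
    k = len(gain) // 4
    best = sorted(gain, key=lambda u: (-gain[u], u))[:k]
    return Counter(community[u] for u in best)

original = {f"u{i}": 9 - i for i in range(1, 9)}   # u1 best, u8 worst
pc1 = {f"u{i}": i for i in range(1, 9)}            # order fully reversed
community = {u: ("south" if u in ("u7", "u8") else "north") for u in original}
print(top_quartile_improvers(original, pc1, community))  # Counter({'south': 2})
```

Comparing rank positions rather than raw values sidesteps the fact that PC1 and the original score live on different scales.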

Italian university networks

The second part of the analysis is dedicated to the Italian university environment as a whole. The system consists of 92 institutions of diverse size and educational target. The Italian university rankings examined in our research are compiled by CENSIS64 with the aim of guiding the choices of prospective students. Given this target, these rankings evaluate aspects that have a relevant impact on the quality of students’ academic experience, such as service provision, availability of scholarships and facilities, communication through digital instruments, internationalization, and employability of graduates. The results for each specific dimension are combined into a global score (CENSIS overall). Following a procedure similar to the OECD case, we investigate in depth the connection between the scores achieved by Italian academic institutions in CENSIS rankings and both their territorial features and their educational offer.

Territorial Italian university network

The analysis starts from a network of subnational areas corresponding to the Italian provinces (province), a statistical subdivision of the country of outstanding relevance, intermediate between regions and municipalities. The 53 provinces in which the Italian universities are located constitute the nodes of the subnational area network, in which 854 weighted edges are constructed according to a similarity criterion, quantified by the Pearson correlation between 121 socio-economic indicators (see “Materials and methods” for details). The territorial university network proper, in which universities are encoded as nodes, is constructed in the same way as in the OECD case, and consists of 92 nodes and 2396 weighted edges.

Educational offer Italian university network

A second university network is constructed, as in the OECD case, based on the similarity among the educational offers of the different institutions (see “Materials and methods” section for details). Italian higher education ranges from large general-purpose universities to small ones with a very circumscribed offer, through large but specialized ones, such as polytechnics, focused on engineering, and private institutions mainly oriented to economics and law. The educational offer network is made of 92 nodes and 2007 weighted edges.

Bias detection in CENSIS rankings through assortativity analysis

We study the Italian academic system by means of an assortativity analysis analogous to the one implemented in the OECD case. The most relevant result reported in Table 4 is that the territorial network is assortative with respect to the CENSIS overall score, indicating a statistically significant territorial bias, while the assortativity of the educational offer network is negligible. Among the dimensions composing the CENSIS overall ranking, the territorial bias is extremely relevant for employability, an index that has no counterpart among the THE scores, and is also high for international outlook, as in the corresponding THE ranking. As in the international case, no significant assortativity is found for any CENSIS ranking dimension in the educational offer network. The quantitative results on assortativity find a qualitative counterpart in the scatter plots shown in Supplementary Fig. S4.

Communities in Italian university networks

Hierarchical community detection is performed for the Italian university networks, in the same spirit and with the same procedure as in the OECD case. The following partition of the territorial university network is obtained:

- IT1a: 25 universities in center-north provinces with a small administrative center;
- IT1b: 35 universities in center-north provinces, mostly with a large or historically relevant administrative center;
- IT2a: 13 universities in center-south and Sardinia;
- IT2b: 19 universities in the south.

Such a partition reflects once more the long-standing social and economic gap between the north and the south of Italy (see Supplementary Fig. S5)67. As for the educational offer network, community detection provides:

- IE1: 31 small, telematic universities, oriented to law, economics or foreign languages;
- IE2: 44 medium-to-large general-purpose universities;
- IE3: 9 polytechnic and small engineering-oriented universities;
- IE4: 8 research hospitals and health-oriented small universities.

The full list of universities with their community membership in both the territorial and educational offer networks is reported in Supplementary Data S3. The community nomenclature is explained in “Materials and methods”. Numerical details of hierarchical community detection and the intermediate-level partitions that lead to the aforementioned results are reported, for both networks, in the Supplementary Sect. 2.2.

Figure 3. Territorial bias in CENSIS overall ranking. In this scatter plot, each dot corresponds to an Italian university, and its coordinates along the horizontal and vertical axes represent the debiasing parameters. The values \(\delta _T\) and \(\delta _E\) assess the results achieved by the institution in the CENSIS overall ranking by comparison with the rest of the IT and IE communities it belongs to, respectively. Each dot is colored according to its IT community membership. The arrangement of dots and their color distribution indicate the existence of two clusters with a very clear geographical characterization, separated by a gap that represents the territorial bias. The arrows indicate the directions of the principal components PC1 (positive slope) and PC2 (negative slope), with lengths proportional to the corresponding standard deviations.

Quantifying biases and redefining scores in CENSIS rankings

In the Italian case study as well, we use communities and debiasing parameters as the main tools to implement a context-aware assessment of the scores achieved by universities in rankings. The scatter plot in Fig. 3 represents universities in the \((\delta _T,\delta _E)\) plane for the CENSIS overall rating; analogous plots for the sectorial dimensions of the CENSIS ranking are displayed in Supplementary Fig. S6. The arrangement of points in Fig. 3 bears similarities to that shown in Fig. 2 for the OECD case study, but differs in a crucial point: in the \((\delta _T,\delta _E)\) plane of the overall CENSIS rating, two well-separated clusters are present, apparently distributed along parallel lines. These clusters are geographically characterized by a north-south polarization.

The principal components of point distributions in the \((\delta _T,\delta _E)\) plane provide a redefined ranking (PC1) and a measure of the territorial dragging effect (PC2). This interpretation is supported by results analogous to those obtained in the international case. In particular, for CENSIS overall, the component PC2, accounting for 12.8% of the total variance, is strongly anticorrelated with the average per capita available income of the province hosting the main university seat (Pearson correlation \(-0.636\), with \(p=10^{-9}\) from the exact distribution test). On the other hand, PC1, accounting for 87.2% of the total variance, shows a weaker positive correlation with the same wealth indicator (0.405, with \(p=3\cdot 10^{-4}\) from the exact distribution test). Moreover, the assortativity of the territorial network with respect to PC1 (0.113) is strongly mitigated compared to that of the original ranking, while the assortative behavior concentrates on PC2 (0.450). Also in the Italian case, the Pearson correlation between the GDP and the original ranking (0.553, with \(p=3\cdot 10^{-7}\)) is intermediate between the absolute values of the correlations with PC1 and PC2. As reported in Supplementary Table S2, the described phenomenology is found, with few exceptions, in the sectorial CENSIS ranking dimensions. The top ten performers in PC1 and PC2 for CENSIS overall are displayed in Tables 5 and 6, respectively, while the full lists, including the principal component values with respect to each ranking dimension, are contained in Supplementary Data S4. The top quartile of largest improvements from the original ranking to PC1 is composed of 7 universities from community IT2a, 7 from IT2b, 2 from IT1b, and none from IT1a. Therefore, also in the Italian case, the redefined ranking highlights the performance of universities that emerge in comparatively difficult development contexts.

The gap between the two clusters appearing in Fig. 3, measured along the PC2 direction, is numerically relevant: it corresponds to 2.8 times the average standard deviation of the PC2 distributions within each cluster. This result is obtained by quantifying the gap as the distance (0.147) between the two peaks of the PC2 distribution, after checking its consistency with a bimodal Gaussian by means of a Gaussian mixture model (see “Materials and methods” for details). The idea of a systematic separation between clusters is further corroborated by independently checking that the respective distributions in the \((\delta _T,\delta _E)\) plane are fitted by two regression lines that are parallel within their standard errors:

for the upper cluster, and

for the lower one, with the uncertainties determined by 10-fold cross-validation. The average vertical distance \(\Delta \delta _E\) between these lines can be interpreted as a measure of the north-south structural gap separating institutions that perform equally well with respect to their own territory: given a pair of universities belonging to different clusters and characterized by the same \(\delta _T\), regardless of its value, the university in the center-north cluster tends to have a \(\delta _E\) larger by \(\Delta \delta _E = 0.260\) (which amounts to 25.2% of the total range of \(\delta _E\)) than the university in the center-south cluster.
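The parallel-line check and the vertical gap \(\Delta \delta _E\) can be sketched as follows. This is a minimal illustration under two assumptions not spelled out in the text: the cross-validated uncertainty is taken as the spread of the fitted coefficients across the ten training splits, and the "average vertical distance" is averaged over a common \(\delta_T\) interval. The helper names are hypothetical.

```python
import numpy as np


def fit_line_cv(x, y, n_folds=10, seed=0):
    """Fit y = slope * x + intercept on each of the n_folds training splits.

    Returns the mean [slope, intercept] and their standard deviation across
    folds, used here as a rough uncertainty (assumed reading of "10-fold CV").
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    idx = np.random.default_rng(seed).permutation(len(x))
    folds = np.array_split(idx, n_folds)
    coefs = []
    for k in range(n_folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != k])
        coefs.append(np.polyfit(x[train], y[train], 1))   # [slope, intercept]
    coefs = np.array(coefs)
    return coefs.mean(axis=0), coefs.std(axis=0, ddof=1)


def mean_vertical_gap(upper, lower, x_min, x_max, n=200):
    """Average of upper(x) - lower(x) over a uniform grid on [x_min, x_max]."""
    xs = np.linspace(x_min, x_max, n)
    return float(np.mean(np.polyval(upper, xs) - np.polyval(lower, xs)))
```

Two lines count as parallel when their slopes differ by less than the combined uncertainties; for exactly parallel lines the averaged gap reduces to the difference of intercepts.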

Discussion

In this work, we have achieved two relevant results: measuring the impact of the territory on the scores of universities in rankings, and decoupling this bias from the definition of performance, thus developing a fairer rating system. Awareness of the effect of structural inequalities on performance evaluation is increasing, as testified by the many different attempts to detect and mitigate it. On the one hand, rating agencies themselves attempt normalization strategies, which are only partially effective and require independent testing of the residual bias. On the other hand, various studies have used regression methods to investigate the impact of possible underlying structural factors (such as GDP per capita, R&D expenditure and English as a first language, to mention a few) on a university’s score68,69,70,71,72. This approach, recently employed even to perform bias removal73, relies on the choice of a necessarily restricted and arbitrary set of aggregated indicators as possible bias sources. In our work, we overcome this limitation by following the paradigm of economic complexity, which allows us to refer the performance of a university to a multifaceted representation of its context, determined by a large number of indicators. We also remark that our analysis has a higher geographical resolution than the state of the art: in particular, the case of OECD universities is investigated here on a subnational scale, whereas previous research on international rankings employed data available on a national basis.

We are able to determine the bias affecting the score of each single university (PC2), and a fairer ranking (PC1) in which the effect of structural inequalities is substantially mitigated. The quantities PC1 and PC2 are computed from the newly introduced debiasing parameters, which allow us to refer the performance of each university to an expectation based on its peers, namely other universities located in a similar territorial context or providing an analogous educational offer. To the best of our knowledge, the proposed approach represents an unprecedented case of unsupervised and fully data-driven debiasing in rankings.

Universities achieving the largest placement improvements in PC1 with respect to the original overall rankings belong to comparatively disadvantaged territorial communities. Not surprisingly, the same geographical areas host the universities with the highest values of PC2, namely those most negatively affected by the territorial bias. Top performers in PC1 are still based in the most developed communities, indicating that the excellence of some academic institutions persists even after bias removal. Nonetheless, two European universities achieve significant upgrades among the top-10 placements in PC1 for THE overall, and some universities from the south of Italy manage to bridge the gap and appear among the top 10 in PC1 for CENSIS overall.

The analysis reveals that the territorial bias generally affects the score of OECD universities in all THE rankings. This behavior is emphasized in the case of THE international outlook: indeed, the infrastructures and cultural connections that characterize important and wealthy areas provide a much stronger boost to academic internationalization than those of peripheral and poorer zones69,74. Territorial bias is also relevant in THE citations, confirming a Matthew effect already known from previous literature73: the reputational stock of eminent academic institutions, usually embedded in a wealthy context, attracts human and financial resources to foster new high-quality research, but at the same time induces biases in scientific production and paper citations, fueling a self-reinforcing mechanism. By contrast, THE research and THE industry income are characterized by a comparatively low territorial bias: this rather counterintuitive result is due to a normalization with respect to GDP per capita PPP in the index definitions, which partially decouples the bias from performance evaluation.

The case of Italian universities is characterized by the presence of a gap in the distribution of PC2 related to the CENSIS overall ranking and to some specific dimensions (see Supplementary Fig. S6), indicating a neat separation between positively and negatively biased universities. Such a feature has profound and structural reasons, related to the economic, historical and social gap between the north and south of the country. The absence of a similar phenomenology in the international case indicates that OECD subregions are distributed along a more continuous spectrum of development. The Italian north-south polarization becomes particularly striking in the case of CENSIS employability: low employability is, with few exceptions, a widespread problem that graduates from universities based in the south of Italy have to face, and thus a crucial factor in determining internal student mobility. Finally, it is interesting to remark that CENSIS international outlook, like its THE counterpart, is also affected by a strong territorial bias.

In this paper we have observed that the territorial context substantially affects an academic institution’s performance, which, when positive, can foster further improvements through self-reinforcing reward mechanisms. A natural question then arises as to how much the advantageous features of a territory are themselves determined by the presence of an outstanding university. This problem, which requires specific data and further analytic tools, is an interesting perspective for future research.

Materials and methods

Experimental design

The goal of our analysis is to provide an interpretation of the scores of universities in rankings that takes into account both the territory in which they are embedded and their educational targets. Specifically, we aim to shed light on the influence exerted on an academic institution by the context it belongs to. The analysis, replicated for the best-ranked institutions of OECD countries and for the Italian university system, consists of the following steps:

-

collecting (1) values of territorial indicators, balancing data abundance and recency, (2) information on the educational offer of universities, and (3) higher-education rankings;

-

constructing, for both the international (OECD) and the Italian case, a pair of complex networks with nodes representing universities, one based on territorial similarity and the other on educational offer similarity;

-

quantifying the assortativity of each university network with respect to the scores in higher-education rankings;

-

using the unsupervised partition of these networks, obtained by community detection algorithms, to divide the university system into homogeneous groups in terms of territory or educational offer, in order to compare the performance of each institution with that of its peers.

In the following, we detail the implementation of the aforementioned process, leaving more strictly technical aspects to the Supplementary Information S1.

Data collection and preprocessing

Due to their heterogeneous nature, the data employed to construct the networks are collected from different sources. In particular, we use data from the following datasets:

-

OECD subregions data. Territorial indicators are collected from the OECD Regional Statistics database75,76. We associate each university seat with the region it belongs to at Territorial Level 2 (TL2). For data availability reasons, we make exceptions to this rule, considering TL3 regions for Estonia and Latvia. The missing values of infant mortality for the regions of Brazil, and of life expectancy at birth for the regions of Brazil and Argentina, are collected from Global Data Lab (dataset version updated to November 2020), which focuses on emerging economies77. The total number of international indicators is 103. For each indicator and subregion, the most recent available value is used. The full list of OECD subregional indicators is reported in Supplementary Table S3.

-

Educational offer data of the best-ranked universities in the world, scraped from the THE website65. The full list of the educational offer categories available at universities in the THE rankings is reported in Supplementary Table S4.

-

Two datasets, prepared by the Italian National Institute of Statistics (ISTAT), of territorial indicators on welfare & sustainability (Indicatori benessere e sostenibilità)78 and on development policies (Indicatori territoriali per le politiche di sviluppo)79, updated to November 2019. These datasets contain a total of 144 indicators referring to each Italian province, from which we exclude “Average per capita income (EUR)”, since it is used as a wealth benchmark. For each indicator, data from the most recent available year are considered, with missing entries filled, where possible, with values from at most 5 years earlier. The list of indicators along with their reference years is reported in Supplementary Table S5.

-

Data collected by the Italian Ministry of University and Research (MUR) in the survey on the educational offer of Italian universities, indicating which degree categories (classi di laurea), determined by both the degree level and sector, are available at each institution80. The classification of Italian degree categories according to the International Standard Classification of Education (ISCED)81 is reported in Supplementary Table S6.

Indicators for the territorial analysis undergo a selection that eliminates those correlated with another indicator by a Pearson correlation larger than 0.98, as they carry redundant information. In cases of statistical redundancy, we retain the indicator with the wider data availability. This selection leaves 97 and 121 indicators for the territorial analysis of international and Italian universities, respectively. The selected indicators are finally normalized in order to compute the Pearson correlation between different subregions and develop the complex network model. More precisely, before linearly rescaling an indicator to the interval [0, 1], values above its 99th percentile or below its 1st percentile are replaced by those percentiles, in order to mitigate the effect of outliers.
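The preprocessing described above can be sketched as a greedy redundancy filter followed by percentile clipping and min-max rescaling. This is a minimal illustration under stated assumptions: the 0.98 threshold is applied to the absolute correlation, correlations are computed on pairwise-complete observations, and ties in availability are broken by column order. None of these details are specified in the text, and the function names are hypothetical.

```python
import numpy as np


def pairwise_pearson(x, y):
    """Pearson r on the entries observed (non-NaN) in both columns."""
    mask = ~np.isnan(x) & ~np.isnan(y)
    if mask.sum() < 3:
        return 0.0
    return float(np.corrcoef(x[mask], y[mask])[0, 1])


def drop_redundant(data, threshold=0.98):
    """data: (n_regions, n_indicators) array with NaN for missing values.

    Visits indicators in order of decreasing availability and drops any
    indicator whose |r| with an already kept one exceeds the threshold,
    so the better-covered member of a redundant pair survives.
    Returns the sorted indices of the retained columns.
    """
    availability = (~np.isnan(data)).sum(axis=0)
    order = np.argsort(-availability, kind="stable")
    kept = []
    for j in order:
        if all(abs(pairwise_pearson(data[:, j], data[:, k])) <= threshold for k in kept):
            kept.append(int(j))
    return sorted(kept)


def winsorize_and_rescale(column, lower=1.0, upper=99.0):
    """Clip to the [1st, 99th] percentiles, then rescale linearly to [0, 1]."""
    lo, hi = np.nanpercentile(column, [lower, upper])
    clipped = np.clip(column, lo, hi)          # NaN entries stay NaN
    if hi == lo:
        return np.zeros_like(clipped)
    return (clipped - lo) / (hi - lo)
```

The greedy order is one simple way to honour "retain the indicator with the wider data availability"; a different visiting order could retain a different but equally non-redundant subset.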

On the other hand, rankings are retrieved from the following sources:

-

the 2021 rankings of world universities compiled by THE65; while the overall score is publicly provided only for the top 200 positions, the dimensions that contribute to it, namely teaching, research, citations, industry income and international outlook, are available for 1526 universities; the overall score is then computed following the instructions on the THE website; of all the ranked universities, we focus on 1088 institutions, located in OECD countries, for which a reasonable amount of territorial data is available;

-

the academic year 2019/2020 rankings of Italian universities compiled by CENSIS, including, besides an overall score, specific ratings of institutional performance in the areas of services, scholarships, structures, communication and digital services, international outlook, and employability66; data are available for 74 Italian universities, except those on employability, which are not given for 16 private institutions.
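Reconstructing the THE overall score from its five dimensions amounts to a weighted sum. The sketch below assumes the pillar weights published in THE's World University Rankings methodology (teaching 30%, research 30%, citations 30%, international outlook 7.5%, industry income 2.5%) as the "instructions" mentioned above; the dictionary keys and the function name are hypothetical, and any additional steps THE applies are not reproduced.

```python
# Assumed pillar weights from THE's published World University Rankings
# methodology; keys are hypothetical names for the five dimensions.
THE_WEIGHTS = {
    "teaching": 0.30,
    "research": 0.30,
    "citations": 0.30,
    "international_outlook": 0.075,
    "industry_income": 0.025,
}


def the_overall(pillars):
    """Weighted sum of the five pillar scores of one university."""
    missing = set(THE_WEIGHTS) - set(pillars)
    if missing:
        raise KeyError(f"missing pillar scores: {sorted(missing)}")
    return sum(w * pillars[name] for name, w in THE_WEIGHTS.items())
```

For example, pillar scores of 50, 60, 70, 80 and 40 (teaching, research, citations, international outlook, industry income) combine to an overall score of 61.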

All the ranking scores, both overall and sectorial, are rescaled to the interval [0, 1]. As a benchmark to validate our procedure of ranking reinterpretation, we use indicators related to the wealth of regions. Specifically, we consider data on territorial GDP per capita at purchasing power parity (PPP), obtained from the OECD National Accounts Statistics database (set “USD per head, constant prices, constant PPP, base year 2015”)82 in the international case, and the indicator “Average per capita income (EUR)” from the ISTAT database in the Italian case. For both benchmark indicators, data from the most recent available year are considered, with missing values filled by entries from at most 5 years earlier. The GDP per capita at PPP of the regions HU10 (Central Hungary) and PL11 (Mazovia) has been derived as an average, weighted by region populations, of the values for HU11 (Budapest) and HU12 (Pest) in the first case, and PL91 (Warsaw) and PL92 (Mazovian region) in the second case.
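The two elementary operations in this paragraph, rescaling scores to [0, 1] and merging sub-regions by a population-weighted average, can be sketched as below. The rescaling is assumed to be a min-max transformation over the ranked universities (the text only says "rescaled in the interval [0, 1]"), and the function names are hypothetical.

```python
import numpy as np


def rescale_unit(values):
    """Min-max rescaling of ranking scores to [0, 1]."""
    v = np.asarray(values, dtype=float)
    return (v - v.min()) / (v.max() - v.min())


def merge_regions(values, populations):
    """Population-weighted average of an indicator over sub-regions,
    e.g. the GDP per capita of HU11 and HU12 merged into HU10."""
    return float(np.average(values, weights=populations))
```

With placeholder inputs (not actual OECD data), `merge_regions([10.0, 20.0], [1.0, 3.0])` gives 17.5, since the more populous sub-region dominates the average.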

Complex network construction

Here we illustrate the methods to construct the two classes of complex networks employed as analysis tools in our work, namely territorial networks, based on the similarity between the regions to which the main university seats belong, and educational offer networks, based on the presence, in different universities, of degrees in the same educational areas.

Territorial networks

We start by constructing subnational area networks, whose nodes are the regions of the chosen territorial partition, namely the OECD TL2 regions (with few TL3 exceptions) and the Italian provinces (province). In principle, each region can be connected to any other, with the weight of an edge being determined by the Pearson correlation between the vectors of territorial indicators of the two connected regions. However, we discard an edge if the null hypothesis of uncorrelated sets of indicators cannot be rejected at a significance level of 1% (i.e., when \(p>10^{-2}\), according to the test that compares the sample Pearson correlation with the exact distribution of correlation values between two independent, normally distributed random vectors). Therefore, territorial networks are not complete, and edges can have both positive and negative weights. Starting from the subnational area networks, we construct the actual territorial university networks, in which nodes represent universities and edges between them are related to the similarity between their regions.
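The construction of the territorial networks can be sketched as follows: region pairs are linked only when their indicator vectors are significantly correlated, and the resulting edges are then lifted to universities. This is a minimal illustration, not the authors' code; in particular, linking two universities located in the same region with weight 1 (the correlation of a vector with itself) is a hypothetical choice, since the text does not state how such pairs are handled.

```python
import numpy as np
from scipy import stats


def region_network(indicators, alpha=0.01):
    """Edges {(a, b): r} between regions whose indicator vectors are
    significantly correlated (p <= alpha); weights may be negative."""
    X = np.asarray(indicators, dtype=float)
    edges = {}
    for a in range(X.shape[0]):
        for b in range(a + 1, X.shape[0]):
            r, p = stats.pearsonr(X[a], X[b])
            if p <= alpha:
                edges[(a, b)] = float(r)
    return edges


def university_network(region_of, region_edges, same_region_weight=1.0):
    """Lift the region network to universities; region_of[u] is the index
    of the region hosting the main seat of university u."""
    edges = {}
    n = len(region_of)
    for u in range(n):
        for v in range(u + 1, n):
            ra, rb = sorted((region_of[u], region_of[v]))
            if ra == rb:
                # hypothetical choice: identical indicator vectors give r = 1
                edges[(u, v)] = same_region_weight
            elif (ra, rb) in region_edges:
                edges[(u, v)] = region_edges[(ra, rb)]
    return edges
```

Because `pearsonr` derives its p-value from the exact null distribution of r for normal data, the 1% filter matches the test described above only under that normality assumption.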

Educational offer networks

In this case, we start with a network whose nodes coincide with universities. The weight of the edge connecting two given institutions u and v is determined by the Dice index

\[DSC_{uv}=\frac{2\,|\Gamma _u\cap \Gamma _v|}{|\Gamma _u|+|\Gamma _v|}\]

between the sets \(\Gamma _u\) and \(\Gamma _v\) of their educational areas appearing in THE rankings (for the OECD case) or active degree categories (for the Italian case), with \(|\dots |\) denoting the cardinality of a set. In this case too, although the network is in principle complete, we select a subset of edges to give it a non-trivial topology. Specifically, for each node in the complete network, we identify the largest weight among the edges connected to it, and collect all these largest weights in a set Q. Then, we remove from the complete network all the edges between universities u and v such that \(DSC_{uv}<\min Q\). By construction, this procedure leaves no isolated nodes, since the heaviest edge of every node has weight at least \(\min Q\) and is therefore retained.
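The Dice weighting and the \(\min Q\) pruning rule can be sketched directly on Python sets. The function names are hypothetical, and discarding zero-weight pairs is an added safeguard not stated in the text (it only matters if some university shares no area with any other).

```python
def dice(a, b):
    """Dice index 2|A & B| / (|A| + |B|) between two sets."""
    if not a and not b:
        return 0.0
    return 2 * len(a & b) / (len(a) + len(b))


def educational_network(offers):
    """offers[u] is the set of educational areas (or degree categories) of
    university u. Returns the pruned edge dict {(u, v): DSC_uv}."""
    n = len(offers)
    weights = {(u, v): dice(offers[u], offers[v])
               for u in range(n) for v in range(u + 1, n)}
    # Q: for every node, the largest weight among its incident edges
    q = [max(w for (a, b), w in weights.items() if u in (a, b)) for u in range(n)]
    threshold = min(q)
    # keep edges with DSC >= min Q; zero-overlap pairs are never linked
    return {e: w for e, w in weights.items() if w >= threshold and w > 0}
```

For instance, with offers `[{"a", "b"}, {"a", "b"}, {"c"}, {"c", "d"}]` the set Q is {1, 1, 2/3, 2/3}, so only the edges (0, 1) and (2, 3) survive and every node keeps at least one link.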

Assortativity

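In the case of a binary network, the definition of assortativity with respect to a continuous attribute with values \(x_i\) for each node i reads42

\[r=\frac{\sum _{ij}A_{ij}x_ix_j-\frac{1}{2m}\left( \sum _i k_ix_i\right) ^2}{\sum _i k_ix_i^2-\frac{1}{2m}\left( \sum _i k_ix_i\right) ^2},\]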

with \(k_i\) the node degrees, \(A_{ij}\) the adjacency matrix and m the total number of edges in the network. The values of assortativity range from \(-1\) (maximally antiassortative network) to \(+1\) (maximally assortative). A network consisting of two identical, fully connected components with no connection between them, each characterized by a different attribute value, is maximally assortative. A network consisting of two subsets of equal cardinality, each characterized by a specific attribute value, with no internal connections and with each node connected only to nodes of the other subset, is maximally antiassortative. If \(r=0\), there is no linear correlation between the attribute values \(x_i\) and \(x_j\) of nodes (i, j) connected by an edge (notice, however, that this does not exclude the existence of nonlinear correlations).
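The binary assortativity formula can be checked numerically on the two extreme configurations just described. The sketch below is a direct numpy transcription of the expression for r; the helper graphs `two_cliques` and `complete_bipartite` are hypothetical constructions used only to reproduce the maximally assortative and maximally antiassortative cases.

```python
import numpy as np


def assortativity_binary(A, x):
    """Assortativity of an undirected, unweighted network w.r.t. attribute x."""
    A = np.asarray(A, dtype=float)
    x = np.asarray(x, dtype=float)
    k = A.sum(axis=1)          # node degrees
    two_m = k.sum()            # 2m: every edge is counted twice in A
    mean_term = (k @ x) ** 2 / two_m
    return (x @ A @ x - mean_term) / (k @ x**2 - mean_term)


def two_cliques(n):
    """Two disjoint complete graphs K_n without self-loops."""
    block = np.ones((n, n)) - np.eye(n)
    A = np.zeros((2 * n, 2 * n))
    A[:n, :n] = block
    A[n:, n:] = block
    return A


def complete_bipartite(n):
    """K_{n,n}: every node is linked only to nodes of the other subset."""
    A = np.zeros((2 * n, 2 * n))
    A[:n, n:] = 1.0
    A[n:, :n] = 1.0
    return A
```

Assigning attribute 0 to the first half of the nodes and 1 to the second half gives r = +1 for `two_cliques(4)` and r = -1 for `complete_bipartite(4)`.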

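The above definition can be straightforwardly generalized to weighted networks43,

\[r_w=\frac{\sum _{ij}w_{ij}x_ix_j-\frac{1}{W}\left( \sum _i s_ix_i\right) ^2}{\sum _i s_ix_i^2-\frac{1}{W}\left( \sum _i s_ix_i\right) ^2},\]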

where \(w_{ij}\) is the weight of the edge (i, j), \(s_i=\sum _j w_{ij}\) is the strength of node i, and \(W=\sum _{ij} w_{ij}\). The expression of \(r_w\) remains meaningful only for positive weights. Therefore, before evaluating assortativity, we associate with each territorial network, which can contain negative-weight edges, an auxiliary subnetwork in which only positive-weight edges are retained.

The definition of assortativity for a weighted network is formally equivalent to the weighted Pearson correlation between two vectors of length 2m, with m the number of edges, whose entries coincide respectively with the attributes \(x_i\) and \(x_j\) of the nodes at the ends of each edge (i, j), each edge being counted in both directions; the contribution of a given pair \((x_i,x_j)\) to the overall correlation is determined by the weight \(w_{ij}\) of the corresponding edge. The interpretation of assortativity in terms of a weighted Pearson correlation allows us to associate with \(r_w\) the standard error

\[\sigma _{r_w}=\sqrt{\frac{1-r_w^2}{2m-2}},\]

which is evaluated, along with the related p-value, under the assumption of a Student t-distribution83. The assortativity values and the associated standard errors and p-values are computed with an algorithm implemented in the “weights” R library84.
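The equivalence between the weighted assortativity and a weighted Pearson correlation over the 2m edge ends can be verified numerically. The sketch below is a Python re-implementation, not the "weights" R library used in the text; the standard error assumes the textbook expression with n = 2m observations and n - 2 degrees of freedom, and the library's exact conventions may differ. Function names are hypothetical.

```python
import numpy as np
from scipy import stats


def positive_part(W):
    """Auxiliary subnetwork keeping only positive-weight edges."""
    W = np.asarray(W, dtype=float)
    return np.where(W > 0, W, 0.0)


def weighted_assortativity(W, x):
    """r_w from strengths s_i and total weight W = sum_ij w_ij."""
    W, x = np.asarray(W, dtype=float), np.asarray(x, dtype=float)
    s = W.sum(axis=1)
    mean_term = (s @ x) ** 2 / s.sum()
    return (x @ W @ x - mean_term) / (s @ x**2 - mean_term)


def weighted_pearson_form(W, x):
    """Same quantity as a weighted Pearson correlation over the 2m edge ends."""
    W, x = np.asarray(W, dtype=float), np.asarray(x, dtype=float)
    i, j = np.nonzero(W)          # each undirected edge appears as (i, j) and (j, i)
    w, a, b = W[i, j], x[i], x[j]
    ma, mb = np.average(a, weights=w), np.average(b, weights=w)
    cov = np.average((a - ma) * (b - mb), weights=w)
    va = np.average((a - ma) ** 2, weights=w)
    vb = np.average((b - mb) ** 2, weights=w)
    return cov / np.sqrt(va * vb)


def standard_error_and_p(r, n_edges):
    """SE and two-sided p-value with n = 2m observations, n - 2 d.o.f."""
    n = 2 * n_edges
    se = np.sqrt((1 - r**2) / (n - 2))
    p = 2 * stats.t.sf(abs(r / se), df=n - 2)
    return float(se), float(p)
```

Applying `positive_part` first mirrors the restriction to positive-weight edges described above; both `weighted_assortativity` and `weighted_pearson_form` then return the same value up to rounding.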

Community detection

Community detection is performed using the Spin Glass algorithm85,86. While the resolution \(\gamma\) is treated as a free parameter, varied in the interval [0.8, 1] with a step of 0.05, the other parameters of the algorithm are fixed to default values, outlined in detail in Supplementary Sect. 4. For each fixed set of parameters, we perform \(K=100\) runs of the algorithm, each with a different seed of the pseudorandom number generator. The partition into communities is then chosen by majority voting. However, to evaluate the stability of the detected partition, we do not simply count the frequency of the majority partition. Instead, we introduce a new stability criterion that takes into account the similarity between the different partitions \(\{p_j\}_{(j=1,..,K)}\), based on the average Normalized Mutual Information

\[\langle NMI\rangle =\frac{2}{K(K-1)}\sum _{a<b}NMI(p_a,p_b),\]

where \(NMI(p_a,p_b)\) is the Normalized Mutual Information between a given pair of partitions, and \(K(K-1)/2\) is the number of distinct pairs. The majority partition over \(K=100\) runs is accepted if \(\langle NMI \rangle \ge 0.90\), a condition related to the general stability of the community detection. Moreover, the majority partition must satisfy the following further requirements:

-

it must not be trivial (i.e., consisting of a single community, coinciding with the whole network);

-

it must not be too fragmented, i.e., it must not contain any community whose cardinality is less than 5% of the cardinality of the whole network.

Among the values of the resolution \(\gamma\) for which the results of the 100 runs satisfy the above conditions, we choose the one with the largest \(\langle NMI \rangle\), and the corresponding majority partition is identified as the result of community detection. This scheme is employed to determine the most stable partition of universities based on territorial features and on educational offer. In the territorial case, community detection is performed on the subnational area network, where nodes represent regions, and the university network is then divided according to a partition in which each node (now an institution) inherits the community membership of its region.
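The selection scheme above can be sketched independently of the community detection algorithm itself: given the label vectors produced by the K runs at each resolution (for example by `Graph.community_spinglass` in python-igraph, not reproduced here), it computes \(\langle NMI \rangle\), the majority partition and the two admissibility checks. The NMI variant (mutual information normalized by the arithmetic mean of the entropies) and the reading of majority voting as "most frequent partition up to relabelling" are assumptions; function names are hypothetical.

```python
from collections import Counter
from itertools import combinations

import numpy as np


def _entropy(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log(p)).sum())


def nmi(a, b):
    """NMI = 2 I(a; b) / (H(a) + H(b)) (arithmetic-mean normalization)."""
    a, b = np.asarray(a), np.asarray(b)
    ha, hb = _entropy(a), _entropy(b)
    if ha + hb == 0.0:
        return 1.0                                   # both partitions trivial
    _, ai = np.unique(a, return_inverse=True)
    _, bi = np.unique(b, return_inverse=True)
    joint = np.zeros((ai.max() + 1, bi.max() + 1))
    np.add.at(joint, (ai, bi), 1.0)                  # contingency table
    joint /= joint.sum()
    indep = joint.sum(axis=1, keepdims=True) @ joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    mi = float((joint[nz] * np.log(joint[nz] / indep[nz])).sum())
    return 2.0 * mi / (ha + hb)


def canonical(labels):
    """Relabel communities by order of first appearance, so that partitions
    differing only by a permutation of labels compare equal."""
    mapping = {}
    return tuple(mapping.setdefault(lab, len(mapping)) for lab in labels)


def select_partition(runs_by_gamma, min_nmi=0.90, min_fraction=0.05):
    """runs_by_gamma maps each resolution gamma to K label vectors.
    Returns (gamma, <NMI>, majority partition) or None."""
    best = None
    for gamma, runs in runs_by_gamma.items():
        parts = [canonical(r) for r in runs]
        K = len(parts)
        mean_nmi = 2.0 / (K * (K - 1)) * sum(nmi(p, q) for p, q in combinations(parts, 2))
        majority = Counter(parts).most_common(1)[0][0]
        sizes = Counter(majority).values()
        trivial = len(sizes) < 2
        fragmented = min(sizes) < min_fraction * len(majority)
        if mean_nmi < min_nmi or trivial or fragmented:
            continue
        if best is None or mean_nmi > best[1]:
            best = (gamma, mean_nmi, majority)
    return best
```

A trivial partition has \(\langle NMI \rangle = 1\) by this convention, which is why the explicit non-triviality check is still needed.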

Considering the different kinds of networks and the hierarchical community detection process, we use the following nomenclature for communities:

-

“O” labels communities of an OECD network;

-

“I” labels communities of an Italian network;

-

“T” labels communities of a territorial network;

-

“E” labels communities of an educational offer network;

-

communities labelled with the same number derive from the same community found at the first hierarchical level;

-

the lower-case letters after the number are used to distinguish communities found at the second hierarchical level.

For example, communities OE1a and OE1b belong to an OECD educational offer network, and are obtained at the second hierarchical level by subdividing the same community found at the first hierarchical level. On the other hand, communities OE2b and OE3b are obtained from two different first-level communities, as they are labelled by different numbers; the fact that they are labelled with the same lower-case letter is accidental.

Gaussian mixture models

To investigate the multimodal nature of the principal components, we fit PC1 and PC2 with a family of one-dimensional Gaussian mixture models, with a number \(n_c\) of components varying from 1 to 10. At fixed \(n_c\), the Gaussian character of each component is checked by the Shapiro-Wilk test87, in which the null hypothesis of a Gaussian distribution is not rejected if the p-value is larger than 5%. Finally, we retain only the multimodal Gaussian models in which all the \(n_c\) components pass the test. A further selection can be made among the accepted candidate models by choosing the one that minimizes the AIC or BIC score88. The numerical findings are discussed in Supplementary Sect. 2.4.
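The mixture-model procedure can be sketched with a minimal expectation-maximization fit written directly in numpy, followed by the Shapiro-Wilk filter and BIC selection. Several details are assumptions not given in the text: the deterministic initialization at evenly spaced quantiles, the hard assignment of each point to its most responsible component before the Shapiro-Wilk test, and the count of \(3n_c-1\) free parameters in the BIC. Function names are hypothetical. For a two-component fit, the peak distance and its ratio to the mean component width give quantities of the same kind as the 0.147 gap and the factor 2.8 reported for PC2.

```python
import numpy as np
from scipy import stats


def _e_step(x, weights, means, sigmas):
    dens = weights * stats.norm.pdf(x[:, None], means, sigmas)   # shape (n, k)
    total = dens.sum(axis=1, keepdims=True) + 1e-300
    return dens / total, float(np.log(total).sum())


def fit_gmm_1d(x, n_components, n_iter=500, tol=1e-10):
    """Plain EM for a one-dimensional Gaussian mixture."""
    x = np.asarray(x, dtype=float)
    k = n_components
    means = np.quantile(x, (np.arange(k) + 0.5) / k)   # deterministic start
    sigmas = np.full(k, x.std() / k + 1e-6)
    weights = np.full(k, 1.0 / k)
    prev = -np.inf
    for _ in range(n_iter):
        resp, loglik = _e_step(x, weights, means, sigmas)
        if loglik - prev < tol:
            break
        prev = loglik
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / len(x)
        means = (resp * x[:, None]).sum(axis=0) / nk
        sigmas = np.sqrt((resp * (x[:, None] - means) ** 2).sum(axis=0) / nk) + 1e-6
    resp, loglik = _e_step(x, weights, means, sigmas)
    return weights, means, sigmas, resp, loglik


def select_gmm(x, max_components=10, alpha=0.05):
    """Keep models whose components all pass Shapiro-Wilk (p > alpha) on the
    points assigned to them; return (BIC, n_c, means, sigmas) with minimal
    BIC among the accepted models, or None if none is accepted."""
    x = np.asarray(x, dtype=float)
    best = None
    for k in range(1, max_components + 1):
        _, means, sigmas, resp, loglik = fit_gmm_1d(x, k)
        labels = resp.argmax(axis=1)
        groups = [x[labels == c] for c in range(k)]
        if any(len(g) < 3 for g in groups):
            continue                    # Shapiro-Wilk needs at least 3 points
        if any(stats.shapiro(g)[1] <= alpha for g in groups):
            continue
        bic = -2.0 * loglik + (3 * k - 1) * np.log(len(x))
        if best is None or bic < best[0]:
            best = (bic, k, means, sigmas)
    return best
```

Replacing the BIC line with `2 * (3 * k - 1) - 2 * loglik` would give the AIC variant mentioned above.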

Data availability

The data that support the findings of this study are either publicly available on databases cited in the bibliography, or reported in Supplementary Data files. Computer code is available from the corresponding author upon reasonable request.

Change history

08 April 2022

The original online version of this Article was revised: In the original version of this Article, the Supplementary Data files, which were included with the initial submission, were omitted. Supplementary Data 1, Supplementary Data 2, Supplementary Data 3 and Supplementary Data 4 files now accompany the original Article.

References

Chankseliani, M., Qoraboyev, I. & Gimranova, D. Higher education contributing to local, national, and global development: New empirical and conceptual insights. High. Educ. 81, 109–127 (2021).

Leal Filho, W. About the role of universities and their contribution to sustainable development. High. Educ. Policy 24, 427–438 (2011).

Sauder, M. & Espeland, W. N. The discipline of rankings: Tight coupling and organizational change. Am. Sociol. Rev. 74, 63–82 (2009).

Dill, D. D. & Soo, M. Academic quality, league tables, and public policy: A cross-national analysis of university ranking systems. High. Educ. 49, 495–533 (2005).

Laredo, P. Revisiting the third mission of universities: Toward a renewed categorization of university activities?. High. Educ. Policy 20, 441–456 (2007).

Bekkers, R. & Bodas Freitas, I. M. Analysing knowledge transfer channels between universities and industry: To what degree do sectors also matter? Res. Policy 37, 1837–1853 (2008).

Abreu, M., Demirel, P., Grinevich, V. & Karatas-Ozkan, M. Entrepreneurial practices in research-intensive and teaching-led universities. Small Bus. Econ. 47, 695–717 (2016).

Rodrigues, C. Universities, the second academic revolution and regional development: A tale (Solely) “Made of Techvalleys”?. Eur. Plan. Stud. 19, 179–194 (2011).

Saxenian, A. L. Regional Advantage: Culture and Competition in Silicon Valley and Route 128 (Harvard University Press, Cambridge, MA, 1994).

Mas-Verdu, F., Roig-Tierno, N., Nieto-Aleman, P. A. & Garcia-Alvarez-Coque, J.-M. Competitiveness in European regions and top-ranked universities: Do local universities matter?. J. Competitiveness 12, 91–108 (2020).

Agasisti, T., Barra, C. & Zorzi, R. Research, knowledge transfer, and innovation: The effect of Italian universities’ efficiency on local economic development 2006–2012. J. Reg. Sci. 59, 819–849 (2019).

Oancea, A. Research governance and the future(s) of research assessment. Palgrave Commun. 5, 27 (2019).

Benneworth, P. & Hospers, G.-J. The new economic geography of old industrial regions: Universities as global-local pipelines. Environ. Plan. 25, 779–802 (2007).

Hicks, D. Performance-based university research funding systems. Res. Policy 41, 251–261 (2012).

Jonkers, K. & Zacharewicz, T. Research Performance Based Funding System: a Comparative Assessment (Publications Office of the European Union, Luxembourg, 2016).

Espeland, W. N. & Sauder, M. Rankings and Reactivity: How Public Measures Recreate Social Worlds. Am. J. Sociol. 113, 1–40 (2007).

Ranking-system doubts. Nature 494, 509 (2013).

Livan, G. Don’t follow the leader: How ranking performance reduces meritocracy. R. Soc. open sci. 6, 1 (2019).

Li, W., Aste, T., Caccioli, F. & Livan, G. Early coauthorship with top scientists predicts success in academic careers. Nat. Commun. 10, 5170 (2019).

Fire, M. & Guestrin, C. Over-optimization of academic publishing metrics: observing Goodhart’s law in action. Gigascience 8, giz053 (2019).

Trippl, M., Sinozic, T. & Lawton Smith, H. The role of universities in regional development: Sweden and Austria. Eur. Plan. Stud. 23, 1722–1740 (2015).

Lawton Smith, H. & Bagchi-Sen, S. The research university, entrepreneurship and regional development: Research propositions and current evidence. Entrepreneurship Reg. Dev. 24, 383–404 (2012).

Rodrigues, C., da Rosa Pires, A. & de Castro, E. Innovative universities and regional institutional capacity building: The Case of Aveiro, Portugal. Ind. High. Educ. 15, 251–255 (2001).

Charles, D. Universities as key knowledge infrastructures in regional innovation systems. Innovation (Abingdon) 19, 117–130 (2006).

Gunasekara, C. The generative and developmental roles of universities in regional innovation systems. Sci. Public Policy 33, 137–150 (2006).

Heher, A. D. Return on investment in innovation: Implications for institutions and national agencies. J. Technol. Transf. 31, 403–414 (2006).

Rauhvargers, A. Global University Rankings and Their Impact - Report II (European University Association, Brussels, 2013).

van Vught, F. Mission diversity and reputation in higher education. High. Educ. Policy 21, 151–174 (2008).

Clauset, A., Arbesman, S. & Larremore, D. B. Systematic inequality and hierarchy in faculty hiring networks. Sci. Adv. 1, e1400005 (2015).

Way, S. F., Morgan, A. C., Larremore, D. B. & Clauset, A. Productivity, prominence, and the effects of academic environment. PNAS 116, 10729–10733 (2019).

Pusser, B. & Marginson, S. University rankings in critical perspective. J. High. Educ. 84, 544–568 (2013).

Morgan, A. C., Economou, D. J., Way, S. F. & Clauset, A. Prestige drives epistemic inequality in the diffusion of scientific ideas. EPJ Data Sci. 7, 40 (2018).

Johnson, A. M. The destruction of the holistic approach to admissions: The pernicious effects of rankings. Indiana Law J. 82, 309–358 (2006).

Stake, J. E. & Evans, J. The interplay between law school rankings, reputations, and resource allocations: Ways rankings mislead. Indiana Law J. 82, 229–270 (2006).

Sugimoto, C. R. & Larivière, V. Measuring Research: What Everyone Needs to Know (Oxford University Press, New York, NY, 2018).

Hazelkorn, E. & Gibson, A. Global science, national research, and the question of university rankings. Palgrave Commun. 3, 21 (2017).

Coates, H. “Reporting alternatives: Future transparency mechanisms for higher education” in Global Rankings and the Geopolitics of Higher Education (Routledge, London, ed. 1, 2016), chap. 16.

Hausmann, R. et al. The Atlas of Economic Complexity (MIT Press, Cambridge, MA, 2014).

Tacchella, A., Cristelli, M., Caldarelli, G., Gabrielli, A. & Pietronero, L. A new metrics for countries fitness and products complexity. Sci. Rep. 2, 723 (2012).

Delgado, M., Ketels, C., Porter, M. E. & Stern, S. The Determinants of National Competitiveness (Technical Report, National Bureau of Economic Research, 2012).

Pugliese, E. et al. Unfolding the innovation system for the development of countries: Co-evolution of Science, technology and production. Sci. Rep. 9, 16440 (2019).

Newman, M. Networks (Oxford University Press Inc, New York, NY, ed. 2, 2018).

Farine, D. R. Measuring phenotypic assortment in animal social networks: Weighted associations are more robust than binary edges. Anim. Behav. 89, 141–153 (2014).

Newman, M. E. J. Fast algorithm for detecting community structure in networks. Phys. Rev. E 69, 066133 (2004).

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Hidalgo, C., Klinger, B., Barabasi, A.-L. & Hausmann, R. The product space conditions the development of nations. Science 317, 482–487 (2007).

Battiston, S., Puliga, M., Kaushik, R., Tasca, P. & Caldarelli, G. DebtRank: Too central to fail? Financial networks, the FED and systemic risk. Sci. Rep. 2, 541 (2012).

Amoroso, N. et al. Economic interplay forecasting business success. Complexity 2021, 8861267 (2021).

Bardoscia, M., Battiston, S., Caccioli, F. & Caldarelli, G. Pathways towards instability in financial networks. Nat. Commun. 8, 14416 (2017).

Bardoscia, M. et al. The physics of financial networks. Nat. Rev. Phys. 3, 490–507 (2021).

Fagiolo, G. & Santoni, G. Country centrality in the international multiplex network. Netw. Sci. 3, 377–407 (2015).

Alessandretti, L., Sapiezynski, P., Sekara, V., Lehmann, S. & Baronchelli, A. Evidence for a conserved quantity in human mobility. Nat. Hum. Behav. 2, 485–491 (2018).

Sporns, O. The human connectome: A complex network. Ann. N. Y. Acad. Sci. 1224, 109–125 (2011).

Amoroso, N. et al. Multiplex networks for early diagnosis of Alzheimer’s disease. Front. Aging Neurosci. 10, 365 (2018).

Amoroso, N. et al. Deep learning and multiplex networks for accurate modeling of brain age. Front. Aging Neurosci. 11, 115 (2019).

Bellantuono, L. et al. Predicting brain age with complex networks: From adolescence to adulthood. Neuroimage 225, 117458 (2021).

Monaco, A. et al. Shannon entropy approach reveals relevant genes in Alzheimer’s disease. PLoS ONE 14, e0226190 (2019).

Monaco, A. et al. Identifying potential gene biomarkers for Parkinson’s disease through an information entropy based approach. Phys. Biol. 18, 016003 (2020).

Bianconi, G. Multilayer Networks: Structure and Function (Oxford University Press, New York, NY, 2018).

Amoroso, N. et al. Potential energy of complex networks: A quantum mechanical perspective. Sci. Rep. 10, 18387 (2020).

Amoroso, N., Bellantuono, L., Pascazio, S., Monaco, A. & Bellotti, R. Characterization of real-world networks through quantum potentials. PLoS ONE 16, e0254384 (2021).

Criado, R., García, E., Pedroche, F. & Romance, M. A new method for comparing rankings through complex networks: Model and analysis of competitiveness of major European soccer leagues. Chaos 23, 043114 (2013).

Fernández Tuesta, E., Bolaños-Pizarro, Pimentel Neves, M.D., Fernández, G. & Axel-Berg, J. Complex networks for benchmarking in global universities rankings. Scientometrics 125, 405–425 (2020).

Bellantuono, L. et al. An equity-oriented rethink of global rankings with complex networks mapping development. Sci. Rep. 10, 18046 (2020).

Times Higher Education. World University Rankings. https://www.timeshighereducation.com/content/world-university-rankings. Accessed 15 July 2021.

CENSIS. La classifica CENSIS delle università italiane (edizione 2020/2021). https://www.censis.it/sites/default/files/downloads/classifica_universit%C3%A0_2020_2021.pdf. Accessed 15 July 2021.

MapChart - Create your own custom map. https://mapchart.net. Accessed 1 Feb 2022.

Li, M., Shankar, S. & Tang, K. K. Why does the USA dominate university league tables? Stud. High. Educ. 36, 923–937 (2011).

Frenken, K., Heimeriks, G. J. & Hoekman, J. What drives university research performance? An analysis using the CWTS Leiden Ranking data. J. Informetr. 11, 859–872 (2017).

Bornmann, L., Mutz, R. & Daniel, H. D. Multilevel-statistical reformulation of citation-based university rankings: The Leiden ranking 2011/2012. J. Assoc. Inf. Sci. Technol. 64, 1649–1658 (2013).

Bornmann, L., Stefaner, M., de Moya Anegón, F. & Mutz, R. What is the effect of country-specific characteristics on the research performance of scientific institutions? Using multi-level statistical models to rank and map universities and research-focused institutions worldwide. J. Informetr. 8, 581–593 (2014).

Daraio, C., Bonaccorsi, A. & Simar, L. Rankings and university performance: A conditional multidimensional approach. Eur. J. Oper. Res. 244, 918–930 (2015).

Safón, V. & Docampo, D. Analyzing the impact of reputational bias on global university rankings based on objective research performance data: The case of the Shanghai Ranking (ARWU). Scientometrics 125, 2199–2227 (2020).

Guo, W., Del Vecchio, M. & Pogrebna, G. Global network centrality of university rankings. R. Soc. Open Sci. 4, 171172 (2017).

OECD. OECD Regional Statistics (database) (OECD, Paris, 2020). http://dx.doi.org/10.1787/region-data-en

Measuring the distance to the SDGs in regions and cities Webtool. http://www.oecd-local-sdgs.org/. Accessed 15 July 2021.

Download Area Database—Global Data Lab. https://globaldatalab.org/areadata/download_files/. Accessed 15 July 2021.

Misure del Benessere dei territori. https://www.istat.it/it/archivio/230627. Accessed 15 July 2021.

Indicatori territoriali per le politiche di sviluppo. https://www.istat.it/it/archivio/16777/. Accessed 15 July 2021.

MIUR - Organizzazioni—Open Data dell’istruzione superiore. http://dati.ustat.miur.it/organization/miur. Accessed 15 July 2021.

UNESCO Institute for Statistics. ISCED fields of education and training 2013 (ISCED-F 2013) (UNESCO Institute for Statistics, Montreal, 2013).

Regional Demography. https://stats.oecd.org/Index.aspx?DataSetCode=REGION_DEMOGR. Accessed 15 July 2021.

Kendall, M. G. & Stuart, A. The Advanced Theory of Statistics, Volume 2: Inference and Relationship (Hafner, New York, NY, 1973).

Pasek, J. weights: Weighting and Weighted statistics. https://cran.r-project.org/web/packages/weights/. Accessed 15 July 2021.

Reichardt, J. & Bornholdt, S. Statistical mechanics of community detection. Phys. Rev. E 74, 016110 (2006).

Traag, V. A. & Bruggeman, J. Community detection in networks with positive and negative links. Phys. Rev. E 80, 036115 (2009).

Shapiro, S. S. & Wilk, M. B. An analysis of variance test for normality (complete samples). Biometrika 52, 591–611 (1965).

Ivezić, Ž., Connolly, A., Vanderplas, J. & Gray, A. Statistics, Data Mining and Machine Learning in Astronomy (Princeton University Press, Princeton, NJ, 2014).

Acknowledgements

Code development and testing, as well as the results, were carried out on the IT resources hosted at the ReCaS data center. ReCaS is a project financed by the Italian MIUR (PONa3_00052, Avviso 254/Ric.).

Disclaimer

Vincenzo Aquaro: The designations employed and the presentation of the material in this paper do not imply the expression of any opinion whatsoever on the part of the United Nations concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries. The designations “developed” and “developing” economies are intended for statistical convenience and do not necessarily imply a judgment about the state reached by a particular country or area in the development process. The term “country” as used in the text of this publication also refers, as appropriate, to territories or areas. The views expressed are those of the individual authors of the paper and do not imply any expression of opinion on the part of the United Nations. Marco Bardoscia: Any views expressed are solely those of the author(s) and so cannot be taken to represent those of the Bank of England or to state Bank of England policy. This paper should therefore not be reported as representing the views of the Bank of England or members of the Monetary Policy Committee, Financial Policy Committee or Prudential Regulation Committee.

Author information

Contributions

Conceptualization: L.B., A.M., N.A., R.B. Methodology: L.B., A.M., N.A., R.B. Investigation: L.B. Visualization: L.B., A.D.L., A.M., N.A. Supervision: R.B. Writing-original draft: L.B. Writing-review and editing: L.B., A.M., N.A., V.A., M.B., A.D.L., A.L., S.T., R.B.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bellantuono, L., Monaco, A., Amoroso, N. et al. Territorial bias in university rankings: a complex network approach. Sci Rep 12, 4995 (2022). https://doi.org/10.1038/s41598-022-08859-w
