Abstract

Amid accelerating threats to species and ecosystems, technology advancements to monitor, protect, and conserve biodiversity have taken on increased importance. While most innovations stem from adaptation of off-the-shelf devices, these tools can fail to meet the specialized needs of conservation and research or lack the support to scale beyond a single site. Despite calls from the conservation community emphasizing its importance, a shift to bottom-up innovation driven by conservation professionals remains limited. We surveyed practitioners, academic researchers, and technologists to understand the factors contributing to or inhibiting engagement in the collaborative process of technology development and adoption for field use, and to identify emerging technology needs. High cost was the main barrier to technology use across occupations, while development of new technologies faced barriers of cost and partner communication. Automated processing of data streams was the largest emerging need, and respondents focused mainly on applications for individual-level monitoring and automated image processing. Cross-discipline collaborations and expanded funding networks that encourage cyclical development and continued technical support are needed to address current limitations and meet the growing need for conservation technologies.

Introduction

The integration of new technologies for conservation can improve how we monitor and measure changes to species and whole ecosystems1,2,3, which is critical to guide and evaluate management and policy decisions4. Technology can provide novel data sources, expanded spatial and temporal coverage, access to real-time information, and rapid processing and analysis for intervention1,5,6,7. For example, the inclusion of real-time transmission and processing of data streams from acoustic devices has advanced remote detection and response to illegal logging8. The rapid growth and availability of technologies has been driven largely by adapting existing and consumer-oriented technologies to fit specific conservation needs9, including hobby drones for monitoring and response to threats10,11, in situ molecular analyses in remote field settings12, radar data to forecast bird migrations at continental scales13, and the application of blockchain protocols for fisheries supply chain management14.

While these options are widely available for commercial applications, they may fall short of the requirements of ecological conservation because of limited durability and power efficiency, constraints from proprietary silos, or high technical knowledge barriers15,16,17,18. In other cases, adoption of data-rich and real-time sensors can lead to secondary problems with managing large datasets, which often require their own custom approaches and pipelines3,13,19. Such constraints are thought to limit the uptake of new tools, but only recently have efforts been made to assess the degree to which they restrict the use of technologies in conservation settings and how to prioritize improvements for future development15.

In response to the limitations of off-the-shelf technologies, efforts have grown to actively create novel technologies geared towards conservation16,20. Conservation-driven efforts to create research and monitoring tools include lower-cost alternatives to consumer hardware21,22, custom hardware designed to meet specific needs23, and integration of existing platforms for real-time alerts24. They may also require collaborations with technologists (defined as experts in technology-related fields including hardware engineering, software development, and machine learning) and companies to produce open-source products for research and management, such as Microsoft’s MegaDetector25, Google Earth Engine26,27, Vulcan’s EarthRanger28, and Wildlife Insights29. This bottom-up approach of small-scale innovation places increasing importance on cross-discipline collaborations between end users with first-hand knowledge of real-world needs and existing obstacles (i.e., practitioners, researchers, and governments) and technologists, who have the skills to develop and adapt custom technologies20,30.

A recent broad survey on the state of the conservation technology field identified collaboration and information sharing across disciplines and projects as a primary opportunity15. For technology-based solutions to have substantial conservation impacts, there is a need for collaborations that effectively identify feature needs, share data, and facilitate iterative development and support9,15. To facilitate development of conservation technologies and effectively leverage the support of technologists, we aimed to understand the factors contributing to or inhibiting engagement in the collaborative process of technology development and adoption for field use. We surveyed active conservation practitioners, researchers, and conservation-oriented technologists regarding conservation technology development to answer three questions: 1) What are the technical barriers to technological uptake among end users, and are development priorities focused on alleviating these? 2) How are conservation technology collaborations structured, and what are the perceived barriers to successful collaborations? 3) To guide future development, what upcoming technologies is the conservation community looking for?

Results

Of the 101 completed survey responses, we categorized respondents into three groups: 53 were conservation practitioners, 42 were academic researchers, and seven were technologists. Familiarity and experience with conservation technologies varied widely among respondents. Most respondents (71%) reported being extremely or very familiar with conservation technologies, while 26% reported being moderately familiar. Nearly all respondents (96%) also had experience using existing technologies for conservation applications, while fewer had experience testing new or unproven tools (48%), adapting or iteratively developing existing tools (54%), or designing new tools (34%). Among occupation groups, a higher share of conservation practitioners were engaged in the development of new conservation technologies (71%) than academic researchers (45%).

To address our first question, the technical barriers to using technologies identified by conservation practitioners and academic researchers were similar, highlighting durability (OR = 2.48, 95% CI[1.44 – 4.26]), cost (OR = 8.91, 95% CI[5.07 – 15.65]), power efficiency (OR = 4.24, 95% CI[2.45 – 7.35]), data management (OR = 2.42, 95% CI[1.39 – 4.22]), and real-time transmission (OR = 3.59, 95% CI[2.03 – 6.35]) (Fig. 1a). However, only cost (OR = 6.10, 95% CI[3.02 – 13.16]) was identified as likely to prevent the use of a technology in the field (Fig. 1b). Development priorities that were highly ranked among practitioners and researchers aligned with the reported technical issues: durability (OR = 7.65, 95% CI[3.09 – 18.97]), cost (OR = 4.34, 95% CI[1.84 – 10.22]), and power efficiency (OR = 3.74, 95% CI[1.57 – 8.88]) (Fig. 1c). In the limited responses from technologists, feature priorities focused on cost (7/7 respondents included cost in their top three) and ease of use (4/7). In contrast, durability (2/7 in top three) and power efficiency (1/7) were not highly ranked among technologists (SM Appendix 2 Fig. 1).

Figure 1. The importance of technology features as barriers to use and as development priorities. Coefficient estimates (odds ratios) and 95% confidence intervals are shown for predicted relationships between feature types and (a) the frequency of feature-related issues experienced during use, (b) the frequency with which feature-related issues prevented use of a tool or device, and (c) the feature priority in development of new tools and devices. In (a) and (b), blue circles indicate features with which respondents experienced more problems. In (c), blue circles indicate features that respondents ranked as higher priority. Asterisks denote estimates whose confidence intervals did not overlap 1, indicating a significant effect.
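The odds ratios in Fig. 1 are exponentiated log-odds coefficients, and the asterisk rule flags intervals that exclude 1. The text does not say how the intervals were computed, so the following is only a minimal sketch assuming Wald-type 95% intervals, OR = exp(b) with bounds exp(b ± 1.96·SE). The coefficient values are hypothetical and are not estimates from this study.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def odds_ratio_ci(b, se, z=Z_95):
    """Convert a log-odds coefficient and its SE into (OR, lower, upper) via a Wald interval."""
    return math.exp(b), math.exp(b - z * se), math.exp(b + z * se)


def excludes_one(lower, upper):
    """True when the whole interval lies on one side of OR = 1 (the asterisk rule)."""
    return lower > 1.0 or upper < 1.0


# Hypothetical (log-odds, SE) pairs, not values from the survey models
examples = {"feature_a": (1.2, 0.3), "feature_b": (0.2, 0.4)}
for name, (b, se) in examples.items():
    or_, lo, hi = odds_ratio_ci(b, se)
    flag = "*" if excludes_one(lo, hi) else ""
    print(f"{name}: OR = {or_:.2f}, 95% CI [{lo:.2f}, {hi:.2f}] {flag}")
```

Because the interval is symmetric on the log scale, it is asymmetric around the OR itself. This is why an upper bound such as 15.65 lies farther from the point estimate than the lower bound does.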

For our second question, we recorded 84 unique collaborations ranging from 2 to 15 partners, with a median of five partners. Of these collaborations, 93% involved practitioners, 68% involved academic researchers, and 58% involved technologists. Only 29% of collaborations used websites or forum resources (e.g. wildlabs.net), and 75% of these were collaborations without a technologist. Technologists were disproportionately involved in the design stage, while practitioners and researchers were mainly involved in the testing and use phases (SM Appendix 2 Fig. 4). Among barriers to conservation technology collaborations, high cost (53%) was reported most frequently, followed by delayed timelines (41%) and lack of technical support (25%) (SM Appendix 2 Fig. 5). In terms of factors affecting collaboration experience, the most parsimonious model explaining poor collaborations included poor communication between conservationists and technologists (OR = 0.23, 95% CI[0.06–0.96]) and high cost (OR = 0.34, 95% CI[0.11–1.02]) (SM Appendix 2 Table 7).

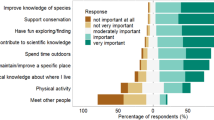

In response to our third question, we identified several strong themes for desired future technologies. Most of the listed technologies were improvements or extensions to existing tools (e.g. mesh network tracking tags, field-ready genetic analysis kits), while some had specific use cases, such as a device to non-invasively collect and protect hair samples for DNA analysis (SM Appendix 2 Table 9). Automation was mentioned in nearly one third of responses (32/101), with most use cases for automation in reference to animal image processing (53%) and individual-level monitoring (22%) (Fig. 2b). Additionally, researchers were largely focused on automation advancements, while practitioners listed a more diverse set of feature needs (Fig. 2a). For all responses on desired technologies, individual-level monitoring (51%) and animal image processing (28%) were the most-mentioned use cases.

Figure 2. Categories of improvements to existing technology identified by occupation group and application type. The x-axis shows the number of respondents. Answers were derived from a theme analysis of the open-ended survey question “Assuming unlimited funding and resources, what technological solution would you want to see developed?”. Theme analysis codebooks can be found in SM Appendix 2 Tables 9 and 10.

Discussion

The shift in conservation technology from adaptation of off-the-shelf devices to bottom-up innovation requires a strong collaborative environment and a solid understanding of the current and future needs of conservation practitioners and researchers20. Our assessment of technical barriers identified frequent issues with multiple feature types, but cost disproportionately prevented the use of technologies in conservation and research settings. While previous studies have touted advanced technologies as a cost-effective pathway to expand the reach and resolution of environmental monitoring1,10, our results suggest that the high upfront cost of new technologies puts currently available tools out of reach for many groups. These costs manifest across device purchase, training and implementation time, maintenance, data storage, and processing. In addition, low cost is often at odds with other features that respondents identified as important, such as durable environment-proofing and robust technical support. For example, the popular AudioMoth low-cost acoustic monitoring platform is sold for 60 USD without a protective case, which users can purchase separately for 35 USD31. While this added durability increases the cost by over 50%, the design demonstrates a flexible approach that keeps prices low for users who do not require robust environmental protection or can build their own solution.
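The "over 50%" figure can be checked with the list prices quoted above. Note that these prices may have changed since publication.

```latex
\frac{35\ \text{USD (case)}}{60\ \text{USD (device)}} \approx 0.583,
\qquad 60 + 35 = 95\ \text{USD per protected unit}
```

Adding the case therefore raises the per-unit cost by roughly 58%.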

Our assessment of collaboration structures found that just over half of collaborations involved a technologist, which may explain the frequently reported issues with delayed timelines and lack of technical support. Although poor communication between partners was reported in only 17% of responses, it was the most significant contributor to poor collaborations. This may stem from our finding that end users were under-represented in the design and adaptation stages of the development cycle, while technologists were under-represented in the testing and use phases. Although conservation technology failures are rarely reported in the literature, our results align with themes from successful technology applications. For example, the ElephantBook tool32 was developed by a team of computer scientists, students, researchers, and conservation managers to aid re-identification of elephants using machine learning. The team improved functionality by integrating the tool with existing data platforms and has continued to provide technical support to advance it32. Similarly, Snapshot Safari33 has found success with cyclical development in collaboration with multiple stakeholders and technologists. The project provides a platform for camera trap data processing and has gradually expanded to include more study sites, added functionality to allow tagging by citizen scientists, and incorporated machine learning to pre-process images33.

Additionally, our limited data from technologists suggest a different set of feature priorities for conservation technologies and highlight the importance of involving end users from the beginning to ensure that tool specifications meet conservation needs30. One potential solution is to adopt the ‘lean start-up’ approach used in commercial sectors, which seeks to identify end users, define features prior to development, and iteratively improve new products34. In concert, platforms like Wildlabs.org and events such as technology challenges and hackathons (e.g. conservationxlabs.com) can allow end users and developers to connect around conservation problems and foster cross-discipline collaborations. However, further research on the successes and limitations of these avenues could help improve and expand networking options in the future. Further, documenting tools and operating instructions in white papers, publications, or setup and troubleshooting guides (e.g. the AudioMoth Getting Started Guide) could help uptake by end users. In the absence of direct technical support, websites and forums also appeared to be an important source of information among respondents. Conservation technology sites that collate solutions (e.g. Wildtech.mongabay.com) and platforms that facilitate networking and information sharing (e.g. Wildlabs.org) can help alleviate some of the technical knowledge roadblocks to the development and use of technologies in practice.

Our assessment of emerging needs in the conservation technology space identified software-based automation tools as the largest desire. Many respondents referenced the need to handle the increasing size of data streams, suggesting that automation is an important need among the conservation community. This aligns with recent results from a broad survey of the conservation technology field pointing to the need to enhance capacity for large-scale data analyses15. Surprisingly, many of the ideas for automation technology already exist in some form, such as automated identification and counting of individual animals in camera trap images19. This suggests that scaling new devices and software beyond the original project may prove difficult when most end users lack the technical know-how and infrastructure to adapt them to their specific use case. One example is AI-based classification and detection models for camera trap images, where shifts in species assemblages and environments between sites can severely degrade classification performance25, and where users need the skills or collaborators to implement models and code from open-source repositories25. Addressing this gap, tools developed by Google’s Wildlife Insights29 are now available for researchers to process data for a wide range of species and habitats through a simple user interface. In other cases, automation improvements in one area may lead to secondary problems. For example, real-time tracking of wildlife using accelerometer sensors can automatically flag immobility due to injury or poaching3, but requires in-depth analysis of specificity and sensitivity to improve the allocation of management resources (G. Wittemyer pers. comm. 2021).

To reduce existing barriers and meet the emerging needs of conservation professionals through bottom-up innovation, our results point to the importance of an adaptive development process that brings end users to the table early and keeps developers involved beyond the initial release. In the commercial and industrial sectors, spiral development processes with build-test-feedback-revise iterations have been shown to get products to market faster35. Further, companies that focus on the voice of the customer can build better and longer-lasting products36. In the conservation sphere, however, continual developer support may not always be feasible, as pro-bono engineers switch to new projects or grant cycles end20. In these cases, establishing a strategy from the beginning of the project to build financially sustainable products using alternative funding models may help sustain the tool beyond the end of the initial funding cycle. Research on financial models for conservation technology is limited15, but opportunities include open-source designs that can be community-maintained37, social impact enterprises that follow commercial strategies to maximize environmental impact alongside profits38, and public–private partnerships that have been used to support technology growth in other underfunded sectors39. In concert, the funding network for conservation technologies can encourage best practices of iterative development and continued product support, while reducing cost barriers to scaling beyond pilot sites. To achieve the full potential of conservation technologies through small-scale innovation, we must continue to foster collaborations across disciplines, sustain product support, and seek alternative funding models for future technology developments.

Methods

Survey

We identified our survey population using groups with a conservation or conservation-technology focus. First, we selected groups for which 1) there was active membership; 2) members were likely to have at least some familiarity with technology for conservation; and 3) it was possible to obtain the number of people to whom the survey was sent, so that response rates could be estimated. We also sought to distribute the survey to groups that would capture practitioners and scientists working in diverse fields and environments, and identified groups likely to include a high percentage of technology experts. Through this process we identified 11 groups: the Society for Conservation Biology Working Groups for Freshwater, Conservation Technology, and Animal Behavior in Conservation; Snapshot Safari; Wildlife Insights; Vulcan EarthRanger developers; Smithsonian Institution; San Diego Zoo Wildlife Alliance; Wildlabs; and the AI for Conservation Slack channel. We dropped the Society for Conservation Biology Conservation Technology working group because we received no responses from it.

The survey instrument (SM Appendix 1) was distributed via email and listserv postings to each group; for the AI for Conservation group, the survey was sent through Slack. Due to privacy requirements, it was not always possible to collect individual email addresses for distribution, so a person could receive the survey multiple times if they belonged to more than one distribution group. The survey consisted of 24 questions, combining multiple-choice, Likert-scale40, and open-response questions. The survey was designed to answer three overarching research questions: 1) What are the technical barriers to technological uptake among end users, and are development priorities focused on alleviating these? 2) How are conservation technology collaborations structured, and what are the perceived barriers to successful collaborations? 3) What future technologies is the conservation community looking for?

To better evaluate these questions, the survey was structured around two ways of interacting with conservation technology: 1) the use of technology tools for conservation and research, and 2) the development (i.e., design, adaptation, and testing) of new tools. Respondents were asked to specify their occupation from a list of six options. Due to the limited sample size, occupation was collapsed into three categories: conservation practitioners (front-line conservationists, non-academic researchers, and conservation facilitators), academic researchers (professors/faculty/postdocs and graduate students), and technologists. In conjunction, we defined four distinct roles that respondents could take on: use of existing and established tools for work, testing of new or unproven tools, adaptation or iterative development of existing tools, and design of new tools. Respondents were allowed to select more than one role. We used skip logic to show respondents only the questions relevant to their experience and roles with conservation technology.

We administered the survey online through Qualtrics from 10th July 2020 to 30th October 2020. To access the survey, respondents were required to consent to participate in our study and were assured that their responses would remain completely anonymous. The survey distribution list reached 648 people. Follow-up emails were sent to each group once, approximately one month after the initial email. We received 101 complete responses, for a response rate of 15.6%. Although this rate is relatively low41, it is consistent with other online surveys that used email to contact respondents42,43. The survey was carried out according to the United States Federal Policy for the Protection of Human Subjects, and all protocols and methods were approved by Colorado State University’s Institutional Review Board before implementation (Protocol No. 20-10050H). Informed consent was obtained from all participants.
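The response rate follows directly from the counts above. As noted earlier, a person could receive the survey through more than one group. The 648 therefore counts deliveries rather than necessarily unique individuals, and the per-person rate may be somewhat higher than this figure.

```latex
\text{response rate} = \frac{101}{648} \approx 0.156 = 15.6\%
```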

Statistical analysis

Descriptive statistics were reported as percentages. For all models, responses from technologists were withheld and evaluated separately due to the small number of responses from this occupation group. We conducted all statistical analyses using R version 4.0.3 and the ordinal package44,45.

To investigate our first question on technical barriers, we used two survey questions. First, to identify the prevalence of issues, we asked respondents to indicate how frequently they encountered different technical limitations. We used an ordinal logistic regression model to evaluate the frequency of occurrence, measured on a five-category scale from Never to Always, in relation to technical limitation and occupation. We categorized seven possible limitations: 1. high or prohibitive cost; 2. lack of durability; 3. poor power efficiency; 4. data access limitations; 5. data management problems; 6. lack of interoperability with other devices or software; and 7. lack of or poor real-time data transmission. Durability was defined to respondents as features that prevented damage to tools (e.g. waterproofing, theft-proofing). Second, to determine the extent to which technical limitations impacted use of technologies, we asked respondents to indicate whether each limitation had prevented them from using a device or tool in the past. We used a logistic regression model to evaluate the prevention of use in relation to technical limitation and occupation.
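To make the ordinal model concrete, here is a minimal sketch of a cumulative-logit (proportional odds) model. It assumes the parameterization used by cumulative link models such as those in the R ordinal package, P(Y ≤ j) = logit⁻¹(θ_j − η), where η is the linear predictor. The three middle category labels, the cut points θ, and the effect size are hypothetical. The sketch shows how a positive coefficient shifts answers towards "Always" and why exp(β) reads as a single odds ratio across all cut points.

```python
import math

# Hypothetical labels for the five-level scale; only the endpoints appear in the text
CATEGORIES = ["Never", "Rarely", "Sometimes", "Often", "Always"]


def inv_logit(x):
    return 1.0 / (1.0 + math.exp(-x))


def category_probs(thresholds, eta):
    """Cumulative-logit model: P(Y <= j) = inv_logit(theta_j - eta); returns P(Y = j)."""
    cumulative = [inv_logit(t - eta) for t in thresholds] + [1.0]
    probs, previous = [], 0.0
    for c in cumulative:
        probs.append(c - previous)  # difference of successive cumulative probabilities
        previous = c
    return probs


def odds_higher(thresholds, eta, j):
    """Odds of answering above category j (0-based): P(Y > j) / P(Y <= j)."""
    p_le = inv_logit(thresholds[j] - eta)
    return (1.0 - p_le) / p_le


# Hypothetical cut points and a hypothetical log-odds effect for one limitation type
theta = [-1.5, -0.3, 0.8, 2.0]
beta_cost = 1.1

baseline = category_probs(theta, 0.0)
with_effect = category_probs(theta, beta_cost)
for label, p0, p1 in zip(CATEGORIES, baseline, with_effect):
    print(f"{label:>9}: {p0:.3f} -> {p1:.3f}")

# Proportional odds: the same odds ratio, exp(beta), holds at every cut point
ratios = [odds_higher(theta, beta_cost, j) / odds_higher(theta, 0.0, j) for j in range(len(theta))]
print([round(r, 4) for r in ratios], round(math.exp(beta_cost), 4))
```

The binary "prevented use" model is the two-category special case of this model: there is a single cut point, and exp(β) is again the odds ratio.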

To investigate our question on conservation technology collaborations, we first quantified collaboration structures. For each collaboration, we calculated the percentage of collaborator types involved in each of the four roles (design, adapt, test, and use) of the development process. Collaborator types were collapsed into four categories: practitioners and non-academic researchers, academic researchers, technologists, and website and forum resources. To evaluate barriers, we first summarized the overall frequency of reported barrier types and then evaluated the relationship between barriers and collaboration success using ordinal logistic regression models. We used Likert-scale40 ratings of collaboration experience, measured on a five-category scale from Poor to Excellent, as the response variable (n = 54), and collaboration group size (continuous), type of technology (hardware or software), and collaboration limitations as possible predictors. Collaboration limitations were defined as: 1. high cost; 2. delayed timeline; 3. lack of project management; 4. misunderstanding of deliverables; 5. lack of technical support; 6. poor communication between conservationists and technologists; and 7. lack of partners. To select the most parsimonious model, we first fit a full model that included all covariates. From this full model, we sequentially dropped the least informative covariate (defined by the minimum absolute value of b/SE) and refit the model. The higher-order model was discarded if eliminating a covariate led to a reduction in AICc, and this process was repeated until no additional covariate could be eliminated without increasing AICc46.
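The selection rule above can be sketched as a backward-elimination loop. This is a minimal illustration under stated assumptions. The `fit` callable, its return format, and the toy log-likelihoods are hypothetical stand-ins; the study itself fit its models in R with the ordinal package. AICc uses the standard small-sample correction AIC + 2k(k + 1)/(n − k − 1), with n = 54 collaboration ratings and k counting both cut points and slopes. Ties in AICc are resolved in favour of the smaller model.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical fit result: (log-likelihood, number of estimated parameters,
# {covariate: (coefficient b, standard error SE)})
FitResult = Tuple[float, int, Dict[str, Tuple[float, float]]]


def aicc(loglik: float, k: int, n: int) -> float:
    """Small-sample corrected AIC: AIC + 2k(k + 1) / (n - k - 1)."""
    aic = 2 * k - 2 * loglik
    return aic + (2 * k * (k + 1)) / (n - k - 1)


def backward_select(covariates: List[str],
                    fit: Callable[[List[str]], FitResult],
                    n: int) -> List[str]:
    """Repeatedly drop the covariate with the smallest |b/SE| while AICc does not increase."""
    current = list(covariates)
    loglik, k, coefs = fit(current)
    current_score = aicc(loglik, k, n)
    while current:
        # Least informative covariate in the current model
        weakest = min(current, key=lambda c: abs(coefs[c][0] / coefs[c][1]))
        reduced = [c for c in current if c != weakest]
        loglik_r, k_r, coefs_r = fit(reduced)
        reduced_score = aicc(loglik_r, k_r, n)
        if reduced_score > current_score:
            break  # dropping the weakest covariate would raise AICc, so stop
        current, coefs, current_score = reduced, coefs_r, reduced_score
    return current


# Toy fits with made-up numbers: 4 cut points for a 5-level response plus one slope per covariate
TOY = {
    frozenset({"cost", "communication", "group_size"}):
        (-70.0, {"cost": (0.95, 0.5), "communication": (1.05, 0.5), "group_size": (0.03, 0.1)}),
    frozenset({"cost", "communication"}):
        (-70.1, {"cost": (1.0, 0.5), "communication": (1.1, 0.5)}),
    frozenset({"communication"}):
        (-73.0, {"communication": (1.2, 0.5)}),
}


def toy_fit(covs: List[str]) -> FitResult:
    loglik, coefs = TOY[frozenset(covs)]
    return loglik, 4 + len(covs), coefs


selected = backward_select(["cost", "communication", "group_size"], toy_fit, n=54)
print(selected)
```

In this toy run, dropping `group_size` lowers AICc. Dropping `cost` afterwards would raise it, so the loop stops with two covariates. The sketch only ever tests removal of the weakest term, which follows the rule described in the text rather than an exhaustive search over subsets.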

To investigate our question on emerging needs, we assessed unmet needs using a theme analysis of answers to the open-ended survey question “Assuming unlimited funding and resources, what technological solution would you want to see developed?”. S.P.B. used NVivo 12 Pro47 to inductively (i.e., without predetermined categories) code responses into themes (SM Appendix 2 Tables 9 and 10). After the initial coding, all authors re-examined, refined, and integrated codes where necessary, based on our research objectives48,49.
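After coding, shares such as "automation in 32/101 responses" and "53% of automation mentions concerned image processing" are simple tallies over coded responses. A minimal sketch follows, assuming each response may carry several codes and that each code counts at most once per response. The codes and responses are made up for illustration and are not survey data.

```python
from collections import Counter


def theme_shares(coded_responses, denominator=None):
    """Share of responses tagged with each theme; a response counts once per theme."""
    counts = Counter()
    for codes in coded_responses:
        counts.update(set(codes))  # de-duplicate codes within one response
    n = denominator if denominator is not None else len(coded_responses)
    return {theme: count / n for theme, count in counts.items()}


def conditional_share(coded_responses, given, theme):
    """Among responses tagged `given`, the fraction also tagged `theme`."""
    subset = [set(c) for c in coded_responses if given in c]
    return sum(theme in c for c in subset) / len(subset) if subset else 0.0


# Hypothetical coded answers, not survey data
responses = [
    {"automation", "image processing"},
    {"individual monitoring"},
    {"automation", "individual monitoring"},
    set(),  # a response that received no code still counts in the denominator
]
shares = theme_shares(responses)
print(shares)
print(conditional_share(responses, "automation", "image processing"))
```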

Data availability

The authors declare that the data supporting the findings of this study are available within the paper and its Supplementary Information files.

Code availability

The code that supports the ordinal and logistic regression findings presented here is available within the paper and its Supplementary Information files.

References

Pimm, S. L. et al. Emerging technologies to conserve biodiversity. Trends Ecol. Evol. 30, 685–696 (2015).

Marvin, D. C. et al. Integrating technologies for scalable ecology and conservation. Glob. Ecol. Conserv. 7, 262–275 (2016).

Wall, J., Wittemyer, G., Klinkenberg, B. & Douglas-Hamilton, I. Novel opportunities for wildlife conservation and research with real-time monitoring. Ecol. Appl. 24, 593–601 (2014).

Snaddon, J., Petrokofsky, G., Jepson, P. & Willis, K. J. Biodiversity technologies: tools as change agents. Biol. Lett. 9, 20121029 (2013).

Pettorelli, N., Safi, K. & Turner, W. Satellite remote sensing, biodiversity research and conservation of the future. Philos. Trans. R. Soc. B Biol. Sci. 369, 20130190 (2014).

Ripperger, S. P. et al. Thinking small: Next-generation sensor networks close the size gap in vertebrate biologging. PLOS Biol. 18, e3000655 (2020).

Xu, H., Wang, K., Vayanos, P. & Tambe, M. Strategic coordination of human patrollers and mobile sensors with signaling for security games. 8 (2018).

Liu, Y. et al. AI for Earth: Rainforest conservation by acoustic surveillance. 2 (2019).

Joppa, L. N. Technology for nature conservation: an industry perspective. Ambio 44, 522–526 (2015).

Koh, L. P. & Wich, S. A. Dawn of drone ecology: low-cost autonomous aerial vehicles for conservation. Trop. Conserv. Sci. 5, 121–132 (2012).

Hahn, N. et al. Unmanned aerial vehicles mitigate human–elephant conflict on the borders of Tanzanian Parks: a case study. Oryx 51, 513–516 (2017).

Pomerantz, A. et al. Real-time DNA barcoding in a rainforest using nanopore sequencing: opportunities for rapid biodiversity assessments and local capacity building. GigaScience 7, (2018).

Van Doren, B. M. & Horton, K. G. A continental system for forecasting bird migration. Science 361, 1115–1118 (2018).

Howson, P. Building trust and equity in marine conservation and fisheries supply chain management with blockchain. Mar. Policy 115, 103873 (2020).

Speaker, T. et al. A global community-sourced assessment of the state of conservation technology. Conserv. Biol. https://doi.org/10.1111/cobi.13871 (2022).

Pearce, J. M. Building research equipment with free Open-Source Hardware. Science 337, 1303–1304 (2012).

Gibb, R., Browning, E., Glover-Kapfer, P. & Jones, K. E. Emerging opportunities and challenges for passive acoustics in ecological assessment and monitoring. Methods Ecol. Evol. 10, 169–185 (2019).

Glover-Kapfer, P., Soto-Navarro, C. A. & Wearn, O. R. Camera-trapping version 3.0: current constraints and future priorities for development. Remote Sens. Ecol. Conserv. 5, 209–223 (2019).

Norouzzadeh, M. S. et al. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. 115, E5716–E5725 (2018).

Berger-Tal, O. & Lahoz-Monfort, J. J. Conservation technology: the next generation. Conserv. Lett. 11, 1–6 (2018).

Hill, A. P. et al. AudioMoth: Evaluation of a smart open acoustic device for monitoring biodiversity and the environment. Methods Ecol. Evol. 9, 1199–1211 (2018).

Zárybnická, M., Kubizňák, P., Šindelář, J. & Hlaváč, V. Smart nest box: a tool and methodology for monitoring of cavity-dwelling animals. Methods Ecol. Evol. 7, 483–492 (2016).

Kalmár, G. et al. Animal-Borne Anti-Poaching System. in Proceedings of the 17th Annual International Conference on Mobile Systems, Applications, and Services 91–102 (ACM, 2019). https://doi.org/10.1145/3307334.3326080.

Weise, F. J. et al. Lions at the gates: Trans-disciplinary design of an early warning system to improve human-lion coexistence. Front. Ecol. Evol. 6, 242 (2019).

Beery, S., Van Horn, G. & Perona, P. Recognition in Terra Incognita. in Proceedings of the European Conference on Computer Vision (ECCV) (eds. Ferrari, V., Hebert, M., Sminchisescu, C. & Weiss, Y.) 472–489 (Springer International Publishing, 2018). https://doi.org/10.1007/978-3-030-01270-0_28.

Crego, R. D., Masolele, M. M., Connette, G. & Stabach, J. A. Enhancing animal movement analyses: spatiotemporal matching of animal positions with remotely sensed data using google earth engine and R. Remote Sens. 13, 4154 (2021).

Gorelick, N. et al. Google earth engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 202, 18–27 (2017).

Vulcan. EarthRanger. https://earthranger.com.

Ahumada, J. A. et al. Wildlife insights: A platform to maximize the potential of camera trap and other passive sensor wildlife data for the planet. Environ. Conserv. 47, 1–6 (2020).

Lahoz-Monfort, J. J. et al. A call for international leadership and coordination to realize the potential of conservation technology. Bioscience 69, 823–832 (2019).

Group Gets - AudioMoth. https://groupgets.com/manufacturers/open-acoustic-devices/products/audiomoth.

Kulits, P., Wall, J., Bedetti, A., Henley, M. & Beery, S. ElephantBook: A semi-automated human-in-the-loop system for elephant re-identification. in ACM SIGCAS Conference on Computing and Sustainable Societies (COMPASS) 88–98 (ACM, 2021). https://doi.org/10.1145/3460112.3471947.

Pardo, L. E. et al. Snapshot Safari: A large-scale collaborative to monitor Africa’s remarkable biodiversity. South Afr. J. Sci. 117, (2021).

Iacona, G. et al. Identifying technology solutions to bring conservation into the innovation era. Front. Ecol. Environ. 17, 591–598 (2019).

Cooper, R. G. What’s next?: After stage-gate. Res.-Technol. Manag. 57, 20–31 (2014).

Cooper, R. G. The drivers of success in new-product development. Ind. Mark. Manag. 76, 36–47 (2019).

Pearce, J. M. The case for open source appropriate technology. Environ. Dev. Sustain. 14, 425–431 (2012).

Mair, J., Battilana, J. & Cardenas, J. Organizing for society: A typology of social entrepreneuring models. J. Bus. Ethics 111, 353–373 (2012).

Meissner, D. Public-private partnership models for science, technology, and innovation cooperation. J. Knowl. Econ. 10, 1341–1361 (2019).

Likert, R. A technique for the measurement of attitudes. Arch. Psychol. 22, 1–55 (1932).

Mayer, A. L. & Wellstead, A. M. Questionable survey methods generate a questionable list of recommended articles. Nat. Ecol. Evol. 2, 1336–1337 (2018).

Archie, K. M., Dilling, L., Milford, J. B. & Pampel, F. C. Climate Change and Western Public Lands: a Survey of U.S. Federal Land Managers on the Status of Adaptation Efforts. Ecol. Soc. 17 (2012).

Jimenez, M. F. et al. Underrepresented faculty play a disproportionate role in advancing diversity and inclusion. Nat. Ecol. Evol. 3, 1030–1033 (2019).

Christensen, R. ordinal - Regression Models for Ordinal Data. R package version 2019.12-10. (2019).

R Core Team. R: A language and environment for statistical computing. (2020).

Arnold, T. W. Uninformative parameters and model selection using Akaike’s information criterion. J. Wildl. Manag. 74, 1175–1178 (2010).

QSR International Pty Ltd. NVivo 12 Pro. (2020).

Glesne, C. Making words fly: Developing understanding through interviewing. Becom. Qual. Res. Introd. 3, (2006).

Creswell, J. W. & Creswell, J. D. Research design: Qualitative, quantitative, and mixed methods approaches. (Sage Publications, 2017).

Acknowledgements

We thank our survey respondents for their participation, and the graduate students at Colorado State University who helped to pilot early versions of the survey. NRH is supported by the National Science Foundation. The Colorado State University Libraries Open Access Research and Scholarship Fund helped support the publication of this study.

Author information

Contributions

N.R.H., S.P.B., and G.W. conceived the study, designed the survey, and wrote the manuscript. N.R.H. and S.P.B. analyzed the data. All authors read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hahn, N.R., Bombaci, S.P. & Wittemyer, G. Identifying conservation technology needs, barriers, and opportunities. Sci Rep 12, 4802 (2022). https://doi.org/10.1038/s41598-022-08330-w

DOI: https://doi.org/10.1038/s41598-022-08330-w
