Abstract

Wikipedia, a paradigmatic example of an online knowledge space, is organized in a collaborative, bottom-up way with voluntary contributions, yet it maintains a level of reliability comparable to that of traditional encyclopedias. The lack of selected professional writers and editors makes judging the quality and trustworthiness of the articles a real challenge. Here we show that a self-consistent metric for the network defined by the edit records captures well the character of editors’ activity and the articles’ level of complexity. Using our metric, one can better identify the human-labeled high-quality articles, e.g., “featured” ones, and differentiate them from the popular and controversial articles. Furthermore, the dynamics of the editor-article system is also well captured by the metric, revealing the evolutionary pathways of articles and the diverse roles of editors. We demonstrate that the collective effort of the editors indeed drives the articles in the direction of improvement.

Introduction

After the invention of writing and printing, we are currently witnessing the third communication revolution, which is digitally mediated and results in the omnipresent availability of what mankind has ever intellectually produced1. Human knowledge has rapidly moved to the Internet, which is accessible to everyone and contributes to the massive data deluge, so that our problem of “how to access information” has turned into “how to select information”. Any improvement in our ability to orient ourselves and navigate in this ever-increasing knowledge space is of great value2.

Wikipedia3, as the largest online encyclopedia, is a paradigmatic example of such a collective knowledge space that is based on the “wisdom of crowds”. The successful model of Wikipedia is that volunteers collaboratively edit the articles, improving their quality in a self-organized manner4,5. The entire mechanism of Wikipedia, including the editing, the organization of editors and the categorization of the articles, is based on a bottom-up process of world-wide human creativity. Thus it is an extraordinarily appealing field of research due to its size, its complex structure and, last but not least, the fact that its full content and history are well documented and publicly accessible. Wikipedia is presently available in 285 languages, with 19 languages having more than one million articles.

In this paper we restrict ourselves to investigating the largest one, i.e. the English-language Wikipedia, which currently has more than 6.23 million articles (January 2021). Naturally, this huge number, together with the system of free editing, results in a large variability in the length, depth and overall quality of the articles. In order to orient the readers in this plethora of information, Wikipedia has introduced a labelling system such that some articles are literally labelled as “good” or “featured”, and some are listed as “controversial issues”6, while the majority remain unlabelled. This categorization is based on human judgement and follows the overall bottom-up principle. There have been efforts to automate the identification of “featured” articles using machine learning techniques7 and of controversial ones by means of revert statistics8,9. These approaches are in general useful, but they tell little about the level of sophistication of the articles, which should be reflected in the complexity of the language7,10. However, as regards the content of an article, there is much more to it11. In particular, the evolution of the quality of articles deserves special attention, as it should shed light on the mechanism by which the articles improve as a consequence of the collaborative effort of editors. Understanding this mechanism could pave the way to the automated prediction of high-quality articles.

In complex systems an adequate characterization of the constituents is often a formidable task because of the interactions, which, even if they are just binary, extend to the entire system through interaction chains. For example, this explains the difference between the degree of a node in a complex network and its PageRank12. The former is a single-node property, while the latter takes into account the importance of the other nodes as well and can be calculated iteratively in a self-consistent manner, often resulting in a much better characterization of a node’s importance. Similar reasoning in a different context has led to the DebtRank model13, which evaluates banks from the point of view of systemic risk.

Wikipedia defines a bipartite graph14 with the set of the articles and the set of the editors, where a link between an editor and an article means that the former has worked on the latter. Bipartite graphs are ubiquitous in various complex contexts, examples being the networks of authors and their papers, words and sentences they occur in, or countries and goods they produce for international trade. Recently an interesting attempt has been put forward for the case of the bipartite network of the international trade to characterize the countries and their products15,16. Here the concepts of “product complexity” and “country fitness” were introduced and iteratively calculated. On one hand the idea is that the fitness of a country depends on the diversity and the complexity of the products it can provide to the international market and, on the other hand, a product’s complexity depends on how fit the countries have to be in order to be able to produce it. This framework has turned out to be very useful in categorising countries according to their fitness and even to make predictions about their evolution17,18.

In the bipartite graph of Wikipedia not only do the articles have a broad distribution in quality, but the editors are also very diverse in their interest, knowledge, editing skills, and devotion to Wikipedia19. Some of them are specialists, others work on many articles, some are highly knowledgeable and others are less so and, of course, their impact on the articles can differ greatly. This is why the level of sophistication or complexity of an article cannot be measured simply by the number of different editors working on it. Inspired by the earlier studies of a different system11,15,16 and taking into account the above-mentioned inherent features of Wikipedia, we define the complexity of an article and the scatteredness of an editor, and determine them in a self-consistent manner. The aim of the present study is to show that these new concepts are meaningful for the characterization of the bipartite network of Wikipedia. Moreover, we investigate how the complexity of the articles evolves in time and how it serves as a means of identifying high-quality articles.

Results

Self-consistent metrics for the editors and articles

Let us define a metric that enables us to rank the articles and the editors more sensitively than local characteristics of the network nodes. Such local characteristics are the degrees, i.e., the number of editors working on an article and the number of articles edited by a single editor, and the strengths, i.e., the total number of edits made on an article and the total number of edits carried out by an editor. On the one hand, this metric should reflect indirect effects that can be taken into account in a self-consistent way and, on the other hand, it should be automatically determinable without text analysis. This is a non-trivial task. The level of sophistication or complexity of an article is expected to increase with the number of editors working on it and the number of edits made on it (i.e., the degree and the strength of a node, respectively). However, the contributions of the editors can be very different from this point of view. Extremely active editors, who monitor a very large number of articles, have less energy or time to improve any one article significantly, while there are specialists who deal only with articles in their field of expertise and can thus contribute in a more substantial way. This bears some similarities with the problem of world trade11,16, but there are also marked differences.

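Wikipedia can be considered as a weighted and directed bipartite graph or network that consists of \(N_e\) editors and \(N_a\) articles. If an editor \(\epsilon \) has made an edit on article \(\alpha \), there is a link \(\epsilon \rightarrow \alpha \) and the number of such edits constitutes the weight of that link. The unweighted version of the bipartite graph can be represented by the binary \(N_e \times N_a\) matrix B:

\[B_{\epsilon \alpha } = \begin{cases} 1 & \text{if editor } \epsilon \text{ has edited article } \alpha , \\ 0 & \text{otherwise,} \end{cases}\]

and the corresponding weighted version by the weighted matrix W:

\[W_{\epsilon \alpha } = w_{\epsilon \alpha }, \quad \text{where } w_{\epsilon \alpha } \text{ is the number of edits made by editor } \epsilon \text{ on article } \alpha .\]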

As shown in Table 1 in the Methods section, the binary and weighted networks of Wikipedia are characterized by broad degree and strength distributions, respectively, as the standard deviations are larger than the corresponding averages. Both networks exhibit positive nestedness as compared to the configuration model with the same degree and strength distributions20. In what follows we will mainly use the bipartite network based on the 1000 most active editors but the results for the most active 2000 and 5000 editors are found to be similar. In order to increase computational efficiency we trim the network by taking into account the articles that have the degree (i.e. the number of editors on them) 10 or more for the following analysis. For details on the selection see the “Methods” section.
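The construction of the binary and weighted networks and the degree-based trimming described above can be illustrated with a minimal Python sketch. It assumes the edit records are available as (editor, article) pairs, one per edit. The function names `build_network` and `strengths` and the dictionary-based representation are hypothetical choices made for illustration, and the selection of the most active editors is omitted.

```python
from collections import defaultdict


def build_network(edit_records, min_article_degree=10):
    """Build sparse B and W from (editor, article) pairs, one pair per edit.

    Both matrices are stored as dicts keyed by (editor, article).
    Articles edited by fewer than `min_article_degree` distinct editors
    are dropped (the degree threshold used for trimming).
    """
    weights = defaultdict(int)
    for editor, article in edit_records:
        weights[(editor, article)] += 1  # w = number of edits on this link

    # Article degree = number of distinct editors linked to the article.
    article_degree = defaultdict(int)
    for _, article in weights:
        article_degree[article] += 1

    kept = {a for a, k in article_degree.items() if k >= min_article_degree}
    W = {(e, a): w for (e, a), w in weights.items() if a in kept}
    B = {link: 1 for link in W}  # binary version: 1 wherever a link exists
    return B, W


def strengths(W):
    """Return (editor strengths, article strengths) as sums of link weights."""
    s_editor, s_article = defaultdict(int), defaultdict(int)
    for (editor, article), w in W.items():
        s_editor[editor] += w
        s_article[article] += w
    return dict(s_editor), dict(s_article)


if __name__ == "__main__":
    # Toy data; the threshold is lowered to 2 so that one article survives.
    records = [("e1", "A"), ("e1", "A"), ("e2", "A"), ("e2", "B")]
    B, W = build_network(records, min_article_degree=2)
    print(W)             # article "B" has degree 1 and is trimmed
    print(strengths(W))
```

A sparse dictionary is used here only because each editor touches a small fraction of all articles; any sparse-matrix representation would serve equally well.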

To start, we rank the articles by the number of editors \(k_a\) that have contributed to them (see Table 2). As expected, this simple degree centrality captures some features of the articles, as it identifies many of the prominent ones. According to this measure, seven articles out of the top 10 turn out to be “popular” articles, i.e. they are in the top 100 list of total page views over the period from 2007 to the present (Jan. 2020)21. However, the strong correlation between degree centrality and popularity raises some problems. For example, popularity can result from the article being “controversial” or affected by news or fashion. In fact, 6 out of the 7 “popular” articles from the above list are “controversial”, while only 2 are labelled “featured” and 3 “good”. Thus we can conclude that a high degree rank is more characteristic of an article’s “controversiality” and “popularity” than of its quality or complexity. This problem could not be resolved by replacing the degree with the strength rank calculated from the weighted matrix W. In addition, we have tried to incorporate the indirect effect of nodes at a distance by using the ranking based on the eigenvector centrality22 of the projection of the bipartite network onto the set of articles. However, this does not improve the situation considerably, as shown in Table 2 in the Methods section and in the larger lists in SI, Tables 3 and 4.

The impact of an editor on the goodness of an article is expected to be a non-monotonic function of the editor’s activity and of the diversity of articles the editor deals with. Very low-activity editors, who edit one or a few articles only a few times, usually have little impact on the goodness of an article. On the other hand, very active editors monitoring and contributing to a large number of articles (e.g., admins) are again less responsible for the contextual improvements, as their role is more one of maintenance. As we consider the top 1000 editors, all of them turn out to edit thousands of articles. Some of the edits are substantial additions to complex matters and some are more maintenance-type edits, such as small or systematic corrections. Without text analysis it is hard to identify the maintenance-like activities. However, we can expect that an editor working on a very large number of articles (some editors, indeed, edit millions), especially including articles with low “goodness” (or complexity) values, is less likely to make substantial content edits that improve the complexity of articles, as the editor’s capacities (time and energy) are scattered among too many items. On the other hand, active editors working on relatively few articles of high quality or complexity are expected to make essential contributions to the articles by their edits. Therefore, we define the scatteredness \(D_i\) of an editor i as the harmonic sum of the complexities of the articles he or she edits. The complexity of an article is then naturally defined as a harmonic sum of the scatteredness values of the editors who edited the article, which is essentially the sum of the editors’ contributions to the content writing.

In order to differentiate the goodness or complexity of an article from its popularity and controversiality and to have an adequate measure to characterise also the editors, we introduce the following self-consistent metrics:

Here \(D_i^{(n)}\) is the scatteredness of the editor i, and \(C_j^{(n)}\) is the complexity of the article j, as calculated after n recursive evaluation steps. Note that after each re-evaluation step, Eq. (3), we normalize the scatteredness and complexity measures to get Eq. (4).

Starting from the uniform initial condition \(D^{(0)}_i = 1, \ C^{(0)}_j = 1\), the proposed recursive process yields good convergence for both the scatteredness and complexity. In addition, the obtained distributions turn out to be smooth, as depicted in Fig. 1. Although the scatteredness of editors and the complexity of articles are correlated with the corresponding degrees, they show considerable variations. Because we adopt a harmonic sum in our metric, one can expect that the result is sensitive to the threshold we set for the article degree to be taken into account for the analysis. However, we confirm that the good convergence and the smoothness of the score distributions are kept for different threshold values, and the performance of the score is the best for the chosen threshold value as discussed in detail in the Methods Section and in the SI.
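The recursive evaluation can be illustrated with a minimal Python sketch. The exact form of Eqs. (3, 4) is not reproduced in this text, so the sketch rests on assumptions taken from the verbal definitions only: the unnormalized scatteredness of an editor is taken as the edit-weighted sum of inverse article complexities, the unnormalized complexity of an article as the edit-weighted sum of inverse editor scatteredness values, and normalization divides each set of scores by its mean. The use of the weights \(w\) rather than the binary links, the mean-based normalization, the stopping rule, and the name `self_consistent_scores` are all hypothetical.

```python
def self_consistent_scores(W, n_iter=100, tol=1e-10):
    """Iterate scatteredness D (editors) and complexity C (articles).

    W maps (editor, article) -> number of edits (positive).
    Assumed update (a sketch, not necessarily the paper's Eqs. 3-4):
        D_i ~ sum_j w_ij / C_j,   C_j ~ sum_i w_ij / D_i,
    followed by division of each score set by its mean.
    """
    editors = sorted({e for e, _ in W})
    articles = sorted({a for _, a in W})
    D = {e: 1.0 for e in editors}    # uniform initial condition
    C = {a: 1.0 for a in articles}

    for _ in range(n_iter):
        D_new = {e: 0.0 for e in editors}
        C_new = {a: 0.0 for a in articles}
        for (e, a), w in W.items():
            D_new[e] += w / C[a]     # harmonic sum over edited articles
            C_new[a] += w / D[e]     # harmonic sum over contributing editors

        mean_D = sum(D_new.values()) / len(D_new)
        mean_C = sum(C_new.values()) / len(C_new)
        D_new = {e: v / mean_D for e, v in D_new.items()}
        C_new = {a: v / mean_C for a, v in C_new.items()}

        change = max(
            max(abs(D_new[e] - D[e]) for e in editors),
            max(abs(C_new[a] - C[a]) for a in articles),
        )
        D, C = D_new, C_new
        if change < tol:             # stop once the scores no longer move
            break
    return D, C


if __name__ == "__main__":
    toy = {("e1", "A"): 2, ("e1", "B"): 1, ("e2", "A"): 1}
    D, C = self_consistent_scores(toy)
    print(D)
    print(C)
```

Under these assumptions an editor who spreads edits over many low-complexity articles receives a large scatteredness, and an article edited mostly by low-scatteredness editors receives a large complexity, which matches the qualitative description above. Convergence is not guaranteed by the sketch itself and should be checked on the actual data.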

Figure 1. The distribution of the scatteredness of editors and the complexity of articles calculated from the self-consistent measures in Eqs. (3, 4). (A) The rank plot of the scatteredness of editors, (B) the rank plot of the complexity of articles, (C) the density distribution of editors in the rank–rank plot of the strength \(s_\epsilon = \sum _{\alpha } w_{\epsilon \alpha }\) and the scatteredness, and (D) the density distribution of articles in the rank–rank plot of the strength \(s_\alpha = \sum _{\epsilon } w_{\epsilon \alpha }\) and the complexity.

Characterization of articles by the self-consistent complexity measure

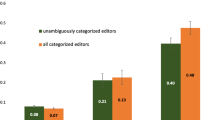

Let us investigate how the complexity measure characterizes the articles labelled “featured”, “good”, “popular”, and “controversial” in comparison with other measures. In Fig. 2 we compare the efficiency of the different measures by plotting the cumulative number of identified articles with different labels as a function of the article’s rank as calculated from the measure. The left panel shows that the complexity measure C works better for finding the “featured” articles than the other commonly used measures, i.e., the degree, the strength, and the eigenvector centrality. From this figure it is also evident that the complexity measure picks up the “popular” articles (middle panel) and the “controversial” articles (right panel) more selectively, i.e. it includes fewer of them among its top-ranked articles than the other measures do. This distinguishing feature of the complexity measure is also seen in the different distributions of the labeled articles in the strength rank–complexity rank plane, as depicted in Fig. 3A. In this plot we observe that, in comparison to all articles as well as to the “good” articles, the “featured” and “controversial” articles share a common characteristic: both are concentrated in the top-rank area (bottom-left corner) of the strength rank–complexity rank plane. Moreover, the “controversial” articles appear most strongly concentrated, with strength rank considerably higher than the complexity rank, while the “featured” articles are distributed more evenly but are concentrated slightly towards higher complexity ranks.
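The cumulative-hit comparison of Fig. 2 can be sketched in Python as follows. The sketch assumes each measure is given as a mapping from article to score and each label as a set of article names; the function name `cumulative_hits` is hypothetical.

```python
def cumulative_hits(scores, labeled):
    """Number of labeled articles among the top-N articles, for N = 1..len(scores).

    scores  : dict mapping article -> score (higher score = higher rank)
    labeled : set of articles carrying the label of interest
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    hits, curve = 0, []
    for article in ranked:
        if article in labeled:
            hits += 1
        curve.append(hits)  # curve[N-1] = hits within the top N
    return curve


if __name__ == "__main__":
    complexity = {"A": 3.0, "B": 2.0, "C": 1.0}
    featured = {"A", "C"}
    print(cumulative_hits(complexity, featured))  # [1, 1, 2]
```

Computing one such curve per measure (complexity, degree, strength, eigenvector centrality) and per label makes the measures directly comparable: a curve that rises faster finds more labeled articles among its top-ranked items.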

Next we turn our attention to the differences between the complexity rank and the strength rank by focusing on the “newly featured” articles, i.e. the articles that were labeled as “featured” within the period from 20th September 2019 to 20th February 2020, having previously been “non-labelled” or “good” articles. As depicted in Fig. 3B, the “newly featured” articles, which emerged mainly from “good” but in some cases also from “non-labelled” articles, have a clear tendency for the complexity rank to be higher than the strength rank. On the other hand, the “newly featured” articles that were also “emerging popular” articles around the above-mentioned time period (i.e. the ones that earned top 5000 page views in 2019) tend to have the strength rank higher than the complexity rank. In Fig. 3C we show the distribution of articles labelled “popular”, “popular” & “featured”, “popular” & “controversial”, and “popular” & “featured” & “controversial” in the strength rank–complexity rank plane. All of these articles are located in the upper triangular part of the plane, implying that their strength rank is clearly higher than their complexity rank. However, some of the doubly and triply labelled articles show relatively high complexity rank. This observation supports the view that a higher rank in the complexity metric C serves as an indicator of the character of an article. On the other hand, the high strength (but also degree) rank of the “featured” articles is partly due to a “pre-featured” or “post-featured” process, which might make it difficult to find “featured” articles by the complexity measure C. Possible examples of such processes are further proof-reading edits of the article before nomination (pre-process) or the article becoming “popular” or “controversial” because of the “featured” label (post-process).

As discussed above and shown in Fig. 3A,C, the “featured” and “controversial” articles share a common characteristic, as their distributions in the strength rank–complexity rank plane partly overlap but are still distinguishable. In order to better differentiate their distributions we introduce the following rank ratio \(\displaystyle J_\alpha = \frac{R_{C_\alpha }}{R_{s_\alpha }},\) where \(R_{C_\alpha }\) and \(R_{s_\alpha }\) are the ranks of the article \(\alpha \) according to the complexity measure and to the strength, respectively, both in descending order. This complexity–strength rank ratio basically evaluates the average scatteredness of the editors of an article, relative to other articles with similar strength. In Fig. 4 we plot the complexity–strength rank ratio vs. the strength rank, and indeed the distribution of the “featured” articles becomes quite well separated from the distribution of the “controversial” articles. Furthermore, we observe that the “featured” articles typically have \(J<1\), which indicates that their complexity rank is higher (i.e. \(R_C\) is numerically smaller) than their strength rank, while for the “controversial” articles this is reversed and the ratio typically takes values \(J>1\), as expected.
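As an illustration of the rank ratio, the following Python sketch computes \(J_\alpha = R_{C_\alpha }/R_{s_\alpha }\) from two score dictionaries. Ranks start at 1 for the largest value. Ties are broken by input order here, which is an assumption of the sketch, and the function names are hypothetical.

```python
def descending_ranks(scores):
    """Rank items from 1 (largest score) to N (smallest score)."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return {item: rank for rank, item in enumerate(ranked, start=1)}


def rank_ratio(complexity, strength):
    """Complexity-strength rank ratio J = R_C / R_s for every article."""
    R_C = descending_ranks(complexity)
    R_s = descending_ranks(strength)
    return {article: R_C[article] / R_s[article] for article in complexity}


if __name__ == "__main__":
    complexity = {"A": 0.9, "B": 0.5, "C": 0.1}
    strength = {"A": 10, "B": 30, "C": 20}
    J = rank_ratio(complexity, strength)
    # "A" is first in complexity but last in strength, so J < 1;
    # "B" is first in strength but second in complexity, so J > 1.
    print(J)
```

In this toy example article "A" behaves like a “featured” article in the sense of the text (\(J<1\)), while "B" behaves like a “controversial” one (\(J>1\)).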

Figure 2. Cumulative number of “featured”, “popular”, and “controversial” articles contained in the top-ranked or top-N hit articles for the Complexity, Degree, Strength, and Eigenvector centrality measures. The Complexity measure works best for finding the “featured” articles, while it picks the “popular” and the “controversial” articles more selectively than the other measures.

Figure 3. Distributions of labeled Wikipedia articles in the strength rank vs. complexity rank plane. (A) Contour plot of the density distributions of all (gray dotted line), “good” (green dashed line), “featured” (blue solid line), and “controversial” (magenta dot-dashed line) articles. In comparison to the density distribution of all as well as “good” articles, the “featured” and “controversial” articles are accumulated in the region of higher ranks, i.e. where both \(R_s\) and \(R_C\) are small, while the “good” articles have lower ranks, with both \(R_s\) and \(R_C\) larger. The “featured” articles tend to have a higher complexity rank for their strength than all articles, while “controversial” articles tend to have extremely high strength rank but smaller complexity rank than “featured” articles. (B) The positions in the \(R_s\) vs. \(R_C\) plane of “newly featured” articles that were labelled “featured” within the period from 20th September 2019 to 20th February 2020. Green circles and black squares are the “newly featured” articles promoted from the “good” articles and non-labeled articles, respectively, shown on top of the contour (gray dotted) lines of the density distribution of all articles. The “newly featured” articles are mainly promoted from the “good” articles, the distribution of which is concentrated in the lower triangle of the \(R_s\) vs. \(R_C\) plane compared to the distribution of all articles. The original positions of “newly featured” articles are found further to the right, compared to the overall distribution of “good” articles, which indicates a strong tendency for articles with higher complexity rank relative to strength rank to have a higher chance of obtaining the “featured” label. This tendency is stronger for the originally non-labeled articles. The “newly featured” articles that are also labelled as “emerging popular” articles (red diamonds), having earned top 5,000 page views around the observation period, tend to be located in the upper triangle region, i.e. the strength rank is higher than the complexity rank. (C) The positions in the \(R_s\) vs. \(R_C\) plane of articles labelled “popular” (orange stars), “popular” & “featured” (blue squares), “popular” & “controversial” (magenta circles), and “popular” & “featured” & “controversial” (cyan diamonds), shown on top of the contour (gray dotted) lines of the density distribution of all articles. These singly, doubly and triply labelled “popular” articles are localized in the region of high strength rank and relatively high complexity rank, such that “popular” & “controversial” labelled articles correlate with higher strength rank while “popular” & “featured” labelled articles correlate with higher complexity rank.

Dynamics of articles in Wikipedia

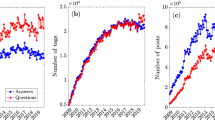

In the previous subsection we demonstrated the relevance of our complexity measure in characterizing and ranking the articles of different categories or labels. Here we focus on the temporal evolution of the articles in terms of their relative ranks of the strength, \(r_s = R_s/N_a\), and of the complexity–strength rank ratio J, \(r_J = R_J/N_a\). As shown in the main panel of Fig. 5, the Wikipedia articles evolve in time basically by gaining strength. It also turns out that new edits to an article lead to an increase in its complexity, unless the scatteredness values of some of the already connected editors shoot up during the observation time. The rate of the complexity increment of an article is determined by the average of the inverse scatteredness (\(D_\epsilon ^{-1}\)) of the editors who recently edited it. The relation between this rate and the original strength is well visualized in the average flow pattern (see the main panel in Fig. 5). Here we see that there is an overall leftward stream with a downward trend, i.e. the rank in the complexity does not get higher when compared to the change of rank in strength. This implies that, as an article gets more edits, it becomes increasingly difficult to keep getting even more edits from the less scattered editors (i.e. specialists). In the panel of relative ranks of all articles in Fig. 5 it is seen that the overall evolutionary flow of labelled and non-labelled articles does not go only towards a single “sink” in the bottom-left corner, with both the relative strength rank \(r_s\) and the complexity–strength rank ratio \(r_J\) small, but towards a broad area at the left boundary with \(r_s\) small but \(r_J\) variable. This reflects the fact that the average gain of complexity per edit tends to relax to a mature value with considerable diversity.
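The displacement of each article in the \(r_s\) vs. \(r_J\) plane between two snapshots can be sketched in Python as follows. Two points are assumptions of the sketch rather than statements of the text: the strength is ranked in descending order, and J is ranked in ascending order so that a small \(r_J\) corresponds to a small J. The function names are hypothetical, and the averaging of the arrows over regions of the plane, as used for most panels of Fig. 5, is not included.

```python
def relative_ranks(values, descending=True):
    """Map each article to rank / N_a, with rank 1 for the first item in the ordering."""
    ranked = sorted(values, key=values.get, reverse=descending)
    n = len(ranked)
    return {article: rank / n for rank, article in enumerate(ranked, start=1)}


def flow_vectors(strength_t0, J_t0, strength_t1, J_t1):
    """Per-article displacement (delta r_s, delta r_J) between two snapshots.

    Assumption: strength is ranked in descending order, J in ascending order.
    Only articles present in both snapshots are returned.
    """
    rs0, rs1 = relative_ranks(strength_t0), relative_ranks(strength_t1)
    rJ0 = relative_ranks(J_t0, descending=False)
    rJ1 = relative_ranks(J_t1, descending=False)
    common = rs0.keys() & rs1.keys() & rJ0.keys() & rJ1.keys()
    return {a: (rs1[a] - rs0[a], rJ1[a] - rJ0[a]) for a in common}


if __name__ == "__main__":
    s0, s1 = {"A": 5, "B": 3}, {"A": 5, "B": 9}      # "B" gains edits
    J0, J1 = {"A": 0.5, "B": 2.0}, {"A": 0.5, "B": 2.0}
    print(flow_vectors(s0, J0, s1, J1))
```

A negative first component means that the article moved towards the high-strength side (small \(r_s\)), which corresponds to the leftward stream described above.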

In order to see the role of articles with different labels (i.e. “featured”, “newly featured”, “good”, “controversial”, and “emerging popular”) in the overall evolutionary flow of articles, we present them as separate small panels in Fig. 5. Here it is evident that the flow patterns of labelled articles exhibit clear differences, reflecting their evolutionary paths from the non-labelled stage to the different labelled stages. We also see that the “featured” and “newly featured” articles have low J rank, especially in the low-strength region. However, the “featured” articles in the high-strength region show an upstream trend, meaning that they tend to keep acquiring content-edit contributions even in their matured stage. The movements of “newly featured” articles are found to be stochastic in nature, because they are not averaged. However, these movements tend to keep J low, and some of them show a very drastic upward motion, i.e. a rapid growth in complexity. As for the articles labelled “good”, we observe their evolution to be quite similar to the evolution of all articles, but showing a slightly enhanced upward trend (due to content edits), especially in the high-strength (matured) region. In the case of the “controversial” articles we observe them mostly concentrated in the high-strength-rank and high-J-rank region, as already shown in Fig. 4. In this region the pattern of flow bears some similarity to the flow patterns of “featured” and “good” articles as well as, to some extent, to that of all articles. Outside this region the flow pattern looks rather chaotic. Of all the labelled articles, the “popular” articles are found to have the most stable flow pattern over the period of observation and to be concentrated in the bottom-left triangle region in the \(r_s\) vs. \(r_J\) plane (see Fig. 8 in SI). In contrast, the “emerging popular” articles, constructed from the top 5,000 page views in 2019, show rapid growth in strength and a downward-trending flow pattern towards the bottom-left corner of the \(r_s\) vs. \(r_J\) plane. This behavior might be explained in two ways: either an article achieved high popularity over a shorter time span because it became controversial and hence needed maintenance edits or, inversely, it became controversial, in the sense of a high need for maintenance, because of its intrinsic emerging popularity.

As mentioned above, some “controversial” articles show a striking upward move like the “featured” articles, while some others move down like ordinary articles or even drastically like the “emerging popular” articles. This might reflect the fact that there are different reasons for articles to be controversial. If the controversy stems mainly from the impact or sensitivity of the topic for the public, the “revert war” editing activity and the maintenance effort should be similar to those of the majority of “controversial” articles and of “emerging popular” articles. If the controversy stems from the multifaceted nature of the issue or from the difficulty of settling on its correct explanation, updates to fix the article need much expertise in the field and hence the activity becomes more like that of “featured” articles. In fact, five out of the top 10 upward-moving articles in the top 1% strength area in the \(r_s-r_J\) plot, namely, sexually transmitted infection, disability, eugenics, tobacco, and string theory, belong to the category of “Science, Biology, and Health”, which accounts for only about 10% of “controversial” articles (the motion of each of these articles can be seen in Fig. 9 in SI). Moreover, seven out of the top 10 “controversial” articles in terms of the increase of complexity (namely, Chiropractic, Aspartame, Aspartame controversy, Alternative medicine, Homeopathy, Vaccine hesitancy, and Ebola virus disease) are from “Science, Biology, and Health”, while only two are from the category of “People”, which constitutes about 30% of “controversial” articles.

Among the “newly featured” articles we also see some counter flows (downward motion). The most conspicuous ones are found to be Horologium and Portrait of Mariana of Austria (as shown in Fig. 10 of SI). These are due to a rapid growth in strength with only a slight increase in complexity. In the edit history of these articles one can find that there was intense editing by active editors just before the nomination of those articles for “featured” status, which is a good example of the possible “pre-featured” process argued above. The reason why these particular articles needed such a process seems to be that they belong to disciplines that require special fine formats for an article to qualify (constellations and paintings, respectively). These investigations of the counter flows in labeled articles show the usefulness of our complexity measure and of the derived complexity–strength rank ratio J for characterizing the articles, even beyond the article labeling.

Figure 5. The temporal evolution of articles in the plane of relative ranks of the strength \(r_s = R_s/N_a\), and of the complexity–strength rank ratio \(r_J = R_J/N_a\), for the period ranging from 20th Sep 2019 to 20th Feb 2020. (Top left) Average flows of all articles, i.e. both labelled and non-labelled articles. (Top right to bottom right and then horizontally to the left) Flows of articles labeled as “Featured”, “Newly featured”, “Emerging popular”, “Controversial”, and “Good”. Arrows are colored according to their directions, as shown in the top left panel. Each arrow for the “Newly featured” articles corresponds to one of the 70 articles, without averaging. For other panels, the average flows are plotted. The flows of labeled articles are overlaid on the average flow of all articles (grey). Note that in the low-strength regime many articles have the same strength and hence tied ranks, which appear as the vertical blank strips in the flow plot.

Discussion

We have shown that, in analysing the quality of Wikipedia articles and their evolutionary paths, the complexity measure C and the derived complexity–strength rank ratio J serve as sensitive indicators. C and J find “featured” articles while picking up fewer “popular” and “controversial” articles, and in this they perform better than other measures that also use only the information of the editing network, i.e. the degree, strength, and eigenvector centrality. However, the main significance of our study is in discovering more holistically the ecology of Wikipedia, i.e., how the different types of editorial activities influence the complexity of the articles and its evolutionary dynamics. The usefulness of the complexity measure C in finding “featured” articles implies that the main contribution to the complexity of an article is not due to the most active editors, whose contributions are scattered among many articles, but rather due to those non-top editors who focus their edits on contributing substance. This means that, although the editing activity is heavily dominated by the top editors, their role is mainly devoted to maintaining the Wikipedia system, while the improvement of the complexity of an article is expected from editors with more specialized interests.

A novel aspect of our work is the systematic study of the evolution of articles in the complexity–strength rank space in Fig. 5. The basic assumption of Wikipedia that the articles are continuously improving by the collective effort of the editors has been challenged repeatedly23. The overall trend of the articles towards the high-complexity, high-strength corner in Fig. 5 is quantitative evidence for this assumption. The apparent differences in the flow diagrams with regard to the different characters or labeling of the articles are a further indication of the sensitivity of our approach in separating articles with high editorial activity according to their level of sophistication. The observed general trend seems to be persistent, as confirmed by the data from the period of February 2021–July 2021.

Wikipedias in different languages are known to show very diverse statistics and dynamics, reflecting cultural diversity24,25,26. While our findings from English Wikipedia have been confirmed to be universal in time, their validity in the Wikipedias of other languages remains to be tested. We also note that investigating how the evolution of articles varies across disciplines or fields is an important future direction, which would help to better understand the mechanism of quality maintenance of articles in different fields27. It should be emphasized that our method is able to cope with these challenges.

Our self-consistent formulation for analysing the quality of Wikipedia articles shares features with the analysis of countries’ economic fitness and the complexity of the products they provide to the world trade network16,17. However, there are fundamental differences between these two ecosystems, which stem from differences in the underlying processes and in their dependence on available resources (for a comparison in terms of the metrics used, see the SI). While the economic ecosystem depends on countries’ limited natural and human resources, the Wikipedia ecosystem depends on the rather unlimited collective knowledge space of human creativity contributed by individual editors. Their individual choices or selectiveness in editing articles give insight into their behavioural and activity patterns. As discussed above, selective edit records that focus mainly on good articles can be regarded as a good indication of some editors’ tendency to contribute written content to an article, which in our analysis framework is (inversely) measured by the scatteredness of individual editors. Finally, as we expect that this kind of bipartite mechanism may be quite universal in other human collaborative, creative or social networks, such networks would be interesting further targets for our self-consistent analysis approach.

Methods

Data preparation

The data we use in this study consist of the edit records on individual articles, which were dumped from Wikimedia Downloads28 over a period from 20 September 2019 to 20 February 2020. We exclude bots, bot-like editors and non-content articles from our analysis by removing the bots listed in Wikipedia's bot category29, unregistered anonymous users, and editors who mainly edit non-article pages. Articles labeled as “redirect” or “disambiguation” are also excluded from our analysis. The basic characteristics of the Wikipedia edit network after this pre-processing are shown in Table 1.
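The exclusion rules above can be illustrated with a minimal sketch. The record schema (the keys `editor`, `is_article`, `anonymous`, `label`), the function name `preprocess`, and the reading of "mainly" as "more than half of an editor's edits" are all assumptions made for illustration; they are not taken from the actual pipeline used in this study.

```python
from collections import Counter

def preprocess(records, bots, excluded_labels=("redirect", "disambiguation")):
    """Filter edit records following the exclusion rules described in the text.

    `records` is a list of dicts with the hypothetical keys
    'editor', 'is_article', 'anonymous' and 'label'.
    `bots` is a set of editor names known to be bots.
    """
    # Count, per editor, all edits and the edits made on non-article pages.
    total = Counter(r["editor"] for r in records)
    non_article = Counter(r["editor"] for r in records if not r["is_article"])

    def keep(r):
        e = r["editor"]
        return (
            e not in bots                            # drop listed bots
            and not r["anonymous"]                   # drop unregistered users
            and non_article[e] * 2 <= total[e]       # ASSUMPTION: "mainly" = more than half
            and r["is_article"]                      # keep article pages only
            and r["label"] not in excluded_labels    # drop redirects and disambiguations
        )

    return [r for r in records if keep(r)]
```

The per-editor counts are computed before any record is dropped, so that an editor's share of non-article edits is judged on the full activity record rather than on the already filtered one.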

In order to calculate the self-consistent metric for the scatteredness of editors and the complexity of articles, we construct a network composed of articles that have been edited by 10 or more editors. Articles with extremely low complexity typically have very few editors; they are generated systematically and then left almost intact. Examples are articles on every settlement in a certain state or every (sub)species in a certain taxon, and lists of those. However, filtering articles by their “list” tags does not work well, because some articles with list tags contain rich information, while other articles essentially contain only list information but carry no list tag.
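The trimming step described above can be sketched as follows. The input format (one `(editor, article)` pair per edit) and the function name `trim_by_editor_count` are hypothetical; only the threshold of 10 distinct editors comes from the text.

```python
from collections import defaultdict

def trim_by_editor_count(edits, min_editors=10):
    """Keep only the edits on articles with at least `min_editors` distinct editors.

    `edits` is an iterable of (editor, article) pairs, one pair per edit
    (a hypothetical input format chosen for this sketch).
    """
    edits = list(edits)

    # Collect the set of distinct editors of each article.
    editors_per_article = defaultdict(set)
    for editor, article in edits:
        editors_per_article[article].add(editor)

    # Articles that pass the threshold.
    kept = {a for a, eds in editors_per_article.items() if len(eds) >= min_editors}

    # Preserve the original order of the surviving edit records.
    return [(e, a) for e, a in edits if a in kept]
```

Because the threshold is applied to distinct editors rather than to the number of edits, an article edited a hundred times by a single editor is still removed.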

As shown in Table 1, the only remarkable change in the network characteristics after the trimming is that the nestedness of the weighted network on the editor side becomes smaller than that of the configuration model. This implies a division-of-labour aspect of the editing activity on articles edited by many editors (here, ten or more). Table 2 lists the top 10 articles by degree rank, together with their titles, labels, and rank in the eigenvector centrality measure. While all of them are prominent articles, their prominence is associated more with the popularity and controversiality of the article.

Calculating self-consistent measures

In order to obtain the scatteredness of editors and the complexity of articles, we iterate the calculation of Eqs. (3) and (4) until it converges to a fixed point. We stop the iteration when the following convergence condition is fulfilled:

Convergence typically requires fewer than 100 iteration steps. The scores obtained at the fixed point differ between the non-trimmed and the trimmed networks, although good convergence and smooth score distributions are retained in both cases. However, the resulting score ranking changes rather discontinuously as the threshold value is varied, and the scores for the non-trimmed network perform worse in the sense discussed later. Therefore, our choice of 10 as the threshold value is not arbitrary (see SI for details).
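The iterate-until-converged procedure can be sketched generically as follows. This is only an illustration: the update rule is passed in as a function because Eqs. (3) and (4) are not reproduced here, the stopping rule (largest absolute change below a tolerance `tol`) is an assumed stand-in for the convergence condition used in the study, and `toy_update` is a simple placeholder map with a known fixed point, not the scatteredness-complexity update.

```python
import math

def iterate_to_fixed_point(update, state, tol=1e-10, max_steps=1000):
    """Apply `update` repeatedly until successive states differ by less than `tol`.

    `update` maps a list of floats to a new list of the same length.
    ASSUMPTION: convergence is declared when the largest absolute change
    of any score in one step falls below `tol`.
    Returns (final_state, number_of_steps).
    """
    for step in range(1, max_steps + 1):
        new_state = update(state)
        change = max(abs(n - o) for n, o in zip(new_state, state))
        state = new_state
        if change < tol:
            return state, step
    raise RuntimeError(f"no convergence within {max_steps} steps")

def toy_update(state):
    """Placeholder update (Heron's map); its fixed point is sqrt(2) for each entry."""
    return [0.5 * (x + 2.0 / x) for x in state]

scores, steps = iterate_to_fixed_point(toy_update, [1.0, 3.0])
```

In an actual calculation, `update` would evaluate the two coupled equations once, taking the concatenated editor and article scores as its state. Raising an error when `max_steps` is exceeded makes slow or failed convergence visible instead of silently returning unconverged scores.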

References

Crowley, D. & Heyer, P. Communication in History. Technology, Culture, Society (Routledge, 2015).

Dimitrov, D., Lemmerich, F., Flöck, F. & Strohmaier, M. Different topic, different traffic: How search and navigation interplay on wikipedia. J. Web Sci. 6 (2019).

Broughton, J. Wikipedia: The Missing Manual (O’Reilly Media, 2008).

Yasseri, T. & Kertész, J. Value production in a collaborative environment. J. Stat. Phys. 151, 414–439. https://doi.org/10.1007/s10955-013-0728-6 (2013).

https://en.wikipedia.org/wiki/Wikipedia:List_of_controversial_issues. Online. Accessed 21 June 2020.

Lipka, N. & Stein, B. Identifying featured articles in Wikipedia: Writing style matters. in WWW ’10: Proceedings of the 19th International Conference on World Wide Web. 1147–1148 (2010).

Yasseri, T., Sumi, R., Rung, A., Kornai, A. & Kertész, J. Dynamics of conflicts in Wikipedia. PLoS ONE 7, e38869 (2012).

Gandica, Y., Carvalho, J. & dos Aidos, F. S. The dynamic nature of conflict in Wikipedia. Europhys. Lett. 108, 18003 (2014).

Yasseri, T., Kornai, A. & Kertész, J. A practical approach to language complexity: A Wikipedia case study. PLoS ONE 7, e48386 (2012).

Carsetti, A. Epistemic Complexity and Knowledge Construction (Springer, 2013).

Gleich, D. F. PageRank beyond the web. SIAM Rev. 57. https://doi.org/10.1137/140976649 (2015).

Battiston, S., Puliga, M., Kaushik, R., Tasca, P. & Caldarelli, G. DebtRank: Too central to fail?. Sci. Rep. 2, 541 (2012).

Barabási, A.-L. Network Science (Cambridge, 2016).

Hidalgo, C. & Hausmann, R. The building blocks of economic complexity. Proc. Natl. Acad. Sci. 106, 10570–10575 (2009).

Tacchella, A., Cristelli, M., Caldarelli, G., Gabrielli, A. & Pietronero, L. A new metrics for countries’ fitness and products’ complexity. Sci. Rep. 2, 723 (2012).

Cristelli, M., Gabrielli, A., Tacchella, A., Caldarelli, G. & Pietronero, L. Measuring the intangibles: A metrics for the economic complexity of countries and products. PLoS One 8, e70726 (2013).

Tacchella, A., Cristelli, M. & Pietronero, L. A dynamical systems approach to gross domestic product forecasting. Nat. Phys. 14, 861–865 (2018).

Yun, J., Lee, S. H. & Jeong, H. Intellectual interchanges in the history of the massive online open-editing encyclopedia, Wikipedia. Phys. Rev. E 93, 012307 (2016).

Johnson, S., Domínguez-García, V. & Muñoz, M. A. Factors determining nestedness in complex networks. PLoS ONE 8, e74025 (2013).

https://en.wikipedia.org/wiki/Wikipedia:Multiyear_ranking_of_most_viewed_pages. Online. Accessed 17 June 2020.

Newman, M. E. J. The structure and function of complex networks. SIAM Rev. 45, 167–256 (2003).

Pappas, T. The Executive Editor of Encyclopedia Britannica on Wikipedia. (The Guardian, 2004).

Yasseri, T., Sumi, R. & Kertész, J. Circadian patterns of Wikipedia editorial activity: A demographic analysis. PLoS ONE 7(1), e30091 (2012).

Yasseri, T., Spoerri, A., Graham, M. & Kertész, J. The most controversial topics in wikipedia: A multilingual and geographical analysis. in Global Wikipedia: International and Cross-cultural Issues in Online Collaboration (Hara, F. N. ed.) (Scarecrow Press, 2014).

Jemielniak, D. & Wilamowski, M. Cultural diversity of quality of information on Wikipedias. J. Assoc. Inf. Sci. Technol. 68. https://doi.org/10.1002/asi.23901 (2017).

Shafee, T. et al. Evolution of Wikipedia’s medical content: Past, present and future. J. Epidemiol. Commun. Health 71, 1122–1129. https://doi.org/10.1136/jech-2016-208601 (2017). https://jech.bmj.com/content/71/11/1122.full.pdf.

Wikimedia Foundation. Wikimedia Downloads. https://dumps.wikimedia.org. Online. Accessed 1 July 2019.

https://en.wikipedia.org/wiki/Category:All_Wikipedia_bots. Online. Accessed 26 July 2019.

Acknowledgements

FO was partly supported by JSPS KAKENHI grant number 21K19826. JK and KK acknowledge support from EU HORIZON 2020 INFRAIA-2019-1 (SoBigData++) No. 871042. KK also acknowledges the Visiting Fellowship at The Alan Turing Institute, UK. TS was partly supported by JSPS KAKENHI grant number 18K03449. FO, JK, and TS thank Aalto University for its hospitality.

Author information

Contributions

All authors (F.O., J.K., K.K., and T.S.) conceived the study plan. F.O. and T.S. conducted the data preparation and the numerical calculation. All authors analysed the results and participated in writing the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ogushi, F., Kertész, J., Kaski, K. et al. Ecology of the digital world of Wikipedia. Sci Rep 11, 18371 (2021). https://doi.org/10.1038/s41598-021-97755-w

DOI: https://doi.org/10.1038/s41598-021-97755-w
