Abstract

Machine learning (ML) holds great promise in transforming healthcare. While published studies have shown the utility of ML models in interpreting medical imaging examinations, these are often evaluated under laboratory settings. The importance of real world evaluation is best illustrated by case studies that have documented successes and failures in the translation of these models into clinical environments. A key prerequisite for the clinical adoption of these technologies is demonstrating generalizable ML model performance under real world circumstances. The purpose of this study was to demonstrate that ML model generalizability is achievable in medical imaging with the detection of intracranial hemorrhage (ICH) on non-contrast computed tomography (CT) scans serving as the use case. An ML model was trained using 21,784 scans from the RSNA Intracranial Hemorrhage CT dataset while generalizability was evaluated using an external validation dataset obtained from our busy trauma and neurosurgical center. This real world external validation dataset consisted of every unenhanced head CT scan (n = 5965) performed in our emergency department in 2019 without exclusion. The model demonstrated an AUC of 98.4%, sensitivity of 98.8%, and specificity of 98.0%, on the test dataset. On external validation, the model demonstrated an AUC of 95.4%, sensitivity of 91.3%, and specificity of 94.1%. Evaluating the ML model using a real world external validation dataset that is temporally and geographically distinct from the training dataset indicates that ML generalizability is achievable in medical imaging applications.

Introduction

Intracranial hemorrhage (ICH) is a source of significant morbidity and mortality1,2. It is a frequently encountered clinical problem with an overall incidence of 24.6 per 100,000 person-years3. A non-contrast computed tomography (CT) scan of the head is the most common method used to diagnose ICH as it is fast, accurate, and widely available. Since nearly half of ICH related mortality occurs within the first 24 h4, rapid and accurate diagnosis is critical if interventions that can improve patient outcomes are to be successful5,6,7,8.

In high volume clinical radiology settings with complex patients and frequent interruptions, significant delays between patient imaging and imaging interpretation are often unavoidable. Inevitably, this delay will impact the time required to identify patients with critical or life-threatening findings9. Machine learning (ML) models have been proposed as an approach to automatically triage and prioritize medical imaging studies10. Multiple investigators have demonstrated the accuracy of ML models in detecting ICH on non-contrast CT scans11,12,13,14,15. However, many previously published investigations have not evaluated performance of these ML models in real world, high volume clinical environments. The importance of real world evaluation is best demonstrated by case studies which have shown failures in translation from laboratory to clinical settings due to a variety of sociotechnical factors16. Another limitation of many of these studies is a common source of the training, validation, and test datasets.

A demonstration of generalizable ML model performance on real world data is necessary prior to the adoption of these tools. In this paper, we developed an ML model for ICH detection in non-contrast CTs of the head and examined generalization performance in the real world setting of a major neurosurgical and trauma center. To our knowledge, this is the first study to both develop and assess generalization performance of an ML model for ICH detection.

Methods

The following methods were carried out in accordance with relevant institutional guidelines and regulations. This retrospective study was approved by the St. Michael’s Hospital Research Ethics Review Board. Due to the retrospective nature of this study, the requirement for informed consent was waived by the St. Michael’s Hospital Research Ethics Review Board.

Training dataset

The Radiological Society of North America (RSNA) Intracranial Hemorrhage CT dataset17 was used for ML model training. This multi-institutional and multi-national dataset is composed of head CTs and annotations of the five types of intracranial hemorrhage. Each CT image in this dataset was annotated by a neuroradiologist for the presence or absence of epidural (EDH), subdural (SDH), subarachnoid (SAH), intraventricular (IVH), and intraparenchymal (IPH) hemorrhage. This dataset consists of 874,035 images with class imbalance amongst the types of ICH (Table 1).

Model development

An overview of the ML model is presented in Fig. 1. The main steps are as follows:

1. Adjustment of the window center and width of each CT image;
2. Feature extraction from each image;
3. Incorporation of spatial dependencies between images along the craniocaudal axis;
4. Thresholding inference results to generate a binary decision and a probability distribution over the 5 types of ICH.
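As an illustration of step 1, the following minimal sketch clips a slice to three windows and stacks the results as a three-channel input. The window settings in `ASSUMED_WINDOWS` and the function names are hypothetical, since the text does not list the values that were used.

```python
import numpy as np

# Hypothetical (center, width) settings in Hounsfield units; the values used
# by the authors are not given in the text.
ASSUMED_WINDOWS = ((40, 80), (80, 200), (600, 2800))


def apply_window(hu_image, center, width):
    """Clip a Hounsfield-unit image to one window and rescale it to [0, 1]."""
    low = center - width / 2.0
    high = center + width / 2.0
    clipped = np.clip(np.asarray(hu_image, dtype=np.float32), low, high)
    return (clipped - low) / (high - low)


def to_three_channels(hu_image, windows=ASSUMED_WINDOWS):
    """Stack one windowed copy per setting into an (H, W, 3) array."""
    channels = [apply_window(hu_image, c, w) for c, w in windows]
    return np.stack(channels, axis=-1)


# Usage on a small synthetic slice (values in Hounsfield units).
slice_hu = np.array([[-1000, 0, 40, 80]] * 4)
stacked = to_three_channels(slice_hu)
print(stacked.shape)  # (4, 4, 3)
```

Rescaling each window to [0, 1] is a design choice of this sketch that keeps the three channels on a common scale before they are passed to an ImageNet-style network.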

A CT scan of the head is represented as S = (S_1, …, S_N), where N is the total number of images in the scan. Each image S_n is passed through three window center and width adjustment filters to enhance differences between blood, brain parenchyma, cerebrospinal fluid, soft tissues, and bone18, as presented in Fig. 1. The three enhanced images are then stacked and passed to two deep convolutional neural networks (DCNNs) with three input channels, SE-ResNeXt-50 and SE-ResNeXt-101, both pre-trained on ImageNet19. Each DCNN produces a probability distribution over the target classes for each S_n, and their average is defined as the vector p_n = (p_n(1), p_n(2), p_n(3), p_n(4), p_n(5)), where indexes 1 to 5 refer to the EDH, SDH, SAH, IVH, and IPH classes, respectively. Averaging the probability distributions generated by the DCNNs as an ensemble reduces the variance of predictions. To incorporate spatial dependency between axial images, a sliding window module takes the probability vectors of ΔS images from each side of image S_n as P_n = (p_{n−ΔS}, p_{n−ΔS+1}, …, p_n, p_{n+1}, …, p_{n+ΔS}). The prediction P_n is then enhanced by incorporating inter-slice dependencies using an ensemble of the LightGBM, CatBoost, and XGBoost gradient boosting models20. These models work on structured data, and their ensemble is generally used to generate more robust solutions. Unlike the ResNeXt models, which perform image-level classification, these models operate on a series of images. The core idea is that the neighboring images of a given image within a series can be used to enhance the predictions for that image. For example, the likelihood of an image S_n showing SDH is higher if the adjacent images S_{n−1} and S_{n+1} are inferred as SDH. Each boosting model generates a probability distribution over hemorrhage types per image by incorporating the probability vectors of neighboring images with a sliding window size of 9 (ΔS = 4). Hence, the average of the ensemble produces a probability distribution over the 5 hemorrhage types for the slice S_n. This distribution is compared against a set of thresholds: if the predicted probability of at least one hemorrhage type is greater than or equal to its corresponding threshold, the output label is positive for ICH.
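The sliding window step can be sketched as follows: per-image probability vectors from two image-level models are averaged, and each image is then described by the concatenated vectors of its 9 neighbors (ΔS = 4). The function names and the edge-padding rule at the first and last images are assumptions of this sketch; the text does not say how scan boundaries were handled.

```python
import numpy as np


def ensemble_average(prob_a, prob_b):
    """Average per-image class probabilities from two image-level models."""
    return (np.asarray(prob_a) + np.asarray(prob_b)) / 2.0


def neighbor_features(probs, delta_s=4):
    """Turn (N, 5) per-image probabilities into (N, (2*delta_s + 1) * 5) rows.

    Row n holds p_{n-delta_s}, ..., p_n, ..., p_{n+delta_s} concatenated.
    """
    probs = np.asarray(probs, dtype=float)
    # Assumption: positions beyond the first or last image repeat the edge image.
    padded = np.pad(probs, ((delta_s, delta_s), (0, 0)), mode="edge")
    window = 2 * delta_s + 1
    return np.stack([padded[n:n + window].ravel() for n in range(len(probs))])


# Usage with random stand-ins for the two DCNN outputs on a 30-image scan.
rng = np.random.default_rng(0)
p_n = ensemble_average(rng.random((30, 5)), rng.random((30, 5)))
features = neighbor_features(p_n, delta_s=4)
print(features.shape)  # (30, 45)
```

Rows of this kind are the sort of structured input a gradient boosting model can consume, one row per image.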

A Bayesian optimizer21 was used to determine the probability thresholds (T_EDH = 0.47, T_SDH = 0.37, T_SAH = 0.45, T_IVH = 0.37, and T_IPH = 0.20) that maximize the AUC score when generating binary (positive/negative) decisions. Bayesian optimization constructs a posterior distribution over functions that best describes the objective function, here the AUC of the model. The input dimensions of the optimization landscape are the thresholds of the hemorrhage types and the objective value is the AUC. In this approach, the optimizer searches for a combination of parameters (thresholds) close to the optimal combination that maximizes the AUC on the validation dataset. We used the Bayesian Optimization Python library for this purpose, where the search interval of each parameter was [0, 1], the dimensionality of the optimization landscape was 5 (corresponding to the 5 hemorrhage types), the number of initial search points was 20, and the number of search iterations was 500. Visualization of predicted areas of ICH was performed using feature maps from layer 4, the layer before adaptive average pooling, in the SE-ResNeXt-50 (32 × 4d) model using the GradCAM and GradCAM++ methods22 (Fig. 2). This visualization is used to confirm that the ML model is capable of detecting areas of hemorrhage without any geometrical preprocessing (e.g. image registration, noise removal) of the input head CT images, even in the presence of suboptimal patient positioning or other artifacts.
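To make the decision rule concrete, the sketch below applies the five reported thresholds to a probability vector. The function names are hypothetical, and the scan-level rule (a scan is positive if any of its images is positive) is an assumption, since the text does not spell out how image-level decisions are aggregated.

```python
import numpy as np

ICH_TYPES = ("EDH", "SDH", "SAH", "IVH", "IPH")
THRESHOLDS = np.array([0.47, 0.37, 0.45, 0.37, 0.20])  # values reported in the text


def flagged_types(probs, thresholds=THRESHOLDS):
    """Return the hemorrhage types whose probability reaches its threshold."""
    hits = np.asarray(probs, dtype=float) >= thresholds
    return [name for name, hit in zip(ICH_TYPES, hits) if hit]


def image_is_positive(probs, thresholds=THRESHOLDS):
    """An image is positive for ICH if at least one type reaches its threshold."""
    return len(flagged_types(probs, thresholds)) > 0


def scan_is_positive(scan_probs, thresholds=THRESHOLDS):
    """Assumption: a scan is positive if any of its images is positive."""
    return any(image_is_positive(p, thresholds) for p in scan_probs)


print(flagged_types([0.10, 0.40, 0.10, 0.05, 0.19]))  # ['SDH']
```

In the usage line, only SDH is flagged: 0.40 reaches the SDH threshold of 0.37, while 0.19 falls just short of the IPH threshold of 0.20.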

Figure 2. Visualization of feature maps from layer 4 (the layer before adaptive average pooling) in SE-ResNeXt-50 (32 × 4d). Left: Input head CT image; Middle left: GradCAM heat map; Middle: GradCAM++ heat map; Middle right: GradCAM result superimposed on CT image; Right: GradCAM++ result superimposed on CT image.

Model training and evaluation

The training portion of the RSNA Intracranial Hemorrhage CT dataset, comprising 752,803 images (21,784 examinations), was used to train the DCNNs and was divided into 8 stratified folds. Images from the same patient were grouped into the same fold using the patient identifier embedded in the DICOM metadata. This prevents a potential information leak during cross-validation, as neighboring images within a CT scan may resemble each other and are more likely to share the same class labels. Each DCNN model was trained and cross-validated on these 8 folds. The training hyper-parameters of the DCNNs were a mini-batch size of 32, 4 training epochs, and an adaptive learning rate with an initial rate of 1 × 10^−4 using the Adam optimizer23. The checkpoints from the 3rd and 4th epochs were used to make out-of-fold predictions, which were then averaged. These out-of-fold predictions were used as meta-features for training the gradient boosting models. Cross-validation was performed on the same 8 folds.
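The patient-level grouping described above can be illustrated with a short sketch that assigns every image of a patient to the same fold. It shows only the grouping; the stratification by class label is omitted, and the function name, the shuffling, and the round-robin assignment are assumptions of this sketch.

```python
import random


def assign_patient_folds(patient_ids, n_folds=8, seed=0):
    """Return one fold index per image so that no patient spans two folds."""
    unique_patients = sorted(set(patient_ids))
    random.Random(seed).shuffle(unique_patients)
    # Round-robin over shuffled patients keeps fold sizes roughly equal.
    fold_of_patient = {p: i % n_folds for i, p in enumerate(unique_patients)}
    return [fold_of_patient[p] for p in patient_ids]


# Usage: 40 hypothetical patients with 30 images each.
patient_ids = [f"P{i // 30:03d}" for i in range(1200)]
folds = assign_patient_folds(patient_ids)

# Every patient should map to exactly one fold.
folds_per_patient = {}
for pid, fold in zip(patient_ids, folds):
    folds_per_patient.setdefault(pid, set()).add(fold)
print(all(len(f) == 1 for f in folds_per_patient.values()))  # True
```

Splitting at the image level instead would let near-identical neighboring slices of one scan land in both the training and validation folds, which is the leak the grouping is meant to prevent.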

The model was evaluated on the 3528 examinations that compose the test set of the RSNA Intracranial Hemorrhage CT dataset. Log loss performance during training and validation was determined for SE-ResNeXt-50 (32 × 4d) and SE-ResNeXt-101 (32 × 4d) for each fold and epoch (Supplementary Information). In addition, log loss was determined for LightGBM, CatBoost, and XGBoost, as well as their average as an ensemble, for each hemorrhage type. A confusion matrix was constructed by comparing the ground truth of each CT scan to the ML model prediction.

Evaluation of model generalizability

The demonstration of generalizability of model performance requires external validation using data which is ideally both temporally and geographically distinct from that used to train a model24. As a busy neurosurgical and trauma center in one of the world’s most diverse cities, the data from our institution is well suited for the purposes of external validation. In order to capture a real-world distribution of patients, we included every unenhanced head CT performed on emergency department patients over the course of 1 year without any exclusion criteria.

External validation dataset

The hospital’s radiology information system (syngo, Siemens Medical Solutions USA, Inc., Malvern, PA) was searched using Nuance mPower (Nuance Communications, Burlington, MA) for emergency patients that underwent a non-contrast CT scan of the head between January 1 and December 31, 2019. Every CT which included non-contrast imaging of the head acquired at 2.5 or 5.0 mm slice thickness was included in this study. All examinations were performed on a 64 row multi-detector CT scanner (Revolution, LightSpeed 64, or Optima 64, General Electric Medical Systems, Milwaukee, WI, U.S.).

The ground truth was established by having each CT scan labeled as positive or negative for ICH by a trained research assistant who reviewed the associated radiology report. A total of 5965 (674 positive, 5291 negative) head CT examinations from 5536 patients (2600 female, 3365 male; age range 13–101 years; mean age 58.2 ± 20.4 years) were included in this study.

Scans that were positive for ICH were further classified for the presence of the 5 types of ICH using the same radiology report. A random sample of 600 reports and CT scans (64 positive and 536 negative) was reviewed by a radiologist to validate the report labeling process. All positive and negative scans were correctly classified by the research assistant at the patient level. For the positive scans, 314 of 320 (98.1%) labels detailing the types of ICH were correct. A total of 103 of 105 ICH subtype positive labels were correct, 2 were reclassified, and 5 were added after radiologist review.

Evaluation

ML model predictions were compared to the ground truth for each scan at the patient level and for each type of ICH. A three member panel reviewed each CT scan where the ground truth label based on the clinical radiology report was discrepant with the ML model prediction. This review allowed us to identify cases where a radiologist missed ICH that was correctly detected by the ML model and cases of “over-calling” by a radiologist. All panel reviewers were fellowship trained in neuroradiology with 10 (A.B.), 5 (A.L.), and 2 (S.S.) years of experience following fellowship training. Cases were reviewed on a Picture Archiving and Communications System workstation (Carestream PACS, Carestream Health, Rochester, New York) which provided panel members with access to radiology reports, prior imaging examinations, and if available, follow-up imaging. A majority vote served as consensus for the review of these cases. CT scans that were deemed equivocal for ICH by the panel despite the availability of prior and follow-up imaging were treated as positive cases in evaluating ML model performance. The rationale for this decision is that equivocal cases should be flagged by a triaging system for urgent review by a radiologist.

There is a substantial difference in prevalence between the test (35.2%) and external validation (11.3%) datasets. In order to compare performance of the models at an equivalent ICH prevalence, a bootstrap approach was used to sample negative scans from the external validation dataset to simulate a prevalence of 35.2% (679 positive + 1241 negative cases). A total of 1000 independent samplings were performed.
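The prevalence matching can be sketched as follows: all positive scans are kept and negatives are sampled until the target prevalence is reached. Taking the 674 positive scans from the dataset description as the positive count is an assumption of this sketch; with that count, a 35.2% target corresponds to 1241 negatives. Drawing negatives without replacement and the function names are also assumptions.

```python
import numpy as np


def negatives_for_prevalence(n_positive, prevalence):
    """Number of negatives needed so that positives make up `prevalence`."""
    return round(n_positive * (1.0 - prevalence) / prevalence)


def resample_to_prevalence(labels, prevalence, n_rounds=1000, seed=0):
    """Yield index arrays holding all positives plus freshly sampled negatives."""
    labels = np.asarray(labels)
    pos_idx = np.flatnonzero(labels == 1)
    neg_idx = np.flatnonzero(labels == 0)
    n_neg = negatives_for_prevalence(len(pos_idx), prevalence)
    rng = np.random.default_rng(seed)
    for _ in range(n_rounds):
        # Assumption: negatives are drawn without replacement in each round.
        sampled = rng.choice(neg_idx, size=n_neg, replace=False)
        yield np.concatenate([pos_idx, sampled])


# Usage with label counts from the external validation dataset.
labels = np.array([1] * 674 + [0] * 5291)
first_sample = next(resample_to_prevalence(labels, 0.352, n_rounds=1))
print(len(first_sample), negatives_for_prevalence(674, 0.352))  # 1915 1241
```

Metrics such as the positive predictive value would then be computed on each sampled index set and summarized over the 1000 rounds.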

From a probability theory perspective, we can model each CT scan as an independent event with respect to a hemorrhage type that is either positive (success) or negative (failure). For a one-year sample of data, this set of events can be modeled as a Bernoulli process25. A binomial distribution for a large number of samples can be approximated by a Gaussian distribution using the Central Limit Theorem25,26, and this approximation can be used to calculate the confidence intervals (CI).
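Under this Gaussian approximation, the half-width of a confidence interval for a proportion p estimated from n scans is z·sqrt(p(1 − p)/n). The sketch below assumes that a score such as balanced accuracy is treated as the binomial proportion; the function name is hypothetical. Using the ICH balanced accuracy of 92.7% reported in the Results with n = 5965 gives a half-width of about 0.66%.

```python
import math
from statistics import NormalDist


def normal_ci_half_width(p_hat, n, confidence=0.95):
    """Half-width of the normal-approximation CI for a binomial proportion."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)  # about 1.96 for 95%
    return z * math.sqrt(p_hat * (1.0 - p_hat) / n)


half = normal_ci_half_width(0.927, 5965)
print(round(100 * half, 2))  # 0.66 (percentage points)
```

The approximation is most reliable when both n·p and n·(1 − p) are large, which holds for a sample of several thousand scans.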

In order to visually illustrate the performance of the ML model compared to the ground truth per scan at different scan intervals, cumulative positive case plots of the ML model prediction versus the ground truth were generated at the patient level and for each type of ICH. If a CT scan is positive for a hemorrhage type, one is added to the cumulative value, and if it is negative, zero is added. Greater divergence of the curves means less agreement between the ML model and the ground truth. The difference at the last index is the net number of scans over-called or missed by the ML model, that is, false positives minus false negatives. If, overall, the prediction curve is above the ground-truth curve, the ML model has over-called, and if the prediction curve is below the ground-truth curve, the ML model has failed to diagnose cases with that specific type of hemorrhage.
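A minimal sketch of how such cumulative curves can be computed from per-scan binary labels is given below; the function name and the toy labels are illustrative only.

```python
import numpy as np


def cumulative_curves(ground_truth, predictions):
    """Running count of positive scans for the ground truth and the model."""
    gt_curve = np.cumsum(np.asarray(ground_truth, dtype=int))
    pred_curve = np.cumsum(np.asarray(predictions, dtype=int))
    return gt_curve, pred_curve


# Toy example: one false positive (scan 2) and one false negative (scan 4).
ground_truth = [1, 0, 0, 1, 0, 1]
predictions = [1, 1, 0, 0, 0, 1]
gt_curve, pred_curve = cumulative_curves(ground_truth, predictions)
print((pred_curve - gt_curve).tolist())  # [0, 1, 1, 0, 0, 0]
```

In this toy example the gap opens at the over-called scan and closes at the missed scan, so the final gap equals false positives minus false negatives and opposite errors can cancel at the last index.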

Results

Evaluation of ML model performance on the test dataset revealed an AUC of 98.4%, a balanced accuracy of 98.4%, an imbalanced accuracy of 98.3%, sensitivity of 98.8%, specificity of 98.0%, positive predictive value of 96.5% and negative predictive value of 99.3% for ICH detection (Table 2).

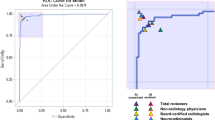

ML model performance was then evaluated on the external validation dataset which revealed an AUC of 95.4%, a balanced accuracy of 92.7%, an imbalanced accuracy of 93.8%, sensitivity of 91.3%, specificity of 94.1%, positive predictive value of 66.3% and negative predictive value of 98.8% for ICH detection (Table 3). The 95% CI with respect to the balanced accuracy score for each hemorrhage class is as follows: EDH (± 1.22%), SDH (± 0.63%), SAH (± 0.72%), IVH (± 0.64%), IPH (± 0.65%), and ICH (± 0.66%) (Fig. 3). The 95% CIs of EDH show a 35.58% difference between the CI of the accuracy and balanced accuracy scores. This indicates high generalization performance of the ML model for all types of ICH except EDH. Receiver operating characteristic (ROC) curves were created using the external dataset by generated probabilities per ICH type (Fig. 4a) and generated decisions after applying thresholds (Fig. 4b). A higher positive predictive value, accuracy, Matthews correlation coefficient, and F1 score were demonstrated with matched prevalence between the test and external validation datasets (Table 4).

Figure 5 shows the distribution of predicted hemorrhage probability by the ML model for the external validation dataset at the patient level. This figure shows that the probability distribution of prediction for both negative and positive EDH cases is very similar. Figure 6 shows the cumulative positive cases between the ML model prediction and ground-truth. The ML model has under-called (i.e. missed) cases of EDH while over-calling SAH, IVH, and IPH. For SDH, the two curves are more closely aligned than for the other hemorrhage types, representing the highest agreement between the ML model and ground-truth. The accuracy results in Table 3 express a similar conclusion.

Figure 5. Probability distribution of the predicted labels for ground truth negative and positive cases. The central red line indicates the median, the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively, and the whiskers extend to the most extreme data points not considered outliers. The outliers are plotted individually using the red “+” symbol and the threshold found by the Bayesian optimizer is plotted using the black “*” symbol. Cases with a probability higher than the threshold are counted toward the corresponding positive and negative label.

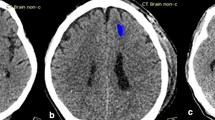

A panel of neuroradiologists reviewed the CT scans that were classified as false negative or false positive. Following this review, 17 of the 59 false negative and 16 of the 313 false positive predictions by the ML model were considered equivocal despite the availability of prior and follow-up imaging. Two cases of ICH were correctly detected by the ML model but missed when reported by a radiologist (Fig. 7).
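The false negative and false positive counts above can be tied back to the reported external validation metrics. The sketch below assumes that the 59 false negatives and 313 false positives are counted against the 674 positive and 5291 negative report-based labels; under that assumption the derived metrics agree with the reported values to within about 0.1 percentage points. The function name is hypothetical.

```python
def binary_metrics(tp, fn, fp, tn):
    """Standard metrics derived from a 2 x 2 confusion matrix."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "balanced_accuracy": (sensitivity + specificity) / 2.0,
    }


# Counts taken from the text; the pairing of these counts is an assumption.
n_positive, n_negative = 674, 5291
false_negatives, false_positives = 59, 313
metrics = binary_metrics(
    tp=n_positive - false_negatives,
    fn=false_negatives,
    fp=false_positives,
    tn=n_negative - false_positives,
)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.1f}%")
```

The low positive predictive value relative to sensitivity and specificity follows from the low prevalence: with about 11% positive scans, even a 6% false positive rate among the many negative scans produces a sizeable share of all positive calls.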

Figure 7. (a,b) Two examples of SDH that were missed by a radiologist but detected by the ML model. Visualization of feature maps from layer 4 (the layer before adaptive average pooling) in SE-ResNeXt-50 (32 × 4d). Left: Input head CT image; Middle left: GradCAM heat map; Middle: GradCAM++ heat map; Middle right: GradCAM result superimposed on CT image; Right: GradCAM++ result superimposed on CT image. The arrows indicate the SDH.

Discussion

In this study, we have shown that an ML model is able to demonstrate highly generalizable performance in the detection of ICH. While many studies on ICH detection report high accuracy, a deeper examination shows that many of these studies suffer from limitations that may impede translation of ML models into real world clinical environments. For example, data from a common institution is often used for ML model training, validation, and testing. Many prior studies evaluate model performance on curated datasets that may not reflect the prevalence and variety of ICH encountered in clinical practice. Furthermore, investigators often do not specify the method used to curate such datasets. When ML models are tested in real world environments, the sample size and evaluation period are often limited while the inclusion and exclusion criteria may not be clearly defined. After initial studies showed strong performance, follow-up studies have shown that some ML models display lower accuracy and higher false positive rates in different clinical environments27,28. The RSNA and the American College of Radiology have recently expressed concern that many commercially available ML algorithms have failed to demonstrate comprehensive generalizability across heterogeneous patient populations, radiologic equipment, and imaging protocols29.

We believe that we help address some of these concerns by demonstrating high model performance in a large heterogeneous dataset of head CTs performed over the course of one year in a busy neurosurgical and trauma centre in one of the world’s most diverse cities30. This dataset did not exclude any emergency department patients irrespective of image quality and the presence of artifacts (e.g. motion, streak, etc.). Furthermore, the data used to train the ML model was distinct from our institutional dataset which shows that ML model generalizability can be achieved. The level of accuracy demonstrated by the ML model supports its use as a triage system, a second reader, or as part of a quality assurance system.

We considered equivocal cases for ICH as positive as we believe these cases should be flagged for urgent radiologist review. This decision had the impact of decreasing the reported performance of the ML model and an increase in the number of false negative classifications. In terms of false negative cases, a substantial number were thin subdural hematomas. The clinical significance of not detecting these hematomas is not certain but prior studies have suggested that a large proportion of small extra-axial collections do not require intervention31. The false positive rate we encountered translates into less than one case per day which is a trivial increase in radiologist workload and would not have a significant impact in delaying the review of other imaging studies. In fact, many of these false positive cases included mimickers of ICH such as brain neoplasms and diffuse hypoxic ischemic injury that represent significant pathology.

The ML model detected ICH on two scans which were missed by the interpreting radiologist in our subspecialty academic radiology practice environment. With “real-world” error rates in the interpretation of head CTs ranging between 0.8 and 2.0%32,33, ML tools may have an important role to play in quality assurance or as a second reader. This could be particularly important in clinical environments with limited access to neuroradiology expertise.

We have shown that the probability distribution of prediction for both negative and positive EDH cases is very similar. This is consistent with the EDH threshold found by the Bayesian optimizer (0.47), which is very close to 0.5 (i.e. a 50% chance of being EDH). This observation shows the bias of the ML model toward other hemorrhage types due to the limited number of training samples, which is a common problem in training ML models on medical images34. Potential solutions for addressing limited training data include image augmentation through geometrical transformations35 and image synthesis36.

This study has several limitations. The model was trained on the RSNA Intracranial Hemorrhage CT dataset. Images with EDH represent only a tiny fraction of this dataset, which is reflected in the poorer performance of our model in detecting EDH. This issue could be mitigated by augmenting the amount of EDH training data through computer mediated techniques such as synthetic data34 or by pooling data from a larger number of sites. In addition, the expert labelers of the RSNA dataset annotated cases with post-operative collections as positive for ICH, which accounts for the number of false positive cases with post-operative changes in our study. Adding a post-operative label to the training dataset would likely help reduce the number of false positives. ML model training and validation were performed on 5 mm slice thickness images, whereas our clinical test dataset was composed of 2.5 and 5 mm slice thickness images. The model’s performance may be further improved by incorporating prior imaging studies, taking into account the natural evolution of ICH, and refining model training continuously. The ML model was evaluated on historical data rather than on a prospective basis. Ideally, the ML model would be evaluated as part of a prospective controlled trial at multiple institutions with different CT scanners and imaging protocols. If incorporated as part of a triaging system, such a study could help evaluate the impact on report turn-around time and patient outcomes. Traditionally, studies have evaluated model performance on the basis of a confusion matrix, accuracy, sensitivity, and specificity, which fails to take into account the impact of incorrect classification on patient outcomes, particularly since the impact of false negative and false positive predictions can be quite asymmetric. We hope this study can help lay the foundation for future investigators to examine these issues.

Data availability

The publicly available RSNA Intracranial Hemorrhage CT dataset used for model training is available at https://www.kaggle.com/c/rsna-intracranial-hemorrhage-detection/data. The external validation dataset is not publicly available. Model output and ground truth labels are available upon reasonable request by contacting the corresponding author.

Code availability

The source code used in this project can be made available on reasonable request by contacting the corresponding author.

References

Sacco, S., Marini, C., Toni, D., Olivieri, L. & Carolei, A. Incidence and 10-year survival of intracerebral hemorrhage in a population-based registry. Stroke 40, 394–399 (2009).

Flemming, K. D., Wijdicks, E. F. & Li, H. Can we predict poor outcome at presentation in patients with lobar hemorrhage?. Cerebrovasc. Dis. 11, 183–189 (2001).

Asch, C. J. V. et al. Incidence, case fatality, and functional outcome of intracerebral haemorrhage over time, according to age, sex, and ethnic origin: A systematic review and meta-analysis. Lancet Neurol. 9, 167–176 (2010).

Fogelholm, R. et al. Long term survival after primary intracerebral haemorrhage: A retrospective population based study. J. Neurol. Neurosurg. Psychiatry 76, 1534–1538 (2005).

Cordonnier, C., Demchuk, A., Ziai, W. & Anderson, C. S. Intracerebral haemorrhage: Current approaches to acute management. Lancet 392, 1257–1268 (2018).

Abid, K. A. et al. Which factors influence decisions to transfer and treat patients with acute intracerebral haemorrhage and which are associated with prognosis? A retrospective cohort study. BMJ Open 3, e003684 (2013).

Morgenstern, L. B. et al. Guidelines for the management of spontaneous intracerebral hemorrhage. Stroke 41, 2108–2129 (2010).

Dorhout Mees, S. M., Molyneux, A. J., Kerr, R. S., Algra, A. & Rinkel, G. J. E. Timing of aneurysm treatment after subarachnoid hemorrhage. Stroke 43, 2126–2129 (2012).

Glover, M. IV., Almeida, R. R., Schaefer, P. W., Lev, M. H. & Mehan, W. A. Jr. Quantifying the impact of noninterpretive tasks on radiology report turn-around times. J. Am. Coll. Radiol. 14, 1498–1503 (2017).

Jha, S. Value of triage by artificial intelligence. Acad. Radiol. 27, 153–155 (2020).

Arbabshirani, M. R. et al. Advanced machine learning in action: Identification of intracranial hemorrhage on computed tomography scans of the head with clinical workflow integration. npj Digit. Med. 1, 9 (2018).

Prevedello, L. M. et al. Automated critical test findings identification and online notification system using artificial intelligence in imaging. Radiology 285, 923–931 (2017).

Kuo, W., Häne, C., Mukherjee, P., Malik, J. & Yuh, E. L. Expert-level detection of acute intracranial hemorrhage on head computed tomography using deep learning. Proc. Natl. Acad. Sci. U.S.A. 116, 22737–22745 (2019).

Chilamkurthy, S. et al. Deep learning algorithms for detection of critical findings in head CT scans: A retrospective study. Lancet 392, 2388–2396 (2018).

Ojeda, P., Zawaideh, M., Mossa-Basha, M. & Haynor, D. The utility of deep learning: Evaluation of a convolutional neural network for detection of intracranial bleeds on non-contrast head computed tomography studies. in Medical Imaging 2019: Image Processing (eds Angelini, E. D. & Landman, B. A.) (SPIE, 2019).

Beede, E. et al. A human-centered evaluation of a deep learning system deployed in clinics for the detection of diabetic retinopathy. in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (ACM, 2020).

Flanders, A. E. et al. Construction of a machine learning dataset through collaboration: The RSNA 2019 brain CT hemorrhage challenge. Radiol. Artif. Intell. 2, e190211 (2020).

Epstein, C. L. Introduction to the Mathematics of Medical Imaging (Society for Industrial and Applied Mathematics, 2007).

Deng, J. et al. ImageNet: A large-scale hierarchical image database. in 2009 IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2009).

Ke, G. et al. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 30, 3146–3154 (2017).

Mockus, J. Bayesian Approach to Global Optimization: Theory and Applications (Kluwer, 1989).

Selvaraju, R. R. et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 128, 336–359 (2019).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

Kim, D. W., Jang, H. Y., Kim, K. W., Shin, Y. & Park, S. H. Design characteristics of studies reporting the performance of artificial intelligence algorithms for diagnostic analysis of medical images: Results from recently published papers. Korean J. Radiol. 20, 405 (2019).

Loève, M. Probability Theory (Springer, 1977).

Witten, I. H. & Frank, E. Data mining. SIGMOD Rec. 31, 76–77 (2002).

Ginat, D. T. Analysis of head CT scans flagged by deep learning software for acute intracranial hemorrhage. Neuroradiology 62, 335–340 (2019).

Rao, B. et al. Utility of artificial intelligence tool as a prospective radiology peer reviewer—Detection of unreported intracranial hemorrhage. Acad. Radiol. https://doi.org/10.1016/j.acra.2020.01.035 (2020).

Fleishon, H. B. & Haffty, B. G. Docket no. fda-2019-n-5592 “public workshop—Evolving role of artificial intelligence in radiological imaging”; comments of the American college of radiology (2020).

Qadeer, M. Ethnic Segregation in a Multicultural City in Desegregating the City: Ghettos, Enclaves, and Inequality (State University of New York Press, 2005).

Bajsarowicz, P. et al. Nonsurgical acute traumatic subdural hematoma: What is the risk?. JNS 123, 1176–1183 (2015).

Wu, M. Z., McInnes, M. D. F., Blair Macdonald, D., Kielar, A. Z. & Duigenan, S. CT in adults: Systematic review and meta-analysis of interpretation discrepancy rates. Radiology 270, 717–735 (2014).

Babiarz, L. S. & Yousem, D. M. Quality control in neuroradiology: Discrepancies in image interpretation among academic neuroradiologists. AJNR Am. J. Neuroradiol. 33, 37–42 (2011).

Salehinejad, H., Colak, E., Dowdell, T., Barfett, J. & Valaee, S. Synthesizing chest X-ray pathology for training deep convolutional neural networks. IEEE Trans. Med. Imaging 38, 1197–1206 (2019).

Salehinejad, H., Valaee, S., Dowdell, T. & Barfett, J. Image Augmentation Using Radial Transform for Training Deep Neural Networks. in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2018). https://doi.org/10.1109/icassp.2018.8462241.

Salehinejad, H., Valaee, S., Dowdell, T., Colak, E. & Barfett, J. Generalization of deep neural networks for chest pathology classification in X-rays using generative adversarial networks. in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2018). https://doi.org/10.1109/icassp.2018.8461430.

Acknowledgements

The authors would like to acknowledge the contributions of Blair Jones and Dr. Zsolt Zador.

Author information

Contributions

Guarantor: E.C. had full access to the study data and takes responsibility for the integrity of the complete work and the final decision to submit the manuscript. Study concept and design: H.S., E.C., M.M. Acquisition, analysis, or interpretation of data: All. Drafting of the manuscript: H.S., E.C. Critical revision of the manuscript: All. Obtaining funding: N/A. Administrative or technical support: H.S., E.C., H.M.L. Supervision: E.C., M.M.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Salehinejad, H., Kitamura, J., Ditkofsky, N. et al. A real-world demonstration of machine learning generalizability in the detection of intracranial hemorrhage on head computerized tomography. Sci Rep 11, 17051 (2021). https://doi.org/10.1038/s41598-021-95533-2

DOI: https://doi.org/10.1038/s41598-021-95533-2
