Abstract

Dialysis adequacy is an important survival indicator in patients with chronic hemodialysis. However, there are inconveniences and disadvantages to measuring dialysis adequacy by blood samples. This study used machine learning models to predict dialysis adequacy in chronic hemodialysis patients using repeatedly measured data during hemodialysis. This study included 1333 hemodialysis sessions corresponding to the monthly examination dates of 61 patients. Patient demographics and clinical parameters were continuously measured from the hemodialysis machine; 240 measurements were collected from each hemodialysis session. Machine learning models (random forest and extreme gradient boosting [XGBoost]) and deep learning models (convolutional neural network and gated recurrent unit) were compared with multivariable linear regression models. The mean absolute percentage error (MAPE), root mean square error (RMSE), and Spearman’s rank correlation coefficient (Corr) for each model using fivefold cross-validation were calculated as performance measurements. The XGBoost model had the best performance among all methods (MAPE = 2.500; RMSE = 2.906; Corr = 0.873). The deep learning models with convolutional neural network (MAPE = 2.835; RMSE = 3.125; Corr = 0.833) and gated recurrent unit (MAPE = 2.974; RMSE = 3.230; Corr = 0.824) had similar performances. The linear regression models had the lowest performance (MAPE = 3.284; RMSE = 3.586; Corr = 0.770) compared with other models. Machine learning methods can accurately infer hemodialysis adequacy using continuously measured data from hemodialysis machines.

Similar content being viewed by others

Introduction

Dialysis adequacy is an important survival indicator in patients with chronic hemodialysis1,2. Recent guidelines recommend that the dialysis dose should be adjusted using a blood test at least once per month and suggest a target single pool Kt/V (spKt/V) of 1.4 per hemodialysis session for patients treated thrice weekly3. Although some hemodialysis devices estimate spKt/V using sodium clearance, it is limited to devices from specific manufactures and cannot be applied to all equipment. In contrast, the urea reduction ratio (URR) is easily calculated and used as a standard measurement for the delivered hemodialysis dose4,5. However, there are disadvantages; it uses needles, exposes the medical staff and patients to blood, and has costs associated with processing and analyzing blood samples. Additionally, hemodialysis sessions are frequently terminated for reasons such as intradialytic hypotension, vascular access problems, and poor compliance. Therefore, URR is not easily measured regularly in practice.

During hemodialysis, several clinical parameters such as blood flow, ultrafiltration and dialysate flow rates, vessel pressure, temperature, and bicarbonate and sodium levels are continuously generated. Monitoring and recording these parameters in real-time is possible with the commercial software provided with the hemodialysis machine. Considering urea kinetics, some of these measurements, the type of dialyzer, and the dialysis duration may be related to dialysis adequacy. However, the relationship between these measurements and dialysis adequacy is not simple, and models using machine learning (ML) rather than traditional statistical models may be more appropriate for predicting dialysis adequacy. Artificial intelligence has already been used in the healthcare field for medical imaging, natural language processing, and genomics6. Recently, studies also used ML or deep learning (DL) (a subfield of ML) to investigate kidney disease7.

In this study, we hypothesized that the ML technique could predict dialysis adequacy in chronic hemodialysis patients using clinical demographics and repeated measurements obtained during hemodialysis sessions. This study aimed to build models that predict URR based on repeated measurement data from patients during hemodialysis.

Results

Hemodialysis sessions

This study included 1333 hemodialysis sessions corresponding to the monthly examination dates of 61 patients where URR was measured. The mean blood flow was 265.2 mL/min (SD, 41.4), the mean dialysate flow was 571.0 mL/min (SD, 116.3), the mean dialyzer surface area was 1.8 m2 (SD, 0.2), the mean URR was 77.7% (SD, 5.3), and the mean total ultrafiltration volume was 2209.0 mL (SD, 826.5) (Table 1). The fivefold cross-validation method divided the data into five approximately equal-sized portions (the minimum and the maximum number of participants was 12 and 13, respectively). The total number of data points was 319,920.

Model performances

Table 2 summarizes the MAPE, RMSE, and Corr performance measurements for each model using the fivefold cross-validation. For the linear regression model, the models with time-fixed and time-varying covariates had better performances than the model with fixed covariates alone (MAPE = 3.546; RMSE = 3.785; Corr = 0.751). Among the time-varying covariates, the blood flow rate measurement improved performance the most (MAPE = 3.329; RMSE = 3.648; Corr = 0.766). The linear regression model with all covariates had the best performance among the linear regression models (MAPE = 3.284; RMSE = 3.586; Corr = 0.770). However, the linear regression models had a lower performance than the ML and DL models. The ML methods had better performances than the other methods, and the XGBoost model had the best performance among the ML methods (MAPE = 2.500; RMSE = 2.906; Corr = 0.873). The DL models with the convolutional neural network (MAPE = 2.835; RMSE = 3.125; Corr = 0.833) and gated recurrent unit (MAPE = 2.974; RMSE = 3.230; Corr = 0.824) had similar performances. The detailed relationship between URR and the predicted values for each model are depicted using scatter plots in Fig. 1. The results of other hyperparemeter settings are summarized in Supplementary Table S1.

Scatter plots of URR and the predicted value for each model using Spearman’s rank correlation coefficient and the best fit line. (A) Linear regression (R = 0.771). (B) Random forest (R = 0.864). (C) XGBoost (R = 0.873). (D) Deep learning with convolutional neural network (R = 0.834). URR urea reduction ratio, XGBoost extreme gradient boosting.

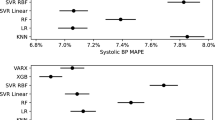

Feature importance

Feature importance was calculated for the random forest and XGBoost models to investigate which covariates affect the URR prediction the most (Fig. 2). Pre-dialysis weight was the most important covariate for predicting URR in both models, followed by height and gender. Artificial features extracted by blood flow rate (i.e., the mean and intercept of the linear regression) had higher importance than other artificial features.

Sensitivity analyses

Sensitivity analyses were conducted to confirm the fivefold cross-validation results, which were performed in units of sessions instead of patients. After randomizing the sessions, the linear regression, ML, and DL models were trained, and the sensitivity analysis results were similar to the primary results (Table 3). The ML and DL models still performed better than the linear regression model. Sensitivity analysis was also performed on data that eliminated URR outliers to determine how outliers affected model fitting. Sessions with URR values greater than the 95th percentile and less than the 5th percentile were removed. The model performances are summarized in Table 3. The models had better performances after eliminating outliers. However, the performance differences among models were similar before and after outlier removal.

Discussion

Current guidelines recommend checking dialysis adequacy once per month because dialysis adequacy is related to the prognosis of end-stage kidney disease patients3. However, determining adequacy is challenging owing to the cost and blood exposure. The prediction model used parameters that determine hemodialysis efficiency, such as blood flow and dialysate flow rates, dialysis time, and the dialyzer type8,9,10. However, it is difficult to predict dialysis adequacy using these parameters through traditional statistical methods as the relationships between these parameters and urea clearance are not linear; they frequently change during hemodialysis with fluctuations in blood pressure or other symptoms. This study showed that ML and DL models using continuous measurements obtained during hemodialysis predicted dialysis adequacy. Furthermore, there are significant implications in repeated measurements from hemodialysis machines for making such predictions. For example, there is no additional cost because the adequacy predictions are based on measurements obtained from any hemodialysis machine, making this approach useful when remote monitoring is required, such as with at-home hemodialysis.

DL has been mainly used for image processing, although recently, DL has also been used for predicting laboratory results or the short-term prognosis of patients based on continuously measured data. Additionally, large-scale intensive care unit datasets, such as the Medical Information Mart for Intensive Care III11 and eICU Collaborative Research Database12, and intra- or post-operative vital sign data are now available for use in research13. Various studies have also used DL to investigate hemodialysis. Akl et al.14 suggested decades ago that the neural network can achieve artificial-intelligent dialysis control, and studies on intradialytic hypotension predictions15,16,17,18, the optimal dry weight setting19, and anemia control20 for hemodialysis have been presented. DL in research has also expanded to other kidney diseases to predict acute kidney injury outcomes21,22 and hyperkalemia23. Despite challenges, such as data cleansing costs, the required modeling resources, and algorithm validations, the DL approach is expected to improve the prognosis of hemodialysis patients in the future.

There are some limitations to our study. First, despite a relatively large number of hemodialysis sessions, this study was conducted on a small number of patients. For this reason, DL models might show lower performances than random forest or XGBoost models in this study. A large, prospective study is needed to validate our model. Second, some factors influencing the blood urea nitrogen level during hemodialysis were not considered (e.g., the catabolic status, the exact residual renal function, and access recirculation). However, this study was based on outpatient clinic data with few acutely ill patients, and ultrafiltration (a factor affecting the blood urea nitrogen level) was included in our model. Therefore, the effect of the catabolic status was minimized. Finally, URR has been used as a standard method to measure the hemodialysis dose4. However, the current guidelines do not recommend using URR for hemodialysis adequacy. Nevertheless, URR is widely used in clinics because it is easy to calculate and has a similar sensitivity to urea reduction compared with other methods24. Models that predict spKt/V require verification in the future.

In conclusion, ML can accurately infer hemodialysis adequacy through repeatedly measured data during hemodialysis sessions. We expect to be able to develop personalized hemodialysis profile recommendation models through prospective data collection soon.

Materials and methods

Study population

The data were extracted from the Severance Hospital hemodialysis database, which stores information about each hemodialysis session. A total 21,004 sessions of 75 outpatients aged over 19 which were automatically recorded in the Therapy Data Management System from May 2015 to September 2020 were screened. Among them, 61 patients who were examined for dialysis adequacy regularly were finally selected and clinical information including dialysis adequacy was additionally collected. The study was performed following the Declaration of Helsinki principles, and the Severance Hospital institutional review board approved this study (no. 4-2021-0056) and waived informed consent as only de-identified, previously collected data was accessed.

Data collection and measurements

Demographic and anthropometric data (including sex and age) were collected corresponding to the hemodialysis date from electric medical records. Blood pressure, the vascular access type (arteriovenous fistula, arteriovenous graft, and catheter), and the dialyzer type (surface area) were recorded at the initiation of each hemodialysis session. Data, including blood flow and ultrafiltration rates, bicarbonate and sodium levels, dialysate flow, vein and artery pressures, and the dialysate temperature, were measured every minute from the start of each session unless problems or interventions occurred. Monitoring software linked to each dialysis machine recorded the hemodialysis measurements in real-time and collected 240 measurements (about 4 h) from each session; missing values were completed using an interpolation method. URR (the blood urea concentration decrease [%] during hemodialysis) was measured as an indicator of dialysis adequacy. All hemodialysis sessions included in this study used the Fresenius 5008S (Fresenius Medical Care, Bad Homburg, Germany) hemodialysis device.

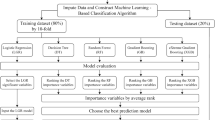

Model building

Linear regression was considered the base model for performance comparisons with ML and DL algorithms. Random forest25 and XGBoost26 were chosen for the ML algorithms. The convolutional neural network and gated recurrent unit27 architectures were chosen for the DL algorithms to extract features from time-varying covariates. The DL algorithms were trained with a batch size of 128, Adam optimizer28 and the root mean squared error (RMSE) loss function. The detailed architectures of the DL algorithms are illustrated in Supplementary Figure S1 and Supplementary Figure S2. The hyperparameters were optimized to minimize the RMSE through a random search with fivefold cross-validation in ML algorithms. All selected hyperparameters are described in Supplementary Table S1.

Covariates were normalized to have values between 0 and 1 in the DL algorithms, which can automatically extract features from time-varying covariates. In contrast, the linear regression and ML algorithms require a data pre-processing step to extract artificial features from time-varying covariates. Thus, the means and standard deviations (SDs) from time-varying covariates by session for the linear regression and ML algorithms were extracted, and then, a linear regression for the time-varying covariates by session was implemented. From this linear regression, the intercept, coefficient, and mean squared error (MSE) were extracted.

Statistical analyses

Descriptive statistics were used to describe covariates. Categorical variables were expressed as the number of patients and percentages, and continuous variables were presented as the mean and SD. The mean absolute percentage error (MAPE), RMSE, and Spearman’s rank correlation coefficient (Corr) were calculated for performance evaluation using fivefold cross-validation. Two sensitivity analyses were also performed for result confirmation. First, each session was regarded as belonging to a different person. Then, the main analysis was repeated after eliminating URR outliers. Analyses, including the linear regression and ML algorithms, were performed using R software (version 3.6.1; www.r-project.org; R Foundation for Statistical Computing, Vienna) with the authors’ own program written code using the XGBoost and ranger packages. Python software (version 3.7; www.python.org; Python Software Foundation, Wilmington) was used with the Keras library for the DL algorithms. A computer with a Xeon processor (24 core, Intel, USA) and Quadro RTX 6000 (Nvidia, USA) was used for all analyses.

Data availability

The datasets generated during the current study are not publicly available due to the data security requirement of our hospital.

References

Gotch, F. A. & Sargent, J. A. A mechanistic analysis of the National Cooperative Dialysis Study (NCDS). Kidney Int. 28, 526–534. https://doi.org/10.1038/ki.1985.160 (1985).

Lowrie, E. G., Laird, N. M., Parker, T. F. & Sargent, J. A. Effect of the hemodialysis prescription of patient morbidity: Report from the National Cooperative Dialysis Study. N. Engl. J. Med. 305, 1176–1181. https://doi.org/10.1056/NEJM198111123052003 (1981).

National Kidney, F. KDOQI clinical practice guideline for hemodialysis adequacy: 2015 update. Am. J. Kidney Dis. 66, 884–930. https://doi.org/10.1053/j.ajkd.2015.07.015 (2015).

Owen, W. F. Jr., Lew, N. L., Liu, Y., Lowrie, E. G. & Lazarus, J. M. The urea reduction ratio and serum albumin concentration as predictors of mortality in patients undergoing hemodialysis. N. Engl. J. Med. 329, 1001–1006. https://doi.org/10.1056/nejm199309303291404 (1993).

Sherman, R. A., Cody, R. P., Rogers, M. E. & Solanchick, J. C. Accuracy of the urea reduction ratio in predicting dialysis delivery. Kidney Int. 47, 319–321. https://doi.org/10.1038/ki.1995.41 (1995).

Esteva, A. et al. A guide to deep learning in healthcare. Nat. Med. 25, 24–29. https://doi.org/10.1038/s41591-018-0316-z (2019).

Niel, O. & Bastard, P. Artificial intelligence in nephrology: Core concepts, clinical applications, and perspectives. Am. J. Kidney Dis. 74, 803–810. https://doi.org/10.1053/j.ajkd.2019.05.020 (2019).

Hassell, D. R., van der Sande, F. M., Kooman, J. P., Tordoir, J. P. & Leunissen, K. M. Optimizing dialysis dose by increasing blood flow rate in patients with reduced vascular-access flow rate. Am. J. Kidney Dis. 38, 948–955. https://doi.org/10.1053/ajkd.2001.28580 (2001).

Ouseph, R. & Ward, R. A. Increasing dialysate flow rate increases dialyzer urea mass transfer-area coefficients during clinical use. Am. J. Kidney Dis. 37, 316–320. https://doi.org/10.1053/ajkd.2001.21296 (2001).

Leon, J. B. & Sehgal, A. R. Identifying patients at risk for hemodialysis underprescription. Am. J. Nephrol. 21, 200–207. https://doi.org/10.1159/000046248 (2001).

Johnson, A. E. et al. MIMIC-III, a freely accessible critical care database. Sci. Data 3, 160035. https://doi.org/10.1038/sdata.2016.35 (2016).

Pollard, T. J. et al. The eICU Collaborative Research Database, a freely available multi-center database for critical care research. Sci. Data 5, 180178. https://doi.org/10.1038/sdata.2018.178 (2018).

Lee, H. C. & Jung, C. W. Vital recorder-a free research tool for automatic recording of high-resolution time-synchronised physiological data from multiple anaesthesia devices. Sci. Rep. 8, 1527. https://doi.org/10.1038/s41598-018-20062-4 (2018).

Akl, A. I., Sobh, M. A., Enab, Y. M. & Tattersall, J. Artificial intelligence: A new approach for prescription and monitoring of hemodialysis therapy. Am. J. Kidney Dis. 38, 1277–1283. https://doi.org/10.1053/ajkd.2001.29225 (2001).

Barbieri, C. et al. Development of an artificial intelligence model to guide the management of blood pressure, fluid volume, and dialysis dose in end-stage kidney disease patients: Proof of concept and first clinical assessment. Kidney Dis. (Basel) 5, 28–33. https://doi.org/10.1159/000493479 (2019).

Chen, J.-B., Wu, K.-C., Moi, S.-H., Chuang, L.-Y. & Yang, C.-H. Deep learning for intradialytic hypotension prediction in hemodialysis patients. IEEE Access 8, 82382–82390 (2020).

Lin, C. J. et al. Intelligent system to predict intradialytic hypotension in chronic hemodialysis. J. Formos. Med. Assoc. 117, 888–893. https://doi.org/10.1016/j.jfma.2018.05.023 (2018).

Lee, H. et al. Deep learning model for real-time prediction of intradialytic hypotension. Clin. J. Am. Soc. Nephrol. https://doi.org/10.2215/CJN.09280620 (2021).

Niel, O. et al. Artificial intelligence outperforms experienced nephrologists to assess dry weight in pediatric patients on chronic hemodialysis. Pediatr. Nephrol. 33, 1799–1803. https://doi.org/10.1007/s00467-018-4015-2 (2018).

Barbieri, C. et al. An international observational study suggests that artificial intelligence for clinical decision support optimizes anemia management in hemodialysis patients. Kidney Int. 90, 422–429. https://doi.org/10.1016/j.kint.2016.03.036 (2016).

Tomasev, N. et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 572, 116–119. https://doi.org/10.1038/s41586-019-1390-1 (2019).

Park, S. et al. Intraoperative arterial pressure variability and postoperative acute kidney injury. Clin. J. Am. Soc. Nephrol. 15, 35–46. https://doi.org/10.2215/CJN.06620619 (2020).

Galloway, C. D. et al. Development and validation of a deep-learning model to screen for hyperkalemia from the electrocardiogram. JAMA Cardiol. 4, 428–436. https://doi.org/10.1001/jamacardio.2019.0640 (2019).

Uhlin, F., Fridolin, I., Magnusson, M. & Lindberg, L. G. Dialysis dose (Kt/V) and clearance variation sensitivity using measurement of ultraviolet-absorbance (on-line), blood urea, dialysate urea and ionic dialysance. Nephrol. Dial. Transplant. 21, 2225–2231. https://doi.org/10.1093/ndt/gfl147 (2006).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Chen, T. & Guestrin, C. Xgboost: A scalable tree boosting system in Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining, pp. 785–794 (2016).

Cho, K. et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv:1406.1078 (2014).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv:1412.6980 (2014).

Acknowledgements

This study was supported by a Severance Hospital Research fund for Clinical excellence (SHRC) (C-2020-0011).

Author information

Authors and Affiliations

Contributions

H.W.K. and B.S.K. contributed to the research idea and the study design; H.W.K., A.K., and J.Y.K. were involved in data acquisition and cleansing; H.W.K. and S.J.H. contributed to the statistical analyses and model building. B.S.K. and C.M.N. were responsible for data analysis and interpretation and supervision or mentorship. All authors approved the final version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kim, H.W., Heo, SJ., Kim, J.Y. et al. Dialysis adequacy predictions using a machine learning method. Sci Rep 11, 15417 (2021). https://doi.org/10.1038/s41598-021-94964-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-94964-1

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.