Abstract

In this paper, we proposed a multi-method modeling approach to community-level spreading of COVID-19 disease. Our methodology was composed of interconnected age-stratified system dynamics models in an agent-based modeling framework that allowed for a granular examination of the scale and severity of disease spread, including metrics such as infection cases, deaths, hospitalizations, and ICU usage. Model parameters were calibrated using an optimization technique with an objective function to minimize error associated with the cumulative cases of COVID-19 during a training period between March 15 and October 31, 2020. We outlined several case studies to demonstrate the model’s state- and local-level projection capabilities. We further demonstrated how model outcomes could be used to evaluate perceived levels of COVID-19 risk across different localities using a multi-criteria decision analysis framework. The model’s two, three, and four week out-of-sample projection errors varied on a state-by-state basis, and generally increased as the out-of-sample projection period was extended. Additionally, the prediction error in the state-level projections was generally due to an underestimation of cases and an overestimation of deaths. The proposed modeling approach can be used as a virtual laboratory to investigate a wide range of what-if scenarios and easily adapted to future high-consequence public health threats.

Similar content being viewed by others

Introduction

During the current COVID-19 pandemic, global efforts have taken place to contain the spread of the virus and develop effective non-therapeutic (e.g., social distancing, partial and full lockdowns) and therapeutic treatments (e.g., vaccination). As the COVID-19 pandemic has spread across the globe since early 2020, researchers have identified gaps in data and our understanding of ways in which the disease spreads within and between communities including its potential impacts on general and at-risk populations1,2.

Computational modeling has been long employed to further increase our understanding of complex infectious diseases as well as their development, spread dynamics, and potential treatments3. Using computational modeling, we have been able to identify common patterns in infectious diseases allowing us to leverage lessons learned through investigating past widespread disease events to predict who may get infected, where vaccination efforts should be prioritized, and how to limit the spread of infectious diseases in future events4,5,6,7.

Two methods, System Dynamics (SD) and Agent-Based Modeling (ABM), have been frequently used in recent years to investigate the complex nature of infectious diseases and their potential containment strategies. SD has a long history of being applied to the study of infectious disease epidemiology. This method operates at a high level of abstraction by compartmentalizing the population into different disease stages such as Susceptible (S), Infected (I), and Recovered (R), among others while assuming population homogeneity within each compartment8,9. Previous studies have identified limitations of SD in modeling infectious diseases such as inability to model multi-strain infections, deterministic nature, inability to model time-varying infectivity, and assumptions regarding population homogeneity, among others10. With the boom in computer processing capability in the twenty-first century, ABM has been recently used in modeling infectious disease dynamics11,12. ABM uses a bottom-up approach, where a complex dynamic system is described as interacting objects with their own behaviors such that systemic behavior can potentially emerge as a summary of the individual actions of agents13,14. ABM for infectious diseases focuses on incorporating individual information such as personal interactions, movements, and health information in an attempt to provide a more granular profile of disease spread as compared to the homogenous population of SD models. However, ABM is not without its limitations: (1) model parameters (e.g., reproduction number for infectious diseases) are often difficult to quantify; (2) model validation can be difficult to assess, particularly when modeling unobserved associations15; (3) ABM can become exceedingly computationally intensive when applied to large populations16; and (4) lack of individualized data may result in increased model assumptions and uncertainty17.

In this paper, we propose a multi-method, also known as hybrid, modeling approach to community-level infectious disease spread. The idea of multi-method modeling is to integrate different methods of computational modeling to overcome the limitations of individual methods and get the most from each one18,19,20. Our Multi-Method Community Disease Risk Model, hereafter referred to as M2-CDRM, combines the advantages of SD and ABM, allowing the simulation of spatially explicit scenarios representing future states of disease transmission within different communities and testing risk management policies across a wide range of scenarios using what-if analysis. The model integrates multiple layers of data including population demographics, observed cases of illness and death, and hospital demands at the local county-level within different states to make location-specific predictions about COVID-19 illness and death. M2-CDRM can be used as a virtual laboratory to: (1) identify “hot spots” of potential areas (e.g., counties) with highest levels of infected individuals within the United States that can potentially act as infection hubs during the ongoing pandemic; (2) examine population-specific characteristics (e.g., gender, age) that can result in disproportionate distribution of mortality and morbidity in cases across the United States; (3) prioritize counties based on their perceived disease risks considering multiple decision criteria; and (4) evaluate the effectiveness of candidate mitigation options (e.g., social distancing, wide-spread testing) aimed at reducing the likelihood of disease transmission within different communities. This paper outlines a case study of our proposed approach focused on modeling COVID-19 at a community level in the United States. Additionally, we introduced an example of how this model could be potentially used in conjunction with a Multi-Criteria Decision Analysis (MCDA) framework to assess and prioritize different communities in terms of their perceived risk of COVID-19.

Methods

Model overview

We developed M2-CDRM as a highly customizable, evidence-based, and data-driven model by integrating an SD modeling approach within an ABM framework to study the COVID-19 transmission on multiple levels of aggregation in the United States (Fig. 1). The model is implemented in AnyLogic (Professional Edition, Version: 8.5.2, Link: https://www.anylogic.com), a modeling framework that integrates support for SD, ABM, and other dynamic computational methods. M2-CDRM included all 50 states as well as their individual counties with a simulation period between March 15 and December 31st, 2020.

Disease transmission models

With COVID-19, different subpopulations have been shown to be more or less susceptible, more or less likely to be infectious, and more or less likely to recover from the disease21,22,23. Therefore, treating the entire population with the same static assumptions about these rates can cause decision makers to miss key aspects of the disease’s likely trajectory. M2-CDRM addresses this limitation by including five separate SD models to simulate COVID-19 disease dynamics in distinct age cohorts within each individual county: 0–17, 18–44, 45–64, 65–74, and 75 + years of age. While these cohorts were initially selected to stratify the population based on their ages, the model design is flexible and can accommodate any age stratification. Each SD model was defined using eight compartments, including Susceptible (S), Exposed (E), Asymptomatic Infection (AI), Mild Infection (MI), Severe Infection (SI), Critical Infection (CI), Recovered (R), and Death (D). In each model, severe infection and critical infection represented general admission to the hospital as well as ICU admission, respectively. During the early stage of the pandemic, the confirmed COVID-19 case counts in the U.S. did not capture the total burden of the pandemic. This was primarily because testing was restricted to individuals with moderate to severe symptoms due to limited test availability24. Therefore, in order to correct for biased testing and imperfect diagnostic accuracy and provide a more realistic assessment of COVID-19 burden, we further adjusted simulated infection cases (I) by an under-reporting factor.

For each state, the spread of COVID-19 in county j and for age cohort i was modeled based on the following set of differential equations (Fig. 2):

where Si,j represents susceptible population in age cohort i (i = 1,…,5) in county j, Sj(0) represents initial susceptible population in county j across all age cohorts, Ei,j represents exposed population in age cohort i in county j, Ii,j represents symptomatic infectious population in age cohort i in county, AIi,j represents asymptomatic infectious population in age cohort i in county j, Hi,j represents hospitalized population (severe infection) in age cohort i in county j, Ci,j represents critically infected population (ICU admission) in age cohort i in county j, Ri,j represents recovered (non-infectious) population in age cohort i in county j, Di,j represents deceased population in age cohort i in county j, IP represents incubation period (days), FRAI represents fraction of asymptomatic population, MIPH represents duration of mild infection prior to hospitalization (days), MIP represents duration of mild infection prior to recovery (days), AIP represents duration of asymptomatic infection (days), HRi represents hospitalization rate for age cohort i (i = 1,…,5), URF represents under-reporting factor of symptomatic infections, SIPICU represents severe infection period prior to transfer to ICU (days), SIP represents severe infection period prior to recovery (days), CRi represents critical infection rate in age cohort i (i = 1,…,5), CIPD represents critical infection period prior to death (days), CIP represents critical infection period prior to recovery (days), FRi represents fatality rate in age cohort i (i = 1,…,5), APIi represents average period of infectiousness in age cohort i (i = 1,…,5), and REt,j represents effective reproduction number at time t in county j.

ABM framework to connect SD models

Within each county, we defined population age cohorts (0–17, 18–44, 45–64, 65–74, 75 +) as individual agents. Each of these individual agents was then coupled with all other agents within the same county with explicit interactivity patterns. By focusing on micro-level interactions, this framework was able to explain emergent patterns such as transient dynamics on a system level and identify important mechanisms, taking into account heterogeneity of entities (e.g., individual age cohorts as agents) and spatial and temporal heterogeneity of processes (e.g., variability in disease dynamics across different counties). Additionally, the ABM structure allowed for the possibility of advanced data inputs such as age-specific reproduction numbers, interaction, and mobility patterns across age cohorts and counties, county- and age-specific adherence to social distancing policies, and what-if analysis such as customizable vaccine distribution networks. Outputs from our framework were timeseries of system-level variables further stratified by age cohorts, counties and states: (1) number of infected; (2) number of hospitalized; (3) number of ICU admissions; (4) number of deaths; and (5) hospital utilization considering available general and ICU beds in different counties.

Effective reproduction number

Since a population will rarely be totally susceptible to an infection in the real world, the effective reproduction number, REt, and not the basic reproduction number, R0, should be used as a measure of disease transmissibility at time t25. REt represents the expected number of new infections caused by an infectious individual in a population where some individuals may no longer be susceptible. Estimates of REt are typically used to assess how changes in policy, population immunity, and population behaviors, among other factors, have affected transmission at specific points in time26,27,28,29.

Using observed number of daily cases of COVID-19 in county j, we calculated timeseries of REt,j based on the methodology discussed in Cori et al. and implemented in the R-package EpiEstim30. This package implements a Bayesian approach for quantifying transmissibility over time during an epidemic and reports a 95% confidence interval for REt. More specifically, it allows estimating the instantaneous and case reproduction numbers during an epidemic for which a timeseries of incidence is available and the distribution of the serial interval (time between symptoms onset in a primary case and symptoms onset in secondary case) is more or less precisely known. To calculate REt,j, we assumed the median, mean, and standard deviation of the serial interval were 4.0, 4.7, and 2.9 days, respectively31. REt,j was calculated as:

where t0,j represents time associated with the first observed case of illness in county j, tEC,j represents time associated with the end of model calibration period in county j (i.e., the last date with observed case of illness), and \(RE_{t,j}^{*}\) represents output from the EpiEstim package. \(\beta_{0,j}\) and \(\beta_{1,j}\) are coefficients from fitting an exponential regression model to the estimated \(RE_{t,j}^{*}\) values in the last two weeks, assuming that \(RE_{t,j}^{*}\) continue the same trend observed in the past two weeks. The minimum value of 0.3 represents the estimated reproduction number in the City of Wuhan after the lockdown of the region26.

Calibration of model parameters

Model calibration is the process of identifying the model parameter configurations that best explain the observed real-time values (e.g., observed cases of illness). While simple models with fewer parameters can be potentially calibrated by manually adjusting parameter values, calibration of complex models, such as M2-CDRM, requires extensive computational effort and resources. We used a simulation-based “optimization” method to calibrate selected model parameters by estimating their values and plausible ranges such that the model outcomes would closely match existing historic data of number of observed cases of illness.

The optimization engine in AnyLogic automatically finds the best values for different model parameters with respect to certain pre-defined constraints and requirements using the OptQuest Engine that incorporates metaheuristics to guide its search algorithm toward better solutions32. Inputs selected for model calibration including their ranges of plausible values are listed in Table 1. We performed the model calibration at both state and individual county levels by matching the number of reported cases of COVID-19 with model predictions, while defining constraints with respect to the expected number of deaths in the state (or individual counties). Considering October 31st, 2020 as the model training end date, we used a weighted L1 norm equation as:

where Y = {Yi} (i = 1,…,T) is the target timeseries until day T (i.e., October 31st, 2020), X = {Xi} (i = 1,…,T) is the model output (i.e., number of cases of illness) and a is the decay factor. We used a = 0.4 in the model optimization as reported by Venkatramanan et al.33.

Multi-criteria framework for prioritizing counties based on the perceived risk of COVID-19

We used an MCDA framework to generate risk maps for individual states that highlight counties where surveillance and disease control measures could be potentially targeted based on the perceived levels of COVID-19 risks. The methodological steps required in our MCDA approach encompassed: (i) selection of decision criteria; (ii) definition of criterion measures; (iii) definition of scores assigned to each decision criterion representing low (1), medium (3), and high (9) perceived levels of risk; (iv) attribution of weights to decision criteria and (v) aggregation of risk scores across all selected decision criteria to generate the spatial maps for perceived levels of risk in each state.

Decision criteria, measures, and risk scores for ranking individual counties in each state are provided in Table 2 and briefly discussed in the following.

-

New daily cases (NDC) this criterion, comparable to incidence in epidemiology represents the incident number of COVID-19 cases in a community. We considered a three-day average of the predicted new cases (across all age cohorts) and a cut-off value of less than five new cases per 100,000 residents to score this criterion. A risk score of low (1), medium (3), or high (9) was assigned to this criterion in each county if the cut-off value was met within 21 days since the training end date (October 31st, 2020), after 21 days since the training end date but before the end of the simulation period (December 31st, 2020), or was never met during the simulation period, respectively.

-

Decline in new daily deaths (NDD) we assumed that a county must experience a sustained decline in the three-day rolling average of predicted daily hospital deaths over the course of a 21-day period to be considered low risk. Alternatively, counties that have seen few COVID cases overall would satisfy this metric if the three-day rolling average of daily new hospital deaths has never exceeded one. We used three-day average of the projected number of deaths across all age cohorts in each county and scored the county as low (1), medium (3), or high (9) if the cut-off value was met within 21 days since the training end date, after 21 days since the training end date but before the end of the simulation period, or was never met during the simulation period, respectively.

-

New hospitalizations (NH) In addition to monitoring the decline in disease trajectory, it is important to monitor the absolute level of infection in each county. It is possible for a county that has seen a high level of infections to see a sustained decline in hospitalizations and deaths over a 21-day period while still having an underlying infection rate that is too high. Using the total number of projected new hospitalization cases across all age cohorts, each county needed to have fewer than two new hospitalizations per 100,000 residents to be considered low risk. We used three-day average of the projected number of new hospitalizations across all age cohorts in each county and scored the county as low (1), medium (3), or high (9) if the cut-off value was met within 21 days since the training end date, after 21 days since the training end date but before the end of the simulation period, or was never met during the simulation period, respectively.

-

ICU bed utilization (BU) It is critical that regional healthcare systems have sufficient capacity for ICU beds. Taking into account the projected number of critically infected patients in each county across all ages and the ICU bed capacity in each county, we scored each county as low (1), medium (3), or high (9) if the cut-off value of 50% was met within 21 days since the training end date, after 21 days since the training end date but before the end of the simulation period, or was never met during the simulation period, respectively.

To simplify the scoring approach, we assigned equal weights to selected decision criteria and calculated aggregate risk scores across all decision criteria for different counties (RSi):

Summary of the model inputs

Data used in M2-CDRM came from a variety of sources, grouped into three categories of disease impact, demographic data, and hospital resources. Summary data used in the model, including data sources is listed in Table 3.

Results

State-level predictions

Tables 4 and 5 summarize the model predictions for number of COVID-19 cases aggregated across all age cohorts in the 20 most populous states in the United States. We reported a range of values for two-week (November 14, 2020), three-week (November 21, 2020), and four-week (November 28, 2020) out-of-sample model predictions based on the 95% confidence intervals reported for REt. We also reported the cumulative observed values for COVID-19 cases by selected dates and % error calculated by comparing the observed values with mean predictions. For each of these states, selected model parameters (listed in Table 1) were calibrated to replicate the observed cumulative number of cases between March 15 and October 31, 2020 across the whole state. We further used the state-wide calibrated model parameters for all individual counties in the selected state assuming no change in disease epidemiology in different localities (e.g., no change in critical infection rate for a particular age cohort across different counties in California). Summary results typically showed underestimated number of COVID-19 cases with variability in % error across different states. Furthermore, we observed relative decrease in model accuracy when period of out-of-sample predictions was increased from two to four weeks. For example, average % error for two-week out-of-sample prediction of cases was − 6.7% across all 20 states with a range of values between − 1.1% (California) and -16.9% (Michigan). We observed lower accuracy for the four-week out-of-sample case predictions with an average % error value of − 16.2% across all 20 states and a range of values between − 7.1 and − 32.4% for California and Michigan, respectively. Model results showed similar patterns for predicted number of COVID-19 deaths across these selected states (Table 5); however, the prediction accuracies were typically higher for cumulative number of deaths by selected dates. For example, average % error for two-week out-of-sample prediction of deaths across selected states was 3.2% (compared to − 6.7% error for prediction of cases) with a range of values between 0.1 and 15.2% for Missouri and Washington, respectively.

County-level predictions

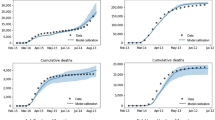

For each of the state-level predictions listed in Tables 4 and 5, our model generated results for each individual county within a state, allowing for analysis of the heterogenous disease growth patterns across localities. Although each county used an independent predicted timeseries for REt based on the county-specific observed cases of illness, a simplifying assumption was made that calibrated disease parameters (listed in Table 1) were homogenous across all counties in a particular state when model was trained to replicate the state-level observed cumulative number of cases and deaths between March 15, 2020–October 31, 2020. We further investigated the impact of this assumption on the model prediction accuracy by conducting a county-level calibration experiment across three localities in Virginia, including Richmond City, Montgomery County, and Norfolk City. The experiment included two scenarios to evaluate the out-of-sample model prediction accuracy between November 1 and 28 based on: (1) calibrated model parameters using state-level observed data (223,568 and 3973 for observed cumulative cases of illness and deaths in Virginia, respectively); and (2) county-level calibrated model parameters based on the county-specific observed data (6606, 3884, and 6423 for observed cases and 82, 15, and 89 for observed deaths in Richmond City, Montgomery County, and Norfolk City, respectively).

Figure 3 shows the resulting timeseries for the out-of-sample model predictions between November 1 and 28, 2020 for selected localities in Virginia including cumulative number of observed COVID-19 cases during the same time period. Each predicted timeseries represents model results for the cumulative COVID-19 cases based on the mean REt value as well as range of cases based on the 95% confidence interval associated with REt (shaded areas). Results indicated that conducting county-level model calibration led to increase in model accuracy. For example, % errors for four-week out-of-sample predictions were − 11.3%, − 15.4%, and − 8.4% for Richmond City, Montgomery County, and Norfolk City, respectively, when model parameters were calibrated using state-level cumulative number of observed cases. When model parameters were calibrated for each individual county, % errors reduced to − 6.9%, − 7.8%, and − 4.0% for the selected counties.

State-level risk maps using MCDA

In addition to out-of-sample case and death predictions across different localities in individual states, we utilized various county-level model outputs, including three-day rolling average of new daily cases per 100,000 residents, three-day rolling average of daily new hospital deaths, three-day rolling average of new hospitalizations per 100,000 residents, and ICU bed utilization percentages, and time to meet their cut-off values (listed in Table 2) to score individual counties with respect to their perceived levels of COVID-19 risks. Examples of model outputs for selected decision criteria are shown in Figs. 4,5,6 and 7 for four localities in Virginia, including Charlottesville City, Hampton City, Portsmouth City, and Spotsylvania County. Model results typically showed substantial variability in number of days required to achieve the scoring requirements for selected decision criteria since the training end date (October 31, 2020). For example, for the counties that have not met the criterion requirement before October 31, number of days to achieve a three-day rolling average of new cases per 100,000 residents of five or less was 59.7 days on average with a minimum value of only two days for Norton City while 85 out of 133 counties (64%) did not satisfy this requirement by the end of the model simulation time of December 31, 2020 (data not shown here).

We also calculated the aggregated risk scores across selected decision criteria for all counties in Virginia. The risk map based on the aggregated scores is shown in Fig. 8. Aggregated risk scores showed spatial variability with an average value of 14.3 across all counties and minimum and maximum values of 4 and 30, respectively. The model typically predicted higher aggregated risk scores (15 or higher) in the southwestern localities while lower scores (15 or lower) in the northern and eastern localities of the state, primarily due to additional hospital resources (e.g., number of general and ICU beds) in those counties.

Discussion

The COVID-19 pandemic has resulted in a global health crisis with unprecedented growing economic, social, and health impacts not seen since the 1918 Spanish flu pandemic. Computational models have played an important role in the ongoing crisis by providing insights regarding the disease spread dynamics as well as the potential impacts of public policies at the local, national, and global levels. Different models with a wide range of underlying methodologies have been used by policy makers and public health officials to assess the evolution of the COVID-19 pandemic, design and analyze control measures, and study various what-if scenarios. For example, the Centers for Disease Control and Prevention (CDC) has been working with different partners to bring together weekly COVID-19 forecasts based on statistical and mathematical models aiming to predict national and state numbers of new and total COVID-19 deaths as well as cases of infection and hospitalization34. Table 6 provides a summary of selected COVID-19 computational models available from the CDC website including their key features, geographic scope, methodology, frequency of updates, and ability to conduct what-if scenario analysis. The majority of these models have adapted different forms of the SD-based models (e.g., SEIR) with geographical scopes typically limited to the national or state level predictions. All models faced challenges due to availability of data, rapidly evolving pandemic and unprecedented control measures put in place. Despite these challenges, we believe that mathematical models can provide useful and timely information to the policy makers.

Like other computational modeling methods, commonly used SD-based models can be especially useful when invoked for the right task, however they are not appropriate for all forecasting, prediction, and scenario simulations. These models operate at an elevated level of abstraction, assume population homogeneity, and typically lack the ability to update underlying model parameters once new, real-time data become available. In this study, we developed a multi-method modeling approach by using an ABM framework to combine thousands of age-stratified and location-specific SEIR models that could potentially capture essential virus transmission dynamics for the purpose of modeling COVID-19 spread over time and in different localities with increased model fidelity. The proposed simulation model showed potential for use by decision makers as an effective virtual laboratory in performing what-if analysis and quantifying perceived levels of health risks by combining forecasted outcomes with user-defined health metrics in a multi-criteria decision framework. While the current case study is focused on COVID-19, the modular framework of our solution easily allows future adaptation to any high-consequence public health threats.

We have also addressed some of the key limitations of SD-based epidemiological models. First, current SD-based epidemiological models typically approximate the spread of COVID-19 at the state and national level. These models do not account for the effect of mitigation policies, population demographics, or cohort behaviors on disease spread dynamics at local levels. Our multi-method approach provided enhanced precision and fidelity at the local level. Second, existing SD-based models typically focus on the constant value of the basic reproduction number (R0) as a measure of disease transmissibility. We used potential changes in R0 over time, represented by RE, which reflected how the disease transmission within the population changed over time. We used this dynamic adjustment to assess how changes in mitigation policies, population immunity, and population behaviors, among other factors, could potentially affect COVID-19 transmission at specific time and location points. Lastly, most SD-based models fail to account for the effect of population demographics (e.g., age), particularly at the county and local levels. We believe that characterizing model parameters such as disease transmission, hospitalization, critical infection, and fatality rates based on the population demographics potentially mitigates the bias for under-represented segments of the population.

We are also aware that computational models are approximations of the real-life scenarios. There are currently no predictive models that generate a highly accurate picture of the COVID-19 disease spread or its clinical impacts, including ours, as too many factors can potentially affect the spread of the disease. For example, our model showed to underestimate cases and overestimate deaths. Modelling exercises tend to carry forward certain distortions that are inherent to the complex and dynamic characteristics of real-world reporting systems when considering rapidly evolving epidemiological scenarios. In the case of COVID-19, factors such as a sub-optimal standardization in the coding and reporting of potential, suspected, and confirmed cases may have introduced information biases in reality that generate mismatches with the model outcomes. A similar phenomenon could have taken place in terms of inaccuracies regarding causes of deaths and the role of COVID-19 in death certificates.

We also acknowledge that there were multiple sources of uncertainty in our model resulting in prediction inaccuracies and errors as reported in Tables 4 and 5. Key sources of uncertainty in our model potentially included model structure (e.g., set of differential equations identified for disease dynamics), model detail (e.g., simplifying assumptions related to reinfection as well as between-county population movements), model calibrations (e.g., state versus county-level parameter calibration), and scenario reasonableness (e.g., assumption of homogenous age-stratified reproduction numbers.

There are areas for improvement in our modeling approach that can potentially reduce the above uncertainties and enhance the prediction accuracies. For example, alternative sets of scientific or technical assumptions might be available for developing the complex dynamics of COVID-19 disease spread. The implications of these alternative foundations may be evaluated by constructing alternative models and comparing results across different solutions. It may be possible to potentially parameterize alternative model structures into a higher order model, and to evaluate the impact of modeling assumptions using sensitivity analysis. Also, while we used the observed daily cases of COVID-19 to characterize location-specific timeseries for RE, future values were approximated using exponential regression models fitted to the latest two weeks of data. This approximation may potentially pose bias and limitations in forecasting the disease dynamics in populous areas where changes in behaviors (e.g., lack of social distancing, limited stay-at-home restrictions) can significantly impact the disease spread trajectory. We understand that recent studies have demonstrated promising use of novel forecasting methodologies to characterize relationships between human micro-level activities and movements based on telemetry data and micro-level RE values35,36,37. Such methodologies can be potentially coupled with our modeling approach. Furthermore, our model relies on the current body of evidence with regards to the chances of reinfection. In this sense, recovered patients are considered to be immune to future COVID-19 infections. These assumptions are being revised as new viral variants are identified, which might imply the need to redefine the basic assumptions of the model. Also, the current approach for calibrating the model parameters is largely an ad-hoc simulation-based procedure based on the state-level observed cases of infection as well as death. Although computationally intensive, we demonstrated that the model accuracy could be substantially improved when calibrations were conducted at the local levels (e.g., individual counties). Finally, we did not estimate age-stratified timeseries for RE because reported daily cases of COVID-19 currently do not contain demographic data including age. Accounting for heterogeneity in transmission due to demographic factors and also estimating age-stratified reproduction numbers could provide insight into differences in transmission potential by age and other factors. In addition, although the use of age serves as proxy of several risk factors and health conditions, subsequent improvements of this modeling approach could account for other epidemiological and demographic population characteristics that are highly correlated with COVID-19 transmission and outcomes. This is the case for co-morbidities, mobility patterns, population density, and climate, among others.

References

Lang, T. Plug COVID-19 research gaps in detection, prevention and care. Nature 583, 333 (2020).

Bhatia, R., Sledge, I. & Baral, S. The missing science: Epidemiological data gaps for COVID-19 policy in the United States. medRxiv. https://doi.org/10.1101/2021.02.11.21251602 (2021).

Jenner, A. L., Aogo, R. A., Davis, C. L., Smith, A. M. & Craig, M. Leveraging computational modeling to understand infectious diseases. Curr. Pathobiol. Rep. 8, 149–161 (2020).

Kok, S. et al. Optimizing an HIV testing program using a system dynamics model of the continuum of care. Health Care Manag. Sci. 18(3), 334–362 (2015).

Thompson, K. M., Duintjer Tebbens, R. J., Pallansch, M. A., Wassilak, S. G. & Cochi, S. L. Polio eradicators use integrated analytical models to make better decisions. Interfaces 45(1), 5–25 (2015).

Sharareh, N., Sabounchi, N.S., Sayama, H. & MacDonald, R. The Ebola crisis and the corresponding public behavior: a system dynamics approach. PLoS Curr. 8 (2016).

van Ackere, A. & Schulz, P. J. Explaining vaccination decisions: a system dynamics model of the interaction between epidemiological and behavioral factors. Socio-Econ. Plan. Sci. 71, 100750 (2020).

Anderson, R. M. & May, R. M. Infectious Diseases of Humans: Dynamics and Control (Oxford University Press, 1991).

Keeling, M. J. & Rohani, P. Modeling Infectious Diseases in Humans and Animals (Princeton University Press, 2011).

Roberts, M., Andreasen, V., Lloyd, A. & Pellis, L. Nine challenges for deterministic epidemic models. Epidemics 10, 49–53 (2015).

Merler, S. et al. Spatiotemporal spread of the 2014 outbreak of Ebola virus disease in Liberia and the effectiveness of non-pharmaceutical interventions: a computational modelling analysis. Lancet Infect. Dis. 15(2), 204–211 (2015).

Crooks, A. T. & Hailegiorgis, A. B. An agent-based modeling approach applied to the spread of cholera. Environ. Modell. Softw. 62, 164–177 (2014).

Macal, C. M. & North, M. J. (2005). Tutorial on agent-based modeling and simulation. Proceedings of the Winter Simulation Conference, IEEE. https://doi.org/10.1109/WSC.2005.1574234 (2005).

Crooks, A. T. & Heppenstall, A. J. Introduction to Agent-Based Modeling. in Agent-based models of geographical systems 85–105 (Springer, Netherlands, 2012).

Midgley, D., Marks, R. & Kunchamwar, D. Building and assurance of agent-based models: an example and challenge to the field. J. Bus. Res. 60(8), 884–893 (2007).

Bonabeau, E. Agent-based modeling: methods and techniques for simulating human systems. PNAS 99(3), 7280–7287 (2002).

Frias-Martinez, E., Williamson, G. & Frias-Martinez, V. An agent-based model of epidemic spread using human mobility and social network information. In 2011 IEEE 3rd international conference on privacy, security, risk and trust and 2011 IEEE third international conference on social computing, IEEE. https://doi.org/10.1109/PASSAT/SocialCom.2011.142 (2011).

Li, Y., Zhang, Y. & Cao, L. Evaluation and selection of hospital layout based on an integrated simulation method. WSC, 2560–2568 (2020).

Brailsford, S.C. Hybrid simulation in healthcare: new concepts and new tools. WSC, 1645–1653 (2015).

Viana, J. Reflections on two approaches to hybrid simulation in healthcare. WSC, 1585–1596 (2014).

Vaughan, L. et al. Relationship of socio-demographics, comorbidities, symptoms and healthcare access with early COVID-19 presentation and disease severity. BMC Infect. Dis. 21(1), 1–10 (2021).

Davies, N. G. et al. Age-dependent effects in the transmission and control of COVID-19 epidemics. Nat. Med. 26, 1205–1211 (2020).

Lau, M. S. et al. Characterizing superspreading events and age-specific infectiousness of SARS-CoV-2 transmission in Georgia, USA. PNAS 117(36), 22430–22435 (2020).

Wu, S. L. et al. Substantial underestimation of SARS-CoV-2 infection in the United States. Nat. Commun 11, 4507 (2020).

Gostic, K. M. et al. Practical considerations for measuring the effective reproductive number, Rt. medRxiv https://doi.org/10.1101/2020.06.18.20134858 (2020).

Pan, A. et al. Association of public health interventions with the epidemiology of the COVID-19 outbreak in Wuhan, China. JAMA 323(19), 1915–1923 (2020).

Kucharski, A. J. et al. Early dynamics of transmission and control of COVID-19: a mathematical modeling study. Infect. Dis. 20(5), 553–558 (2020).

Cauchemez, S., Kiem, C. T., Paireau, J., Rolland, P. & Fontanet, A. Lockdown impact on COVID-19 epidemics in regions across metropolitan France. Lancet 396(10257), 1068–1069 (2020).

Flaxman, S. et al. Estimating the effects of non-pharmaceutical interventions on COVID-19 in Europe. Nature 584, 257–261 (2020).

Cori, A., Ferguson, N. M., Fraser, C. & Cauchemez, S. A new framework and software to estimate time- varying reproduction numbers during epidemics. Am. J. Epidemiol. 178(9), 1505–1512 (2013).

Nishiura, H. Correcting the actual reproduction number: a simple method of estimating R0 from early epidemic growth data. Int. J. Environ. Res. Public Health 7, 291–302 (2020).

Laguna, M. Optimization of Complex Systems with OptQuest (University of Colorado, 1997).

Venkatramanan, S. et al. Using data-driven agent-based models for forecasting emerging infectious diseases. Epidemics 22, 43–49 (2018).

Center for Disease Control and Prevention. Interpretation of forecasts of new and total deaths. CDC https://www.cdc.gov/coronavirus/2019-ncov/covid-data/forecasting-us.html (2021).

Rudiger, S. et al. Forecasting the SARS-CoV-2 effective reproduction number using bulk contact data from mobile phones. medRxiv. https://doi.org/10.1101/2020.10.02.20188136 (2020).

Linka, K., Goriely, A. & Kuhl, E. Global and local mobility as a barometer for COVID-19 dynamics. medRxiv. https://doi.org/10.1101/2020.06.13.20130658 (2020).

Leung, K., Wu, J.T. & Leung G.M. Real-time tracking and prediction of COVID-19 infection using digital proxies of population mobility and mixing. medRxiv. https://doi.org/10.1101/2020.10.17.20214155 (2020).

Author information

Authors and Affiliations

Contributions

A.M. and C.M. contributed equally to this work. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mokhtari, A., Mineo, C., Kriseman, J. et al. A multi-method approach to modeling COVID-19 disease dynamics in the United States. Sci Rep 11, 12426 (2021). https://doi.org/10.1038/s41598-021-92000-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-92000-w

This article is cited by

-

Wastewater-based epidemiology predicts COVID-19-induced weekly new hospital admissions in over 150 USA counties

Nature Communications (2023)

-

Systematic review of MCDM approach applied to the medical case studies of COVID-19: trends, bibliographic analysis, challenges, motivations, recommendations, and future directions

Complex & Intelligent Systems (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.