Abstract

Aphasia affects at least one third of stroke survivors, and there is increasing awareness that more fundamental deficits in auditory processing might contribute to impaired language performance in such individuals. We performed a comprehensive battery of psychoacoustic tasks assessing the perception of tone pairs and sequences across the domains of pitch, rhythm and timbre in 17 individuals with post-stroke aphasia and 17 controls. At the level of individual differences we demonstrated a correlation between metrical pattern (beat) perception and speech output fluency with strong effect (Spearman’s rho = 0.72). This dissociated from more basic auditory timing perception, which did not correlate with output fluency. This was also specific in terms of the language and cognitive measures, amongst which phonological, semantic and executive function did not correlate with beat detection. We interpret the data in terms of a requirement for the analysis of the metrical structure of sound to construct fluent output, with both being a function of higher-order “temporal scaffolding”. The beat perception task herein allows measurement of timing analysis without any need to account for motor output deficit, and could be a potential clinical tool to examine this. This work suggests strategies to improve fluency after stroke by training in metrical pattern perception.

Similar content being viewed by others

Introduction

Post-stroke aphasia (PSA) is prevalent1 and debilitating2, and there is increasing awareness that deficits in non-verbal cognitive processing might contribute to language performance in such individuals3,4,5. Indeed, there is evidence from multiple aetiologies including congenital amusia6,7, developmental dyslexia8, specific language impairment9, children who stutter10 and primary progressive aphasia11,12 that language impairment can co-occur with more fundamental deficits in auditory processing, just as auditory processing abilities associate with language performance in healthy children13 and adults14. However, there has been no systematic investigation of auditory processing deficits across the range of pitch and melody, rhythm and metre, and timbre in post-stroke aphasia and whether these are associated with participants’ language impairments. In particular, it is unknown whether ‘output’ speech production deficits are associated with ‘input’ auditory processing deficits post stroke, as has been found in primary progressive aphasia11,12. We have therefore assessed central auditory processing of pitch, rhythm and timbre in a cohort of individuals with chronic PSA.

There are several specific questions we sought to address.

The small number of previous studies assessing central auditory processing in PSA have failed to assess the full range of auditory processing domains pertinent to language, including pitch and melody, rhythm and metre, and timbre15,16. For instance, both Fink et al. and Von Steinbüchel et al. found impaired temporal order judgement in PSA, but did not assess melody, metre or timbre processing17,18. Similarly, Robson et al. assessed pure tone frequency discrimination and timbre but not melody, rhythm or metre in a selective PSA group of Wernicke’s aphasia5,19, while Robin et al. assessed pitch and rhythm but not timbre processing20. Sihvonen et al. assessed pitch and rhythm but not metre or timbre21. Furthermore, most previous studies have tested the auditory processing of tone pairs or short tone sequences rather than longer and more abstract tone sequences, which may overlook processes used during the comprehension and production of more complex, naturalistic auditory stimuli such as language. We therefore sought to measure auditory processing of tone pairs and sequences across the range of pitch and melody, rhythm and metre, and timbre domains in individuals with PSA.

Individuals with primary progressive aphasia have deficits in non-verbal auditory processing12. Intriguingly, recent work found that the non-fluent variant is particularly associated with auditory processing deficits for sequences of tones, suggesting the existence of a common neural substrate for processing auditory rhythmic structure in both auditory input and speech output11. Such a relationship has never been investigated in PSA; the aforementioned studies have only examined the relationship between auditory processing and language tests assessing comprehension but not speech production. Furthermore, previous work identified differences in auditory sequence processing at the group level between non-fluent and fluent variants of primary progressive aphasia11, but was not able to assess whether auditory sequence processing correlated with behavioural measures of speech fluency across individuals. If present, a relationship between auditory processing of tone sequences and speech output fluency would have significant implications for our understanding of fluent speech production, and for rehabilitation strategies such as melodic intonation therapy that might target such underlying auditory processing deficits in PSA22. We therefore had the a priori hypothesis that speech output fluency would correlate with the auditory processing of sequences of tones, but not with the processing of simpler tone pairs, in PSA.

We performed a comprehensive battery of psychoacoustic tasks assessing pitch, rhythm and timbre in a cohort of individuals with chronic PSA for whom a large range of detailed language assessments encompassing phonology, semantics, fluency and executive function were available. Our aims were: (a) to characterise the profile of auditory processing deficits in chronic PSA; and (b) to test the hypothesis that speech output fluency would correlate with the ability to process sequences of tones, but not with the ability to process simpler tone pairs.

Materials and methods

Participants

17 individuals with chronic PSA (the ‘PSA subgroup’) and 17 healthy controls participated in the psychoacoustic testing. Individuals with PSA were at least 1 year post left hemispheric stroke (either ischaemic or haemorrhagic), pre-morbidly right-handed and part of a larger cohort of 76 stroke survivors recruited from community groups throughout the North West of England for whom extensive neuropsychological and imaging data was available (Supplementary Methods). A number of the participants with PSA have been included in previous publications23,24. Aphasia was diagnosed and classified using the Boston Diagnostic Aphasia Examination (BDAE)25 and individuals with severe motor-speech disorders were excluded after being screened by a qualified speech-language pathologist. Controls were recruited from the volunteer panel of the MRC Cognition and Brain Sciences Unit, were right handed and had no history of neurological injury. All participants were native English speakers. Informed consent was obtained from all participants according to the Declaration of Helsinki under approval from the ‘North West—Haydock’ NHS research ethics committee (reference 01/8/094).

Demographic and clinical data for the PSA subgroup and controls are shown in the Supplementary Information. The PSA subgroup and controls were not statistically significantly different at the group level for age (mean 60.9 [SD 9.5] years in stroke survivors vs 62.5 (SD 4.3) in controls; t-test, t22 = − 0.60, two-sided p = 0.55) and sex (8 females in stroke survivors vs 9 females in controls; Chi-Square test, χ21 = 0.12, two-sided p = 0.73) but the PSA subgroup had significantly fewer years of education than controls (median 11.0 [IQR 6.0] years in stroke survivors vs 16.0 [IQR 4.0] in controls; Mann–Whitney U-test, U = 216.5, two-sided p = 0.01) (Supplementary Table S1). Over half (52.9%) of the PSA subgroup had a classification of ‘anomia’; the remainder were classified as Broca’s aphasia (17.6%), conduction aphasia (17.6%), mixed nonfluent aphasia (5.9%) and transcortical motor aphasia (5.9%) (Supplementary Table S2). PSA participants were in the chronic phase post-stroke (median 38 [IQR 42] months from stroke to neuropsychological testing) (Supplementary Table S2).

Pure-tone audiograms were recorded in all participants using a Guymark Maico MA41 audiometer with Sennheiser HDA300 headphones to assess for evidence of peripheral hearing loss that might affect performance on the psychoacoustic tests. All participants had mean hearing levels < 20 dB HL between 0.25–1 kHz at octave intervals in at least one ear (Supplementary Table S3). There were no significant differences in pure tone audiometry thresholds between the PSA subgroup and controls (Supplementary Table S4). Mean pure tone detection thresholds between 0.25 and 1 kHz were not significantly correlated with any of the psychoacoustic measures in the PSA subgroup (Supplementary Table S5).

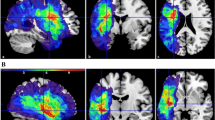

Lesion overlap map

Lesions were segmented from structural T1-weighted MRI images and normalised to MNI space using LINDA v0.5.0 (http://www.github.com/dorianps/LINDA)26,27. The lesion overlap map of the PSA subgroup is shown in Fig. 1; it encompasses most of the left hemisphere including subcortical white matter.

Neuropsychological tests

The entire cohort of 76 stroke survivors had previously been administered an extensive battery of neuropsychological tests and principal component analysis (PCA) results from earlier collection rounds of this database have been published28,29,30. Controls performed the ‘Cookie theft’ description (BDAE25) and the Raven’s Coloured Progressive Matrices31. Neuropsychological test scores for the entire cohort of 76 individuals with PSA are shown in Supplementary Table S6.

Psychoacoustic tests of pitch, rhythm and timbre

The psychoacoustic tests used in this study had been developed and published previously11 and were administered using Matlab R2018a between February 2018 and July 2019. Controls performed the ‘Cookie theft’ description25 and the Raven’s31 on the same day as psychoacoustic testing, while PSA participants had a median time interval of 36 (IQR 21) months between neuropsychological and psychoacoustic testing (Supplementary Table S2). Participants with PSA were stable in their cognitive performance over time (see Supplementary Table S7). In both the PSA subgroup and controls we measured: pitch basic change detection (P1); pitch detection of local change (P2); pitch detection of global change (P3); single time interval discrimination (R1); isochrony deviation detection (R2); metrical pattern discrimination (R3); and Dynamic Modulation (DM) detection. P2 and P3 required a same-different choice at a fixed difficulty level, whereas the other tests (P1, R1, R2, R3, DM) used a two-alternative forced-choice adaptive paradigm with a two-down, one-up algorithm that estimated the 70.9% correct point of the psychometric function32. Of note, single time interval discrimination is taken to be a measure of rhythm processing in this study.

At the start of each test, instructions were explained in a way that was understandable for the participant, and practice trials with feedback were performed to ensure that the participant understood the task and could perform reliably at the easiest difficulty level. Certain tasks using the adaptive difficulty paradigm were frequently too difficult for PSA participants to perform the default starting difficulty used in controls; PSA participants were therefore started at an easier level on these tasks. Due to the large number of trials performed (> 50), all participants reached a stable performance plateau by the end of each test. A basic description of the psychoacoustic tasks are provided below, but a more detailed description was published previously11.

All three pitch tasks (P1–P3) used pure tones of 250 ms duration and a 2000 ms interval between stimuli on each trial. In P1, two pairs of pure tones were presented on each trial. The participant had to indicate whether the first or the second pair had a change in frequency (either up or down). The size of the change in frequency was adaptively reduced and the threshold, in semitones, was used as the outcome measure. The task comprised of 50 trials.

In P2 and P3, two sequences of four tones were played on each trial. Tones within each sequence had varying pitch; participants had to indicate whether the first and second sequences were the same or different to each other. In P2, on the ‘different’ trials, there was one change in frequency in the third or fourth tone that produced a ‘local’ change in pitch without a change in the ‘global’ pattern of ‘ups and downs’. In P3, on the ‘different’ trials, the change in frequency of the third or fourth tone produced a change in the global pitch ‘contour’ of ‘ups and downs’. P2 and P3 each consisted of 40 trials (20 ‘same’ and 20 ‘different). The percentage (score) correct on each task was the outcome measure.

All three rhythm tasks (R1-R3) used 500 Hz, 100 ms pure tones. In R1, two tone pairs were played on each trial. Each pair of tones had a slightly different inter-onset interval; the range of inter-onset intervals was 300–600 ms. Participants had to indicate whether the first or the second tone pair had the longer interval. R1 comprised of 50 trials; the difference between the interval of the first and the second pair was adjusted adaptively. The threshold, expressed as the percentage difference between the interval of the shorter and longer tone pair relative to the inter-onset-interval of the shorter pair, was used as the outcome measure.

In R2, each trial consisted of two five-tone sequences. One sequence was perfectly isochronous (i.e. had a constant inter-onset interval, with a value between 300 and 600 ms that was varied between trials); the other sequence was not isochronous but had one lengthened inter-onset interval (between the third and fourth tone). Participants had to indicate whether the first or the second sequence contained an ‘extra gap’. R2 comprised of 50 trials; the difference between the lengthened inter-onset interval and the isochronous inter-onset interval was adaptively adjusted. The threshold, expressed as the percentage difference (relative to the otherwise isochronous inter-onset-interval), was used as the outcome measure.

In R3, each trial consisted of three seven-tone rhythmic sequences. Each sequence contained seven tones distributed over 16 time units of 180 to 220 ms each, with the unit duration varied between sequences (i.e. within trials). In its correct version the pattern of the sequences featured a strongly metrical beat induced by accented tones occurring every fourth time unit33. The first sequence on each trial always had the correct pattern, one of the second or the third sequence on each trial was the same as the first, but the remaining sequence (i.e. the third or the second) had a perturbation in the rhythm that affected the entire pattern and distorted its metricality (for details see33). The participant had to indicate whether the second or the third sequence sounded ‘different’ or ‘wrong’. R3 contained 50 trials; the percentage difference in relative interval timing was adaptively adjusted and the threshold, expressed as the percentage of perturbation relative to the correct pattern, used as the outcome measure as described in33.

In the DM detection task, two 1000 ms sounds were played on each trial. One sound was unmodulated, the other sound was spectro-temporally modulated13,15. Participants had to indicate whether the first or the second sound was modulated. The degree of modulation was adaptively adjusted over 50 trials in the adaptive paradigm; the threshold was used as the outcome measure.

Sample audio files of example trials for each of the psychoacoustic tests are available as Supplementary Material.

Statistical analysis

All variables were assessed for normality using the Kolmogorov–Smirnov test. As the PSA subgroup had significantly fewer years of education than controls (see earlier), group level comparisons between PSA and control participants were performed with years of education as a covariate of no interest. As several psychoacoustic, neuropsychological and pure tone audiometry variables were not normally distributed, non-parametric tests (Mann–Whitney U tests, one-way rank analysis of covariance (ANCOVA)34, Spearman correlations) were used.

Given the large number of neuropsychological measures available, we used a varimax-rotated PCA to reduce these scores to a smaller number of dimensions, as has been done previously23,28. As PCA is more stable with larger samples35, we performed the PCA on the correlation matrix of neuropsychological scores of the entire cohort of PSA participants (n = 76), which has been shown formally to be highly reliable and stable29. Scores from Principal Components (PCs) with an eigenvalue greater than 1 were taken to be estimates of underlying cognitive components in our 17 PSA participants and were used in correlation analyses with the psychoacoustic measures. We confirmed that PC scores remained representative of underlying cognitive components in the 17 PSA subgroup through correlation analyses with neuropsychological scores.

We defined statistical significance as p < 0.05 with Bonferroni correction (by the number of tests) applied to the significance thresholds (i.e. reported p-values are uncorrected). Reported p-values for correlations between neuropsychological and/or psychoacoustic scores are one-tailed due to a priori hypotheses as to the direction of the associations, i.e. that better performance on auditory tasks would be associated with higher language scores. See the Supplementary Material for additional methodological details.

Results

Speech output fluency and executive function

At the group level, the PSA subgroup was not significantly worse than controls on the Raven’s Progressive Coloured Matrices (one-way rank ANCOVA with years of education as covariate, F(1,32) = 0.41, p = 0.53). The PSA subgroup produced significantly fewer words per minute (one-way rank ANCOVA with years of education as covariate, F(1,32) = 23.35, p = 0.00003), mean length of utterances (one-way rank ANCOVA with years of education as covariate, F(1,32) = 24.75, p = 0.00002) and speech tokens (one-way rank ANCOVA with years of education as covariate, F(1,32) = 15.72, p = 0.0004) on the ‘Cookie theft’ description task than controls (Supplementary Table S8). This confirms that the PSA subgroup had significantly impaired fluency of connected speech, but relatively preserved non-linguistic cognitive function, compared to controls. Speech fluency and cognitive function test scores for the PSA subgroup and controls are shown in Supplementary Table S1.

Auditory processing

Figure 2 shows the time course of the participants’ performances on the seven psychoacoustic tests. P2 and P3 required a same-different choice at a fixed difficulty level and their cumulative scores correct graphs demonstrate a progressive increase of the cumulative correct score throughout both tests. The other psychoacoustic tests (P1, R1-R3, DM) used an adaptive difficulty paradigm and their “staircase plots” demonstrate a consistent decrease in the detection or discrimination threshold until a stable plateau was reached before the end of each test. Figure 2 suggests that participants in the PSA subgroup were able to perform the psychoacoustic tests consistently, without evidence of fatigue or losing track of the task. Psychoacoustic scores for the PSA subgroup and controls are shown in Supplementary Table S1.

Timecourses for the seven psychoacoustic tests. Each graph shows the timecourse of all participants’ performances throughout one of the seven psychoacoustic tests. X-axes represent trial number. Grey shaded areas represent ± the mean absolute difference from the median performance for all PSA participants (dark grey) and all control participants (light grey); overlap between PSA participants and controls is indicated by crossed-hatch. ‘P1’ = pitch basic change detection; ‘P2’ = pitch detection of local change; ‘P3’ = pitch detection of global change; ‘R1’ = rhythm single time interval discrimination; ‘R2’ = rhythm isochrony deviation detection; ‘R3’ = rhythm metrical pattern discrimination for a strongly metrical sequence; ‘DM’ = Dynamic Modulation detection.

The PSA group were only statistically significantly impaired in comparison to the controls on one of the seven psychoacoustic tests performed; this was Dynamic Modulation detection (one-way rank ANCOVA with years of education as covariate, F(1,32) = 12.58, p = 0.001; threshold higher in participants with PSA than controls) (Table 1). For the other six psychoacoustic tests performed there was no significant impairment at the group level (Table 1).

It was possible that PSA participants might have been significantly impaired on psychoacoustic tasks at the individual level, even if there was no group difference compared to controls. We therefore compared, individually, each PSA participant’s psychoacoustic scores to the control group data using the Bayesian Test for a Deficit controlling for years of education as a covariate36. This conservative analysis identified a number of impairments at the individual level. In addition, several participants with PSA were unable to perform one or more of the rhythm processing tasks at the easiest difficulty level; we were not able to include these participants in group difference or correlation analyses of the corresponding psychoacoustic test as a reliable threshold was not able to be obtained, but these participants clearly had impaired auditory processing as well. Overall, five participants with PSA were able to perform the task but had significantly impaired performance on DM detection; three participants with PSA were able to perform the task but had significantly impaired performance on basic pitch discrimination (P1); one participant with PSA was able to perform the task but had significantly impaired performance on single time interval discrimination (R1), with an additional participant being unable to perform this task at the easiest difficulty level; two participants with PSA were able to perform the task but had significantly impaired performance on isochrony deviation detection (R2), with an additional three participants being unable to perform this task at the easiest difficulty level; and two participants with PSA were unable to perform metrical pattern discrimination (R3) at the easiest difficulty level (Supplementary Table S9). We did not find evidence that any of the participants with PSA were significantly impaired at the individual level on either of the psychoacoustic tests requiring processing of pitch in short tone sequences (P2, P3) (Supplementary Table S9).

Principal component analysis of neuropsychological scores

We performed varimax-rotated PCA on the correlation matrix of neuropsychological test scores of the entire cohort of stroke survivors (n = 76), including those who underwent psychoacoustic testing (n = 17) and those who did not (n = 59). The Kaiser–Meyer–Olkin value was 0.82, indicating adequate sampling37. Four rotated PCs with eigenvalues greater than 1 explaining 27.73% (PC1), 17.71% (PC2), 17.63% (PC3) and 15.22% (PC4) of the variance were obtained, consistent with previous publications using data from this cohort of stroke survivors28,29,30 (Table 2). PC1 was loaded onto primarily by immediate non-word and word repetition (subtests 8 and 9 from the Psycholinguistic Assessment of Language Processing in Aphasia battery38), Boston Naming Test39, Forward Digit Span40, and Cambridge Semantic Battery picture naming41; it was interpreted as representing phonological ability. PC2 was loaded onto primarily by the Cambridge Semantic Battery spoken word-to-picture matching41, spoken sentence comprehension from the Comprehensive Aphasia Test42, 96-trial synonym judgement test43 and Type-Token Ratio from the ‘Cookie theft’ picture description task; PC2 was interpreted as representing semantic processing. PC3 was loaded onto mainly by the number of speech tokens, Mean Length of Utterance and Words Per Minute from the ‘Cookie theft’ picture description task; this component was interpreted as representing fluency of connected speech. PC4 was loaded onto primarily by the Raven’s Coloured Progressive Matrices and the Brixton Spatial Anticipation Test44; this was interpreting as representing executive function.

Principal Component 3 as a measure of speech output fluency

In the PCA performed on the neuropsychological scores of the entire cohort of 76 individuals with PSA, PC3 was loaded by measures of connected speech fluency obtained from the ‘Cookie theft’ description task (all loadings ≥ 0.79); PC3 was not loaded by any of the other neuropsychological scores (all other loadings ≤ 0.25) (Table 2). To ensure that PC3 remained associated with speech fluency but not the other neuropsychological scores in the PSA subgroup, we computed Spearman correlations between PC3 score and all 14 neuropsychological test scores in the PSA subgroup (Supplementary Table S10). The three ‘Cookie theft’ fluency scores (Words Per Minute, Mean Length of Utterance and number of speech tokens) correlated positively with PC3 (lowest Spearman rho 0.91), even after Bonferroni correcting for 14 multiple comparisons (Supplementary Table S10). By contrast, all other neuropsychological test scores did not significantly correlate with PC3 (highest Spearman rho 0.34), even before Bonferroni correction (Supplementary Table S10). This confirms that PC3 is a specific measure of speech output fluency in the PSA subgroup who underwent psychoacoustic testing.

In the PCA performed on the neuropsychological scores of the entire cohort of 76 individuals with PSA, PCs were, by definition, orthogonal. In the PSA subgroup, PC3 was neither significantly correlated with PC1 (Spearman’s rho = − 0.25; two-sided p = 0.33) nor with PC4 (Spearman’s rho = 0.08; two-sided p = 0.77) but was significantly negatively correlated with PC2 (Spearman’s rho = − 0.67; two-sided uncorrected p = 0.003; better PC3 fluency associated with worse PC2 semantics). In subsequent analyses assessing associations between auditory processing and PC3 (fluency), we therefore partialled out PC2 scores to ensure that any associations with PC3 could not be explained by confounding with PC2.

Auditory sequence processing and speech output fluency

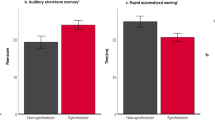

In order to test the hypothesis that the auditory analysis of tone sequences would be associated with speech output fluency in PSA, we computed Spearman correlations between psychoacoustic measures and PC3 (Table 3). The psychoacoustic measures that correlated significantly with PC3 after Bonferroni correction were: P3 (Spearman’s rho = 0.63; uncorrected one-sided p = 0.003; better pitch global change detection associated with better speech fluency); R1 (Spearman’s rho = − 0.64; uncorrected one-sided p = 0.004; better single time interval discrimination associated with better speech fluency); and R3 (Spearman’s rho = − 0.72; uncorrected one-sided p = 0.001; better metrical pattern discrimination associated with better speech fluency) (Fig. 3) (Table 3).

Psychoacoustic measures and speech fluency. Scatter plots showing significant correlations between ‘fluency’ Principal Component 3 scores (y-axis) and P3 (better pitch global change detection associated with better speech fluency), R1 (better single time interval discrimination associated with better speech fluency) and R3 (better metrical pattern discrimination associated with better speech fluency) (on x-axes) in the group of participants with post-stroke aphasia. The straight line represents the least-squares line of best fit. ‘PC’ = Principal Component; ‘P3’ = pitch detection of global change; ‘R1’ = rhythm single time interval discrimination; ‘R3’ = rhythm metrical pattern discrimination for a strongly metrical sequence.

We wanted to identify psychoacoustic measures that were specifically associated with fluency, independent of other components of language. As PC3 scores were significantly negatively correlated with ‘semantic’ PC2 scores in this PSA subgroup, we repeated Spearman correlations between psychoacoustic measures and ‘fluency’ PC3 scores, while partialling out ‘semantic’ PC2 scores (Table 4). After partialling out PC2, only R3 remained significantly correlated with PC3 fluency scores (Spearman’s rho = − 0.66, uncorrected one-sided p = 0.005; more precise metrical rhythmic pattern discrimination associated with higher speech fluency) (Table 4). The psychoacoustic measures from the other tests based on tone sequences (P2, P3 and R2), or the more basic psychoacoustic tests using single sounds or tone pairs (P1, R1 and DM), were not significantly correlated with PC3 scores after partialling out PC2 and Bonferroni correcting for multiple comparisons (n = 7; Table 4).

In order to confirm that rhythm metrical pattern discrimination (R3) was specifically associated with PC3 fluency, and not with other PCs of language, we performed Spearman correlations between R3 and PC1, PC2 and PC4 scores. R3 was not significantly correlated with PC1 (Spearman’s rho = − 0.03; one-sided uncorrected p = 0.45), PC2 (Spearman’s rho = 0.39; one-sided uncorrected p = 0.07) or PC4 (Spearman’s rho = 0.29, one-sided uncorrected p = 0.15). In order to confirm that R3′s association with PC3 was not due to increased executive processing demands during R3 relative to other psychoacoustic tasks, we performed Spearman correlations between R3 and PC3 while partialling out PC4 ‘executive’ scores. R3 remained significantly correlated with PC3 ‘fluency’ scores with no decrease in strength after partialling out PC4 (Spearman’s rho = − 0.75; one-sided uncorrected p = 0.001). Finally, to exclude effects of peripheral hearing, we confirmed that R3 was not significantly correlated with mean pure tone audiometry thresholds between 0.25 and 1 kHz on either side (Supplementary Table S5).

Dynamic modulation detection and language

Since the PSA subgroup performed significantly worse than controls on DM detection (Table 1), we additionally performed Spearman correlations to look for any association between DM detection and the other PCs of language. DM detection was not significantly correlated with PC1 (Spearman’s rho = − 0.03; one-sided uncorrected p = 0.45), PC2 (Spearman’s rho = 0.31; one-sided uncorrected p = 0.11) or PC4 (Spearman’s rho = − 0.14; one-sided uncorrected p = 0.29). As DM detection has previously been associated with spoken comprehension in participants with Wernicke’s aphasia5, we additionally looked for associations between DM detection and individual neuropsychological tests assessing spoken comprehension. However, DM detection was not significantly correlated with Spoken Word-to-Picture Matching (Spearman’s rho = 0.07; one-sided uncorrected p = 0.39) or Spoken Sentence Comprehension (Spearman’s rho = 0.09; one-sided uncorrected p = 0.37).

Summary

Group level comparisons identified significantly impaired fluent speech production and timbre DM processing in the PSA subgroup relative to controls. Individual case-group control comparisons identified further deficits in specific psychoacoustic measures. Correlations demonstrated a strong association between rhythm metrical pattern discrimination and ‘fluency’ Principal Component 3.

Discussion

In order to investigate central auditory processing deficits in PSA, and whether auditory input processing of tone sequences relates to speech output fluency, we have performed an extensive battery of tests assessing the processing of pitch and melody, rhythm and metre, and timbre in individuals with chronic aphasia following left hemisphere stroke and controls. Intriguingly, there was a strong association between participants’ ability to discriminate the metrical pattern of strongly metrical tone sequences, and speech output fluency. This provides novel insights into the nature of fluent speech production in PSA and has conceptually replicated and extended previous work in primary progressive aphasia that suggested a relationship between auditory input processing of tone sequences and fluent speech production11.

A central aim of this work was to characterise central auditory processing deficits in PSA across the domains of pitch and melody (P1–P3), rhythm and metre (R1–R3) and timbre (DM detection). Previous work in PSA has tended to focus on auditory processing of single sounds or tone pairs (rather than sequences) in a limited number of domains, and has broadly found impaired processing of rhythm17,18,20 and timbre5 following left hemisphere stroke despite relative preservation of pitch processing5,20. The present study assessed pitch (P1–P3) and rhythm (R1–R3) of tone pairs and sequences, as well as an aspect of timbre processing (DM detection), in 17 individuals with a variety of aphasia profiles. Despite the PSA group having significantly impaired speech fluency (Supplementary Table S8) and the lesion overlap map encompassing large parts of the left hemisphere (Fig. 1), we found no significant group-level impairment for the rhythm processing tasks performed (Table 1). We did not find evidence that any of the PSA participants were significantly impaired on the psychoacoustic tests processing pitch in tone sequences (P2, P3) (Supplementary Table S9). Three of the PSA participants had significantly impaired detection of basic pitch changes in tone pairs (P1); one participant had impaired discrimination of time intervals in tone pairs (R1) with an additional participant being unable to perform this task at the easiest difficulty level; two participants had impaired isochrony deviation detection in tone sequences (R2) with an additional three being unable to perform this task at the easiest difficult level; and two participants were unable to perform metrical pattern discrimination at the easiest difficult level (Supplementary Table S9). Timbre spectro-temporal modulation was the only psychoacoustic task that was significantly impaired in the PSA subgroup (Table 1), but we found no association between timbre processing ability and any of the PCs of language, despite an association with auditory comprehension having been demonstrated previously in Wernicke’s aphasia5,19. A possible explanation for this relative lack of group-level auditory processing deficits is the heterogeneity of PSA and the possibility that auditory processing deficits differ depending on the lesion location and neuropsychological profile; indeed, our PSA subgroup did not contain anyone with classical Wernicke’s aphasia, as studied by Robson et al.5. We recruited individuals with any aphasia type, but the resultant subgroup consisted mainly of individuals with ‘expressive’ aphasia classifications (Supplementary Table S2). Previous studies associating timbre processing with auditory comprehension did so in a group selected for having Wernicke’s aphasia5,19. It is possible that if our sample had included more individuals with severe comprehension deficits, we would have observed an association between timbre processing and auditory comprehension as well. Furthermore, three participants with PSA in this study were unable to perform one or more of the rhythm processing tasks at the easiest difficulty level (Supplementary Table S9). We were not able to include these participants in the group difference or correlation analyses because we could not quantify a reliable threshold; however, these three individuals with PSA clearly had impaired rhythm processing.

Our second main hypothesis was that there would be an association between the auditory processing of tone sequences and speech output fluency in PSA. This was based on previous research suggesting that individuals with the nonfluent variant of primary progressive aphasia are significantly impaired at auditory sequence processing relative to fluent variants11. The present study looked for associations between four tests of auditory sequence processing, as well as three psychoacoustic tests using tone pairs or sounds, and behavioural measures of speech output fluency. We found a strong association between speech output fluency (PC3) and one of the sequence processing tasks, namely rhythm metrical pattern discrimination (R3) (Table 4). Furthermore, this psychoacoustic measure was not correlated with the other PCs of language (PC1, PC2 or PC4) and thus was not a generic marker of aphasia severity. Neither the other sequence processing tasks (P2, P3, R2) nor the tasks involving processing of pairs of tones (P1, R1) or sounds (DM) were significantly associated with speech output fluency in this sample (Table 4). The present study therefore demonstrates that there is an association between ‘input’ auditory processing and ‘output’ speech fluency in PSA, in particular between the discrimination of metrical pattern in tone sequences and fluent speech production. A novel implication of this study is therefore that the ability to detect metrical pattern in the incoming auditory stream might be a sensitive measure of an ability that is critical for the fluent production of connected speech.

The metricality of a tone sequence, as used in R3, is the higher-order temporal structure determined by the grouping of salvos of notes that induce the sense of a regularly occurring metrical ‘beat’ or ‘downbeat’, even when all notes have the same intensity, duration and pitch33,45,46. A high degree of metrical structure enables us to anticipate and predict the higher-order temporal structure of upcoming sound, akin to a ‘temporal scaffolding’ based on the metrical beat33. The association between metricality discrimination and fluency observed in this study suggests that the cognitive process of predicting the higher-order temporal structure of future sound might be important for both the discrimination of metricality in incoming auditory sound, and the production of metrical sound in fluent connected speech. Critically, isochrony deviation detection (R2) was not associated with speech fluency in this study. The difference between the two tasks is two-fold. Isochrony deviation detection (R2) tests lower-order differences in timing between consecutive tones in a simple isochronous sequence and uses a local deviation. By contrast, the deviation in the metrical task (R3) is distributed across the entire pattern, and the pattern is more abstract with a hierarchically organised beat structure. Previous lesion work47,48,49 has suggested that higher-order metrical processing can be doubly-dissociated from the processing of lower-order differences in timing between consecutive tones in a sequence. The lack of an observed association between isochrony deviation detection and fluency therefore raises the possibility that the association between fluency and metrical pattern discrimination might not be due to rhythm processing in general. Rather, there might be a specific association between fluency and the ability to process the higher-order regularity of accented tones within a sequence as embodied by metrical patterns. This is in keeping with previous work showing an association between rapid automatised naming in healthy adults and their ability to detect a roughly regular beat in an otherwise irregular sequence, but not their ability to detect isochrony deviation50.

It might be argued that the metrical pattern discrimination task (R3) is harder than the other three sequence processing tasks (P2, P3, and R2), because R3 requires comparisons between three sequences of tones (and the other three tasks require comparisons between two sequences of tones). However, we think this is unlikely to be the reason for the observed association between metrical pattern discrimination and speech fluency. Firstly, R3 requires the detection of a perturbation that is detectable within the sequence, unlike P2 or P3. Although R2 also requires the detection of a perturbation within the sequence, in R3 the deviation is distributed across the entire sequence with a number of different interval ratios, whereas R2 requires the analysis of one deviating interval from an otherwise isochronous beat. Secondly, the same sequence is used as the reference on all 50 trials in R3; a different metrical pattern does not have to be remembered on each trial. This is similar to R2 (which uses the same sequence at different tempi) but is in stark contrast to the two pitch sequence tasks (P2 and P3), which use different reference sequences on each trial that have to be remembered and compared to the target sequence. Thirdly, unlike P2 and P3, R3 used a two-alternative forced-choice adaptive difficulty paradigm with a two-down, one-up algorithm that is designed to reduce working memory load32. Fourthly, R3 was not significantly correlated with PC4 ‘executive’ scores, and its association with PC3 ‘fluency’ scores remained after partialling out PC4 ‘executive’ scores.

The strong and extremely robust correlation between rhythm metrical pattern discrimination and speech output fluency might seem surprising given the absence of impairments at the group and individual-level for this psychoacoustic task in the PSA subgroup relative to controls. Assuming that the impaired speech fluency observed in the PSA subgroup was a consequence of stroke, this suggests that some of the observed correlation between fluency and R3 might not be a consequence of stroke damaging a single neural substrate that is responsible for both fluency and R3. One possible explanation is that premorbid inter-individual differences in the ability to discriminate metrical pattern mitigates the effect of stroke on speech output fluency. Alternatively, recovery mechanisms post-stroke might have involved improvements in metricality discrimination which in turn helped fluency to recover. Similar possibilities were recently proposed when structural changes outside the lesion mask (and thus not directly caused by stroke) were associated with reading recovery in post-stroke central alexia51. It would be of great interest if rehabilitation strategies that use singing to aid recovery of propositional speech, such as melodic intonation therapy22, were found to be mediated by improved metricality discrimination. Existing techniques that augment metricality using rhythmic auditory stimuli improve speech output post stroke52,53. These findings go further by suggesting that a potential avenue for future therapies might be to target auditory metricality discrimination to aid fluency in PSA.

A limitation of the current work is that we were not able to elucidate the neural structures associated with central auditory processing, and particularly metricality discrimination, in PSA. Previous work using the Montreal Battery of Evaluation of Amusia54 in individuals with focal cortical damage following tumour or epilepsy surgery has suggested that the anterior superior temporal gyrus (STG) is critical for melody and metre processing48,49. Parkinson’s disease, in which basal ganglia degeneration occurs, is associated with impaired metricality-based rhythm discrimination55. Metrical rhythms elicit greater activation in the basal ganglia and supplementary motor area56, and striatal activation is thought to represent metricality prediction (rather than detection)57. Future work should elucidate the neural substrates of auditory processing post-stroke, and particularly whether the anterior STG, striatum or supplementary motor area contribute to speech fluency through metricality discrimination in PSA.

Conclusion

Our findings demonstrate that there is a strong association between the ability to analyse metrical pattern structure in the incoming auditory stream and fluent production of connected speech. This has significant implications for our understanding of fluent speech production, and for rehabilitation strategies that might use rhythm processing to aid recovery of fluency post stroke.

References

Engelter, S. T. et al. Epidemiology of aphasia attributable to first ischemic stroke: Incidence, severity, fluency, etiology, and thrombolysis. Stroke 37, 1379–1384 (2006).

Tsouli, S., Kyritsis, A. P., Tsagalis, G., Virvidaki, E. & Vemmos, K. N. Significance of aphasia after first-ever acute stroke: Impact on early and late outcomes. Neuroepidemiology 33, 96–102 (2009).

Geranmayeh, F., Brownsett, S. L. & Wise, R. J. Task-induced brain activity in aphasic stroke patients: What is driving recovery?. Brain 137, 2632–2648 (2014).

Schumacher, R., Halai, A. D. & Lambon Ralph, M. A. Assessing and mapping language, attention and executive multidimensional deficits in stroke aphasia. Brain 142, 3202–3216 (2019).

Robson, H., Grube, M., Lambon Ralph, M. A., Griffiths, T. D. & Sage, K. Fundamental deficits of auditory perception in Wernicke’s aphasia. Cortex 49, 1808–1822 (2013).

Foxton, J. M., Dean, J. L., Gee, R., Peretz, I. & Griffiths, T. D. Characterization of deficits in pitch perception underlying “tone deafness”. Brain 127, 801–810 (2004).

Foxton, J. M., Nandy, R. K. & Griffiths, T. D. Rhythm deficits in “tone deafness”. Brain Cogn. 62, 24–29 (2006).

Witton, C. et al. Sensitivity to dynamic auditory and visual stimuli predicts nonword reading ability in both dyslexic and normal readers. Curr. Biol. 8, 791–797 (1998).

Corriveau, K., Pasquini, E. & Goswami, U. Basic auditory processing skills and specific language impairment: A new look at an old hypothesis. J. Speech Lang. Hear. Res. 50, 647–666 (2007).

Wieland, E. A., McAuley, J. D., Dilley, L. C. & Chang, S. E. Evidence for a rhythm perception deficit in children who stutter. Brain Lang. 144, 26–34 (2015).

Grube, M. et al. Core auditory processing deficits in primary progressive aphasia. Brain 139, 1817–1829 (2016).

Goll, J. C. et al. Non-verbal sound processing in the primary progressive aphasias. Brain 133, 272–285 (2010).

Grube, M., Kumar, S., Cooper, F. E., Turton, S. & Griffiths, T. D. Auditory sequence analysis and phonological skill. Proc. Biol. Sci. 279, 4496–4504 (2012).

Grube, M., Cooper, F. E. & Griffiths, T. D. Auditory temporal-regularity processing correlates with language and literacy skill in early adulthood. Cogn. Neurosci. 4, 225–230 (2013).

Chi, T., Gao, Y., Guyton, M., Ru, P. & Shamma, S. Spectro-temporal modulation transfer functions and speech intelligibility. J. Acoust. Soc. Am. 106, 2719–2732 (1999).

Rosen, S. Temporal information in speech: Acoustic, auditory and linguistic aspects. Philos. Trans. R. Soc. Lond. B Biol. Sci. 336, 367–373 (1992).

Fink, M., Churan, J. & Wittmann, M. Temporal processing and context dependency of phoneme discrimination in patients with aphasia. Brain Lang. 98, 1–11 (2006).

von Steinbüchel, N., Wittmann, M., Strasburger, H. & Szelag, E. Auditory temporal-order judgement is impaired in patients with cortical lesions in posterior regions of the left hemisphere. Neurosci. Lett. 264, 168–171 (1999).

Robson, H., Griffiths, T. D., Grube, M. & Woollams, A. M. Auditory, phonological, and semantic factors in the recovery from Wernicke’s aphasia poststroke: Predictive value and implications for rehabilitation. Neurorehabil. Neural Repair 33, 800–812 (2019).

Robin, D. A., Tranel, D. & Damasio, H. Auditory perception of temporal and spectral events in patients with focal left and right cerebral lesions. Brain Lang. 39, 539–555 (1990).

Sihvonen, A. J. et al. Neural basis of acquired amusia and its recovery after stroke. J. Neurosci. 36, 8872–8881 (2016).

Zumbansen, A., Peretz, I. & Hébert, S. The combination of rhythm and pitch can account for the beneficial effect of melodic intonation therapy on connected speech improvements in Broca’s aphasia. Front. Hum. Neurosci. 8, 592 (2014).

Halai, A. D., Woollams, A. M. & Lambon Ralph, M. A. Using principal component analysis to capture individual differences within a unified neuropsychological model of chronic post-stroke aphasia: Revealing the unique neural correlates of speech fluency, phonology and semantics. Cortex 86, 275–289 (2017).

Zhao, Y., Lambonralph, M. A. & Halai, A. D. Relating resting-state hemodynamic changes to the variable language profiles in post-stroke aphasia. Neuroimage Clin. 20, 611–619 (2018).

Goodglass, H. & Kaplan, E. The Assessment of Aphasia and Related Disorders. 2nd ed. (Lea & Febiger, 1983).

Pustina, D. et al. Automated segmentation of chronic stroke lesions using LINDA: Lesion identification with neighborhood data analysis. Hum. Brain Mapp. 37, 1405–1421 (2016).

Ito, K. L., Kim, H. & Liew, S. L. A comparison of automated lesion segmentation approaches for chronic stroke T1-weighted MRI data. Hum. Brain Mapp. 40, 4669–4685 (2019).

Butler, R. A., Lambon Ralph, M. A. & Woollams, A. M. Capturing multidimensionality in stroke aphasia: Mapping principal behavioural components to neural structures. Brain 137, 3248–3266 (2014).

Halai, A. D., Woollams, A. & Lambon Ralph, M. A. Investigating the effect of changing parameters when building prediction models in post-stroke aphasia. Nat. Hum. Behav. 4, 725-735 (2020).

Alyahya, R. S. W., Halai, A. D., Conroy, P. & Lambon Ralph, M. A. A unified model of post-stroke language deficits including discourse production and their neural correlates. Brain 143, 1541–1554 (2020).

Raven, J. C. Advanced Progressive Matrices, set II. (H. K. Lewis, 1962).

Levitt, H. Transformed up-down methods in psychoacoustics. J. Acoust. Soc. Am. 49(Suppl 2), 467 (1971).

Grube, M. & Griffiths, T. D. Metricality-enhanced temporal encoding and the subjective perception of rhythmic sequences. Cortex 45, 72–79 (2009).

Quade, D. Rank analysis of covariance. J. Am. Stat. Assoc. 62, 1187–1200 (1967).

Preacher, K. J. & MacCallum, R. C. Exploratory factor analysis in behavior genetics research: Factor recovery with small sample sizes. Behav. Genet. 32, 153–161 (2002).

Crawford, J. R., Garthwaite, P. H. & Ryan, K. Comparing a single case to a control sample: Testing for neuropsychological deficits and dissociations in the presence of covariates. Cortex 47, 1166–1178 (2011).

Kaiser, H. An index of factor simplicity. Psychometrika 39, 31–36 (1974).

Kay, J., Lesser, R. & Coltheart, M. PALPA: Psycholinguistic Assessments of Language Processing in Aphasia (Erlbaum, New Jersey, 1992).

Kaplan, E., Goodglass, H. & Weintraub, S. Boston Naming Test. (Lea & Febiger, 1983).

Wechsler, D. A. Wechsler Memory Scale—Revised Manual. (Psychological Corporation, 1987).

Bozeat, S., Lambon Ralph, M. A., Patterson, K., Garrard, P. & Hodges, J. R. Non-verbal semantic impairment in semantic dementia. Neuropsychologia 38, 1207–1215 (2000).

Swinburn, K., Baker, G. & Howard, D. CAT: The Comprehensive Aphasia Test. (Psychology Press, 2005).

Jefferies, E., Patterson, K., Jones, R. W. & Lambon Ralph, M. A. Comprehension of concrete and abstract words in semantic dementia. Neuropsychology 23, 492–499 (2009).

Burgess, P. W. & Shallice, T. The Hayling and Brixton tests (Thames Valley Test Company, Suffolk, 1997).

Povel, D. & Essens, P. Perception of temporal patterns. Music Percept. 2, 411–440 (1985).

Povel, D. J. A theoretical framework for rhythm perception. Psychol. Res. 45, 315–337 (1984).

Peretz, I. Processing of local and global musical information by unilateral brain-damaged patients. Brain 113, 1185–1205 (1990).

Liégeois-Chauvel, C., Peretz, I., Babaï, M., Laguitton, V. & Chauvel, P. Contribution of different cortical areas in the temporal lobes to music processing. Brain 121, 1853–1867 (1998).

Baird, A. D., Walker, D. G., Biggs, V. & Robinson, G. A. Selective preservation of the beat in apperceptive music agnosia: A case study. Cortex 53, 27–33 (2014).

Bekius, A., Cope, T. E. & Grube, M. The beat to read: A cross-lingual link between rhythmic regularity perception and reading skill. Front. Hum. Neurosci. 10, 425 (2016).

Aguilar, O. M. et al. Dorsal and ventral visual stream contributions to preserved reading ability in patients with central alexia. Cortex 106, 200–212 (2018).

Stahl, B., Kotz, S. A., Henseler, I., Turner, R. & Geyer, S. Rhythm in disguise: Why singing may not hold the key to recovery from aphasia. Brain 134, 3083–3093 (2011).

Brendel, B. & Ziegler, W. Effectiveness of metrical pacing in the treatment of apraxia of speech. Aphasiology 22, 77–102 (2008).

Peretz, I., Champod, A. S. & Hyde, K. Varieties of musical disorders. The Montreal Battery of Evaluation of Amusia. Ann. N. Y. Acad. Sci. 999, 58–75 (2003).

Grahn, J. A. & Brett, M. Impairment of beat-based rhythm discrimination in Parkinson’s disease. Cortex 45, 54–61 (2009).

Grahn, J. A. & Brett, M. Rhythm and beat perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906 (2007).

Grahn, J. A. & Rowe, J. B. Finding and feeling the musical beat: striatal dissociations between detection and prediction of regularity. Cereb. Cortex 23, 913–921 (2013).

Acknowledgements

We would like to thank the volunteers for participating in this research.

Funding

JDS is a Wellcome clinical PhD fellow funded on grant 203914/Z/16/Z to the Universities of Manchester, Leeds, Newcastle and Sheffield. The research was supported by an ERC Advanced grant to MALR (GAP: 670428), by WT106964MA to TDG, and by Medical Research Council intramural funding (MC_UU_00005/18). The funding sources had no involvement in study design, data collection, analysis, writing or submission.

Author information

Authors and Affiliations

Contributions

J.D.S. contributed to study design, data collection, analysis and write-up. M.A.L.R. contributed to study design and write-up. B.D.D.P. contributed to data collection and write-up. T.D.G. and M.G. contributed to study design, analysis and write-up.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stefaniak, J.D., Lambon Ralph, M.A., De Dios Perez, B. et al. Auditory beat perception is related to speech output fluency in post-stroke aphasia. Sci Rep 11, 3168 (2021). https://doi.org/10.1038/s41598-021-82809-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-82809-w

This article is cited by

-

Embodying Time in the Brain: A Multi-Dimensional Neuroimaging Meta-Analysis of 95 Duration Processing Studies

Neuropsychology Review (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.