Abstract

The spread of intelligent transportation systems in urban cities has caused heavy computational loads, requiring a novel architecture for managing large-scale traffic. In this study, we develop a method for globally controlling traffic signals arranged on a square lattice by means of a quantum annealing machine, namely the D-Wave quantum annealer. We first formulate a signal optimization problem that minimizes the imbalance of traffic flows in two orthogonal directions. Then we reformulate this problem as an Ising Hamiltonian, which is compatible with quantum annealers. The new control method is compared with a conventional local control method for a large 50-by-50 city, and the results exhibit the superiority of our global control method in suppressing traffic imbalance over wide parameter ranges. Furthermore, the solutions to the global control method obtained with the quantum annealing machine are better than those obtained with conventional simulated annealing. In addition, we prove analytically that the local and the global control methods converge at the limit where cars have equal probabilities for turning and going straight. These results are verified with numerical experiments.


Introduction

For the last two decades, intelligent and efficient transportation systems have been developed, and control methods for the cooperative management of such systems have therefore become increasingly important1,2,3. In particular, adaptive traffic signal operation that reflects the traffic conditions is crucial for avoiding stagnation of traffic flows4,5. Various methods, which employ techniques such as genetic algorithms6, swarm intelligence7, neural networks8, and reinforcement learning9,10, have been proposed for such adaptive control11,12,13,14,15,16. These studies consider local control, where the state of each signal is determined from neighboring information; such control hardly achieves a global optimum for managing the traffic conditions of the entire city. Achieving a global optimum instead requires solving a large-scale combinatorial optimization problem. The difficulty of finding an optimal solution to such a problem scales exponentially with the size of the city, because of the computational complexity of combinatorial optimization.

Similar computational difficulties frequently appear in other fields. Accordingly, in recent years, various dedicated algorithms and hardware have been developed to address this issue17,18,19. Their main strategy is to focus on particular combinatorial optimization problems that can be transformed into an Ising model. Examples of the specialized hardware include the Coherent Ising Machine provided by NTT Corporation20,21, the Simulated Bifurcation Machine by Toshiba Corporation22, and the Digital Annealer by Fujitsu Corporation23,24. Among them, the Quantum Annealer 2000Q from D-Wave Systems Inc. has attracted much attention as the world’s first hardware implementation of quantum annealing25 using a quantum processing unit. In quantum annealing, a phenomenon called quantum fluctuation is used to search candidate solutions of the given problem simultaneously, which is expected to enable a faster and more accurate solution search than other heuristic search methods25,26. In this paper, we refer to the method using the 2000Q as the quantum annealing. Although the quantum annealing is expected to be an effective prescription for large-scale combinatorial optimization problems, it is not a panacea, because its advantage over classical simulated annealing methods is reduced depending on the type of the transformed Ising model. In addition, hardware constraints limit the number of available variables and the class of solvable problems27. Hence, the search for compatible applications that exploit the power of quantum annealing is becoming an active research area28,29,30,31,32,33,34.

In this paper, we propose a method for globally controlling traffic signals in an urban city using the quantum annealing. We consider a situation in which many cars moving on a lattice network are controlled via traffic signals installed at each intersection. To handle this network analytically, we consider a simplified situation in which two states are assumed for each signal: traffic is allowed in either the north–south direction or the east–west direction. The cars moving on the lattice are assumed to choose whether to make a turn or to go straight at an intersection with a given probability. We then formulate the signal operation problem as a combinatorial optimization problem. The objective function of the formulated problem is shown to be formally consistent with the Hamiltonian of the Ising model. The Ising model is a statistical-physics model of ferromagnetism that represents the behavior of a spin system, and it captures the relation between the microscopic state of spins and the macroscopic phenomena of magnetic phase transitions35,36,37,38. Importantly, the problem reformulated by means of the Ising model, with the aid of a graph embedding technique, is compatible with the class of problems that the 2000Q accepts; hence, one can apply the quantum annealing to solve the signal optimization problem.

By reformulating the problem using Ising minimization, this study makes three contributions to signal optimization. First, by performing numerical experiments, we confirm the engineering effectiveness of the proposed method using quantum annealing. Results of experiments using a large city consisting of \(50 \times 50\) intersections show that the proposed method achieves high quality signal operation, compared with the results of a conventional local control method39. The reformulated optimization problem is also solved using a classical simulated annealing method, but the quantum annealing method is found to give a better solution in a specific parameter domain. Second, a theoretical correspondence between the local and global control methods is found. We analytically show that the conventional local control is consistent with the solution of the global signal optimization problem at the limit where the probability of cars going straight is equal to the probability of them turning. This result provides a theoretical basis for the numerical prediction of the previous study39, where the local control is found to cause phase transitions similar to those of the Ising model. The last contribution is the knowledge gained for the cooperative operation of traffic signals. Our numerical experiments show a strong correlation between a signal and its neighboring signals. In addition, a strong temporal correlation of signals emerges, that is, the signal display at a certain time is correlated with the displays in the previous several steps. This spatio-temporal correlation becomes stronger as the straight driving probability of cars increases. Our results suggest the necessity of signal cooperation for smooth traffic flow, with variation of cooperation strength depending on the rate at which vehicles drive straight.

Results

Traffic signal optimization problem

Consider \(L\times L\ (L\in {{\mathbb{N}}})\) roads arranged in east–west and north–south directions with a periodic boundary condition. Each road consists of two lanes, one in each direction. Traffic signals are located at each intersection to control the flow of vehicles traveling on the roads. The signal at each node i has one of two states: \(\sigma _{i}=+1\), which allows vehicle flow only in the north–south direction, and \(\sigma _{i}=-1\), which allows vehicle flow only in the east–west direction. Each car goes straight through each intersection at fixed probability \(a\in [0,1]\) and otherwise turns to the left or right with equal probabilities, that is, \((1-a)/2\) for each direction. Figure 1 illustrates this situation.

Figure 1. Traffic signal model. (a) Grid pattern of roads. (b) The two states of traffic signals at each intersection. In the case of \(\sigma =+1\), the vehicles coming from the horizontal direction stop, and the vehicles coming from the vertical direction go straight at the rate of a, turn right at the rate of \((1-a)/2\), and turn left at the rate of \((1-a)/2\). The rate \(1-a\) shown for the horizontal direction is the sum of the vehicles from the two vertical directions. In the case of \(\sigma =-1\), the roles of the vertical and horizontal directions are reversed. This problem setting basically follows Ref. 39.

Reference 39 shows that the number of vehicles \(q_{ij}\in {{\mathbb{R}}}_{+}\) in the traffic lane from intersection j to i evolves according to the following difference equation:

where \(\alpha :=2a-1\), and \(s_{ij}\in \{\pm 1\}\) is the direction of the lane from node j to i; here, \(s_{ij}=+1\) denotes north–south and \(s_{ij}=-1\) denotes east–west. We note here that \(q_{ij}\) is normalized by the number of cars passing per unit of time. Precisely, in terms of the mean flux of moving cars \(Q_{\mathrm{av}}\) and the dimensional time unit \(\Delta t\), \(t=t^{*}/\Delta t\) and \(q_{ij}=q^{*}_{ij}/(Q_{\mathrm{av}}\Delta t)\), where \(t^{*}\) is the dimensional time and \(q^{*}_{ij}\) is the number of vehicles in a lane. See “Methods” for the detailed derivation of Eq. (1). We define a quantity that represents the deviation between the north–south flow and the east–west flow at each intersection i as

where \({{\mathcal{N}}}(i)\) represents the index of the four intersections adjacent to intersection i. Equation (2) transforms Eq. (1) into a time evolution equation for the flow bias x(t) as follows:

where the flow bias vector is defined as \({\mathbf{x}}:=[x_{1}, \ldots , x_{{L^{2}}}]^{\top }\) and the signal state vector is defined as \(\pmb {\sigma }:=[\sigma _{1}, \ldots , \sigma _{{L^{2}}}]^{\top }\). The matrix \(A\in {{\mathbb{R}}}^{L^{2}\times L^{2}}\) is the adjacency matrix of the periodic lattice graph.
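As a minimal sketch of how the adjacency matrix of the periodic lattice graph could be assembled, the following pure-Python snippet builds A for a small lattice. The function name `lattice_adjacency` and the row-major node indexing are assumptions made for illustration and are not taken from the original study.

```python
def lattice_adjacency(L):
    """Adjacency matrix (list of lists) of an L x L periodic square lattice.

    Assumption: the intersection in row r and column c has index r * L + c.
    """
    n = L * L
    A = [[0] * n for _ in range(n)]
    for r in range(L):
        for c in range(L):
            i = r * L + c
            # Four neighbours; the modulo implements the periodic boundary.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                j = ((r + dr) % L) * L + (c + dc) % L
                A[i][j] = 1
    return A


A = lattice_adjacency(5)
row_sums = [sum(row) for row in A]  # degree of every intersection
```

For any \(L \ge 3\), every row sums to 4, which is the degree of each node in the lattice graph.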

Next, we define the following objective function to evaluate traffic conditions at each time step:

where the first term on the right-hand side suppresses the flow bias during the next time step at each intersection, the second term prevents the traffic signal state at each intersection from switching too frequently, and \(\eta \in {{\mathbb{R}}}_{+}\) is a weight parameter for determining the ratio of the two terms. The traffic signal state \(\sigma _{i}(t)\) at each time step is determined so that the objective function (4) is minimized; that is, we want to find the value of \(\pmb {{\bar{\sigma }}}(t)\) that satisfies

Ising formulation and optimization

Substituting Eq. (3) into Eq. (4) gives the following representation:

where c(t) is a constant term that does not include \(\pmb {\sigma }(t)\). By defining the variables

we can represent the objective function (6) as follows:

Equation (10) is a quadratic form with variables \(\{\pm 1\}\), which matches the Hamiltonian form of the Ising model35. Hence, solving the signal optimization problem of the objective function (4) is regarded as equivalent to the problem of finding the spin direction \(\sigma _{i}\in \{\pm 1\}\) that minimizes the Ising Hamiltonian of Eq. (10). Because the Ising Hamiltonian is compatible with the class of problems that the 2000Q accepts, the quantum annealing can be applied to solve the signal optimization problem.
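To illustrate what minimizing an Ising Hamiltonian means, the sketch below evaluates a generic quadratic form \(H(\pmb{\sigma}) = \pmb{\sigma}^{\top} J \pmb{\sigma} + {\mathbf{h}}^{\top}\pmb{\sigma}\) and finds its minimizer by exhaustive search on a three-spin toy instance. The generic form, the toy values of J and h, and the function names are assumptions for illustration; exhaustive search is only feasible for a handful of spins and is not the method used in this study.

```python
from itertools import product


def ising_energy(sigma, J, h):
    """H(sigma) = sum_ij J[i][j] s_i s_j + sum_i h[i] s_i (assumed generic form)."""
    n = len(sigma)
    quad = sum(J[i][j] * sigma[i] * sigma[j] for i in range(n) for j in range(n))
    lin = sum(h[i] * sigma[i] for i in range(n))
    return quad + lin


def brute_force_minimum(J, h):
    """Enumerate all 2^n spin configurations; practical only for tiny n."""
    n = len(h)
    best = min(product((-1, 1), repeat=n), key=lambda s: ising_energy(s, J, h))
    return list(best), ising_energy(best, J, h)


# Hypothetical toy instance: a ferromagnetic chain with a small field on spin 0.
J = [[0.0, -1.0, 0.0],
     [-1.0, 0.0, -1.0],
     [0.0, -1.0, 0.0]]
h = [0.5, 0.0, 0.0]
sigma_star, energy_star = brute_force_minimum(J, h)
```

Because the number of configurations grows as \(2^{n}\), this approach cannot be used for the 2500 variables of a \(50 \times 50\) city, which is the motivation for heuristic solvers such as simulated annealing and quantum annealing.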

We use a city consisting of \(50 \times 50\) intersections to consider the signal operation problem, and we compare the results of numerical experiments on the following three methods for traffic control:

-

Local control, which determines the signal display at each time step with the following local rules:

$$\begin{aligned} {\left\{ \begin{array}{ll} \sigma _{i}(t) \leftarrow +1 &{}\quad {\text{if }} x_{i}(t) \ge +\theta ,\\ \sigma _{i}(t) \leftarrow -1 &{}\quad {\text{if }} x_{i}(t) \le -\theta . \end{array}\right. } \end{aligned}$$(11)Equation (11) switches the display of the traffic signals to reduce the flow bias when the magnitude of the bias becomes larger than the threshold value \(\theta \in {{\mathbb{R}}}_{+}\) at each intersection. To compare the local control with the optimal control, the value of the switching parameter \(\theta\) is determined such that the common objective function (4) is minimized. For details, refer to “Methods” section.

-

Optimal control with simulated annealing, which reduces Eq. (10) at each time step using the simulated annealing. The simulated annealing is an algorithm for finding a solution by examining the vicinity of the current solution at each step and probabilistically determining whether it should stay in the current state or switch to a vicinity state. See Ref.40 for details of the simulated annealing. We used the neal library provided by D-Wave for executing this algorithm.

-

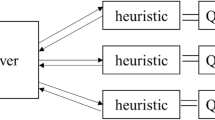

Optimal control with quantum annealing, which reduces Eq. (10) by using the quantum annealing with the D-Wave 2000Q. Because the problem size exceeds the size of problems that 2000Q can solve, it is subdivided by the graph partitioning technique. We used the ocean library provided by D-Wave for executing this algorithm. See “Methods” for the detailed procedure.
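The first two methods can be sketched in a few lines of pure Python. The snippet below is only an illustration under stated assumptions: the local rule keeps the previous display when the bias lies strictly inside \((-\theta , +\theta )\), the Hamiltonian is taken in the generic form \(\pmb{\sigma}^{\top} J \pmb{\sigma} + {\mathbf{h}}^{\top}\pmb{\sigma}\), and the single-spin-flip Metropolis annealer with geometric cooling and a final greedy quench is a textbook variant, not the implementation of the neal library. All function names and default parameters are hypothetical.

```python
import math
import random


def local_control_step(sigma_prev, x, theta):
    """Threshold rule of Eq. (11) applied to every intersection.

    Assumption: a signal whose bias lies strictly inside (-theta, +theta)
    keeps its previous display.
    """
    sigma = []
    for s_prev, x_i in zip(sigma_prev, x):
        if x_i >= theta:
            sigma.append(+1)
        elif x_i <= -theta:
            sigma.append(-1)
        else:
            sigma.append(s_prev)
    return sigma


def flip_cost(sigma, J, h, i):
    """Energy change of flipping spin i for H = s^T J s + h^T s."""
    field = sum((J[i][j] + J[j][i]) * sigma[j]
                for j in range(len(sigma)) if j != i)
    return -2.0 * sigma[i] * (field + h[i])


def simulated_annealing(J, h, sweeps=200, t_start=5.0, t_end=0.05, seed=0):
    """Single-spin-flip Metropolis annealing with geometric cooling."""
    rng = random.Random(seed)
    n = len(h)
    sigma = [rng.choice((-1, 1)) for _ in range(n)]
    for k in range(sweeps):
        temp = t_start * (t_end / t_start) ** (k / max(sweeps - 1, 1))
        for i in range(n):
            delta = flip_cost(sigma, J, h, i)
            # Always accept downhill moves; accept uphill moves with
            # probability exp(-delta / temp).
            if delta <= 0 or rng.random() < math.exp(-delta / temp):
                sigma[i] = -sigma[i]
    # Zero-temperature quench: flip spins until no single flip lowers H.
    improved = True
    while improved:
        improved = False
        for i in range(n):
            if flip_cost(sigma, J, h, i) < 0:
                sigma[i] = -sigma[i]
                improved = True
    return sigma


# Local rule on four intersections with threshold theta = 1.
sigma_local = local_control_step([+1, -1, +1, -1], [2.0, -0.5, -3.0, 0.5], theta=1.0)

# Annealing on a field-only toy problem, whose minimizer is -sign(h).
J_zero = [[0.0] * 3 for _ in range(3)]
sigma_sa = simulated_annealing(J_zero, h=[1.0, -2.0, 0.5])
```

The final quench guarantees that the returned configuration is a local minimum with respect to single spin flips. It does not guarantee a global minimum, which is consistent with the observation that simulated annealing may fail to reach the global optimum for hard instances.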

Figure 2 shows snapshots of the signal display at time \(t = 100\) for \(\alpha = 0.8\) and \(\eta =1.0\), where \(\alpha\) is the parameter related to vehicle’s straight driving probability and \(\eta\) is the weight parameter in the objective function (4). The flow bias distribution at the initial time \({\mathbf{x}}(0)\) is generated as random numbers following a uniform distribution in \([-5.0,\ 5.0]\), and the signal states at the initial time \(\pmb {\sigma }(0)\) are generated as random numbers following a binomial distribution of \(\{\pm 1\}\). In Fig. 2, blue dots mean that the cars are allowed to pass in the east–west direction, and red dots mean that the cars are allowed to pass in the north–south direction. We observe the synchronization of proximity signals under optimal control (see Fig. 2b,c), while the two direction states are distributed rather uniformly under local control (see Fig. 2a). The correlation of proximity signal states is quantitatively analyzed in “Discussion”.

Figure 2. Snapshots of traffic signals under different control methods. (a) Local controller using Eq. (11), (b) Global controller optimizing Eq. (10) with the simulated annealing, and (c) Global controller optimizing Eq. (10) with the D-Wave 2000Q. Red and blue dots represent vertical and horizontal directions allowed at each crossing, respectively. Parameters \(\alpha , \eta\), and L are fixed as \(\alpha =0.8, \eta =1.0\), and \(L=50\), respectively. For the D-Wave method, the Hamiltonian is divided into 42 groups and the optimization problem is solved in parallel. See “Methods” for details. (The data are plotted with Python/matplotlib.)

Figure 3a plots the time evolution of the Hamiltonian of Eq. (10) for each method in the case of \(\alpha = 0.8\) and \(\eta =1.0\). In all three methods, the signals change rapidly over time to reduce the Hamiltonian. The value of the Hamiltonian in the steady state is the smallest in the quantum annealing method, followed by the simulated annealing method, and it is the largest under local control. That is, the optimal control using the quantum annealing exhibits the best performance among these methods. We also attempted a comparison with the exact solution for the same simulation using the Gurobi package. Although computing the exact solution for the entire time interval was not feasible in a reasonable time because of the large number of variables (2500 variables), the Hamiltonian averaged over the first three steps showed the same order of accuracy. In Fig. 3, the responses of the quantum annealing and the simulated annealing are more oscillatory than that of the local control. This is because the objective function of Eq. (4) only contains states up to one step ahead. An optimal value at one time is not necessarily consistent with the optimal values for long-time behavior, resulting in a more oscillatory response. If we use an objective function including more than two steps ahead, the oscillatory phenomenon should be suppressed, although this makes the formulation more complex, hindering the direct use of the quantum annealing.

Figure 3. Hamiltonian of Eq. (10) under different control methods. (a) Time evolution of the Hamiltonian, where the parameters \(\alpha , \eta\), and L are fixed as \(\alpha =0.8, \eta =1.0\), and \(L=50\), respectively. (b) Time average of the Hamiltonian as a function of \(\alpha\), where the parameters \(\eta\) and L are the same as those in (a).

We examine the effect of changing the parameter \(\alpha\), which is related to the vehicle’s straight driving probability, on the Hamiltonian of Eq. (10). The time average of the Hamiltonian of Eq. (10), denoted as \({\bar{H}}\), is plotted in Fig. 3b. As \(\alpha\) approaches zero, the values of the Hamiltonian for the local and optimal control methods converge to a common value. This suggests that the local control gives the solution to the signal optimization problem in the limit of \(\alpha \rightarrow 0\). The validity of this conjecture is explored in “Discussion”. In the interval of \(\alpha \in [0.2,\ 0.8]\), the Hamiltonian under optimal control is smaller than that under local control, showing that the optimal control method performs better than the local control in this range. However, in the simulated annealing method at \(\alpha > 0.8\), the value of the Hamiltonian is larger than that under the local control method, suggesting that the simulated annealing does not reach the global optimal solution. In contrast, under the quantum annealing method at \(\alpha > 0.8\), the value of the Hamiltonian is smaller than those under the other two methods, which means that the solution is closer to the global optimum. Here, we briefly discuss why \({\bar{H}}\) is slightly better for the simulated annealing than for the quantum annealing in the parameter domain of \(\alpha \in [0.2,\ 0.8]\). In the range of large values of \(\alpha\), obtaining an exact solution of Eq. (4) is hard because of the high impact of the quadratic term. Indeed, in this parameter range, the quantum annealing gives better optimization results than the simulated annealing. On the other hand, regardless of the problem to be solved, the quantum annealing generally contains stochastic fluctuations in the solutions28,32. When the parameter \(\alpha\) is in the intermediate range, where the difficulty inherent in the optimization problem is moderate, both the simulated annealing and the quantum annealing give high-quality solutions, but the simulated annealing gives slightly better solutions than the quantum annealing because the relative strength of the stochastic fluctuations is large.

Discussion

Performance analysis of quantum annealing

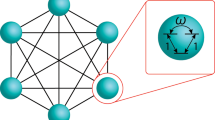

The performance of the D-Wave 2000Q is known to vary depending on the structure of the problem. In particular, when the matrix J in Eq. (8) has a sparse structure, the accuracy of the solution is improved21. To check the sparseness of our formulated problem, we examine the value of all components of J in Eq. (8). First, expanding J yields the following expression:

where the number of non-zero elements in each column of A is 4, because it is equal to the degree of each node in the lattice graph (see the green nodes in Fig. 4a). Also, the number of non-zero elements in each column of \(A^\top A\) is 9 because it coincides with the number of nodes that are connected with the reference node via two edges in the lattice graph (see the orange nodes in Fig. 4a). Thus, the total number of non-zero elements in J is \(13L^{2}\). From this, we calculate \(S_{J}(L)\), the sparseness of matrix J, defined as the ratio of the number of zero-valued elements to the number of all elements in the matrix:

where we confirm that \(S_{J}(L)\rightarrow 1\) as \(L\rightarrow \infty\). In Fig. 4b, we plot \(S_{J}(L)\) given in Eq. (13) to show that the sparseness of matrix J increases with the city size. This leads us to expect that the performance of the D-Wave 2000Q is enhanced for the signal optimization problem in rather large cities, such as the \(L=50\) case considered in the present paper.
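The counting argument above can be checked numerically. The sketch below assumes that the sparseness takes the form \(S_{J}(L) = (L^{4} - 13L^{2})/L^{4} = 1 - 13/L^{2}\), which follows from the stated definition with \(13L^{2}\) non-zero elements out of \(L^{4}\), and that no coefficients cancel. It also counts the lattice nodes within two edges of a reference node on the periodic lattice; the function names are hypothetical.

```python
def sparseness(L):
    """Assumed closed form S_J(L) = 1 - 13 / L^2 (13 L^2 non-zeros out of L^4)."""
    return 1.0 - 13.0 / (L * L)


def nonzeros_per_column(L):
    """Count nodes reachable from a reference node within two edges.

    On a periodic lattice this equals the number of structurally non-zero
    entries per column of J, provided L >= 5 so that wrapped nodes do not
    coincide.
    """
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    reached = {(0, 0)}
    frontier = {(0, 0)}
    for _ in range(2):
        frontier = {((r + dr) % L, (c + dc) % L)
                    for r, c in frontier for dr, dc in steps}
        reached |= frontier
    return len(reached)


count_50 = nonzeros_per_column(50)  # reference node, 4 neighbours, 8 second neighbours
s_50 = sparseness(50)               # 1 - 13 / 2500
```

For \(L=50\), more than 99% of the entries of J are zero under these assumptions.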

Local and optimal control correspondence

As shown in Fig. 5, when the parameter \(\alpha\) of Eq. (1) is sufficiently small, the local control of Eq. (11) approaches the optimal control that is the solution of Eq. (5). When \(\alpha \approx 0\), the term associated with \(\alpha\) in Eq. (10) can be ignored, yielding

Because J in Eq. (14) is a diagonal matrix, the first term \(\pmb {\sigma }(t)^{\top } J \pmb {\sigma }(t)\) on the right-hand side of Eq. (10) is a constant that does not depend on \(\pmb {\sigma }\). Therefore, the minimizer of \(H(\pmb {\sigma }(t))\) is determined depending only on the sign of h in Eq. (15), that is,

for all \(i=1,\ldots ,{L^{2}}\). By transforming Eq. (16), we obtain

for all \(i=1,\ldots ,{L^{2}}\). The control method of Eq. (17) is equivalent to the local control (11) in Ref.39.

Figure 5. Magnetization of Eq. (18) under different control methods. (a) Time evolution of magnetization. Parameters \(\alpha , \eta\), and L are fixed as \(\alpha =0.8, \eta =1.0\), and \(L=50\), respectively. (b) Time average of magnetization as a function of \(\alpha\). Parameters \(\eta\) and L are the same as those in (a).

Because \(\alpha = 0 \Leftrightarrow a = 0.5\) holds, this optimality means that an appropriate vehicle turning rate autonomously eases the flow bias in the local control laws. In addition, the occurrence of this magnetic transition for the signal display, stated in Ref.39, is consistent with the fact that the local control in Eq. (11) actually minimizes the Ising Hamiltonian in Eq. (10). However, note that the optimality of the local control is valid only when \(\alpha \approx 0\), but not when \(\alpha \rightarrow 1\), where the phase transition occurs.
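The relation between the straight driving probability a and the parameter \(\alpha\) can be made concrete with a short sketch. It uses only the definitions \(\alpha = 2a-1\) and the turning probabilities \((1-a)/2\) given in the text; the function names are hypothetical.

```python
def turn_probabilities(a):
    """Probabilities of going straight, turning left, and turning right."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    return {"straight": a, "left": (1.0 - a) / 2.0, "right": (1.0 - a) / 2.0}


def alpha_from_a(a):
    """alpha := 2a - 1, so alpha = 0 corresponds to a = 0.5."""
    return 2.0 * a - 1.0


alpha_balanced = alpha_from_a(0.5)   # straight and turning equally likely
alpha_straight = alpha_from_a(0.9)   # the alpha = 0.8 case used in the figures
probs = turn_probabilities(0.9)
```

At \(a = 0.5\), a car goes straight with the same probability as it turns (left or right combined), which is the limit where the local control coincides with the optimal control.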

Signal synchronization analysis

To analyze the signal correlation observed in Fig. 2, we calculate the magnetization, which is regarded as an important quantity in the Ising model:

In the Ising model, this value represents the spin bias of the entire system, and it is an indicator of ferromagnetic transitions in the system. Figure 5a shows the time variation of the magnetization m(t). The value of the magnetization remains small under local control, whereas it becomes significantly larger under both optimal control methods (simulated annealing and quantum annealing). For each method, at \(\alpha =0.8\), the response of the magnetization oscillates or fluctuates around zero. To confirm this observation, the time average of the magnetization of Eq. (18), denoted as \({\bar{m}}\), is plotted in Fig. 5b. Here, the ferromagnetic transition at \(\alpha \rightarrow 1\), that is, the finite value of \({\bar{m}}\), is observed for the magnetization under local control, which was originally reported in Ref. 39. Also, under optimal control, the time average of the magnetization \({\bar{m}}\) takes a large value when \(\alpha \rightarrow 1\), which shows that a ferromagnetic transition similar to that under local control occurs under optimal control.
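As a minimal sketch, the magnetization of a signal configuration can be computed as the mean of the signal states. This assumes the standard Ising definition \(m = \frac{1}{L^{2}}\sum _{i}\sigma _{i}\); how the time average \({\bar{m}}\) is taken in the study is not reproduced here.

```python
def magnetization(sigma):
    """Mean spin of a configuration with entries in {-1, +1} (assumed definition)."""
    return sum(sigma) / len(sigma)


m_aligned = magnetization([+1, +1, +1, +1])   # all signals north-south
m_balanced = magnetization([+1, -1, +1, -1])  # equal numbers in both states
m_biased = magnetization([+1, +1, +1, -1])
```

A value near zero indicates that the two signal states are equally represented, whereas a value near \(\pm 1\) indicates that most signals display the same direction.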

In addition to the ferromagnetic transition, the large amplitudes observed under optimal control are indeed a quantification of the synchronization of proximity signals observed in Fig. 2. For further analysis of this synchronization, we also evaluate two types of autocorrelation functions. Figure 6a shows the autocorrelation function obtained from the time-series data of the signal state \(\sigma _{i}(t)\) for \(t\in [0,200]\). Here, the autocorrelation function is computed at all intersections, and the average value is displayed in Fig. 6a. Under local control, there is a negative correlation peak around \(t = 3\), which means that the signals switch approximately every 3 time steps. In contrast, under optimal control, the negative correlation peak is in the interval of \(t \in [10,15]\) steps, and the same state is maintained for a longer time than under local control. In general, excessive signal switching is undesirable from a traffic engineering standpoint, and the optimization-based method overcomes this issue. Next, Fig. 6b shows the correlation between the display of signals at one intersection and another intersection, with the distance between the intersections as a parameter. Here, the correlation function is calculated for all the intersections at the fixed time \(t=100\), and the average value thereof is plotted. In Fig. 6b, the distance is normalized to make the distance between adjacent intersections equal to 1. There is almost no correlation between adjacent signals under local control, while there is a positive correlation over distances of up to 4–6 intersections under optimal control.
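The link between the negative correlation peak and the switching interval can be illustrated with a synthetic signal. The sketch below uses a common normalized estimator of the temporal autocorrelation for a single intersection; the exact estimator used in the study is not specified here, so this form and the function name are assumptions. In the study, the result is additionally averaged over all intersections.

```python
def autocorrelation(series, max_lag):
    """Normalized autocorrelation of a time series for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    centered = [s - mean for s in series]
    variance = sum(c * c for c in centered)
    if variance == 0:
        raise ValueError("a constant series has no defined autocorrelation")
    return [
        sum(centered[t] * centered[t + lag] for t in range(n - lag)) / variance
        for lag in range(max_lag + 1)
    ]


# Synthetic signal that switches every 3 time steps.
series = [+1, +1, +1, -1, -1, -1] * 20
corr = autocorrelation(series, max_lag=10)
# Lag of the most negative correlation among lags 1..10.
peak_lag = min(range(1, 11), key=lambda lag: corr[lag])
```

For a signal that switches every 3 steps, the most negative correlation appears at lag 3, which matches the interpretation of the negative peak given above.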

Then, we extract quantities from these correlation functions to investigate the effect of \(\alpha\). First, considering that both the temporal and spatial autocorrelations in Fig. 6 decay while oscillating, both functions are fitted with the following equation:

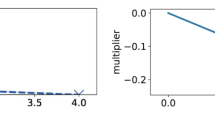

where \(\lambda\) represents the damping rate coefficient, \(\omega\) represents the vibration frequency coefficient, and \(z\in {{\mathbb{R}}}_{+}\) represents different variables, i.e., the time t for the time autocorrelation function and the distance between intersections for the spatial autocorrelation function. Figure 7a plots the \(\omega\) values obtained by fitting Eq. (19) to the time autocorrelation, as a function of \(\alpha\). Under local control, the vibration frequency is \(\omega \approx 1\) regardless of the value of \(\alpha\), while \(\omega\) decreases with increasing \(\alpha\) under optimal control. This suggests that the frequency of signal switching is reduced as the vehicle straight driving rate increases in order to guarantee optimality. In view of the large difference in \(\omega\) between the local control and the optimization-based controls for large values of \(\alpha\), we expect that optimization-based signal controls are particularly effective in preventing excessive switching for high vehicle straight driving rates. Next, we show in Fig. 7b the value of \(\lambda\) obtained by fitting Eq. (19) to the spatial autocorrelation, as a function of \(\alpha\). Under local control, the correlation decreases with an attenuation factor of \(\lambda \approx 1.75\), regardless of the value of \(\alpha\). In contrast, under optimal control, \(\lambda\) decreases as \(\alpha\) increases, which means that the signal displays at more distant intersections remain correlated. These observations show that the synchronization of proximity signals in time and space becomes important for achieving a balanced traffic flow as the probability of vehicles going straight increases.
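The fitting step can be sketched as follows. The snippet assumes a damped cosine \(f(z) = e^{-\lambda z}\cos (\omega z)\) as the fitting function, which is consistent with the description of \(\lambda\) and \(\omega\) but is an assumption about the form of Eq. (19). It uses a plain least-squares grid search on synthetic data rather than the fitting routine of the study; all names and grid ranges are hypothetical.

```python
import math


def damped_cosine(z, lam, omega):
    """Assumed fitting function: exp(-lam * z) * cos(omega * z)."""
    return math.exp(-lam * z) * math.cos(omega * z)


def fit_damped_cosine(zs, values, lam_grid, omega_grid):
    """Return the (lam, omega) grid point with the smallest squared error."""
    best = None
    for lam in lam_grid:
        for omega in omega_grid:
            sse = sum((damped_cosine(z, lam, omega) - v) ** 2
                      for z, v in zip(zs, values))
            if best is None or sse < best[0]:
                best = (sse, lam, omega)
    return best[1], best[2]


# Synthetic correlation data generated with lam = 0.3 and omega = 1.0.
zs = list(range(20))
values = [damped_cosine(z, 0.3, 1.0) for z in zs]
lam_grid = [0.05 * i for i in range(41)]     # 0.0 .. 2.0
omega_grid = [0.05 * j for j in range(61)]   # 0.0 .. 3.0
lam_fit, omega_fit = fit_damped_cosine(zs, values, lam_grid, omega_grid)
```

A grid search is chosen here only to keep the example free of external dependencies; a nonlinear least-squares routine would normally be preferable for real data.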

Figure 7. Parameters extracted from time and spatial autocorrelations, as functions of \(\alpha\) for different control methods. (a) Time autocorrelation function frequency \(\omega\) versus \(\alpha\) and (b) Radially averaged autocorrelation decay rate \(\lambda\) versus \(\alpha\). Parameters \(\eta\) and L are fixed as \(\eta =1.0\) and \(L=50\), respectively.

Effect of parameter \(\eta\)

Here we examine the effect of the parameter \(\eta\), which controls the priority of the smoothness of the entire traffic flow relative to the signal switching frequency in the objective function of Eq. (4). The objective function is designed such that smoothing the flow of cars takes priority for small values of \(\eta\), whereas preventing excessive signal switching takes priority for large values of \(\eta\). The time average of the objective function \({\bar{H}}\) is obtained for various values of \(\eta\), namely \(\eta \in \{ 0.125,0.25,0.5,1,2,4,8\}\). We show the results in Fig. 8 for the simulated annealing (\({\bar{H}}_{\mathrm{SA}}\)) and the quantum annealing (\({\bar{H}}_{\mathrm{QA}}\)), where the ratio \({\bar{H}}_{\mathrm{QA}}/{\bar{H}}_{\mathrm{SA}}\) is plotted; \({\bar{H}}_{\mathrm{QA}}/{\bar{H}}_{\mathrm{SA}}<1\) means that the quantum annealing is better than the simulated annealing, and vice versa. The quantum annealing method performs better for \(\eta\) larger than 0.5, and the simulated annealing performs better for \(\eta\) smaller than 0.5. This suggests that the quantum annealing works better when the priority is on preventing excessive signal switching.

Future improvements

Here we discuss three possible improvements of the results obtained in this study. First, we expect that the solution would be improved by using the most recently released D-Wave machine. The D-Wave machine used in this study has 2048 qubits that are connected with the Chimera structure, in which closely connected 8-qubit units are arranged41. Since the Chimera structure is sparser than a fully connected structure, the representation of arbitrary Ising problems requires a process called minor-embedding to map logical variables to physical qubits. This process, however, significantly reduces the number of available qubits and also deteriorates the computational accuracy. Very recently, D-Wave updated the hardware with a new graph structure called the Pegasus structure. The number of qubits has increased from 2048 to 5024, and the maximum number of connections in the graph structure has increased from 8 to 15 (Ref. 42). These improvements allow us to deal with much larger problems and to realize efficient embedding while sacrificing fewer physical qubits than with the previous D-Wave machine. For the proposed method, this enhancement will significantly reduce the number of divisions of the Hamiltonian (see “Methods” for details), which contributes to fast and highly accurate computations.

Next, adjusting the hyperparameters of the solver could improve the performance of our method. The D-Wave machine has a few hyperparameters, such as the number of samples, the chain strength, and the post-processing. We left most of the parameters at their default values because we focus on examining the ability of the quantum annealing to solve the traffic signal optimization problem. However, as these hyperparameters affect the optimized result, more careful tuning may achieve faster and more accurate calculations. An error mitigation scheme proposed in Ref. 43 could also enhance the performance. We remark here that the problem formulated in this study has the form of an Ising model, which is solvable by several dedicated computers other than the D-Wave machine20,21,22,23. Since the development of these dedicated machines is expected to accelerate further, the proposed framework for traffic flow control will be more generally available in the future.

We finally discuss a further improvement toward application to a real city. The parameter \(\alpha\) in our model is expected to be relatively large in a city with many rational players, because in a grid-like city, each vehicle can reach its destination from any starting point with only one right or left turn. In our experiments, the size of the grid L is empirically determined as \(L=50\) so that it would be comparable to the size of typical grid cities in the world (Kyoto, Japan; Barcelona, Spain; La Plata, Argentina, etc.). It is however desirable to identify these parameters in advance using real-world data. Since the constant probability of each vehicle driving straight ahead and the grid topology of the city are both idealistic assumptions, our traffic signal control method has to be further improved by relaxing these assumptions.

Methods

Derivation of traffic model

Here we derive the model shown in Eq. (1). Let \(q_{ij}^{*}(t)\) be the number of cars that exist between the intersections i and j and \(\Delta t\) be the minimum time interval at which a signal is switched. We denote by \(Q_{\mathrm{av}}\) the average flow rate of cars passing during \(\Delta t\). Then the change in the number of cars from time \(t^{*}\) to the next time \(t^{*}+\Delta t\) is represented as

By normalizing Eq. (20) with \(t:=t^{*}/\Delta t\) and \(q:=q^{*}/(Q_{\mathrm{av}} \Delta t)\), we obtain the following equation:

which is essentially identical to Eq. (1). In this paper, we consider the result of solving Eq. (21). The dimensional time and the actual number of cars are recovered by inverting the above normalization.

We remark on how the number of cars, the speed, and the minimum signal switching interval enter the model. First, a solution of the model in Eq. (1) is valid for an arbitrary number of cars. For example, the solution for \({\bar{q}}^{*}(0)=\gamma q^{*}(0)\) with some \(\gamma \in {{\mathbb{R}}}_{+}\) is obtained by setting \({\bar{Q}}_{\mathrm{av}} = \gamma Q_{\mathrm{av}}\), because the average flow rate is defined by “vehicle density” \(\times\) “average speed”. Second, let us consider the case where the average speed is multiplied by \(\gamma\). The average flow rate \(Q_{\mathrm{av}}\) should then be \({\tilde{Q}}_{\mathrm{av}} = \gamma Q_{\mathrm{av}}\), while \(q^{*}(0)\) remains the same. Therefore, while Eq. (21) normalized by \(\tilde{Q}_{\mathrm{av}}\) does not apparently change, the initial value should be adjusted to \({\tilde{q}}_{ij}(0) = q_{ij}^{*0}/(\tilde{Q}_{\mathrm{av}} \Delta t) = q_{ij}(0)/\gamma\). Similarly, for the case of \(\Delta {\hat{t}} := \gamma \Delta t\), the normalized Eq. (21) does not apparently change, but the initial value should be \({\hat{q}}_{ij}(0) = q_{ij}^{*0}/(Q_{\mathrm{av}} \Delta {\hat{t}}) = q_{ij}(0)/\gamma\).
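The three rescalings above can be checked numerically. The following is a minimal sketch of the normalization \(q = q^{*}/(Q_{\mathrm{av}} \Delta t)\) only; the function name `normalize` and all numerical values are illustrative assumptions, not values used in the experiments.

```python
def normalize(q_star, q_av, dt):
    """Dimensionless number of cars, q = q* / (Q_av * dt)."""
    return q_star / (q_av * dt)

# Illustrative values (assumptions, not taken from the experiments).
q_star0 = 30.0   # initial number of cars on one road
q_av = 0.5       # average flow rate [cars per second]
dt = 20.0        # minimum signal switching interval [seconds]
gamma = 2.0      # scaling factor

q0 = normalize(q_star0, q_av, dt)

# Case 1: gamma times more cars, with Q_av scaled by gamma as well.
q0_more_cars = normalize(gamma * q_star0, gamma * q_av, dt)

# Case 2: average speed scaled by gamma, same initial number of cars.
q0_faster = normalize(q_star0, gamma * q_av, dt)

# Case 3: switching interval scaled by gamma, same initial number of cars.
q0_longer_dt = normalize(q_star0, q_av, gamma * dt)

print(q0, q0_more_cars, q0_faster, q0_longer_dt)
```

In the first case the normalized initial value is unchanged, whereas in the second and third cases it is divided by \(\gamma\), as stated in the text.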

Parameter identification for objective function

As stated in “Discussion”, a direct correspondence between the optimal control and local control is established for small values of \(\alpha\), with the apparent relation \(\theta = \eta\) between the local control switching constant \(\theta\) in Eq. (11) and the optimal control weight parameter \(\eta\) in Eq. (4). To make a systematic comparison for an arbitrary value of \(\alpha\), however, we still need to construct a protocol to determine the values of \(\theta\) and \(\eta\). The strategy is described as follows. Given a value of \(\eta\), we select a value of \(\theta\), denoted by \({\hat{\theta }}\), from a candidate set \(\Theta\) via the following auxiliary numerical analysis:

1. For one value of \(\theta\) in the set \(\Theta\), a numerical simulation using local control (11) is performed to obtain the time series data \({\mathbf{x}}(t)\) and \(\pmb {\sigma }(t)\). The value of the objective function (4) with the given \(\eta\) is calculated from the obtained time series data, and its time average is denoted as \({\bar{H}}(\theta )\).

2. Step 1 is performed for all \(\theta\) in \(\Theta\) to find the \({\hat{\theta }}\) that minimizes the time average \({\bar{H}}\), that is, \({\hat{\theta }} = \mathop {\mathrm{arg min}}\nolimits _{\theta \in \Theta }{\bar{H}}(\theta )\).
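The two steps above amount to a grid search over \(\Theta\). A minimal sketch is given below, assuming a user-supplied simulation routine; the names `select_theta` and `toy_simulation` are hypothetical, and the toy routine is only a convex stand-in for the local-control simulation (it mimics the \(\alpha = 0\) behavior and does not implement Eq. (11) or Eq. (4)).

```python
def time_average(values):
    """Arithmetic mean of a time series."""
    return sum(values) / len(values)

def select_theta(candidates, simulate, eta):
    """Return (theta_hat, averages), where theta_hat minimizes the
    time-averaged objective over the candidate set.

    `simulate(theta, eta)` must return the time series of the objective
    function evaluated along a local-control run with constant theta.
    """
    averages = {}
    for theta in candidates:              # Step 1, repeated for every theta
        averages[theta] = time_average(simulate(theta, eta))
    theta_hat = min(averages, key=averages.get)   # Step 2: arg min
    return theta_hat, averages

def toy_simulation(theta, eta, steps=10):
    """Hypothetical stand-in for the traffic simulation (NOT the model of
    the paper): a convex objective with a small periodic ripple."""
    return [(theta - eta) ** 2 + 0.01 * (t % 2) for t in range(steps)]

candidate_set = [0.0, 0.5, 1.0, 1.5, 2.0]
theta_hat, h_bar = select_theta(candidate_set, toy_simulation, eta=1.0)
print(theta_hat)
```

For a non-convex \({\bar{H}}\), the exhaustive scan still returns the global minimizer over the candidate set, which is why a grid search is used here rather than a local descent.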

We plot the result of the above procedure in Fig. 9. Figure 9a shows \({\bar{H}}\) against \(\theta\) when \(\eta\) is fixed at \(\eta = 1.0\). When \(\alpha = 0\), \({\bar{H}}\) is a convex function and indeed \({\hat{\theta }} \approx \eta\) is satisfied. However, for larger values of \(\alpha\), \({\bar{H}}\) becomes non-convex, and particularly for \(\alpha =0.995\), the relation \({\hat{\theta }} = \eta\) no longer holds. Figure 9b shows the value of \({\hat{\theta }}\) that minimizes \({\bar{H}}\) versus \(\eta\) for the interval \(\eta \in [0.0, \ 3.0]\). When \(\alpha = 0\), the linear relation \({\hat{\theta }} = \eta\) approximately holds, but when \(\alpha \ne 0\), this relation breaks down and some discontinuities appear. These discontinuities correspond to the changes in the local minima observed in Fig. 9a.

Figure 9. Correspondence between \(\eta\) and \(\theta\). (a) Time average of the objective function \({\bar{H}}\) versus \(\theta\), when the value of \(\eta\) is fixed as \(\eta =1.0\). The cases with \(\alpha \in \{0.0,0.5,0.995\}\) are shown. (b) \({\hat{\theta }}\) versus \(\eta\) for \(\alpha \in \{0.0,0.5,0.995\}\).

Implementation on D-Wave 2000Q

All experiments in this study are conducted on a Linux computer with 64 GB of memory and a clock speed of 3.70 GHz. All methods are implemented using the programming language Python (version 3.7).

We use DW_2000Q_VFYC_5 as the machine solver, with the aid of D-Wave’s Ocean library for the actual implementation. Here, the VFYC solver partially emulates some qubits that are temporarily unavailable because of hardware failures44. The number of samplings is specified through a parameter named num_reads, which we set to 100 in all experiments. The validity of this parameter setting was confirmed by preliminary experiments using several candidate values.

To embed the logical variables into the physical qubits of the D-Wave machine, we use a tool called minorminer (Apache license 2.0), which is a heuristic embedding method in the Ocean library45. We perform the embedding operation every time a problem is sent to the 2000Q in order to average out the bias in embedding quality.

For simulated annealing, the neal solver in the Ocean library is used. This solver also allows us to specify the number of samplings through a parameter called num_reads, which we set to 100, i.e., the same value as in quantum annealing.
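To illustrate what the num_reads parameter controls, the following is a minimal pure-Python sketch of simulated annealing on a small Ising problem, in which the annealing is repeated num_reads times and the lowest-energy sample is kept. It is not the neal implementation; the function names, the linear schedule in the inverse temperature, the number of sweeps, and the four-spin test problem are all assumptions made for illustration. With the Ocean library installed, the corresponding call would be `neal.SimulatedAnnealingSampler().sample_ising(h, J, num_reads=100)`.

```python
import math
import random

def ising_energy(spins, h, J):
    """E = sum_i h_i s_i + sum_{(i,j)} J_ij s_i s_j (assumed convention)."""
    energy = sum(h[i] * spins[i] for i in h)
    energy += sum(w * spins[i] * spins[j] for (i, j), w in J.items())
    return energy

def anneal_once(h, J, sweeps, rng):
    """One annealing run with single-spin-flip Metropolis updates."""
    spins = {i: rng.choice((-1, 1)) for i in h}
    neighbors = {i: [] for i in h}
    for (i, j), w in J.items():
        neighbors[i].append((j, w))
        neighbors[j].append((i, w))
    for sweep in range(sweeps):
        # Inverse temperature increases linearly from 0.1 to 5.0 (assumed schedule).
        beta = 0.1 + 4.9 * sweep / max(sweeps - 1, 1)
        for i in spins:
            local_field = h[i] + sum(w * spins[j] for j, w in neighbors[i])
            delta = -2.0 * spins[i] * local_field   # energy change if spin i flips
            if delta <= 0 or rng.random() < math.exp(-beta * delta):
                spins[i] = -spins[i]
    return spins

def sample_ising(h, J, num_reads=100, sweeps=200, seed=0):
    """Repeat the annealing num_reads times and return the best sample."""
    rng = random.Random(seed)
    reads = [anneal_once(h, J, sweeps, rng) for _ in range(num_reads)]
    return min(reads, key=lambda s: ising_energy(s, h, J))

# Toy problem: ferromagnetic four-spin chain with a small bias on spin 0.
h = {0: -0.5, 1: 0.0, 2: 0.0, 3: 0.0}
J = {(0, 1): -1.0, (1, 2): -1.0, (2, 3): -1.0}
best = sample_ising(h, J, num_reads=100)
best_energy = ising_energy(best, h, J)
print(best, best_energy)
```

Taking the minimum over many independent reads is what makes the comparison between the two annealers fair when both use the same num_reads.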

For the Chimera structure in the 2000Q, \(N^{2}/4\) physical qubits are necessary to embed an N-variable problem in the worst case, which means that the maximum number of variables that the 2000Q can handle is as small as 64. This implies that \(L^{2}\le 64\Leftrightarrow L\le 8\) must be satisfied for the number of roads L in our problem setting. One way to solve a problem that exceeds this size limitation is to divide the variables of the Hamiltonian in Eq. (10) into several groups and minimize an approximate Hamiltonian for each group. We define the traffic signal state vector of the jth group as \(\pmb {\sigma }^{j} := [\sigma _{i_{1}}, \sigma _{i_{2}}, \ldots , \sigma _{i_{m}}]^{\top }\), where \(i_{1}, i_{2}, \ldots , i_{m}\) are the subscripts of the variables included in the jth group. Then, we define the Hamiltonian of the group j as

where \(J_{jj}\) is the matrix obtained by extracting the (j, j)th components of the matrix J in Eq. (10). Similarly, \(h_{j}\) is the vector obtained by extracting the jth component of h. The index \({\bar{j}}\) represents the set of variables not belonging to group j. One naive approximation is to regard the variables outside group j as constants. This allows the annealing machine to handle a Hamiltonian exceeding the size limitation, but it also degrades the control performance. To reduce such errors, variables with large interactions should be placed in the same group, and the interactions between different groups should be small. Such a problem is called a graph partitioning problem, which is known to be NP-hard, but approximation methods with adequate accuracy exist. For the actual implementation, we used the Metis software (Apache license 2.0), a widely used solver for graph partitioning problems, to break up the large-scale problem into several groups, each having fewer than 64 variables46. Figure 10 shows the result of partitioning the city of \(L = 50\) into 42 groups using Metis, where we can see that adjacent intersections, i.e., the strongly interacting variables, are included in the same group.
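The naive approximation of holding the variables outside a group constant can be sketched as follows. The sketch assumes the generic Ising convention \(H = \sum _{i} h_{i}\sigma _{i} + \sum _{(i,k)} J_{ik}\sigma _{i}\sigma _{k}\) with each pair listed once, which may differ from the convention of Eq. (10) by constant factors; the function `group_subproblem` and the four-variable example are hypothetical. Couplings that cross the group boundary are folded into an effective local field, so the reduced problem differs from the full Hamiltonian only by a constant.

```python
def ising_energy(spins, h, J):
    """E = sum_i h_i s_i + sum_{(i,k)} J_ik s_i s_k (assumed convention)."""
    energy = sum(h[i] * spins[i] for i in h)
    energy += sum(w * spins[i] * spins[k] for (i, k), w in J.items())
    return energy

def group_subproblem(h, J, group, sigma):
    """Restrict (h, J) to `group`, treating all other spins in `sigma`
    as constants that contribute to an effective local field."""
    group = set(group)
    h_eff = {i: h[i] for i in group}
    J_in = {}
    for (i, k), w in J.items():
        if i in group and k in group:
            J_in[(i, k)] = w               # coupling inside the group
        elif i in group:
            h_eff[i] += w * sigma[k]       # k is outside: acts as a field on i
        elif k in group:
            h_eff[k] += w * sigma[i]       # i is outside: acts as a field on k
    return h_eff, J_in

# Hypothetical four-variable chain split into the group {0, 1} and the rest.
h = {0: 0.2, 1: -0.1, 2: 0.3, 3: 0.0}
J = {(0, 1): 1.0, (1, 2): -0.5, (2, 3): 0.8}
sigma = {0: 1, 1: 1, 2: -1, 3: 1}
h_eff, J_in = group_subproblem(h, J, [0, 1], sigma)

# Flipping a spin inside the group changes both energies by the same amount.
flipped = dict(sigma)
flipped[1] = -1
full_diff = ising_energy(flipped, h, J) - ising_energy(sigma, h, J)
sub_diff = (ising_energy({0: 1, 1: -1}, h_eff, J_in)
            - ising_energy({0: 1, 1: 1}, h_eff, J_in))
print(h_eff, J_in, full_diff, sub_diff)
```

The approximation error discussed in the text arises because the outside spins are in fact optimized in their own sub-problems rather than remaining fixed, which is why weakly coupled groups are preferable.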

Figure 10. Graph partitioning using Metis. Each node represents a component of the Hamiltonian coefficient matrix J in Eq. (10), and the color of each node indicates the group to which the component belongs. (The data obtained using Metis 5.1.0 are plotted with Python/matplotlib.)

We evaluate the effect of this graph partitioning method. To this end, simulated annealing optimization is performed on a system with \(L=8\), and the results are compared between the non-partitioned and quadruple-partitioned cases. Figure 11 shows the time average of the objective function for various \(\alpha\). The values with partitioning are larger than those without partitioning; the difference between them represents the error caused by partitioning. The error increases with \(\alpha\), indicating that the larger the straight-driving rate of vehicles, the more negative the effect of partitioning. In Fig. 3, quantum annealing has an advantage at large \(\alpha\), and thus higher-performance signal control should be achievable once a D-Wave machine that does not require partitioning is realized.

References

Zhang, J. et al. Data-driven Intelligent Transportation Systems: A Survey. IEEE Trans. Intell. Transp. Syst. 12, 1624–1639. https://doi.org/10.1109/TITS.2011.2158001 (2011).

Bishop, R. Intelligent Vehicle Technology and Trends (Artech House, Norwood, 2005).

Cheng, X., Yang, L. & Shen, X. D2D for Intelligent Transportation Systems: A Feasibility Study. IEEE Trans. Intell. Transp. Syst. 16, 1784–1793. https://doi.org/10.1109/TITS.2014.2377074 (2015).

Papageorgiou, M., Diakaki, C., Dinopoulou, V., Kotsialos, A. & Wang, Y. Review of Road Traffic Control Strategies. Proc. IEEE 91, 2043–2067. https://doi.org/10.1109/JPROC.2003.819610 (2003).

Wei, H., Zheng, G., Gayah, V. & Li, Z. A Survey on Traffic Signal Control Methods. arXiv:1904.08117 [cs, stat] (2019).

Gokulan, B. P. & Srinivasan, D. Distributed Geometric Fuzzy Multiagent Urban Traffic Signal Control. IEEE Trans. Intell. Transp. Syst. 11, 714–727. https://doi.org/10.1109/TITS.2010.2050688 (2010).

García-Nieto, J., Alba, E. & Carolina Olivera, A. Swarm Intelligence for Traffic Light Scheduling Application to Real Urban Areas. Eng. Appl. Artif. Intell. 25, 274–283. https://doi.org/10.1016/j.engappai.2011.04.011 (2012).

Srinivasan, D., Choy, M. C. & Cheu, R. L. Neural Networks for Real-time Traffic Signal Control. IEEE Trans. Intell. Transp. Syst. 7, 261–272. https://doi.org/10.1109/TITS.2006.874716 (2006).

Arel, I., Liu, C., Urbanik, T. & Kohls, A. G. Reinforcement Learning-based Multi-agent System for Network Traffic Signal Control. IET Intell. Transp. Syst. 4, 128–135. https://doi.org/10.1049/iet-its.2009.0070 (2010).

Nishi, T., Otaki, K., Hayakawa, K. & Yoshimura, T. Traffic Signal Control based on Reinforcement Learning with Graph Convolutional Neural Nets. In 2018 21st International conference on intelligent transportation systems (ITSC), 877–883. https://doi.org/10.1109/ITSC.2018.8569301 (IEEE, 2018).

Hunt, P. B., Robertson, D. I., Bretherton, R. D. & Winton, R. I. Scoot—A Traffic Responsive Method of Coordinating Signals (Publication of, Transport and Road Research Laboratory, 1981).

Roess, R. P., Prassas, E. S. & McShane, W. R. Traffic Engineering (Pearson/Prentice Hall, Upper Saddle River, 2004).

Koonce, P. & Rodegerdts, L. Traffic Signal Timing Manual. Tech. Rep., United States. Federal Highway Administration (2008).

Faouzi, N.-E.E., Leung, H. & Kurian, A. Data Fusion in Intelligent Transportation Systems: Progress and Challenges—A Survey. Inf. Fusion 12, 4–10. https://doi.org/10.1016/j.inffus.2010.06.001 (2011).

Khamis, M. A., Gomaa, W. & El-Shishiny, H. Multi-objective Traffic Light Control System based on Bayesian Probability Interpretation. In 2012 15th International IEEE conference on intelligent transportation systems, 995–1000. https://doi.org/10.1109/ITSC.2012.6338853 (IEEE, 2012).

Varaiya, P. The Max-pressure Controller for Arbitrary Networks of Signalized Intersections. In Advances in Dynamic Network Modeling in Complex Transportation Systems (eds Ukkusuri, S. V. & Ozbay, K.) 27–66 (Springer, New York, 2013). https://doi.org/10.1007/978-1-4614-6243-9.

Blum, C. & Roli, A. Metaheuristics in Combinatorial Optimization: Overview and Conceptual Comparison. ACM Comput. Surv. 35, 268–308. https://doi.org/10.1145/937503.937505 (2003).

Puchinger, J. & Raidl, G. R. Combining Metaheuristics and Exact Algorithms in Combinatorial Optimization: A Survey and Classification. In Artificial Intelligence and Knowledge Engineering Applications: A Bioinspired Approach (eds Mira, J. & Álvarez, J. R.) 41–53 (Springer, Berlin Heidelberg, 2005). https://doi.org/10.1007/11499305_5.

Chakroun, I., Melab, N., Mezmaz, M. & Tuyttens, D. Combining Multi-core and GPU Computing for Solving Combinatorial Optimization Problems. J. Parallel Distrib. Comput. 73, 1563–1577. https://doi.org/10.1016/j.jpdc.2013.07.023 (2013).

Inagaki, T. et al. A Coherent Ising Machine for 2000-node Optimization Problems. Science 354, 603–606. https://doi.org/10.1126/science.aah4243 (2016).

Hamerly, R. et al. Experimental Investigation of Performance Differences between Coherent Ising Machines and a Quantum Annealer. Sci. Adv. https://doi.org/10.1126/sciadv.aau0823 (2019).

Goto, H., Tatsumura, K. & Dixon, A. R. Combinatorial Optimization by Simulating Adiabatic Bifurcations in Nonlinear Hamiltonian Systems. Sci. Adv. 5, eaav2372. https://doi.org/10.1126/sciadv.aav2372 (2019).

Matsubara, S. et al. Ising-model Optimizer with Parallel-trial bit-sieve Engine. In Complex, Intelligent, and Software Intensive Systems (eds Barolli, L. & Terzo, O.) 432–438 (Springer, Cham, 2018). https://doi.org/10.1007/978-3-319-61566-0_39.

Aramon, M. et al. Physics-inspired Optimization for Quadratic Unconstrained Problems using a Digital Annealer. Front. Phys. https://doi.org/10.3389/fphy.2019.00048 (2019).

Kadowaki, T. & Nishimori, H. Quantum Annealing in the Transverse Ising Model. Phys. Rev. E 58, 5355–5363. https://doi.org/10.1103/PhysRevE.58.5355 (1998).

Johnson, M. W. et al. Quantum Annealing with Manufactured Spins. Nature 473, 194–198. https://doi.org/10.1038/nature10012 (2011).

Das, A. & Chakrabarti, B. K. Colloquium: Quantum Annealing and Analog Quantum Computation. Rev. Mod. Phys. 80, 1061–1081. https://doi.org/10.1103/RevModPhys.80.1061 (2008).

King, J., Yarkoni, S., Nevisi, M. M., Hilton, J. P. & McGeoch, C. C. Benchmarking a Quantum Annealing Processor with the Time-to-Target Metric. arXiv:1508.05087 [quant-ph] (2015).

McGeoch, C. C. & Wang, C. Experimental Evaluation of an Adiabiatic Quantum System for Combinatorial Optimization. In Proceedings of the ACM International Conference on Computing Frontiers, CF ’13, 23, https://doi.org/10.1145/2482767.2482797. (Association for Computing Machinery, New York, NY, USA, 2013).

Venturelli, D., Marchand, D. J. J. & Rojo, G. Quantum Annealing Implementation of Job-Shop Scheduling. arXiv:1506.08479 [quant-ph] (2016).

O’Malley, D., Vesselinov, V. V., Alexandrov, B. S. & Alexandrov, L. B. Nonnegative/Binary Matrix Factorization with a D-wave Quantum Annealer. PLoS ONE 13, e0206653. https://doi.org/10.1371/journal.pone.0206653 (2018).

Ohzeki, M., Okada, S., Terabe, M. & Taguchi, S. Optimization of Neural Networks via Finite-value Quantum Fluctuations. Sci. Rep. 8, 1–10. https://doi.org/10.1038/s41598-018-28212-4 (2018).

Inoue, D. & Yoshida, H. Model Predictive Control for Finite Input Systems using the D-wave Quantum Annealer. Sci. Rep. 10, 1–10. https://doi.org/10.1038/s41598-020-58081-9 (2020).

Ayanzadeh, R., Halem, M. & Finin, T. Reinforcement Quantum Annealing: A Hybrid Quantum Learning Automata. Sci. Rep. 10, 7952. https://doi.org/10.1038/s41598-020-64078-1 (2020).

Yang, C. N. The Spontaneous Magnetization of a Two-dimensional Ising Model. Phys. Rev. 85, 808–816. https://doi.org/10.1103/PhysRev.85.808 (1952).

McCoy, B. M. & Wu, T. T. The Two-Dimensional Ising Model (Courier Corporation, North Chelmsford, 2014).

Binder, K. Finite Size Scaling Analysis of Ising Model Block Distribution Functions. Z. Phys. B Condens. Matter 43, 119–140. https://doi.org/10.1007/BF01293604 (1981).

Glauber, R. J. Time-dependent Statistics of the Ising Model. J. Math. Phys. 4, 294–307. https://doi.org/10.1063/1.1703954 (1963).

Suzuki, H., Imura, J.-I. & Aihara, K. Chaotic Ising-like Dynamics in Traffic Signals. Sci. Rep. 3, 1–6. https://doi.org/10.1038/srep01127 (2013).

Suman, B. & Kumar, P. A Survey of Simulated Annealing as a Tool for Single and Multiobjective Optimization. J. Oper. Res. Soc. 57, 1143–1160. https://doi.org/10.1057/palgrave.jors.2602068 (2006).

Boothby, T., King, A. D. & Roy, A. Fast Clique Minor Generation in Chimera qubit Connectivity Graphs. Quantum Inf. Process. 15, 495–508. https://doi.org/10.1007/s11128-015-1150-6 (2016).

Johnson, M. W. Future Hardware Directions of Quantum Annealing. In Qubits Europe 2018 D-Wave Users Conference (Munich, 2018).

Ayanzadeh, R., Dorband, J., Halem, M. & Finin, T. Post-quantum Error-Correction for Quantum Annealers. arXiv:2010.00115 [quant-ph] (2020).

See https://docs.dwavesys.com/docs/latest/c_solver_2.htm for the VFYC solver

Cai, J., Macready, W. G. & Roy, A. A Practical Heuristic for Finding Graph Minors. arXiv:1406.2741 [quant-ph] (2014).

Karypis, G. & Kumar, V. A Fast and High Quality Multilevel Scheme for Partitioning Irregular Graphs. SIAM J. Sci. Comput. 20, 359–392 (1998).

Acknowledgements

The authors would like to thank Dr. Kiyosumi Kidono of Toyota Central R&D Labs. for the useful discussions. This research was performed as a project of Intelligent Mobility Society Design, Social Cooperation Program (Next Generation Artificial Intelligence Research Center, the University of Tokyo with Toyota Central R&D Labs., Inc.).

Author information

Contributions

D.I. conceived and developed the concept and carried out all the experiments. D.I. and H.Y. analyzed the results and wrote the manuscript. A.O., T.M., K.A., and H.Y. designed the research plan and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Inoue, D., Okada, A., Matsumori, T. et al. Traffic signal optimization on a square lattice with quantum annealing. Sci Rep 11, 3303 (2021). https://doi.org/10.1038/s41598-021-82740-0

DOI: https://doi.org/10.1038/s41598-021-82740-0
