Abstract

Corneal opacities are important causes of blindness, and their major etiology is infectious keratitis. Slit-lamp examinations are commonly used to determine the causative pathogen; however, their diagnostic accuracy is low even for experienced ophthalmologists. To characterize the “face” of an infected cornea, we have adapted a deep learning architecture used for facial recognition and applied it to determine a probability score for a specific pathogen causing keratitis. To record the diverse features and mitigate the uncertainty, batches of probability scores of 4 serial images taken from many angles or fluorescence staining were learned for score and decision level fusion using a gradient boosting decision tree. A total of 4306 slit-lamp images including 312 images obtained by internet publications on keratitis by bacteria, fungi, acanthamoeba, and herpes simplex virus (HSV) were studied. The created algorithm had a high overall accuracy of diagnosis, e.g., the accuracy/area under the curve for acanthamoeba was 97.9%/0.995, bacteria was 90.7%/0.963, fungi was 95.0%/0.975, and HSV was 92.3%/0.946, by group K-fold validation, and it was robust to even the low resolution web images. We suggest that our hybrid deep learning-based algorithm be used as a simple and accurate method for computer-assisted diagnosis of infectious keratitis.

Introduction

Corneal opacities are a major cause of blindness worldwide and are ranked in the top 5 causes of blindness1. The major cause of the corneal opacities is infectious keratitis2, and slit-lamp examinations are the gold standard examination method to not only diagnose but to also identify the causative pathogen in eyes with infectious keratitis. However, the accuracy in identifying the causative pathogen is low even for board-certified ophthalmologists including corneal specialists. Laboratory culture tests are essential for the identification of the causative pathogen but the results can take weeks for the culturing and identification. PCR examinations are also very good but they are not universally available.

The significant and major pathogen categories for infectious keratitis are bacterial, fungal, acanthamoeba, and viral infections such as herpes simplex virus (HSV).

Thus, the purpose of this study was to develop a hybrid deep learning (DL) algorithm that can determine the causative pathogen category in eyes with keratitis with a high probability score by analyzing slit-lamp images. To accomplish this, we used facial recognition techniques3 because images of faces are also recorded from different angles, under different levels of illumination, and at different resolutions.

Using this approach, we determined the probability scores of the pathogen category that was causing the keratitis that can be used for machine learning classifications. This DL-based diagnosis should be able to determine the pathogen category with high accuracy. The identification could avoid inappropriate treatments at the early stage of infection leading to an improvement of the visual outcomes.

Results

To obtain images of infectious keratitis, all of the 669 consecutive cases of suspected infectious keratitis that were referred to the Cornea Outpatient Clinic of the Tottori University Hospital between 2005 August and 2020 December, were assessed for the diagnosis based on the criteria. The top 4 categories of causative pathogens were bacteria, fungi, acanthamoeba, and HSV, and we focused on these 4 categories. The images of 362 cases with a definite identification of the causative pathogen were used for the analyses (Supplementary Fig. 1). Based on the criteria, the 362 cases were identified as belonging to one of the four categories of infectious keratitis.

The mean age of the patients whose images were used was 59.4 ± 21.8 years, and 201 cases (55.5%) were men. Of the 362 cases, 225 cases were bacterial keratitis, 76 were HSV, 42 were fungal, and 19 were acanthamoeba keratitis.

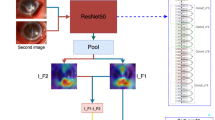

We first developed a DL algorithm based on ResNet50 (Fig. 1a) for diagnosing a single image. To obtain pathogen probability scores for each category of disease for classification, the DL algorithm was trained using the Ring loss-augmented softmax function which is known to be highly effective for large scale facial recognition tasks4.

Architecture of deep learning (DL) algorithm to determine the causative pathogen by analyzing slit-lamp images and comparisons of diagnostic accuracy of expert clinicians. (a) Architecture of the deep learning algorithm at the development stage, based on ResNet50. The input image is classified as ‘bacterial’ or ‘non-bacterial’ (1st classifier). An image classified as ‘non-bacterial’ is then classified as 'acanthamoeba', 'fungal' or 'HSV' (2nd classifier). Nμ, weighted average; HSV, herpes simplex virus. (b) The KeratiTest window is shown for the 20th question. The accuracy of the answers by clinicians was compared to that of the algorithm. The algorithm at the development stage (a) outperformed the clinicians in all sessions. N = 35. (c) Ensemble architecture of the deep learning algorithm based on InceptionResNetV2. Probability scores of each causative pathogen were calculated by feature normalization using softmax with Ring loss. An image that was classified as bacterial was also connected to the second classifier to obtain probability scores of acanthamoeba, fungi, and HSV. The pathogen probability scores, the argmax of the pathogen probability scores of the 2 step classifier, the pathogen probability scores of the second classifier, the argmax of the pathogen probability scores of the second classifier, and the argmax of the pathogen probability scores for fluorescein-stained images were used as feature values for learning by gradient boosting decision tree (GBDT).

The DL algorithm was trained using 1426 images collected before March 14, 2019. The flow chart for the analyses is shown in Supplementary Fig. 2. The diagnostic accuracy of multiclass classification for each category of disease was assessed using 140 single test images which were not used for the training.

To compare the diagnostic accuracy of AI and clinicians, we solicited 35 board-certified ophthalmologists throughout Japan, including 16 faculty members specialized in corneal diseases. We assessed their diagnostic accuracy using a diagnostic application software named “KeratiTest” in which the AI algorithm diagnosed the single images (Fig. 1b). When the multiclass diagnostic accuracy was assessed, the algorithm outperformed the expert clinicians in all of the sessions of 20 images (Fig. 1b).

For the diagnosis of bacteria and non-bacteria, the area under the curve (AUC) was 0.82 for the algorithm and 0.58 for the ophthalmologists. For the diagnosis of acanthamoeba keratitis, the AUC was 0.84 for the algorithm and 0.59 for the ophthalmologists. For fungal keratitis, the AUC was 0.78 for the algorithm and 0.52 for the ophthalmologists, and for the diagnosis of HSV, the AUC was 0.73 for the algorithm and 0.59 for the ophthalmologists. Thus, the algorithm outperformed the ophthalmologists for all the causative types of keratitis.

Clinically, diagnosing by slit-lamp examinations is typically made by examining different images including those with different angles of view, different types and levels of illumination, and staining or not staining of the corneas. Thus, increasing the viewing of different images should improve the diagnostic efficiency. Therefore, we used up to 4 different recording conditions as a batch of learning and calculated the probability scores of each pathogen using normalization by Ring loss augmented softmax function as score level feature (Fig. 2). For decision level feature values, the argmax of pathogen probability scores for 2 step classifier, 2nd classifier, and fluorescence 2nd classifier were used (Fig. 1c).

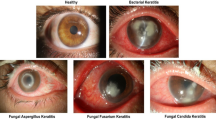

Representative images of each causative pathogen with 100% probability scores. Each pathogen probability score for a single image was calculated using softmax with Ring loss in the InceptionResNetV2 architecture (Fig. 1c) and is shown as confidence. The acanthamoeba image with high confidence shows a ring infiltrate located in the center of the cornea, while the unaffected cornea is relatively clear and without edema. The image of bacterial keratitis with high confidence shows a dense infiltrate with intense corneal edema surrounding the lesion. The fungal image with high confidence shows a feathery infiltrate with satellite lesions, while the surrounding cornea is unaffected. The HSV image with high confidence shows a marginal ulcer with an epithelial defect. ‘bac’, ‘aca’, ‘fun’, and ‘her’ represent bacteria, acanthamoeba, fungi, and herpes simplex virus (HSV), respectively.

To further mitigate uncertainty inherent to the disease condition, all of the above feature values were learned for a score level and decision level fusion using gradient boosting decision tree (GBDT) machine learning algorithm (Fig. 1c).

The final model constructed based on InceptionResNetV2 was evaluated using group K-fold validations for 4306 images (3994 clinical and 312 web images). The overall accuracy of the multiclass diagnosis was 88.0%. The results of the confusion matrix are shown in Table 1.

We next evaluated the diagnostic accuracy of each category of disease using binary classification of group K-fold validation. The diagnostic accuracy was 97.9% for acanthamoeba, 90.7% for bacteria, 95.0% for fungi, and 92.3% for HSV. When evaluated for diagnostic efficacy using ROC analysis, the AUC for acanthamoeba was 0.995 (95% CI: 0.991–0.998), for bacteria was 0.963 (95% CI: 0.952–0.973), for fungi was 0.975 (95% CI: 0.964–0.984), and for HSV was 0.946 (95% CI: 0.926–0.964) (Fig. 3).
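The AUC values above can be read as the probability that a randomly chosen image of the target pathogen receives a higher score than a randomly chosen image of any other pathogen. The following is a minimal sketch of that calculation together with a percentile bootstrap confidence interval. The text does not state how the 95% CIs were obtained, so the bootstrap, the function names, and the toy data are all assumptions made for illustration.

```python
import random


def roc_auc(labels, scores):
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC (assumed method, not from the paper)."""
    roc_auc(labels, scores)  # fails early if only one class is present
    rng = random.Random(seed)
    n = len(labels)
    aucs = []
    while len(aucs) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # keep only resamples that contain both classes
            aucs.append(roc_auc(ys, [scores[i] for i in idx]))
    aucs.sort()
    lower = aucs[int((alpha / 2) * n_boot)]
    upper = aucs[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Toy example: 1 = target pathogen, 0 = any other pathogen (hypothetical scores).
toy_labels = [1, 1, 1, 0, 0, 0, 0, 1]
toy_scores = [0.9, 0.8, 0.35, 0.4, 0.2, 0.1, 0.3, 0.7]
toy_auc = roc_auc(toy_labels, toy_scores)
toy_ci = bootstrap_ci(toy_labels, toy_scores, n_boot=200)
```

With only eight samples the interval is wide; the narrow intervals reported here reflect the much larger number of images evaluated.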

Receiver operating characteristic analysis of hybrid deep learning-based algorithm. (a) Pathogen probability scores were calculated by softmax with Ring loss in the InceptionResNetV2 architecture. The pathogen probability scores and argmax values of the pathogen probability scores of second classifiers, two step classifiers, and fluorescein-stained images were used for learning by Gradient Boosting Decision Tree in a batch of up to 4 serial images, and the final algorithm with Gradient Boosting Decision Tree was validated by group K-fold evaluation. The diagnostic accuracy of the binary classification was assessed by the area under the curve (area, AUC). The AUC showed high diagnostic accuracy for all the causative pathogens. (b) The 4306 images were randomly divided into 3882 training images and 424 testing images so that different images of the same eyes were in either the training or the testing group and not in both. The algorithm was initialized and retrained using the training images and assessed for the AUC using the test images in batches of up to 4 serial images. The AUC showed high diagnostic accuracy for all the causative pathogens, although some decrease of AUC was observed for bacteria and fungi.

To validate the robustness of the algorithm to an unknown dataset, the algorithm was initialized and retrained using the training images. For this, all of the 4306 images were randomly divided into 3882 training images and 424 testing images so that different images of the same eyes were in either the training or the testing group but not in both.

Of the 4306 images, 1314 were fluorescein stained. Of the 3882 training images, 1190 were fluorescein stained, and of the 424 testing images, 124 were fluorescein stained.

We then calculated the diagnostic accuracy of each category of disease using binary classification of the test data set. For acanthamoeba, the diagnostic accuracy was 96.7%; for bacteria, the diagnostic accuracy was 77.6%; for fungi, the accuracy was 84.2%; and for HSV, the accuracy was 91.7%. The AUC for acanthamoeba was 0.995 (95% CI: 0.989–0.999), the AUC for bacteria was 0.889 (95% CI: 0.856–0.917), the AUC for fungi was 0.889 (95% CI: 0.855–0.920), and the AUC for HSV was 0.956 (0.933–0.974) (Fig. 3).

To further assess the robustness of the algorithm to the presence or absence of fluorescein staining, the algorithm was initialized and retrained using the 2692 training images without fluorescein staining. The algorithm was then tested on 300 images without fluorescein staining using binary classification. The diagnostic accuracy was 94.3% for acanthamoeba, 77.0% for bacteria, 83.0% for fungi, and 93.7% for HSV. The AUC was 0.991 (95% CI: 0.982–0.998) for acanthamoeba, 0.873 (95% CI: 0.829–0.911) for bacteria, 0.856 (95% CI: 0.805–0.899) for fungi, and 0.932 (95% CI: 0.891–0.967) for HSV (Supplementary Fig. 3). This indicated that the decrease in diagnostic efficacy was minimal even though the number of training images was reduced.

Then, we tested the universality of the algorithm and robustness to low resolution images. Web images are universal for their availability but typically have low resolution, which may compromise the effective optimization because of the low norm features. Therefore, the web test data set was used to assess the effectiveness of the algorithm using binary classification. For acanthamoeba, the diagnostic accuracy was 91.7%; for bacteria, the diagnostic accuracy was 83.3%; for fungi, the accuracy was 88.9%; and for HSV, the accuracy was 97.2%. The AUC for acanthamoeba was 0.998 (95% CI: 0.990–1.0), the AUC for bacteria was 0.913 (95% CI: 0.794–1.0), the AUC for fungi was 0.969 (95% CI: 0.903–1.0), and the AUC for HSV was 1.0 (0.999–1.0). This indicated that the web image classification outperformed the classification of overall test images.

To understand the steps of diagnosis in the gradient boosting decision tree (GBDT) algorithm, the first 4 decision trees are shown in Fig. 4. The first tree used the bacterial probability scores of different images and set different thresholds for the classification. In the second tree, the images were classified as acanthamoeba/bacteria or fungus/HSV. Then, either this step was repeated or the fungal probability score was applied for classification. The third tree separated HSV from the other categories; then, either this step was repeated or the fungal probability score was applied. The fourth decision tree separated acanthamoeba from the other categories, then applied the HSV or acanthamoeba probability score for the classification.

Sequence of decision trees in the gradient boosting decision tree (GBDT) algorithm. Deep learning derived probability scores (acanthamoeba: aca_, bacteria: bac_, fungus: fun_, HSV: her_) and the argmax of the pathogen probability scores (Classifier2step_) were utilized for effective classification by GBDT. Acanthamoeba, bacteria, fungus, and HSV were coded as 0, 1, 2, 3 for Classifier2step_. Numbers following “_” (1–4) indicate the serial number of the image within the same batch. The first decision tree uses bacterial probability scores. The second decision tree classifies acanthamoeba/bacteria and fungus/HSV and uses the fungal probability score. The third decision tree classifies HSV and uses the fungal probability score. The fourth decision tree classifies acanthamoeba and uses the probability scores of HSV and acanthamoeba.

Then, we assessed the importance of feature values and their interactions using Xgbfir (https://pypi.org/project/xgbfir/, Supplementary Fig. 4). When the total gain was used as an importance score, the bacterial probability score (bac_1) had the highest importance score, followed by the argmax of the pathogen probability scores (Classifier2step_1), the fungal probability score (fun_1), and the acanthamoeba probability score (aca_1) (Supplementary Fig. 4). These findings indicate the importance of the bacterial probability score. In contrast, the pathogen classifiers for fluorescein staining (FluoSecClassifier as Fluorescence 2nd classifier, Fig. 1c) were much lower in importance, suggesting that they serve as a complement to the pathogen probability scores and thus mitigate the uncertainty of fluorescein staining.

Then, we assessed how effective each pathogen probability score was in classifying pathogen categories in the GBDT. Histograms of the frequency of split values for pathogen probability scores are shown in Supplementary Fig. 5. The split values for the bacterial probability score accumulated intensely near 0 (0%) and 1 (100%) compared to the intermediate probabilities between them. This characteristic distribution had a good signal to noise ratio. The other pathogen probability scores had similar characteristics, which supports the validity of the pathogen probability score for classification. In addition, split values for all 4 pathogen probability scores were more frequent around 0 (0%) than around 1 (100% probability). This indicated that diagnosis by exclusion was used more frequently for the classification.

Discussion

Recent advances in DL technology in the ophthalmic field have allowed rapid and accurate diagnosis of several retinal diseases. These advances have led to predictions of the prognosis, and they have also identified systemic markers of the disease. Importantly, the diagnostic performance of DL algorithms was equivalent to or even surpassed the diagnostic abilities of trained clinicians.

Currently, the reported DL algorithms for the analyses of anterior segment slit-lamp images appear to be developed with the intention of screening common diseases as a substitute for clinicians. An inception-based algorithm developed by Gu et al. classified a broad category of diseases including cataracts, neoplasms, non-infectious and infectious disorders, and corneal dystrophy5. This type of AI does not need to surpass the capabilities of clinicians but only to be equal to them. Another type of AI has been developed to surpass clinicians’ diagnostic ability; however, reports on this type of AI for corneal diseases have been scarce.

Predicting the causative pathogen in infectious keratitis is one such representative challenge which needs to surpass the clinicians’ ability. Because of its vision-threatening nature, prompt and accurate diagnosis will benefit the patients and clinicians.

We calculated the pathogen probability scores after feature normalization using a DL algorithm, and then constructed a diagnostic algorithm using GBDT, a hierarchical series of decision trees (Fig. 4). GBDT is a machine learning algorithm which uses a successive series of decision trees for learning. In GBDT, the coefficient or weight of the first tree is adjusted by the second tree, which is further adjusted by a third tree, and so on. GBDT is well recognized for its high accuracy and efficacy in classification problems, and it has been used in many AI competitions including Kaggle. Thus, the learning of an effective classification algorithm should help clinicians to diagnose more accurately.
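The idea that each tree adjusts the output of the trees before it can be illustrated with a deliberately small sketch. It boosts one-split trees (stumps) on a single feature with squared-error residuals. This is a simplification chosen for readability and is not the XGBoost procedure used in the study, which optimizes a regularized objective over many features. The function names, learning rate, and toy data are assumptions.

```python
def fit_stump(xs, residuals):
    """Return (threshold, left_value, right_value) minimizing squared error."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        if not left or not right:
            continue  # a split must leave samples on both sides
        lm = sum(left) / len(left)
        rm = sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    if best is None:  # every x identical: fall back to a constant
        mean = sum(residuals) / len(residuals)
        return xs[0], mean, mean
    return best[1], best[2], best[3]


def boost(xs, ys, n_trees=4, lr=0.5):
    """Fit n_trees stumps, each on the residuals left by the previous ones."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    trees = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lm, rm = fit_stump(xs, residuals)
        trees.append((t, lm, rm))
        preds = [p + lr * (lm if x <= t else rm) for x, p in zip(xs, preds)]
    return base, lr, trees


def predict(model, x):
    base, lr, trees = model
    return base + sum(lr * (lm if x <= t else rm) for t, lm, rm in trees)


# Toy data: x is a hypothetical bacterial probability score, y = 1 if bacterial.
toy_x = [0.05, 0.1, 0.2, 0.6, 0.8, 0.95]
toy_y = [0, 0, 0, 1, 1, 1]
toy_model = boost(toy_x, toy_y, n_trees=4, lr=0.5)
```

In this toy case every stump picks the same threshold and each one halves the remaining error, so the prediction moves step by step from the base rate toward 0 or 1.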

In the decision-making process implemented by GBDT (Fig. 4), we found that the bacterial probability score for the initial diagnosis was the most important decision (Fig. 4, 1st tree). For a correct diagnosis, the use of combinations of bacterial probability scores is indicated by the GBDT at the first stage. A different set of probability scores can augment the information to be learned because different illuminations, angles, or fluorescein staining play complementary roles. This is also similar to the clinical decision-making process.

Clinically, an alternative of bacterial probability score can be obtained by laboratory testing, including the outcomes of the culture and smear tests. GBDT can also manage these important features together if necessary to improve the diagnostic accuracy of the algorithm.

The second tree in the GBDT classified fungal and HSV keratitis using the fungal probability score (Fig. 4, 2nd tree). Then, the 3rd tree separated fungal keratitis from the HSV-suspected images, again using the fungal probability score. Clinically, this diagnostic process is facilitated by calcofluor or fungiflora staining of the smear, considering its specificity. However, in our hands, the incorporation of staining into our slit-lamp image-based GBDT algorithm did not appreciably improve the overall diagnostic accuracy.

The fourth tree first classifies acanthamoeba keratitis (Fig. 4), then rules out possibility of HSV infection using the HSV probability score. Non-acanthamoeba images are reexamined using the acanthamoeba probability score. This process illustrates the differential diagnosis of acanthamoeba and HSV. For example, in the early stage of acanthamoeba keratitis, pseudo dendritic lesions are often observed masquerading as herpetic keratitis. This leads to improper use of antiviral drugs or steroids. However, the acanthamoeba probability score of the slit-lamp images represented the characteristics of acanthamoeba infection well, and high AUC was obtained (Fig. 3).

In the diagnosis of fungal keratitis, a relatively lower AUC was obtained (Fig. 3). The GBDT indicated requirements for differential diagnosis from HSV in the second tree.

For diagnosis of HSV infection, real-time PCR is a very effective examination6. Thus, its incorporation to GBDT as another feature characteristic should significantly improve its diagnostic accuracy although the availability of PCR is limited in most clinical practice.

Generally, the diagnostic accuracy of identifying the causative pathogen by slit-lamp examinations is low for the general ophthalmologist. It was surprising to learn of the low diagnostic accuracy of expert ophthalmologists (Fig. 1b). This was also true for corneal specialists7. In our setting, the accuracy of identification of the four categories of pathogens averaged about 40% for board-certified ophthalmologists. This reflects the difficulty of identifying the causative pathogen in the real-world setting in a tertiary referral hospital.

For corneal diseases, the available literature on DL algorithms is still very limited5,7. This is in marked contrast to the abundance of retinal imaging AI. Compared to retinal images, the development of anterior segment image AI is hampered by several difficulties arising from differences in the acquisition of the images, as stated earlier, and the large number of clinical signs that need to be learned8. For example, when infectious keratitis images were assessed, the performance of a well-established DL framework, VGG16, trained on whole images was insufficient and the overall accuracy remained at 55.24%7.

There are several factors that might explain why the determination of the causative pathogens was so difficult by examinations of the slit-lamp images alone. One was the difficulty in extracting sufficient information from one image9. Another difficulty arises from difference in illuminations or recording angles. This is in marked contrast to the imaging of the fundus in which the images are obtained at the same angle with similar quality.

To overcome such difficulty, several approaches have been used to improve the accuracy of the classifications. One approach is the patch level feature learning. Li et al. reported segmentation of the anatomical structures and annotations of the pathological features for deep learning8. They used 54 pathological features, including the presence of corneal edema, ulcer, corneal opacity, neovascularization, hypopyon, pterygium, and cataract8.

Xu applied patch level learning to the classification of infectious keratitis, namely bacterial, fungal, and herpetic stromal keratitis. For this, the infectious lesion, conjunctival injection, and anterior chamber inflammation were annotated by manual drawings7. Using the patch level classification outcomes, the accuracy was 52.5% for VGG167. To improve classification accuracy, smaller lesions were randomly sampled from each patch, and the resultant sequence of smaller lesions was used as sequential features for a long short-term memory algorithm. Using the inner-outer sequential order patch algorithm, the accuracy of classification of bacterial keratitis was improved to 75.29%, and the AUC was 0.92.

However, the patch level learning model has some drawbacks. One significant drawback is the requirement of manual drawing of the patch identification by clinicians. This requires large efforts by examiners and may cause some bias for patch detection. Another is the issue of universality or robustness to low resolution images. For example, it remains unclear whether sequential order patch algorithm can perform equally well for fluorescein-stained images. In addition, their softmax based calculations are not robust for low resolution images, photographs obtained with different angles of illumination, or distractors4,10.

Deep learning-based recognition has been used for many practical applications. Face recognition is one important field of security. However, face recognition is a challenging task, because few samples per individual are available for training, and image quality of face or their angles or illumination differ. To overcome this problem, feature normalization using Ring loss was developed4. This allowed the normalization of feature characteristics with the norm constraint of the target. Generally, the preservation of convexity in loss function is known to be important for effective optimization of the network. Because Ring loss maintains convexity of softmax function, effective learning is achieved4. Moreover, Ring loss approach has been robust to numbers of distractors, lower resolution, or extreme pose (angle) images. Thus, Ring loss with softmax is a simple approach and does not need meticulous annotation by manual drawings.

Generally, the integration of multiple modalities can improve the classification efficacy11. This can be conducted at the score level or the decision level. The score level and decision level fusion scheme has been shown to improve the discrimination efficacy greatly in the field of multi-biometric verification such as for authentication in banking11. To improve the classification efficacy in this study, the probability score and decisions steps were integrated using GBDT which was versatile and efficient.

There are some limitations in our study. Our algorithm was developed based on more than 4000 images; however, the number of cases may still be limited, and the algorithm may not be applicable to geographic regions which have epidemiologically different pathogenic species. Another limitation is that our algorithm classified four categories of pathogens. For therapeutic purposes, this classification appears appropriate. However, we are aware that some pathogens have clinical characteristics that resemble those of other organisms. For example, the clinical characteristics of mycobacterium infection are somewhat similar to those of fungal keratitis. This difficulty can be overcome by training with more detailed classifications, which can be easily implemented by our algorithm.

In conclusion, we have developed an AI algorithm which can identify the causative pathogens of infectious keratitis. This algorithm outperformed the accuracy of clinicians. The development of this DL algorithm is important and may become the basis for future development of auto-diagnosis by slit-lamp as well as establishment of efficient tele-medicine platform for anterior segment diseases.

Material and methods

Demographics of patients

Clinical images were collected from 362 consecutive patients diagnosed with infectious keratitis caused by four categories of pathogens: bacteria, acanthamoeba, fungi, and HSV. All of the patients were examined between August 2005 and December 2020 in the Tottori University Hospital.

We collected the images from the medical records that were recorded by a digital photography system attached to the slit-lamp. Photographs were taken with slit or diffuser illumination, or with blue light illumination to view fluorescein-stained corneas. Various slit lamps (SL130 (ZEISS) and SL-D7 (Topcon)) and cameras (EOS Kiss X7 (Canon), α6000 (Sony), and D90 (Nikon)) were used to ensure the diversity of the training samples. The images were obtained from eyes with active diseases at different stages of the natural course of the disease process. Representative images from the 362 consecutive patients are shown in Supplementary Fig. 1.

Diagnosis of infectious keratitis for inclusion into enrolled clinical images

The diagnosis of the infectious keratitis was made by the clinical characteristics12,13, responsiveness to appropriate drug treatment, and detection of specific pathogens. The patients with data meeting these criteria were studied.

A confirmation on the responsiveness to a drug treatment was determined after standard treatment. For bacterial, fungal, and HSV keratitis, antibiotics, anti-fungal drugs, and anti-viral drugs, respectively, were used for the treatment. Antifungal drugs and chlorhexidine and/or polyhexamethylene biguanide were used to treat acanthamoeba infections.

To diagnose the cause of the infections, corneal samples were collected from all of the patients. To confirm the diagnosis, bacteria were identified by using one or more of the following criteria; detection of bacteria in Gram stained smears, positive bacterial culture, and quantification of bacterial DNA using broad range PCR for 16S r-DNA14. Amplified 16S r-DNAs were sequenced when necessary for the identification of the bacteria at the species level.

A diagnosis of fungal keratitis was made by the detection of hyphae in the smear by fluorescent staining with Fungiflora Y or by a positive fungal culture15,16. The clinical characteristics included dry-appearing infiltrates with feathered margins and multifocal infiltrates as satellite lesions. The causative bacteria and fungi are listed in Supplementary Table 1.

To diagnose acanthamoeba keratitis, the clinical characteristics and either the detection of cysts in the smear by staining with Fungiflora Y, positive acanthamoeba culture, or quantification of acanthamoeba DNA using real-time PCR were required17,18. The clinical characteristics include pseudo dendrites, radial keratoneuritis, multifocal stromal infiltrates, and ring infiltrates in the advanced stages19.

For the diagnosis of HSV infection, real-time PCR was used in all the cases to determine the HSV DNA levels6. In addition to the typical clinical findings of HSV keratitis of dendritic or geographical keratitis, atypical stromal keratitis or necrotizing keratitis cases were included based on the outcome of real-time PCR.

The final diagnosis was made after all the outcomes of the tests were obtained and patients were successfully treated. Images from cases with mixed infections were excluded from the analysis. To assure a correct diagnosis, three independent observers reviewed the medical records, and a uniform agreement was made in all cases.

The protocol for this study was approved by the Tottori University Ethics Committee. All of the procedures used in this study conformed to the tenets of the Declaration of Helsinki. Informed consent was obtained from all the participants.

Deep learning algorithm

For effective slit-lamp diagnosis of infectious keratitis, illumination by diffuse light and cobalt blue filtered light after fluorescein staining is required. Therefore, slit-lamp images with these illuminations were used to develop the DL algorithm.

To classify the images of infectious keratitis, we used a convolutional neural network (CNN) as the DL algorithm. The CNN was pretrained by transfer learning using the ImageNet image database. We used two types of pretrained CNN models: ResNet-5020 and the InceptionResNetV221. The training and validation data were provided with the Group K-Fold approach.

The final model was constructed based on InceptionResNetV2 (299 × 299 image resolution) using 4306 images made up of 3994 clinical and 312 web images. The AI model was trained with a randomly selected 80% of the slit-lamp images and validated using the remaining 20%, which came from patients with different IDs (group K-fold validation). The accuracy calculated by group K-fold validation therefore indicated the accuracy for samples which were not used for the model construction. Different images of the same eye were not used for the calculation of the accuracy.
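The grouping constraint described above, in which all images of one patient fall on the same side of each split, can be sketched in a few lines. This is an illustrative stand-alone version with hypothetical patient IDs and a simple greedy balancing rule. Libraries such as scikit-learn provide an equivalent `GroupKFold` splitter, and the text does not say which implementation was used.

```python
from collections import defaultdict


def group_k_fold(groups, n_splits=5):
    """Yield (train_idx, val_idx) so that no group appears on both sides."""
    by_group = defaultdict(list)
    for index, group in enumerate(groups):
        by_group[group].append(index)
    if len(by_group) < n_splits:
        raise ValueError("need at least as many groups as folds")

    # Greedy balancing: place the largest groups first, each into the
    # fold that currently holds the fewest images.
    folds = [[] for _ in range(n_splits)]
    ordered = sorted(by_group, key=lambda g: (-len(by_group[g]), str(g)))
    for group in ordered:
        smallest = min(range(n_splits), key=lambda k: len(folds[k]))
        folds[smallest].extend(by_group[group])

    for k in range(n_splits):
        val_idx = sorted(folds[k])
        train_idx = sorted(i for j in range(n_splits) if j != k for i in folds[j])
        yield train_idx, val_idx


# Hypothetical patient IDs, one entry per image.
toy_groups = ["p1", "p1", "p2", "p3", "p3", "p3", "p4", "p5", "p5", "p6"]
toy_splits = list(group_k_fold(toy_groups, n_splits=5))
```

With five folds, each validation fold holds roughly 20% of the images, which matches the 80%/20% division described above.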

The learning process of the DL algorithm is facilitated by balanced data. To compensate for an imbalance in the number of images in the four categories, a hierarchical CNN model was constructed. An overview of the approach is shown in Fig. 1. The first CNN classifier estimated whether an image was from an eye with a 'bacterial' or 'non-bacterial' ('others') infection. Then, the second classifier estimated the probability scores of 'acanthamoeba', 'fungal', or 'HSV' for the images classified as 'others' (Fig. 1a).

When the answer of the first classifier was “bacteria”, the image was also passed directly to the second classifier to obtain the prediction probability scores of acanthamoeba, fungi, and HSV, which were used as feature values for the second-step classifier (Fig. 1c).
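The two-step logic can be sketched as follows. This is an illustrative reading of the description, not the published implementation: the function names, the dictionary layout of the scores, and the 0.5 cut-off for the first step are assumptions.

```python
def hierarchical_predict(p_bacteria, second_scores, threshold=0.5):
    """Two-step decision for one image.

    p_bacteria    : first-classifier probability of 'bacteria' (0..1).
    second_scores : second-classifier probabilities of the other pathogens.
    threshold     : assumed cut-off for the first step (not given in the text).
    """
    if p_bacteria >= threshold:
        return "bacteria"
    # Otherwise take the most probable non-bacterial pathogen.
    return max(second_scores, key=second_scores.get)


def image_features(p_bacteria, second_scores):
    """Score-level features of one image.

    The second classifier is evaluated for every image, including those
    answered as 'bacteria', so all four scores are always available.
    """
    return [
        p_bacteria,
        second_scores["acanthamoeba"],
        second_scores["fungi"],
        second_scores["HSV"],
    ]


# Hypothetical scores for a single image.
scores = {"acanthamoeba": 0.7, "fungi": 0.2, "HSV": 0.1}
label = hierarchical_predict(0.2, scores)
features = image_features(0.2, scores)
```

Here `label` is the sequential answer for the image, whereas `features` keeps all scores for later fusion regardless of the first-step answer.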

To calculate the probability score of each pathogen, the network, with softmax as the activation function, was trained using Ring loss for feature normalization, which constrains the norm of the deep feature vectors.
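As a reminder of what Ring loss penalizes, the sketch below evaluates the term for a small batch of feature vectors, following the definition in Zheng et al. (λ/2m times the sum of squared differences between each feature norm and a target norm R). In the original formulation R is learned together with the network and the term is added to the softmax loss; here R is passed as a fixed number, and the function name and default weight are assumptions for illustration.

```python
import math


def ring_loss(features, radius, weight=0.01):
    """Ring loss: (weight / (2 * m)) * sum_i (||f_i||_2 - radius) ** 2.

    features : list of m deep feature vectors (lists of floats).
    radius   : target norm R (learned in practice, fixed here).
    weight   : loss weight lambda (assumed default).
    """
    m = len(features)
    total = 0.0
    for f in features:
        norm = math.sqrt(sum(x * x for x in f))  # L2 norm of one feature vector
        total += (norm - radius) ** 2
    return weight * total / (2 * m)


# Two toy feature vectors with norms 5 and 10 and a target norm of 5.
example_loss = ring_loss([[3.0, 4.0], [6.0, 8.0]], radius=5.0, weight=1.0)
```

Only the second vector deviates from the target norm, so the loss is driven entirely by that vector.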

Using the probability scores of each pathogen, the argmax of the pathogen probability scores was calculated for the two-step classification, for the second-step classification, and for the second-step classification of the fluorescein-stained image; these values were used as decision-level feature values.

For the machine learning algorithm, a gradient boosting decision tree (GBDT; XGBoost, https://xgboost.readthedocs.io/en/latest/#) was trained using all of these feature values in batches of up to 4 serial images with different angles, illuminations, or staining (Fig. 1c).
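One way to picture the input to the GBDT is a fixed-length row that concatenates score-level features (the probability scores) and decision-level features (the argmax) for up to 4 images. The sketch below is a simplified assumption, not the authors' feature layout: it uses one argmax per image, the pathogen order, the padding value `MISSING`, and the function names are hypothetical.

```python
PATHOGENS = ["bacteria", "acanthamoeba", "fungi", "HSV"]  # assumed order
MAX_IMAGES = 4
MISSING = -1.0  # assumed placeholder for images absent from the batch


def argmax_index(scores):
    """Index of the highest probability score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def fusion_features(batch):
    """Build one feature row from a batch of per-image score lists.

    batch : list of 1 to 4 lists, each holding one probability per pathogen.
    """
    if not 1 <= len(batch) <= MAX_IMAGES:
        raise ValueError("a batch must contain 1 to 4 images")
    row = []
    for k in range(MAX_IMAGES):
        if k < len(batch):
            scores = batch[k]
            row.extend(scores)                        # score-level features
            row.append(float(argmax_index(scores)))   # decision-level feature
        else:
            row.extend([MISSING] * (len(PATHOGENS) + 1))  # pad absent image
    return row


# Two serial images of the same eye (hypothetical scores).
row = fusion_features([[0.1, 0.7, 0.1, 0.1], [0.6, 0.2, 0.1, 0.1]])
```

Rows of this kind, one per batch, could then be passed with the final diagnosis as the label to a gradient boosting implementation such as XGBoost.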

The universality of the images and the robustness to low-resolution images are other important issues in determining the applicability of the DL algorithm. Therefore, we searched for infectious keratitis images of the four causative categories by an internet search of publications using keywords (Supplementary Table 2). The resultant 312 images were also used for the development of the algorithm22–103 (Supplementary Table 3).

Image evaluations by clinicians and validation of deep learning (DL) algorithm

The performance of the sequential CNN algorithm (Fig. 1a) was assessed on 1426 images collected before March 14, 2019. To understand the diagnostic difficulties of processing the images, the application software “KeratiTest” was created.

KeratiTest

The KeratiTest used 140 single test images which were not used for training or validation of the algorithm. The KeratiTest presented 20 randomly selected photographic images to the application users (clinicians) and prompted an answer for each single image, which had been obtained with slit-lamp illumination, diffuser illumination, or cobalt blue illumination for fluorescein staining, but not a combination of them (Fig. 1b). After the user had answered the 20 images, the KeratiTest summarized the accuracy score and the time required to answer, and compared the performance of the human with that of the AI for that set of images. Thus, in the KeratiTest, clinicians played against the algorithm and competed on the diagnostic accuracy for each image.

Statistical analyses

To assess the diagnostic performance, the receiver operating characteristic analysis was used to calculate the area under the curve (AUC) with 95% confidence intervals.
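For illustration, the AUC of one pathogen against the rest can be computed as the probability that a positive image receives a higher score than a negative one. The text does not state how the confidence intervals were obtained; the percentile bootstrap below is only one common option and is an assumption, as are the function names and the toy data.

```python
import random


def auc(labels, scores):
    """AUC as P(positive score > negative score), counting ties as 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the AUC (assumed method)."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if 0 < sum(ys) < n:  # a resample needs both classes
            stats.append(auc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Toy one-vs-rest example: 1 = target pathogen, 0 = any other pathogen.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
point_estimate = auc(labels, scores)
ci_low, ci_high = bootstrap_ci(labels, scores, n_boot=200)
```

In the toy data, three of the four positive-negative pairs are ranked correctly, which gives an AUC of 0.75.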

Data availability

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

References

Flaxman, S. R. et al. Global causes of blindness and distance vision impairment 1990–2020: A systematic review and meta-analysis. Lancet Glob. Health 5, e1221–e1234. https://doi.org/10.1016/s2214-109x(17)30393-5 (2017).

Ung, L., Bispo, P. J. M., Shanbhag, S. S., Gilmore, M. S. & Chodosh, J. The persistent dilemma of microbial keratitis: Global burden, diagnosis, and antimicrobial resistance. Surv. Ophthalmol. 64, 255–271. https://doi.org/10.1016/j.survophthal.2018.12.003 (2019).

Ross, A. A., Nandakumar, K. & Jain, A. K. in Handbook of Multibiometrics 1–198 (Springer, 2006).

Zheng, Y., Pal, D. K. & Savvides, M. Ring Loss: Convex Feature Normalization for Face Recognition. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5089–5097 (2018).

Gu, H. et al. Deep learning for identifying corneal diseases from ocular surface slit-lamp photographs. Sci. Rep. 10, 17851. https://doi.org/10.1038/s41598-020-75027-3 (2020).

Kakimaru-Hasegawa, A. et al. Clinical application of real-time polymerase chain reaction for diagnosis of herpetic diseases of the anterior segment of the eye. Jpn. J. Ophthalmol. 52, 24–31. https://doi.org/10.1007/s10384-007-0485-7 (2008).

Xu, Y. et al. Deep sequential feature learning in clinical image classification of infectious keratitis. Engineering https://doi.org/10.1016/j.eng.2020.04.012 (2020).

Li, W. et al. Dense anatomical annotation of slit-lamp images improves the performance of deep learning for the diagnosis of ophthalmic disorders. Nat. Biomed. Eng. 4, 1–11. https://doi.org/10.1038/s41551-020-0577-y (2020).

Yip, M. Y. T. et al. Technical and imaging factors influencing performance of deep learning systems for diabetic retinopathy. NPJ Digit Med. 3, 40. https://doi.org/10.1038/s41746-020-0247-1 (2020).

Ba, J., Kiros, J. & Hinton, G. E. Layer Normalization. http://arxiv.org/abs/1607.06450 (2016).

Dwivedi, R. & Dey, S. A novel hybrid score level and decision level fusion scheme for cancelable multi-biometric verification. Appl. Intell. 49, 1016–1035 (2018).

Chidambaram, J. D. et al. Prospective study of the diagnostic accuracy of the in vivo laser scanning confocal microscope for severe microbial keratitis. Ophthalmology https://doi.org/10.1016/j.ophtha.2016.07.009 (2016).

Bhadange, Y., Das, S., Kasav, M. K., Sahu, S. K. & Sharma, S. Comparison of culture-negative and culture-positive microbial keratitis: Cause of culture negativity, clinical features and final outcome. Br. J. Ophthalmol. 99, 1498–1502. https://doi.org/10.1136/bjophthalmol-2014-306414 (2015).

Shimizu, D. et al. Effectiveness of 16S ribosomal DNA real-time PCR and sequencing for diagnosing bacterial keratitis. Graefes Arch. Clin. Exp. Ophthalmol. 258, 157–166. https://doi.org/10.1007/s00417-019-04434-8 (2020).

Inoue, T. et al. Utility of Fungiflora Y stain in rapid diagnosis of Acanthamoeba keratitis. Br. J. Ophthalmol. 83, 632–633. https://doi.org/10.1136/bjo.83.5.628g (1999).

Miyazaki, D. et al. Efficacy of Gram-Fungiflora Y double staining in diagnosing infectious keratitis. Nippon Ganka Gakkai Zasshi 117, 351–356 (2013).

Ikeda, Y. et al. Assessment of real-time polymerase chain reaction detection of Acanthamoeba and prognosis determinants of Acanthamoeba keratitis. Ophthalmology 119, 1111–1119. https://doi.org/10.1016/j.ophtha.2011.12.023 (2012).

Miyazaki, D. et al. Presence of Acanthamoeba and diversified bacterial flora in poorly maintained contact lens cases. Sci. Rep. 10, 12595. https://doi.org/10.1038/s41598-020-69554-2 (2020).

Szentmary, N. et al. Acanthamoeba keratitis: Clinical signs, differential diagnosis and treatment. J. Curr. Ophthalmol. 31, 16–23. https://doi.org/10.1016/j.joco.2018.09.008 (2019).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. CVPR https://doi.org/10.1109/cvpr.2016.90 (2016).

Szegedy, C., Ioffe, S., Vanhoucke, V. & Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence 4278–4284, https://doi.org/10.5555/3298023.3298188 (2017).

Al Kharousi, N. & Wali, U. K. Confoscan: An ideal therapeutic aid and screening tool in acanthamoeba keratitis. Middle East Afr. J. Ophthalmol. 19, 422–425. https://doi.org/10.4103/0974-9233.102766 (2012).

Alfawaz, A. Radial keratoneuritis as a presenting sign in acanthamoeba keratitis. Middle East Afr. J. Ophthalmol. 18, 252–255. https://doi.org/10.4103/0974-9233.84062 (2011).

Alkatan, H. M. & Al-Essa, R. S. Challenges in the diagnosis of microbial keratitis: A detailed review with update and general guidelines. Saudi J. Ophthalmol. 33, 268–276. https://doi.org/10.1016/j.sjopt.2019.09.002 (2019).

Altun, A. et al. Effectiveness of posaconazole in recalcitrant fungal keratitis resistant to conventional antifungal drugs. Case Rep. Ophthalmol. Med. 2014, 701653. https://doi.org/10.1155/2014/701653 (2014).

Bagga, B. et al. Efficacy of topical miltefosine in patients with acanthamoeba keratitis: A pilot study. Ophthalmology 126, 768–770. https://doi.org/10.1016/j.ophtha.2018.12.028 (2019).

Bagga, B., Kate, A., Joseph, J. & Dave, V. P. Herpes simplex infection of the eye: An introduction. Commun. Eye Health 33, 68–70 (2020).

Bautista-Ruescas, V., Blanco-Marchite, C. I., Donate-Tercero, A., BlancoMarchite, N. & Alvarruiz-Picazo, J. Streptococcus pneumoniae keratitis, a case report. Arch. Med. 1, 1–3. https://doi.org/10.3823/031 (2009).

Bethke, W. Meeting the challenge of fungal keratitis. Rev. Ophthalmol. (2013). https://www.reviewofophthalmology.com/article/meeting-the-challenge-of-fungal-keratitis-44204.

Bronner, A. Managing microbial keratitis. Rev. Cornea Contact Lenses (2017). https://www.reviewofcontactlenses.com/article/rccl1117-managing-microbial-keratitis.

Bronner, A. Fungal ulcers: Missed and misunderstood. Rev. Cornea Contact Lenses (2018). https://www.reviewofcontactlenses.com/article/fungal-ulcers-missed-and-misunderstood.

Carnt, N., Samarawickrama, C., White, A. & Stapleton, F. The diagnosis and management of contact lens-related microbial keratitis. Clin. Exp. Optom. 100, 482–493. https://doi.org/10.1111/cxo.12581 (2017).

Carnt, N. A. & Dart, J. K. Diagnosing Acanthamoeba keratitis: What does the future hold?. Int. J. Ophthalmic Pract. 5, 129–133. https://doi.org/10.12968/ijop.2014.5.4.129 (2014).

Cheng, S. C., Lin, Y. Y., Kuo, C. N. & Lai, L. J. Cladosporium keratitis: A case report and literature review. BMC Ophthalmol. 15, 106. https://doi.org/10.1186/s12886-015-0092-1 (2015).

Cheung, N. C. & Hammersmith, K. M. Keeping the bugs at bay: Fungi and protozoa in contact lens wearers. Rev. Cornea Contact Lenses, 16–21 (2015).

Dalmon, C. et al. The clinical differentiation of bacterial and fungal keratitis: A photographic survey. Invest. Ophthalmol. Vis. Sci. 53, 1787–1791. https://doi.org/10.1167/iovs.11-8478 (2012).

Das, S., Rao, A. S., Sahu, S. K. & Sharma, S. Corynebacterium spp as causative agents of microbial keratitis. Br. J. Ophthalmol. 100, 939–943. https://doi.org/10.1136/bjophthalmol-2015-306749 (2016).

Di Zazzo, A. et al. A global perspective of pediatric non-viral keratitis: literature review. Int. Ophthalmol. 40, 2771–2788. https://doi.org/10.1007/s10792-020-01451-z (2020).

Doliveira, P. C. B. & Bisol, T. Contact lens-related bilateral and simultaneous Acremonium keratitis. Rev. Bras. Oftalmol. 76, 213–215. https://doi.org/10.5935/0034-7280.20170044 (2017).

Eghrari, A. O. et al. First human case of fungal keratitis caused by a Putatively novel species of Lophotrichus. J. Clin. Microbiol. 53, 3063–3067. https://doi.org/10.1128/JCM.00471-15 (2015).

Feizi, S. & Azari, A. A. Approaches toward enhancing survival probability following deep anterior lamellar keratoplasty. Ther. Adv. Ophthalmol. 12, 2515841420913014. https://doi.org/10.1177/2515841420913014 (2020).

Fernandes, M., Gangopadhyay, N. & Sharma, S. Stenotrophomonas maltophilia keratitis after penetrating keratoplasty. Eye 19, 921–923. https://doi.org/10.1038/sj.eye.6701673 (2005).

Fu, L. & Gomaa, A. Acanthamoeba keratitis. N. Engl. J. Med. 381, 274. https://doi.org/10.1056/NEJMicm1817678 (2019).

Fukumoto, A., Sotozono, C., Hieda, O. & Kinoshita, S. Infectious keratitis caused by fluoroquinolone-resistant Corynebacterium. Jpn. J. Ophthalmol. 55, 579–580. https://doi.org/10.1007/s10384-011-0052-0 (2011).

Garg, P. Fungal, Mycobacterial, and Nocardia infections and the eye: An update. Eye 26, 245–251. https://doi.org/10.1038/eye.2011.332 (2012).

Garg, P., Kalra, P. & Joseph, J. Non-contact lens related Acanthamoeba keratitis. Indian J. Ophthalmol. 65, 1079–1086. https://doi.org/10.4103/ijo.IJO_826_17 (2017).

Garg, P. & Rao, G. N. Corneal ulcer: Diagnosis and management. Commun. Eye Health 12, 21–23 (1999).

Gjerde, H. & Mishra, A. Contact lens-related Pseudomonas aeruginosa keratitis in a 49-year-old woman. CMAJ 190, E54. https://doi.org/10.1503/cmaj.171165 (2018).

Hamroush, A. & Welch, J. Herpes simplex epithelial keratitis associated with daily disposable contact lens wear. Cont. Lens Anterior Eye 37, 228–229. https://doi.org/10.1016/j.clae.2013.11.007 (2014).

Hassan, H. M., Papanikolaou, T., Mariatos, G., Hammad, A. & Hassan, H. Candida albicans keratitis in an immunocompromised patient. Clin. Ophthalmol. 4, 1211–1215. https://doi.org/10.2147/OPTH.S7953 (2010).

Hilliam, Y., Kaye, S. & Winstanley, C. Pseudomonas aeruginosa and microbial keratitis. J. Med. Microbiol. 69, 3–13. https://doi.org/10.1099/jmm.0.001110 (2020).

Hirabayashi, K. E., Lin, C. C. & Ta, C. N. Oral miltefosine for refractory Acanthamoeba keratitis. Am. J. Ophthalmol. Case Rep. 16, 100555. https://doi.org/10.1016/j.ajoc.2019.100555 (2019).

Hoarau, G. et al. Moraxella keratitis: Epidemiology and outcomes. Eur. J. Clin. Microbiol. Infect. Dis. 39, 2317–2325. https://doi.org/10.1007/s10096-020-03985-7 (2020).

Hoffman, J., Burton, M. & Foster, A. Common and important ocular surface conditions. Commun. Eye Health 29, 50–51 (2016).

Hue, B., Doat, M., Renard, G., Brandely, M. L. & Chast, F. Severe keratitis caused by Pseudomonas aeruginosa successfully treated with ceftazidime associated with acetazolamide. J. Ophthalmol. 2009, 794935. https://doi.org/10.1155/2009/794935 (2009).

Karsten, E., Watson, S. L. & Foster, L. J. Diversity of microbial species implicated in keratitis: A review. Open Ophthalmol. J. 6, 110–124. https://doi.org/10.2174/1874364101206010110 (2012).

Kent, D. & Mangan, R. Find infectious keratitis’s root. Rev. Optometry (2019). https://www.reviewofoptometry.com/article/find-infectious-keratitiss-root.

Khor, W. B. et al. An outbreak of Fusarium keratitis associated with contact lens wear in Singapore. JAMA 295, 2867–2873. https://doi.org/10.1001/jama.295.24.2867 (2006).

Khurana, A. et al. Clinical characteristics, predisposing factors, and treatment outcome of Curvularia keratitis. Indian J. Ophthalmol. 68, 2088–2093. https://doi.org/10.4103/ijo.IJO_90_20 (2020).

Kim, S. J., Cho, Y. W., Seo, S. W., Kim, S. J. & Yoo, J. M. Clinical experiences in fungal keratitis caused by Acremonium. Clin. Ophthalmol. 8, 283–287. https://doi.org/10.2147/OPTH.S54255 (2014).

Kodavoor, S. K., Sarwate, N. J. & Ramamurhy, D. Microbial keratitis following accelerated corneal collagen cross-linking. Oman J. Ophthalmol. 8, 111–113. https://doi.org/10.4103/0974-620X.159259 (2015).

Kolkata, B. S. in Diseases of the Cornea Ch. 4, (2011).

Kumar, A. & Khurana, A. Bilateral curvularia keratitis. J. Ophthalmic. Vis. Res. 15, 574–575. https://doi.org/10.18502/jovr.v15i4.7796 (2020).

Kuo, M. T., Chen, J. L., Hsu, S. L., Chen, A. & You, H. L. An omics approach to diagnosing or investigating fungal keratitis. Int. J. Mol. Sci. https://doi.org/10.3390/ijms20153631 (2019).

Kuo, M. T. et al. A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci. Rep. 10, 14424. https://doi.org/10.1038/s41598-020-71425-9 (2020).

Leck, A. & Burton, M. Distinguishing fungal and bacterial keratitis on clinical signs. Commun. Eye Health 28, 6–7 (2015).

Lee, C. Y. et al. Recurrent fungal keratitis and blepharitis caused by Aspergillus flavus. Am. J. Trop. Med. Hyg. 95, 1216–1218. https://doi.org/10.4269/ajtmh.16-0453 (2016).

Leon, S. Herpes simplex keratitis: Managing the masquerader. Rev. Cornea Contact Lenses (2020). https://www.reviewofcontactlenses.com/article/herpes-simplex-keratitis-managing-the-masquerader.

Lindquist, T. D., Sher, N. A. & Doughman, D. J. Clinical signs and medical therapy of early Acanthamoeba keratitis. Arch. Ophthalmol. 106, 73–77. https://doi.org/10.1001/archopht.1988.01060130079033 (1988).

Lorenzo-Morales, J., Khan, N. A. & Walochnik, J. An update on Acanthamoeba keratitis: Diagnosis, pathogenesis and treatment. Parasite 22, 10. https://doi.org/10.1051/parasite/2015010 (2015).

Miller, D., Cavuoto, K. M. & Alfonso, E. C. in Infections of the Cornea and Conjunctiva (eds S. Das & V. Jhanji) 85–104 (Springer, 2020).

Murphy, A. L. & Frick, R. Understanding corneal infection care. Rev. Cornea Contact Lenses (2015). https://www.reviewofcontactlenses.com/article/understanding-corneal-infection-care.

Mutoh, T., Matsumoto, Y. & Chikuda, M. A case of radial keratoneuritis in non-Acanthamoeba keratitis. Clin. Ophthalmol. 6, 1535–1538. https://doi.org/10.2147/OPTH.S36192 (2012).

Nguyen, V. & Lee, G. A. Management of microbial keratitis in general practice. Aust. J. Gen. Pract. 48, 516–519. https://doi.org/10.31128/AJGP-02-19-4857 (2019).

Nivenius, E. & Montan, P. Candida albicans should be considered when managing keratitis in Atopic keratoconjunctivitis. Acta Ophthalmol. 93, 579–580. https://doi.org/10.1111/aos.12708 (2015).

Nizeyimana, H. et al. Clinical efficacy of conjunctival flap surgery in the treatment of refractory fungal keratitis. Exp. Ther. Med. 14, 1109–1113. https://doi.org/10.3892/etm.2017.4605 (2017).

Ospina, P. D. in Keratoplasties - Surgical techniques and complications 101–120 (Intechopen, 2012).

Palme, C., Steger, B., Haas, G., Teuchner, B. & Bechrakis, N. E. Severe reactive ischemic posterior segment inflammation in Acanthamoeba keratitis: Case report of a patient with Sjogren’s syndrome. Spektrum Augenheilkd 31, 10–13. https://doi.org/10.1007/s00717-017-0334-0 (2017).

Pérez-Balbuena, A. L., Santander-García, D., Vanzzini-Zago, V. & Cuevas-Cancino, D. in Keratoplasties - Surgical Techniques and Complications Ch. 2, 11–32 (2012).

Reddy, J. C. & Rapuano, C. J. Current concepts in the management of herpes simplex anterior segment eye disease. Curr. Ophthalmol. Rep. 1, 194–203 (2013).

Robles-Contreras, A. et al. in Common Eye Infections (Intechopen, 2013).

Sanz-Marco, E., Lopez-Prats, M. J., Garcia-Delpech, S., Udaondo, P. & Diaz-Llopis, M. Fulminant bilateral Haemophilus influenzae keratitis in a patient with hypovitaminosis A treated with contaminated autologous serum. Clin. Ophthalmol. 5, 71–73. https://doi.org/10.2147/OPTH.S15847 (2011).

Shah, S. I. A. Etiology of infectious keratitis as seen at a tertiary care center in Larkana, Pakistan. Pak. J. Ophthalmol. 32, 48–52 (2016).

Shi, W. et al. Risk factors, clinical features, and outcomes of recurrent fungal keratitis after corneal transplantation. Ophthalmology 117, 890–896. https://doi.org/10.1016/j.ophtha.2009.10.004 (2010).

Shrestha, G. S., Vijay, A. K., Stapleton, F., Henriquez, F. L. & Carnt, N. Understanding clinical and immunological features associated with Pseudomonas and Staphylococcus keratitis. Cont. Lens. Anterior Eye 44, 3–13. https://doi.org/10.1016/j.clae.2020.11.014 (2021).

Sibley, D. & Larkin, D. F. P. Update on Herpes simplex keratitis management. Eye 34, 2219–2226. https://doi.org/10.1038/s41433-020-01153-x (2020).

Sowka, J. & Kabat, A. G. Make this virus vanish. Rev. Optometry 144 (2007). https://www.reviewofoptometry.com/article/make-this-virus-vanish.

Tabatabaei, S. A., Tabatabaei, M., Soleimani, M. & Tafti, Z. F. Fungal keratitis caused by rare organisms. J. Curr. Ophthalmol. 30, 91–96. https://doi.org/10.1016/j.joco.2017.08.004 (2018).

Tan, C. S., Krishnan, P. U., Foo, F. Y., Pan, J. C. & Voon, L. W. Neisseria meningitidis keratitis in adults: A case series. Ann. Acad. Med. Singap. 35, 837–839 (2006).

Thomas, P. A. Fungal infections of the cornea. Eye 17, 852–862. https://doi.org/10.1038/sj.eye.6700557 (2003).

Thomas, P. A. & Kaliamurthy, J. Mycotic keratitis: Epidemiology, diagnosis and management. Clin. Microbiol. Infect. 19, 210–220. https://doi.org/10.1111/1469-0691.12126 (2013).

Trobe, J. D. in The Physician's Guide to Eye Care Ch. 188, (American Academy of Ophthalmology, 1993).

Tu, E. Y., Joslin, C. E., Sugar, J., Shoff, M. E. & Booton, G. C. Prognostic factors affecting visual outcome in Acanthamoeba keratitis. Ophthalmology 115, 1998–2003. https://doi.org/10.1016/j.ophtha.2008.04.038 (2008).

Upadhyay, M. P., Srinivasan, M. & Whitcher, J. P. Diagnosing and managing microbial keratitis. Commun. Eye Health 28, 3–6 (2015).

Vanzzini Zago, V., Alcantara Castro, M. & Naranjo Tackman, R. Support of the laboratory in the diagnosis of fungal ocular infections. Int. J. Inflam. 2012, 643104. https://doi.org/10.1155/2012/643104 (2012).

Vemuganti, G. K., Pasricha, G., Sharma, S. & Garg, P. Granulomatous inflammation in Acanthamoeba keratitis: An immunohistochemical study of five cases and review of literature. Indian J. Med. Microbiol. 23, 231–238 (2005).

Watson, S., Cabrera-Aguas, M. & Khoo, P. Common eye infections. Aust. Prescr. 41, 67–72. https://doi.org/10.18773/austprescr.2018.016 (2018).

Wilhelmus, K. R. et al. Bilateral acanthamoeba keratitis. Am. J. Ophthalmol. 145, 193–197. https://doi.org/10.1016/j.ajo.2007.09.037 (2008).

Zago, V. V. & Perez-Balbuena, A. L. in Common Eye Infections (Intechopen, 2013).

Elmer, Y. T. C. et al. Prognostic factors affecting visual outcome in Acanthamoeba keratitis. Ophthalmology 115, 1998–2003. https://doi.org/10.1016/j.ophtha.2008.04.038 (2008).

Gaurav, P. K. et al. The three faces of herpes simplex epithelial keratitis: A steroid-induced situation. BMJ J. https://doi.org/10.1136/bcr-2014-209197 (2015).

Nicole, C. et al. The diagnosis and management of contact lens-related microbial keratitis. Clin. Exp. Optometry 100, 482–493. https://doi.org/10.1111/cxo.12581 (2017).

Shreesha, K. K. et al. Microbial keratitis following accelerated corneal collagen cross-linking. Oman J. Ophthalmol. 8, 111–113. https://doi.org/10.4103/0974-620X.159259 (2015).

Acknowledgements

This work was supported by Grant-in-Aid for Scientific Research from the Japanese Ministry of Education, Science, and Culture: 17K11481, 21K09720, and 21K09742.

Contributions

A.K. and D.M. designed the research study and analyzed data. A.K. and D.M. wrote the manuscript. A.K. and Y.S. performed experiments. D.M., Y.N., and Y.A. wrote the code for deep learning models and GBDT. A.K., D.M., H.M., F.E., S.S., and Y.I. conducted evaluation on diagnosis of clinical images. Y.I. supervised experiments and data analysis. All of the authors approved the manuscript to be published and agreed to be accountable for all aspects of the study.

Ethics declarations

Competing interests

Dai Miyazaki reports lecture fee from Santen Pharmaceutical, Senju Pharmaceutical, Alcon, outside the submitted work. Funding: Grant-in-Aid for Scientific Research from the Japanese Ministry of Education, Science, and Culture, 21K09742. Yoshitsugu Inoue reports grants and lecture fee from Senju Pharmaceutical Co, Ltd., grants from Santen Pharmaceutical Co, Ltd., grants from Alcon Japan, Ltd., outside the submitted work. Funding: Grant-in-Aid for Scientific Research from the Japanese Ministry of Education, Science, and Culture, 17K11481, 21K09720. Ayumi Koyama, Yuji Nakagawa, Yuji Ayatsuka, Hitomi Miyake, Fumie Ehara, Shin-ichi Sasaki, and Yumiko Shimizu declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koyama, A., Miyazaki, D., Nakagawa, Y. et al. Determination of probability of causative pathogen in infectious keratitis using deep learning algorithm of slit-lamp images. Sci Rep 11, 22642 (2021). https://doi.org/10.1038/s41598-021-02138-w

DOI: https://doi.org/10.1038/s41598-021-02138-w
