Abstract

Memory-one strategies are a set of Iterated Prisoner’s Dilemma strategies that have been praised for their mathematical tractability and performance against single opponents. This manuscript investigates best response memory-one strategies with a theory of mind for their opponents. The results add to the literature that has shown that extortionate play is not always optimal by showing that optimal play is often not extortionate. They also provide evidence that memory-one strategies suffer from their limited memory in multi-agent interactions and can be outperformed by optimised strategies with longer memory. We have developed a theory that has allowed us to explore the entire space of memory-one strategies. The framework presented is suitable for studying memory-one strategies in the Prisoner’s Dilemma, but also in evolutionary processes such as the Moran process. Furthermore, results on the stability of defection in populations of memory-one strategies are also obtained.

Introduction

The Prisoner’s Dilemma (PD) is a two player game used in understanding the evolution of cooperative behaviour, formally introduced in1. Each player has two options, to cooperate (C) or to defect (D). The decisions are made simultaneously and independently. The normal form representation of the game is given by:

\[S_p = \begin{pmatrix} R & S \\ T & P \end{pmatrix} \quad S_q = \begin{pmatrix} R & T \\ S & P \end{pmatrix} \qquad (1)\]

where \(S_p\) represents the utilities of the row player and \(S_q\) the utilities of the column player. The payoffs, \((R, P, S, T)\), are constrained by \(T> R> P > S\) and \(2R > T + S\), and the most common values used in the literature are \((R, P, S, T) = (3, 1, 0, 5)\)2. The numerical experiments of our manuscript are carried out using these payoff values. The PD is a one-shot game; however, it is commonly studied in a setting where the history of the interactions matters. The repeated form of the game is called the Iterated Prisoner’s Dilemma (IPD).

Memory-one strategies are a set of IPD strategies that have been studied thoroughly in the literature3,4. They gained most of their attention, however, when a certain subset of memory-one strategies, the zero-determinant strategies (ZDs), was introduced in5. In6 it was stated that “Press and Dyson have fundamentally changed the viewpoint on the Prisoner’s Dilemma”. A special case of ZDs are extortionate strategies, which choose their actions so that a linear relationship is forced between the players’ scores, ensuring that they will always receive at least as much as their opponents. ZDs are indeed mathematically unique and are proven to be robust in pairwise interactions; however, their true effectiveness in tournaments and evolutionary dynamics has been questioned7,8,9,10,11,12.

The purpose of this work is to reinforce the literature on the limitations of extortionate strategies by considering a new approach: best response memory-one strategies with a theory of mind of their opponents. There are several works in the literature that have considered strategies with a theory of mind5,6,13,14,15,16. These works defined “theory of mind” as intention recognition13,14,15,16 and as the ability of a strategy to realise that its actions can influence opponents6. Compared to these works, theory of mind is defined here as the ability of a strategy to know the behaviour of its opponents and alter its own behaviour in response.

We present a closed form algebraic expression for the utility of a memory-one strategy against a given set of opponents and a compact method of identifying its best response to that given set of opponents. The aim is to evaluate whether a best response memory-one strategy behaves in a zero-determinant way, which in turn indicates whether it can be extortionate. We do this using a linear algebraic approach presented in17. This is done in tournaments with two opponents. Moreover, we introduce a framework that allows the comparison of an optimal memory-one strategy and an optimised strategy which has a larger memory.

To illustrate the analytical results obtained in this manuscript a number of numerical experiments are run. The source code for these experiments has been written in a sustainable manner18. It is open source (https://github.com/Nikoleta-v3/Memory-size-in-the-prisoners-dilemma) and tested, which supports the validity of the results. It has also been archived and can be found at19.

Methods

One specific advantage of memory-one strategies is their mathematical tractability. They can be represented completely as an element of \({\mathbb {R}}^{4}_{[0, 1]}\). This originates from20 where it is stated that if a strategy is concerned with only the outcome of a single turn then there are four possible ‘states’ the strategy could be in; both players cooperated (\(CC\)), the first player cooperated whilst the second player defected (\(CD\)), the first player defected whilst the second player cooperated (\(DC\)) and both players defected (\(DD\)). Therefore, a memory-one strategy can be denoted by the probability vector of cooperating after each of these states; \(p=(p_1, p_2, p_3, p_4) \in {\mathbb {R}}_{[0,1]} ^ 4\).

In20 it was shown that it is not necessary to simulate the play of a strategy p against a memory-one opponent q. Rather this exact behaviour can be modeled as a stochastic process, and more specifically as a Markov chain whose corresponding transition matrix \(M\) is given by Eq. (2). The long run steady state probability vector \(v\), which is the solution to \(v M = v\), can be combined with the payoff matrices of Eq. (1) to give the expected payoffs for each player. More specifically, the utility for a memory-one strategy \(p\) against an opponent \(q\), denoted as \(u_q(p)\), is given by Eq. (3).
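
The Markov chain computation described above can be sketched numerically. The following is a minimal illustration rather than the authors' implementation: the function names are hypothetical, the state order \((CC, CD, DC, DD)\) is taken from the player's point of view (so the opponent uses \(q_3\) after \(CD\) and \(q_2\) after \(DC\)), and it assumes the chain has a unique stationary distribution.

```python
import numpy as np


def transition_matrix(p, q):
    """Markov chain over the states (CC, CD, DC, DD), seen from player p."""
    # The opponent sees CD and DC with the roles swapped, so q_3 is used
    # after CD and q_2 after DC.
    pairs = [(p[0], q[0]), (p[1], q[2]), (p[2], q[1]), (p[3], q[3])]
    return np.array(
        [[a * b, a * (1 - b), (1 - a) * b, (1 - a) * (1 - b)] for a, b in pairs]
    )


def utility(p, q, payoffs=(3, 1, 0, 5)):
    """Long run payoff of p against q; payoffs are ordered (R, P, S, T)."""
    R, P, S, T = payoffs
    M = transition_matrix(p, q)
    # Solve v M = v together with sum(v) = 1 as a least-squares system.
    A = np.vstack([M.T - np.eye(4), np.ones((1, 4))])
    b = np.array([0.0, 0.0, 0.0, 0.0, 1.0])
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Payoffs of the player in the states CC, CD, DC, DD.
    return float(v @ np.array([R, S, T, P], dtype=float))


# A defector against an opponent that cooperates half of the time.
example = utility((0, 0, 0, 0), (0.5, 0.5, 0.5, 0.5))
print(example)
```

For this example the chain alternates between \(DC\) and \(DD\) with equal probability, so the printed value should be approximately \((T + P)/2 = 3\).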

This manuscript has explored the form of \(u_q(p)\); to the best of the authors’ knowledge, no previous work has done this. Theorem 1 states that \(u_q(p)\) is given by a ratio of two quadratic forms21.

Theorem 1

The expected utility of a memory-one strategy \(p\in {\mathbb {R}}_{[0,1]}^4\) against a memory-one opponent \(q\in {\mathbb {R}}_{[0,1]}^4\), denoted as \(u_q(p)\), can be written as a ratio of two quadratic forms:

where \(Q, {\bar{Q}}\) \(\in {\mathbb {R}}^{4\times 4}\) are square matrices defined by the transition probabilities of the opponent \(q_1, q_2, q_3, q_4\) as follows:

\(c \text { and } {\bar{c}}\) \(\in {\mathbb {R}}^{4 \times 1}\) are similarly defined by:

and the constant terms \(a, {\bar{a}}\) are defined as \(a = - P q_{2} + P + T q_{4}\) and \({\bar{a}} = - q_{2} + q_{4} + 1\).
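
As a quick sanity check of the constant terms, consider the defector \(p = (0, 0, 0, 0)\). Assuming that every non-constant term of the numerator and of the denominator vanishes at \(p = 0\), the ratio reduces to \(a / {\bar{a}}\). The same value follows directly from the Markov chain: against a defector only the states \(DC\) and \(DD\) are visited, and the opponent cooperates with probability \(q_2\) after \(DC\) and \(q_4\) after \(DD\), so that

\[v_{DC} = \frac{q_4}{1 - q_2 + q_4}, \quad v_{DD} = \frac{1 - q_2}{1 - q_2 + q_4}, \quad u_q(0, 0, 0, 0) = T v_{DC} + P v_{DD} = \frac{P (1 - q_2) + T q_4}{1 - q_2 + q_4} = \frac{a}{{\bar{a}}}.\]

For example, with \((R, P, S, T) = (3, 1, 0, 5)\) and \(q_2 = q_4 = \frac{1}{2}\) this gives \(a = 3\), \({\bar{a}} = 1\) and a utility of 3.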

The proof of Theorem 1 is given in the Supplementary Information. Theorem 1 can be extended to consider multiple opponents. The IPD is commonly studied in tournaments and/or Moran Processes where a strategy interacts with a number of opponents. The payoff of a player in such interactions is given by the average payoff the player received against each opponent. More specifically the expected utility of a memory-one strategy against \(N\) opponents is given by:

\[\frac{1}{N} \sum \limits _{i=1} ^ {N} u_q^{(i)}(p) \qquad (9)\]

Equation (9) is the average score (using Eq. (4)) against the set of opponents.

Estimating the utility of a memory-one strategy against any number of opponents without simulating the interactions is the main result used in the rest of this manuscript. It will be used to obtain best response memory-one strategies in tournaments in order to explore the limitations of extortion and restricted memory.

Results

The formulation as presented in Theorem 1 can be used to define memory-one best response strategies as a multi dimensional optimisation problem given by:

Optimising this particular ratio of quadratic forms is not trivial. It can be verified empirically for the case of a single opponent that there exists at least one point for which the definition of concavity does not hold. The non concavity of \(u(p)\) indicates multiple local optimal points. This is also intuitive. The best response against a cooperator, \(q=(1, 1, 1, 1)\), is a defector \(p^*=(0, 0, 0, 0)\). The strategies \(p=\left( \frac{1}{2}, 0, 0, 0\right) \) and \(p=\left( \frac{1}{2}, 0, 0, \frac{1}{2}\right) \) are also best responses. The approach taken here is to introduce a compact way of constructing the discrete candidate set of all local optimal points, and evaluating the objective function Eq. (9). This gives the best response memory-one strategy. The approach is given in Theorem 2.
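
The non-uniqueness of the best response against a cooperator can be checked numerically. The sketch below is illustrative only: `utility` is a hypothetical helper that solves \(v M = v\) by least squares with the state order \((CC, CD, DC, DD)\), and it assumes a unique stationary distribution, which holds for the three candidates listed above.

```python
import numpy as np


def utility(p, q, payoffs=(3, 1, 0, 5)):
    """Long run payoff of p against q; payoffs are ordered (R, P, S, T)."""
    R, P, S, T = payoffs
    # The opponent uses q_3 after CD and q_2 after DC.
    pairs = [(p[0], q[0]), (p[1], q[2]), (p[2], q[1]), (p[3], q[3])]
    M = np.array(
        [[a * b, a * (1 - b), (1 - a) * b, (1 - a) * (1 - b)] for a, b in pairs]
    )
    A = np.vstack([M.T - np.eye(4), np.ones((1, 4))])
    b = np.array([0.0, 0.0, 0.0, 0.0, 1.0])
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(v @ np.array([R, S, T, P], dtype=float))


cooperator = (1, 1, 1, 1)
candidates = [(0, 0, 0, 0), (0.5, 0, 0, 0), (0.5, 0, 0, 0.5)]
scores = [utility(p, cooperator) for p in candidates]

# Always cooperating only earns the mutual cooperation payoff R.
score_of_cooperating = utility((1, 1, 1, 1), cooperator)
print(scores, score_of_cooperating)
```

All three candidates end up in the state \(DC\) forever, so each of them should score approximately \(T = 5\), whereas unconditional cooperation scores \(R = 3\).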

Theorem 2

The optimal behaviour of a memory-one strategy player \(p^* \in {\mathbb {R}}_{[0, 1]} ^ 4\) against a set of \(N\) opponents \(\{q^{(1)}, q^{(2)}, \dots , q^{(N)} \}\) for \(q^{(i)} \in {\mathbb {R}}_{[0, 1]} ^ 4\) is given by:

The set \(S_q\) is defined as all the possible combinations of:

Note that there is no immediate way to find the zeros of \(\frac{d}{dp} \sum \limits _{i=1} ^ N u_q(p)\) where,

For \(\frac{d}{dp} \sum \limits _{i=1} ^ N u_q(p)\) to equal zero then:

The proof of Theorem 2 is given in the Supplementary Information. Finding best response memory-one strategies is analytically feasible using the formulation of Theorem 2 and resultant theory22. However, for large systems building the resultant becomes intractable. As a result, best responses will be estimated heuristically using a numerical method, suitable for problems with local optima, called Bayesian optimisation23.
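
To make the optimisation problem concrete, the sketch below estimates a best response against \(N=2\) opponents. It deliberately swaps Bayesian optimisation for a plain uniform random search, which only needs NumPy; it is not the procedure used to produce the results of this manuscript. The opponents, the sample size and all function names are hypothetical choices made for illustration.

```python
import numpy as np


def utility(p, q, payoffs=(3, 1, 0, 5)):
    """Long run payoff of p against q; payoffs are ordered (R, P, S, T)."""
    R, P, S, T = payoffs
    pairs = [(p[0], q[0]), (p[1], q[2]), (p[2], q[1]), (p[3], q[3])]
    M = np.array(
        [[a * b, a * (1 - b), (1 - a) * b, (1 - a) * (1 - b)] for a, b in pairs]
    )
    A = np.vstack([M.T - np.eye(4), np.ones((1, 4))])
    b = np.array([0.0, 0.0, 0.0, 0.0, 1.0])
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(v @ np.array([R, S, T, P], dtype=float))


def tournament_utility(p, opponents):
    """Average utility of p over a set of memory-one opponents."""
    return float(np.mean([utility(p, q) for q in opponents]))


def random_search_best_response(opponents, n_samples=500, seed=0):
    """Stand-in optimiser: sample p uniformly and keep the best one."""
    rng = np.random.default_rng(seed)
    candidates = rng.random((n_samples, 4))
    scores = [tournament_utility(p, opponents) for p in candidates]
    best = int(np.argmax(scores))
    return candidates[best], scores[best]


# Two arbitrary opponents with interior probabilities (illustrative values).
opponents = [(0.9, 0.1, 0.8, 0.2), (0.3, 0.6, 0.4, 0.7)]
p_best, u_best = random_search_best_response(opponents)
print(p_best, u_best)
```

A random search gives no guarantee of reaching the global optimum; it is used here only because the objective is cheap to evaluate and has few dimensions.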

Limitations of extortionate behaviour

In multi opponent settings, where the payoffs matter, strategies trying to exploit their opponents will suffer. Compared to ZDs, best response memory-one strategies, which have a theory of mind of their opponents, utilise their behaviour in order to gain the most from their interactions. The question that arises then is whether best response strategies are optimal because they behave in an extortionate way.

The results of this section use Bayesian optimisation to generate a data set of best response memory-one strategies for \(N=2\) opponents. The data set is available at24. It contains a total of 1000 trials corresponding to 1000 different instances of a best response strategy in tournaments with \(N=2\). For each trial a set of 2 opponents is randomly generated and the memory-one best response against them is found. In order to investigate whether best responses behave in an extortionate manner the SSE method described in17 is used. More specifically, in17 the point \(x^*\), in the space of memory-one strategies, that is the nearest extortionate strategy to a given strategy \(p\) is given by,

where \({\bar{p}}=(p_1 - 1, p_2 - 1, p_3, p_4)\) and

Once this closest ZD is found, the squared norm of the remaining error is referred to as the sum of squared errors of prediction (SSE):

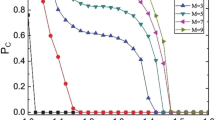

Thus, SSE is defined as how far a strategy is from behaving as a ZD. A high SSE implies a non extortionate behaviour. The distribution of SSE for the best response in tournaments (\(N=2\)) is given in Fig. 1. Moreover, a statistical summary of the SSE distribution is given in Table 1.
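
The idea behind the SSE can be illustrated with an ordinary least-squares fit. The sketch below is an assumption-laden illustration, not the method of17 verbatim: it uses the Press and Dyson characterisation of extortionate strategies, \({\bar{p}} = \alpha (S_x - P) + \beta (S_y - P)\) with \(S_x = (R, S, T, P)\) and \(S_y = (R, T, S, P)\), and the matrix `C` built from it is this sketch's own construction.

```python
import numpy as np


def sse(p, payoffs=(3, 1, 0, 5)):
    """Squared distance from p to the closest extortionate strategy.

    payoffs are ordered (R, P, S, T). Returns (SSE, fitted coefficients).
    """
    R, P, S, T = payoffs
    # Columns are S_x - P and S_y - P (assumed form, see the lead-in).
    C = np.array(
        [[R - P, R - P], [S - P, T - P], [T - P, S - P], [0, 0]], dtype=float
    )
    p_bar = np.array([p[0] - 1, p[1] - 1, p[2], p[3]], dtype=float)
    # Least-squares fit of p_bar in the column space of C.
    x_star, *_ = np.linalg.lstsq(C, p_bar, rcond=None)
    residual = p_bar - C @ x_star
    return float(residual @ residual), x_star


# The extortionate strategy (8/9, 1/2, 1/3, 0) should give an SSE close to 0.
sse_extort, coefficients = sse((8 / 9, 1 / 2, 1 / 3, 0))
# Unconditional cooperation has p_4 = 1, which no extortionate strategy allows.
sse_cooperator, _ = sse((1, 1, 1, 1))
print(sse_extort, sse_cooperator)
```

Because the last row of `C` is zero, any strategy with \(p_4 > 0\) has an SSE of at least \(p_4^2\) in this sketch.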

For the best response in tournaments with \(N=2\) the distribution of SSE is skewed to the left, indicating that the best response does exhibit ZD behaviour and so could be extortionate; however, the best response is not uniformly a ZD. A positive measure of skewness and kurtosis, and a mean of 0.34, indicate a heavy tail to the right. Therefore, in several cases the strategy is not trying to extort its opponents. This highlights the importance of adaptability, since the best response strategy against an opponent is rarely (if ever) a unique ZD.

Limitations of memory size

The other main finding presented in5 was that a short memory was all that a strategy needed. We argue that the second limitation of ZDs in multi opponent interactions is that of their restricted memory. To demonstrate the effectiveness of memory in the IPD we explore a best response longer-memory strategy against a given set of memory-one opponents, and compare its performance to that of a memory-one best response.

In25, a strategy called Gambler, which makes probabilistic decisions based on the opponent’s \(n_1\) first moves, the opponent’s \(m_1\) last moves and the player’s \(m_2\) last moves, was introduced. In this manuscript Gambler with parameters \(n_1 = 2, m_1 = 1\) and \(m_2 = 1\) is used as a longer-memory strategy. By considering the opponent’s first two moves, the opponent’s last move and the player’s last move, there are only 16 \((4 \times 2 \times 2)\) possible outcomes that can occur. Furthermore, Gambler also makes a probabilistic decision of cooperating in the opening move. Thus, Gambler is a function \(f: \{\text {C, D}\} \rightarrow [0, 1]_{{\mathbb {R}}}\). This can be hard coded as an element of \([0, 1]_{{\mathbb {R}}} ^ {16 + 1}\), one probability for each outcome plus the opening move. Hence, compared to Eq. (10), finding an optimal Gambler is a 17 dimensional problem given by:
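
The 17 dimensional encoding can be pictured as an opening probability plus a lookup table with 16 entries. The sketch below only illustrates that encoding; it is not the Axelrod library's Gambler. The function names, the ordering of the table entries and the fallback used before the opponent's first two moves are known are all assumptions of this sketch.

```python
from itertools import product

ACTIONS = ("C", "D")


def gambler_keys():
    """All (opponent's first two moves, opponent's last move, own last move)."""
    return [
        (first_two, opponent_last, own_last)
        for first_two in product(ACTIONS, repeat=2)
        for opponent_last in ACTIONS
        for own_last in ACTIONS
    ]


def decode(vector):
    """Split a 17-vector into an opening probability and a 16-entry table."""
    keys = gambler_keys()
    if len(vector) != len(keys) + 1:
        raise ValueError("expected 16 + 1 probabilities")
    return vector[0], dict(zip(keys, vector[1:]))


def cooperation_probability(vector, opponent_history, own_history):
    """Probability of cooperating on the next turn."""
    opening, table = decode(vector)
    if len(opponent_history) < 2 or not own_history:
        # Assumption of this sketch: reuse the opening probability until
        # the opponent's first two moves are known.
        return opening
    key = (tuple(opponent_history[:2]), opponent_history[-1], own_history[-1])
    return table[key]


vector = [0.5] + [i / 16 for i in range(16)]
probability = cooperation_probability(
    vector, ["D", "D", "C", "D"], ["C", "C", "C", "D"]
)
print(len(gambler_keys()), probability)
```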

Note that Eq. (9) cannot be used here for the utility of Gambler, so actual simulated players are used. This is done using26 with 500 turns and 200 repetitions. Moreover, Eq. (18) is solved numerically using Bayesian optimisation.

Similarly to the previous section, a large data set has been generated with instances of an optimal Gambler and a memory-one best response, available at24. Estimating a best response Gambler (17 dimensions) is computationally more expensive than estimating a best response memory-one strategy (4 dimensions). As a result, the analysis of this section is based on a total of 152 trials. As before, for each trial \(N=2\) random opponents have been selected.

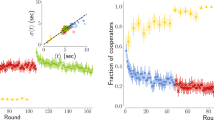

The ratio between Gambler’s utility and the best response memory-one strategy’s utility has been calculated and its distribution is given in Fig. 2. It is evident from Fig. 2 that Gambler always performs at least as well as the best response memory-one strategy and often performs better. There are no points where the ratio is less than 1; thus Gambler never performed worse than the best response memory-one strategy and in places outperforms it. In other words, against two memory-one opponents Gambler’s performance is better than that of the optimal memory-one strategy. This is evidence that in the case of multiple opponents, having a shorter memory is limiting.

Dynamic best response player

In several evolutionary settings such as Moran Processes self interactions are key. Previous work has identified interesting results such as the appearance of self recognition mechanisms when training strategies using evolutionary algorithms in Moran processes11. This aspect of reinforcement learning can be done for best response memory-one strategies, as presented in this manuscript, by incorporating the strategy itself in the objective function as shown in Eq. (10), where \(K\) is the number of self interactions that will take place.

For determining the memory-one best response with self interactions, an algorithmic approach called best response dynamics is proposed. The best response dynamics approach used in this manuscript is given by Algorithm 1.
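
One way such an iteration could look is sketched below. This is a hypothetical illustration and should not be read as Algorithm 1: the objective (an average over the \(N\) opponents and \(K\) copies of the previous iterate), the starting point \((1, 1, 1, 1)\), the fixed random candidate set used as the inner optimiser and the stopping rule are all assumptions made to keep the example small.

```python
import numpy as np


def utility(p, q, payoffs=(3, 1, 0, 5)):
    """Long run payoff of p against q; payoffs are ordered (R, P, S, T)."""
    R, P, S, T = payoffs
    pairs = [(p[0], q[0]), (p[1], q[2]), (p[2], q[1]), (p[3], q[3])]
    M = np.array(
        [[a * b, a * (1 - b), (1 - a) * b, (1 - a) * (1 - b)] for a, b in pairs]
    )
    A = np.vstack([M.T - np.eye(4), np.ones((1, 4))])
    b = np.array([0.0, 0.0, 0.0, 0.0, 1.0])
    v, *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(v @ np.array([R, S, T, P], dtype=float))


def objective(p, previous, opponents, K):
    """Assumed objective: average over the opponents and K self interactions."""
    scores = [utility(p, q) for q in opponents] + K * [utility(p, previous)]
    return float(np.mean(scores))


def best_response_dynamics(opponents, K=1, n_candidates=200, max_iter=10, seed=0):
    """Repeatedly best respond to the opponents and to the previous iterate."""
    rng = np.random.default_rng(seed)
    # Interior candidates keep every Markov chain in this sketch ergodic.
    candidates = rng.uniform(0.01, 0.99, size=(n_candidates, 4))
    current = np.ones(4)
    for _ in range(max_iter):
        scores = [objective(p, current, opponents, K) for p in candidates]
        new = candidates[int(np.argmax(scores))]
        if np.array_equal(new, current):
            break  # fixed point: the strategy is a best response to itself
        current = new
    return current


opponents = [(0.9, 0.1, 0.8, 0.2), (0.3, 0.6, 0.4, 0.7)]
p_star = best_response_dynamics(opponents)
print(p_star)
```

The iteration may cycle instead of converging, which is why the sketch caps the number of iterations.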

To investigate the effectiveness of this approach more formally, a Moran process will be considered. Consider a population of \(n\) total individuals of two types, with \(K\) individuals of the first type and \(n-K\) of the second type. The probability that the individuals of the first type will take over the population (the fixation probability) is denoted by \(x_K\) and is known to be27:

where:

To evaluate the formulation proposed here the best response player (taken to be the first type of individual in our population) will be allowed to act dynamically: adjusting their probability vector at every generation. In essence using the theory of mind to find the best response to not only the opponent but also the distribution of the population. Thus for every value of \(K\) there is a different best response player.

Considering the dynamic best response player as a vector \(p\in {\mathbb {R}}^4_{[0, 1]}\) and the opponent as a vector \(q\in {\mathbb {R}}^4_{[0, 1]}\), the transition probabilities depend on the payoff matrix \(A ^ {(K)}\) where:

- \(A ^ {(K)}_{11}=u_{p}(p)\) is the long run utility of the best response player against itself.

- \(A ^ {(K)}_{12}=u_{q}(p)\) is the long run utility of the best response player against the opponent.

- \(A ^ {(K)}_{21}=u_{p}(q)\) is the long run utility of the opponent against the best response player.

- \(A ^ {(K)}_{22}=u_{q}(q)\) is the long run utility of the opponent against itself.

The matrix \(A ^ {(K)}\) is calculated using Eq. (4). For every value of \(K\) the best response dynamics algorithm (Algorithm 1) is used to calculate the best response player.

The total utilities/fitnesses for each player can be written down:

where \(f_1^{(K)}\) is the fitness of the best response player, and \(f_2^{(K)}\) is the fitness of the opponent.

Using this:

and:

which are all that are required to calculate \(x_K\).
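
The calculation of \(x_K\) can be sketched as follows. The formulas in the code are the standard frequency-dependent Moran process expressions from27 as recalled here, and should be treated as assumptions of this sketch: fitnesses \(f_1^{(i)} = A_{11}(i-1) + A_{12}(n-i)\) and \(f_2^{(i)} = A_{21}\, i + A_{22}(n-i-1)\), a ratio of backward to forward transition probabilities equal to \(f_2^{(i)} / f_1^{(i)}\), and the usual product formula for the fixation probability. The function names and the dictionary of payoff matrices are hypothetical.

```python
import numpy as np


def fitnesses(A, i, n):
    """Total payoffs of each type when i individuals are of the first type."""
    f1 = A[0][0] * (i - 1) + A[0][1] * (n - i)
    f2 = A[1][0] * i + A[1][1] * (n - i - 1)
    return f1, f2


def fixation_probability(payoff_matrices, K, n):
    """Fixation probability x_K of the first type.

    payoff_matrices[i] is the 2x2 matrix A^(i) for i = 1, ..., n - 1, which
    lets the best response player change with the population state.
    """
    gammas = []
    for i in range(1, n):
        f1, f2 = fitnesses(payoff_matrices[i], i, n)
        gammas.append(f2 / f1)  # backward over forward transition probability
    products = np.cumprod(gammas)  # prod_{k=1}^{j} gamma_k for j = 1..n-1
    numerator = 1 + products[: K - 1].sum()
    denominator = 1 + products.sum()
    return float(numerator / denominator)


n = 4
neutral = {i: [[1, 1], [1, 1]] for i in range(1, n)}
neutral_values = [fixation_probability(neutral, K, n) for K in (1, 2, 3)]

advantaged = {i: [[2, 2], [1, 1]] for i in range(1, n)}
advantaged_value = fixation_probability(advantaged, 1, n)
print(neutral_values, advantaged_value)
```

In the neutral case every ratio equals one and the sketch recovers \(x_K = K/n\), which is a convenient check of the indexing.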

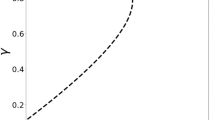

Figure 3 shows the results of an analysis of \(x_K\) for dynamically updating players. This is obtained over 182 Moran processes against 122 randomly selected opponents. For each Moran process the fixation probabilities for \(K\in \{1, 2, 3\}\) are collected. As well as recording \(x_K\), \({\tilde{x}}_K\) is measured, where \({\tilde{x}}_K\) represents the fixation probability of the best response player calculated for a given \(K\) but not allowed to dynamically update as the population changes. The ratio \(\frac{x_K}{{\tilde{x}}_K}\) is included in the figure. This is done to allow a comparison with a high performing strategy that has a theory of mind of the opponent but not of the population density. The ratio shows a relatively high performance compared to a non dynamic best response strategy. The mean ratio over all values of \(K\) and all experiments is \(1.044\). In some cases this dynamic updating results in a 25% increase in the absorption probability.

As noted before, it is clear that the best response strategy in general does not have a low SSE (only 25% of the data is below 0.923 and the average is 0.454). This is further compounded by the ratio being above one, showing that in many cases the dynamic strategy benefits from its ability to adapt. This indicates that memory-one strategies that perform well in Moran processes need to be more adaptable than a ZD, and aim for mutual cooperation as well as exploitation, which is in line with the results of28, where the strategy was designed to adapt and was shown to be evolutionarily stable. The findings of this work show that an optimal strategy acts in the same way.

Discussion

This manuscript has considered best response strategies in the IPD game, and more specifically, memory-one best responses. It has proven that:

- The utility of a memory-one strategy against a set of memory-one opponents can be written as a sum of ratios of quadratic forms (Theorem 1).

- There is a compact way of identifying a memory-one best response to a group of opponents through a search over a discrete set (Theorem 2).

There is one further theoretical result that can be obtained from Theorem 1, which allows the identification of environments for which cooperation cannot occur (Details are in the Supplementary Information). Moreover, Theorem 2 does not only have game theoretic novelty, but also the mathematical novelty of solving quadratic ratio optimisation problems where the quadratics are non concave.

The empirical results of the manuscript investigated the behaviour of memory-one strategies and their limitations. They have shown that the performance of memory-one strategies relies on adaptability and not on extortion, and that memory-one strategies’ performance is limited by their memory in cases where they interact with multiple opponents. These results relied on two bespoke data sets of 1000 and 152 pairs of memory-one opponents respectively, archived at24.

A further set of results for Moran processes with a dynamically updating best response player was generated and is archived in29. This confirmed the previous result that high performance requires adaptability and not extortion. It also provides a framework for the future study of the stability of optimal behaviour in evolutionary settings.

In the interactions we have considered here the players do not make mistakes; their actions are executed with perfect accuracy. Mistakes, however, are relevant in the research on repeated games4,30,31,32. In future work we would consider interactions with “noise”. Noise can be incorporated into our formulation and it can be shown that the utility remains a ratio of quadratic forms (for details see the Supplementary Information). Another avenue of investigation would be to understand if and/or when an evolutionary trajectory leads to a best response strategy.

By specifically exploring the entire space of memory-one strategies to identify the best strategy for a variety of situations, this work adds to the literature casting doubt on the effectiveness of ZDs, highlights the importance of adaptability and provides a framework for the continued understanding of these important questions.

References

Flood, M. M. Some experimental games. Manage. Sci. 5, 5–26. https://doi.org/10.1287/mnsc.5.1.5 (1958).

Axelrod, R. & Hamilton, W. D. The evolution of cooperation. Science 211, 1390–1396. https://doi.org/10.1126/science.7466396 (1981).

Nowak, M. & Sigmund, K. The evolution of stochastic strategies in the prisoners dilemma. Acta Appl. Math. 20, 247–265. https://doi.org/10.1007/BF00049570 (1990).

Nowak, M. & Sigmund, K. A strategy of win-stay, lose-shift that outperforms tit-for-tat in the prisoners dilemma game. Nature 364, 56. https://doi.org/10.1038/364056a0 (1993).

Press, W. H. & Dyson, F. J. Iterated prisoners dilemma contains strategies that dominate any evolutionary opponent. Proc. Natl. Acad. Sci. 109, 10409–10413. https://doi.org/10.1073/pnas.1206569109 (2012).

Stewart, A. J. & Plotkin, J. B. Extortion and cooperation in the prisoners dilemma. Proc. Natl. Acad. Sci. 109, 10134–10135. https://doi.org/10.1073/pnas.1208087109 (2012).

Adami, C. & Hintze, A. Evolutionary instability of zero-determinant strategies demonstrates that winning is not everything. Nat. Commun. 4, 2193 (2013).

Hilbe, C., Nowak, M. & Sigmund, K. Evolution of extortion in iterated prisoners dilemma games. Proc. Natl. Acad. Sci. https://doi.org/10.1073/pnas.1214834110 (2013).

Hilbe, C., Nowak, M. & Traulsen, A. Adaptive dynamics of extortion and compliance. PLoS ONE 8, 1–9. https://doi.org/10.1371/journal.pone.0077886 (2013).

Hilbe, C., Traulsen, A. & Sigmund, K. Partners or rivals? strategies for the iterated prisoners dilemma. Games Econ. Behav. 92, 41–52. https://doi.org/10.1016/j.geb.2015.05.005 (2015).

Knight, V., Harper, M., Glynatsi, N. E. & Campbell, O. Evolution reinforces cooperation with the emergence of self-recognition mechanisms: An empirical study of strategies in the moran process for the iterated prisoners dilemma. PLoS ONE 13, 1–33. https://doi.org/10.1371/journal.pone.0204981 (2018).

Lee, C., Harper, M. & Fryer, D. The art of war: Beyond memory-one strategies in population games. PLoS ONE 10, 1–16. https://doi.org/10.1371/journal.pone.0120625 (2015).

Han, T. A., Pereira, L. M. & Santos, F. C. Intention recognition promotes the emergence of cooperation. Adapt. Behav. 19, 264–279. https://doi.org/10.1177/1059712311410896 (2011).

De Weerd, H., Verbrugge, R. & Verheij, B. How much does it help to know what she knows you know? An agent-based simulation study. Artif. Intell. 199, 67–92. https://doi.org/10.1016/j.artint.2013.05.004 (2013).

Devaine, M., Hollard, G. & Daunizeau, J. Theory of mind: Did evolution fool us?. PLoS ONE 9, e87619. https://doi.org/10.1371/journal.pone.0087619 (2014).

Han, T. A., Pereira, L. M. & Santos, F. C. Corpus-based intention recognition in cooperation dilemmas. Artif. Life. https://doi.org/10.1162/ARTL_a_00072 (2012).

Knight, V. A., Harper, M., Glynatsi, N. E. & Gillard, J. Recognising and evaluating the effectiveness of extortion in the iterated prisoners dilemma. Preprint at https://arxiv.org/abs/1904.00973 (2019).

Benureau, F. C. & Rougier, N. P. Re-run, repeat, reproduce, reuse, replicate: Transforming code into scientific contributions. Front. Neuroinform. 11, 69. https://doi.org/10.3389/fninf.2017.00069 (2018).

Glynatsi, N. E. & Knight, V. A. Nikoleta-v3/Memory-size-in-the-prisoners-dilemma: Initial release. Zenodo. https://doi.org/10.5281/zenodo.3533146 (2019).

Nowak, M. & Sigmund, K. Game-dynamical aspects of the prisoners dilemma. Appl. Math. Comput. 30, 191–213. https://doi.org/10.1016/0096-3003(89)90052-0 (1989).

Kepner, J. & Gilbert, J. Graph Algorithms in the Language of Linear Algebra (SIAM, Philadelphia, 2011).

Jonsson, G. & Vavasis, S. Accurate solution of polynomial equations using macaulay resultant matrices. Math. Comput. 74, 221–262 (2005).

Močkus, J. On Bayesian methods for seeking the extremum. In Optimization Techniques IFIP Technical Conference Novosibirsk (ed. Stoe, J.) (Springer, Heidelberg, 1975).

Glynatsi, N. E. & Knight, V. A. Raw data for: A theory of mind. Best responses to memory-one strategies. The limitations of extortion and restricted memory. Zenodo. https://doi.org/10.5281/zenodo.3533094 (2019).

Harper, M. et al. Reinforcement learning produces dominant strategies for the iterated prisoners dilemma. PLoS ONE 12, 1–33. https://doi.org/10.1371/journal.pone.0188046 (2017).

The Axelrod project developers. Axelrod: 4.4.0. Zenodo. https://doi.org/10.5281/zenodo.1168078 (2016).

Nowak, M. A. Evolutionary Dynamics: Exploring the Equations of Life (Harvard University Press, Cambridge, 2006).

Hilbe, C., Chatterjee, K. & Nowak, M. A. Partners and rivals in direct reciprocity. Nat. Hum. Behav. 2, 469–477. https://doi.org/10.1038/s41562-018-0320-9 (2018).

Glynatsi, N. E. & Knight, V. A. Raw data Moran Experiments: A theory of mind Best responses to memory-one strategies. The limitations of extortion and restricted memory. Zenodo. https://doi.org/10.5281/zenodo.4036427 (2020).

Boyd, R. Mistakes allow evolutionary stability in the repeated prisoners dilemma game. J. Theor. Biol. 136, 47–56. https://doi.org/10.1016/s0022-5193(89)80188-2 (1989).

Imhof, L. A., Fudenberg, D. & Nowak, M. A. Tit-for-tat or win-stay, lose-shift?. J. Theor. Biol. 247, 574–580. https://doi.org/10.1016/j.jtbi.2007.03.027 (2007).

Wu, J. & Axelrod, R. How to cope with noise in the iterated prisoners dilemma. J. Conflict Resolut. 39, 183–189. https://doi.org/10.1177/0022002795039001008 (1995).

Tim, H. et al. scikit-optimize/scikit-optimize: v0.5.2. Zenodo. https://doi.org/10.5281/zenodo.1207017 (2018).

Hunter, J. D. Matplotlib: A 2D graphics environment. Comput. Sci. Eng. 9, 90–95. https://doi.org/10.1109/MCSE.2007.55 (2007).

Meurer, A. et al. Sympy: Symbolic computing in python. PeerJ Comput. Sci. https://doi.org/10.7717/peerj-cs.103 (2017).

Van Der Walt, S., Colbert, S. C. & Varoquaux, G. The NumPy array: A structure for efficient numerical computation. Comput. Sci. Eng. 13, 22–30. https://doi.org/10.1109/MCSE.2011.37 (2011).

Acknowledgements

A variety of software libraries have been used in this work: The Axelrod library for IPD simulations26. The Scikit-optimize library for an implementation of Bayesian optimisation33. The Matplotlib library for visualisation34. The SymPy library for symbolic mathematics35. The Numpy library for data manipulation36.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

N.G. and V.K. conceived the idea. N.G. conducted the experiments, N.G. and V.K. analysed the results. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Glynatsi, N.E., Knight, V.A. Using a theory of mind to find best responses to memory-one strategies. Sci Rep 10, 17287 (2020). https://doi.org/10.1038/s41598-020-74181-y

DOI: https://doi.org/10.1038/s41598-020-74181-y
