Abstract

We use a data-driven approach to study the magnetic and thermodynamic properties of van der Waals (vdW) layered materials. We investigate monolayers of the form \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\), based on the known material \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\), using density functional theory (DFT) calculations and machine learning methods to determine their magnetic properties, such as magnetic order and magnetic moment. We also examine formation energies and use them as a proxy for chemical stability. We show that machine learning tools, combined with DFT calculations, can provide a computationally efficient means to predict properties of such two-dimensional (2D) magnetic materials. Our data analytics approach provides insights into the microscopic origins of magnetic ordering in these systems. For instance, we find that the X site strongly affects the magnetic coupling between neighboring A sites, which drives the magnetic ordering. Our approach opens new ways for rapid discovery of chemically stable vdW materials that exhibit magnetic behavior.


Introduction

The discovery of graphene ushered in a new era of studies of materials properties in the two-dimensional (2D) limit1. For many years after this discovery, only a handful of van der Waals (vdW) materials were extensively studied. Recently, over a thousand new 2D crystals have been proposed2,3,4,5. This explosion in the number of known 2D materials increases the demand to probe them for exciting new physics and potential applications6,7. Several 2D materials have already been shown to exhibit a range of exotic properties, including superconductivity, topological insulating behavior and half-metallicity8,9,10,11. Consequently, there is a need for tools that can quickly screen a large number of 2D materials for targeted properties. Traditional approaches, based on sequential quantum mechanical calculations or experiments, are usually slow and costly. Furthermore, a generic approach to designing a crystal structure with any desired property does not yet exist, although it is an active area of research12,13,14,15 and of practical significance. Research towards building structure-property relationships of crystals is in its infancy16,17,18,19.

Long-range ferromagnetism and anti-ferromagnetism in 2D crystals have recently been discovered20,21,22,23,24, sparking a push to understand the properties of these 2D magnetic materials and to discover new ones with improved behavior5,19,25,26,27,28,29,30,31,32. 2D crystals provide a unique platform for exploring the microscopic origins of magnetic ordering in reduced dimensions. According to the Mermin-Wagner theorem33, long-range magnetic order at finite temperature is ruled out in 2D for isotropic short-range interactions, but magnetocrystalline anisotropy can stabilize magnetic ordering34. This magnetic anisotropy is driven by spin-orbit coupling, which depends on the identities and relative positions of the atoms. As a result, the magnetic order should be strongly affected by changes in the structural arrangement of the atoms and in the chemical composition of the crystal.

Chemical instability presents a crucial limitation to the fabrication and use of 2D magnetic materials. For instance, black phosphorus degrades upon exposure to air and thus needs to be handled and stored in vacuum or under an inert atmosphere35. Structural stability is a necessary ingredient for industrial-scale application of magnetic vdW materials, such as \(\hbox {CrI}_3\) and \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\)23,24. In addition to designing 2D materials for desirable magnetic properties, it is therefore important to screen for materials that are chemically stable. In our approach, we employ the calculated formation energy as a proxy for chemical stability36. A recent data-driven study found that the formation energy was one of the most important predictors of 2D MXene stability37, consistent with heuristics identified in the 2D materials community5,38. To calculate the formation energy, we obtain total energies at zero temperature and take the difference between the total energy of the crystal and those of its constituent elements in their respective elemental crystal phases. This quantity indicates whether the structure is thermodynamically stable or would decompose. This formulation ignores the effects of zero-point vibrational energy and entropy on stability.

While the formation energy provides evidence for thermodynamic stability, dynamic stability can also be assessed. Dynamic instability is signaled by imaginary phonon frequencies (conventionally plotted as negative) in the phonon spectrum of the relaxed 0 K structure. A spectrum with no imaginary frequencies indicates a structure that is stable against small perturbations, suggesting that a freestanding monolayer may be stable and experimentally accessible5.

The magnetic properties at finite temperatures are also important. There is growing interest in identifying 2D materials with magnetic order above room temperature, for both fundamental research and device applications29,39. Consequently, it is desirable to build a tool that screens for 2D materials that are ferromagnetic at elevated temperatures. In our study, we analyze the magnetic excitation energy, along with the magnetic anisotropy, to estimate the magnetic properties at finite temperatures.

Recently, machine learning (ML) has been combined with traditional methods (experiments and ab initio calculations) to advance rapid materials discovery2,3,36,40,41,42,43,44,45. ML models trained on a limited set of structures can predict the properties of a much larger set of materials. In particular, there is growing interest in exploiting ML for the discovery of magnetic materials27,46. ML studies of ferromagnetism in transition metal alloys have highlighted the importance of data analytics techniques for tackling problems in condensed matter physics46. In addition, high-throughput DFT studies of layered transition metal carbides and nitrides, known as MXenes, rely on tuning the atomic composition of known MXenes to identify new ferromagnetic phases32,47. Furthermore, recent work uses ML models to optimize the chemical composition of magnetic materials with three-dimensional crystal structures27. It is therefore conceivable that tuning the atomic composition could provide an additional degree of freedom in the search for stable 2D materials with interesting magnetic properties48. Even more compelling is the prospect of ML tools helping to uncover the physics underlying the stability and magnetism of 2D materials49,50. Specifically, ML methods can identify patterns in a high-dimensional space, revealing relationships that could otherwise be missed51,52.

Methodology

In order to develop a path towards discovering 2D magnetic materials, we generate a database of structures based on monolayer \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\) (Fig. 1a) using density functional theory (DFT) calculations that include non-collinear spin and spin-orbit interactions. The number of possible structures of type \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) with different elements occupying the A, B and X sites is combinatorially large (\(\sim 10^4\)). Due to computational constraints, we select an initial subset of 198 structures (and calculate additional structures at a later stage). We obtain the total energy, magnetic order, and magnetic moment of each structure. The ground-state properties were determined by comparing the energies of the fully optimized structure in several spin configurations, including non-spin-polarized, parallel, and anti-parallel spin orientations at the A sites (Fig. 1b). The energy difference between the parallel and anti-parallel spin configurations, the magnetic excitation energy, is an excited-state property linked to the stability of magnetic order at finite temperatures. Using the Heisenberg model on a honeycomb lattice, we extract the effective exchange energies J for the set of structures from their corresponding magnetic excitation energies53. The Curie temperatures can be estimated using analytical methods that involve J as well as the magneto-crystalline anisotropy (MCA)5,54. The MCA is estimated by calculating the magnetic anisotropy energy5,55 (see Supplementary Information S1).
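To make the extraction of J concrete, here is a minimal sketch under assumptions that are ours and not necessarily the normalization of Ref. 53: a Heisenberg Hamiltonian \(H = -J\sum_{\langle ij\rangle}\mathbf{S}_i\cdot\mathbf{S}_j\) with each nearest-neighbor bond counted once, classical spins of length \(S_1\) and \(S_2\) on the two A sites, and three A-A bonds per honeycomb unit cell. The anti-parallel configuration of the two-site cell is then the Néel state, so \(E_{\text{parallel}} = -3JS_1S_2\), \(E_{\text{anti-parallel}} = +3JS_1S_2\), and \(J = -(E_{\text{parallel}}-E_{\text{anti-parallel}})/(6S_1S_2)\). The function name and the example energy are hypothetical.

```python
def exchange_from_excitation(delta_e_ev: float, s1: float, s2: float,
                             n_bonds: int = 3) -> float:
    """Nearest-neighbour exchange J (eV) from E_parallel - E_anti-parallel (eV/cell).

    Assumed (hypothetical) convention:
      H = -J * sum_<ij> S_i . S_j, each bond counted once,
      classical spins of length s1 and s2 on the two A sites,
      n_bonds A-A bonds per unit cell (3 for a two-site honeycomb cell).
    Then E_parallel = -n_bonds*J*s1*s2 and E_anti-parallel = +n_bonds*J*s1*s2,
    so delta_e = E_parallel - E_anti-parallel = -2*n_bonds*J*s1*s2.
    """
    if s1 <= 0 or s2 <= 0:
        raise ValueError("spin lengths must be positive")
    return -delta_e_ev / (2 * n_bonds * s1 * s2)


# Hypothetical excitation energy with S = 3/2 on both A sites.
# In this sign convention J > 0 means ferromagnetic coupling.
j = exchange_from_excitation(-0.054, 1.5, 1.5)
print(f"J = {1000 * j:.1f} meV")
```

Anisotropy and constant energy offsets cancel out of this difference, which is why only the nearest-neighbor term survives; longer-range couplings would require additional spin configurations and a larger supercell.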

We then employ a set of materials descriptors that are built from easily attainable atomic properties and are suitable for describing magnetic phenomena. We employ additional descriptors that are related to the formation energy56. How well these descriptors predict the magnetic properties or the thermodynamic stability sheds light on the origin of these properties.

To create the database, we use DFT calculations with the VASP code57. We used the Perdew-Burke-Ernzerhof (PBE) generalized gradient approximation (GGA) for the exchange-correlation functional58. The plane-wave energy cutoff was 300 eV for the initial set of calculations and was increased to 450 eV at a later stage. The vacuum region was thicker than 20 Å. The atoms were fully relaxed until the force on each atom was smaller than 0.01 eV/Å. A \(\Gamma\)-centered \(10\times 10 \times 1\) k-point mesh was used.
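The settings above can be expressed as VASP input files. The sketch below is a hypothetical reconstruction, not the authors' input: the cutoff, force criterion, functional, spin-orbit coupling and k-point mesh follow the text, whereas IBRION and NSW are our own illustrative choices. The 20 Å vacuum belongs in the POSCAR geometry (not shown), and the cell-relaxation tag ISIF is left at its default, which may not match the full relaxation described.

```python
def make_incar(encut_ev: float = 450.0, force_tol: float = 0.01) -> str:
    """Return an INCAR string for a relaxation with spin-orbit coupling."""
    tags = {
        "GGA": "PE",                  # PBE exchange-correlation functional
        "ENCUT": f"{encut_ev:.0f}",   # plane-wave cutoff in eV (300 or 450 in the text)
        "LSORBIT": ".TRUE.",          # spin-orbit coupling; implies a non-collinear run
        "IBRION": "2",                # conjugate-gradient ionic relaxation (our choice)
        "NSW": "200",                 # maximum number of ionic steps (hypothetical)
        "EDIFFG": f"{-force_tol:g}",  # negative: stop when all forces < |EDIFFG| eV/Angstrom
    }
    return "".join(f"{key} = {value}\n" for key, value in tags.items())


def make_kpoints(n1: int = 10, n2: int = 10, n3: int = 1) -> str:
    """Return a KPOINTS string for an automatically generated Gamma-centred mesh."""
    lines = ["Gamma-centred mesh", "0", "Gamma", f"{n1} {n2} {n3}", "0 0 0"]
    return "\n".join(lines) + "\n"


print(make_incar())
print(make_kpoints())
```

Runs with LSORBIT require the non-collinear VASP executable, and initial magnetic moments (MAGMOM, three components per atom) would still have to be set for each spin configuration.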

We create the different structures by substituting one of the two Cr atoms (A sites) in the unit cell with a transition metal atom from the list: Ti, V, Cr, Mn, Fe, Co, Ni, Cu, Y, Nb, and Ru. On the two B sites we place combinations of Ge, Si, and P atoms, namely \(\hbox {Ge}_2\), GeSi, GeP, \(\hbox {Si}_2\), SiP, and \(\hbox {P}_2\). The X sites are all occupied by S, Se, or Te, that is, \(\hbox {S}_6\), \(\hbox {Se}_6\), or \(\hbox {Te}_6\). Figure 1c highlights the substituted elements in the Periodic Table. An example of a structure created through this process is (CrTi)(SiGe)\(\hbox {Te}_6\).
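The substitution scheme can be enumerated directly: 11 A-site choices, 6 B-site pairs and 3 X-site choices give \(11 \times 6 \times 3 = 198\) combinations, which appears to match the initial subset of 198 structures. The labeling function below is our own illustrative convention (it writes GeSi rather than SiGe, for example).

```python
from itertools import product

# Substitution lists from the text; the other A site always keeps Cr.
A_SUBS = ["Ti", "V", "Cr", "Mn", "Fe", "Co", "Ni", "Cu", "Y", "Nb", "Ru"]
B_PAIRS = [("Ge", "Ge"), ("Ge", "Si"), ("Ge", "P"),
           ("Si", "Si"), ("Si", "P"), ("P", "P")]
X_SITES = ["S", "Se", "Te"]


def formula(a: str, b: tuple, x: str) -> str:
    """Build a label such as '(CrTi)(GeSi)Te6' or 'Cr2Ge2Te6'."""
    a_part = "Cr2" if a == "Cr" else f"(Cr{a})"
    b_part = f"{b[0]}2" if b[0] == b[1] else f"({b[0]}{b[1]})"
    return f"{a_part}{b_part}{x}6"


structures = [formula(a, b, x) for a, b, x in product(A_SUBS, B_PAIRS, X_SITES)]
print(len(structures))
```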

The careful choice of descriptors is essential for the success of any ML approach59,60. We use atomic properties from the Python package mendeleev (version 0.4.1)61 to build descriptors for our ML models. We performed supervised learning with these atomic-property descriptors as inputs and with the magnetic moment, the magnetic excitation energy and the formation energy as target properties. The choice of descriptors for the magnetic properties was motivated by the Pauli exclusion principle, which gives rise to the exchange and super-exchange interactions. We also account for the magneto-crystalline anisotropy62 by building inter-atomic distances and electronic orbital information into our descriptors. For the formation energy, the choice of descriptors was motivated, in part, by the extended Born-Haber model56, and includes the dipole polarizability, the ionization energy and the atomic radius (see Supplementary Information S1 for a full list of atomic properties and descriptors used).
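Descriptors such as the average, variance and maximum difference of an atomic property (compare the labels "avg", "var" and "max dif" in Fig. 3b) can be built as composition statistics. The sketch assumes the statistics run over all ten atoms of the \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) cell with equal weight; the exact definitions are given in Supplementary Information S1. The valence-electron counts below follow the group-number convention for illustration and are not values read from mendeleev.

```python
from statistics import fmean, pvariance
from typing import Dict


def composition_stats(composition: Dict[str, int],
                      prop: Dict[str, float]) -> Dict[str, float]:
    """Average, population variance and max difference of an atomic property
    over all atoms in the unit cell (each atom weighted equally)."""
    values = [prop[el] for el, count in composition.items() for _ in range(count)]
    return {
        "avg": fmean(values),
        "var": pvariance(values),
        "max_dif": max(values) - min(values),
    }


# Illustrative valence-electron counts (group-number convention).
n_valence = {"Cr": 6, "Ge": 4, "Te": 6}
print(composition_stats({"Cr": 2, "Ge": 2, "Te": 6}, n_valence))
```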

The data were randomly divided into training, cross-validation and test sets. The training and cross-validation sets together typically comprised 60% of the data, and the test set the remaining 40%. We employed the following ML models: kernel ridge regression, extra trees regression, support vector classification, and neural networks. Kernel ridge regression with a Gaussian kernel has been successful in several materials informatics studies. Extra trees regression allows us to determine the relative importance of the descriptors used in a successful model63. A support vector classifier was used to predict the low-energy magnetic order64. An analysis of the hidden layers of deep neural networks could allow us to identify patterns in 2D materials property data, thereby guiding theoretical studies50,52.
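A minimal, dependency-free sketch of the 60/40 random split and of kernel ridge regression with a Gaussian kernel follows. It solves \((K + \lambda I)\alpha = y\) with \(K_{ij} = \exp(-\gamma\lVert x_i-x_j\rVert^2)\) and predicts \(f(x) = \sum_i \alpha_i \exp(-\gamma\lVert x-x_i\rVert^2)\). The hyperparameters gamma and lam are placeholders, the cross-validation used to tune them is omitted, and the toy data are not from the paper; in practice a library implementation would normally be used.

```python
import math
import random
from typing import List, Sequence, Tuple


def split_indices(n: int, train_frac: float = 0.6,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly split range(n) into training and test indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(train_frac * n)
    return idx[:cut], idx[cut:]


def gaussian_kernel(x: Sequence[float], z: Sequence[float], gamma: float) -> float:
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class GaussianKRR:
    """Kernel ridge regression: alpha = (K + lam * I)^-1 y."""

    def __init__(self, gamma: float = 1.0, lam: float = 1e-6):
        self.gamma, self.lam = gamma, lam

    def fit(self, X: List[List[float]], y: List[float]) -> "GaussianKRR":
        n = len(X)
        K = [[gaussian_kernel(X[i], X[j], self.gamma) + (self.lam if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        self.X_train = [list(row) for row in X]
        self.alpha = solve(K, list(y))
        return self

    def predict(self, X: List[List[float]]) -> List[float]:
        return [sum(a * gaussian_kernel(x, xt, self.gamma)
                    for a, xt in zip(self.alpha, self.X_train)) for x in X]


# Toy usage (not from the paper): learn y = x^2 on a 1D grid with a 60/40 split.
X = [[0.2 * i] for i in range(20)]
y = [row[0] ** 2 for row in X]
train, test = split_indices(len(X))
model = GaussianKRR(gamma=1.0, lam=1e-6).fit([X[i] for i in train], [y[i] for i in train])
pred = model.predict([X[i] for i in test])
print("test MAE:", sum(abs(p - y[i]) for p, i in zip(pred, test)) / len(test))
```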

Results and discussion

Magnetic properties

(a) Energy difference between parallel and anti-parallel spin configurations (\(E_{\text {parallel}}-E_{\text {anti-parallel}}\) in \(\hbox {eV}/\hbox {unit cell}\)) of \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures. Purple (black) indicates a spin flip to the anti-parallel (parallel) configuration during the DFT calculation, which makes the magnetic excitation energy inaccessible. (b) Magnetic moment per unit cell (in \(\mu _B\)) of each \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structure in the lowest-energy spin configuration. The occupation of the two B sites is shown on the horizontal axis and that of one of the A sites on the vertical axis.

We find that the non-spin-polarized configuration has the highest energy for all the structures considered; that is, all structures prefer either parallel or anti-parallel ordering in the A plane. Figure 2a shows the energy difference between the parallel and anti-parallel spin configurations. A negative (positive) energy difference means that the parallel (anti-parallel) configuration is more stable. We note that, because of the limited supercell size, we do not consider more complex spin configurations in this study. For example, the lowest-energy spin configuration of \(\hbox {Cr}_2\hbox {Si}_2\hbox {Te}_6\) was reported to be zigzag anti-ferromagnetic53. We find that for some structures, spin configurations initialized in one class may switch to the other during the calculation. In other words, the higher-energy spin configuration cannot always be constrained, which makes its energy difficult to obtain. In this case, we expect a large magnitude for the magnetic excitation energy, although its value is unknown. In Fig. 2a, purple (black) marks structures for which the calculation flipped to the anti-parallel (parallel) configuration.
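The sign convention and the spin-flip cases can be summarized in a short helper. This is a sketch under our own assumptions: a run that flips into one configuration is labeled with that configuration (its excitation energy is left unknown), and the degeneracy tolerance is a hypothetical value not given in the text.

```python
from typing import Optional

DEGENERACY_TOL = 1e-3  # eV/cell; hypothetical threshold for calling two states degenerate


def lowest_energy_order(delta_e: Optional[float] = None,
                        flipped_to: Optional[str] = None,
                        tol: float = DEGENERACY_TOL) -> str:
    """Label the lower-energy spin configuration of one structure.

    delta_e    -- E_parallel - E_anti-parallel in eV/cell (None if unavailable).
    flipped_to -- 'parallel' or 'anti-parallel' if a run initialised in the
                  other configuration relaxed into this one (delta_e unknown).
    """
    if flipped_to is not None:
        if flipped_to not in ("parallel", "anti-parallel"):
            raise ValueError(f"unknown configuration: {flipped_to!r}")
        # Assumption: the configuration the run relaxes into is the lower-energy one.
        return flipped_to
    if delta_e is None:
        raise ValueError("delta_e is required when no spin flip occurred")
    if abs(delta_e) < tol:
        return "degenerate"
    return "parallel" if delta_e < 0 else "anti-parallel"
```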

This energy difference between the parallel and anti-parallel spin configurations, the magnetic excitation energy, is used not only to determine the magnetic order of a structure but also to estimate the effective magnetic coupling strength J through a Heisenberg model with nearest-neighbor couplings. The magnetic excitation energy, together with the magnetic anisotropy energy (a key ingredient of magnetism in two dimensions54), is used to estimate the Curie temperature. Table 1 lists a few examples of structures with Curie temperatures higher than that of \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\).
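The analytical estimates of Refs. 5,54, which combine J with the anisotropy, are not reproduced here. As a deliberately crude substitute, the sketch below evaluates the strong-anisotropy (Ising) limit on the honeycomb lattice, assuming \(H = -J\sum_{\langle ij\rangle}\mathbf{S}_i\cdot\mathbf{S}_j\) with each bond counted once and classical spins \(S_1\), \(S_2\) on the two A sites, so that the effective Ising coupling is \(JS_1S_2\). The exact honeycomb Ising critical point satisfies \(\tanh K_c = 1/\sqrt{3}\), i.e. \(K_c = \tfrac{1}{2}\ln(2+\sqrt{3}) \approx 0.658\) with \(K_c = JS_1S_2/(k_BT_C)\). Since weaker anisotropy pushes \(T_C\) toward zero (the isotropic Mermin-Wagner limit), this number is roughly an upper bound for a given J and is not the estimate reported in Table 1.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K


def ising_honeycomb_tc(j_ev: float, s1: float, s2: float) -> float:
    """Curie temperature (K) in the Ising limit of H = -J sum_<ij> S_i.S_j
    on a honeycomb lattice with classical spin lengths s1 and s2.

    Uses the exact honeycomb Ising critical coupling K_c = ln(2 + sqrt(3)) / 2,
    where K_c = J_eff / (k_B * T_C) and J_eff = J * s1 * s2.
    """
    j_eff = j_ev * s1 * s2
    if j_eff <= 0:
        raise ValueError("ferromagnetic coupling (J > 0) required in this convention")
    k_c = 0.5 * math.log(2.0 + math.sqrt(3.0))
    return j_eff / (k_c * K_B)


# Hypothetical J = 4 meV with S = 3/2 on both A sites.
print(f"T_C (Ising limit) = {ising_honeycomb_tc(0.004, 1.5, 1.5):.0f} K")
```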

Total magnetic moments for the lowest-energy spin configuration of each structure are presented in Fig. 2b. Fourteen structures have magnetic moments higher than that of \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\). Examples include \(\hbox {(CrMn)Si}_2\hbox {Te}_6\), \(\hbox {(CrFe)(SiP)Se}_6\), and \(\hbox {(CrFe)(GeP)S}_6\), which exhibit magnetic moments of up to \(7\,\mu _B\) per unit cell. We find that only the atoms on the A sites carry significant magnetic moments, while the moments on the B and X sites are small. Distinct patterns of high and low magnetic moments are observed for X = Te, Se and S in Fig. 2b. Structures created by substituting a non-magnetic atom, such as Cu, on the A site show small variations in their relatively small magnetic moments, as seen in the rows of Fig. 2b. In contrast, substituting a magnetic atom, such as Mn, yields a set of structures with a large variation in the magnetic moment and a much higher upper limit to the range of observed values.

Both the magnetic order and the magnetic moment are sensitive to the occupancy of the B and X sites, even though the atoms on these sites contribute negligibly to the overall magnetic moment. Atoms on the X sites strongly mediate the magnetic coupling between neighboring A sites53. Atoms on the B sites can affect the relative positions of the A and X sites. Direct exchange between first nearest-neighbor A sites competes with super-exchange interactions mediated by the \(\textit{p}\)-orbitals at the X sites. The ground-state magnetic order is determined by the interplay between first, second and third nearest-neighbor interactions. Changing the atom on an A, B or X site affects the interplay between the direct exchange and super-exchange interactions. Recent work has shown that applying strain to the \(\hbox {Cr}_2\hbox {Si}_2\hbox {Te}_6\) lattice tunes the first nearest-neighbor interaction, changing the magnetic ground state from zig-zag antiferromagnetic to ferromagnetic53. Our work demonstrates that tuning the composition of the \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) lattice can have an equivalent effect. For instance, whereas more X = Te structures have a parallel (\(\bar{{\bar{P}}}\)) than an anti-parallel (anti-\(\bar{{\bar{P}}}\)) lowest-energy spin configuration, this clearly changes when X \(=\) Se or S. As X moves up the periodic table, there are increasingly more regions of anti-parallel spin configuration, as well as regions in which \(\bar{{\bar{P}}}\) and anti-\(\bar{{\bar{P}}}\) are degenerate. In particular, we find that the distances between nearest-neighbor A and X sites, and between two adjacent X sites, are linked to the magnitude of the magnetic moment (see Supplementary Information S1 for details).

We use extra trees regression63 to approximate the relationship between the total magnetic moment and a set of descriptors designed for magnetic property prediction (see Supplementary Information S1). The models are trained and tested separately on the X = Te, Se, and S structures. The model performance for X = Te, using a data set of size \(N = 262\) (see Supplementary Information S1), is shown in Fig. 3a. We find reasonable prediction performance for X = Te, which deteriorates for X = Se and is even worse for X = S. This suggests that our model, together with the set of descriptors used for the X = Te structures, does not readily extend to the X = Se and S structures. This could arise because more X = Se and S structures than X = Te structures have degenerate \(\bar{{\bar{P}}}\) and anti-\(\bar{{\bar{P}}}\) spin configurations. Furthermore, the magnetic moments of X = Se and S structures vary more across B-site occupations than those of X = Te structures. These variations likely pose a learning challenge for statistical models. Subgroup discovery65 indicates that the identity of the X site strongly affects the magnetic properties of the structures. We included a modified Bag of Bonds descriptor66 to capture the orbital overlap between adjacent sites. The poor performance for X = Se and S may stem from the descriptor's lack of the second and third nearest-neighbor interactions that are important in determining magnetic couplings.

ML predictions of magnetic moments of \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures. (a) Extra trees model performance for the magnetic moment (in \(\mu _B\)) prediction. A subset of structures for X = Te are displayed. The red squares indicate the test data, the green circles show the training data. (b) Top six descriptors for the extra trees prediction of the magnetic moment. The size of the bar indicates relative descriptor importance (see text for details).

Determining which descriptors are most important for making good predictions of a property can be exploited for knowledge discovery, especially when a large number of descriptors are available but their relationships with the target property are not known67. Figure 3b shows the descriptor importances64 derived from extra trees regression. The top six descriptors in the set examined are: (i) the ‘average number and variance of spin-up electrons’ (“Nup avg” and “Nup var” in Fig. 3b), which are linked to the atomic magnetic moments, (ii) the ‘chemical space value’ (“cs BoB”, a modified Bag of Bonds descriptor66, see Supplementary Information S1), (iii) the ‘maximum difference and variance of the valence electron number’ (“nvalence max dif” and “nvalence var”), and (iv) the ‘average dipole polarizability’ (“dipole avg”). The magnetic moment per unit cell is a function of the magnetic moments of the individual atoms in the unit cell. However, determining the exact value and orientation of the magnetic moment \(\mu\) localized at each site is not trivial. We examine the local magnetic moments at the A sites to determine how the magnetic moment per unit cell is built up. The local magnetic moment at the A sites (\(\hbox {A}_{\text {Cr}}\) and \(\hbox {A}_{\text {TM}}\)) can differ from the atomic magnetic dipole moment of the corresponding element. For instance, while the atomic magnetic moment of \(\hbox {Cr}^{3+}\) is \(3~\mu _B\), the local magnetic moment at \(\hbox {A}_{\text {Cr}}\) ranges from 2.7 to \(3.2~\mu _B\). Figure 4a shows the local magnetic moment at \(\hbox {A}_{\text {TM}}\). The atomic magnetic moment can be roughly regarded as an upper limit of the magnetic moment of the corresponding lattice site in a compound. It is also linked to the magnetic moment per unit cell.
The model prediction error for the magnetic moment per unit cell increases by only 1% when the atomic magnetic moments are excluded from the set of descriptors. This suggests redundancy in the descriptor space: the information carried by the atomic magnetic moments is presumably captured by other descriptors, such as the number of spin-up electrons. For properties governed by complex physics, such redundancy seems inevitable.
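Excluding a descriptor and retraining, as described above, is a drop-column test. A cheaper, related diagnostic, shown here as a swapped-in technique rather than the authors' procedure, is permutation importance on a fixed model: scramble one descriptor column and record how much the mean absolute error (MAE) rises. To keep the sketch deterministic, each column is rotated by one row (a fixed permutation that moves every value) instead of being shuffled randomly; predict is any hypothetical callable mapping descriptor rows to predictions.

```python
from statistics import fmean
from typing import Callable, List, Sequence

Rows = List[List[float]]


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return fmean(abs(t - p) for t, p in zip(y_true, y_pred))


def permutation_importance(predict: Callable[[Rows], List[float]],
                           X: Rows, y: Sequence[float]) -> List[float]:
    """MAE increase when each descriptor column is rotated by one row."""
    baseline = mae(y, predict(X))
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rotated = column[1:] + column[:1]  # every value moves to another row
        X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, rotated)]
        scores.append(mae(y, predict(X_perm)) - baseline)
    return scores


# Toy check: a model that uses only the first descriptor.
X = [[1.0, 5.0], [2.0, 3.0], [3.0, 8.0], [4.0, 1.0]]
y = [2.0 * row[0] for row in X]
print(permutation_importance(lambda rows: [2.0 * r[0] for r in rows], X, y))
```

Because the model is not retrained, permutation and drop-column importances can disagree when descriptors are redundant, which is exactly the situation the 1% result points to.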

Furthermore, we use the magnetic excitation energy data in Fig. 2a to train a support vector classification model that predicts the ground-state magnetic order of \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures. The ground state is FM (AFM) if the magnetic excitation energy is negative (positive). We achieve an \(82\%\) success rate for the prediction of ferromagnetic order and an \(80\%\) success rate for antiferromagnetic order (see Supplementary Information S1). Attempts to use a regression model to predict the magnitude of the magnetic excitation energy were not successful, perhaps due to missing physics in the descriptor space or to insufficient training data to learn the complex underlying physics.
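The labels and the per-class scores can be computed as sketched below. We assume that "success rate" means the fraction of structures of each true class that are classified correctly (per-class recall); the text does not define it further. A zero excitation energy is assigned to AFM here, an arbitrary choice, and the toy labels are illustrative.

```python
from collections import Counter
from typing import Dict, List, Sequence


def order_labels(delta_e: Sequence[float]) -> List[str]:
    """FM if E_parallel - E_anti-parallel < 0, otherwise AFM."""
    return ["FM" if d < 0 else "AFM" for d in delta_e]


def per_class_success(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Fraction of structures of each true class that are predicted correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


# Illustrative labels only.
truth = ["FM"] * 5 + ["AFM"] * 5
guess = ["FM"] * 4 + ["AFM"] + ["AFM"] * 4 + ["FM"]
print(per_class_success(truth, guess))
```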

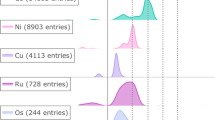

(a) Local magnetic moment of the transition metal A site, \(\hbox {A}_{\text {TM}}\) (in \(\mu _{\text {B}}\)). (b) Formation energy (in eV/cell) for \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures at the lowest energy spin configuration. Conventions are the same as in Fig. 2.

Formation energy

In addition to identifying structures with specific magnetic properties, it is important to be able to screen for chemical stability. DFT-calculated formation energies (for the lowest-energy spin configuration) are shown in Fig. 4b. We note that the formation energy in this work is referenced to the corresponding elemental phases. Because DFT errors typically do not cancel between pure elemental phases and compounds, the absolute values of the formation energy may carry systematic errors. This could be improved in future work by using fitted elemental-phase reference energies68. At present, we do not use the energies of competing compound phases to calculate formation energies, due to a lack of information about the competing phases in the synthesis (see Supplementary Information S1).
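The elemental-reference formation energy amounts to \(E_f = E_{\text{tot}} - \sum_i n_i\,\mu_i\), where \(n_i\) is the number of atoms of element \(i\) in the cell and \(\mu_i\) is its total energy per atom in the elemental crystal phase. The sketch below evaluates this expression; all energies in the example are made-up placeholders, not DFT results from this work.

```python
from typing import Dict


def formation_energy(e_total: float, composition: Dict[str, int],
                     mu: Dict[str, float]) -> float:
    """E_f = E_total - sum_i n_i * mu_i, in eV per cell.

    e_total     -- DFT total energy of the A2B2X6 cell (eV)
    composition -- number of atoms of each element in the cell
    mu          -- energy per atom of each element in its elemental phase (eV/atom)
    """
    missing = set(composition) - set(mu)
    if missing:
        raise KeyError(f"no elemental reference energy for {sorted(missing)}")
    return e_total - sum(n * mu[el] for el, n in composition.items())


# Placeholder numbers only: a negative E_f means the compound lies below
# its elemental phases in energy.
cell = {"Cr": 2, "Ge": 2, "Te": 6}
refs = {"Cr": -9.5, "Ge": -4.5, "Te": -3.0}
print(formation_energy(-48.0, cell, refs))
```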

Substituting certain elements, such as Y, lowers the formation energy considerably compared with structures that do not contain them. Certain transition metals, such as Cu, tend to destabilize the \(\hbox {(CrA)B}_2\hbox {X}_6\) structures. The formation energy \(E_f\) becomes less negative as the substituted atom on the A site moves from left to right across the first and second rows of transition metals in the Periodic Table. This is linked to the filling of the d shell: elements with a filled d shell bond less readily with other elements. Varying the composition at the B site does not appear to have a strong impact on the formation energy (see Supplementary Information, Fig. S1). Changing the X site from Te to Se and then to S lowers the formation energy overall.

To exploit the trends in the formation energy data, we use statistical models to predict the formation energy and to infer structure-property relationships. We find that some descriptors, such as the atomic dipole polarizability, are strongly correlated with the formation energy and are therefore important for generating good ML predictions. Since useful descriptors are not always revealed by an analysis of the Pearson correlation coefficient67, we also consider other methods to learn descriptor importances, such as the extra trees model64. Using ML models to predict the formation energy of \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures permits quick estimates for a large set of compounds: whereas DFT calculations of \(10^4\) structures could require much more than 1 million CPU hours, the ML prediction takes a few seconds. Figure 5a shows the prediction performance of kernel ridge regression with a Gaussian kernel. Figure 5b shows the performance of a neural network (implemented in TensorFlow69, with three hidden layers of 10, 30 and 10 units), and Fig. 5c shows the performance of extra trees regression. Both training and test set results are displayed, together with the test scores for kernel ridge regression, extra trees regression, and neural network regression.

Formation energy prediction performance of (a) kernel ridge regression, (b) deep neural network regression and (c) extra trees regression. Red squares are test data and green circles training data. (d) Performance of the extra trees regression model on the test data as the training set size increases, in terms of the \(\hbox {R}^2\) and mean absolute error (MAE) scores.

(a) ML-predicted formation energies (in eV/cell) for a wide range of substitutions not included in the DFT data set, covering 4223 new structures (570 are shown here). (b) First-step ML-predicted magnetic moments (in \(\mu _B\)) for the same 4223 new structures (190 are shown here, for X = Te). Conventions are the same as in Fig. 2.

Further analysis (see Supplementary Information S1) shows that the ‘variance in the ionization energy of atoms’ and the ‘average number of valence electrons’ are the two most important descriptors in the set examined. This points to a link between the formation energy and the atomic ionization energy, which may arise because increased atomic ionizability produces stronger chemical bonding. In addition, the number of valence electrons is linked to the number of electrons available for bonding. For instance, substituting atoms with a filled outer shell creates less stable bonds, leading to chemical instability. The high scores on the test data demonstrate the ability of our models to generalize. We further examined how the test set performance varies with the training set size. Figure 5d shows the test scores as a function of training set size for extra trees regression. The test score reaches a plateau at a training set size of about 40%, with a test \(\hbox {R}^2\) as high as 0.91.
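For reference, the two scores in Fig. 5d follow the standard definitions \(\mathrm{MAE} = \frac{1}{n}\sum_i |y_i-\hat y_i|\) and \(R^2 = 1 - \sum_i (y_i-\hat y_i)^2 / \sum_i (y_i-\bar y)^2\), sketched below. A learning curve repeats the fit for increasing training-set fractions and evaluates both scores on a fixed test set; that loop is omitted, and the example values are illustrative.

```python
from statistics import fmean
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return fmean(abs(t - p) for t, p in zip(y_true, y_pred))


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean = fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for constant targets")
    return 1.0 - ss_res / ss_tot


print(r2_score([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 2.5, 4.5]))
```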

High-throughput screening using ML models

We can use our trained ML models to make predictions on a wide range of structures not included in the original DFT data set. Thus far, we have used our ML models to estimate the formation energy of an additional 4,223 \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures, constructed as follows: (i) for the A sites, we considered transition metals not used in the DFT data set; (ii) for the B sites, we added Al, Sn and Pb to the set of substitutions (not shown); (iii) for the X sites, we added O to our previous choices of S, Se and Te. The resulting predictions, partly shown in Fig. 6a, provide a means to quickly screen a large set of structures for chemical stability. For instance, our ML predictions suggest that structures with Er, Ta, Hf, Mo, Zr, or Sc on the A site and Al on the B sites are likely to be stable and are thus good candidates for further exploration.

We use a two-step process to find materials with high magnetic moments. In the first step, a regression model trained on a small data set (\(N = 66\)) was used to estimate the magnetic moment. The magnetic moment predictions are shown in Fig. 6b. From the ML predictions, we selected structures with formation energies below \(-1.0\hbox { eV}\) and magnetic moments above \(5~\mu _B\) (for X = Te only). Of the 4223 predictions, 40 satisfied these constraints, and 15 of these were randomly selected for DFT verification. The 15 structures have relatively low formation energies and high magnetic moments, but only 5 of them satisfy the hard cutoffs (formation energy lower than \(-1.0\hbox { eV}\) and magnetic moment higher than \(4.5\,\mu _B\)).
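The first-step selection rule amounts to a filter followed by random sampling. The Prediction record, the function names and the candidate entries below are hypothetical placeholders; only the thresholds (formation energy below -1.0 eV, moment above 5 μB, X = Te) and the batch size of 15 come from the text.

```python
import random
from typing import List, NamedTuple


class Prediction(NamedTuple):
    name: str
    x_site: str
    e_form_ev: float   # ML-predicted formation energy (eV/cell)
    moment_mub: float  # ML-predicted magnetic moment (mu_B/cell)


def screen(preds: List[Prediction], e_max: float = -1.0, mu_min: float = 5.0,
           x_site: str = "Te") -> List[Prediction]:
    """Keep candidates with E_f < e_max and moment > mu_min on the given X site."""
    return [p for p in preds
            if p.x_site == x_site and p.e_form_ev < e_max and p.moment_mub > mu_min]


def pick_for_dft(hits: List[Prediction], k: int = 15, seed: int = 0) -> List[Prediction]:
    """Randomly choose up to k screened candidates for DFT verification."""
    return random.Random(seed).sample(hits, min(k, len(hits)))


# Hypothetical predictions, not results from this work.
cands = [Prediction("cand-1", "Te", -1.3, 5.8), Prediction("cand-2", "Te", -0.8, 6.0),
         Prediction("cand-3", "Se", -2.0, 6.5), Prediction("cand-4", "Te", -1.5, 4.0)]
print(pick_for_dft(screen(cands)))
```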

The second round of model training included the additional 15 structures calculated by DFT, and this improved model was then used to predict the magnetic moment. Remarkably, DFT verified that all the candidate structures predicted by the new model meet the criteria. They are \(\hbox {(CrMo)Si}_2\hbox {Te}_6\) (\(E_\text {f} = -1.32\hbox { eV}\), \(\mu = 6.00\,\mu _B\)), \(\hbox {(CrW)Si}_2\hbox {Te}_6\) (\(E_\text {f} = -1.11\hbox { eV}\), \(\mu = 5.89\,\mu _B\)), and \(\hbox {(CrMo)(SiP)Te}_6\) (\(E_\text {f} = -1.10\hbox { eV}\), \(\mu = 5.01\,\mu _B\)). This shows that the model can be substantially improved by feeding it accurate DFT data for structures close to the region of composition space associated with the desired properties. The first step narrows down the target region where candidate systems are more likely to appear. By sampling this region with DFT and adding the results to the training data in the second step, the resulting model learns to distinguish the desirable structures more accurately. This two-step (or, more generally, iterative) method, analogous to active learning70, can build accurate models for materials discovery from limited quantities of training data.
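The two-step procedure generalizes to an active-learning-style loop: fit a model on all labeled data, screen the unlabeled pool, label a random batch of predicted hits with the expensive method, and refit. The sketch is generic and hypothetical: fit, oracle (standing in for DFT) and is_hit are user-supplied callables, and the toy problem at the end is ours, not the paper's.

```python
import random
from typing import Callable, Dict, Hashable, List, Tuple

Model = Callable[[Hashable], float]


def iterative_screening(labeled: Dict[Hashable, float], pool: List[Hashable],
                        fit: Callable[[Dict[Hashable, float]], Model],
                        oracle: Callable[[Hashable], float],
                        is_hit: Callable[[float], bool],
                        n_rounds: int = 2, batch: int = 15,
                        seed: int = 0) -> Tuple[Dict[Hashable, float], Model]:
    """Alternate between model-guided screening and expensive labelling of hits."""
    rng = random.Random(seed)
    labeled = dict(labeled)
    for _ in range(n_rounds):
        model = fit(labeled)  # retrain on everything labelled so far
        hits = [c for c in pool if c not in labeled and is_hit(model(c))]
        if not hits:
            break
        for c in rng.sample(hits, min(batch, len(hits))):
            labeled[c] = oracle(c)  # the DFT calculation in practice
    return labeled, fit(labeled)


# Toy problem: the "property" of candidate c is c itself, and the model is a
# 1-nearest-neighbour lookup on a snapshot of the labelled data.
def fit_nearest(data: Dict[Hashable, float]) -> Model:
    snapshot = dict(data)
    return lambda c: snapshot[min(snapshot, key=lambda k: abs(k - c))]


labeled, final_model = iterative_screening({0: 0.0, 20: 20.0}, list(range(21)),
                                           fit=fit_nearest, oracle=float,
                                           is_hit=lambda v: v >= 15.0, batch=3)
print(sorted(labeled))
```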

We computed phonon spectra for some of the above promising structures using the open-source package phonopy71 with the frozen-phonon method. We relaxed the unit cell to very high precision (forces below 0.0001 eV/Å), with cutoff energies ranging from 400 to 500 eV and a substantially finer mesh for the augmentation charges around the ions (augmentation energy cutoff ENAUG = 2000 eV in VASP). We found that the candidate compounds \(\hbox {CrMo}\hbox {Si}_2\hbox {Te}_6\), \(\hbox {CrW}\hbox {Si}_2\hbox {Te}_6\), and \(\hbox {CrMnSi}_2\hbox {Te}_6\) are dynamically stable (see Supplementary Information S1 for phonon spectra and further discussion).
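Once phonon frequencies are available (phonopy reports imaginary modes as negative frequencies), the dynamic-stability criterion reduces to checking for significantly negative values. How the frequencies are read from phonopy output is not shown, and the tolerance below is a hypothetical choice meant to absorb small numerical errors in the acoustic branches near \(\Gamma\).

```python
from typing import Sequence


def is_dynamically_stable(band_frequencies_thz: Sequence[Sequence[float]],
                          tol_thz: float = 0.05) -> bool:
    """True if no phonon frequency falls below -tol_thz.

    band_frequencies_thz -- frequencies (THz) per q-point or per branch, with
                            imaginary modes stored as negative numbers.
    """
    return all(f > -tol_thz for band in band_frequencies_thz for f in band)


print(is_dynamically_stable([[0.0, -0.01, 1.2], [2.0, 3.1, 4.0]]))
```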

Discussions and conclusion

We presented evidence that the magnetic properties of \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) monolayer structures can be tuned by making atomic substitutions at the A, B, and X sites. This provides a non-traditional framework for investigating the microscopic origin of magnetic order in 2D layered materials and could lead to insights into magnetism in systems of reduced dimension23,24. Our work represents a path toward tailoring the magnetic properties of materials for applications in spintronics and data storage72. We showed that ML methods are promising tools for predicting the magnetic properties of 2D magnetic materials. In particular, our data-driven approach highlights the importance of the X site in determining the magnetic order of the structure. Changing the composition of the \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structure alters the inter-atomic distances and the character of the electronic orbitals involved. This affects the interplay between first, second and third nearest-neighbor exchange interactions, which determines the magnetic order.

One goal of this work was to find magnetic 2D materials that are also thermodynamically stable. We trained ML models to predict chemical stability, which allows rapid screening of a large number of possible structures. We showed that the chemical stability of \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures based on \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\) can be tuned by making atomic substitutions. Examples of structures that satisfy both the magnetic moment and the formation energy requirements include \(\hbox {(CrMo)Si}_2\hbox {Te}_6\), \(\hbox {(CrW)Si}_2\hbox {Te}_6\), and \(\hbox {(CrMo)(SiP)Te}_6\), which are not included in our original DFT database. In addition, we found structures in our set of DFT calculations that also satisfied our requirements:

\(\hbox {(CrMn)Si}_2\hbox {Te}_6\) (\(E_\text {f} = -1.77\hbox { eV}\), \(\mu = 7.02\,\mu _B\)), \(\hbox {(CrMn)Ge}_2\hbox {Se}_6\) (\(E_\text {f} = -3.24\hbox { eV}\), \(\mu = 7.00\,\mu _B\)), \(\hbox {(CrFe)(SiP)S}_6\) (\(E_\text {f} = -3.97\hbox { eV}\), \(\mu = 6.99\,\mu _B\)), \(\hbox {(CrFe)(GeP)Se}_6\) (\(E_\text {f} = -2.28\hbox { eV}\), \(\mu = 6.99\,\mu _B\)), and \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Se}_6\) (\(E_\text {f} = -3.67\hbox { eV}\), \(\mu = 6.02\,\mu _B\)). Furthermore, we included temperature effects by using the magnetic excitation energy and the magnetic anisotropy energy to estimate the Curie temperature. We identified several structures whose magnetic excitation energy is much greater than that of \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\), which indicates a higher Curie temperature. Table 1 lists seven \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) structures that may have Curie temperatures above that of \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Te}_6\). The ML models also recommended \(\hbox {(CrTc)(SiSn)Te}_6\) and \(\hbox {(CrTc)Sn}_2\hbox {Te}_6\), which we excluded because Tc is radioactive. These recommendations can then be subjected to additional, more computationally expensive tests of stability73,74, such as calculations of dynamical stability75,76. The most promising candidates will then be viable targets for synthesis and experimental verification.
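The two-criterion screen described above can be sketched as a simple filter. The snippet reuses the \((E_\text {f}, \mu )\) values quoted above, but the thresholds (\(E_\text {f} < 0\) eV, \(\mu \ge 6\,\mu _B\)) are hypothetical placeholders rather than the requirements applied in this study, and the `Candidate` and `screen` names are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    formula: str
    formation_energy_ev: float  # E_f as quoted in the text
    moment_mu_b: float          # magnetic moment in Bohr magnetons


# Values quoted above for structures in the DFT set
CANDIDATES = [
    Candidate("(CrMn)Si2Te6", -1.77, 7.02),
    Candidate("(CrMn)Ge2Se6", -3.24, 7.00),
    Candidate("(CrFe)(SiP)S6", -3.97, 6.99),
    Candidate("(CrFe)(GeP)Se6", -2.28, 6.99),
    Candidate("Cr2Ge2Se6", -3.67, 6.02),
]


def screen(candidates, max_formation_energy=0.0, min_moment=6.0):
    """Keep candidates passing both (hypothetical) cuts, most negative E_f first."""
    passed = [
        c for c in candidates
        if c.formation_energy_ev < max_formation_energy and c.moment_mu_b >= min_moment
    ]
    return sorted(passed, key=lambda c: c.formation_energy_ev)


if __name__ == "__main__":
    for c in screen(CANDIDATES):
        print(f"{c.formula:16s} E_f = {c.formation_energy_ev:+.2f} eV  mu = {c.moment_mu_b:.2f} mu_B")
```

Tightening the hypothetical moment cut to \(6.5\,\mu _B\) drops \(\hbox {Cr}_2\hbox {Ge}_2\hbox {Se}_6\) and keeps the other four. In a real workflow the same filter would run on ML predictions for structures outside the DFT database.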

After generating ML predictions of vdW magnets of the form \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\), we sought to verify the chemical stability and magnetic properties of our candidate structures by searching the literature for each candidate. This ML-guided literature review revealed that many materials of the type \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) have been synthesized and their magnetic properties characterized77,78. Reference77 reviews experimental studies of bulk crystals of transition metal phosphorus trisulfides. More than ten of the structures reported there as synthesized overlap with those predicted in this study. Because our study is restricted to monolayers, we cannot directly compare our results with the experiments described in Ref.77. Nevertheless, the review shows that many structures of the form \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) can be synthesized.

A second review article78 covers experimental studies of bulk layered metal thio- and selenophosphates, \(\hbox {APX}_3\), where A is a transition metal and X = S or Se. For instance, \(\hbox {CuAP}_2\hbox {Se}_6\) (A = In, Cr) compounds have been synthesized and studied, and \(\hbox {CuCrP}_2\hbox {Se}_6\) is one of the structures studied in our work. To the best of our knowledge, none of the layered materials reported in Ref.78 has been thinned down to a monolayer. Nevertheless, these findings suggest that our approach provides a useful framework for the targeted investigation of monolayers of this class of materials.

This work motivates further exploration of structures with other architectures not considered here, that is, with atomic substitutions more complex than replacing one of the two Cr atoms at the A site. We estimate that there are at least \(3\times 10^4\) structures of the \(\hbox {A}_2\hbox {B}_2\hbox {X}_6\) type described in Fig. 1. Exploring this vast chemical space quickly requires a computationally efficient estimate of the magnetic properties and formation energy. We have already transferred our materials informatics framework to a different family of crystal structures, the transition metal dichalcogenides (TMDs), and successfully predicted their formation energies using machine learning models trained on a database of DFT calculations36. The detailed results will be presented in a separate work. We expect the ML methods explored here, with suitable modifications, to enable efficient exploration of other families of 2D magnets, such as \(\hbox {CrI}_3\), CrOCl and \(\hbox {Fe}_3\hbox {GeTe}_2\)23,28,79.
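A rough sense of how quickly this space grows comes from enumerating compositions directly. The sketch below uses hypothetical element pools chosen only for illustration (they are not the pools of Fig. 1, so the counts do not reproduce the estimate above) and counts compositions when one Cr atom is replaced versus when both A sites vary. It counts compositions only, not distinct atomic arrangements within a supercell; `enumerate_a2b2x6` and the pool names are invented for the example.

```python
from itertools import combinations_with_replacement, product

# Hypothetical element pools for illustration only (not the pools of Fig. 1)
A_POOL = ["Ti", "V", "Cr", "Mn", "Fe", "Co", "Ni", "Mo", "W"]
B_POOL = ["Si", "Ge", "Sn", "P"]
X_POOL = ["S", "Se", "Te"]


def enumerate_a2b2x6(a_pool, b_pool, x_pool, host="Cr", vary_both_a_sites=False):
    """Yield (A, A', B, B', X) compositions of A2B2X6 monolayers.

    By default one A site keeps the host element (one of the two Cr atoms is replaced);
    with vary_both_a_sites=True both A sites are drawn from a_pool.
    Unordered pairs are used, so (Cr, Mn) and (Mn, Cr) count once.
    """
    if vary_both_a_sites:
        a_pairs = combinations_with_replacement(a_pool, 2)
    else:
        a_pairs = ((host, a) for a in a_pool)
    b_pairs = combinations_with_replacement(b_pool, 2)
    for (a1, a2), (b1, b2), x in product(a_pairs, b_pairs, x_pool):
        yield a1, a2, b1, b2, x


if __name__ == "__main__":
    for both in (False, True):
        n = sum(1 for _ in enumerate_a2b2x6(A_POOL, B_POOL, X_POOL, vary_both_a_sites=both))
        print(f"vary both A sites = {both}: {n} compositions")
```

With these toy pools the count grows from 270 (9 A choices × 10 B pairs × 3 X choices) to 1350 once both A sites vary; larger pools or supercells with more substitutable sites grow combinatorially, which is why a cheap ML estimate is useful before any DFT calculation.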

During the review process we came across a similar study that also used machine learning to predict the properties of 2D magnetic materials80. That work drew on data from the C2DB5 database and used descriptors different from those presented in this study.

Data availability

The results of these DFT calculations will be used to build a database of monolayer 2D materials which will be publicly available to the scientific community. See the Supplementary Information for details.

References

Bhimanapati, G. R. et al. Recent advances in two-dimensional materials beyond graphene. ACS Nano 9, 11509–11539 (2015).

Cheon, G. et al. Data mining for new two- and one-dimensional weakly bonded solids and lattice-commensurate heterostructures. Nano Lett. 17, 1915–1923 (2017).

Mounet, N. et al. Two-dimensional materials from high-throughput computational exfoliation of experimentally known compounds. Nat. Nanotechnol. 13, 246 (2018).

Ashton, M., Paul, J., Sinnott, S. B. & Hennig, R. G. Topology-scaling identification of layered solids and stable exfoliated 2D materials. Phys. Rev. Lett. 118, 106101 (2017).

Haastrup, S. et al. The computational 2D materials database: high-throughput modeling and discovery of atomically thin crystals. 2D Mater. 5, 042002 (2018).

Chen, W., Santos, E., Zhu, W., Kaxiras, E. & Zhang, Z. Tuning the electronic and chemical properties of monolayer \(\text{ MoS}_2\) adsorbed on transition metal substrates. Nano Lett. 13, 509–514 (2013).

Nourbakhsh, A. et al.\(\text{ MoS}_2\) field-effect transistor with sub-10 nm channel length. Nano Lett. 16, 7798–7806 (2016).

Novoselov, K., Mishchenko, A., Carvalho, A. & Neto, A. C. 2D materials and van der Waals heterostructures. Science 353, aac9439 (2016).

Zeng, J., Chen, W., Cui, P., Zhang, D. & Zhang, Z. Enhanced half-metallicity in orientationally misaligned graphene/hexagonal boron nitride lateral heterojunctions. Phys. Rev. B 94, 235425 (2016).

Choi, J., Cui, P., Chen, W., Cho, J. & Zhang, Z. Atomistic mechanisms of van der Waals epitaxy and property optimization of layered materials. Wiley Interdiscip. Rev. Comput. Mol. Sci. 7, 1300 (2017).

Chen, W., Yang, Y., Zhang, Z. & Kaxiras, E. Properties of in-plane graphene/\(\text{ MoS}_2\) heterojunctions. 2D Mater. 4, 045001 (2017).

Oganov, A. R. & Lyakhov, A. O. Towards the theory of hardness of materials. J. Superhard Mater. 32, 143–147 (2010).

Pickard, C. J. & Needs, R. J. Ab initio structure searching. J. Phys.: Condens. Matter 23, 53201 (2011).

Mansouri Tehrani, A. et al. Machine Learning Directed Search for Ultraincompressible, Superhard Materials. J. Am. Chem. Soc. 140, 9844–9853 (2018).

Smidt, T. A case study in neural networks for scientific data: generating atomic structures BAPS.2019.MAR.H52.00001 (2019).

Huo, H. & Rupp, M. Unified representation for machine learning of molecules and crystals. arXiv:1704.06439 (2017).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry. In Precup, D. & Teh, Y. W. (eds.) Proceedings of the 34th International Conference on Machine Learning, Proc. Mach. Learn. Res. 70, 1263–1272 (2017).

Isayev, O. et al. Universal fragment descriptors for predicting properties of inorganic crystals. Nat. Commun. 8, 15679 (2017).

Rhone, T. D., Chen, W., Desai, S., Yacoby, A. & Kaxiras, E. Machine learning study of two-dimensional magnetic materials BAPS.2019.MAR.E22.00011 (2019).

Lee, J. U. et al. Ising-Type Magnetic Ordering in Atomically Thin \(\text{ FePS}_3\). Nano Lett. 16, 7433–7438 (2016).

Wang, X. et al. Raman spectroscopy of atomically thin two-dimensional magnetic iron phosphorus trisulfide (\(\text{ FePS}_3\)) crystals. 2D Mater. 3, 31009 (2016).

Xing, W. et al. Electric field effect in multilayer \(\text{ Cr}_2\text{ Ge}_2\text{ Te}_6\): a ferromagnetic 2D material. 2D Mater. 4, 024009 (2017).

Huang, B. et al. Layer-dependent ferromagnetism in a van der Waals crystal down to the monolayer limit. Nature 546, 270 (2017).

Gong, C. et al. Discovery of intrinsic ferromagnetism in two-dimensional van der Waals crystals. Nature 546, 265 (2017).

Cui, P. et al. Contrasting structural reconstructions, electronic properties, and magnetic orderings along different edges of zigzag transition metal dichalcogenide nanoribbons. Nano Lett. 17, 1097–1101 (2017).

Miyazato, I., Tanaka, Y. & Takahashi, K. Accelerating the discovery of hidden two-dimensional magnets using machine learning and first principle calculations. J. Phys.: Condens. Matter 30, 06LT01 (2018).

Möller, J. J., Körner, W., Krugel, G., Urban, D. F. & Elsässer, C. Compositional optimization of hard-magnetic phases with machine-learning models. Acta Mater. 153, 53–61 (2018).

Miao, N., Xu, B., Zhu, L., Zhou, J. & Sun, Z. 2D intrinsic ferromagnets from van der Waals antiferromagnets. J. Am. Chem. Soc. 140, 2417–2420 (2018).

O’Hara, D. J. et al. Room temperature intrinsic ferromagnetism in epitaxial manganese selenide films in the monolayer limit. Nano Lett. 18, 3125–3131 (2018).

Moaied, M., Lee, J. & Hong, J. A 2D ferromagnetic semiconductor in monolayer Cr-trihalide and its Janus structures. Phys. Chem. Chem. Phys. 20, 21755–21763 (2018).

Ashton, M. et al. Two-dimensional intrinsic half-metals with large spin gaps. Nano Lett. 17, 5251–5257 (2017).

Zhao, S., Kang, W. & Xue, J. Manipulation of electronic and magnetic properties of \(\text{ M}_2\text{ C }\) (M = Hf, Nb, Sc, Ta, Ti, V, Zr) monolayer by applying mechanical strains. Appl. Phys. Lett. 104, 133106 (2014).

Mermin, N. D. & Wagner, H. Absence of ferromagnetism or antiferromagnetism in one- or two-dimensional isotropic Heisenberg models. Phys. Rev. Lett. 17, 1133 (1966).

Hope, S., Choi, B.-C., Bode, P. & Bland, J. Direct observation of the stabilization of ferromagnetic order by magnetic anisotropy. Phys. Rev. B 61, 5876 (2000).

Abate, Y. et al. Recent progress on stability and passivation of black phosphorus. Adv. Mater. 30, 1704749 (2018).

Rasmussen, F. A. & Thygesen, K. S. Computational 2D materials database: electronic structure of transition-metal dichalcogenides and oxides. J. Phys. Chem. C 119, 13169–13183 (2015).

Frey, N. C. et al. Prediction of synthesis of 2D metal carbides and nitrides (MXenes) and their precursors with positive and unlabeled machine learning. ACS Nano 13, 3031–3041 (2019).

Singh, A. K., Mathew, K., Zhuang, H. L. & Hennig, R. G. Computational screening of 2D materials for photocatalysis. J. Phys. Chem. Lett. 6, 1087–1098 (2015).

Li, Q. et al. Patterning-Induced Ferromagnetism of \(\text{ Fe}_3\text{ GeTe}_2\) van der Waals Materials beyond Room Temperature. Nano Lett. 18, 5974–5980 (2018).

Rupp, M., Tkatchenko, A., Müller, K.-R. & von Lilienfeld, O. A. Fast and accurate modeling of molecular atomization energies with machine learning. Phys. Rev. Lett. 108, 058301 (2012).

Meredig, B. et al. Combinatorial screening for new materials in unconstrained composition space with machine learning. Phys. Rev. B 89, 094104 (2014).

Seko, A. et al. Prediction of low-thermal-conductivity compounds with first-principles anharmonic lattice-dynamics calculations and Bayesian optimization. Phys. Rev. Lett. 115, 205901 (2015).

Ueno, T., Rhone, T. D., Hou, Z., Mizoguchi, T. & Tsuda, K. COMBO: an efficient Bayesian optimization library for materials science. Mater. Discov. 4, 18–21 (2016).

Choudhary, K., Kalish, I., Beams, R. & Tavazza, F. High-throughput identification and characterization of two-dimensional materials using density functional theory. Sci. Rep. 7, 5179 (2017).

Ju, S. et al. Designing nanostructures for phonon transport via Bayesian optimization. Phys. Rev. X 7, 021024 (2017).

Landrum, G. A. & Genin, H. Application of machine-learning methods to solid-state chemistry: ferromagnetism in transition metal alloys. J. Solid State Chem. 176, 587–593 (2003).

Khazaei, M. et al. Novel electronic and magnetic properties of two-dimensional transition metal carbides and nitrides. Adv. Funct. Mater. 23, 2185–2192 (2013).

Lu, A.-Y. et al. Janus monolayers of transition metal dichalcogenides. Nat. Nanotechnol. 12, 744 (2017).

Schoenholz, S. S., Cubuk, E. D., Sussman, D. M., Kaxiras, E. & Liu, A. J. A structural approach to relaxation in glassy liquids. Nat. Phys. 12, 469 (2016).

Cubuk, E. D., Malone, B. D., Onat, B., Waterland, A. & Kaxiras, E. Representations in neural network based empirical potentials. J. Chem. Phys. 147, 024104 (2017).

Vandermause, J. et al. On-the-fly active learning of interpretable Bayesian force fields for atomistic rare events. NPJ Comput. Mater. 6, 20 (2020).

Umehara, M. et al. Analyzing machine learning models to accelerate generation of fundamental materials insights. NPJ Comput. Mater. 5, 1–9 (2019).

Sivadas, N., Daniels, M. W., Swendsen, R. H., Okamoto, S. & Xiao, D. Magnetic ground state of semiconducting transition-metal trichalcogenide monolayers. Phys. Rev. B 91, 235425 (2015).

Torelli, D. & Olsen, T. Calculating critical temperatures for ferromagnetic order in two-dimensional materials. 2D Mater. 6, 015028 (2018).

Xie, Y., Tritsaris, G. A., Granas, O. & Rhone, T. D. Data-driven studies of the magnetic anisotropy of two-dimensional magnetic materials. APS March Meeting BAPS.MAR.2020.M39.5 (2020).

Heinz, H. & Suter, U. W. Atomic charges for classical simulations of polar systems. J. Phys. Chem. B 108, 18341–18352 (2004).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Ghiringhelli, L. M., Vybiral, J., Levchenko, S. V., Draxl, C. & Scheffler, M. Big data of materials science: Critical role of the descriptor. Phys. Rev. Lett. 114, 105503 (2015).

Seko, A., Hayashi, H., Nakayama, K., Takahashi, A. & Tanaka, I. Representation of compounds for machine-learning prediction of physical properties. Phys. Rev. B 95, 144110 (2017).

Mentel, L. Mendeleev: a Python resource for properties of chemical elements, ions and isotopes. https://bitbucket.org/lukaszmentel/mendeleev (2014).

Chikazumi, S. & Graham, C. D. Physics of Ferromagnetism, Vol. 94 (Oxford University Press on Demand, 2009).

Tibshirani, R., James, G., Witten, D. & Hastie, T. An introduction to statistical learning: with applications in R (Springer, Berlin, 2013).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Goldsmith, B. R., Boley, M., Vreeken, J., Scheffler, M. & Ghiringhelli, L. M. Uncovering structure-property relationships of materials by subgroup discovery. New J. Phys. 19, 013031 (2017).

Hansen, K. et al. Machine learning predictions of molecular properties: accurate many-body potentials and nonlocality in chemical space. J. Phys. Chem. Lett. 6, 2326–2331 (2015).

Reshef, D. N. et al. Detecting novel associations in large data sets. Science 334, 1518–1524 (2011).

Stevanović, V., Lany, S., Zhang, X. & Zunger, A. Correcting density functional theory for accurate predictions of compound enthalpies of formation: Fitted elemental-phase reference energies. Phys. Rev. B 85, 115104 (2012).

Abadi, M. et al. TensorFlow: Large-scale machine learning on heterogeneous systems. https://www.tensorflow.org/ (2015).

Lookman, T., Balachandran, P. V., Xue, D. & Yuan, R. Active learning in materials science with emphasis on adaptive sampling using uncertainties for targeted design. NPJ Comput. Mater. 5, 21 (2019).

Togo, A. & Tanaka, I. First principles phonon calculations in materials science. Scr. Mater. 108, 1–5 (2015).

Han, W. Perspectives for spintronics in 2D materials. APL Mater. 4, 032401 (2016).

Zhang, X., Yu, L., Zakutayev, A. & Zunger, A. Sorting Stable versus Unstable Hypothetical Compounds: The Case of Multi-Functional ABX Half-Heusler Filled Tetrahedral Structures. Adv. Funct. Mater. 22, 1425–1435 (2012).

Curtarolo, S. et al. The high-throughput highway to computational materials design. Nat. Mater. 12, 191 (2013).

Paul, J. T. et al. Computational methods for 2D materials: discovery, property characterization, and application design. J. Phys.: Condens. Matter 29, 473001 (2017).

Torrisi, S. B., Singh, A. K., Montoya, J. H., Biswas, T. & Persson, K. A. Two-dimensional forms of robust \(\text{ CO}_2\) reduction photocatalysts. NPJ 2D Mater. Appl. 4, 1–10 (2020).

Brec, R. Review on structural and chemical properties of transition metal phosphorous trisulfides \(\text{ MPS}_3\). Solid State Ionics 22, 3–30 (1986).

Susner, M. A., Chyasnavichyus, M., McGuire, M. A., Ganesh, P. & Maksymovych, P. Metal thio- and selenophosphates as multifunctional van der Waals layered materials. Adv. Mater. 29, 1602852 (2017).

Chen, B. et al. Magnetic properties of layered itinerant electron ferromagnet \(\text{ Fe}_3\text{ GeTe}_2\). J. Phys. Soc. Jpn. 82, 124711 (2013).

Lu, S. et al. Coupling a crystal graph multilayer descriptor to active learning for rapid discovery of 2D ferromagnetic semiconductors/half-metals/metals. Adv. Mater. 32, 2002658 (2020).

Acknowledgements

We thank Marios Mattheakis, Robert Hoyt, Matthew Montemore, Emine Kucukbenli, Sadas Shankar, Ekin Dogus Cubuk, Pavlos Protopapas and Vinothan Manoharan for helpful discussions. For the calculations we used the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by the National Science Foundation (Grant number ACI-1548562), and the Odyssey cluster supported by the FAS Division of Science, Research Computing Group at Harvard University. T.D.R. is supported by the Harvard Future Faculty Leaders Postdoctoral Fellowship. S.B.T. is supported by the DOE Computational Science Graduate Fellowship under Grant DE-FG02-97ER25308. We acknowledge support from ARO MURI Award W911NF-14-0247.

Author information

Contributions

T.D.R. and E.K. conceived the study. T.D.R. and W.C. carried out the calculations. T.D.R., S.D. and W.C. analyzed the results. T.D.R. wrote the manuscript with the assistance of W.C., A.Y. and E.K. S.B.T. computed phonon spectra. D.T.L. calculated competing phases. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rhone, T.D., Chen, W., Desai, S. et al. Data-driven studies of magnetic two-dimensional materials. Sci Rep 10, 15795 (2020). https://doi.org/10.1038/s41598-020-72811-z

DOI: https://doi.org/10.1038/s41598-020-72811-z

This article is cited by

-

Descriptor engineering in machine learning regression of electronic structure properties for 2D materials

Scientific Reports (2023)

-

DFT-aided machine learning-based discovery of magnetism in Fe-based bimetallic chalcogenides

Scientific Reports (2023)

-

Classification of magnetic order from electronic structure by using machine learning

Scientific Reports (2023)

-

Integrating Machine Learning and Molecular Simulation for Material Design and Discovery

Transactions of the Indian National Academy of Engineering (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.