Abstract

Studies of speech processing investigate the relationship between temporal structure in speech stimuli and neural activity. Despite clear evidence that the brain tracks speech at low frequencies (~ 1 Hz), it is not well understood what linguistic information gives rise to this rhythm. In this study, we harness linguistic theory to draw attention to Intonation Units (IUs), a fundamental prosodic unit of human language, and characterize their temporal structure as captured in the speech envelope, an acoustic representation relevant to the neural processing of speech. IUs are defined by a specific pattern of syllable delivery, together with resets in pitch and articulatory force. Linguistic studies of spontaneous speech indicate that this prosodic segmentation paces new information in language use across diverse languages. Therefore, IUs provide a universal structural cue for the cognitive dynamics of speech production and comprehension. We study the relation between IUs and periodicities in the speech envelope, applying methods from investigations of neural synchronization. Our sample includes recordings of everyday speech from over 100 speakers of six languages. We find that sequences of IUs form a consistent low-frequency rhythm and constitute a significant periodic cue within the speech envelope. Our findings lead to the prediction that the neural system uses IUs when tracking speech. The methods we introduce here facilitate testing this prediction in the future (e.g., with physiological data).


Introduction

Speech processing is commonly investigated by measuring brain activity as it relates to the acoustic speech stimulus1,2,3. Such research has revealed that neural activity tracks amplitude modulations present in speech. It is generally agreed that a dominant element in the neural tracking of speech is a 5 Hz rhythmic component, which corresponds to the rate of syllables in speech4,5,6,7,8. The speech stimulus is also tracked at lower frequencies (< 5 Hz, e.g.2,3), but the functional role of these fluctuations is not fully understood. They are assumed to relate to prosody, the “musical” elements of speech above the word level. However, the neuroscience literature rarely investigates prosody for its structure and function in cognition.

In contrast, the role of prosody in speech and cognition is extensively studied within linguistics. Such research identifies prosodic segmentation cues that are common to all languages and that characterize what are termed Intonation Units (IUs; Fig. 1a)9,10. Importantly, in addition to providing a systematic segmentation of ongoing naturalistic speech, IUs capture the pacing of information, parceling out at most one new idea per IU. Thus, IUs provide a valuable construct for quantifying how ongoing speech serves cognition in individual and interpersonal contexts. The first goal of our study is to introduce this understanding of prosodic segmentation from linguistic theory to the neuroscientific community. The second goal is to put forth a temporal characterization of IUs, and hence to offer a precise, theoretically motivated interpretation of low-frequency auditory tracking and its relevance to cognition.

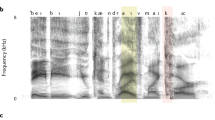

Analysis pipeline. (a) An example Intonation Unit sequence from a conversation in Du Bois et al.11. (b) Illustration of one of the characteristics contributing to the delimitation of IUs: the fast-slow dynamic of syllables. A succession of short syllables is followed by comparatively longer ones; new units are cued by the resumed rush in syllable rate following the lengthening (syllable duration measured in ms). (c) Illustration of the phase-consistency analysis: 2-s windows of the speech envelope (green) were extracted around each IU onset (gray vertical line), decomposed and compared for consistency of phase angle within each frequency. (d) Illustration of the IU-onset permutation in time, which was used to compute the randomization distribution of phase consistency spectra (see “Materials and methods” section).

When speakers talk, they produce their utterances in chunks with a specific prosodic profile, a profile which is attested in presumably all human languages regardless of their phonological and morphosyntactic structure. The prosodic profile is characterized by the intersection of rhythmic, melodic, and articulatory properties9,12,13,14. Rhythmically, chunks may be delimited by pauses, but more importantly, by a fast-slow dynamic of syllables (Fig. 1b): an increase in syllable-delivery rate at the beginning of a chunk, and/or lengthening of syllables at the end of a chunk15. Melodically, chunks have a continuous pitch contour, which is typically sharply reset at the onset of a new chunk. In terms of articulation, the degree of contact between articulators is strongest at the onset of a new chunk16, a dynamic that generates resets in volume at chunk onsets and decay towards their offsets. Other cues contribute to the perceptual construct of chunks albeit less systematically and frequently, such as a change in voice quality towards chunk offset9. Linguistic research has approached these prosodic chunks from different perspectives and under different names: Intonation(al) Phrases (e.g.13,17,18,19), intonation-groups (e.g.14), tone-groups20, and intonation units (e.g.9,12). We adopt here the term Intonation Unit to reflect that our main interest in these chunks lies in their relevance for the processing of information in naturalistic, spontaneous discourse, an aspect foregrounded by Chafe and colleagues. To this important function of IUs we turn next.

Across languages, these prosodically defined units are also functionally comparable in that they pace the flow of information in the course of speech9,20,21,22,23. For example, when speakers develop a narrative, they do so gradually, introducing the setting, participants, and course of events in sequences of IUs, where no more than one new piece of information relative to the preceding discourse is added per IU (Box 1). This has been demonstrated both by qualitative discourse analysis9,21,24 and by quantifying the average number of content items per IU. Specifically, the number of content items per IU has been found to be very similar across languages, even when they have strikingly different grammatical profiles10. Another example of the common role of IUs in different languages pertains to the way speakers construct their (speech) actions25 (but cf.26). For instance, when speakers coordinate a transition during a turn-taking sequence, they rely on prosodic segmentation (i.e., IUs): points of semantic/syntactic phrase closure are not a sufficient cue for predicting when a transition will take place, and IU design has been found to play a crucial role in timing the next turn-taking transition27,28,29.

Here we use recordings of spontaneous speech in natural settings to characterize the temporal structure of sequences of IUs in six languages. The sample includes well-studied languages from the Eurasian macro-area, as well as much less known and less studied languages spoken by smaller speech communities in the Indonesian-governed part of Papua. Importantly, our results generalize across this linguistic diversity, despite substantial differences in socio-cultural settings and in all aspects of grammar, including other prosodic characteristics. In contrast to previous research, we estimate the temporal structure of IUs using direct time measurements rather than word or syllable counts (Box 2). In addition, we quantify the temporal structure of IUs in relation to the speech envelope, an acoustic representation relevant to the neural processing of speech. We find that sequences of IUs form a consistent low-frequency rhythm at ~ 1 Hz in all six sample languages, and we relate this finding to recent neuroscientific accounts of the roles of slow rhythms in speech processing.

Materials and methods

Data

We studied the temporal structure of IUs using six corpora of spontaneously produced conversations and unscripted narratives. All corpora were transcribed and segmented into IUs according to the unified criteria devised by Chafe9 and colleagues (Du Bois et al.12 is a practical tutorial of this discourse transcription method). This segmentation process involves close listening to the rhythmic and melodic cues presented in the Introduction, as well as manually adjusted acoustic analyses, particularly the extraction of pitch contours (f0), which are used to support perceived resets in pitch. Three of the corpora were segmented by specialist teams working on their native language: the Santa Barbara Corpus of Spoken American English11, the Haifa Corpus of Spoken Hebrew35, and the Russian Multichannel Discourse corpus36. The other three corpora were segmented by a single research team whose members had varying degrees of familiarity with the languages, as part of a project studying the human ability to identify IUs in unfamiliar languages: the DoBeS Summits-PAGE Collection of Papuan Malay37, the DoBeS Wooi Documentation38, and the DoBeS Yali Documentation39. The segmentation of each recording is typically performed by multiple team members and verified by a senior member experienced in auditory analyses of the language. This was the procedure for all the corpora above, ensuring that ambiguous cases were resolved as consistently as possible for human annotators and that the transcriptions validly represent the language at hand. Further information regarding the sample is found in Table 1 and Table S1. Supplementary Appendix 1 in the Supplementary Information elaborates on the construction of the sample and the coding and processing of IUs. From all language samples, we extracted IU onset times, noting which speaker produced each IU.

Phase-consistency analysis

We analyzed the relation between IU onsets and the speech envelope using a point-field synchronization measure, adapted from the study of rhythmic synchronization between neural spiking activity and Local Field Potentials40. In this analysis, the rhythmicity of IU sequences is measured through the phase consistency of IU onsets with respect to the periodic components of the speech envelope (Fig. 1c). The speech envelope is a representation of speech that captures amplitude fluctuations in the acoustic speech signal. The envelope is most commonly understood to reflect the succession of syllables at ~ 5 Hz, and indeed, it includes strong 2–7 Hz modulations4,6,7,8. The vocalic nuclei of syllables are the main source of envelope peaks, while syllable boundaries are the main source of envelope troughs (Fig. 1b). IU onsets can be expected to coincide with troughs in the envelope, since each IU onset is necessarily also a syllable boundary. Therefore, one can expect high phase consistency between IU onsets and the frequency component of the speech envelope corresponding to the syllable rhythm, at ~ 5 Hz.

A less trivial finding would be high phase consistency between IU onsets and other periodic components of the speech envelope. Specifically, since IUs typically include more than one syllable, such an effect would pertain to frequency components below ~ 5 Hz. In this analysis, we hypothesized that the organization of syllables within IUs gives rise to slow periodic components in the speech envelope. If low-frequency components are negligible in the speech envelope, estimating their phase at IU onsets would yield random phase angles, which translates to low phase consistency (i.e., a uniform phase distribution). Alternatively, if the speech envelope captures slow rhythmicity in language other than that arising from IUs, different IUs would occur at different phases of the lower-frequency components, again translating to low phase consistency. In contrast to these scenarios, phase consistency higher than expected under the null hypothesis would both indicate that the speech envelope captures the rhythmic characteristics of IUs and characterize the period of this rhythmicity.
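To make the contrast between these scenarios concrete, the following minimal Python sketch computes the pairwise phase consistency (PPC) of Vinck et al.40 in its closed form, (|Σ_j exp(iθ_j)|² − N) / (N(N − 1)), which equals the mean cosine of the phase difference over all distinct pairs of onsets. It assumes NumPy and uses simulated phase angles, not IU data: uniformly distributed phases stand in for the null scenarios above, and phases clustered around a preferred angle (a von Mises distribution with concentration κ = 4, an arbitrary illustrative choice) stand in for a consistent IU rhythm.

```python
import numpy as np


def pairwise_phase_consistency(phases):
    """PPC of a 1-D array of phase angles (radians).

    Closed form of the mean cos(theta_j - theta_k) over all pairs j < k:
    PPC = (|sum_j exp(i * theta_j)|**2 - N) / (N * (N - 1)).
    """
    phases = np.asarray(phases, dtype=float)
    n = phases.size
    if n < 2:
        raise ValueError("PPC needs at least two phase estimates")
    resultant = np.exp(1j * phases).sum()
    return (np.abs(resultant) ** 2 - n) / (n * (n - 1))


rng = np.random.default_rng(0)
# Null scenario: onsets fall at random phases of a frequency component.
uniform = rng.uniform(-np.pi, np.pi, size=500)
# Rhythmic scenario: onsets cluster near one phase (illustrative kappa = 4).
concentrated = rng.vonmises(mu=np.pi, kappa=4.0, size=500)

print(f"uniform phases:      PPC = {pairwise_phase_consistency(uniform):.3f}")
print(f"concentrated phases: PPC = {pairwise_phase_consistency(concentrated):.3f}")
```

Because PPC averages over pairs instead of normalizing a resultant vector length, its expected value under uniform phases is 0 for any N, which is why it is unbiased by the number of windows entering the analysis.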

We computed the speech envelope for each sound file following a standard procedure. In brief, speech segments were band-pass filtered into 10 bands with cut-off points designed to be equidistant on the human cochlear map. Amplitude envelopes for each band were computed as the absolute values of the Hilbert transform. These narrowband envelopes were averaged, yielding the wideband envelope3,8 (see Supplementary Appendix 1 for further details). We extracted 2-s windows of the speech envelope centered on each IU onset, demeaned them, and decomposed them using a Fast Fourier Transform (FFT) with a single Hann window and no padding. This yielded phase estimates for frequency components at a resolution of 0.5 Hz. We then measured the consistency in phase of each FFT frequency component across speech segments using the pairwise phase consistency metric (PPC)40, yielding a consistency spectrum. We calculated consistency spectra separately for each speaker who produced > 5 IUs and averaged the spectra within each language. Note that the PPC measure is unbiased by the number of 2-s envelope windows entering the analysis40, and that in turn-taking sequences some 2-s envelope windows inevitably capture speech from more than one participant. We also conducted the analysis using 4-s windows of the speech envelope, which allow a 0.25 Hz resolution at the expense of less data entering the analysis. Further information regarding this additional analysis can be found in part 1 of Supplementary Appendix 2 in the Supplementary Information.
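The envelope and windowing steps described above can be sketched in Python as follows. This is not the authors' code (which is in the OSF repository listed under Data availability); it assumes NumPy and SciPy, and several parameters are our own illustrative choices: Greenwood's human cochlear map constants (A = 165.4, a = 2.1, k = 0.88), a 100–7000 Hz analysis range, fourth-order Butterworth band-pass filters, and a 100 Hz envelope sampling rate. The function names and the list of `(envelope, onset_times)` pairs per speaker are hypothetical.

```python
import numpy as np
from scipy.signal import butter, hilbert, resample_poly, sosfiltfilt

# Greenwood (1990) human cochlear map, f = A * (10**(a * x) - k), x in [0, 1].
A, a, k = 165.4, 2.1, 0.88


def cochlear_band_edges(f_lo=100.0, f_hi=7000.0, n_bands=10):
    """Band edges (Hz) equally spaced in cochlear place (assumed 100-7000 Hz)."""
    def to_place(f):
        return np.log10(f / A + k) / a
    places = np.linspace(to_place(f_lo), to_place(f_hi), n_bands + 1)
    return A * (10 ** (a * places) - k)


def wideband_envelope(audio, fs, env_fs=100, n_bands=10):
    """Mean of narrowband Hilbert envelopes, downsampled and scaled to 0-1.

    Assumes fs is an integer multiple of env_fs and exceeds 2 * 7000 Hz."""
    edges = cochlear_band_edges(n_bands=n_bands)
    narrowband = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        sos = butter(4, [lo, hi], btype="bandpass", fs=fs, output="sos")
        narrowband.append(np.abs(hilbert(sosfiltfilt(sos, audio))))
    env = resample_poly(np.mean(narrowband, axis=0), 1, fs // env_fs)  # anti-aliased
    return (env - env.min()) / (env.max() - env.min())


def onset_phases(env, onsets_s, env_fs=100, win_s=2.0):
    """FFT phases of demeaned, Hann-tapered windows centred on each onset."""
    half = int(round(win_s * env_fs / 2))
    taper = np.hanning(2 * half)
    rows = []
    for t in onsets_s:
        c = int(round(t * env_fs))
        if c - half < 0 or c + half > env.size:
            continue  # skip windows that run past the recording edges
        seg = env[c - half:c + half]
        rows.append(np.angle(np.fft.rfft((seg - seg.mean()) * taper)))  # no padding
    freqs = np.fft.rfftfreq(2 * half, d=1 / env_fs)  # 0.5 Hz steps for 2-s windows
    return freqs, np.array(rows)


def ppc_spectrum(phases):
    """Pairwise phase consistency per frequency column (rows = onsets)."""
    n = phases.shape[0]
    resultant = np.abs(np.exp(1j * phases).sum(axis=0)) ** 2
    return (resultant - n) / (n * (n - 1))


def language_spectrum(speakers, env_fs=100, min_ius=5):
    """Average PPC spectra over speakers with more than `min_ius` usable windows.

    `speakers` is a hypothetical list of (envelope, onset_times_s) pairs."""
    spectra = []
    for env, onsets in speakers:
        freqs, ph = onset_phases(env, onsets, env_fs)
        if ph.shape[0] > min_ius:
            spectra.append(ppc_spectrum(ph))
    return freqs, np.mean(spectra, axis=0)
```

With a 100 Hz envelope, a 2-s window has 200 samples and `np.fft.rfftfreq` returns bins spaced 1 / (200 × 0.01 s) = 0.5 Hz apart; passing `win_s=4.0` gives the 0.25 Hz resolution of the additional analysis.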

Statistical assessment

We assessed the statistical significance of peaks in the average consistency spectra using a randomization procedure (Fig. 1d). For each language, we created a randomization distribution of consistency estimates from 1000 sets of average surrogate spectra. These surrogate spectra were calculated from the speech envelope as before, but with temporally permuted IU onsets that maintained the association with envelope troughs. Troughs were defined by a minimum magnitude of 0.01 (on a 0–1 scale) and a minimum interval of 200 ms between troughs, as would be expected from the average syllable duration. By constraining the temporal permutation of IU onsets in this way, we account for the fact that each IU onset is necessarily a syllable onset and is therefore expected to align with a trough in the envelope. We then calculated, for each frequency, the proportion of consistency estimates (among the 1000 surrogate spectra) that were greater than the consistency estimate obtained for the observed IU sequences. We corrected p-values for multiple comparisons across frequency bins, ensuring that, on average, the False Discovery Rate (FDR) does not exceed 1%41,42.
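The trough-constrained randomization and the FDR correction can be sketched in Python as below, assuming NumPy and SciPy's `find_peaks`. Two details are our assumptions rather than statements from the text: we read the 0.01 "minimum magnitude" of a trough as a minimum prominence of the inverted envelope, and we apply the Benjamini–Yekutieli step-up procedure (the dependency-robust variant of ref. 42) at q = 0.01. For brevity, surrogate onsets are drawn without replacement from the troughs of a single envelope, whereas in the study surrogates were computed per speaker and averaged within each language. `spectrum_fn` is a hypothetical caller-supplied function returning a consistency spectrum for a set of onset times.

```python
import numpy as np
from scipy.signal import find_peaks


def envelope_troughs(env, env_fs=100, min_depth=0.01, min_gap_s=0.2):
    """Sample indices of troughs in a 0-1 scaled envelope.

    ASSUMPTION: the 0.01 threshold is applied as trough prominence; troughs
    closer than `min_gap_s` are thinned by find_peaks' `distance` rule."""
    idx, _ = find_peaks(-np.asarray(env), prominence=min_depth,
                        distance=max(1, int(round(min_gap_s * env_fs))))
    return idx


def surrogate_onsets(troughs, n_onsets, env_fs, rng):
    """One permutation: n_onsets trough times (s), sampled without replacement."""
    return np.sort(rng.choice(troughs, size=n_onsets, replace=False)) / env_fs


def permutation_pvalues(observed, spectrum_fn, troughs, n_onsets,
                        env_fs=100, n_perm=1000, seed=0):
    """Per-frequency proportion of surrogate spectra exceeding the observed one."""
    rng = np.random.default_rng(seed)
    surrogates = np.array([
        spectrum_fn(surrogate_onsets(troughs, n_onsets, env_fs, rng))
        for _ in range(n_perm)
    ])
    return np.mean(surrogates > np.asarray(observed), axis=0)


def fdr_by(pvals, q=0.01):
    """Benjamini-Yekutieli step-up procedure; True where H0 is rejected."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    c_m = np.sum(1.0 / np.arange(1, m + 1))  # harmonic correction for dependency
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / (m * c_m)
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k_max = np.nonzero(below)[0].max()  # largest rank meeting its threshold
        reject[order[:k_max + 1]] = True
    return reject
```

With p-values computed as a plain proportion, a frequency at which no surrogate exceeds the observed value receives p = 0, consistent with reporting p < 0.001 for 1000 permutations.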

Results

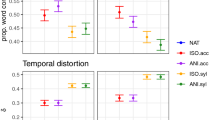

We studied the temporal structure of IU sequences through their alignment with the periodic components of the speech envelope, using a phase-consistency analysis. We hypothesized that one of the characteristics of IUs, the fast-slow dynamic of syllables, would give rise to slow periodic modulations in the speech envelope. Because the IUs analyzed here are informed by a cognitively oriented linguistic theory and have wide cross-linguistic validity, we hypothesized that comparable periodicity would be found in different languages. Figure 2 displays the observed phase-consistency spectra in the six sample languages. IU onsets appear at significantly consistent phases of the low-frequency components of the speech envelope, indicating that their rhythm is captured in the speech envelope, hierarchically above the syllabic rhythm at ~ 5 Hz (English: 0.5–1.5 Hz; Hebrew: 1–1.5 and 2.5–3 Hz; Russian: 0.5–3 Hz; Papuan Malay: 0.5–3.5 Hz; Wooi: 0.5–3.5 Hz; Yali: 0.5–4 Hz; all p’s < 0.001). Notably, the highest phase consistency is measured at 1 Hz in all languages except Hebrew, in which the peak is at the neighboring frequency bin, 1.5 Hz.

Characterization of the temporal structure of Intonation Units. Phase-consistency analysis results include the average of phase consistency spectra across speakers for each language. Shaded regions denote bootstrapped 95% confidence intervals43 of the averages. Significance is denoted by a horizontal line above the spectra, after correction for multiple comparisons across neighboring frequency bins using an FDR procedure. Inset: Probability distribution of IU durations within each language corpus, calculated for 50 ms bins and pooled across speakers. Overlaid are the medians (dashed line; dark gray) and the bootstrapped 95% confidence intervals of the medians (light gray).

To complement the results of the phase-consistency analysis, we estimated the median duration of IUs (Fig. 2, insets). The bootstrapped 95% confidence intervals of this estimate largely overlap, at a resolution of 0.1 s, for all languages but Hebrew. For Hebrew, the median estimate indicates a shorter IU duration, which may underlie a faster rhythm of IU sequences. Note, however, that duration is only a proxy for the rhythmicity of IU sequences, as IUs do not always succeed each other without a pause (Fig. S2, insets). We find the consistent trends in the two analyses reassuring, but we do not pursue the post-hoc hypothesis that Hebrew deviates from the other languages. In the planned phase-consistency analysis, the range of significant frequency components is consistent across languages.
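The duration estimates shown in the Figure 2 insets can be approximated with the following Python sketch, assuming NumPy and a flat array of IU durations in seconds pooled across speakers. We use a simple percentile bootstrap for the confidence interval of the median; this is a stand-in, and the interval method actually used (ref. 43 also covers bias-corrected variants) may differ. The 50-ms binning follows the figure caption; the function names are hypothetical.

```python
import numpy as np


def median_with_bootstrap_ci(durations_s, n_boot=2000, alpha=0.05, seed=0):
    """Median IU duration and a percentile-bootstrap (1 - alpha) CI."""
    d = np.asarray(durations_s, dtype=float)
    rng = np.random.default_rng(seed)
    # Resample durations with replacement and record each resample's median.
    boot = np.array([np.median(rng.choice(d, size=d.size)) for _ in range(n_boot)])
    lo, hi = np.quantile(boot, [alpha / 2, 1 - alpha / 2])
    return np.median(d), (lo, hi)


def duration_distribution(durations_s, bin_s=0.05):
    """Probability of IU durations in fixed-width bins (50 ms by default)."""
    d = np.asarray(durations_s, dtype=float)
    n_bins = int(np.ceil(d.max() / bin_s)) + 1  # extra bin guards against rounding
    edges = np.arange(n_bins + 1) * bin_s
    counts, _ = np.histogram(d, bins=edges)
    return counts / counts.sum(), edges
```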

We sought to confirm that this effect was not the result of an amplitude transient at the beginning of IU sequences, or of pauses in the recording, which affect the stationarity of the signal and may bias its spectral characterization. To this end, we repeated the analysis, submitting only IUs that followed an inter-IU interval below 1 s, that is, between 65 and 89% of the data, depending on the language (Table 1). The consistency estimates at 1 Hz were still larger than expected under the null hypothesis that IUs lack a definite rhythmic structure (Fig. S2).
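The selection step of this control analysis can be sketched as follows, assuming NumPy and per-recording arrays of IU onset and offset times sorted by onset. Two choices are ours, not the text's: the inter-IU interval is measured from the latest preceding offset to the current onset (so overlapping IUs from different speakers yield a negative interval and are retained), and the first IU of a recording, which has no predecessor, is excluded. The returned fraction corresponds to the share of data retained; the function name is hypothetical.

```python
import numpy as np


def onsets_after_short_gaps(onsets_s, offsets_s, max_gap_s=1.0):
    """Onsets of IUs preceded by an inter-IU interval below `max_gap_s`.

    IUs must be sorted by onset. Returns the kept onsets and the kept fraction."""
    on = np.asarray(onsets_s, dtype=float)
    off = np.asarray(offsets_s, dtype=float)
    latest_off = np.maximum.accumulate(off)[:-1]  # end of speech so far
    gaps = on[1:] - latest_off
    keep = np.concatenate([[False], gaps < max_gap_s])  # first IU: no predecessor
    return on[keep], keep.mean()
```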

Our results are consistent with preliminary characterizations of the temporal structure of IUs17,21,44. The direct time measurements we used avoid the pitfalls of length measurements based on word or syllable counts (e.g.9,10,17,23). The temporal structure of IUs cannot be inferred from reported word counts, because what constitutes a word varies greatly across languages (Box 2). Syllable count per IU may provide an indirect estimate of IU length, especially if variation in syllable duration is taken into account (e.g.45), but it does not capture information about the temporal structure of IU sequences.

Discussion

Neuroscientific studies suggest that neural oscillations participate in segmenting the auditory signal and encoding linguistic units during speech perception. Many studies focus on the role of oscillations in the theta range (~ 5 Hz) and in the gamma range (> 40 Hz). The levels of segmentation attributed to these ranges are the syllable level and the fine-grained encoding of phonetic detail, respectively1. Studies have also identified slower oscillations, in the delta range (~ 1 Hz), and have attributed them to segmentation at the level of phrases, both prosodic and formal, such as semantic/syntactic phrases2,3,46,47,48,49,50,51. Previous studies have consistently demonstrated a decrease in high-frequency neural activity at points of semantic/syntactic completion52 or at natural pauses between phrases53. This pattern of activity yields a slow modulation aligned to phrase structure. We harness linguistic theory to offer a conceptual framework for such slow modulations. We quantify the phase consistency between slow acoustic modulations and IU onsets, and demonstrate for the first time that prosodic units with established functions in cognition give rise to a low-frequency rhythm in the auditory signal available to listeners.

A previous study identified low-frequency modulations in speech acoustics. Analyzing a representation of speech acoustics similar to the speech envelope used here, Tilsen and Arvaniti54 provided a linguistically informed interpretation, associating these low-frequency modulations with stress elements of speech. There are two important differences between the present study and Tilsen and Arvaniti54. First, the current work directly interrogates the temporal structure of a linguistically labeled construct, the IU. Second, prosodic prominence structure and IUs contrast on an important issue: while prominence structure differs between languages55, IUs are conceptually universal10. By characterizing the temporal structure of IUs, we contribute empirical evidence to the conceptual notion of universality. The temporal structure found in the speech envelope around labeled IUs is common to languages with dramatically different prosodic systems (for example, Papuan Malay and Yali in the current work). Moreover, unlike IUs, prominence is marked by different phonetic cues in different languages, so any acoustic analysis focusing on the acoustic correlates of prominence is bound to find cross-linguistic differences.

Previous research in cognitive neuroscience has proposed dissociating delta activity that represents acoustically driven segmentation following prosodic phrases from delta activity that represents knowledge-based segmentation of semantic/syntactic phrases56. From the perspective of studying the temporal structure of spontaneous speech, we suggest that the distinction maintained between semantic/syntactic and prosodic phrasing might be superficial, because semantic/syntactic building blocks always appear within prosodic phrases in natural language use57,58,59,60. Studies investigating semantic/syntactic building blocks often compare the temporal dynamics of intact grammatical structure to word lists or to grammatical structure in an unfamiliar language (e.g.46,49,52). We argue that such studies need to consider the possibility that ongoing processing dynamics reflect perceptual chunking, owing to the ubiquity of prosodic segmentation cues in natural language experience. This possibility is further supported by the finding that theoretically defined semantic/syntactic boundaries enhance the perception of prosodic boundaries, even when those boundaries are artificially removed from the speech segment. In a study that investigated the role of syntactic structure in guiding the perception of prosody in naturalistic speech61, syntactic structure was found to make an independent contribution to the perception of prosodic grouping. Another study experimentally equated prosodic boundary strength (parametrically controlling word duration, pitch contour, and following-pause duration) and found the same result: semantic/syntactic completion contributed to boundary perception62. Even studies that use visual serial word presentation paradigms rather than auditory stimuli are not immune to an interpretation in terms of prosodically guided perceptual chunking, which is known to affect silent reading63 (for a review, see64).

Independently of whether delta activity in the listener's brain represents acoustic landmarks, abstract knowledge, or the prosodically mediated embodiment of abstract knowledge58, our results point to another putative role for slow rhythmic brain activity. We find that, regardless of grammatical system, speaker, or speech mode, speakers express their developing ideas at a rate of approximately 1 Hz. Previous studies have shown that in the brains of listeners, a wide network interacts with low-frequency auditory-tracking activity, suggesting an interface of prediction- and attention-related processes, memory, and the language system2,50,65,66,67. We expect that, via such low-frequency interactions, this same network constrains spontaneous speech production, orchestrating the management and communication of conceptual foci9.

Finally, our findings render plausible several hypotheses within the field of linguistics. At a basic level, the consistent duration of IUs may provide a temporal upper bound to the construal of other linguistic units (e.g., morphosyntactic words).

Data availability

The custom-written code producing the analyses and figures is available online in an Open Science Framework repository: https://osf.io/eh3y8/?view_only=6bc102a233914a4db54001345bee944c. As for data, IU time stamps for Hebrew and English can be obtained from the authors upon request. IU time stamps for the remaining languages, as well as all audio files, can be retrieved from the cited corpora.

References

Giraud, A.-L. & Poeppel, D. Cortical oscillations and speech processing: Emerging computational principles and operations. Nat. Neurosci. 15, 511–517 (2012).

Park, H., Ince, R. A. A., Schyns, P. G., Thut, G. & Gross, J. Frontal top-down signals increase coupling of auditory low-frequency oscillations to continuous speech in human listeners. Curr. Biol. 25, 1649–1653 (2015).

Gross, J. et al. Speech rhythms and multiplexed oscillatory sensory coding in the human brain. PLoS Biol. 11, e1001752 (2013).

Ding, N. et al. Temporal modulations in speech and music. Neurosci. Biobehav. Rev. https://doi.org/10.1016/j.neubiorev.2017.02.011 (2017).

Räsänen, O., Doyle, G. & Frank, M. C. Pre-linguistic segmentation of speech into syllable-like units. Cognition 171, 130–150 (2018).

Varnet, L., Ortiz-Barajas, M. C., Erra, R. G., Gervain, J. & Lorenzi, C. A cross-linguistic study of speech modulation spectra. J. Acoust. Soc. Am. 142, 1976–1989 (2017).

Greenberg, S., Carvey, H., Hitchcock, L. & Chang, S. Temporal properties of spontaneous speech—A syllable-centric perspective. J. Phon. 31, 465–485 (2003).

Chandrasekaran, C., Trubanova, A., Stillittano, S., Caplier, A. & Ghazanfar, A. A. The natural statistics of audiovisual speech. PLoS Comput. Biol. 5, e1000436 (2009).

Chafe, W. Discourse, Consciousness and Time: The Flow and Displacement of Conscious Experience in Speaking and Writing (University of Chicago Press, Chicago, 1994).

Himmelmann, N. P., Sandler, M., Strunk, J. & Unterladstetter, V. On the universality of intonational phrases: A cross-linguistic interrater study. Phonology 35, 207–245 (2018).

Du Bois, J. W. et al. Santa Barbara Corpus of Spoken American English, Parts 1–4. https://www.linguistics.ucsb.edu/research/santa-barbara-corpus (2005).

Du Bois, J. W., Cumming, S., Schuetze-Coburn, S. & Paolino, D. Discourse Transcription Santa Barbara Papers in Linguistics (University of California, Santa Barbara, 1992).

Shattuck-Hufnagel, S. & Turk, A. E. A prosody tutorial for investigators of auditory sentence processing. J. Psycholinguist. Res. 25, 193–246 (1996).

Cruttenden, A. Intonation (Cambridge University Press, Cambridge, 1997).

Seifart, F. et al. The Extent and Degree of Utterance-Final Word Lengthening in Spontaneous Speech from Ten Languages. Linguist. Vanguard (2020).

Keating, P., Cho, T., Fougeron, C. & Hsu, C.-S. Domain-initial articulatory strengthening in four languages. In Phonetic Interpretation: Papers in Laboratory Phonology VI (eds Local, J. et al.) 145–163 (Cambridge University Press, Cambridge, 2003).

Jun, S.-A. Prosodic typology. In Prosodic Typology: The Phonology of Intonation and Phrasing (ed. Jun, S.-A.) 430–458 (Oxford University Press, Oxford, 2005).

Ladd, D. R. Intonational Phonology (Cambridge University Press, Cambridge, 2008).

Selting, M. et al. A system for transcribing talk-in-interaction: GAT 2. Translated and adapted for English by Elizabeth Couper-Kuhlen and Dagmar Barth-Weingarten. Gesprächsforschung—Online-Zeitschrift zur verbalen Interaktion, vol. 12, 1–51 https://www.gespraechsforschung-ozs.de/heft2011/px-gat2-englisch.pdf (2011).

Halliday, M. A. K. Intonation and Grammar in British English (De Gruyter, Berlin, 1967).

Chafe, W. Cognitive constraints on information flow. In Coherence and Grounding in Discourse (ed. Tomlin, R. S.) 21–51 (John Benjamins Publishing Company, Amsterdam, 1987).

Du Bois, J. W. The discourse basis of ergativity. Language 63, 805–855 (1987).

Pawley, A. & Syder, F. H. The one-clause-at-a-time hypothesis. In Perspectives on Fluency (ed. Riggenbach, H.) 163–199 (University of Michigan Press, Ann Arbor, 2000).

Ono, T. & Thompson, S. A. What can conversation tell us about syntax? In Alternative Linguistics: Descriptive and Theoretical Modes (ed. Davis, P. W.) 213–272 (John Benjamins Publishing Company, Amsterdam, 1995).

Selting, M. Prosody in interaction: State of the art. In Prosody in interaction (eds Barth-Weingarten, D. et al.) 3–40 (John Benjamins Publishing Company, Amsterdam, 2010).

Szczepek-Reed, B. Intonation phrases in natural conversation: A participants’ category? In Prosody in Interaction (eds Barth-Weingarten, D. et al.) 191–212 (John Benjamins Publishing Company, Amsterdam, 2010).

Bögels, S. & Torreira, F. Listeners use intonational phrase boundaries to project turn ends in spoken interaction. J. Phon. 52, 46–57 (2015).

Ford, C. E. & Thompson, S. A. Interactional units in conversation: Syntactic, intonational, and pragmatic resources for the management of turns. In Interaction and Grammar (eds Ochs, E. et al.) 134–184 (Cambridge University Press, Cambridge, 1996).

Gravano, A. & Hirschberg, J. Turn-taking cues in task-oriented dialogue. Comput. Speech Lang. 25, 601–634 (2011).

Mondada, L. Multiple temporalities of language and body in interaction: Challenges for transcribing multimodality. Res. Lang. Soc. Interact. 51, 85–106 (2018).

Haspelmath, M. The indeterminacy of word segmentation and the nature of morphology and syntax. Folia Linguist. 45, 31–80 (2011).

Chafe, W. A Grammar of the Seneca Language (University of California Press, Berkeley, 2015).

Haspelmath, M. Pre-established categories don’t exist: Consequences for language description and typology. Linguist. Typol. 11, 119–132 (2007).

Evans, N. & Levinson, S. C. The myth of language universals: Language diversity and its importance for cognitive science. Behav. Brain Sci. 32, 429–448 (2009).

Maschler, Y. et al. The Haifa Corpus of Spoken Hebrew. https://weblx2.haifa.ac.il/~corpus/corpus_website/ (2017).

Kibrik, A. A. et al. Russian Multichannel Discourse. https://multidiscourse.ru/main/?en=1 (2018).

Himmelmann, N. P. & Riesberg, S. The DoBeS Summits-PAGE Collection of Papuan Malay 2012–2016. https://hdl.handle.net/1839/00-0000-0000-0019-FF78-5 (2016).

Kirihio, J. K. et al. The DoBeS Wooi Documentation 2009–2015. https://hdl.handle.net/1839/00-0000-0000-0014-C76C-1 (2015).

Riesberg, S., Walianggen, K. & Zöllner, S. The DoBeS Yali Documentation 2012–2016. https://hdl.handle.net/1839/00-0000-0000-0017-EA2D-D (2016).

Vinck, M., van Wingerden, M., Womelsdorf, T., Fries, P. & Pennartz, C. M. A. The pairwise phase consistency: A bias-free measure of rhythmic neuronal synchronization. Neuroimage 51, 112–122 (2010).

Genovese, C. R., Lazar, N. A. & Nichols, T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15, 870–878 (2002).

Benjamini, Y. & Yekutieli, D. The control of the false discovery rate in multiple testing under dependency. Ann. Stat. 29, 1165–1188 (2001).

DiCiccio, T. J. & Efron, B. Bootstrap confidence intervals. Stat. Sci. 11, 189–228 (1996).

Chafe, W. Thought-based Linguistics (Cambridge University Press, Cambridge, 2018).

Silber-Varod, V. & Levy, T. Intonation unit size in spontaneous Hebrew: Gender and channel differences. In Proceedings of the 7th International Conference on Speech Prosody, 658–662 (2014).

Ding, N., Melloni, L., Zhang, H., Tian, X. & Poeppel, D. Cortical tracking of hierarchical linguistic structures in connected speech. Nat. Neurosci. 19, 158–164 (2016).

Meyer, L., Henry, M. J., Gaston, P., Schmuck, N. & Friederici, A. D. Linguistic bias modulates interpretation of speech via neural delta-band oscillations. Cereb. Cortex 27, 4293–4302 (2016).

Bourguignon, M. et al. The pace of prosodic phrasing couples the listener’s cortex to the reader’s voice. Hum. Brain Mapp. 34, 314–326 (2013).

Bonhage, C. E., Meyer, L., Gruber, T., Friederici, A. D. & Mueller, J. L. Oscillatory EEG dynamics underlying automatic chunking during sentence processing. Neuroimage 152, 647–657 (2017).

Keitel, A., Ince, R. A. A., Gross, J. & Kayser, C. Auditory cortical delta-entrainment interacts with oscillatory power in multiple fronto-parietal networks. Neuroimage 147, 32–42 (2017).

Teng, X. et al. Constrained structure of Ancient Chinese poetry facilitates speech content grouping. Curr. Biol. 30, 1299–1305 (2020).

Nelson, M. J. et al. Neurophysiological dynamics of phrase-structure building during sentence processing. Proc. Natl. Acad. Sci. 114, E3669–E3678 (2017).

Hamilton, L. S., Edwards, E. & Chang, E. F. A spatial map of onset and sustained responses to speech in the human Superior Temporal Gyrus. Curr. Biol. 28, 1860–1871 (2018).

Tilsen, S. & Arvaniti, A. Speech rhythm analysis with decomposition of the amplitude envelope: Characterizing rhythmic patterns within and across languages. J. Acoust. Soc. Am. 134, 628–639 (2013).

Hayes, B. Diagnosing stress patterns. In Metrical Stress Theory: Principles and Case Studies (ed. Hayes, B.) 5–23 (University of Chicago Press, Chicago, 1995).

Meyer, L. The neural oscillations of speech processing and language comprehension: State of the art and emerging mechanisms. Eur. J. Neurosci. 48, 1–13 (2017).

Hopper, P. J. Emergent grammar. In Proceedings of the Thirteenth Annual Meeting of the Berkeley Linguistics Society, vol. 13, 139–157 (1987).

Kreiner, H. & Eviatar, Z. The missing link in the embodiment of syntax: Prosody. Brain Lang. 137, 91–102 (2014).

Mithun, M. Re(e)volving complexity: Adding intonation. In Syntactic Complexity: Diachrony, Acquisition, Neuro-cognition, Evolution (eds Givón, T. & Shibatani, M.) 53–80 (John Benjamins Publishing Company, Amsterdam, 2009).

Auer, P., Couper-Kuhlen, E. & Müller, F. The study of rhythm: Retemporalizing the detemporalized object of linguistic research. In Language in Time: The Rhythm and Tempo of Spoken Interaction 3–34 (Oxford University Press, Oxford, 1999).

Cole, J., Mo, Y. & Baek, S. The role of syntactic structure in guiding prosody perception with ordinary listeners and everyday speech. Lang. Cogn. Process. 25, 1141–1177 (2010).

Buxó-Lugo, A. & Watson, D. G. Evidence for the influence of syntax on prosodic parsing. J. Mem. Lang. 90, 1–13 (2016).

Fodor, J. D. Learning to parse? J. Psycholinguist. Res. 27, 285–319 (1998).

Breen, M. Empirical investigations of the role of implicit prosody in sentence processing. Lang. Linguist. Compass 8, 37–50 (2014).

Kayser, S. J., Ince, R. A. A., Gross, J. & Kayser, C. Irregular speech rate dissociates auditory cortical entrainment, evoked responses, and frontal alpha. J. Neurosci. 35, 14691–14701 (2015).

Schroeder, C. E. & Lakatos, P. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 32, 9–18 (2009).

Piai, V. et al. Direct brain recordings reveal hippocampal rhythm underpinnings of language processing. Proc. Natl. Acad. Sci. 113, 11366–11371 (2016).

Acknowledgements

M.I. is supported by the Humanities Fund PhD program in Linguistics and the Jack, Joseph and Morton Mandel School for Advanced Studies in the Humanities. Our work would not have been possible without the substantial efforts carried out by the creators of the corpora, their teams, and the people they recorded all over the world.

Author information

Contributions

This work is an outcome of M.I.’s MA thesis, jointly supervised by A.N.L. and E.G. All authors were actively involved in all stages of this research and its publication.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Inbar, M., Grossman, E. & Landau, A.N. Sequences of Intonation Units form a ~ 1 Hz rhythm. Sci Rep 10, 15846 (2020). https://doi.org/10.1038/s41598-020-72739-4

DOI: https://doi.org/10.1038/s41598-020-72739-4