Abstract

The concept of entropy connects the number of possible configurations with the number of variables in large stochastic systems. Independent or weakly interacting variables make the number of configurations scale exponentially with the number of variables, rendering the Boltzmann–Gibbs–Shannon entropy extensive. In systems with strongly interacting variables, or with variables driven by history-dependent dynamics, this is no longer true. Here we show that, contrary to the generally held belief, not only strong correlations or history dependence but also a sufficiently skewed distribution of visiting probabilities, that is, first-order statistics, play a role in determining the relation between configuration space size and system size, or, equivalently, the extensive form of generalized entropy. We present a macroscopic formalism describing this interplay between first-order statistics, higher-order statistics, and configuration space growth. We demonstrate that knowing any two strongly restricts the possibilities for the third. We believe that this unified macroscopic picture of the mechanisms constraining emergent degrees of freedom provides a step towards finding order in the zoo of strongly interacting complex systems.


Introduction

Today, with developing digital technologies and the increasing amount of collected data reinforcing each other, there is an ever-increasing need and opportunity to understand and control complex biological, social, or technological systems1,2,3,4,5. The hallmark of such systems is that their global behavior emerges from a large number of stochastic variables interacting in a non-trivial way6,7,8,9. A useful level of description is provided by (generalized) statistical mechanics, an effort to identify relations between relevant observable summary statistics of stochastic dynamics over configuration space. In many cases, the microscopic dynamical rules governing the system are not known; instead, their effects on first-order and higher-order statistics over configuration space form the basis of understanding. (By first-order statistics we mean visiting probabilities, whereas higher-order statistics refer to spatial and temporal correlations.) A first classification of all systems is given by the mere size of the configuration space W as a function of the number of microscopic variables N. This classification is also necessary for system-size-independent modeling. In the absence of interactions, W(N) grows exponentially, as the joint distribution over microscopic variables factorizes. Non-trivial joint distributions, however, result in non-trivial restrictions on configuration space and possibly non-exponential scaling of W(N). Such systems are labelled non-extensive. In this paper, we factor the sources of non-extensivity into first-order and higher-order statistical properties of the joint distribution over microscopic variables. Higher-order statistics, however complicated, are classified effectively by generalized entropies. Generalized entropies model correlations indirectly: the specific entropic form that scales with system size (i.e., is extensive, \(S\sim N\)) tells us which class the system itself belongs to.

A variety of generalized entropic functionals have been introduced to phenomenologically extend statistical mechanics to specific non-ergodic or strongly interacting systems, both within and outside the realm of physics including spin-like systems9,10,11, cosmic ray energy spectra12, multifractals13, networks14, quantum information15,16,17, special relativity18, anomalous diffusive processes19,20,21,22,23, superstatistics24,25, time series analysis26,27,28 and artificial neural networks29.

The diversity of proposed entropic functionals reflects the conceptual diversity of the underlying assumptions, all of which lead, in weakly correlated systems, to the same mathematical form, the Boltzmann–Gibbs–Shannon entropy \(S_{\text {BGS}}=\sum _{i=1}^W - p_i \ln p_i\). In particular, arguments relying on thermodynamics, statistical mechanics, dynamical systems, information theory, and statistics all provide means to derive \(S_{\text {BGS}}\) as a useful measure, and they all provide different means to generalize it2,18,30,31,32,33,34,35,36,37,38,39. Here we do not commit to any of these conceptual frameworks; instead, following the work by Hanel and Thurner in Ref.30, we rely on an axiomatic characterization of generalized entropies based on the Shannon–Khinchin axioms SK1–SK440. We assume that most of the relevant generalized entropic forms can be written as a sum of a pointwise function g over probabilities,

\[S_g[p]=\sum_{i=1}^{W} g(p_i), \tag{1}\]

with a notable exception being the class of Rényi entropies, \(S_{\text{Rényi}}=\frac{1}{1-\alpha }\log \sum _{i=1}^W p_i^{\alpha }\). Under this assumption, axioms SK1–SK4 regarding \(S_g[p]\) translate into the language of the entropic kernel g(p). Prescribing all of SK1–SK4 uniquely determines g to be proportional to the Boltzmann–Gibbs–Shannon kernel, \(g_{\text {BGS}}=-p \ln p\). A surprisingly rich phenomenology of possible generalizations to non-extensive systems can be achieved by discarding the only SK axiom that prescribes the resulting entropy to be additive, namely, the decomposability axiom SK4, \(S[p_{AB}]=\langle S[p_{A|B}] \rangle _B + S[p_B]\), which expresses that the entropy of a joint distribution \(p_{AB}\) can be decomposed into the expected entropy of the conditional distribution \(p_{A|B}\) and the entropy of the marginal \(p_B\).
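What discarding SK4 means can be checked numerically. The plain-Python sketch below computes the decomposability gap \(S[p_{AB}]-\left(\langle S[p_{A|B}]\rangle_B+S[p_B]\right)\) for the BGS kernel and for a Tsallis kernel with q = 0.5. The 2×2 joint distribution is a hypothetical example chosen for illustration, not taken from the text.

```python
import math


def s_bgs(p):
    """Boltzmann-Gibbs-Shannon entropy -sum p ln p (zero probabilities skipped)."""
    return -sum(x * math.log(x) for x in p if x > 0)


def s_tsallis(p, q):
    """Tsallis entropy with kernel g_q(p) = (p - p**q) / (q - 1)."""
    return sum((x - x ** q) / (q - 1.0) for x in p if x > 0)


def sk4_gap(joint, entropy):
    """S[p_AB] - (<S[p_A|B]>_B + S[p_B]) for a table joint[a][b].

    The gap vanishes for every joint distribution iff `entropy` obeys SK4.
    """
    n_a, n_b = len(joint), len(joint[0])
    p_b = [sum(joint[a][b] for a in range(n_a)) for b in range(n_b)]
    flat = [joint[a][b] for a in range(n_a) for b in range(n_b)]
    expected_conditional = 0.0
    for b in range(n_b):
        if p_b[b] > 0:
            conditional = [joint[a][b] / p_b[b] for a in range(n_a)]
            expected_conditional += p_b[b] * entropy(conditional)
    return entropy(flat) - (expected_conditional + entropy(p_b))


# Hypothetical correlated joint distribution of two binary variables A and B.
joint = [[0.30, 0.05],
         [0.10, 0.55]]

print(sk4_gap(joint, s_bgs))                         # zero up to rounding
print(sk4_gap(joint, lambda p: s_tsallis(p, 0.5)))   # clearly nonzero
```

The BGS gap vanishes for any joint table (the chain rule of Shannon entropy), whereas the Tsallis gap does not; this is the sense in which Tsallis entropies give up SK4.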

Interestingly, assuming equiprobable configurations (\(p_i\equiv W^{-1}\)), all possible entropies obeying SK1–SK3 follow the asymptotic scaling law

\[\lim_{W\rightarrow\infty}\frac{S_g(\lambda W)}{S_g(W)}=\lambda^{1-c},\qquad S_g(W)=W\,g\!\left(\frac{1}{W}\right), \tag{2}\]

with Hanel–Thurner (H–T) exponent \(0 < c \le 1\)30. A particularly simple representative of each possible asymptotic non-extensivity class is given by the one-parameter family of Tsallis entropies41, \(g_{q}(p)=\frac{p-p^q}{q-1}\), with \(g_q\) (\(0<q\le 1\)) belonging to the class \(c=q\) and recovering \(g_{\text {BGS}}\) (class \(c=1\)) in the limit \(q\rightarrow 1\).
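A quick numerical sanity check of the Tsallis family, as a plain-Python sketch with illustrative function names: for equiprobable configurations, \(W g_q(1/W)=\frac{W^{1-q}-1}{1-q}\), and both the kernel and this entropy approach their BGS counterparts as \(q\rightarrow 1\).

```python
import math


def g_bgs(p):
    """BGS kernel g(p) = -p ln p."""
    return -p * math.log(p)


def g_tsallis(p, q):
    """Tsallis kernel g_q(p) = (p - p**q) / (q - 1), defined for q != 1."""
    return (p - p ** q) / (q - 1.0)


def s_tsallis_uniform(w, q):
    """S_q of W equiprobable states, W * g_q(1/W) = (W**(1-q) - 1) / (1 - q)."""
    return w * g_tsallis(1.0 / w, q)


# The kernel converges to the BGS kernel as q -> 1 ...
print(g_tsallis(0.3, 1.0 + 1e-6), g_bgs(0.3))
# ... and the uniform-state entropy converges to ln W.
print(s_tsallis_uniform(1000.0, 1.0 - 1e-6), math.log(1000.0))
```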

We note that the Hanel–Thurner exponent c has been introduced as part of a two-parameter asymptotic classification (c, d) of generalized entropies30, along with a representative entropic form \(S_{c,d}\) corresponding to each class. \(S_{c,d}\), however, is asymptotically non-equivalent to another two-parameter entropy, a generalization of that of Tsallis, \(S_{q,\delta }\)42. Indeed, the latter asymptotically scales as \(S_{q,\delta }(W)\sim W^{\delta (1-q)}\) when \(q<1\), belonging to the class \(c=1-\delta (1-q)\), \(d=0\).

Crucially, for any specific system, the functional form of the generalized entropy that is extensive maps any correlation structure beyond first-order statistics \(\{p_i\}\) to its global consequences: the scaling of configuration space W(N) with system size, classified by the H–T exponent c. In this paper, we unify this phenomenological description with the effect of first-order statistics to obtain a complete picture of the sources of non-extensive configuration space growth, factored into first-order and higher-order statistics. In particular, as entropies are invariant to relabeling of states \(i\leftrightarrow j\), they depend only on the fraction of states with probability p. We call this probability density over probabilities the density of states \(\rho (p)\), by analogy with statistical physics and condensed matter theory, where densities over log-probabilities play an important role.

The paper is organized as follows. The “Methods” section introduces the general formalism, the “Results” section discusses the role of specific density of states, and the Discussion summarizes the results. Detailed calculations are given in the Supplementary Information.

Methods

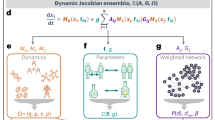

In this section, we develop a simple mathematical framework relating three central concepts of strongly correlated systems: first-order statistics, quantified by the density of states \(\rho (p)\); higher-order statistics, modeled by generalized entropic functionals \(S_g[p]\); and the scaling of configuration space with system size, W(N), classified by the H–T exponent c. Figure 1a illustrates the idea.

In doing so, we attempt to provide a step-by-step introduction to the logic of generalized statistical mechanics. Generalized statistical mechanics phenomenologically classifies all conceivable correlated systems by compressing all statistical dependences in the system’s stochastic dynamics into one relevant measure: the scaling of configuration space with system size. It uses a reverse logic: starting from the size of the configuration space W and prescribing the extensivity of the system-specific generalized entropic functional, one arrives at the system size N(W)43,44. Note that, based on observing (some statistics of) the dynamics over configuration space, this is a meaningful definition of system size, whereas the mere number of variables is not: think of a configuration space defined by many copies of the same variable (i.e., maximal mutual information between them). To avoid confusion, from now on we refer to N as the effective system size. Inverting (the asymptotics of) N(W) gives the system’s configuration space scaling W(N), classified by the H–T exponent c through Eq. (2). We complement this algorithmic recipe, depicted in Fig. 1c, with the missing ingredient, first-order statistics \(\rho (p)\), to yield a coherent picture of all possible sources of non-extensivity, factored into first-order and higher-order correlations.
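For equiprobable configurations, the recipe (W, then N(W) from extensivity, then W(N) and c) can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: the hypothetical helper `config_space_size` inverts the extensivity condition \(S_g(W)=N\) (taking the proportionality constant to be 1) by bisection in ln W, and `ht_exponent_from_growth` reads c off \(W(N)\sim N^{1/(1-c)}\).

```python
import math


def entropy_uniform(g, log_w):
    """S_g of W equiprobable states, W * g(1/W), with W = exp(log_w)."""
    w = math.exp(log_w)
    return w * g(1.0 / w)


def config_space_size(g, n, lo=0.0, hi=700.0, iters=200):
    """Return ln W(N) solving S_g(W) = N by bisection on [lo, hi] in ln W.

    Assumes S_g(W) increases monotonically with W on that interval.
    """
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if entropy_uniform(g, mid) < n:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def ht_exponent_from_growth(g, n1, n2):
    """Estimate c from W(N) ~ N**(1/(1-c)), i.e. c = 1 - d ln N / d ln W."""
    lw1 = config_space_size(g, n1)
    lw2 = config_space_size(g, n2)
    return 1.0 - math.log(n2 / n1) / (lw2 - lw1)


def g_bgs(p):
    """BGS kernel."""
    return -p * math.log(p)


def g_half(p):
    """Tsallis kernel with q = 0.5."""
    return (p - p ** 0.5) / (0.5 - 1.0)


print(math.exp(config_space_size(g_bgs, 5.0)))     # close to e**5
print(math.exp(config_space_size(g_half, 10.0)))   # close to (1 + 0.5 * 10)**2 = 36
print(ht_exponent_from_growth(g_half, 1e6, 1e7))   # close to 0.5
```

For the BGS kernel the estimate of c approaches 1 only slowly, because W(N) grows exponentially rather than as a power of N.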

Asymptotically, \(W\rightarrow \infty \), any generalized entropy \(S_g\) can be written as

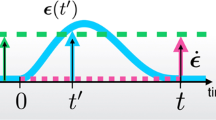

by grouping terms with the same probability in the sum, weighted by the density of states \(\rho \), visualized in Fig. 1b. Concavity of g, along with Jensen’s inequality, \(\left\langle g\left( p\right) \right\rangle _{\rho } \le g\left( \left\langle p \right\rangle _{\rho }\right) \), guarantees that \(S_g\) is maximal for the uniform distribution over states, \(\rho (p)=\delta (p-1/W)\) (see Supplementary Information).
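The maximality statement can be illustrated with a minimal plain-Python sketch (an illustration, not taken from the text): draw W unnormalized weights from an exponential distribution, normalize them so that \(\left\langle p\right\rangle_{\rho}=1/W\) holds exactly, and compare the resulting BGS entropy with the uniform value ln W. The seed and W are arbitrary choices.

```python
import math
import random


def bgs_entropy(p):
    """S_BGS = sum_i -p_i ln p_i, i.e. W <g_BGS(p)>_rho for the empirical rho."""
    return -sum(x * math.log(x) for x in p if x > 0)


W = 10_000
rng = random.Random(0)  # fixed seed so the sketch is reproducible
weights = [rng.expovariate(1.0) for _ in range(W)]
total = sum(weights)
p_broad = [x / total for x in weights]      # broadened density of states
p_uniform = [1.0 / W] * W                   # rho(p) = delta(p - 1/W)

print(sum(p_broad) / W, 1.0 / W)            # both densities have <p> = 1/W
print(bgs_entropy(p_broad), bgs_entropy(p_uniform), math.log(W))
```

For exponential-like weights, the broadened value lands near \(\ln W-\psi(2)\approx\ln W-0.42\), below the uniform maximum, consistent with the exponential density of states discussed in the Results.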

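The density of states \(\rho \) cannot be arbitrary, however: it is constrained by the normalization condition on p,

\[\sum_{i=1}^{W}p_i=W\int_0^1 p\,\rho(p)\,\mathrm{d}p=W\left\langle p\right\rangle_{\rho}=1, \tag{4}\]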
that is, the expected value of p under \(\rho \) is fixed to be 1/W, decreasing the dimension of the parameter space of \(\rho \) by 1. When needed, we emphasize this constraint by explicitly writing \(\rho (p|W)\). An additional technical difficulty stems from the fact that the support of \(\rho \) is bounded. In this paper, we choose densities of states that are bounded on [0, 1] but approach well-known distributions over a semi-infinite support as \(W\rightarrow \infty \), and consequently, as \(\left\langle p \right\rangle _{\rho }\rightarrow 0\).

The particular forms of \(\rho \) we consider, with detailed calculations in the “Results” section and in the Supplementary Information, are (1) a delta function \(\rho (p)=\delta (p-1/W)\), corresponding to a uniform distribution over states (microcanonical, MC); (2) a combination of multiple delta functions, describing multiple uniform domains in configuration space, possibly scaling differently with configuration space size W (multi-delta, MD); (3) a case in which a single state has macroscopic (non-vanishing) probability in the limit \(W\rightarrow \infty \) and all other states are equally probable (Bose–Einstein, BE); (4) an exponential density of states (exponential); (5) a log-gamma density of states that approaches a log-normal one (log-gamma); (6) a power law with exponent approaching \(-1\) (power law); and (7) a two-parameter family over [0, 1], the beta distribution, where we tune the one remaining free parameter to achieve a power-law tail with a tunable exponent (beta).

Figure 1. (a) Generalized entropies \(S_g\), providing a phenomenological classification of higher-order statistics over configuration space; density of states \(\rho (p)\), summarizing first-order statistics over configuration space; and configuration space scaling with system size, W(N). Knowledge of any two strongly restricts the possibilities for the third. (b) Computation of entropy \(S_g\), corresponding to the shaded area, based on kernel g and density of states \(\rho \). In this example, \(g=g_{\text {BGS}}=-p\ln p\), \(\rho (p)\) is exponential, and the size of the configuration space is \(W=10^6\), corresponding to \(\left\langle p\right\rangle _{\rho } =W^{-1}=10^{-6}\). (c) Computational steps relating generalized entropy \(S_g\), density of states \(\rho \), configuration space scaling W(N), and Hanel–Thurner exponent c. Density of states and generalized entropies summarize first- and higher-order statistics over configuration space, respectively, whereas configuration space scaling and the H–T exponent classify complex systems based on how the available configuration space scales with effective system size N. Note that the starting point is the size of configuration space W; effective system size N is determined by leveraging extensivity of the system-specific generalized entropic form.

Configuration space scaling

A simple classification of all correlated systems can be given by assessing how the associated extensive generalized entropy (\(S_g\sim N\)) reacts to configuration space rescaling, \(W\rightarrow \lambda W\). This idea, consistent with the Shannon–Khinchin axiomatic foundations of information, is articulated in terms of the Hanel–Thurner (H–T) exponent c, defined in Eq. (2). Here we generalize H–T scaling to systems with arbitrary (not necessarily uniform) visiting probabilities over states as

\[R_{\lambda}=\lim_{W\rightarrow\infty}\frac{S_g[\rho(p|\lambda W)]}{S_g[\rho(p|W)]}=\lim_{W\rightarrow\infty}\frac{\lambda\left\langle g(p)\right\rangle_{\rho(p|\lambda W)}}{\left\langle g(p)\right\rangle_{\rho(p|W)}}\sim\lambda^{1-c}. \tag{5}\]
H–T scaling in case of a uniform distribution over configuration space is recovered by setting \(\rho (p|W)=\delta (p-1/W)\), simplifying Eq. (5) to \(R_{\lambda }=\lim _{W\rightarrow \infty }\lambda g\left( \frac{1}{\lambda W}\right) / g\left( \frac{1}{W}\right) \sim \lambda ^{1-c}, \) implying that g(p) scales as \(p^c\) as \(p\rightarrow 0^+\). For example, \(g_{\text {BGS}}=-p\ln p\sim p\), and \(g_q=\frac{p-p^q}{q-1}\sim p^q\) if \(0<q\le 1\), in agreement with \(S_{\text {BGS}}\) and \(S_q\) belonging to H–T class \(c=1\) and \(c=q\), respectively.
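For a finite but large W, the exponent c can be estimated directly from the ratio of entropies under \(W\rightarrow\lambda W\). The plain-Python sketch below does this for uniform configuration probabilities; `ht_exponent`, `s_uniform`, and `g_tsallis` are illustrative names, and λ = 10 is an arbitrary choice.

```python
import math


def ht_exponent(entropy_of_w, w, lam=10.0):
    """Finite-W estimate of c from R_lambda = S(lam W) / S(W) ~ lam**(1 - c)."""
    r = entropy_of_w(lam * w) / entropy_of_w(w)
    return 1.0 - math.log(r) / math.log(lam)


def s_uniform(g):
    """Entropy of W equiprobable states, S_g(W) = W g(1/W), as a function of W."""
    return lambda w: w * g(1.0 / w)


def g_bgs(p):
    """BGS kernel."""
    return -p * math.log(p)


def g_tsallis(q):
    """Return the Tsallis kernel g_q(p) = (p - p**q) / (q - 1) as a function of p."""
    return lambda p: (p - p ** q) / (q - 1.0)


print(ht_exponent(s_uniform(g_tsallis(0.5)), 1e12))  # close to q = 0.5
print(ht_exponent(s_uniform(g_bgs), 1e12))           # approaches 1, but only logarithmically
```

The slow convergence for BGS reflects that \(S_{\text{BGS}}=\ln W\) is not a power of W: the ratio \(\ln(\lambda W)/\ln W\) tends to 1 only logarithmically.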

In a general framework, however, non-extensivity, classified by the H–T exponent c, depends on both first-order and higher-order statistics, accounted for jointly by \(\rho \) and g. In particular, as the density of states \(\rho \) broadens (while still obeying the expected value constraint \(\left\langle p\right\rangle _{\rho } =W^{-1}\)), contributions to the total entropy come from configurations with a wider range of probabilities. Figure 2b shows the contribution of configurations with probability \(p<r\) to the total entropy, which we call cumulative entropy \(\Pi _g(r)\),

for specific combinations of density of states \(\rho \) and entropy kernels g.
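As an illustration of the cumulative entropy, the plain-Python sketch below evaluates the BGS contribution of configurations with p < r under the exponential density of states \(\rho(p)=We^{-Wp}\). It assumes the unnormalized definition \(\Pi_g(r)=W\int_0^r g(p)\rho(p)\,\mathrm{d}p\) (the absolute contribution to \(S_g\)); if a normalized version is intended, divide by \(\Pi_g(1)\). The substitution u = Wp and the cutoff at u = 60 are numerical choices of this sketch.

```python
import math


def cumulative_entropy_exponential(r, w, steps=100_000, u_cut=60.0):
    """Pi(r) = W * int_0^r (-p ln p) W exp(-W p) dp, by the midpoint rule in u = W p.

    With u = W p the integral becomes int_0^{W r} -u ln(u / W) exp(-u) du;
    the integrand is negligible beyond u_cut, so the range is capped there.
    """
    u_max = min(w * r, u_cut)
    h = u_max / steps
    total = 0.0
    for k in range(steps):
        u = (k + 0.5) * h
        total += -u * math.log(u / w) * math.exp(-u)
    return total * h


W = 2 ** 20
full = cumulative_entropy_exponential(1.0, W)
for r in (0.1 / W, 1.0 / W, 3.0 / W, 10.0 / W):
    print(r * W, cumulative_entropy_exponential(r, W) / full)  # fraction of S_BGS
```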

How does broadening of \(\rho \) affect the scaling of available configuration space W(N) and its classification given by the H–T exponent c? In the following, we perform exact calculations following the steps illustrated in Fig. 1c for specific densities of states \(\rho \) and entropy kernels g. We summarize the results in Table 1 for BGS entropy and in Table 2 for Tsallis entropies.

Results

In order to keep track of the consequences of changing the density of states \(\rho \) alone, we introduce the following nomenclature. We refer to the scaling of \(S_{g}=W\left\langle g(p)\right\rangle _{\rho }\) with W as regular if

with \(C_1\ne 0\), where \(\delta \) denotes uniform configuration probabilities over the sample space (microcanonical ensemble). Otherwise, the scaling is referred to as anomalous.

Uniform distribution over configuration space (microcanonical)

The simplest example is associated with the microcanonical ensemble, whose density of states is \(\rho (p)=\delta (p-1/W)\). This form enables us to express all generalized entropies analytically as

\[S_g=W\,g\!\left(\frac{1}{W}\right). \tag{8}\]
Based on this, the dependences \(S_{\text {BGS}}\sim \ln W\) and \(S_q\sim \frac{1-W^{1-q}}{q-1}\) of the Boltzmann–Gibbs–Shannon and Tsallis entropies, respectively, follow directly. Hence, extensivity of \(S_{\text {BGS}}\) is ensured by imposing exponential configuration space scaling \(W(N)\sim e^N\), whereas \(S_q\) is extensive under \(W(N)\sim N^{\frac{1}{1-q}}\) (for further details, see Tables 1 and 2).

As the upper bound of \(S_g\) is always attained by the microcanonical ensemble, this case corresponds to maximal disorder, in line with the SK2 maximality axiom.

Multiple uniform domains over configuration space (multi-delta)

As a straightforward generalization of the classical microcanonical ensemble, let us discuss a system whose phase space is decomposable into \(k+1\) disjoint sub-domains (e.g., \(k+1\) different sets of configurations). The volumes of these sub-domains, denoted by \(V_0(N),V_1(N),\ldots ,V_k(N)\), might scale differently with system size. We additionally assume that \(V_0(N)\) describes the scaling of a forbidden region, and that each configuration in the n-th set occurs with a probability proportional to the size \(\sim V_n\) of this population. Hence, the density of states with the correct pre-factors can simply be formulated as

where \(\sum ^k_{j=0}V_j(N)=W(N)\). In the case of \(k=1\), Eq. (9) reduces to

Despite its simplicity, this bimodal form has been pointed out to emerge naturally in various complex systems, such as confined binary decision trees21 or correlated spin systems9,45. In Ref.45, the authors discuss specific examples of composite systems, that is, complex systems composed of many identical but distinguishable sub-systems. They introduce a parameter \(\lambda \) quantifying the correlation in the system and discuss the behaviour of Tsallis entropy under non-equiprobable microstates (more precisely, over a multi-peaked density of states, with each peak corresponding to the distinguishable sub-systems). They establish analytical expressions for the Tsallis entropy as a function of \(\lambda \), \(S^{\lambda }_q\), and find that there always exists a pair \((\lambda , q)\) for which \(S^{\lambda }_q\) can be made extensive.

From a mathematical point of view, under the multi-delta \(\rho \) defined in Eq. (9), generalized entropies take the form of

where \(V^{*}=\max _j V_j\) defines the asymptotically leading term. Despite the obvious analogy between Eq. (8) and the last part of Eq. (11), the two expressions can differ considerably, e.g., in terms of non-extensivity classes. Next, we provide a higher-resolution picture of entropies defined under a multi-delta density.

The entropy form in Eq. (11) implicitly suggests that if a particular region \(V_0\) of the configuration space is forbidden, or the regions \(V_{i=1,\dots ,k}\ne V^{*}\) are rarely visited, then the generalized entropies are principally determined by the behaviour of the dominant term \(V^{*}\). This implies that extensivity is entirely encoded in the specific dependence \(V^{*}=V^{*}(W)\). For simplicity, hereinafter we assume that the volume of the leading sub-domain scales with the volume of the entire configuration space as \(V^{*}(W)\sim W^{\xi }\), yielding

where \(0<\xi \le 1\), since the leading sub-domain cannot be larger than the entire configuration space (see Supplementary Information). For the linear case (\(\xi =1\)), regular sample space scaling is recovered30; sub-linear dependence, however, has notable consequences in terms of the H–T scaling given in Eq. (2). Plugging \(V^{*}(W)\sim W^{\xi }\) into Eq. (5), i.e., keeping track of how entropies change under the rescaling of the configuration space volume, we obtain

where \(c=1-\xi +\xi q\). Compared to the microcanonical ensemble, where the Tsallis entropy \(S_q\) is extensive under \(W(N)\sim N^{\frac{1}{1-q}}\), here extensivity is satisfied if \(W(N)\sim N^{\frac{1}{1-c}}=N^{\frac{1}{\xi (1-q)}}\), which reduces to the microcanonical result for \(\xi =1\). For a detailed comparison of the scaling exponents under different forms of \(\rho \), see Tables 1 and 2.
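The relation \(c=1-\xi+\xi q\) can be checked numerically. This plain-Python sketch assumes, as a simplification, that the entropy is dominated by \(V^{*}=W^{\xi}\) equiprobable configurations, so that \(S_q\approx V^{*}g_q(1/V^{*})\), with all remaining configurations forbidden or negligible; ξ = 0.5 and q = 0.4 are arbitrary example values.

```python
import math


def s_tsallis_dominant(w, xi, q):
    """S_q ~ V* g_q(1/V*) for V* = W**xi equiprobable states (rest forbidden)."""
    v = w ** xi
    p = 1.0 / v
    return v * (p - p ** q) / (q - 1.0)


def ht_exponent(entropy_of_w, w, lam=10.0):
    """Finite-W estimate of c from S(lam W) / S(W) ~ lam**(1 - c)."""
    r = entropy_of_w(lam * w) / entropy_of_w(w)
    return 1.0 - math.log(r) / math.log(lam)


xi, q = 0.5, 0.4
c_est = ht_exponent(lambda w: s_tsallis_dominant(w, xi, q), 1e40)
print(c_est, 1.0 - xi + xi * q)                 # both close to 0.7


def w_of_n(n):
    """Configuration space size that makes S_q extensive: N**(1/(xi (1 - q)))."""
    return n ** (1.0 / (xi * (1.0 - q)))


# Extensivity check: doubling N doubles S_q.
print(s_tsallis_dominant(w_of_n(2e6), xi, q) / s_tsallis_dominant(w_of_n(1e6), xi, q))
```

Under the same assumption, the extensive growth law is \(W(N)\sim N^{1/(1-c)}=N^{1/(\xi(1-q))}\), which reduces to the microcanonical \(N^{1/(1-q)}\) at ξ = 1.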

Complex systems with \(V^{*}(N)\sim N^{\gamma }\), where \(\gamma >1\), are commonly reported in the literature9,44. This type of sub-exponential growth of the configuration space can often be attributed to the presence of forbidden configurations and, thus, to a decrease in the number of accessible states. Accordingly, the corresponding density of states is given by the bimodal form of \(\rho \) defined in Eq. (10). Owing to the unattainable part of the configuration space [described by the first term in Eq. (10)], the number of accessible microstates asymptotically scales as \(W_{\text {accessible}}\sim V^{*}(W)\sim \left( \ln W \right) ^{\gamma }\), in contrast with the regular exponential growth, where \(W_{\text {accessible}}\sim W\). This implies that BGS entropy is inadmissible, and that the associated extensive entropy has to differ from the one that is extensive in the microcanonical case. The inadmissibility of BGS entropy for systems with confined configuration spaces has already been discussed in slightly different contexts, e.g., in Refs.9,44.

A single macroscopic state (Bose–Einstein)

In this example, we consider a system comprising a single state with a macroscopically large probability, \(p_1=1-\frac{1}{W-1}\), together with \(W-1\) equiprobable further states with probabilities \(p_{i=2,\dots ,W}=\frac{1}{(W-1)^2}\). By analogy with a Bose–Einstein condensate, we refer to this system as Bose–Einstein and write the corresponding density of states as

\[\rho(p)=\frac{1}{W}\,\delta\!\left(p-1+\frac{1}{W-1}\right)+\frac{W-1}{W}\,\delta\!\left(p-\frac{1}{(W-1)^{2}}\right). \tag{14}\]
Using this, generalized entropies can be expressed analytically as

\[S_g=g\!\left(1-\frac{1}{W-1}\right)+(W-1)\,g\!\left(\frac{1}{(W-1)^{2}}\right). \tag{15}\]
Note that Eq. (15) differs greatly from its microcanonical analogue, Eq. (8), revealing that even a few configurations with macroscopic probabilities can significantly alter the behaviour predicted by the microcanonical ensemble.

A system characterized by the density of states in Eq. (14) displays strong heterogeneity in its configurations, as the variance of configuration probabilities decays linearly with the expected value, \(\sigma ^2{\mathop {\sim }\limits ^{W\rightarrow \infty }}\left\langle p\right\rangle \sim W^{-1}\), contrary to the microcanonical picture, where \(\sigma ^2=0\). Due to the presence of macroscopically large weights, entropic forms receive non-zero contributions from \(p\approx 1\); therefore, H–T scaling is given by

\[R_{\lambda}\sim\lambda^{1-c}, \tag{16}\]

where \(c=2\) for BGS and \(c=2q\) for Tsallis entropies (see Supplementary Information). Note that in both cases the exponent c is larger than in the microcanonical case. Hence, this example illustrates how H–T scaling changes as \(\rho (p)\) broadens. As a further consequence, under this density of states, Tsallis entropy with deformation parameter \(q<\frac{1}{2}\) is extensive if \(W(N)\sim N^{\frac{1}{1-2q}}\); for \(q>\frac{1}{2}\), however, extensivity of \(S_q\) surprisingly requires W(N) to be monotonically decreasing (see Tables 1 and 2).
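The exponents c = 2 (BGS) and c = 2q (Tsallis) can be reproduced from \(S_g=g(p_1)+(W-1)\,g\big((W-1)^{-2}\big)\) with a short plain-Python sketch. The finite W = 10^12, λ = 10, and the q values are arbitrary, and the BGS estimate carries a slowly vanishing logarithmic correction.

```python
import math


def s_bgs_be(w):
    """S_BGS for p_1 = 1 - 1/(W-1) plus W-1 states of probability 1/(W-1)**2."""
    eps = 1.0 / (w - 1.0)
    macroscopic = -(1.0 - eps) * math.log1p(-eps)          # -p_1 ln p_1
    rest = (w - 1.0) * eps ** 2 * (-math.log(eps ** 2))   # (W-1) * (-p ln p)
    return macroscopic + rest


def s_tsallis_be(w, q):
    """S_q = g_q(p_1) + (W-1) g_q(1/(W-1)**2) with g_q(p) = (p - p**q)/(q - 1)."""
    eps = 1.0 / (w - 1.0)

    def g(p):
        return (p - p ** q) / (q - 1.0)

    return g(1.0 - eps) + (w - 1.0) * g(eps ** 2)


def ht_exponent(entropy_of_w, w, lam=10.0):
    """Finite-W estimate of c from S(lam W) / S(W) ~ lam**(1 - c)."""
    r = entropy_of_w(lam * w) / entropy_of_w(w)
    return 1.0 - math.log(r) / math.log(lam)


print(ht_exponent(s_bgs_be, 1e12))                          # close to 2
print(ht_exponent(lambda w: s_tsallis_be(w, 0.3), 1e12))    # close to 2q = 0.6
print(s_tsallis_be(1e8, 0.8), s_tsallis_be(1e12, 0.8))      # decreasing in W for q > 1/2
```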

Exponential density of states (exponential)

Another characteristic type of decay is described by an exponential density of states, simply expressed as

\[\rho(p)=W e^{-Wp}. \tag{17}\]
Although this density function satisfies the normalization condition only asymptotically, i.e., \(\int ^1_{0}\rho (p) {\mathrm{d}}p=1+{\mathscr{O}}(e^{-W})\), the corresponding approximation error decays rapidly with W. Hence, the expected value of the above exponential form can safely be approximated by \(\left\langle p\right\rangle _{\rho }= \frac{1}{W}+{\mathscr{O}}(e^{-W})\), in accordance with the constraints imposed in the “Methods” section. The Boltzmann–Gibbs–Shannon entropy in this case takes the form

\[S_{\text{BGS}}\simeq\ln W-\psi(2), \tag{18}\]
while the Tsallis entropy with deformation parameter \(q<1\) reads

\[S_q=\frac{\Gamma(1+q)\,W^{1-q}-1}{1-q}, \tag{19}\]
where the equality is obtained by neglecting asymptotically vanishing terms. Although the exponential density of states satisfies the necessary conditions only asymptotically, the closely related form \(\rho (p)=\left( W-1\right) \left( 1-p\right) ^{W-2}\), which approaches \(We^{-Wp}\) as \(W\rightarrow \infty \), has the nice property of fulfilling both the normalization and the expected value constraints exactly. For example, the BGS entropy in this case can be written as \(S_{\text {BGS}}=\psi (W+1)-\psi (2)\approx \ln W+\frac{1}{2W}-\psi (2)\), where \(\psi (x)=\frac{{\mathrm{d}} \ln \Gamma (x)}{{\mathrm{d}} x}\) denotes the digamma function.
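The closed forms for the exponential density of states can be cross-checked numerically. The plain-Python sketch below integrates \(W\int g(p)\,We^{-Wp}\,\mathrm{d}p\) with the midpoint rule in u = Wp (dropping the tail beyond u = 60, which is of order \(e^{-60}\)), and evaluates the exact Beta(1, W − 1) result through the standard identity \(\psi(W+1)-\psi(2)=H_W-1\) for integer W, where \(H_W\) is the W-th harmonic number.

```python
import math

EULER_GAMMA = 0.5772156649015329
PSI_2 = 1.0 - EULER_GAMMA  # digamma(2)


def s_numeric_exponential(g, w, u_max=60.0, steps=100_000):
    """W * int_0^1 g(p) W exp(-W p) dp, via u = W p: int W g(u/W) exp(-u) du."""
    h = u_max / steps
    return h * sum(w * g((k + 0.5) * h / w) * math.exp(-(k + 0.5) * h)
                   for k in range(steps))


def s_bgs_exponential(w):
    """Asymptotic closed form ln W - psi(2)."""
    return math.log(w) - PSI_2


def s_tsallis_exponential(w, q):
    """Asymptotic closed form (Gamma(1+q) W**(1-q) - 1) / (1 - q)."""
    return (math.gamma(1.0 + q) * w ** (1.0 - q) - 1.0) / (1.0 - q)


def s_bgs_beta_1(w):
    """Exact S_BGS for rho(p) = (W-1)(1-p)**(W-2): psi(W+1) - psi(2) = H_W - 1."""
    return sum(1.0 / k for k in range(1, w + 1)) - 1.0


W = 10 ** 5
print(s_numeric_exponential(lambda p: -p * math.log(p), W), s_bgs_exponential(W))
print(s_numeric_exponential(lambda p: (p - p ** 0.5) / (0.5 - 1.0), W),
      s_tsallis_exponential(W, 0.5))
print(s_bgs_beta_1(W) - s_bgs_exponential(W), 1.0 / (2 * W))  # the 1/(2W) correction
```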

The results above show that an exponentially decaying \(\rho (p)\) always results in regular scaling. In practice, this means that all results obtained for the microcanonical ensemble, including the H–T scaling properties, carry over to the exponential case without modification (see Tables 1 and 2).

Log-gamma density of states (log-gamma, limiting log-normal)

A density of states that approaches a log-normal one as \(W\rightarrow \infty \), yet is defined on the bounded support [0, 1], can be constructed as the distribution of the product of independent uniform variables on [0, 1]. We call this distribution log-gamma, since in log-transformed variables it is a sum of exponentially distributed variables, i.e., a gamma distribution. As the number of terms in the sum approaches infinity, the gamma distribution approaches a normal one; consequently, as the number of terms in the product approaches infinity, log-gamma approaches log-normal (for details, see Ref.46). Its tail on a log–log scale (approaching a quadratic function, interpolating between an exponential and a power-law tail) is shown in Fig. 2a. The log-gamma density of states is defined as46

\[\rho(p)=\frac{(-\ln p)^{w}}{\Gamma(w+1)},\qquad 0<p\le 1, \tag{20}\]
where \(w=\log _2 \left( W\right) -1\). Under this form of \(\rho \), BGS entropy reads (see Supplementary Information)

\[S_{\text{BGS}}=\frac{\log_2 W}{2}=\frac{\ln W}{2\ln 2}, \tag{21}\]
corresponding to a regular (logarithmic) scaling with W. In contrast, the Tsallis entropy surprisingly shows anomalous scaling for \(q\ne 1\),

\[S_q=\frac{W^{1-c}-1}{1-q}, \tag{22}\]
where \(c=\log _2 \left( 1+q\right) \ne q\). On the one hand, this means that the microcanonical and log-gamma densities of states are practically indistinguishable from the point of view of BGS entropy, up to a constant prefactor; on the other hand, it also implies that the H–T exponent \(c=\log _2 \left( 1+q\right) \) of Tsallis entropies no longer coincides with their deformation parameter q. Consequently, under a log-gamma density of states, \(S_q\) is extensive for systems where \(W(N)\sim N^\frac{1}{\log _2\left( \frac{2}{1+q}\right) }\). In Fig. 2c, we show how \(S_{q}\) scales with W as a function of the deformation parameter for both the microcanonical and the log-gamma density of states.
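The log-gamma results follow from the moments of a product of n = log2 W independent uniform variables, for which \(\langle p^{q}\rangle=(1+q)^{-n}\) and \(\langle -p\ln p\rangle=n\,2^{-(n+1)}\). The plain-Python sketch below uses these moments to estimate c, and adds a Monte Carlo check of the moment with a fixed seed; the sample size and parameter values are arbitrary choices.

```python
import math
import random


def s_tsallis_loggamma(w, q):
    """S_q = W <(p - p**q)/(q - 1)> with <p> = 1/W and <p**q> = (1+q)**(-log2 W)."""
    n = math.log2(w)
    return (1.0 - w * (1.0 + q) ** (-n)) / (q - 1.0)


def s_bgs_loggamma(w):
    """S_BGS = W <-p ln p> = W * n / 2**(n+1) = log2(W) / 2 (natural-log units)."""
    return math.log2(w) / 2.0


def mc_moment(n, q, samples=100_000, seed=1):
    """Monte Carlo estimate of <p**q> for p a product of n uniforms on [0, 1)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        p = 1.0
        for _ in range(n):
            p *= rng.random()
        total += p ** q
    return total / samples


q = 0.5
c = math.log2(1.0 + q)
W = 2.0 ** 60
lam = 2.0 ** 10
c_est = 1.0 - math.log(s_tsallis_loggamma(lam * W, q) / s_tsallis_loggamma(W, q)) / math.log(lam)
print(c_est, c)                                   # both close to log2(1.5)
print(mc_moment(10, q), (1.0 + q) ** -10)          # sampled vs exact moment
```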

As an illustrative example, consider a binary spin chain in which fixed groups of nearby spins tend to stick together, forming \(N_{\text {eff}}=\gamma \log _2N\) sub-domains (with \(\gamma >1\)), each of which behaves as a single spin variable. Due to the reduction of the degrees of freedom, the number of accessible microstates scales as \(V^{*}\sim 2^{N_{\text {eff}}}= 2^{\gamma \log _2N}=N^{\gamma }\). Configurations in which units of the same sub-domain point in opposite directions, however, are forbidden. We additionally assume that the probabilities associated with the allowed configurations, i.e., the probabilities of the sub-domains pointing strictly upward or downward, are not fixed but drawn from a uniform distribution on [0, 1]. This spin system realizes a density of states that can be formulated as a combination of multi-delta and log-gamma, namely

\[\rho(p)=\left(1-\frac{V^{*}}{W}\right)\delta(p)+\frac{V^{*}}{W}\,\frac{(-\ln p)^{N_{\text{eff}}-1}}{\Gamma(N_{\text{eff}})}, \tag{23}\]
where the first term describes forbidden configurations, while the second one emerges from the accessible configurations, mathematically corresponding to the distribution of the product of \(N_{\text {eff}}\) independent, uniformly distributed variables (for further details, see Supplementary Information). Note that BGS entropy is not extensive for this spin system, since \(S_{\text {BGS}}\sim \log _{2}N\). On the other hand, Tsallis entropy with \(q<1\) is asymptotically given by

\[S_q=\frac{\left(\frac{2}{1+q}\right)^{N_{\text{eff}}}-1}{1-q}=\frac{N^{\gamma\log_2\left(\frac{2}{1+q}\right)}-1}{1-q}, \tag{24}\]
which is therefore extensive if \(\gamma =\frac{1}{\log _2\left( \frac{2}{1+q}\right) }\), or, conversely, if \(q=2^{1-\frac{1}{\gamma }}-1\).
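The extensivity condition for the spin-chain example can be checked with a few lines of plain Python. The sketch assumes the model as stated: forbidden configurations contribute g(0) = 0, and the \(V^{*}=2^{N_{\text{eff}}}\) accessible ones follow log-gamma statistics with \(N_{\text{eff}}\) factors, which gives \(S_q=\left[\left(\frac{2}{1+q}\right)^{N_{\text{eff}}}-1\right]/(1-q)\). The value γ = 3 is an arbitrary example.

```python
import math


def tsallis_spin_chain(n, gamma, q):
    """S_q = ((2/(1+q))**N_eff - 1)/(1 - q) with N_eff = gamma * log2(N)."""
    n_eff = gamma * math.log2(n)
    return ((2.0 / (1.0 + q)) ** n_eff - 1.0) / (1.0 - q)


def extensive_q(gamma):
    """Deformation parameter making S_q extensive: q = 2**(1 - 1/gamma) - 1."""
    return 2.0 ** (1.0 - 1.0 / gamma) - 1.0


gamma = 3.0
q = extensive_q(gamma)
for n in (1e3, 1e6, 1e9):
    print(n, tsallis_spin_chain(n, gamma, q) * (1.0 - q))   # N - 1 up to rounding
```

With this choice of q, \(\left(\frac{2}{1+q}\right)^{N_{\text{eff}}}=N\) exactly, so \(S_q=(N-1)/(1-q)\) grows linearly in N.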

Power law density of states (power law)

Power-law-like decay implies a much larger heterogeneity over the configuration space than, e.g., the exponential density of states, and therefore might dramatically alter the dependence of entropies on W. To illustrate this, let us first define the corresponding density of states as

Surprisingly, this slow decay, characterized by a strong inhomogeneity of probabilities over the configuration space, yields anomalous scaling of both BGS and Tsallis entropies,

Note that BGS and Tsallis entropies remain finite even in the limit \(W\rightarrow \infty \); consequently, they cannot be made extensive, which precludes a thermodynamic description of the corresponding systems. More generally, we suggest that the impossibility of extensivity signals a lack of disorder and uncertainty.
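To see how a density with exponent −1 can keep entropies bounded, consider a hypothetical form chosen only for this sketch (not necessarily the density used in the text): \(\rho(p)=\frac{1}{Wp}\) on \([e^{-W},1]\), which is normalized and has \(\left\langle p\right\rangle_{\rho}=(1-e^{-W})/W\approx 1/W\). Substituting \(p=e^{-t}\) gives \(S_g=\int_0^{W}g(e^{-t})\,\mathrm{d}t\), so that \(S_{\text{BGS}}=1-(1+W)e^{-W}\rightarrow 1\) and \(S_q\rightarrow 1/q\) for \(0<q<1\). The plain-Python code compares these closed forms with a midpoint-rule integral.

```python
import math


def s_numeric_powerlaw(g, w, steps=100_000):
    """S_g = W int g(p) rho(p) dp for the hypothetical rho(p) = 1/(W p) on [e**-W, 1].

    With p = exp(-t), rho(p) dp = dt / W, so S_g = int_0^W g(exp(-t)) dt
    (midpoint rule).
    """
    h = w / steps
    return h * sum(g(math.exp(-(k + 0.5) * h)) for k in range(steps))


def s_bgs_powerlaw(w):
    """Closed form 1 - (1 + W) exp(-W), which tends to 1 as W grows."""
    return 1.0 - (1.0 + w) * math.exp(-w)


def s_tsallis_powerlaw(w, q):
    """Closed form [(1 - e**-W) - (1 - e**(-q W))/q] / (q - 1), tending to 1/q."""
    return ((1.0 - math.exp(-w)) - (1.0 - math.exp(-q * w)) / q) / (q - 1.0)


def g_bgs(p):
    """BGS kernel, with g(0) = 0 to handle underflow of exp(-t) at large t."""
    return -p * math.log(p) if p > 0 else 0.0


for w in (10.0, 100.0, 1000.0):
    print(w, s_numeric_powerlaw(g_bgs, w), s_bgs_powerlaw(w), s_tsallis_powerlaw(w, 0.5))
```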

Beta density of states (beta)

A particularly interesting example of first-order statistics is the Beta distribution, a family of continuous probability density functions parametrized by two positive shape parameters a and b,

\[\rho(p)=\frac{p^{a-1}(1-p)^{b-1}}{B(a,b)}, \tag{27}\]
where B(a, b) denotes the Beta function. To satisfy the expected value constraint, the shape parameters must be related to the sample space volume as \(\left\langle p\right\rangle _{\rho }=\frac{a}{a+b}=\frac{1}{W}\), i.e., \(b=a(W-1)\). Hence, one shape parameter can be chosen freely, which then fixes the other. Under these settings, BGS entropy can simply be expressed as

\[S_{\text{BGS}}=\psi(aW+1)-\psi(a+1), \tag{28}\]
where \(\psi (x)=\frac{{\mathrm{d}} \ln \Gamma (x)}{{\mathrm{d}} x}\) denotes the Digamma function. When a is in the regime of \(a\sim \frac{1}{W-1}\ll 1\), the corresponding density of states becomes so wide that it results in anomalous scaling of the entropy,

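whereas larger values \(a\gg 1\) yield regular \(S_{\text {BGS}}\sim \ln W\) scaling. The Tsallis entropy takes the form

\[S_q=\frac{1}{q-1}\left[1-W\,\frac{\Gamma(a+q)\,\Gamma(aW)}{\Gamma(a)\,\Gamma(aW+q)}\right], \tag{30}\]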
which again results in anomalous scaling for \(a\sim \frac{1}{W-1}\).

According to the above, first-order statistics following the Beta distribution can lead to substantially different types of configuration space scaling, depending on the specific choice of the shape parameter \(a=a(W)\). This can be seen by letting \(b\approx aW\) and using the approximate form of Eq. (27), which reads

\[\rho(p)\approx\frac{(aW)^{a}}{\Gamma(a)}\,p^{a-1}e^{-aWp}, \tag{31}\]
with the free parameter a(W) controlling the broadness of the distribution. By tuning the shape parameter in Eq. (31), we can smoothly interpolate between an exponential (\(a=1\)) and a power-law-like (\(a\rightarrow 0\)) \(\rho (p)\), and, in parallel, track how the system undergoes a transition from a highly disordered state (hallmarked by an exponential density) to an ordered regime (characterized by a power-law density).

We now describe this transition in detail. For simplicity, we restrict our analysis to the BGS entropy; the results can be extended straightforwardly to the Tsallis entropy. If \(a(W)>\frac{1}{W-1}\), the first term in Eq. (28) dominates the second, and the asymptotic expansion of the digamma function recovers the regular \(S_{\text {BGS}}\approx \psi (aW+1)\sim \ln W\) scaling. If, however, \(a(W)<\frac{1}{W-1}\), the density of states takes a power-law-like form, and the system is driven into a highly ordered configuration. The existence of this transition between the two regimes is encoded mathematically in the properties of the digamma function. At the transition line \(a(W)=\frac{1}{W-1}\) between the two regimes,

\[S_{\text{BGS}}=\psi\!\left(\frac{W}{W-1}+1\right)-\psi\!\left(\frac{W}{W-1}\right)=\frac{W-1}{W}\xrightarrow{W\rightarrow\infty}1. \tag{32}\]
Below this line the BGS entropy cannot be extensive. In more intuitive terms, the distribution of configuration probabilities becomes so broad in this regime that practically no uncertainty remains in the system description, which therefore cannot be made extensive. Figure 2d illustrates the scaling of the BGS entropy in several cases.
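As a worked sketch, not a reproduction of the paper's equations, assume that the density of states is Beta\((a,b)\) with \(b=a(W-1)\), so that \(\left\langle p\right\rangle _{\rho }=a/(a+b)=W^{-1}\), and that \(S_{\text {BGS}}=-W\langle p\ln p\rangle _\rho \). This reproduces the leading term \(\psi (aW+1)\) quoted above and gives the value on the transition line explicitly, using the recurrence \(\psi (x+1)=\psi (x)+1/x\) and the identity \(aW=a+1\), which holds when \(a=\frac{1}{W-1}\):

\[
\begin{aligned}
\langle p\ln p\rangle _\rho &= \frac{B(a+1,b)}{B(a,b)}\bigl[\psi (a+1)-\psi (a+b+1)\bigr] = \frac{1}{W}\bigl[\psi (a+1)-\psi (aW+1)\bigr],\\
S_{\text {BGS}} &= \psi (aW+1)-\psi (a+1),\\
S_{\text {BGS}}\Big|_{a=\frac{1}{W-1}} &= \psi (a+2)-\psi (a+1) = \frac{1}{a+1} = 1-\frac{1}{W}\;\xrightarrow{\,W\rightarrow \infty \,}\;1 .
\end{aligned}
\]

The entropy thus stays bounded on the transition line instead of growing like \(\ln W\).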

(a) The continuous, parameter-free densities of states considered in this paper. (b) Contribution of configurations with probability \(p<r\) to the total entropy of the system, which we call the cumulative entropy \(\Pi _g(r)\), for different combinations of densities of states \(\rho \) and entropy kernels g. In each case, \(\left\langle p\right\rangle _{\rho }=W^{-1}=2^{-20}\approx 10^{-6}\). (c) Hanel–Thurner (H–T) exponent, given by Eq. (5), of Tsallis entropies for the microcanonical ensemble and for the log-gamma (limiting log-normal) density of states. Although the H–T exponent of the BGS entropy (\(q=1\)) is invariant under changing the density of states from microcanonical to log-gamma, this is no longer true for Tsallis entropies (\(q<1\)), indicating that the non-extensivity class of a system is jointly determined by its extensive generalized entropy and its density of states. (d) Scaling of the BGS entropy \(S_{\text {BGS}}\) with the configuration space size W when the system’s density of states follows a Beta distribution with a power-law tail, characterized by a(W). For \(a\sim W^{-\epsilon }\) with \(\epsilon \ge 1\), the BGS entropy converges to a finite value in the thermodynamic limit \(W\rightarrow \infty \).
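The behaviour described in panel (d) can be sketched asymptotically. Assuming a Beta\((a, a(W-1))\) density of states, for which \(S_{\text {BGS}}=\psi (aW+1)-\psi (a+1)\), and setting \(a=W^{-\epsilon }\) with \(\epsilon >0\), the large-W limits follow from \(\psi (x+1)\approx \ln x\) for large x, \(\psi (1)=-\gamma \) (the Euler–Mascheroni constant) and \(\psi '(1)=\pi ^2/6\):

\[
S_{\text {BGS}}=\psi \left(W^{1-\epsilon }+1\right)-\psi \left(W^{-\epsilon }+1\right)\;\approx \;
\begin{cases}
(1-\epsilon )\ln W+\gamma , & 0<\epsilon <1,\\
\psi (2)-\psi (1)=1, & \epsilon =1,\\
\frac{\pi ^2}{6}\,W^{1-\epsilon }\rightarrow 0, & \epsilon >1 .
\end{cases}
\]

Under these assumptions the entropy keeps growing with \(\ln W\) only for \(\epsilon <1\) and converges to a finite value for \(\epsilon \ge 1\).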

Discussion

Any dynamics, microscopic or coarse-grained, transient or stationary, takes place in the space of all configurations of a system. The size of the configuration space, W, is controlled by the system size N. For systems composed of weakly interacting variables, W scales exponentially with N, so the two provide equivalent descriptions of system size. System-size-independent (statistical) models can then be formulated in terms of homogeneous (e.g., intensive or extensive) functions of N, without paying much attention to the fact that the dynamics actually takes place in configuration space.

In complex systems of many strongly interacting variables, this is no longer true. The size of the configuration space may scale non-exponentially with system size, depending on multiple, not yet fully understood facets of correlated, history-dependent dynamics. A macroscopic extension of the ideas of statistical mechanics to such complex systems, in particular of size invariance formulated in terms of well-defined thermodynamic limits, can be built on the concept of generalized entropies \(S_g\). Generalized entropies connect system size N with configuration space size W by implicitly redefining system size as proportional to the generalized entropy characteristic of the system. Generalized entropies are, therefore, extensive by definition, i.e., \(S_g\sim N\). This inverse logic is necessary because the dynamics takes place in configuration space; system size, in terms of which homogeneous functions and system-size-invariant models are formulated, is auxiliary.
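As an illustration of redefining system size through an extensive generalized entropy, the sketch below assumes a hypothetical power-law configuration-space growth \(W(N)=N^d\) with W equiprobable configurations. The Tsallis entropy \(S_q=(W^{1-q}-1)/(1-q)\) with \(q=1-1/d\) then equals \(d(N-1)\) and grows linearly in N, whereas \(S_{\text {BGS}}=d\ln N\) does not; the ratio \(S(W(2N))/S(W(N))\) serves as a simple extensivity check. The exponent d and all function names are illustrative assumptions.

```python
import math
from typing import Callable


def tsallis_microcanonical(W: float, q: float) -> float:
    """S_q for W equiprobable configurations; q = 1 recovers S_BGS = ln W."""
    if q == 1.0:
        return math.log(W)
    return (W ** (1.0 - q) - 1.0) / (1.0 - q)


def extensivity_ratio(entropy: Callable[[float], float],
                      W_of_N: Callable[[int], float], N: int) -> float:
    """S(W(2N)) / S(W(N)); approaches 2 when the entropy is extensive in N."""
    return entropy(W_of_N(2 * N)) / entropy(W_of_N(N))


if __name__ == "__main__":
    d = 3                    # hypothetical power-law growth W(N) = N**d
    q_star = 1.0 - 1.0 / d   # exponent for which S_q = d (N - 1)

    def power_law(N: int) -> float:
        return float(N) ** d

    def exponential(N: int) -> float:
        return 2.0 ** N      # weakly interacting binary variables

    print(extensivity_ratio(lambda W: tsallis_microcanonical(W, q_star), power_law, 10_000))  # ~2
    print(extensivity_ratio(lambda W: tsallis_microcanonical(W, 1.0), power_law, 10_000))     # well below 2
    print(extensivity_ratio(lambda W: tsallis_microcanonical(W, 1.0), exponential, 50))       # ~2
```

The last line corresponds to the weakly interacting case, for which \(S_{\text {BGS}}\) is already extensive.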

Generalized entropies account for higher-order statistics of the dynamics over configuration space, reflecting history dependence (formulated mathematically as, e.g., non-ergodicity) or correlated visiting probabilities. What they do not describe are the visiting probabilities themselves, as reflected by the fact that \(S_g\) takes these visiting probabilities as arguments. Conventional statistical mechanics suggests that changing the visiting probabilities, i.e., the distribution over configurations, does not alter the (asymptotic) relation between system size and configuration space size: the Boltzmann–Gibbs–Shannon entropy \(S_{\text{BGS}}\) is extensive in all known ensembles.

In this paper, we show that this is not the case. First-order statistics of visiting probabilities, formulated in terms of the density of states \(\rho \), may well change the relation between N and W. We identify three classes of densities of states. Class (i) does not change the asymptotic scaling of W(N) compared to the uniform (microcanonical) distribution over configuration space, \(\rho =\delta (p-W^{-1})\). We call these densities of states regular, as defined in Eq. (7).

Class (ii) comprises densities of states that change W(N) asymptotically (i.e., they are anomalous according to Eq. (7)), yet to which an extensive generalized entropy can still be assigned. This extensive generalized entropy necessarily differs from the one that is extensive in the microcanonical case. The appropriate generalized entropy thus depends on the visiting probabilities, e.g., on the thermodynamic ensemble in equilibrium statistical physics. This is observed for a \(\rho \) corresponding to a system with a microstate of macroscopic probability (which we call Bose–Einstein, BE), in both weakly and strongly correlated systems, modeled by \(S_{\text{BGS}}\) and by the family of Tsallis entropies \(S_q\), respectively. First-order statistics also modify the non-extensive scaling W(N) in strongly correlated systems for a limiting log-normal \(\rho \) and for a \(\rho \) corresponding to multiple uniform domains in configuration space, whereas they do not modify W(N) in weakly correlated systems. A paradigmatic example of this class is provided by the description of quantum spin chains, e.g., in Refs. 47,48,49. As pointed out therein, a subset of configurations is physically unattainable and thus constitutes a frozen, thermodynamically inactive part of the configuration space. Although the specific form of the corresponding \(\rho \) is not known, the effective size of the sample space grows as \(W_{\text{accessible}}\sim 2^{\ln L}\) instead of \(W_{\text{accessible}}\sim 2^{L}\), with L being the linear size of the system. Because of this inactive part of configuration space, the quantum analogue of \(S_{\text{BGS}}\) (the von Neumann entropy) fails to provide an extensive measure in this case, since \(S_{\text{BGS}}\sim \ln L\).
More generally, including the above example, if a region of the sample space is inaccessible (which is always described by a delta function at \(p=0\) in \(\rho \)), the assumption of equiprobable configurations is violated, and the associated extensive generalized entropy has to differ from the one predicted under the assumption of a microcanonical ensemble.
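The effect of a delta peak at \(p=0\) can be made concrete with a sketch that assumes trace-form entropies evaluated as \(S_g=W\int \rho (p)\,g(p)\,\mathrm{d}p\), here for a density of states made of point masses, \(\rho (p)=\sum _k w_k\,\delta (p-p_k)\). Placing a hypothetical fraction \(f=W_{\text{accessible}}/W\) of the configurations at the equiprobable level \(1/W_{\text{accessible}}\) and the rest at \(p=0\) gives \(S_{\text{BGS}}=\ln W_{\text{accessible}}\); with the spin-chain scaling \(W_{\text{accessible}}\sim 2^{\ln L}\) this grows only as \(\ln 2\cdot \ln L\), while the microcanonical value is \(L\ln 2\). Function names are illustrative.

```python
import math
from typing import Callable, List, Sequence, Tuple

Atom = Tuple[float, float]  # (weight w_k, probability level p_k) of one delta peak in rho


def g_bgs(p: float) -> float:
    """BGS kernel -p ln p, with the usual convention g(0) = 0."""
    return 0.0 if p == 0.0 else -p * math.log(p)


def g_tsallis(q: float) -> Callable[[float], float]:
    """Tsallis kernel (p**q - p) / (1 - q) for q > 0, q != 1; it vanishes at p = 0."""
    return lambda p: 0.0 if p == 0.0 else (p ** q - p) / (1.0 - q)


def entropy_from_atoms(W: float, atoms: Sequence[Atom],
                       g: Callable[[float], float] = g_bgs) -> float:
    """S_g = W * sum_k w_k g(p_k) for rho(p) = sum_k w_k delta(p - p_k)."""
    total_weight = sum(w for w, _ in atoms)
    mean_times_W = W * sum(w * p for w, p in atoms)
    if not (math.isclose(total_weight, 1.0) and math.isclose(mean_times_W, 1.0)):
        raise ValueError("rho must be normalized and satisfy W <p>_rho = 1")
    return W * sum(w * g(p) for w, p in atoms)


def inaccessible_fraction(W: float, W_accessible: float) -> List[Atom]:
    """Hypothetical rho: W_accessible equiprobable configurations, the rest frozen at p = 0."""
    f = W_accessible / W
    return [(1.0 - f, 0.0), (f, 1.0 / W_accessible)]


if __name__ == "__main__":
    for L in (16, 32, 64):
        W = 2.0 ** L                   # full sample space of L binary degrees of freedom
        W_acc = 2.0 ** math.log(L)     # accessible part, W_accessible ~ 2^(ln L)
        s_full = entropy_from_atoms(W, [(1.0, 1.0 / W)])                   # L ln 2
        s_frozen = entropy_from_atoms(W, inaccessible_fraction(W, W_acc))  # ln 2 * ln L
        print(L, round(s_full, 3), round(s_frozen, 3))
```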

The third class consists of densities of states with so much of their probability mass concentrated near 0 that W(N) saturates; therefore, no extensive entropy can be assigned to such systems. We find that systems with a power-law density of states whose exponent approaches \(-1\) in the limit \(W\rightarrow \infty \) belong to this class, regardless of their higher-order statistics. Such systems have non-zero visiting probability at all configurations, yet these visiting probabilities are so skewed that both \(S_{\text{BGS}}\) and \(S_q\) saturate at a finite value when \(W\rightarrow \infty \).

We believe that this unified macroscopic picture of strongly interacting and history-dependent processes, based on first- and higher-order statistics of visiting probabilities over configuration space, makes statistical mechanics a more flexible tool for modeling complex systems both within and outside the realm of physics.

References

Thurner, S., Klimek, P. & Hanel, R. Introduction to the Theory of Complex Systems (Oxford University Press, Oxford, 2018).

Tsallis, C. Introduction to Nonextensive Statistical Mechanics (Springer, New York, 2009).

Tsallis, C. Beyond Boltzmann–Gibbs–Shannon in physics and elsewhere. Entropy 21, 696 (2019).

Tsallis, C. Some open points in nonextensive statistical mechanics. Int. J. Bifurc. Chaos 22, 1230030 (2012).

Bar-Yam, Y. & Bialik, M. Beyond Big Data: Identifying Important Information for Real World Challenges (NECSI, Cambridge, 2013).

Corominas-Murtra, B., Hanel, R. & Thurner, S. Understanding scaling through history-dependent processes with collapsing sample space. Proc. Natl. Acad. Sci. U.S.A. 112, 5348–5353 (2015).

Souza, A., Andrade, R., Nobre, F. & Curado, E. Thermodynamic framework for compact \(q\)-Gaussian distributions. Physica A 491, 153–166 (2017).

Balian, R. From Microphysics to Macrophysics (Springer, Berlin Heidelberg, 2007).

Ruseckas, J. Probabilistic model of \(N\) correlated binary random variables and non-extensive statistical mechanics. Phys. Lett. A 379, 654–659 (2015).

Kononovicius, A. & Ruseckas, J. Stochastic dynamics of \(N\) correlated binary variables and non-extensive statistical mechanics. Phys. Lett. A 380, 1582–1588 (2016).

Jensen, H. J., Pazuki, R. H., Pruessner, G. & Tempesta, P. Statistical mechanics of exploding phase spaces: ontic open systems. J. Phys. A 51, 375002 (2018).

Yalcin, G. C. & Beck, C. Generalized statistical mechanics of cosmic rays: application to positron–electron spectral indices. Sci. Rep. 8, 1764 (2018).

Gadjiev, B. & Progulova, T. Origin of generalized entropies and generalized statistical mechanics for superstatistical multifractal systems. In International Workshop on Bayesian Inference and Maximum Entropy Methods in Science and Engineering 1641, 595–602 (2015).

Cinardi, N., Rapisarda, A. & Tsallis, C. A generalised model for asymptotically-scale-free geographical networks. J. Stat. Mech. Theory Exp. 2020, 043404 (2019).

Baek, K. & Son, W. Unsharpness of generalized measurement and its effects in entropic uncertainty relations. Sci. Rep. 6, 30228 (2016).

Bosyk, G. M., Zozor, S., Holik, F., Portesi, M. & Lamberti, P. W. A family of generalized quantum entropies: definition and properties. Quantum Inf. Process. 15, 3393–3420 (2016).

Shafee, F. Generalized Entropy from Mixing: Thermodynamics, Mutual Information and Symmetry Breaking. Preprint at arXiv:0906.2458 (2009).

Kaniadakis, G. Statistical mechanics in the context of special relativity. Phys. Rev. E 66, 056125 (2002).

Chavanis, P. H. Statistical mechanics in the context of special relativity. Eur. Phys. J. B 62, 179–208 (2008).

Plastino, A. R. & Wedemann, R. S. Nonlinear Fokker–Planck equation approach to systems of interacting particles: thermostatistical features related to the range of the interactions. Entropy 22, 163 (2020).

Hanel, R. & Thurner, S. Generalized (c, d)-entropy and aging random walks. Entropy 15, 5324–5337 (2013).

Czégel, D., Balogh, S. G., Pollner, P. & Palla, G. Phase space volume scaling of generalized entropies and anomalous diffusion scaling governed by corresponding non-linear Fokker–Planck equations. Sci. Rep. 8, 1883 (2018).

Curado, E. & Nobre, F. Equilibrium states in two-temperature systems. Entropy 20, 183 (2018).

Tsallis, C. & Souza, A. Constructing a statistical mechanics for Beck–Cohen superstatistics. Phys. Rev. E 67, 026106 (2003).

Hanel, R., Thurner, S. & Gell-Mann, M. Generalized entropies and the transformation group of superstatistics. Proc. Natl. Acad. Sci. U.S.A. 108, 6390–6394 (2011).

Kannathal, N., Choo, M. L., Acharya, U. R. & Sadasivan, P. Entropies for detection of epilepsy in EEG. Comput. Methods Programs Biomed. 80, 187–194 (2005).

Dai, Y., He, J., Wu, Y., Chen, S. & Shang, P. Generalized entropy plane based on permutation entropy and distribution entropy analysis for complex time series. Physica A 520, 217–231 (2019).

Amigó, J. M., Hirata, Y. & Aihara, K. On the limits of probabilistic forecasting in nonlinear time series analysis II: differential entropy. Chaos 27, 083125 (2017).

Gajowniczek, K., Orłowski, A. & Zabkowski, T. Simulation study on the application of the generalized entropy concept in artificial neural networks. Entropy 20, 249 (2018).

Hanel, R. & Thurner, S. A comprehensive classification of complex statistical systems and an axiomatic derivation of their entropy and distribution functions. Europhys. Lett. 93, 20006 (2011).

Tempesta, P. & Jeldtoft Jensen, H. Universality classes and information-theoretic measures of complexity via group entropies. Sci. Rep. 10, 5952 (2020).

Miguel, Á., Rodríguez, A. R. & Tempesta, P. A new class of entropic information measures, formal group theory and information geometry. Proc. R. Soc. Lond. A 475, 20180633 (2019).

Shafee, F. Lambert function and a new non-extensive form of entropy. IMA J. Appl. Math. 72, 785–800 (2007).

Bizet, N. C., Fuentes, J. & Obregón, O. Generalised asymptotic classes for additive and non-additive entropies. Europhys. Lett. 128, 60004 (2020).

Korbel, J., Hanel, R. & Thurner, S. Classification of complex systems by their sample-space scaling exponents. New J. Phys. 20, 093007 (2018).

Kang, J.-W., Shen, K. & Zhang, B.-W. A note on the connection between nonextensive entropy and \(h\)-derivative. Preprint at arXiv:1905.07706 (2019).

Furuichi, S., Mitroi-Symeonidis, F.-C. & Symeonidis, E. On some properties of Tsallis hypoentropies and hypodivergences. Entropy 16, 5377–5399 (2014).

Amigó, J. M., Balogh, S. G. & Hernández, S. A brief review of generalized entropies. Entropy 20, 813 (2018).

Jeldtoft Jensen, H. & Tempesta, P. Group entropies: from phase space geometry to entropy functionals via group theory. Entropy 20, 804 (2018).

Khinchin, A. I. Mathematical Foundations of Information Theory (Dover Publications, New York, 1957).

Tsallis, C. Possible generalization of Boltzmann–Gibbs statistics. J. Stat. Phys. 52, 479–487 (1988).

Tsallis, C. & Cirto, L. J. L. Black hole thermodynamical entropy. Eur. Phys. J. C 73, 2487 (2013).

Scarfone, A. Entropic forms and related algebras. Entropy 15, 624–649 (2013).

Hanel, R. & Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 96, 50003 (2011).

Zander, C. & Plastino, A. R. Composite systems with extensive \(S_q\) (power-law) entropies. Physica A 364, 145–156 (2006).

Dettmann, C. P. & Georgiou, O. Product of \(n\) independent uniform random variables. Stat. Probab. Lett. 79, 2501–2503 (2009).

Souza, A. M. C., Rapčan, P. & Tsallis, C. Area-law-like systems with entangled states can preserve ergodicity. Eur. Phys. J. Spec. Topics 229, 759–772 (2020).

Tsallis, C. Black hole entropy: a closer look. Entropy 22, 17 (2020).

Caruso, F. & Tsallis, C. Nonadditive entropy reconciles the area law in quantum systems with classical thermodynamics. Phys. Rev. E 78, 021102 (2008).

Acknowledgements

We thank the two anonymous reviewers for their insightful comments on the manuscript. This research was partially supported by the European Union through the project ‘RED-Alert’ (Grant Nos.: 740688-RED-Alert-H2020-SEC-2016-2017/H2020-SEC-2016-2017-1), by the Templeton World Charity Foundation under Grant Number TWCF0268, and by the Hungarian National Research, Development and Innovation Office, Grant Nos. K 128780, GINOP-2.3.2-15-2016-00057 and KKP_19_129848. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

D.C., S.G.B., G.P. and P.P. developed the concept of the study; S.G.B. and D.C. derived the equations; S.G.B., G.P., P.P. and D.C. contributed to the interpretation of the results; S.G.B. and D.C. prepared the tables and the figures; D.C., S.G.B. and G.P. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Balogh, S.G., Palla, G., Pollner, P. et al. Generalized entropies, density of states, and non-extensivity. Sci Rep 10, 15516 (2020). https://doi.org/10.1038/s41598-020-72422-8
